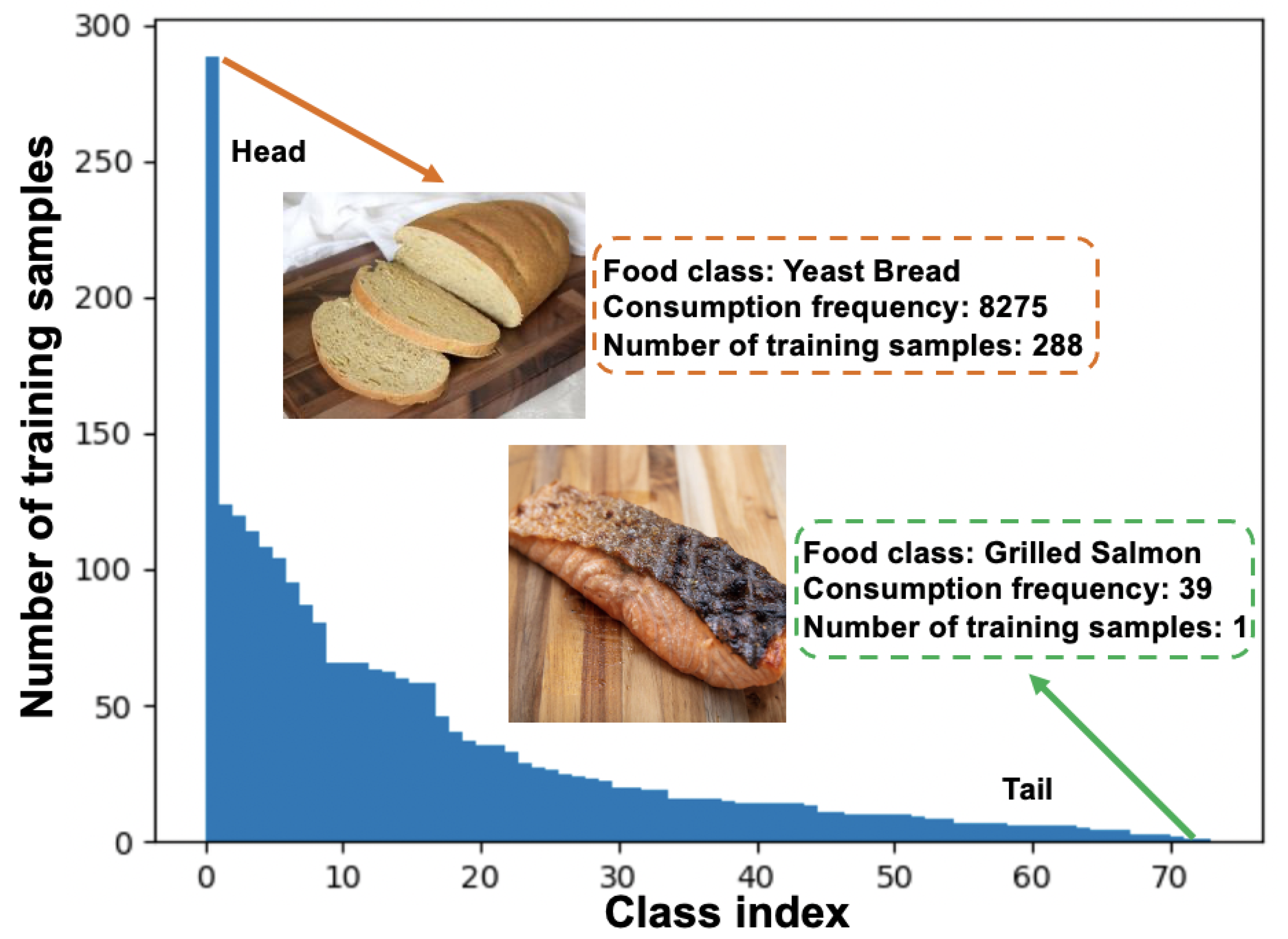

Food classification is the basic step of image-based dietary assessment, predicting the types of foods in each input image. However, foods in real-world scenarios typically follow a long-tailed distribution, where a small number of food types are consumed far more frequently than others; this causes severe class imbalance and hinders overall performance. In addition, none of the existing long-tailed classification methods focuses on food data, which can be more challenging because of the inter-class similarity and intra-class diversity of food images.

- food classification

- long-tail distribution

- image-based dietary assessment

- benchmark datasets

- food consumption frequency

- neural networks

1. Introduction

Accurate identification of food is critical to image-based dietary assessment [1][2], because it allows each food to be matched to the corresponding entry in a nutrient database and thus to its nutrient composition [3]. Such linkage makes it possible to determine dietary links to health and diseases such as diabetes [4]. Dietary assessment is therefore very important to healthcare-related applications [5][6], and it has benefited from recent advances in computational approaches and new sensor devices. In addition, image-based dietary assessment on mobile devices has received increasing attention in recent years [7][8][9][10], as it can serve as a more efficient platform that alleviates the burden on participants and adapts to diverse real-world situations.

The performance of image-based dietary assessment relies on the accurate prediction of foods in the captured eating scene images. However, most current food classification systems [11][12][13] are developed on class-balanced food image datasets such as Food-101 [14], where each food class contains the same number of training samples. This rarely happens in the real world, since food images usually follow a long-tailed distribution: a small portion of food classes (i.e., the head classes) contain abundant training samples, while most food classes (i.e., the tail classes) have only a few, as shown in Figure 1. Long-tailed classification, defined as the extreme class imbalance problem, therefore leads to classification bias towards head classes and poor generalization when recognizing tail food classes. Without accounting for class imbalance, food classification performance in the real world may drop significantly, which would in turn constrain the applications of image-based dietary assessment.

Researchers analyze the long-tailed class distribution problem for food image classification and develop a framework to address it, with the objective of minimizing the performance gap when applied in real-life food-related applications.

Figure 1. An overview of the VFN-LT that exhibits a real-world long-tailed food distribution. The number of training samples is assigned based on the consumption frequency, which is matched through NHANES from 2009 to 2016 among 17,796 healthy U.S. adults.

Because few existing long-tailed image classification methods target food images, two benchmark long-tailed food datasets are introduced first: Food101-LT and VFN-LT. Similar to [15], Food101-LT is constructed as a long-tailed version of the original balanced Food-101 dataset [14] by following the Pareto distribution. VFN-LT, shown in Figure 1, provides a new long-tailed food dataset in which the number of samples for each food class follows the distribution of consumption frequency [16], defined as how often a food is consumed in one day according to the National Health and Nutrition Examination Survey (NHANES; https://www.cdc.gov/nchs/nhanes/index.html, accessed on 21 April 2023) from 2009 to 2016 among 17,796 healthy U.S. adults aged 20 to 65. In other words, the head classes of VFN-LT are the most frequently consumed foods in the real world for the population represented. It is also worth noting that both Food101-LT and VFN-LT have a heavier-tailed distribution than most existing benchmarks such as CIFAR100-LT [17], which is simulated by following a general exponential distribution.
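To make the difference between the two kinds of class-size profiles concrete, the following sketch generates per-class training-set sizes under an exponential decay (the style of profile described above for CIFAR100-LT) and under a power-law decay, used here only as a simple stand-in for a Pareto-style profile. The function names, the 101 classes with at most 750 images each, the imbalance ratio of 100, the exponent of 1.0 and the tail threshold of 20 are all illustrative assumptions; this entry does not specify how Food101-LT or VFN-LT were actually sampled.

```python
def exponential_profile(num_classes, n_max, imbalance_ratio):
    """Class sizes n_i = n_max * imbalance_ratio ** (-i / (num_classes - 1)).

    Class 0 is the largest head class; the last class is roughly
    n_max / imbalance_ratio. Sizes are floored and kept at 1 or more.
    """
    sizes = []
    for i in range(num_classes):
        n_i = n_max * imbalance_ratio ** (-i / (num_classes - 1))
        sizes.append(max(1, int(n_i)))
    return sizes


def power_law_profile(num_classes, n_max, alpha):
    """Class sizes n_i = n_max * (i + 1) ** (-alpha).

    Assumption: a deterministic power-law decay standing in for a
    Pareto-style profile; it is not the procedure used for Food101-LT.
    """
    sizes = []
    for i in range(num_classes):
        n_i = n_max * (i + 1) ** (-alpha)
        sizes.append(max(1, int(n_i)))
    return sizes


def count_tail_classes(sizes, threshold):
    """Number of classes with fewer than `threshold` training samples."""
    return sum(1 for n in sizes if n < threshold)


# Illustrative values only (assumptions, not taken from the entry).
exp_sizes = exponential_profile(num_classes=101, n_max=750, imbalance_ratio=100.0)
pow_sizes = power_law_profile(num_classes=101, n_max=750, alpha=1.0)

print("exponential: first 5 sizes", exp_sizes[:5], "last size", exp_sizes[-1])
print("power law:   first 5 sizes", pow_sizes[:5], "last size", pow_sizes[-1])
print("classes under 20 samples (exponential):", count_tail_classes(exp_sizes, 20))
print("classes under 20 samples (power law):  ", count_tail_classes(pow_sizes, 20))
```

Under these assumed settings both profiles start and end at similar sizes, but the power-law profile drops much faster after the first few classes, so far more classes end up with only a handful of samples.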

An intuitive way to address the class imbalance issue is to undersample the head classes and oversample the tail classes to obtain a balanced training set containing a similar number of samples for all classes. However, there are two major challenges: (1) how to undersample the head classes to remove redundant samples without compromising the original performance; and (2) how to oversample the tail classes to improve model generalization, since naive repeated random oversampling can further intensify overfitting and worsen performance, especially in heavy-tailed distributions. In addition, food images are known to be more complicated than general objects for various downstream tasks such as classification, segmentation and image generation due to their inter-class similarity and intra-class diversity, which becomes even more challenging in long-tailed data distributions with severe class imbalance.
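The naive baseline described above can be sketched in a few lines. This is only an illustration of plain random undersampling and repeated random oversampling, not the framework developed in the paper; the function name `rebalance`, the target of 10 samples per class and the toy food labels are hypothetical.

```python
import random
from collections import Counter, defaultdict


def rebalance(samples, target_per_class, seed=0):
    """Return a class-balanced list of (item, label) pairs.

    Head classes (more than `target_per_class` items) are randomly
    undersampled without replacement. Tail classes are padded by
    repeating randomly chosen items, i.e. naive oversampling.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for item, label in samples:
        by_class[label].append(item)

    balanced = []
    for label, items in by_class.items():
        if len(items) >= target_per_class:
            chosen = rng.sample(items, target_per_class)
        else:
            extra = rng.choices(items, k=target_per_class - len(items))
            chosen = items + extra
        balanced.extend((item, label) for item in chosen)
    return balanced


# Toy data with hypothetical labels: one head class and one tail class.
data = [(f"head_{i}.jpg", "rice") for i in range(100)]
data += [(f"tail_{i}.jpg", "quinoa") for i in range(3)]

balanced = rebalance(data, target_per_class=10)
print(Counter(label for _, label in balanced))

# The tail class now has 10 entries but still only 3 distinct images.
print(len({item for item, label in balanced if label == "quinoa"}))
```

The second printout shows why this baseline is fragile: the tail class reaches the target count only by repeating the same few images, which adds no new visual diversity, while 90 head-class images are discarded without any check on whether they were redundant.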

2. Food Classification

The most common deep-learning-based methods [18] for food classification take off-the-shelf models such as ResNet [19], pre-trained on ImageNet [20], and fine-tune them [21] on food image datasets [22][23][24] such as Food-101 [14]. To achieve higher performance and address inter-class similarity and intra-class diversity, recent work proposed constructing a semantic hierarchy based on both visual similarity [11] and nutrition information [25] and performing optimization on each level. In addition, food classification has been studied under different scenarios such as large-scale recognition [12], few-shot learning [26] and continual learning [27][28]. However, none of the existing methods studies long-tailed food classification, where the severe class imbalance found in real life may significantly degrade performance. Finally, although the most recent work targets multi-label ingredient recognition [29], the focus of this research is on long-tailed single-food classification, where each image contains only one food class and the training samples for each class are heavily imbalanced.

3. Long-Tailed Classification

Existing long-tailed classification methods can be categorized into two main groups: (i) re-weighting and (ii) re-sampling. Re-weighting-based methods aim to mitigate the class imbalance problem by assigning higher weights to tail classes or samples than to head classes. The inverse of class frequency is widely used to generate the weights for each class, as in [30][31]. In addition, a variety of loss functions have been proposed to adjust weights during training, including label-distribution-aware margin loss [17], balanced Softmax [32] and instance-based focal loss [33]. Alternatively, re-sampling-based methods aim to generate a balanced training distribution by undersampling the head classes as described in [34] and oversampling the tail classes as shown in [34][35], in which all tail classes were oversampled until class balance was achieved. However, undersampling can discard valuable information about the head classes, and naive oversampling can further intensify overfitting because repeated samples lack diversity. A recent work [36] proposed performing oversampling by leveraging CutMix [37] to cut a randomly generated region in tail class samples and mix it with head class samples. However, the performance of existing methods on food data remains under-explored, and food data present additional challenges compared with general object recognition.
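The re-weighting ideas mentioned above can be illustrated with single-sample losses in plain Python. This is a minimal sketch under stated assumptions, not the implementations from [17][32][33]: the weights are normalized to sum to the number of classes (one common but arbitrary choice), balanced Softmax is shown only in its basic form of adding the log class count to each logit, and focal loss is written in a multi-class softmax form. All function names and the example class counts are hypothetical.

```python
import math


def inverse_frequency_weights(class_counts):
    """Per-class weights proportional to 1 / n_c, scaled to sum to the class count."""
    inverse = [1.0 / n for n in class_counts]
    scale = len(class_counts) / sum(inverse)
    return [w * scale for w in inverse]


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def weighted_cross_entropy(logits, target, weights):
    """Cross-entropy for one sample, scaled by the weight of its true class."""
    return -weights[target] * log_softmax(logits)[target]


def balanced_softmax_loss(logits, target, class_counts):
    """Cross-entropy on logits shifted by log(n_c), the basic balanced Softmax form."""
    adjusted = [z + math.log(n) for z, n in zip(logits, class_counts)]
    return -log_softmax(adjusted)[target]


def focal_loss(logits, target, gamma=2.0):
    """Focal loss -(1 - p_t) ** gamma * log(p_t) for one sample."""
    log_p = log_softmax(logits)[target]
    p = math.exp(log_p)
    return -(max(0.0, 1.0 - p) ** gamma) * log_p


# Hypothetical counts for a head, a medium and a tail class.
counts = [1000, 100, 10]
weights = inverse_frequency_weights(counts)
logits = [0.0, 0.0, 0.0]  # an undecided model

print("weights:", weights)
for target in range(3):
    print(
        target,
        weighted_cross_entropy(logits, target, weights),
        balanced_softmax_loss(logits, target, counts),
        focal_loss(logits, target),
    )
```

With equal logits, both the inverse-frequency weighting and the balanced Softmax adjustment assign a larger loss to the tail class than to the head class, which is the intended push against head-class bias. Focal loss instead depends on how confidently each individual sample is classified, so it gives the same value for all three classes in this example.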

This entry is adapted from the peer-reviewed paper 10.3390/nu15122751

References

- He, J.; Shao, Z.; Wright, J.; Kerr, D.; Boushey, C.; Zhu, F. Multi-task Image-Based Dietary Assessment for Food Recognition and Portion Size Estimation. In Proceedings of the 2020 IEEE Conference on Multimedia Information Processing and Retrieval, Shenzhen, China, 6–8 August 2020; pp. 49–54.

- He, J.; Mao, R.; Shao, Z.; Wright, J.L.; Kerr, D.A.; Boushey, C.J.; Zhu, F. An end-to-end food image analysis system. Electron. Imaging 2021, 2021, 285-1–285-7.

- Shao, Z.; He, J.; Yu, Y.Y.; Lin, L.; Cowan, A.; Eicher-Miller, H.; Zhu, F. Towards the Creation of a Nutrition and Food Group Based Image Database. arXiv 2022, arXiv:2206.02086.

- Anthimopoulos, M.M.; Gianola, L.; Scarnato, L.; Diem, P.; Mougiakakou, S.G. A food recognition system for diabetic patients based on an optimized bag-of-features model. IEEE J. Biomed. Health Inform. 2014, 18, 1261–1271.

- Allegra, D.; Battiato, S.; Ortis, A.; Urso, S.; Polosa, R. A review on food recognition technology for health applications. Health Psychol. Res. 2020, 8, 9297.

- Shao, Z.; Han, Y.; He, J.; Mao, R.; Wright, J.; Kerr, D.; Boushey, C.J.; Zhu, F. An Integrated System for Mobile Image-Based Dietary Assessment. In Proceedings of the 3rd Workshop on AIxFood, Virtual Event, 20 October 2021; pp. 19–23.

- Vasiloglou, M.F.; van der Horst, K.; Stathopoulou, T.; Jaeggi, M.P.; Tedde, G.S.; Lu, Y.; Mougiakakou, S. The human factor in automated image-based nutrition apps: Analysis of common mistakes using the goFOOD lite app. JMIR MHealth UHealth 2021, 9, e24467.

- Kawano, Y.; Yanai, K. Foodcam: A real-time food recognition system on a smartphone. Multimed. Tools Appl. 2015, 74, 5263–5287.

- Boushey, C.; Spoden, M.; Zhu, F.; Delp, E.; Kerr, D. New mobile methods for dietary assessment: Review of image-assisted and image-based dietary assessment methods. Proc. Nutr. Soc. 2017, 76, 283–294.

- Zhu, F.; Bosch, M.; Woo, I.; Kim, S.; Boushey, C.J.; Ebert, D.S.; Delp, E.J. The use of mobile devices in aiding dietary assessment and evaluation. IEEE J. Sel. Top. Signal Process. 2010, 4, 756–766.

- Mao, R.; He, J.; Shao, Z.; Yarlagadda, S.K.; Zhu, F. Visual aware hierarchy based food recognition. arXiv 2020, arXiv:2012.03368.

- Min, W.; Wang, Z.; Liu, Y.; Luo, M.; Kang, L.; Wei, X.; Wei, X.; Jiang, S. Large scale visual food recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023. Early access.

- Wu, H.; Merler, M.; Uceda-Sosa, R.; Smith, J.R. Learning to make better mistakes: Semantics-aware visual food recognition. In Proceedings of the 24th ACM international conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 172–176.

- Bossard, L.; Guillaumin, M.; Van Gool, L. Food-101—Mining Discriminative Components with Random Forests. In Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014, Proceedings, Part VI 13; Springer International Publishing: Cham, Switzerland, 2014.

- Liu, Z.; Miao, Z.; Zhan, X.; Wang, J.; Gong, B.; Yu, S.X. Large-scale long-tailed recognition in an open world. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2537–2546.

- Lin, L.; Zhu, F.M.; Delp, E.J.; Eicher-Miller, H.A. Differences in Dietary Intake Exist Among US Adults by Diabetic Status Using NHANES 2009–2016. Nutrients 2022, 14, 3284.

- Cao, K.; Wei, C.; Gaidon, A.; Arechiga, N.; Ma, T. Learning imbalanced datasets with label-distribution-aware margin loss. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Christodoulidis, S.; Anthimopoulos, M.; Mougiakakou, S. Food recognition for dietary assessment using deep convolutional neural networks. In New Trends in Image Analysis and Processing–ICIAP 2015 Workshops: ICIAP 2015 International Workshops, BioFor, CTMR, RHEUMA, ISCA, MADiMa, SBMI, and QoEM, Genoa, Italy, 7–8 September 2015, Proceedings 18; Springer International Publishing: Cham, Switzerland, 2015; pp. 458–465.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Yanai, K.; Kawano, Y. Food image recognition using deep convolutional network with pre-training and fine-tuning. In Proceedings of the IEEE International Conference on Multimedia & Expo Workshops, Turin, Italy, 29 June–3 July 2015; pp. 1–6.

- Farinella, G.M.; Allegra, D.; Moltisanti, M.; Stanco, F.; Battiato, S. Retrieval and classification of food images. Comput. Biol. Med. 2016, 77, 23–39.

- Kawano, Y.; Yanai, K. Automatic Expansion of a Food Image Dataset Leveraging Existing Categories with Domain Adaptation. In Proceedings of the ECCV Workshop on Transferring and Adapting Source Knowledge in Computer Vision (TASK-CV), Zurich, Switzerland, 6–7 September 2014.

- Farinella, G.M.; Allegra, D.; Stanco, F. A Benchmark Dataset to Study the Representation of Food Images. In Proceedings of the European Conference on Computer Vision, Workshops, Zurich, Switzerland, 6–12 September 2014; pp. 584–599.

- Mao, R.; He, J.; Lin, L.; Shao, Z.; Eicher-Miller, H.A.; Zhu, F. Improving Dietary Assessment Via Integrated Hierarchy Food Classification. In Proceedings of the 2021 IEEE 23rd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 6–8 October 2021; pp. 1–6.

- Jiang, S.; Min, W.; Lyu, Y.; Liu, L. Few-shot food recognition via multi-view representation learning. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2020, 16, 1–20.

- He, J.; Zhu, F. Online Continual Learning for Visual Food Classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Montreal, QC, Canada, 11–17 October 2021; pp. 2337–2346.

- He, J.; Mao, R.; Shao, Z.; Zhu, F. Incremental Learning In Online Scenario. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13926–13935.

- Gao, J.; Chen, J.; Fu, H.; Jiang, Y.G. Dynamic Mixup for Multi-Label Long-Tailed Food Ingredient Recognition. IEEE Trans. Multimed. 2022.

- Huang, C.; Li, Y.; Loy, C.C.; Tang, X. Learning deep representation for imbalanced classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5375–5384.

- Wang, Y.X.; Ramanan, D.; Hebert, M. Learning to model the tail. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Ren, J.; Yu, C.; Ma, X.; Zhao, H.; Yi, S. Balanced meta-softmax for long-tailed visual recognition. Adv. Neural Inf. Process. Syst. 2020, 33, 4175–4186.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Van Hulse, J.; Khoshgoftaar, T.M.; Napolitano, A. Experimental perspectives on learning from imbalanced data. In Proceedings of the 24th International Conference on Machine Learning, Corvallis, OR, USA, 21–24 March 2007; pp. 935–942.

- Buda, M.; Maki, A.; Mazurowski, M.A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2018, 106, 249–259.

- Park, S.; Hong, Y.; Heo, B.; Yun, S.; Choi, J.Y. The Majority Can Help The Minority: Context-rich Minority Oversampling for Long-tailed Classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6887–6896.

- Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. Cutmix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 6023–6032.
