Falls among older people are a significant public health issue because they can cause disabling fractures and severe psychological problems that diminish a person’s independence. Falls can also be fatal, particularly for the elderly. Fall Detection Systems (FDS) are automated systems designed to detect falls experienced by older adults or other individuals. Early or real-time detection of falls may reduce the risk of major complications.

1. Introduction

According to one study [1], falls are the leading cause of injury-related death for seniors aged 79 or over and the second most prevalent cause of unintentional injury-related mortality for adults of all ages. A person’s quality of life (QoL) is influenced by their intellectual ability, which has been documented to be impaired when elderly persons become bedridden after falls. A fall detection system is an aid whose main purpose is to generate an alert when a fall has occurred. Such systems show great promise for mitigating some of the detrimental impacts of falls. Fall detectors substantially affect how soon assistance arrives after a fall and also reduce the fear of falling. Falling and the fear of falling are related: being afraid of falling may increase the likelihood that a person will suffer a fall [2]. Numerous studies have sought strategies and methods for improving the functional abilities of the elderly and ill. Some systems use cameras, sensors, and computer technology. Such systems can improve older persons’ capacity for independent living by enhancing their sense of security in a supportive environment, and they can reduce the physical labor required for care by lowering the need for nurses or other support employees [3].

2. Fall Detection Strategies

Sensors and machine learning are vital parts of fall detection and fall recognition. Multiple methods of fall detection used many types of sensors. To acquire the final decision of fall events from the sensor data, machine learning, deep learning, and artificial intelligence are used. Researchers worldwide have shown significant interest in human activity recognition. Over the past few years, numerous approaches have been introduced, focusing on identifying various activities such as walking, running, jumping, jogging, falling, and more. The authors of [

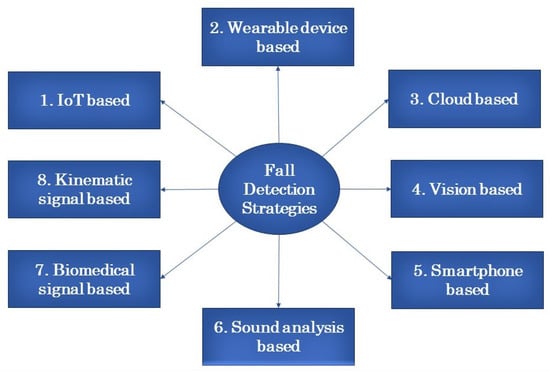

4] contributed a valuable resource in the realm of real-time Human Activity Recognition (HAR) by offering a comprehensive reference for real-time fall detection. Fall detection holds particular importance among these activities as it poses a common and dangerous risk for people of all ages, particularly impacting the elderly. Typically, these applications utilize sensors capable of detecting sudden movement changes. These sensors can be integrated into wearable devices such as smartphones, necklaces, or smart wristbands, making them easily wearable. In fall detection strategies, the first step is to recognize human activity, which enables the identification of fall events. Efficient recognition of human activity plays a crucial role in accurately detecting falls. Major strategies of fall detection are illustrated in

Figure 2.

Figure 2. Fall detection strategies.

2.1. IoT-Based

An Internet of Things (IoT)-based system can be helpful for detecting falls, and researchers have made significant strides in both the detection and management of falls. Gia et al. [5] introduced a wearable IoT-based system to ensure an appropriate degree of control and reliability for their fall detection system. The wearable nodes are inexpensive, light, and adaptable, but they have limited operational lifespans, suffer from interruptions, and behave inconsistently. The system is efficient and feasible, but complicated. Chatterjee et al. [6] proposed an intelligent healthcare system with IoT and a decision support system. The system is low-cost, provides ambient assisted living, and improves the experience of patients.

2.2. Wearable-Device-Based

Much research has been done on wearable devices that are connected through wireless technology. Pierleoni et al. [11] proposed a fall detection system that combines a magnetometer, gyroscope, and accelerometer to overcome the limitations of a single accelerometer, but they did not present the data well and only briefly discussed current issues and challenges. Baga et al. [12] offered a system that can manage neurodegenerative diseases by minimizing the size of wearable sensors. They presented a modular system architecture that can be adapted to any disease.
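To illustrate why combining sensors can help beyond a single accelerometer, the following minimal sketch fuses accelerometer and gyroscope readings with a complementary filter, a common textbook technique. It is not the method of Pierleoni et al. [11]; the function names, the axis and sign conventions, the `alpha` weight, and the sample format are all assumptions made for illustration, and the magnetometer (which would add heading information) is omitted.

```python
import math


def accel_pitch_deg(ax, ay, az):
    """Pitch angle in degrees estimated from gravity alone (noisy but drift-free)."""
    return math.degrees(math.atan2(ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyroscope and accelerometer pitch estimates.

    samples: iterable of (ax, ay, az, gyro_pitch_rate_deg_s) tuples (assumed format).
    dt:      sampling interval in seconds.
    alpha:   weight of the integrated gyroscope estimate (illustrative value).
    """
    angle = None
    fused = []
    for ax, ay, az, rate in samples:
        acc_angle = accel_pitch_deg(ax, ay, az)
        if angle is None:
            # Initialise from the accelerometer, since the gyroscope only gives rates.
            angle = acc_angle
        else:
            # Gyroscope integration tracks fast motion; the accelerometer term
            # slowly pulls the estimate back and cancels gyroscope drift.
            angle = alpha * (angle + rate * dt) + (1.0 - alpha) * acc_angle
        fused.append(angle)
    return fused


if __name__ == "__main__":
    # Hypothetical readings: sensor at rest, then a rotation of 100 deg/s.
    readings = [(0.0, 0.0, 1.0, 0.0), (0.0, 0.0, 1.0, 100.0)]
    print(complementary_filter(readings, dt=0.01))
```

A sudden change in the fused angle, together with an acceleration spike, is the kind of combined evidence a multi-sensor detector can use to separate a fall from, for example, sitting down quickly.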

2.3. Cloud-Based

Pan et al. [19] suggested “PD Dr”, a mobile cloud-based mHealth application for Parkinson’s disease (PD). It tests hand movement while a person is resting, walking, or turning, but does not provide a long-term continual assessment. Al Hussein et al. [20] proposed a framework that transmits a voice signal to the cloud and processes the signal to detect PD. Two different databases and four ML classifiers are used, and the accuracy is up to 97%. The system has not been applied in real-life settings. Depari et al. [21] proposed an architectural model for connecting prototype instruments to the cloud that offers low-cost real-time data and stable, accessible, and compatible message-oriented strategies. It cannot be used for interchanging raw data or metadata.

2.4. Vision-Based

Kamarol et al. [26] proposed an appearance-based template to perform facial expression recognition in video streams. It performs several state-of-the-art expression-based feature selection methods. Classification is easy, but the approach was not checked during complex, infrequent movements such as dancing or jumping. Xie et al. [27] provided a tool that uses the histogram sequence of local Gabor binary patterns from three orthogonal planes (LGBP-TOP). The method does well in detecting simple falls, but the accuracy of the system needs to be improved. Suja et al. [28] proposed a geometry-based method for identifying six specific emotions in video sequences that improved accuracy compared to other methods. Its performance under real-time conditions could not be determined.

2.5. Smartphone-Based

Smartphones currently play a vital role in daily life. They can be helpful for detecting falls and notifying others about a fall. Mostarac et al. [42] created a system that detects falls using three-axis accelerometer data. It has low power consumption, good portability, and a small size. The paper lacks discussion of the post-fall condition. Fang et al. [43] presented a fall detection prototype implemented on an Android-based platform. The suggested system consists of three parts: sensing the accelerometer data from the phone’s embedded sensors, learning the link between fall behavior and the acquired data, and sending a message to predefined contacts when a fall is detected. The findings demonstrate a 72.22% sensitivity and a 73.78% specificity for distinguishing falls from human activities such as sitting, walking, and standing. It is a low-cost fall detection system that works with readily purchased products and wireless technologies, eliminating the need to modify hardware, set up the surroundings, or wear extra sensors. It also shows the location of the devices.
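The three-part structure described above (sense, decide, notify) and the reported sensitivity and specificity figures can be made concrete with a small sketch. This is not the algorithm of Fang et al. [43]: the threshold rule, the threshold values, the window format, and every function name below are assumptions chosen for illustration, and the data are synthetic.

```python
import math


def magnitude(sample):
    """Euclidean norm of one (ax, ay, az) accelerometer sample, in units of g."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)


def detect_fall(window, free_fall_g=0.5, impact_g=2.5):
    """Flag a window if a low-g (free-fall) sample is later followed by a high-g impact.

    Both thresholds are illustrative values, not taken from the cited work.
    """
    saw_free_fall = False
    for sample in window:
        m = magnitude(sample)
        if m < free_fall_g:
            saw_free_fall = True
        elif saw_free_fall and m > impact_g:
            return True
    return False


def notify_contacts(contacts, send):
    """Call the supplied send(contact, message) function for each predefined contact."""
    for contact in contacts:
        send(contact, "Possible fall detected")


def sensitivity_specificity(predicted, actual):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    fn = sum(1 for p, a in pairs if not p and a)
    tn = sum(1 for p, a in pairs if not p and not a)
    fp = sum(1 for p, a in pairs if p and not a)
    sens = tp / (tp + fn) if (tp + fn) else float("nan")
    spec = tn / (tn + fp) if (tn + fp) else float("nan")
    return sens, spec


if __name__ == "__main__":
    # Synthetic windows: walking-like data and a free-fall-then-impact pattern.
    walking = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.2), (0.0, 0.0, 0.9)]
    falling = [(0.0, 0.0, 1.0), (0.0, 0.0, 0.3), (0.5, 0.2, 3.0), (0.0, 1.0, 0.0)]
    if detect_fall(falling):
        notify_contacts(["caregiver"], lambda who, msg: print(who, msg))
    predictions = [detect_fall(w) for w in (walking, falling)]
    print(sensitivity_specificity(predictions, [False, True]))
```

Sensitivity measures the share of real falls that are caught, and specificity the share of ordinary activities that are correctly left unflagged, so both are needed to judge a detector that must avoid missed falls as well as false alarms.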

2.6. Sound Analysis-Based

Al Mamun et al. [47] proposed a cloud-based framework that lets patients in rural areas obtain instructions from doctors by sending voice recordings via cloud-based systems. Doukas et al. [48] proposed an architecture to detect falls via fall sounds and patient motion. Falls can be detected from the voice of the person who falls, and a notification can be sent in an emergency. The main drawback is that the system uses sensors affixed to the body. M. Pham et al. [49] proposed a smart home plan to monitor a patient’s activity and give a proper report to a caregiver. The ideal home setup helps to collect appropriate data for processing. However, it does not provide any help in the event of an emergency.

2.7. Biomedical-Based

In [53], researchers proposed a model-based fall discrimination method that uses a microwave Doppler sensor. They modeled human falls mathematically in their simulation and compared the measurement waveform and the observed waveform to detect falls with high accuracy. Thome et al. [54] proposed an application for automatic fall detection and future pervasive health monitoring. A Layered Hidden Markov Model (LHMM) helps to solve the inference problem. It is robust to low-level step errors and extracts features independently. One problem is that there is no collaboration between points of view. Hwang et al. [55] developed an approach for detecting falls that uses signals acquired from a system attached to the chest. It is specifically designed for long-term, ongoing ambulatory surveillance of seniors in crisis situations and for real-time monitoring that can distinguish falls from daily activity. Its use is limited because Bluetooth has a small range.

2.8. Kinematic-Signal-Based

Hidden Markov Models (HMMs) have been successfully applied in fall detection systems because they can model temporal dependencies in sensor data and handle noisy or incomplete sensor readings. HMM-based fall detection systems have shown promising results in accurately detecting falls and reducing false alarms. Ref. [58] provides a comprehensive overview of the HMM and its applications in human activity recognition and fall detection. The authors discuss the strengths and weaknesses of the HMM approach and highlight several key studies that demonstrate its effectiveness. Ref. [59] provides a comprehensive review of the latest techniques in biosignal processing and activity modeling for multimodal human activity recognition. The authors discuss the challenges and opportunities in this field and highlight several key studies that demonstrate the effectiveness of these techniques, making the paper a valuable resource for researchers interested in developing advanced multimodal activity recognition systems. The researchers in [60] presented a recent method for detecting and recognizing falls using HMMs. This technique is straightforward, easy to understand, and applicable to various scenarios, resulting in significant enhancements in machine learning (ML) efficiency.
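How an HMM captures the temporal structure of a fall can be sketched with Viterbi decoding, the standard algorithm for recovering the most likely hidden state sequence. The example below is not taken from [58], [59], or [60]: the three states, the discretized acceleration symbols, and all probabilities are hypothetical values chosen only to show the mechanics.

```python
import math


def _log(p):
    """Logarithm that maps a zero probability to negative infinity."""
    return math.log(p) if p > 0 else float("-inf")


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden state sequence for the observations."""
    if not observations:
        raise ValueError("observations must not be empty")
    # best[t][s]: log-probability of the best path that ends in state s at time t.
    best = [{s: _log(start_p[s]) + _log(emit_p[s][observations[0]]) for s in states}]
    back = [{}]
    for obs in observations[1:]:
        scores, pointers = {}, {}
        for s in states:
            prev, score = max(
                ((p, best[-1][p] + _log(trans_p[p][s])) for p in states),
                key=lambda item: item[1],
            )
            scores[s] = score + _log(emit_p[s][obs])
            pointers[s] = prev
        best.append(scores)
        back.append(pointers)
    # Backtrack from the best final state.
    path = [max(states, key=lambda s: best[-1][s])]
    for pointers in reversed(back[1:]):
        path.append(pointers[path[-1]])
    path.reverse()
    return path


# Hypothetical model: hidden posture states, observed acceleration-magnitude bins.
STATES = ("upright", "falling", "lying")
START_P = {"upright": 0.9, "falling": 0.05, "lying": 0.05}
TRANS_P = {
    "upright": {"upright": 0.9, "falling": 0.1, "lying": 0.0},
    "falling": {"upright": 0.0, "falling": 0.3, "lying": 0.7},
    "lying": {"upright": 0.05, "falling": 0.0, "lying": 0.95},
}
EMIT_P = {
    "upright": {"low": 0.05, "normal": 0.9, "high": 0.05},
    "falling": {"low": 0.45, "normal": 0.1, "high": 0.45},
    "lying": {"low": 0.1, "normal": 0.85, "high": 0.05},
}

if __name__ == "__main__":
    symbols = ["normal", "normal", "low", "high", "normal", "normal"]
    print(viterbi(symbols, STATES, START_P, TRANS_P, EMIT_P))
```

Because the transition table forbids jumping directly from "upright" to "lying", an isolated noisy reading is less likely to be decoded as a fall than a low-then-high pattern followed by rest, which is one way an HMM can reduce false alarms.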

3. Datasets of Fall Detection

Researchers have created various types of datasets for the detection and analysis of falls. Among them, the tFall, UMAFall, UPFall, MobiFall, and DLR datasets are the most prominent. The researchers of [67] developed the UP-Fall Detection dataset, a public multimodal dataset for fall detection. Studies on modality techniques for fall detection and classification are needed. They also offered a fall detection system that relies on a 2D CNN inference approach and multiple cameras to examine images in defined time frames, extracting features with an optical flow method that gathers information on the overall velocity between two successive images. When compared to state-of-the-art approaches using a simple CNN network architecture, the suggested multi-vision-based methodology identifies human falls with an accuracy of 95.64%. The researchers of [68] studied acceleration-based fall detection using peripheral accelerometers or cell phones. Many studies utilize this unique dataset, which has gathered data on various situations. The system uses two distinct classification algorithms and evaluates the datasets either as raw values or as modified values so that the baselines have equivalent circumstances. The researchers use the tFall, MobiFall, and DLR datasets and test them with multiple ML algorithms to see which one gives better accuracy. Among them, the tFall dataset gave the best accuracy. In [69], researchers described UMAFall as a novel dataset of movement traces obtained by the methodical simulation of a set of preset ADLs, including falls. The UMAFall dataset uses five wearable sensors placed at five separate points on the subjects’ bodies to record motion. It covers three types of falls, traced from the movements of 17 experimental subjects. The traces contain accelerometer, gyroscope, and magnetometer data collected concurrently from four Bluetooth-enabled sensor motes, as well as signals sampled by an accelerometer incorporated in a smartphone, which serves as the data sink for a wearable wireless sensor network. The study of [70] introduces a dataset of human activity that can be used to evaluate novel concepts and make impartial comparisons between various algorithms for fall detection and activity recognition based on smartphone inertial sensor data. The dataset includes signals captured from a smartphone’s accelerometer and gyroscope sensors for four kinds of falls and nine different everyday activities. The problem with this dataset is that the “inactivity” time could not be validated; therefore, only a brief amount of data is left after a given activity. Based on variations in the geometry of human silhouettes in vision monitoring, the research of [34] suggested a novel method for accurately identifying fall events. The curvelet transformation and area ratios are used to identify human posture in images.

This entry is adapted from the peer-reviewed paper 10.3390/s23115212