Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is an old version of this entry, which may differ significantly from the current revision.

Subjects:

Robotics

Unmanned aerial vehicles (UAVs) can experience significant performance issues during flight due to heavy CPU load, affecting their flight capabilities, communication, and endurance.

- sensor

- SLAM

- lightweight

- UAV system

1. Introduction

With the continuous advancement of modern technology, the application range of UAVs is becoming increasingly widespread. In recent years, UAVs have been appearing more frequently in people’s sight. During UAV flights, surveying tasks need to be completed in preparation for future navigation inspections. The SLAM process in the UAV system requires high real-time performance, especially in terms of CPU information processing speed, which has become the main research direction at present. Based on the type of sensor used, SLAM systems can be categorized as visual SLAM, lidar SLAM, and multi-sensor fusion SLAM.

The camera offers abundant visual information at a low cost and in a compact form factor, enabling robot localization and navigation. However, purely visual SLAM performance often deteriorates in low-textured environments. To address this issue, researchers have combined points with other geometric entities such as lines as lines [1] or planes [2]. In human-made environments, a pose-graph optimization strategy can be used to take advantage of structural constraints such as parallelism or orthogonality of walls. Another well-known approach for reducing rotation drift is to adopt the Manhattan World (MW) assumption [3]. However, most of the methods discussed above use RGB-D cameras in human-made environments, which may not be universally applicable. Moreover, the accuracy of the system depends heavily on the estimation of the ground plane and Manhattan Axes (MA). Recently, with the development of deep learning, a combined system of SLAM and a Convolutional Neural Network (CNN) has emerged. In [4], a table retrieval method is proposed for data association and loop closure using semantic information in a dynamic environment. Each landmark is associated with its own semantic and location information to improve the accuracy of the system.

To address the limitations of purely visual SLAM, the fusion of vision and IMU data have become mainstream. The IMU is primarily used to measure acceleration and rotational motion, providing high-frequency and outlier-free inertial measurements. However, the IMU’s long-term operation may result in significant accumulated drift, which necessitates the initialization of all IMU parameters and real-time optimization in the later stages. This is critical for visual–inertial odometry (VIO) and visual–inertial SLAM systems, and researchers are actively seeking ways to quickly complete the initialization of the IMU and suppress its noise and bias. Currently, the initialization methods of VIO systems can be broadly categorized into two main approaches: loosely coupled [5,6] and tightly coupled [7]. The loosely coupled approach involves separate initialization processes for the IMU and the camera, followed by minimizing the distance between their poses. In VINS-Mono [5], keyframes and map points are initially obtained using visual odometry, and IMU parameters are optimized through aligning the IMU pre-integrated rotation with visual measurements by covariance propagation of the error term. However, this approach estimates the velocity as an unknown variable and overlooks the accelerometer bias, leading to incomplete initialization information. On the other hand, ORB-SLAM3 [6] is a visual–inertial tightly coupled system that employs MAP estimation to estimate scale, gravity direction, biases, and velocity during IMU initialization, while the tightly coupled approach directly establishes constraints between the camera and IMU during the initialization process to optimize various parameters. OpenVINS [7] is a tightly coupled initialization approach that leverages camera poses to establish visual constraints, enabling the estimation of initial velocity, gravity, and three-dimensional coordinates of feature points. Subsequently, multiple-frame velocity and position relationships are obtained through first-order and second-order integration, respectively. BASALT [8] employs a two-level SLAM system that optimizes the noise and bias of the IMU in both stages. In contrast to other systems, it does not directly utilize the pre-integrated IMU measurements in the mapping stage. Instead, it extracts short-term visual–inertial tracking information from the marginalized information of the VI-odometry stage. This approach not only reduces the dimensionality of the global optimization problem but also enhances the accuracy of the optimization results. GVINS [9] employs a coarse-to-fine approach to initialize GNSS visual–inertial states using MAP estimation and integrates their raw measurements within a probabilistic framework. It is capable of providing drift-free 6-DoF global pose estimation in complex environments where GNSS signals may be obstructed or entirely unavailable.

One of the challenges in developing SLAM systems is ensuring algorithm robustness and real-time performance while working with limited computing resources, such as cheap and low-performance processors. This is especially important for battery-powered robots, where computational efficiency is crucial for extending the robot’s endurance. To address these challenges, researchers have proposed various approaches. For example, Ref. [10] uses a direct method to initialize the system and tracks non-keyframes for state estimation at the front-end. At the back-end, sliding window and marginalization are adopted to limit the number of keyframes and perform nonlinear optimization. Similarly, FastORB-SLAM [11] tracks keypoints between incoming frames without computing descriptors, exploiting motion smoothness and constraints on epipolar geometry to refine the correspondence. ORB-SLAM2S [12] includes a lightweight front-end that uses a sparse optical flow method for non-keyframes and descriptors, achieving faster speed performance compared to ORB-SLAM2. However, these methods often replace ORB features with direct methods and optical flow methods to track feature points, which can lead to reduced system accuracy if feature points are not extracted properly.

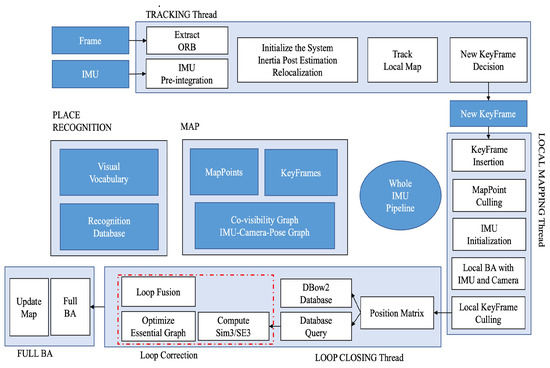

Our laboratory is developing an inspection robot that relies on the Robot Operating System (ROS) and object detection algorithms to achieve mapping and monitoring. Endurance and real-time tracking are key factors for inspection robots. Therefore, we propose a lightweight stereo-inertial SLAM based on nonlinear optimization and feature tracking, which achieves fast tracking, better robustness, and a lower CPU load. The overall system architecture is shown in Figure 1. Our three main contributions focus on speeding up tracking, reducing CPU consumption, and maintaining system accuracy. The main contributions are summarized as follows:

Figure 1. The whole frame of system.

-

A coarse-to-fine optimization approach. Coarse optimization is for faster IMU initialization to replace the constant velocity model and speed up the tracking process, while fine optimization ensures localization accuracy.

-

A novel visual–inertial pose graph as an observer decides which variables need to be optimized to prevent over-optimization.

-

Fusion of IMU data with loop closure to further reduce CPU load.

2. A Lightweight UAV System

In recent years, visual SLAM has gained increasing attention from researchers due to advancements in sparse nonlinear optimization theory and computer performance. Most visual SLAMs rely on point features and MAP estimation because of their general applicability. In the feature-based method, the system’s robustness and localization accuracy are improved by minimizing the feature reprojection error, while photometric Bundle Adjustment (BA) is used to optimize the pose by minimizing the photometric error of a set of pixels in the direct method. Cameras provide rich visual information at low cost. However, point features have several drawbacks. First, point features extracted by vision sensors are highly sensitive to environmental conditions and fail to track when the texture is poor or the image is blurred. Moreover, they are vulnerable to illumination changes. Finally, point features are sparse, making them challenging to use in robot path planning.

SLAM involves three types of data association [13]: short-term data association for feature point tracking, medium-term data association for bundle adjustment in local maps, and long-term data association for loop closure. This approach is followed by most current visual SLAM systems. Nonlinear optimization methods have been shown to have better accuracy than filtering, so the current mainstream approach is to select representative frames (keyframes) for input into backend optimization. The keyframe-based approach provides better accuracy with less computation, and has become an important standard for current SLAM systems. PTAM [14] is a representative system for keyframes, with two parallel threads for camera pose tracking and mapping to achieve short-term and medium-term data association. ORB-SLAM [15,16] has three parallel threads for tracking, local mapping, and loop closing, representing short-term, medium-term, and long-term data association, respectively. ORB features are used for short-term data association to compute the pose between frames. Medium-term data association uses keyframes and map points to minimize reprojection errors with bundle adjustment, while the loop closure thread uses the bag-of-words library DBoW2 [17] for long-term data association. These methods have greatly improved the accuracy of ORB-SLAM.

Multi-sensor fusion systems can significantly improve state estimation accuracy and robustness due to the complementarity between sensors. Adding an IMU can solve the problem of scale in monocular SLAM, where the image frame lacks depth information of the environment. Most visual–inertial fusion SLAM systems are tightly coupled and classified as either filter-based or optimization-based systems. The earliest multi-sensor fusion SLAM, MSCKF [18], relies on the feature method and adopts the EKF filtering method for optimization, adding camera poses at different times to the state vector. On the other hand, OKVIS [19] is the most representative system based on the nonlinear optimization method and uses keyframes, relying on the error propagation model to optimize the inertial. Some previous methods, such as [20,21], have limitations in their solution process or initialization scale accuracy. Recently, Ref. [22] proposed a robust stereo inertial odometry based on self-supervised feature points, using an improved multi-task CNN to extract feature points and incorporating an IMU to deal with rapid camera movements.

This entry is adapted from the peer-reviewed paper 10.3390/drones7060338

This entry is offline, you can click here to edit this entry!