An increasing number of people convey their opinions through multiple modalities. For opinion mining, sentiment classification based on multimodal data has therefore become a major focus. Sentiment analysis at the document level aims to identify the opinion on a main topic expressed by a whole document.

- document-level multimodal sentiment classification

- graph convolutional networks

1. Introduction

Sentiment analysis at the document level aims to identify the opinion on a main topic expressed by a whole document. Instead of determining sentiment at the sentence or aspect level, document-level sentiment analysis (DLSA) extracts the overall sentiment of the whole document. Driven by commercial demand, DLSA based on deep learning algorithms is currently widely employed to analyze online product reviews [1]. That is, the general sentiment toward a product or service is captured directly from an overwhelming abundance of textual data and classified as positive, neutral or negative [2]. As such, DLSA delivers opinions in a form that directly facilitates product recommendation and sales prediction [3].

Typically, DLSA focuses mainly on textual information. With the rise of deep neural networks, researchers have exploited a variety of methods to extract textual features and capture the context information in a document. Zhou et al. use a convolutional neural network (CNN) to extract a sequence of higher-level phrase representations and feed them into a long short-term memory recurrent neural network (LSTM) to obtain the sentence representation [4]. Yang et al. propose a hierarchical attention network that extracts features at both the word and sentence levels to construct the document representation [5]. DLSA models based on graph neural networks have also been developed [6]: a graph is built for each input text, which allows significant local features to be extracted while reducing memory consumption.
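As an illustration of how a per-text graph can be built, the following Python sketch links words that co-occur within a sliding window of a single document. This is one common construction and an assumption here; the exact graph scheme of [6] is not described in this entry. The function name `build_text_graph` and its `window` parameter are hypothetical. Because the nodes come only from the input text rather than from the whole corpus, the graph grows with the length of the text, which is one way such models can keep memory consumption low.

```python
from collections import defaultdict


def build_text_graph(tokens, window=3):
    """Hypothetical helper: word co-occurrence graph for ONE text.

    Nodes are the unique tokens of this text only (not the whole corpus).
    Two tokens are linked when they appear fewer than `window` positions
    apart; the edge weight counts how many times that happens.
    """
    nodes = sorted(set(tokens))
    edges = defaultdict(int)
    for i, left in enumerate(tokens):
        # Compare each token with the next (window - 1) tokens.
        for j in range(i + 1, min(i + window, len(tokens))):
            right = tokens[j]
            if left != right:
                # Store each undirected edge under a sorted key.
                edges[tuple(sorted((left, right)))] += 1
    return nodes, dict(edges)


tokens = "the battery is great and the screen is great".split()
nodes, edges = build_text_graph(tokens, window=3)
print(len(nodes))              # 6
print(edges[("great", "is")])  # 2
```

In a graph neural network, node features (e.g., word embeddings) would then be propagated along these edges; that step is outside the scope of this sketch.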

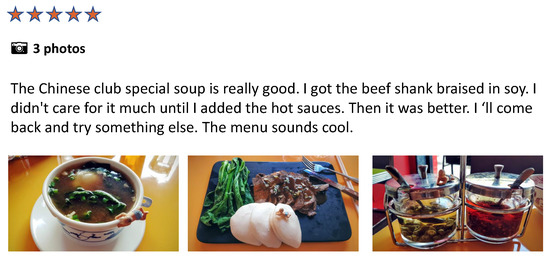

More recently, the widespread use of smartphones has given rise to more opportunities to express opinions via different modalities (i.e., textual, acoustic and visual modalities). On social media, the text and the image are generally taken to mutually reinforce and complement each other; see Figure 1. For this reason, there is an ongoing trend to devise document-level multimodal sentiment analysis (DLMSA) methods that tackle multimodal information. In practice, the major challenge of DLMSA models lies in aligning and fusing textual and visual information using data of distinguishing format and structure. On the task of multimodal sentiment analysis, Zadeh et al. work on computing the outer product between modalities to characterize the multimodal relevance [7]. However, this scheme greatly increases the feature vector dimension, which results in the difficulty and complexity of model training. Furthermore, recent publications report the multimodal fusion at the feature level. Truong et al. consider visual information as a source of alignment at the sentence level and assign more attention to image-related sentences [8]. In addition, Du et al. use image features to emphasize the text segment by the attention mechanism and take a gating unit to retain valuable visual information [9].

Figure 1. An example of multimodal review.
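To make the gating idea concrete, the Python sketch below shows one common form of gated fusion: an element-wise sigmoid gate, computed from the concatenated text and image features, decides how much of each visual feature to keep. It is an assumption for illustration, not necessarily the formulation used by Du et al. [9]. The names `gated_fusion`, `gate_weights` and `gate_bias` are hypothetical, the weights would normally be learned, and both feature vectors are assumed to have already been projected to the same dimension.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(text_vec, visual_vec, gate_weights, gate_bias):
    """Hypothetical gated fusion of same-sized text and visual vectors.

    gate_weights holds one row per output dimension; each row scores the
    concatenated [text; visual] vector. The gate g in (0, 1) mixes the two
    modalities: g near 1 keeps the visual feature, g near 0 keeps the text one.
    """
    joint = list(text_vec) + list(visual_vec)
    fused, gates = [], []
    for k, (row, bias) in enumerate(zip(gate_weights, gate_bias)):
        g = sigmoid(sum(w * x for w, x in zip(row, joint)) + bias)
        gates.append(g)
        fused.append(g * visual_vec[k] + (1.0 - g) * text_vec[k])
    return fused, gates


# Untrained, illustrative parameters: zero weights give g = 0.5 everywhere.
text, image = [1.0, 3.0], [3.0, 5.0]
fused, gates = gated_fusion(text, image, [[0.0] * 4, [0.0] * 4], [0.0, 0.0])
print(fused)  # [2.0, 4.0]
```

With zero weights the gate sits at 0.5 and the output is the average of the two vectors; a large positive bias drives the gate toward 1, so the visual features pass through almost unchanged.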

2. Document-Level Sentiment Analysis

Sentiment analysis is a major research topic in natural language processing that has attracted increasing attention. It determines the sentiment polarity of, or predicts sentiment scores for, a given text. With the growth of social media, massive amounts of user-generated text have become accessible, which has further promoted research in sentiment analysis [10].

In general, a document consists of multiple sentences. Once limited by conventional processing methods, DLSA has progressed greatly with advances in deep learning algorithms, and a variety of DLSA approaches based on deep neural networks have been reported [11,12]. Chen et al. train a CNN on pre-trained word vectors for sentence-level sentiment analysis and achieve satisfactory performance [11]. Lai et al. propose an integrated model that combines the strengths of recurrent neural networks (RNNs) and CNNs [13]: the context information is captured by RNNs, while the document representation and the local features of sentences are derived by CNNs. RNN-based methods integrated with attention mechanisms also have distinct advantages in DLSA [14,15]. Among these, hierarchical networks, which process text at both the word and sentence levels, are the most prominent. Yang et al. build a hierarchical attention network that applies attention mechanisms at the word and sentence levels, respectively, so that more valuable information is fused into each document representation [5]. Huang et al. develop a hierarchical multi-attention network to accurately assign attention weights at different levels [16]. Huang et al. [17] build a hierarchical hybrid neural network with multi-head attention to extract the global and local features of each document. Because each sentence contributes differently to the sentiment polarity, Choi et al. propose a gating-mechanism-based method to identify the importance of each sentence in a document [18]. Overall, there is an ongoing trend to model a document based on its hierarchical structure and thus extract document features more precisely [19,20].
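The word-to-sentence-to-document pooling behind hierarchical attention networks can be sketched in a few lines of Python. This is a simplified sketch under stated assumptions: word vectors are given as plain lists, attention scores are plain dot products with a context vector, and the recurrent encoders and learned projection layers of Yang et al. [5] are omitted. The function names and the context vectors (learned parameters in a real model) are hypothetical.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(vectors, context):
    """Weight each vector by softmax(dot(vector, context)) and sum them."""
    scores = [sum(x * c for x, c in zip(vec, context)) for vec in vectors]
    weights = softmax(scores)
    dim = len(vectors[0])
    pooled = [sum(w * vec[k] for w, vec in zip(weights, vectors)) for k in range(dim)]
    return pooled, weights


def encode_document(document, word_context, sentence_context):
    """Two-level pooling: words -> sentence vectors -> one document vector."""
    sentence_vecs = [attention_pool(sent, word_context)[0] for sent in document]
    return attention_pool(sentence_vecs, sentence_context)


# A toy document: two sentences, each a list of 2-d word vectors.
document = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[3.0, 0.0], [2.0, 0.0]],
]
doc_vec, sentence_weights = encode_document(document, [1.0, 0.0], [1.0, 0.0])
# The second sentence aligns better with the sentence context and gets more weight.
print(sentence_weights)
```

The same `attention_pool` routine is reused at both levels, which mirrors the hierarchical idea: sentences are summaries of their words, and the document is a summary of its sentences.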

3. Document-Level Multimodal Sentiment Analysis

In the multimodal sentiment analysis domain, deep-learning-based methods play a pivotal role. Earlier work tends to directly fuse unimodal features to construct a multimodal representation for sentiment analysis [21,22,23]. In [21,22], feature vectors from different modalities are concatenated for multimodal integration. Soujanya et al. extract textual and visual features using CNNs, concatenate the multimodal features, and classify the sentiment polarity with a multiple kernel learning classifier. However, such approaches fail to model cross-modal interaction [23]. In [7], a Tensor Fusion Network (TFN) is proposed that uses tensor outer products to model the dynamics across modalities. This approach, however, generally results in oversized models that are costly to train.
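The difference in size between concatenation and outer-product fusion can be checked directly. The Python sketch below implements both operators for a text vector and a visual vector; following the TFN design, a constant 1 is appended to each vector before the outer product so that the unimodal features survive alongside every text–visual product. It is restricted to two modalities for brevity (TFN itself fuses three), and the feature sizes 300 and 2048 are illustrative assumptions.

```python
from itertools import product


def concat_fusion(text_vec, visual_vec):
    """Early fusion: place the unimodal features side by side."""
    return list(text_vec) + list(visual_vec)


def outer_product_fusion(text_vec, visual_vec):
    """TFN-style fusion: flattened outer product of [t; 1] and [v; 1].

    The appended 1 keeps every unimodal feature in the output next to
    every pairwise text-visual product.
    """
    t = list(text_vec) + [1.0]
    v = list(visual_vec) + [1.0]
    return [a * b for a, b in product(t, v)]


def fused_sizes(d_text, d_visual):
    """Output length of (concatenation, outer product) for given sizes."""
    return d_text + d_visual, (d_text + 1) * (d_visual + 1)


t, v = [0.5, -1.0, 2.0], [3.0, 0.25]
print(concat_fusion(t, v))              # [0.5, -1.0, 2.0, 3.0, 0.25]
print(len(outer_product_fusion(t, v)))  # 12
print(fused_sizes(300, 2048))           # (2348, 616749)
```

Concatenation grows additively with the feature sizes, whereas the outer product grows multiplicatively: with these illustrative sizes the fused vector has over 600,000 entries, which shows why such models become oversized. Concatenation, in turn, contains no text–visual product terms at all, so any cross-modal interaction must be learned by the downstream classifier.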

More recently, studies have addressed multimodal interaction and information fusion, especially by using attention mechanisms [24,25,26,27]. Amir et al. develop a multi-level attention network that captures multimodal interactions by assuming that different kinds of information interact across modalities [24]. Xu et al. propose a visual-feature-guided attention LSTM model that extracts sentiment-bearing words and aggregates the representation of informative words with visual semantic features (objects and scenes) [25]. Since textual and visual information reinforce and complement each other, Xu et al. construct a co-memory network in which textual and visual information interact iteratively for multimodal sentiment analysis [26]. Similarly, Zhu et al. apply an image–text interaction network that explores the interaction between text and image regions through a cross-modal attention mechanism [27].
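Cross-modal attention of the kind used to relate text to image regions can be sketched as scaled dot-product attention in which each word vector queries the image-region vectors. The sketch below is a generic formulation assumed for illustration rather than taken from [27]: it omits learned query, key and value projections and assumes that word and region features already share a dimension. The function name `cross_modal_attention` is hypothetical.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_modal_attention(word_vecs, region_vecs):
    """Each word (query) attends over image regions (keys and values).

    Scores are dot products scaled by sqrt(d); the output for each word is
    the attention-weighted sum of the region vectors.
    """
    d = len(word_vecs[0])
    attended, all_weights = [], []
    for q in word_vecs:
        scores = [sum(a * b for a, b in zip(q, r)) / math.sqrt(d) for r in region_vecs]
        weights = softmax(scores)
        attended.append([sum(w * r[k] for w, r in zip(weights, region_vecs)) for k in range(d)])
        all_weights.append(weights)
    return attended, all_weights


words = [[1.0, 0.0], [0.0, 1.0]]
regions = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
attended, weights = cross_modal_attention(words, regions)
# Each word gives the largest share of attention to the region aligned with it.
print(weights)
```

The attended vectors are region-aware word representations; a full model would combine them with the original word features before classification.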

However, all of the aforementioned work assumes a one-to-one correspondence between text and images. In practice, for most multimodal samples, such as blog posts and e-commerce reviews, no alignment between the text and the images is given in advance; for example, a single document can contain multiple images. DLMSA is primarily a text-oriented task in which image features are auxiliary to the analysis [8,28]. Instead of being fed directly into sentiment classifiers, visual information is typically used as a source of sentence-level alignment. Truong et al. use pre-trained VGG networks to obtain image features and then apply the visual information as attention over the sentences, so that more focus is assigned to image-related sentences [8]. Guo et al. leverage a set of distance-based coefficients to align images and text and learn sentiment representations of documents for online news sentiment classification [29]. To obtain sentiment-related information, Du et al. propose a method based on a gated attention mechanism [9]: a pre-trained CNN extracts fine-grained image features, and a gated attention network then fuses the image and text representations, yielding better sentiment analysis results.
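Because a document may carry several images, or none, image-guided sentence attention has to handle a variable number of images. The Python sketch below, loosely in the spirit of [8], lets each image score every sentence, builds one image-specific document vector per image, and averages them. The plain dot-product scoring, the averaging over images, the uniform fallback for documents without images, and the name `image_guided_document` are simplifying assumptions; the model in [8] relies on learned parameters instead.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights, vectors):
    dim = len(vectors[0])
    return [sum(w * vec[k] for w, vec in zip(weights, vectors)) for k in range(dim)]


def image_guided_document(sentence_vecs, image_vecs):
    """Hypothetical image-guided pooling of sentence vectors into a document vector.

    Each image acts as an attention query over the sentences, producing one
    image-specific document vector; these are averaged across images.
    Documents without images fall back to a plain average of sentences.
    """
    if not image_vecs:
        return weighted_sum([1.0 / len(sentence_vecs)] * len(sentence_vecs), sentence_vecs)
    per_image = []
    for img in image_vecs:
        scores = [sum(a * b for a, b in zip(img, s)) for s in sentence_vecs]
        per_image.append(weighted_sum(softmax(scores), sentence_vecs))
    return weighted_sum([1.0 / len(per_image)] * len(per_image), per_image)


sentences = [[1.0, 0.0], [0.0, 1.0]]
print(image_guided_document(sentences, []))            # [0.5, 0.5]
print(image_guided_document(sentences, [[5.0, 0.0]]))  # first sentence dominates
```

Since the images only reweight sentences and never replace them, the text remains the primary source of the document representation, in line with the text-oriented view of DLMSA described above.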

This entry is adapted from the peer-reviewed paper 10.3390/math11102335