1. Pressure Management: Controlling Pressure Build-Up and Geomechanical Complications

The commercial-scale deployment of carbon storage involves sequestering large amounts of carbon dioxide in the porous media of a host subsurface structure. The storage space for CO2 is created by the expansion of the formation rock pore space, together with a corresponding reduction in the volume of the native fluid due to compression. Although the storage system formation fluid can be displaced or withdrawn to facilitate the accommodation of injected CO2, pressure build-up in the host formation is still anticipated when deploying carbon storage on a commercial scale.

Depending on their boundary conditions, storage systems are generally classified into three types:

- (1) Closed systems, where the storage formation is surrounded by impervious boundaries and blocked vertically by impervious sealing units.
- (2) Semi-closed systems, where the storage system is enclosed laterally by impervious boundaries but overlain and/or underlain by semi-pervious sealing units.
- (3) Open systems, where the lateral boundaries are too far away to be affected by pressure disturbances [71].

Pressure build-up has a more significant impact on CO2 storage capacity in closed systems than in open and semi-closed systems because there is no pressure bleed-off [71,72,73,74]. While closed systems do not present any environmental risk of brine leakage during CO2 injection, pressure build-up must be kept safely below the maximum pressure that a given formation can tolerate (the fracture pressure) to preserve the mechanical integrity of the storage site against tensile or shear failure of the caprock and/or reactivation of existing fractures and faults [75]. Modeling studies have shown that pressure build-up also limits the storage capacity of open systems: elevated pressure may displace brine into freshwater aquifers through localized pathways, such as leaky faults and wells [57,75,76], which could pose environmental risks. Reservoir pressurization is nevertheless reduced in semi-closed and open storage units by the pressure bleed-off caused by brine migration into semi-sealing units and/or lateral brine displacement [77]. The effective storage capacity of a reservoir is therefore limited not only by the pore volume of the formation rocks but also by the maximum permissible pressure build-up. Szulczewski et al. [78] have shown that the pressure constraint is the principal limiting factor for CO2 storage in the short term, while the available pore space becomes the limiting factor in the long term. The initial pressure build-up in the reservoir depends mainly on formation rock properties such as porosity, permeability, anisotropy, and pore compressibility [79,80]; these parameters are reservoir-dependent and cannot be controlled [69].

Therefore, it is essential to formulate a technically and economically feasible development plan for CO2 storage, based on site characteristics, that optimizes the CO2 injection rate and maximizes the efficiency of long-term storage while keeping pressure build-up below the cut-off threshold, which is a fraction (~90%) of the fracture pressure of the host formation. This avoids unwanted geomechanical complications [81].

To mitigate these issues and comply with this pressure management policy, several researchers have proposed development schemes that extract brine from the aquifer to control pressure build-up at saline aquifer storage sites and potentially increase the amount of CO2 that can be effectively injected. For example, Court et al. [61] and Buscheck et al. [62] demonstrated in synthetic models the significant benefits of pressure control through brine production. They showed that brine production had no significant effect on the shape of the CO2 plume, as the latter depends on the characteristics of the storage formation. However, brine production has drawbacks: the additional pumping, transportation, treatment, and disposal requirements increase costs. To address this, Birkholzer et al. [81] demonstrated that the amount of brine produced in CO2 storage operations can be reduced through proper placement of the wells and optimization of their rates. Cihan et al. [82] applied this technique to a realistic example of the Vedder Formation in California, USA, minimizing the ratio of produced brine volume to injected CO2 volume. Other researchers have attempted to optimize the CO2 injection schedule in saline aquifers while considering both alternatives (with and without brine production), together with economic profit and constraints requiring that the caprock fracture pressure is not reached at any time or place in the reservoir. Santibanez-Borda et al. [60] developed a novel optimization strategy to maximize CO2 storage and pre-tax revenues in the Cenozoic sandstones of the Forties in the North Sea. They injected CO2 while simultaneously producing brine to control pressure build-up. More details about the optimization method applied and the results obtained are given in Section 3 of the full paper.
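To make the brine-to-CO2 ratio discussed above concrete, the following sketch computes the smallest brine volume that must be produced each year so that the net volume added to a closed system never exceeds its pressure-limited capacity. It is a deliberately simplified volume balance under assumed conditions (volumes at reservoir conditions, uniform pressure), not the well-placement and rate optimization used in the cited studies; the function name and numbers are hypothetical.

```python
def minimum_brine_production(yearly_co2_m3, pressure_limited_capacity_m3):
    """Return the yearly brine volumes (m3) needed to keep the net stored
    volume within the pressure-limited capacity.

    Assumption (not from the source): one unit of produced brine frees one
    unit of volume for CO2, and pressure responds instantly and uniformly.
    """
    net_volume = 0.0          # cumulative injected CO2 minus produced brine
    brine_per_year = []
    for co2_volume in yearly_co2_m3:
        headroom = pressure_limited_capacity_m3 - net_volume
        # Produce only what is needed to stay at or below the capacity.
        brine = max(co2_volume - headroom, 0.0)
        net_volume += co2_volume - brine
        brine_per_year.append(brine)
    return brine_per_year


if __name__ == "__main__":
    # Hypothetical schedule: 2e6 m3 of CO2 per year for five years.
    schedule = [2.0e6] * 5
    brine = minimum_brine_production(schedule, pressure_limited_capacity_m3=6.0e6)
    ratio = sum(brine) / sum(schedule)
    print("Brine produced per year:", brine)
    print(f"Brine-to-CO2 volume ratio: {ratio:.2f}")
```

No brine needs to be produced while headroom remains; once the capacity is reached, every additional unit of CO2 requires an equal unit of brine, which is the cost that well placement and rate optimization aim to reduce.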

2. Geological Storage Security: Improving Residual and Solubility Trapping

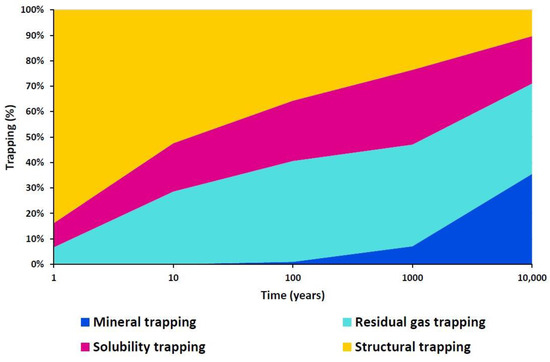

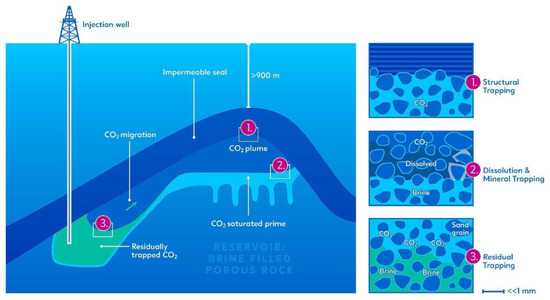

To achieve geological storage, CO2 is typically injected as a supercritical fluid beneath a confining geological formation at depths greater than 800 m. A combination of physical and chemical trapping mechanisms then comes into play, each effective over different time intervals and scales [72,73] (Figure 1). Physical trapping occurs when CO2 is stored as a free gas or supercritical fluid and can be classified into two mechanisms: static trapping in stratigraphic and structural traps, and residual trapping in the pore space at irreducible gas saturation. Additionally, CO2 can be trapped by dissolution in subsurface fluids (solubility trapping) and may participate in chemical reactions with the rock matrix, leading to mineral trapping [83].

Figure 1. Trapping mechanisms scale over time intervals.

Since the primary purpose of carbon storage is to provide permanent, long-term underground CO2 storage, the safety and security of each CO2 trapping mechanism encountered in underground formations must be considered. Storage security increases as CO2 mobility decreases, so mechanisms that immobilize CO2 have been extensively researched as a way to reduce the risk of leakage through potential outlets in the formation (e.g., fractures, faults, and abandoned wells) [84]. Solubility, residual, and mineral trapping are considered safe mechanisms. Solubility trapping is safe because CO2 dissolved in brine (or oil) is unlikely to come out of solution unless a significant pressure drop occurs at the storage site. Furthermore, when CO2 dissolves in brine, the density of the CO2–brine solution increases, resulting in convective mixing, which counteracts buoyant CO2 flow toward the caprock [85,86,87]. Residual trapping is also recognized as a safe mechanism, as it is the fastest way to remove CO2 from its free phase, with timescales ranging from a few years to a few decades [88,89,90,91]. As the CO2 plume migrates through the formation, its front and tail undergo saturation-dependent drainage and imbibition processes, leaving residual gas trapped in the rock pore spaces. Over timescales of hundreds of years, dispersion, diffusion, and dissolution are expected to diminish the residual CO2 concentration [92]. Mineral trapping occurs when dissolved CO2 combines with metal cations, mainly Ca, Fe, and Mg, resulting in the precipitation of carbonate minerals. The effectiveness of this type of trapping is determined by several factors, including the availability of metal cations derived from non-carbonate minerals in the formation brine, the rate of carbonate and non-carbonate mineral dissolution in the presence of CO2 and the resulting solution pH, and the conditions necessary for secondary mineral precipitation, such as the degree of supersaturation and the availability of nucleation sites [93]. Mineral trapping is generally considered the most stable and secure of the four mechanisms but is very slow in typical sedimentary rocks, with timescales of centuries to millennia [94].
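The dependence of residual trapping on the saturation reached during drainage is often approximated with Land's empirical trapping relation. The source does not name a specific trapping model, so the sketch below is an assumption introduced purely for illustration; the endpoint saturations used in the example are hypothetical.

```python
def land_coefficient(max_initial_gas_saturation, max_residual_gas_saturation):
    """Land coefficient C from the maximum initial and maximum residual
    gas saturations (both as fractions of pore volume)."""
    return 1.0 / max_residual_gas_saturation - 1.0 / max_initial_gas_saturation


def residual_gas_saturation(initial_gas_saturation, land_c):
    """Residual (trapped) gas saturation after imbibition, using Land's
    relation S_gr = S_gi / (1 + C * S_gi)."""
    if not 0.0 <= initial_gas_saturation <= 1.0:
        raise ValueError("saturation must be between 0 and 1")
    return initial_gas_saturation / (1.0 + land_c * initial_gas_saturation)


if __name__ == "__main__":
    # Hypothetical endpoints: at most 0.8 gas saturation after drainage,
    # of which at most 0.4 stays trapped after imbibition.
    c = land_coefficient(0.8, 0.4)
    for s_gi in (0.2, 0.5, 0.8):
        s_gr = residual_gas_saturation(s_gi, c)
        print(f"S_gi = {s_gi:.2f} -> S_gr = {s_gr:.3f}")
```

Under this relation, regions swept to higher CO2 saturation retain more residual gas, which is one reason injection strategies that spread the plume through more of the pore space tend to increase residual trapping.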

Figure 2 provides a schematic microscopic view of the porous medium, illustrating the primary trapping mechanisms encountered in a storage formation.

Figure 2. A schematic microscopic view of the CO2 storage mechanisms in porous media (reproduced from Stephanie Flude, CC BY).

Residual gas, dissolution, and, in particular, mineral trapping contribute relatively little to underground CO2 storage compared with structural trapping over a typical operational injection period of 30 years [88,95,96]. Although mineralization is the safest trapping mechanism, it is a very slow chemical process that can take hundreds to thousands of years, whereas solubility and residual trapping are low-risk mechanisms that act over shorter timescales. Therefore, the CO2 injection schedule and storage development plan should be designed to enhance these two mechanisms. This policy is essential to ensure the safety and security of long-term underground CO2 containment.

The main difficulty in implementing this policy is that the predominantly upward, buoyancy-driven migration of CO2 limits the lateral contact of the CO2 plume with fresh brine in the aquifer. Most studies address this problem through different injection strategies, often combined with computational optimization. Leonenko and Keith [97] investigated the role of brine injection on top of a CO2 plume in accelerating the dissolution of CO2 in formation brines. They found that such a technique could significantly improve CO2 dissolution at the aquifer scale and concluded that reservoir engineering techniques could be used to increase storage efficiency and possibly reduce the risk of leaks at a relatively low cost. Kumar [98] applied an optimization approach to minimize structurally trapped CO2 in heterogeneous two-dimensional models by simulating 10 years of injection and 200 years of equilibration. In one example, the optimization decreased the likelihood of structural trapping by 43% compared to the base case. Similarly, Hassanzadeh et al. [99] studied the introduction of brine injectors into saline aquifers and showed that brine injection increased the rate of CO2 dissolution, enhancing the dissolution trapping mechanism. They also proposed a reservoir engineering technique to optimize the location of the brine injectors to maximize the injection rates. In 2009, Nghiem et al. [100] found that optimal control of a water injector placed above a CO2 injector enhances residual and solubility trapping. In a 2010 study, Nghiem et al. [101] optimized the location and operating conditions of a water injection well located above the CO2 injector and applied a bi-objective optimization approach to quantify the trade-off between residual and dissolution trapping. In turn, Shafaei et al. [102] studied the co-injection of CO2 and brine into the same well, with brine injected through the well's tubing and CO2 through the annular space between the tubing and the casing, which makes injection achievable at lower wellhead pressure and thus reduces compression costs.

Rasmusson et al. [103] found that the simultaneous injection of CO2 and brine, as well as the use of a water-alternating-gas (WAG) scheme, had a beneficial effect on both residual and solubility trapping. In a recent study, Vo Thanh et al. [104] compared continuous CO2 injection with a WAG injection scheme and demonstrated that WAG was more effective than continuous injection in enhancing both trapping mechanisms. Their analysis accounted for reservoir heterogeneity by running the WAG scenarios on 200 geological realizations of the aquifer. Other proposed methods to reduce the amount of CO2 stored as a buoyant phase and eliminate the risk of buoyant migration involve surface dissolution, in which CO2 is dissolved at the surface in brine extracted from the storage formation and the CO2-saturated brine is then injected back into the storage system [105]. Other studies on surface dissolution have sought an optimal design of the injection and extraction strategy [106]. It should also be noted that surface dissolution reduces the degree of pressure build-up during injection and eliminates the displacement of brine [105,106]. Therefore, optimizing CO2 injection schedules and well control strategies and implementing an effective storage development plan are critical for enhancing long-term containment and storage security through improved solubility and residual trapping.
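A WAG scheme of the kind compared above is, in practice, specified as an alternating sequence of CO2 and water slugs. The sketch below builds such a schedule as plain data that could be passed to a simulator input writer. The slug lengths, rates, and names (`InjectionStage`, `build_wag_schedule`) are hypothetical and do not come from the cited studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InjectionStage:
    start_year: float
    end_year: float
    fluid: str        # "CO2" or "water"
    rate: float       # injection rate in user-chosen units


def build_wag_schedule(total_years, co2_slug_years, water_slug_years,
                       co2_rate, water_rate):
    """Build an alternating CO2/water injection schedule, starting with CO2.

    The final slug is truncated so the schedule ends exactly at total_years.
    """
    if min(total_years, co2_slug_years, water_slug_years) <= 0:
        raise ValueError("durations must be positive")
    stages = []
    time = 0.0
    injecting_co2 = True
    while time < total_years:
        slug_length = co2_slug_years if injecting_co2 else water_slug_years
        end = min(time + slug_length, total_years)
        stages.append(InjectionStage(
            start_year=time,
            end_year=end,
            fluid="CO2" if injecting_co2 else "water",
            rate=co2_rate if injecting_co2 else water_rate,
        ))
        time = end
        injecting_co2 = not injecting_co2
    return stages


if __name__ == "__main__":
    for stage in build_wag_schedule(3.0, 1.0, 0.5, co2_rate=1000.0, water_rate=400.0):
        print(stage)
```

Treating the slug lengths and rates as the decision variables is what turns a WAG design into an optimization problem; continuous injection is the limiting case in which the CO2 slug spans the whole injection period.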

3. CO2-EOR Carbon Storage Compliance: Joint Co-Optimization

Traditionally, CO2-EOR operations focus on maximizing oil production, with CO2 storage considered a secondary priority due to the cost–benefit imbalance of purchasing CO2 for enhanced oil recovery projects. Oil and gas operators seek to reduce the amount of CO2 needed to sweep the reservoir, which leads to technical conflicts when designing operating parameters that can simultaneously achieve high recovery and high CO2 sequestration. While carbon storage has become an additional revenue source for operators under prevailing carbon tax and tax-credit regimes, such as 45Q in the United States, studies have shown that the tangible economic benefits of EOR often outweigh those of carbon storage, especially given the costs and challenges of implementing carbon sequestration at a commercial level, so the conflict is usually resolved in favor of oil recovery [107,108]. As a result, design parameters for CO2-EOR+ projects must be redefined to maximize permanent underground CO2 storage at the end of the field lifecycle without sacrificing additional oil revenue. To achieve this goal, it is necessary to adopt a co-optimization engineering policy, meaning that the design parameters of a given storage site development plan (e.g., the carbon injection strategy) must be selected to achieve the desired oil recovery while ensuring the best achievable amount of carbon stored underground.

Many technical studies address the co-optimization of oil recovery and CO2 sequestration in CO2-flooding EOR processes, in which the conflict is resolved as a trade-off. In 2000, Malik and Islam [109] used reservoir modeling to study various phenomena affecting oil recovery and carbon sequestration in the Weyburn field in Canada. They discussed the technical conflicts involved in achieving optimal operating conditions for the simultaneous objectives of higher oil recovery and higher CO2 storage and proposed the use of horizontal injection wells to jointly optimize both objectives. Jessen et al. [110] also highlighted the need for proper design of the injection gas composition and well completion to jointly optimize oil recovery and CO2 storage.

Other researchers have investigated the co-optimization of CO2-EOR and CO2 storage (i.e., CO2-EOR+) by reporting various development strategies and plans that affect oil production, carbon storage, and other outcomes without considering a specific objective function [110,111,112,113,114,115,116,117,118,119,120,121,122]. In the absence of a direct prescription for how to co-optimize CO2-EOR and carbon storage in practice, a second group of studies explicitly maximizes oil recovery, carbon storage, or a weighted combination of the two objectives through the injection strategy [123,124,125,126,127,128,129,130]. A third group of studies accounts for the economics of co-optimization by explicitly maximizing the net present value (NPV) of the project or a related performance parameter [131,132,133,134,135,136,137,138,139,140].
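The two explicit objective types mentioned above, a weighted combination of oil recovery and carbon storage, and project NPV, can be sketched as follows. This is a generic illustration under stated assumptions, not the formulation of any cited study: the weights, prices, storage credit, and discount rate are hypothetical placeholders, and a real study would obtain the yearly volumes from a reservoir simulator.

```python
def weighted_objective(oil_recovery_factor, co2_storage_factor, oil_weight=0.5):
    """Weighted-sum objective combining two normalized (0 to 1) performance measures."""
    if not 0.0 <= oil_weight <= 1.0:
        raise ValueError("oil_weight must be between 0 and 1")
    return oil_weight * oil_recovery_factor + (1.0 - oil_weight) * co2_storage_factor


def project_npv(yearly_oil_bbl, yearly_co2_stored_t, yearly_co2_purchased_t,
                oil_price, co2_purchase_price, storage_credit, discount_rate):
    """Net present value with oil revenue, CO2 purchase cost, and a per-tonne
    storage credit. Cash flows are discounted at the end of each year."""
    if not (len(yearly_oil_bbl) == len(yearly_co2_stored_t) == len(yearly_co2_purchased_t)):
        raise ValueError("yearly series must have the same length")
    npv = 0.0
    series = zip(yearly_oil_bbl, yearly_co2_stored_t, yearly_co2_purchased_t)
    for year, (oil, stored, purchased) in enumerate(series, start=1):
        cash_flow = (oil * oil_price
                     + stored * storage_credit
                     - purchased * co2_purchase_price)
        npv += cash_flow / (1.0 + discount_rate) ** year
    return npv


if __name__ == "__main__":
    # Hypothetical two-year case, for illustration only.
    print(weighted_objective(0.4, 0.6, oil_weight=0.5))
    print(project_npv([100.0, 80.0], [10.0, 12.0], [10.0, 8.0],
                      oil_price=50.0, co2_purchase_price=30.0,
                      storage_credit=20.0, discount_rate=0.1))
```

Sweeping `oil_weight` from 0 to 1 traces the trade-off between the two objectives, whereas the NPV form folds both into a single monetary measure whose balance depends on the assumed prices and credits.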

4. Displacement Control: Sweep Efficiency Performance Control in CO2-EGR Applications

CO2 storage with enhanced gas recovery (CO2-EGR/CSEGR) promotes natural gas production while permanently storing CO2 underground in gas reservoirs. Natural gas reservoirs are composed primarily of methane (>95%). CO2 and CH4 are both gaseous and miscible under atmospheric conditions; however, their physical properties differ significantly at typical reservoir thermodynamic conditions (μCO2 ~ 2μCH4, ρCO2 ~ 2ρCH4) [26]. The concept of CO2-EGR was first proposed by van der Burgt et al. in 1992 [141] and, since then, numerous numerical simulation studies have demonstrated the technical feasibility of carbon storage with enhanced gas recovery. For example, Oldenburg et al. [142] carried out 2D model simulations of CO2 injection into a depleted natural gas reservoir under isothermal conditions and with homogeneous reservoir properties (porosity of 0.35 and permeabilities of 1.0 × 10⁻¹² m² and 1.0 × 10⁻¹⁴ m² in the Y and Z directions, respectively). They considered two simulation scenarios: Scenario I, a reservoir pressurization scenario with CO2 injection for 10 years and no CH4 production, and Scenario II, with CO2 injection and simultaneous CH4 production. They showed that 99% pure CH4 can be produced for about five years in Scenario I with a very high CH4 production rate. Similarly, 99% pure CH4 can be produced for about five years in Scenario II in conjunction with CO2 injection, but the methane production rate is lower than in Scenario I.
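The period during which high-purity CH4 can be produced is bounded by CO2 breakthrough at the production well. A minimal sketch of how breakthrough time might be extracted from a simulated production stream is shown below, assuming a purity criterion of 99% CH4 (that is, at most 1% CO2) in line with the figures quoted above. The function name and the sample data are hypothetical.

```python
def breakthrough_time(times, co2_mole_fractions, max_co2_fraction=0.01):
    """Return the first reported time at which the produced-gas CO2 mole
    fraction exceeds max_co2_fraction, or None if it never does."""
    if len(times) != len(co2_mole_fractions):
        raise ValueError("times and co2_mole_fractions must have the same length")
    for time, fraction in zip(times, co2_mole_fractions):
        if fraction > max_co2_fraction:
            return time
    return None


if __name__ == "__main__":
    # Hypothetical yearly output from a simulation run.
    years = [0, 1, 2, 3, 4, 5, 6]
    co2_fraction = [0.0, 0.0, 0.0, 0.001, 0.005, 0.02, 0.10]
    print("Breakthrough year:", breakthrough_time(years, co2_fraction))
```

Comparing this breakthrough time across injection designs gives a simple, if coarse, measure of how well a design delays contamination of the produced gas.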

Meanwhile, several simulation studies have revealed that preferential CO2 flow pathways can easily form due to reservoir heterogeneity, which can compromise CO2-EGR performance. For example, Oldenburg and Benson [38] extended the analysis of Oldenburg et al. [142] by accounting for formation heterogeneity, applying a log-normal distribution in the Y-direction without correlation in the Z-direction. Their work showed that heterogeneity in the formation led to preferential flow paths for injected CO2. This phenomenon is favorable for injectivity and carbon sequestration, as it allows larger quantities of CO2 to be stored. However, preferential flow also resulted in early breakthrough and therefore hindered enhanced gas recovery. Rebscher and Oldenburg [143], in turn, used a 3D grid model to investigate in detail the application of CSEGR in the Salzwedel-Peckensen natural gas field in Germany and demonstrated for the base case scenario that CO2 injection sweeps CH4 toward the production well, with breakthrough occurring in the unit with the highest permeability due to preferential flow paths. Similar results were obtained by other researchers [143,144,145].

To address this problem, it is crucial to design the CO2-EGR process with parameters (e.g., CO2 injection schedule and well location) that allow for the greatest storage capacity and the highest natural gas recovery. Maintaining high sweep efficiency by delaying CO2 breakthrough and stabilizing the displacement process is a critical subsurface engineering policy that must be considered when designing CSEGR applications, particularly in the injection plan and/or well control strategies.
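The stability of the displacement front is often discussed in terms of the mobility ratio between the injected and the displaced gas. As a rough worked illustration, assume single-phase miscible flow in which both gases experience the same permeability $k$ (an assumption made here, not stated in the source), and take the viscosity contrast quoted earlier in this section, $\mu_{\mathrm{CO_2}} \approx 2\mu_{\mathrm{CH_4}}$. The symbols $M$ and $\lambda$ are introduced here for illustration only.

```latex
M \;=\; \frac{\lambda_{\mathrm{CO_2}}}{\lambda_{\mathrm{CH_4}}}
  \;=\; \frac{k/\mu_{\mathrm{CO_2}}}{k/\mu_{\mathrm{CH_4}}}
  \;=\; \frac{\mu_{\mathrm{CH_4}}}{\mu_{\mathrm{CO_2}}}
  \;\approx\; \frac{1}{2}
```

A mobility ratio below one corresponds to a favorable displacement, in which the more viscous injected CO2 tends to push CH4 ahead of it rather than finger through it. Permeability heterogeneity can nevertheless create preferential flow paths and early breakthrough, which is why injection design still matters.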

To honor this engineering policy, researchers have investigated several strategies to delay CO2 breakthrough and stabilize the displacement process, such as using injected water and formation water in high-permeability strata to block the fast flow paths and promote CO2 dissolution [37,145,146,147]. Others have examined the effect of CO2 injection timing on CSEGR performance [36,148]. Moreover, Hussein et al. [149] showed in a simulation study that the CO2 injection rate, along with the injection timing, is a key parameter in CSEGR and plays an important role in defining the optimal injection strategy. The locations of the CO2 injection wells and the natural gas production wells are two other critical factors of the injection strategy in CSEGR implementation, as shown by Hou et al. [150]. In addition, Liu et al. [151] highlighted the impact of injection/production well perforation, whereas other studies considered the optimization of CO2 injection strategies in CSEGR applications [152,153].

This entry is adapted from the peer-reviewed paper 10.3390/cleantechnol5020031