Lung auscultation has long been a valuable medical tool for assessing a patient's respiratory health and function, and it has attracted considerable attention in recent years, notably following the COVID-19 pandemic. Modern technological progress has driven the growth of computer-based respiratory sound analysis, a valuable tool for detecting lung abnormalities and diseases. Several recent studies have reviewed this important area, but none focuses specifically on lung sound analysis with deep-learning architectures, and the information they provide is not sufficient for a good understanding of these techniques.

1. Introduction

Deep learning networks are the most promising and popular machine learning technique for disease diagnosis in general. This is not surprising given the dominance of diagnostic imaging in clinical practice and the natural suitability of deep learning algorithms for image and signal pattern recognition.

Although it requires more training time and computational resources, deep learning can achieve better prediction accuracy and generalization than conventional machine learning, which suggests a stronger learning ability. Unlike conventional machine learning, deep learning extracts features from an image automatically. Traditional machine learning techniques have difficulty distinguishing audio signals and images with similar properties, whereas deep learning handles this with ease [1].

Processing sound signals allows important information to be extracted quickly. Traditional machine learning learns from informative hand-crafted features such as Mel Frequency Cepstral Coefficients (MFCCs), which are widely used in automatic speech and speaker recognition [2]. Deep learning, by contrast, learns end-to-end directly from data, taking over the roles of both MFCC extraction and the conventional classifier. In other words, deep learning formulates signal processing as a learning problem, a view shared by many artificial intelligence (AI) engineers [3].
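To make the hand-crafted side of this contrast concrete, the sketch below computes MFCCs with NumPy following the standard textbook pipeline: framing, Hamming windowing, power spectrum, mel filterbank, logarithm, and a discrete cosine transform. It is a minimal illustration, not the pipeline of any reviewed study; the 25 ms frame, 10 ms hop, 512-point FFT, 26 filters, 13 coefficients, and the HTK-style mel formula are all assumed, commonly used defaults.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style mel scale (one common convention; others exist)
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sr):
    # Triangular filters spaced evenly on the mel scale from 0 Hz to Nyquist
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):  # rising edge of the triangle
            fb[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge of the triangle
            fb[i - 1, k] = (right - k) / max(right - center, 1)
    return fb


def dct_ii(x, n_out):
    # Orthonormal DCT-II along the last axis, keeping the first n_out coefficients
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    m = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * m + 1) / (2 * n))
    scale = np.full(n_out, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)
    return (x @ basis.T) * scale


def mfcc(signal, sr, frame_len=0.025, hop=0.010, n_fft=512, n_filters=26, n_coeffs=13):
    signal = np.asarray(signal, dtype=float)
    frame = int(round(frame_len * sr))
    step = int(round(hop * sr))
    if len(signal) < frame:
        raise ValueError("signal is shorter than one frame")
    n_frames = 1 + (len(signal) - frame) // step
    # Slice the signal into overlapping frames and apply a Hamming window
    idx = np.arange(frame)[None, :] + step * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame)
    # Power spectrum of each (zero-padded) frame
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    # Mel filterbank energies, log-compressed (epsilon avoids log(0))
    energies = power @ mel_filterbank(n_filters, n_fft, sr).T
    log_energies = np.log(energies + 1e-10)
    # Decorrelate with a DCT; rows are frames, columns are cepstral coefficients
    return dct_ii(log_energies, n_coeffs)
```

For a 1 s recording sampled at 4 kHz, `mfcc(x, 4000)` returns a 98 × 13 matrix. In a conventional pipeline, such a matrix (or per-coefficient statistics over it) would be passed to a separate classifier, whereas an end-to-end deep network would instead learn its own representation from the waveform or spectrogram.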

Deep neural networks can be trained on large databases of lung acoustic signals and spectrogram images, without being supplied lesion-based features, to recognize a patient's lung disease status with greater sensitivity and specificity. The key benefits of this computerized detection approach are model consistency (given the same input sample, a model predicts the same values every time), high sensitivity, high specificity, and dynamic result generation. Additionally, because a model's decision threshold can be tuned, its operating point, and with it the balance between sensitivity and specificity, can be adjusted to match the requirements of different clinical scenarios, for example, a screening system that requires very high sensitivity.
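The idea of adjustable operating points can be illustrated with a small sketch, assuming the model outputs a disease score per recording and that a recording is flagged as positive when its score reaches a threshold. The helper names, the toy scores, and the labels are hypothetical; the code simply scans candidate thresholds and keeps the one with the best specificity among those meeting a required sensitivity.

```python
def sensitivity_specificity(scores, labels, threshold):
    # A sample is predicted positive (disease) when its score >= threshold.
    # Assumes labels contain at least one positive (1) and one negative (0).
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    tn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 0)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    return tp / (tp + fn), tn / (tn + fp)


def pick_operating_point(scores, labels, min_sensitivity):
    # Among thresholds that meet the sensitivity target, keep the one
    # with the highest specificity; returns (threshold, sens, spec) or None
    best = None
    for t in sorted(set(scores)):
        sens, spec = sensitivity_specificity(scores, labels, t)
        if sens >= min_sensitivity and (best is None or spec > best[2]):
            best = (t, sens, spec)
    return best


# Hypothetical model scores and ground-truth labels (1 = disease)
scores = [0.1, 0.3, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 1, 0, 1, 0, 1, 1]
print(pick_operating_point(scores, labels, 1.0))   # screening setting
print(pick_operating_point(scores, labels, 0.75))  # more balanced setting
```

On this toy data, demanding perfect sensitivity selects a threshold of 0.35 with a specificity of 0.5, while relaxing the target to 0.75 selects 0.6 and raises specificity to 0.75: the same trained model serves both a screening scenario and a more specific one.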

2. The Regular Lung Sound

Regular (normal) lung sounds can be divided into:

- Vesicular breath sound (normal lung sound): soft, low-pitched, continuous, and rustling in quality; it is higher-pitched and more intense during inhalation than during exhalation.
- Bronchial breath sound: high-pitched, hollow, and loud. If a doctor hears bronchial breath sounds outside the trachea, they could be a sign of a health problem.
- Normal tracheal breath sound: high-pitched, harsh, and very loud.

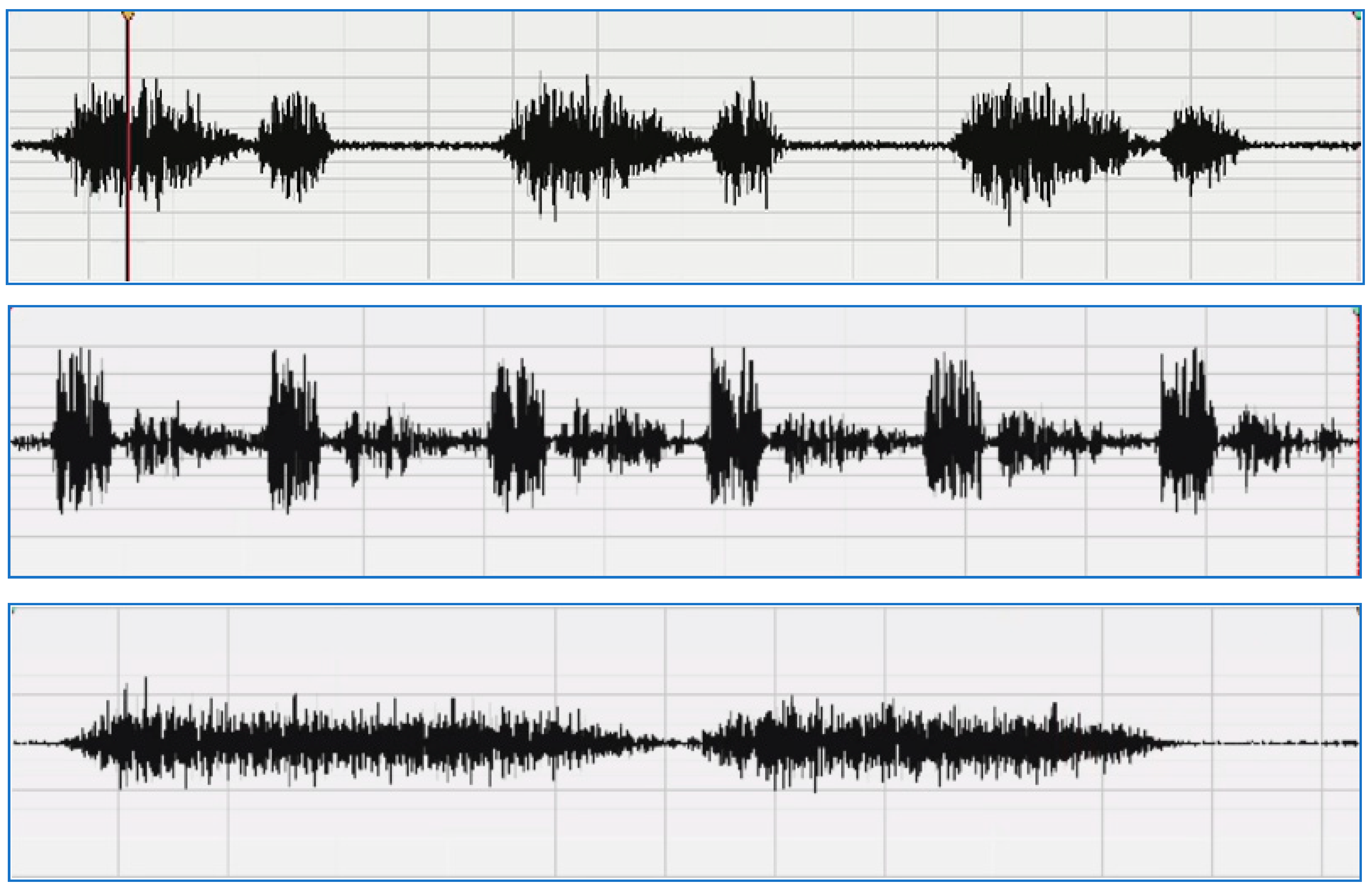

A sample of a normal lung sound waveform is shown in Figure 1.

Figure 1. Samples of normal lung sound waveforms [4]: vesicular or normal (upper), bronchial (middle), and normal tracheal (lower).

3. The Wheezing Lung Sound

Wheezes are continuous, high-pitched sounds and can be classified as:

- Squawks: a squawk is a brief wheeze that occurs during inhalation.
- Polyphonic wheezes: wheezes with multiple notes that occur during exhalation. Their pitch may rise as exhalation nears its end.
- Monophonic wheezes: single-note wheezes that may be prolonged or occur during both phases of respiration, with a constant or variable frequency.

4. Crackle Sounds

Crackles are generally heard during inhalation and may sound bursting, bubbling, or clicking.

Coarse: Coarse crackles are louder, lower-pitched, and longer-lasting than fine crackles and arise in the larger bronchi. Although they usually occur during inhalation, they can also occur during exhalation.

Medium: These are caused by air bubbling through mucus in the smaller bronchi. The bronchi, which carry air from the trachea into the lungs, divide into progressively smaller passages that ultimately lead to the alveoli, or air sacs.

Fine: These soft, high-pitched sounds arise in the narrow airways. Compared with coarse crackles, fine crackles are more likely to occur during inhalation than during exhalation.

5. Rhonchi Sound

Rhonchi are low-pitched, continuous sounds with a snoring-like quality. They can occur during exhalation or during both exhalation and inhalation, but not during inhalation alone. They result from fluid and other secretions moving within the large airways.

6. Stridor and Pleural Rub Sounds

- Stridor: a high-pitched sound that forms in the upper airway. It is caused by air being forced through a narrowed portion of the upper respiratory tract.
- Pleural rub: a rubbing and creaking sound caused by inflamed pleural surfaces rubbing against one another.

Early diagnosis and patient monitoring are critical for effective treatment of respiratory infections. In clinical practice, respiratory disorders are diagnosed by lung auscultation, that is, listening to the patient's lung sounds with a stethoscope. Lung sounds are typically classified as normal or adventitious. The most frequent adventitious sounds heard over the normal breath sounds are crackles, wheezes, and squawks, and their presence typically suggests a pulmonary condition [5][6][7].

Lung diseases have traditionally been diagnosed with AI-based methods that require images as input [8] or with a spirometry examination [9]. Going to a hospital for an initial X-ray or chest scan when an acute condition such as an asthma attack or a heart attack is suspected is time-consuming, expensive, and sometimes life-threatening. Furthermore, automating an image-based AI recognition system requires training the model on a large number of high-definition (HD) X-ray images, which are difficult to obtain each time. What is needed instead is a simpler, less resource-intensive system that can help practitioners make an initial diagnosis.

In a heart attack, asthma, chronic obstructive pulmonary disease (COPD), and other illnesses, the sounds produced by the body's internal organs change dramatically. Automatically detecting such sounds to identify whether a person is at risk of lung disease is efficient and provides an early warning for both doctors and patients. Clinicians may use the technology to confirm the presence of a lung ailment. Looking further ahead, the technology could be integrated with smart devices and microphones that routinely record people's body sounds and thereby predict a potential case of lung disease.

At the same time, the rapid advancement of technology has greatly increased the volume of measured data, which often makes conventional analysis impractical because of the time and the high level of medical expertise it requires. To solve this issue, many researchers have proposed artificial intelligence (AI) strategies that automate the classification of respiratory sound signals. These include machine learning (ML) techniques such as hidden Markov models (HMMs) and support vector machines (SVMs) [7], as well as deep learning (DL) architectures such as convolutional neural networks (CNNs), residual networks (ResNets), long short-term memory (LSTM) networks, and recurrent neural networks (RNNs) [9].

Furthermore, much research has been conducted on feature selection and extraction approaches for automated lung sound analysis and classification. The features most commonly extracted from lung sounds include spectrograms, MFCCs, wavelet coefficients, chroma features, and entropy-based features.
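Two of the features listed above can be sketched with NumPy alone: a log-power spectrogram, the 2-D representation typically fed to CNNs, and spectral entropy, one example of an entropy-based feature. This is a minimal sketch under assumed settings (256-sample Hann-windowed frames with a 128-sample hop, entropy normalized by the maximum possible value); wavelet and chroma features are not shown, and the reviewed studies may use different definitions.

```python
import numpy as np


def stft_power(signal, frame=256, hop=128):
    signal = np.asarray(signal, dtype=float)
    n_frames = 1 + (len(signal) - frame) // hop
    # Overlapping Hann-windowed frames
    idx = np.arange(frame)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hanning(frame)
    # Rows are time frames, columns are frequency bins from 0 Hz to Nyquist
    return np.abs(np.fft.rfft(frames, axis=1)) ** 2


def log_spectrogram(signal, frame=256, hop=128):
    # Log-power (dB) spectrogram; epsilon avoids log(0) in silent bins
    return 10.0 * np.log10(stft_power(signal, frame, hop) + 1e-12)


def spectral_entropy(signal, frame=256, hop=128):
    # Shannon entropy of each frame's normalized power spectrum,
    # divided by log2(number of bins) so a flat spectrum scores about 1
    power = stft_power(signal, frame, hop)
    p = power / (power.sum(axis=1, keepdims=True) + 1e-12)
    h = -np.sum(p * np.log2(p + 1e-12), axis=1)
    return h / np.log2(power.shape[1])
```

A tonal, wheeze-like signal concentrates its energy in a few frequency bins and therefore has low spectral entropy, whereas broadband noise spreads energy across bins and scores close to 1; this contrast is what makes entropy-based features informative for separating continuous adventitious sounds from noisier ones.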

This entry is adapted from the peer-reviewed paper 10.3390/diagnostics13101748