Objects in aerial images often have arbitrary orientations and variable shapes and sizes. As a result, accurate and robust object detection in aerial images is a challenging problem.

- aerial images

- arbitrary-oriented object detection

- dynamic deformable convolution

- self-normalizing channel attention

- ReResNet-50

1. Introduction

Object detection in aerial images aims to locate objects of interest on the ground and identify their categories, and it has become an important research topic in computer vision. Objects in natural images usually keep an upright orientation because of gravity, whereas objects in aerial images often have arbitrary orientations. The shapes and scales of objects in aerial images also vary dramatically, which makes object detection in these images a challenging problem [1,2,3]. In recent years, Convolutional Neural Networks (CNNs) have made important breakthroughs and are now widely used in various visual tasks, especially in aerial image analysis [4,5,6,7,8]. Correspondingly, several aerial image datasets have been released, and these continue to advance related research.

Existing aerial image object detection methods are generally built on object detection frameworks designed for natural images [9,10,11,12]. By elaborately designing specific mechanisms to cope with object rotation, such as dedicated loss functions [13,14], training sets enlarged with samples under various rotations [4,15], and rotation-invariant or rotation-variant feature extraction, these methods have significantly improved detection robustness and accuracy. However, they usually adopt convolution operations with fixed weights, which prevent the network from coping effectively with drastic changes in object scale, orientation and shape. In addition, the categories of objects in aerial images are complex and diverse, and the semantic feature representation capability of existing detection methods is insufficient, which often degrades detection performance.

With the development of remote sensing technology, the resolution and file size of aerial images are constantly increasing. Because some aerospace systems, such as satellites and aircraft, have limited budgets, logistical resources and power, Zhang et al. [16] proposed a hardware architecture for CNN-based object detection in aerial images. Approaching the problem from a different perspective, Li et al. [17] proposed a lightweight convolutional neural network. Recent advances in remote sensing have also widened the range of applications for 3D Point Cloud (PC) data. This data format raises new issues concerning noise levels, sparsity and required storage space; as a result, many recent works address PC problems with deep learning, which can extract features automatically and achieve high performance [18,19].

2. Related Object Detection Methods

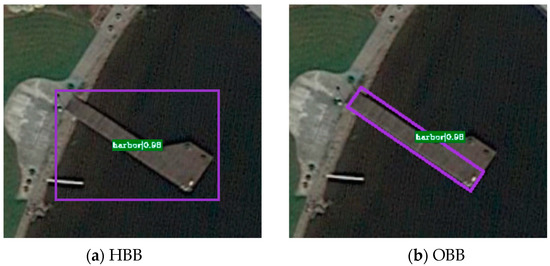

Most object detection methods use a Horizontal Bounding Box (HBB) to denote object locations. However, because objects in aerial images are densely distributed, often have large aspect ratios and appear at arbitrary orientations, an HBB usually includes background regions; this interferes with classification and makes the predicted object position insufficiently accurate. To cope with these challenges, aerial image object detection is usually formulated as an oriented object detection task that uses an Oriented Bounding Box (OBB). HBB and OBB are compared in Figure 1.

Figure 1. The comparison of (a) HBB and (b) OBB.

As Figure 1 shows, an OBB denotes the position of an arbitrarily oriented object more precisely than an HBB. Therefore, OBBs are usually used for arbitrary-oriented object detection in aerial images.
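To make the background problem concrete, the sketch below converts an OBB to its four corners, takes the tightest HBB around them, and reports what fraction of that HBB is not covered by the object. It assumes the common (cx, cy, w, h, θ) parameterization with θ in radians, measured counterclockwise; the function names are illustrative, not from the source.

```python
import math

def obb_corners(cx, cy, w, h, theta):
    """Corners of an oriented box (cx, cy, w, h, theta), theta in radians (assumed convention)."""
    c, s = math.cos(theta), math.sin(theta)
    corners = []
    for dx, dy in ((w / 2, h / 2), (-w / 2, h / 2), (-w / 2, -h / 2), (w / 2, -h / 2)):
        # rotate the local offset by theta, then translate to the box center
        corners.append((cx + dx * c - dy * s, cy + dx * s + dy * c))
    return corners

def enclosing_hbb(corners):
    """Tightest horizontal box (x1, y1, x2, y2) containing all corners."""
    xs = [p[0] for p in corners]
    ys = [p[1] for p in corners]
    return min(xs), min(ys), max(xs), max(ys)

def background_fraction(w, h, theta):
    """Share of the enclosing HBB's area that lies outside the oriented object."""
    x1, y1, x2, y2 = enclosing_hbb(obb_corners(0.0, 0.0, w, h, theta))
    hbb_area = (x2 - x1) * (y2 - y1)
    return 1.0 - (w * h) / hbb_area
```

For an elongated 100 × 10 object, the fraction is 0 when it is axis-aligned, but at 45° the enclosing HBB has area 6050 against an object area of 1000, so roughly 83% of the HBB is background.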

Current mainstream arbitrary-oriented object detectors can be divided into three categories: single-stage detectors [20,21,22,23], two-stage detectors [24,25,26,27] and refine-stage detectors [28,29,30,31]. These are introduced separately below.

2.1. Single-Stage Object Detector

Single-stage object detectors, which are generally based on the YOLO series [11], SSD [32] and other single-stage frameworks, offer high detection speed. Yang et al. [13] proposed a regression loss based on the Gaussian Wasserstein distance to solve the boundary discontinuity problem and the inconsistency between the detection performance evaluation metric and the loss function in arbitrary-oriented object detection. In further work [33] based on the Gaussian model and the Kalman filter, the authors simplified the model and proposed a loss function for rotated object detection; this loss achieves trend-level alignment with the SkewIoU loss instead of strict value-level identity.
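The Gaussian idea can be sketched as follows: an OBB (cx, cy, w, h, θ) is mapped to a 2D Gaussian with mean (cx, cy) and covariance Σ = R diag(w²/4, h²/4) Rᵀ, and two boxes are compared with the squared 2-Wasserstein distance ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁^{1/2} Σ₂ Σ₁^{1/2})^{1/2}). For 2 × 2 positive semi-definite matrices the trace term has a closed form, Tr(M^{1/2}) = √(Tr M + 2√det M), with Tr M = Tr(Σ₁Σ₂) and det M = det Σ₁ · det Σ₂, so no matrix square root is needed. This is a minimal sketch of the distance only; the published loss additionally normalizes it through a nonlinear function, which is omitted here, and the function names are hypothetical.

```python
import math

def obb_to_gaussian(cx, cy, w, h, theta):
    """Map an OBB to (mean, covariance), with Sigma = R diag(w^2/4, h^2/4) R^T.

    The covariance is returned as (sxx, sxy, syy) since it is symmetric.
    """
    a, b = w * w / 4.0, h * h / 4.0
    c, s = math.cos(theta), math.sin(theta)
    sxx = a * c * c + b * s * s
    syy = a * s * s + b * c * c
    sxy = (a - b) * c * s
    return (cx, cy), (sxx, sxy, syy)

def gwd_squared(box1, box2):
    """Squared 2-Wasserstein distance between the Gaussians of two OBBs."""
    (m1x, m1y), (a1, b1, d1) = obb_to_gaussian(*box1)
    (m2x, m2y), (a2, b2, d2) = obb_to_gaussian(*box2)
    center_term = (m1x - m2x) ** 2 + (m1y - m2y) ** 2
    tr1, tr2 = a1 + d1, a2 + d2
    # clamp tiny negative values caused by floating-point error
    det1 = max(a1 * d1 - b1 * b1, 0.0)
    det2 = max(a2 * d2 - b2 * b2, 0.0)
    tr12 = a1 * a2 + 2.0 * b1 * b2 + d1 * d2  # Tr(Sigma1 @ Sigma2)
    # Tr((Sigma1^1/2 Sigma2 Sigma1^1/2)^1/2) via the 2x2 closed form
    cross = math.sqrt(max(tr12 + 2.0 * math.sqrt(det1 * det2), 0.0))
    shape_term = max(tr1 + tr2 - 2.0 * cross, 0.0)
    return center_term + shape_term
```

A useful property for the boundary problem: a 4 × 2 box at θ = 0 and a 2 × 4 box at θ = π/2 describe the same rectangle, and their Gaussians are identical, so the distance is 0 even though their (w, h, θ) parameters differ.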

Because OBBs are commonly used for object detection in aerial images, anchor-based detection methods require a large number of rotation-related parameters and anchor configurations. Zhao et al. [27] proposed a different approach, a polar detector that locates an object by its center point, orients it with four polar angles and measures it with a polar ratio system. Yi et al. [26] extended horizontal keypoint-based object detection to arbitrary-oriented object detection. Experimental results showed that both methods can detect arbitrary-oriented objects rapidly, but that their detection accuracy still needs to be improved.

2.2. Two-Stage Object Detector

Compared with single-stage detectors, two-stage object detectors usually achieve higher detection accuracy at a lower detection speed. They have become the mainstream approach to arbitrary-oriented object detection.

To eliminate the loss discontinuity at the boundary of rotated objects, Yang et al. [28,30] proposed the IoU-smooth L1 loss, which combines IoU with the smooth L1 loss; it brings the rotated IoU into the loss without requiring the rotated IoU computation to be differentiable. Building on this, Yang et al. [34] proposed a new rotation detection baseline that addresses the boundary problem by transforming angle prediction from a regression problem into a classification task, with little loss of accuracy.
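Treating the angle as a class label only helps if neighboring angles, including those on either side of the 0°/180° boundary, are treated as close. The sketch below builds a soft target in the spirit of a circular smooth label: the angle is discretized into bins and a truncated Gaussian window is placed around the true bin using circular distance. The bin count, window shape, `sigma` and `radius` are assumptions for illustration, not values taken from [34].

```python
import math

def circular_smooth_label(angle_deg, num_bins=180, sigma=2.0, radius=6):
    """Encode an angle in [0, 180) degrees as a soft classification target.

    Hypothetical parameters: num_bins equal-width bins, a Gaussian window with
    standard deviation `sigma` (in bins), truncated beyond `radius` bins.
    """
    bin_width = 180.0 / num_bins
    # nearest bin to the angle; 180 degrees wraps around to bin 0
    center = int(round(angle_deg / bin_width)) % num_bins
    labels = []
    for i in range(num_bins):
        # circular distance, so bins on both sides of the 0/180 boundary are neighbors
        d = abs(i - center)
        d = min(d, num_bins - d)
        labels.append(math.exp(-d * d / (2.0 * sigma * sigma)) if d <= radius else 0.0)
    return labels
```

Because bins 0 and 179 receive similar targets, predicting 179° for an object at 0° is penalized only slightly, whereas a naive regression target would treat the two angles as maximally far apart.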

Ding et al. [4] proposed a multi-stage detector based on Cascade R-CNN that uses a Rotated Region of Interest Learner (RRoI Learner) and RRoI warping to transform horizontal RoIs (HRoIs) into rotated RoIs (RRoIs). Han et al. [8] proposed Rotation-invariant RoI Align (RiRoI Align), which extracts rotation-invariant features from rotation-equivariant features according to the orientation of the RoI. When rotated anchors are used, these methods can confuse the order of the labeled vertices. Therefore, Xu et al. and Wang et al. [6,7,35] employed quadrilateral masks to describe arbitrary-oriented objects precisely and used sequential label points to resolve this problem.
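The geometric core of extracting features from a rotated RoI can be sketched as computing, for each output bin, where its center lands in the image once the RoI is rotated; features would then be interpolated at those points. This is a sketch under stated assumptions: the (cx, cy, w, h, θ) parameterization, θ in radians measured counterclockwise, one sample per bin, and the name `rroi_sampling_grid` are ours, and it is not the RRoI Learner or warping implementation of [4].

```python
import math

def rroi_sampling_grid(cx, cy, w, h, theta, out_h=2, out_w=2):
    """Image-space (x, y) centers of an out_h x out_w bin grid over a rotated RoI."""
    c, s = math.cos(theta), math.sin(theta)
    grid = []
    for i in range(out_h):
        # bin-center row coordinate in the RoI's local frame (origin at RoI center)
        ly = (i + 0.5) * h / out_h - h / 2.0
        row = []
        for j in range(out_w):
            lx = (j + 0.5) * w / out_w - w / 2.0
            # rotate the local point by theta, then translate to the RoI center
            row.append((cx + lx * c - ly * s, cy + lx * s + ly * c))
        grid.append(row)
    return grid
```

With θ = 0 the grid reduces to an ordinary axis-aligned RoI grid; a nonzero θ rotates every sample point about the RoI center, so the pooled features follow the object's orientation.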

Xie et al. [31] proposed a two-stage arbitrary-oriented object detection framework that includes an oriented RPN, an oriented R-CNN head and a detection head that refines the RRoIs.

In general, two-stage object detectors can effectively handle objects with various rotation angles, and their robustness and accuracy can be further improved by carefully designing the network structure, loss function, feature fusion strategy, attention mechanism and so on.

2.3. Refine-Stage Object Detector

Many refined one-stage and two-stage object detectors have been proposed that improve detection speed while also achieving higher detection accuracy.

To address feature misalignment, Yang et al. [21] designed a Feature Refining Module (FRM) that uses feature interpolation to obtain the position information of the refined anchor points and reconstructs the feature maps to achieve feature alignment. Han et al. [36] proposed a single-shot alignment network for oriented object detection that alleviates the inconsistency between classification scores and localization accuracy through deep feature alignment. To overcome the boundary discontinuity issue, Yang et al. [37] proposed a regression-based object detector that uses Angle Distance and Aspect Ratio Sensitive Weighting (ADARSW) to make the detector sensitive to the angular distance and the aspect ratio of objects. Unlike in refined one-stage detectors, the second stage of a refined two-stage detector performs proposal classification and regression, allowing it to achieve higher detection accuracy.
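A minimal sketch of the feature-interpolation step used in this kind of refinement, assuming (as a simplification, not necessarily the exact module in [21]) that the feature for a refined oriented box is formed by bilinearly sampling a single-channel feature map at the box center and its four corners and summing the results. The feature map is a plain list of lists, and `bilinear_sample` and `refine_feature` are hypothetical names.

```python
import math

def bilinear_sample(fmap, x, y):
    """Bilinearly interpolate a 2D feature map (list of rows) at real-valued (x, y)."""
    h, w = len(fmap), len(fmap[0])
    # clamp the point into the map so all four neighbors exist
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0
    top = fmap[y0][x0] * (1 - dx) + fmap[y0][x1] * dx
    bottom = fmap[y1][x0] * (1 - dx) + fmap[y1][x1] * dx
    return top * (1 - dy) + bottom * dy

def refine_feature(fmap, cx, cy, w, h, theta):
    """Sum features sampled at the center and four corners of a refined OBB (assumed scheme)."""
    c, s = math.cos(theta), math.sin(theta)
    points = [(cx, cy)]
    for dx, dy in ((w / 2, h / 2), (-w / 2, h / 2), (-w / 2, -h / 2), (w / 2, -h / 2)):
        points.append((cx + dx * c - dy * s, cy + dx * s + dy * c))
    return sum(bilinear_sample(fmap, px, py) for px, py in points)
```

Sampling at the refined box's own center and corners, rather than at the original anchor position, is what lets the feature follow the box after it has been regressed to a new location and angle.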

These methods improve detection robustness and accuracy by elaborately designing the network structure, loss function and feature extraction strategy to cope effectively with the various rotation angles of objects. However, as more and more methods are proposed, their experimental data and settings become increasingly inconsistent, which seriously affects the reliability of the reported results. To address this problem, Giordano et al. [38] examined methods, resources, experimental settings and performance results in depth, studying all the aspects that derive from each stage. Some problems nevertheless remain to be solved. When designing the network structure, more complex modules, such as feature fusion and attention mechanisms, are usually adopted, which inevitably increases model complexity. To solve these problems, this paper proposes a two-stage arbitrary-oriented object detection method based on dynamic deformable convolution (DDC) and self-normalizing channel attention (SCAM). The method dynamically adjusts the convolution kernel parameters according to the input image and enhances the semantic feature representation capability, thereby improving detection performance.
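The idea of adjusting convolution kernels according to the input can be illustrated with a generic dynamic convolution: a small gate looks at a pooled summary of the input, produces softmax attention over K candidate kernels, and the convolution uses their weighted sum. This sketch is an assumption-laden illustration, not the paper's DDC: it covers only the "dynamic" part (the deformable sampling offsets are omitted), uses a single channel, and the gate (`gate_weights`, `gate_bias`) and all function names are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dynamic_kernel(fmap, kernels, gate_weights, gate_bias):
    """Aggregate K candidate kernels with input-dependent attention (hypothetical 1-channel gate)."""
    # global average pooling summarizes the whole input as one number
    pooled = sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
    # one logit per candidate kernel from a tiny linear gate
    attn = softmax([w * pooled + b for w, b in zip(gate_weights, gate_bias)])
    k = len(kernels[0])
    return [[sum(a * ker[i][j] for a, ker in zip(attn, kernels)) for j in range(k)]
            for i in range(k)]

def conv2d_valid(fmap, kernel):
    """'Valid' 2D cross-correlation, the operation CNN layers call convolution."""
    k = len(kernel)
    out_h, out_w = len(fmap) - k + 1, len(fmap[0]) - k + 1
    return [[sum(fmap[y + i][x + j] * kernel[i][j] for i in range(k) for j in range(k))
             for x in range(out_w)]
            for y in range(out_h)]
```

With a strongly weighted gate, a bright input and a dark input select different kernels, so the same layer applies different filters to different images; a standard convolution would apply one fixed kernel to both.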

This entry is adapted from the peer-reviewed paper 10.3390/electronics12092132