The blending of human and mechanical capabilities has become a reality in the era of Industry 4.0. Human–machine interaction (HMI) is a crucial aspect of Society 5.0, in which technology is leveraged to solve social challenges and improve quality of life. The key objective of HMI is to create a harmonious relationship in which humans and machines work together towards a common goal. This is achieved by building on the strengths of each: machines handle tasks that require speed and accuracy, while humans focus on tasks that require creativity, critical thinking, and empathy.

- human–machine interface

- HMI

- Society 5.0

- Artificial Intelligence (AI)

- Industry 5.0

1. Introduction

2. Human–Computer Interaction (HCI)

Key Milestones

3. Human–Machine Interaction (HMI)

3.1. Key Enabling Technologies and Goals of HMI

3.2. Augmented Intelligence

3.3. Brain–Computer Interface (BCI)

- Signal quality: One of the key challenges in the development of BCIs is obtaining high-quality signals from the human brain. Brain signals are inherently weak and are easily contaminated by noise and interference from other sources, such as muscle activity and nearby electronic devices. Therefore, accurately detecting and interpreting brain activity is a challenging task [11];

- Invasive vs. non-invasive BCIs: Current BCI implementations fall into two major categories: i) invasive and ii) non-invasive. Invasive BCIs require electrodes to be implanted directly into the brain, whereas non-invasive BCIs use external sensors to detect brain activity. Although invasive BCIs can provide higher-quality signals, they are also riskier and more expensive. By contrast, non-invasive BCIs are safer and more accessible, at the expense of lower signal quality [12];

- Training and calibration: BCIs require substantial training and calibration to work effectively. Furthermore, users must learn to modulate their brain activity in a way that the BCI can detect and interpret. This can be a time-consuming and frustrating process, and some users find it uncomfortable [13];

- Limited bandwidth: BCIs often have limited bandwidth, so only a narrow range of brain activity can be detected and interpreted. Consequently, the set of actions that can be controlled with a BCI remains limited [14];

- Ethical and privacy concerns: BCIs have shown considerable promise for future human–computer interfaces. However, they also raise ethical and privacy concerns. For example, legislation on data ownership needs to be established. Furthermore, the risk of data misuse, and suitable mechanisms to counteract it, need to be explored [15].
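Bandwidth in BCI research is commonly quantified as an information transfer rate (ITR). The sketch below implements the widely used Wolpaw formula, assuming N equally likely targets, a classification accuracy P, and errors spread evenly over the remaining N - 1 targets. The formula is standard in the BCI literature but is added here only for illustration, and the function names are hypothetical. The information per selection is B = log2(N) + P·log2(P) + (1 - P)·log2((1 - P)/(N - 1)) bits, and dividing by the time per selection gives bits per minute.

```python
import math


def bits_per_selection(n_targets: int, accuracy: float) -> float:
    """Wolpaw information per selection (bits) for N targets and accuracy P."""
    if n_targets < 2:
        raise ValueError("a BCI needs at least two targets")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    n, p = n_targets, accuracy
    # At or below chance (P <= 1/N) no useful information is conveyed;
    # clamping to zero is a common convention, not part of the formula.
    if p <= 1.0 / n:
        return 0.0
    bits = math.log2(n) + p * math.log2(p)
    # When P == 1 the error term is 0 * log(0), which is taken as 0.
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def itr_bits_per_minute(n_targets: int, accuracy: float,
                        seconds_per_selection: float) -> float:
    """Scale the per-selection information to bits per minute."""
    if seconds_per_selection <= 0:
        raise ValueError("selection time must be positive")
    return bits_per_selection(n_targets, accuracy) * 60.0 / seconds_per_selection


print(itr_bits_per_minute(4, 1.0, 2.0))   # prints 60.0
print(itr_bits_per_minute(4, 0.25, 2.0))  # prints 0.0
```

For instance, a four-target interface that is always correct and needs 2 s per selection conveys 2 bits per selection, or 60 bits/min; at 25% accuracy the same interface is at chance level and conveys nothing. Even with perfect accuracy, these rates remain far below those of a keyboard or touchscreen, which illustrates the bandwidth limitation.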

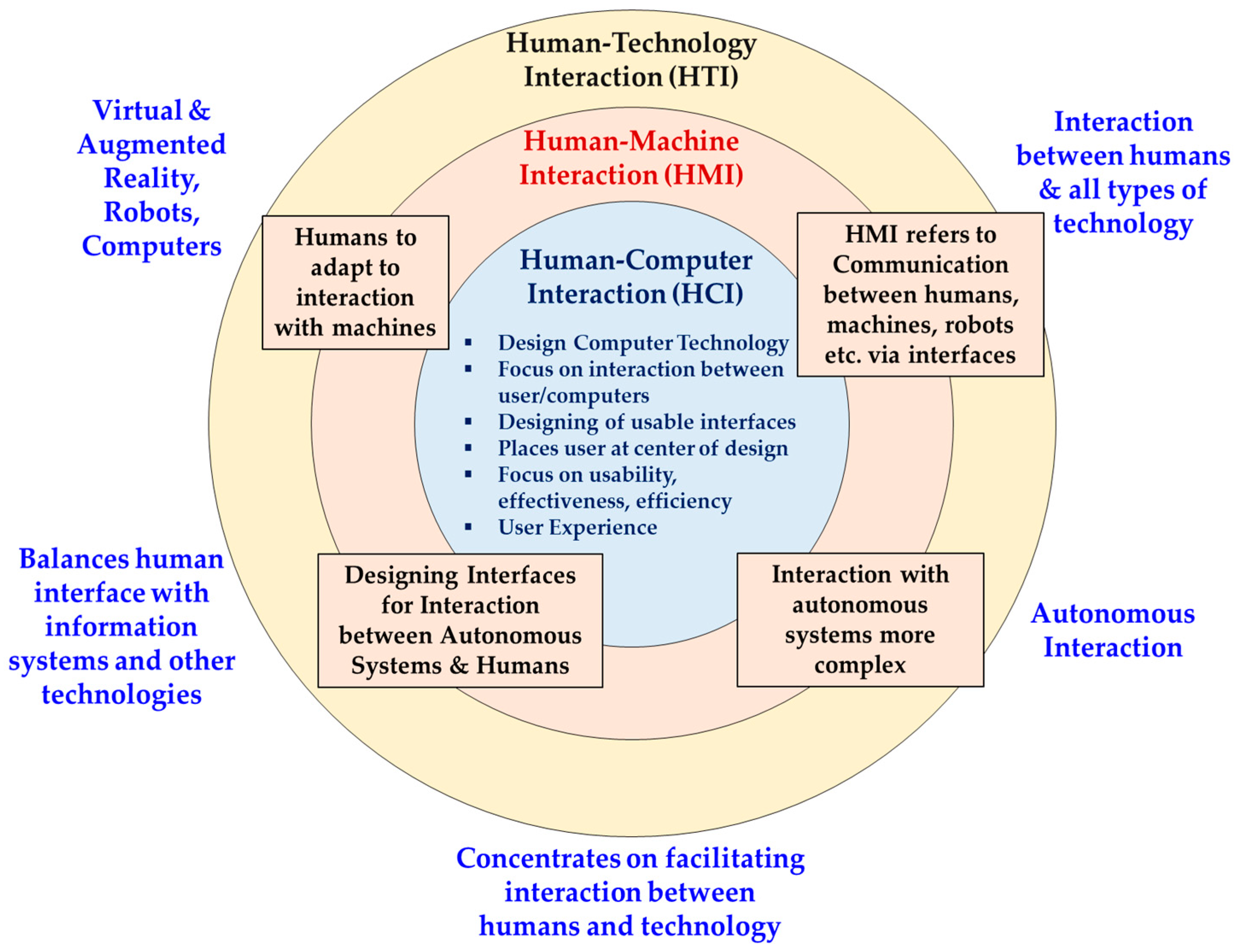

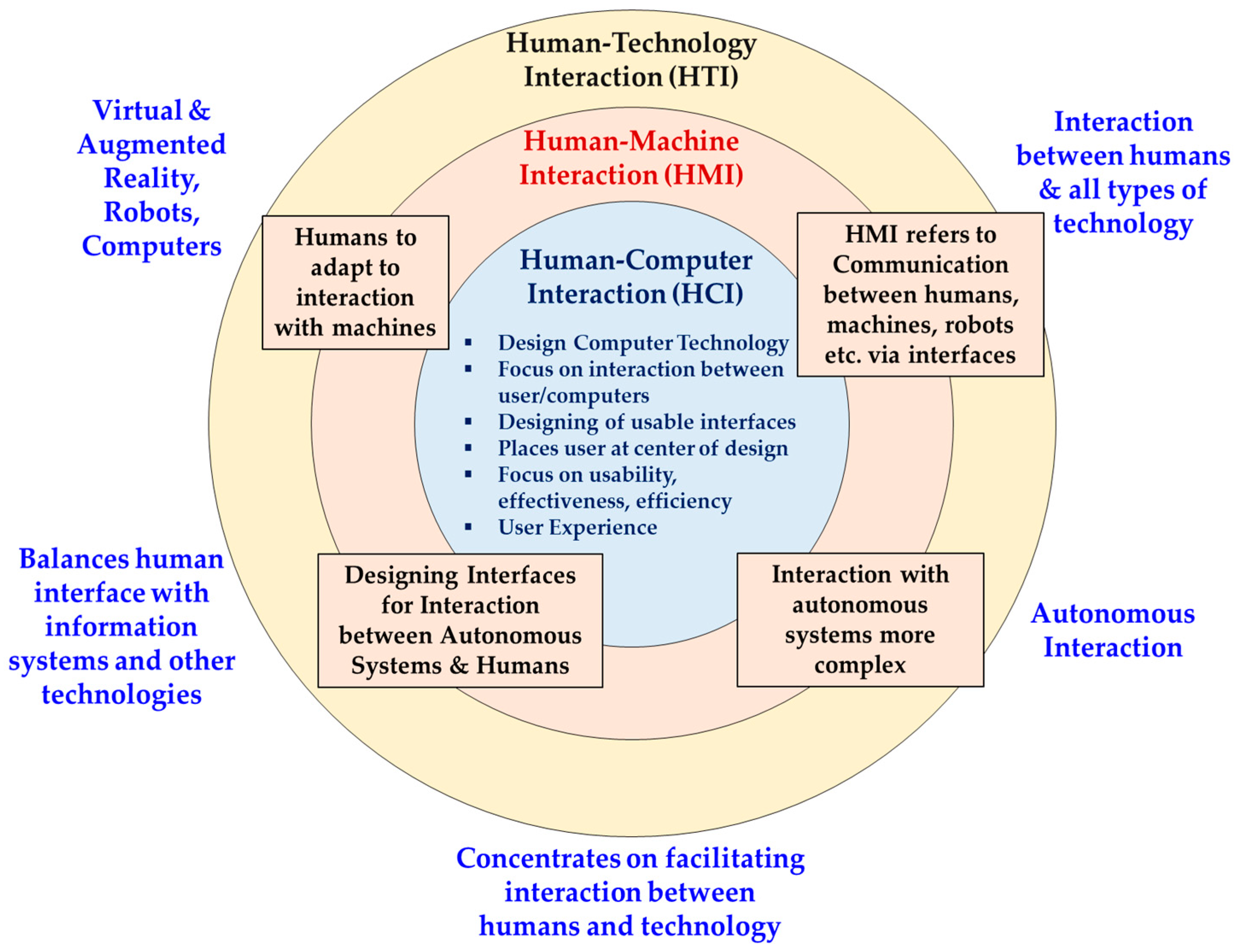

Figure 3. Comparison between HCI, HMI, and HTI (adapted from [16]).

4. Human–Centric Manufacturing (HCM)

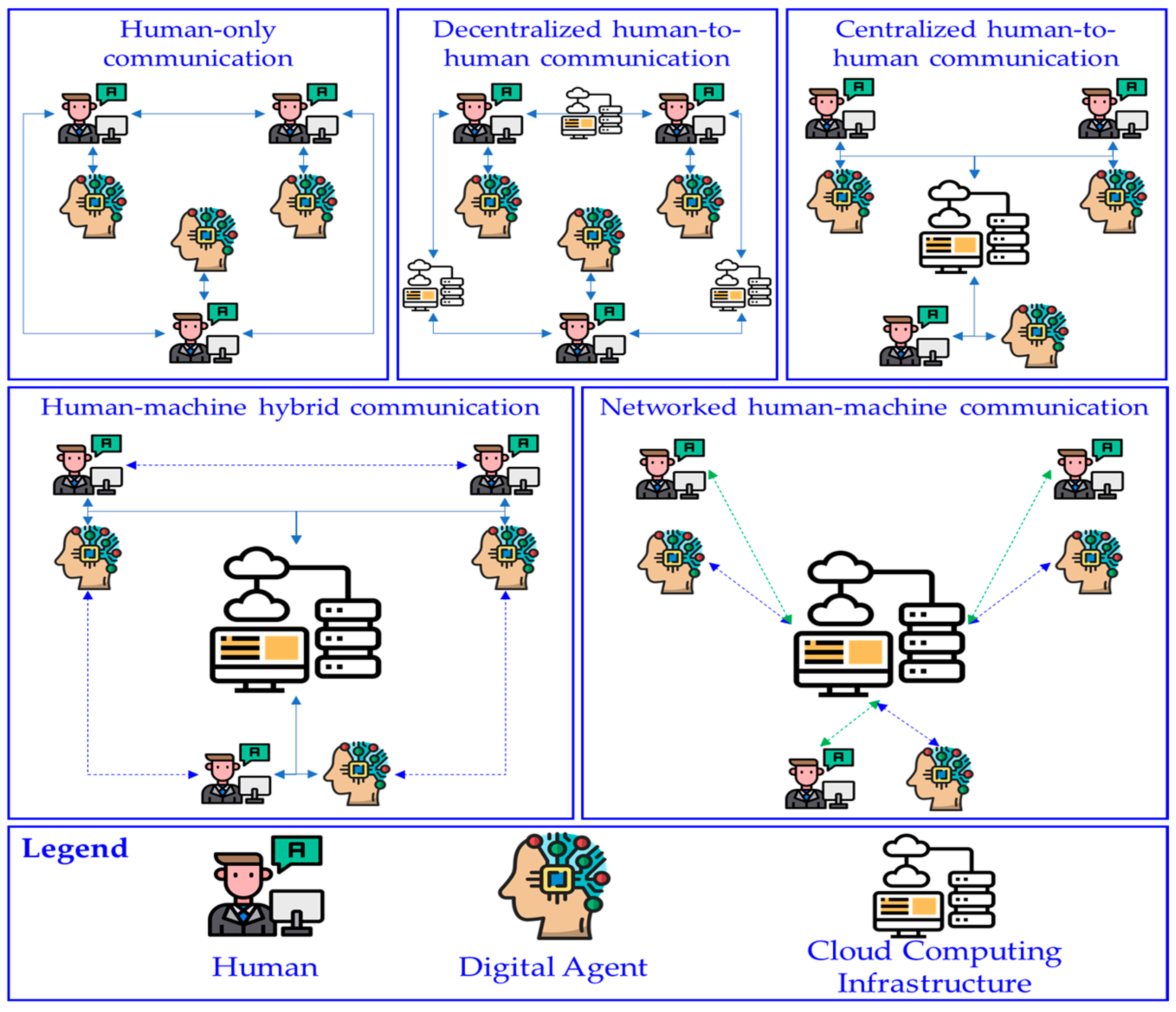

Human–centric manufacturing (HCM) is an approach to manufacturing that places the human operator at the center of the manufacturing process. It aims to create a work environment that is safe, healthy, and comfortable for workers while also optimizing manufacturing efficiency and productivity. Human–machine interaction (HMI) is a key component of HCM, as it provides the interfaces through which workers interact with machines naturally and efficiently. This involves designing interfaces that are intuitive and user-friendly and that give users feedback on the status of the machine and of the manufacturing process. Collaborative intelligence, in turn, is enabled by empathic understanding between humans and machines. Through such interfaces, HMI supports both aims of HCM: a safe, healthy, and comfortable workplace, and efficient, productive manufacturing (Figure 4).

Figure 4. Types of HCPS communications.

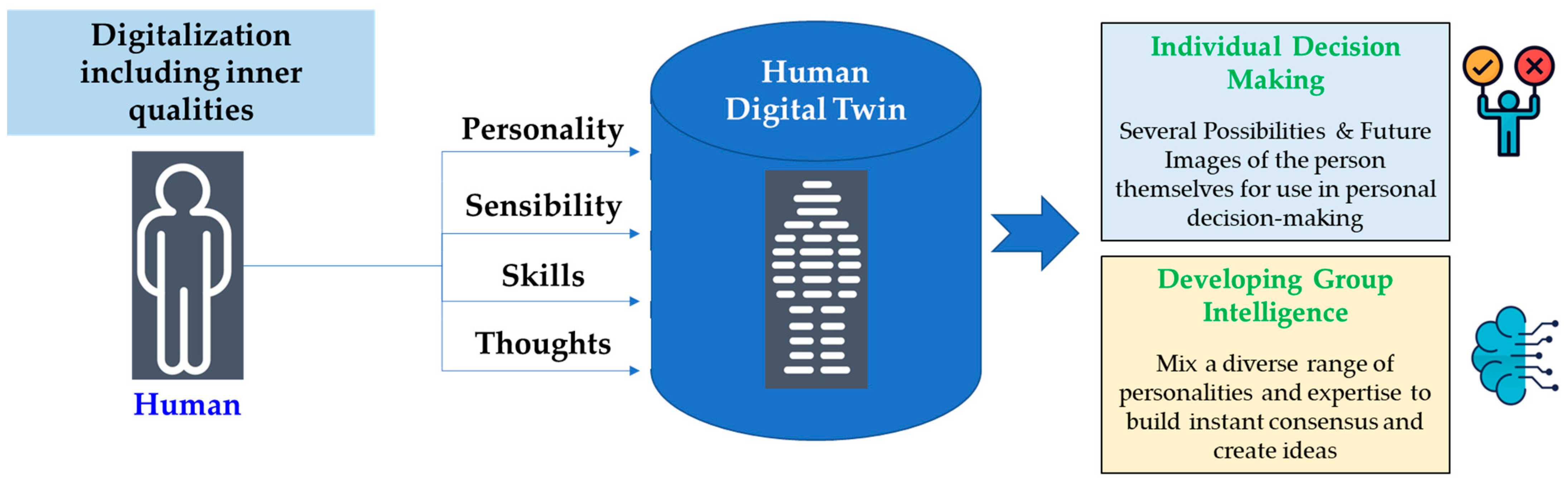

5. Human Digital Twins

A human digital twin is a virtual representation of a real human being, created from digital data. It is a model that replicates the physiological, biological, and behavioral characteristics of an individual, allowing their responses to different stimuli, situations, or environments to be simulated and predicted. The key characteristics of a human digital twin include the following (Figure 5):

1) Real-time data

2) Multi-dimensional representation

3) Personalization

4) Machine learning and AI

5) Simulation and prediction

Figure 5. Human digital twins.
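The characteristics listed above can be illustrated with a toy data structure. The sketch below is hypothetical: the class name, the field names, and the exponential moving average (EMA) that stands in for a learned model are assumptions made for illustration, not a method from the reviewed literature. Readings are ingested as they arrive (real-time data), several signals are tracked at once (multi-dimensional representation), each person has their own baseline (personalization), and the smoothed estimate serves as a naive one-step forecast (prediction).

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Tuple


@dataclass
class HumanDigitalTwin:
    """Toy human digital twin (hypothetical names; illustration only)."""

    person_id: str
    # Personalization: per-person baseline values, e.g. resting heart rate.
    baseline: Dict[str, float]
    # Smoothing factor of the EMA that stands in for a trained ML model.
    alpha: float = 0.3
    # Real-time data: bounded history of (timestamp, value) per signal.
    history: Dict[str, Deque[Tuple[float, float]]] = field(default_factory=dict)
    # Multi-dimensional state: current smoothed estimate per signal.
    estimate: Dict[str, float] = field(default_factory=dict)

    def ingest(self, signal: str, timestamp: float, value: float) -> None:
        """Store a new reading and update the smoothed estimate."""
        self.history.setdefault(signal, deque(maxlen=100)).append((timestamp, value))
        # Start from the personal baseline if one exists, else from the reading.
        prev = self.estimate.get(signal, self.baseline.get(signal, value))
        self.estimate[signal] = self.alpha * value + (1.0 - self.alpha) * prev

    def predict_next(self, signal: str) -> float:
        """Naive one-step forecast: the current EMA value itself."""
        return self.estimate[signal]

    def deviation(self, signal: str) -> float:
        """Distance of the current estimate from this person's baseline."""
        return self.estimate[signal] - self.baseline[signal]

    def flagged(self, threshold: float) -> List[str]:
        """Signals whose estimate departs from baseline by more than threshold."""
        return [
            s for s in self.estimate
            if s in self.baseline and abs(self.deviation(s)) > threshold
        ]


twin = HumanDigitalTwin("worker-01", baseline={"heart_rate": 70.0}, alpha=0.5)
for t, hr in [(0.0, 80.0), (1.0, 90.0)]:
    twin.ingest("heart_rate", t, hr)
print(twin.predict_next("heart_rate"), twin.deviation("heart_rate"))  # prints 82.5 12.5
```

With alpha = 0.5 and a resting heart rate of 70 bpm, readings of 80 and 90 bpm move the estimate to 75 and then 82.5 bpm, which is 12.5 bpm above the personal baseline. A practical twin would replace the EMA with a trained model and a validated physiological simulation; the EMA is used here only because it keeps the sketch short and dependency-free.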

6. Discussion

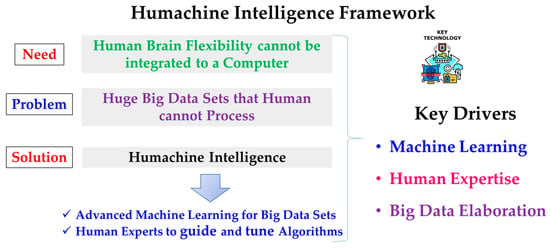

6.1. Humachine Framework

Humachines are necessary for the future because they offer significant benefits and opportunities in various domains, including:

- Enhanced productivity and efficiency: humachines can augment human capabilities with the speed, accuracy, and consistency of machines, leading to higher productivity and efficiency in many industries;

- Improved decision making: combining human reasoning and intuition with machine learning algorithms can lead to better decisions, reducing errors and improving outcomes;

- Advanced healthcare: humachines can assist healthcare professionals with diagnosis, treatment planning, and monitoring, leading to more accurate and personalized healthcare;

- Innovation and creativity: by collaborating with machines, humans can access vast amounts of data, tools, and insights that can fuel innovation and creativity in various fields;

- Automation of mundane tasks: automating repetitive and mundane tasks can free up human time and energy for more meaningful and creative work, leading to higher job satisfaction and engagement.

References

- Leng, J.; Sha, W.; Wang, B.; Zheng, P.; Zhuang, C.; Liu, Q.; Wuest, T.; Mourtzis, D.; Wang, L. Industry 5.0: Prospect and retrospect. Journal of Manufacturing Systems 2022, 65, 279-295, https://doi.org/10.1016/j.jmsy.2022.09.017.

- Huang, S.; Wang, B.; Li, X.; Zheng, P.; Mourtzis, D.; Wang, L. Industry 5.0 and Society 5.0—Comparison, complementation and co-evolution. Journal of Manufacturing Systems 2022, 64, 424-428, https://doi.org/10.1016/j.jmsy.2022.07.010.

- Di Marino, C.; Rega, A.; Vitolo, F.; Patalano, S. Enhancing Human-Robot Collaboration in the Industry 5.0 Context: Workplace Layout Prototyping. In Advances on Mechanics, Design Engineering and Manufacturing IV: Proceedings of the International Joint Conference on Mechanics, Design Engineering & Advanced Manufacturing, JCM 2022, Ischia, Italy, 1–3 June 2022; Springer International Publishing: Cham, Switzerland, 2022; pp. 454–465. https://doi.org/10.1007/978-3-031-15928-2_40

- Mourtzis, D. Design and Operation of Production Networks for Mass Personalization in the Era of Cloud Technology; Mourtzis, D., Ed.; Elsevier: Amsterdam, The Netherlands, 2021; pp. 1-393.

- Firyaguna, F.; John, J.; Khyam, M.O.; Pesch, D.; Armstrong, E.; Claussen, H.; Poor, H.V. Towards Industry 5.0: Intelligent Reflecting Surface (IRS) in Smart Manufacturing. IEEE Communications Magazine 2022, -, -, https://doi.org/10.48550/arXiv.2201.02214.

- McFarlane, D.C.; Latorella, K.A. The Scope and Importance of Human Interruption in Human-Computer Interaction Design. Human–Computer Interaction 2002, 17, 1-61, https://doi.org/10.1207/S15327051HCI1701_1.

- Wójcik, M. Augmented intelligence technology. The ethical and practical problems of its implementation in libraries. Library Hi Tech 2021, 39, 435-447, https://doi.org/10.1108/LHT-02-2020-0043.

- Li, Q.; Sun, M.; Song, Y.; Zhao, D.; Zhang, T.; Zhang, Z.; Wu, J. Mixed reality-based brain computer interface system using an adaptive bandpass filter: Application to remote control of mobile manipulator. Biomedical Signal Processing and Control 2023, 83, -, https://doi.org/10.1016/j.bspc.2023.104646.

- Middendorf, M.; McMillan, G.; Calhoun, G.; Jones, K.S. Brain-computer interfaces based on the steady-state visual-evoked response. IEEE Transactions on Rehabilitation Engineering 2000, 8, 211-214, https://doi.org/10.1109/86.847819.

- Kubacki, A. Use of Force Feedback Device in a Hybrid Brain-Computer Interface Based on SSVEP, EOG and Eye Tracking for Sorting Items. Sensors 2021, 21, -, https://doi.org/10.3390/s21217244.

- Huang, D.; Wang, M.; Wang, J.; Yan, J. A survey of quantum computing hybrid applications with brain-computer interface. Cognitive Robotics 2022, 2, 164-176, https://doi.org/10.1016/j.cogr.2022.07.002.

- Liu, L.; Wen, B.; Wang, M.; Wang, A.; Zhang, J.; Zhang, Y.; Le, S.; Zhang, L.; Kang, X. Implantable Brain-Computer Interface Based On Printing Technology. In Proceedings of the 2023 11th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 20–22 February 2023; pp. 1–5. https://doi.org/10.1109/BCI57258.2023.10078643

- Mu, W.; Fang, T.; Wang, P.; Wang, J.; Wang, A.; Niu, L.; Bin, J.; Liu, L.; Zhang, J.; Jia, J.; et al. EEG Channel Selection Methods for Motor Imagery in Brain Computer Interface. In Proceedings of the 2022 10th International Winter Conference on Brain-Computer Interface (BCI), Gangwon-do, Republic of Korea, 21–23 February 2022; pp. 1–6. https://doi.org/10.1109/BCI53720.2022.9734929

- Cho, J.H.; Jeong, J.H.; Kim, M.K.; Lee, S.W. Towards Neurohaptics: Brain-computer interfaces for decoding intuitive sense of touch. In Proceedings of the 2021 9th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 22–24 February 2021; pp. 1–5. https://doi.org/10.1109/BCI51272.2021.9385331

- Zhang, Y.; Xie, S.Q.; Wang, H.; Zhang, Z. Data Analytics in Steady-State Visual Evoked Potential-Based Brain–Computer Interface: A Review. IEEE Sensors Journal 2020, 21, 1124-1138, https://doi.org/10.1109/JSEN.2020.3017491.

- Coetzer, J.; Kuriakose, R.B.; Vermaak, H.J. Collaborative decision-making for human-technology interaction-a case study using an automated water bottling plant. Journal of Physics: Conference Series 2020, -, -.