This paper presents a systematic review of how deep learning is being applied to solve 5G issues. Unlike the existing literature, we examine work from the last decade that addresses diverse 5G-specific problems, such as physical medium state estimation, network traffic prediction, user device location prediction, and network self-management, among others.

- 5G, Systematic review, Deep Learning

Introduction

According to Cisco, global Internet traffic will reach around 30 GB per capita by 2021, and more than 63% of this traffic will be generated by wireless and mobile devices [1]. The new generation of mobile communication systems (5G) will have to handle a massive number of devices connected to base stations, a massive growth in traffic volume, and a wide range of applications with different features and requirements. The heterogeneity of devices and applications makes infrastructure management even more complex. For example, IoT devices require low-power connectivity, trains moving at 300 km/h need high-speed mobile connections, users at home need fiber-like broadband connectivity [6], and Industry 4.0 devices require ultra-reliable low-latency services. Several underlying technologies have been put forward to support these requirements. Examples include Multiple Input Multiple Output (MIMO), antenna beamforming [1], Virtualized Network Functions (VNFs) [2], and the use of tailored, well-provisioned network slices [3].

Data-driven solutions can be used to manage 5G infrastructures. For instance, analysis of dynamic mobile traffic can be used to predict user location, which benefits handover mechanisms [4]. Another example is the use of historical physical channel data to predict channel state information, a problem that is difficult to address analytically [5]. A third example is allocating network slices according to user requirements, considering the network status and the available resources [6]. All these examples rely on data analysis: some use historical data to predict future behavior, while others use the current state of the environment to support decision making. These types of problems can be addressed with machine learning techniques.
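To make the first kind concrete (historical data used to predict behavior), the sketch below shows one common way to cast traffic prediction as a supervised learning problem: a series of past traffic measurements is cut into fixed-length input windows, each paired with the next value as the target. The function name `make_windows`, the window length, and the traffic values are hypothetical illustrations, not taken from [4] or any other reviewed work.

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float], window: int) -> List[Tuple[List[float], float]]:
    """Turn a time series into (input window, next value) training pairs.

    Each input holds `window` consecutive past observations; the target is
    the observation that immediately follows them.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    pairs = []
    for start in range(len(series) - window):
        inputs = list(series[start:start + window])
        target = series[start + window]
        pairs.append((inputs, target))
    return pairs


# Hypothetical per-interval traffic volumes (e.g., MB per 10 minutes) at one cell.
traffic = [120.0, 135.0, 150.0, 160.0, 155.0, 140.0]
for x, y in make_windows(traffic, window=3):
    print(x, "->", y)
```

Any supervised regressor (for example, the fully connected or LSTM networks discussed later) could be trained on these pairs; the window length trades the amount of past context against the number of training samples.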

However, conventional machine learning approaches are limited in their ability to process natural data in raw form [7]. For many decades, building a machine learning or pattern-recognition system required considerable domain expertise and careful engineering to design a feature extractor, which converted the raw data into a suitable representation for the learning system [8].

To avoid the effort of building a feature extractor, and the mistakes that can creep into its development, techniques that automatically discover representations from raw data were developed. In recent years, deep learning (DL) has outperformed conventional machine learning techniques in several domains, such as computer vision, natural language processing, and genomics [9].

According to [8], DL methods "are representation-learning methods with multiple levels of representation, obtained by composing simple but non-linear modules that each transforms the representation at one level (starting with the raw input) into a representation at a higher, slightly more abstract level". With enough such successive transformations, very complex functions can be learned directly from raw data.
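The definition above can be written compactly. Assuming, for illustration, fully connected modules with weight matrices $W^{(\ell)}$, bias vectors $b^{(\ell)}$, and an element-wise non-linearity $\sigma$ (symbols introduced here, not taken from [8]), a network with $L$ levels maps a raw input $x$ to successive representations $h^{(\ell)}$:

$$
h^{(0)} = x, \qquad h^{(\ell)} = f^{(\ell)}\big(h^{(\ell-1)}\big) = \sigma\big(W^{(\ell)} h^{(\ell-1)} + b^{(\ell)}\big), \quad \ell = 1, \dots, L,
$$

$$
f(x) = h^{(L)} = \big(f^{(L)} \circ f^{(L-1)} \circ \cdots \circ f^{(1)}\big)(x).
$$

Each $f^{(\ell)}$ is simple on its own; the expressive power comes from stacking them, and training adjusts all $W^{(\ell)}$ and $b^{(\ell)}$ jointly instead of relying on a hand-designed feature extractor.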

As in many other application domains, DL models can be used to address infrastructure management problems in 5G networks, such as radio and compute resource allocation, channel state prediction, and handover prediction. This paper presents a systematic literature review to identify how DL has been used to solve problems in 5G environments. The goals of this research are to identify some of the main 5G problems addressed by DL, highlight the specific types of DL models adopted in this context, and describe their findings. In addition, we delineate major open challenges that arise when 5G networks meet deep learning solutions.

Methodology

Both 5G and deep learning have received considerable and increasing attention in recent years. Deep learning has become practical thanks to the availability of powerful off-the-shelf hardware and of specialized processing units such as GPUs. The research community has taken this opportunity to create several public big data repositories for training and testing the proposed intelligent models. 5G, on the other hand, has high market appeal, as it promises advanced services that no previous networking technology has been able to offer. The importance of 5G is further boosted by the popularity and ubiquity of mobile, wearable, and IoT devices.

The main goal of this work is to answer the following research questions:

- What are the main problems deep learning is being used to solve?

- What are the main learning types used to solve 5G problems (supervised, unsupervised, and reinforcement)?

- What are the main deep learning techniques used in 5G scenarios?

- How are the data used to train the deep learning models gathered or generated?

- What are the main outstanding research challenges in the field of 5G and deep learning?

The search string used to identify relevant literature was (5G AND "deep learning"). We deliberately limited the number of search strings to keep the problem tractable and avoid cognitive overload. We used the following databases as the main sources for our research: IEEE Xplore, Science Direct, ACM Digital Library, and Springer Library.

The search returned 3, 192, 161, and 116 papers (472 in total) from the ACM Digital Library, Science Direct, Springer Library, and IEEE Xplore, respectively. We performed this search in early November 2019. After reading all 472 abstracts and applying the inclusion and exclusion criteria, we selected 60 papers for full evaluation. After reading these 60 papers, we discarded two because they were out of the scope of this research, and two more for quality reasons: one was incomplete, and the other presented several inconsistencies in its results. A total of 56 papers were therefore selected for final data extraction and evaluation.
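The screening counts above can be cross-checked with a few lines of arithmetic. The script below is a minimal sketch that only restates the numbers given in this paragraph (the variable names are our own); it confirms that the per-database counts sum to 472 and that removing four papers from the 60 leaves the 56 used in the rest of the review.

```python
# Counts restated from the text; variable names are illustrative only.
retrieved = {
    "ACM Digital Library": 3,
    "Science Direct": 192,
    "Springer Library": 161,
    "IEEE Xplore": 116,
}
total_retrieved = sum(retrieved.values())  # abstracts screened

selected_for_full_reading = 60  # after inclusion/exclusion criteria
out_of_scope = 2                # dropped after full-text reading
quality_issues = 2              # one incomplete, one with inconsistent results
final_set = selected_for_full_reading - out_of_scope - quality_issues

print(f"retrieved: {total_retrieved}")                       # 472
print(f"final set: {final_set}")                             # 56
print(f"inclusion rate: {final_set / total_retrieved:.1%}")  # 11.9%
```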

Results

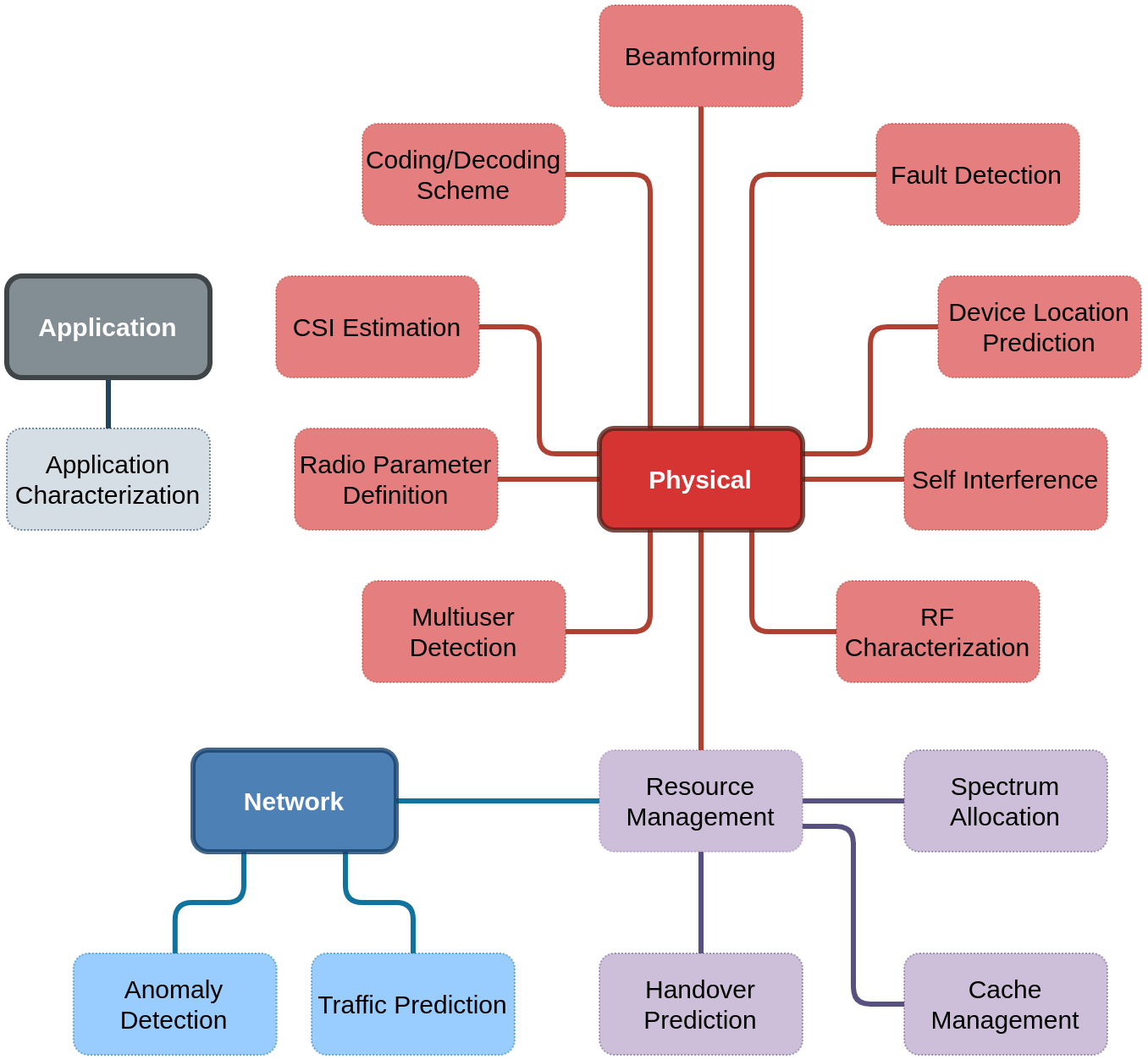

Figure 1 presents the main problems addressed by the papers found in this systematic review. The identified problems can be grouped into three main layers: physical medium, network, and application.

At the physical level of the OSI reference model, we found papers that addressed channel state information (CSI) estimation, coding/decoding scheme representation, fault detection, device location prediction, self-interference, beamforming definition, radio frequency characterization, multi-user detection, and radio parameter definition. At the network level, the works used deep learning models for traffic prediction and anomaly detection. Research on resource allocation can relate to either the physical or the network level. Finally, at the application level, existing works proposed deep learning-based solutions for application characterization.

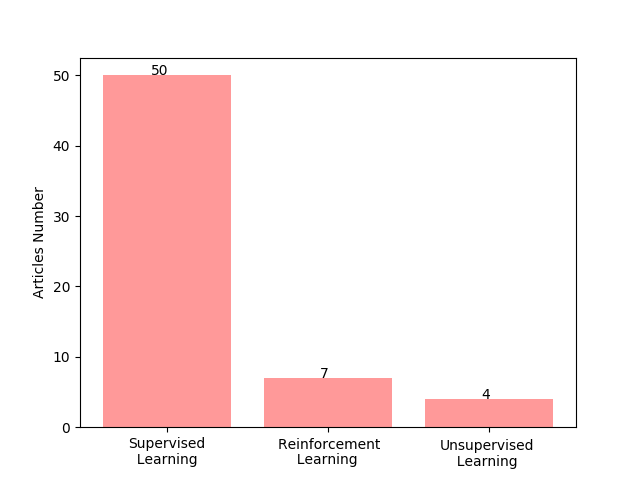

The works captured in this systematic review used three different learning types, as shown in Figure 2. Most of these works used supervised learning (50 articles), followed by reinforcement learning (7 articles) and unsupervised learning (only 4 articles). These counts add up to more than the 56 reviewed papers, so some works evidently combine more than one learning type.

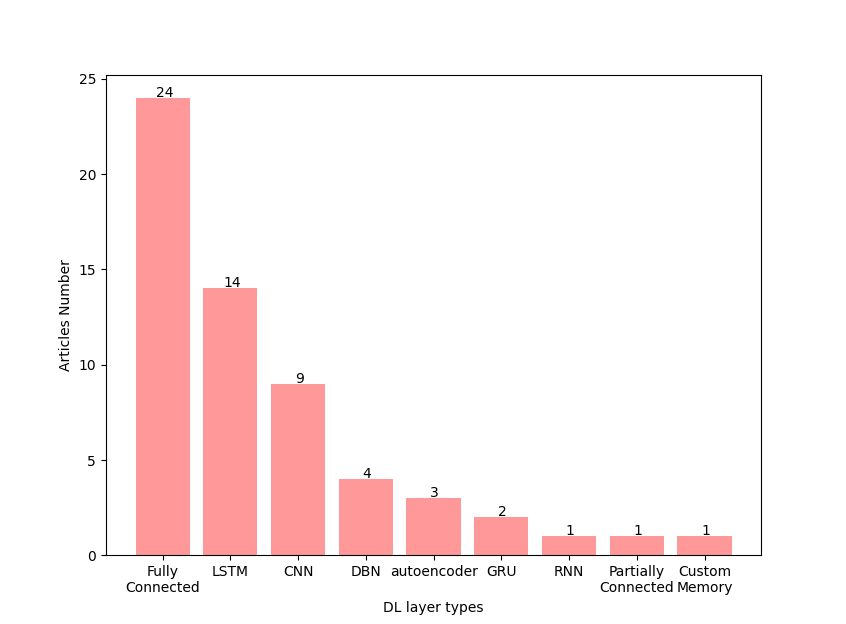

Figure 3 shows the deep learning techniques most commonly used to address 5G problems in the literature. Traditional neural networks with fully connected layers are the most frequent (24 articles), followed by long short-term memory (LSTM) networks (14 articles) and convolutional neural networks (CNNs) (only 9 articles).

Discussions

As this systematic review shows, the selected papers are very recent: most of them (57.1%) were published in 2019, and the oldest paper we examined dates from 2015. This reflects the novelty and popularity of 5G and deep learning, and of their integration in particular.

5G is still under development and aims to overcome several limitations of previous generations of cellular communication systems. It promises capabilities that have so far been limited, such as massive connectivity, security, trust, wide coverage, ultra-low latency (around 1 ms over the air interface), high throughput, and ultra-reliability (99.999% availability). Deep learning, for its part, has received a lot of attention in the last few years, as it has surpassed state-of-the-art solutions in fields such as computer vision, text recognition, and robotics. The many recent publications reviewed here attest to the benefits 5G could gain from advances in deep learning.
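As a worked example of what the reliability target means, if 99.999% is read as the fraction of time a service is available over one year (an interpretation assumed here; 5G reliability requirements are also commonly stated as the probability of delivering a packet within a latency bound), the total permitted downtime is only about five minutes per year:

$$
(1 - 0.99999) \times 365.25 \times 24 \times 60\ \text{min} = 10^{-5} \times 525\,960\ \text{min} \approx 5.26\ \text{min per year}.
$$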

As an illustration, resource allocation in real cellular wireless networks can be formulated and solved using tools from optimization theory. However, the resulting solutions often have high computational complexity. Deep learning models may serve as surrogates that approach the same performance at a much lower computational cost.
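To illustrate why optimization-based allocation becomes expensive, the sketch below solves a hypothetical toy problem (not drawn from the reviewed works) by brute force: K interfering links each pick a transmit power from a small set of levels to maximize the sum of Shannon rates. Exhaustive search must evaluate every combination, so its cost grows as the number of levels raised to the power K. The channel gains, noise power, and power levels are made-up values.

```python
import itertools
import math
from typing import List, Optional, Sequence, Tuple


def sum_rate(powers: Sequence[float], gains: List[List[float]], noise: float) -> float:
    """Sum of Shannon rates (bit/s/Hz) for interfering links.

    gains[k][j] is the channel gain from transmitter j to receiver k.
    """
    total = 0.0
    for k, p_k in enumerate(powers):
        interference = sum(gains[k][j] * p_j for j, p_j in enumerate(powers) if j != k)
        sinr = gains[k][k] * p_k / (noise + interference)
        total += math.log2(1.0 + sinr)
    return total


def exhaustive_allocation(
    levels: Sequence[float], gains: List[List[float]], noise: float
) -> Tuple[Optional[Tuple[float, ...]], float, int]:
    """Try every power combination; return (best powers, best rate, evaluations)."""
    best, best_rate, evaluations = None, -math.inf, 0
    for powers in itertools.product(levels, repeat=len(gains)):
        evaluations += 1
        rate = sum_rate(powers, gains, noise)
        if rate > best_rate:
            best, best_rate = powers, rate
    return best, best_rate, evaluations


# Hypothetical 3-link example: strong direct gains, weaker cross gains.
gains = [
    [1.0, 0.1, 0.2],
    [0.2, 0.8, 0.1],
    [0.1, 0.3, 0.9],
]
levels = [0.0, 0.5, 1.0]  # allowed transmit powers (normalized)
powers, rate, n = exhaustive_allocation(levels, gains, noise=0.1)
print(f"best powers {powers}, sum rate {rate:.3f} bit/s/Hz, {n} evaluations")

# The search space grows as len(levels) ** K.
for k in (3, 6, 12):
    print(k, "links ->", len(levels) ** k, "candidates")
```

One way to build a learned surrogate, assumed here for illustration, is to run such a solver offline on many channel realizations and train a network to map gains to allocations; at run time the network then replaces the search with a single forward pass, although whether it matches the solver's quality depends on the problem.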

We also noted that many works (25 in total) were published as short conference papers (around six pages). We believe these represent work in progress, as they show only initial results. This reinforces the general view that the integration of 5G and deep learning is still an evolving area, with many advances and contributions expected soon.

The scenarios considered in the examined articles generally do not focus on a real application (30 out of 56 articles). However, a project called Mobile and wireless communications Enablers for the Twenty-twenty Information Society (METIS) published a document describing several 5G scenarios and their specific requirements. It presents nine use cases: gaming, marathon, media on demand, unmanned aerial vehicles, remote tactile interaction, e-health, ultra-low-cost 5G network, remote car sensing and control, and forest industry on remote control. Each of these scenarios has different characteristics and different requirements for 5G networks. For instance, remote tactile interaction can be a critical application (e.g., remote surgery) and demands ultra-low latency (no greater than 2 ms) and high reliability (99.999%). In the marathon use case, on the other hand, participants commonly carry tracking devices, so the network must handle thousands of simultaneous users, which requires high signaling efficiency and user capacity. As a result, we believe that to achieve high-impact results, deep learning solutions need to target use cases with specific requirements instead of trying to address the more general picture.

Designing deep learning models for dynamic scenarios can be complex, since the models need to capture the patterns present in the dataset; if the data vary widely between scenarios, model performance can suffer. One approach to dealing with this limitation is reinforcement learning, which, as reported above, was adopted by seven works. In this paradigm, software agents are trained to interact with the environment so as to maximize (or minimize) a metric of interest. This makes it a good fit for training agents that adapt dynamically to changes in the environment and thus meet the different requirements of the use cases presented above.
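The reinforcement learning loop described above can be sketched with tabular Q-learning, one standard RL algorithm (the reviewed works may use other, typically deep, variants). The environment is entirely hypothetical: the state is a coarse channel-quality level, the action is a transmission scheme, and the reward is the scheme's rate if the channel can support it and zero otherwise. With these settings, the learned greedy policy is expected to choose the robust scheme on poor channels and the aggressive scheme on good ones.

```python
import random
from typing import List

# Hypothetical toy environment (not from the reviewed works):
# state  = channel quality: 0 poor, 1 fair, 2 good
# action = transmission scheme: 0 robust (rate 1), 1 medium (rate 2), 2 aggressive (rate 4)
RATES = [1.0, 2.0, 4.0]
N_STATES, N_ACTIONS = 3, 3


def reward(state: int, action: int) -> float:
    """A scheme delivers its rate only if the channel is good enough for it."""
    return RATES[action] if action <= state else 0.0


def next_state(state: int, rng: random.Random) -> int:
    """Channel quality drifts: stay with prob. 0.6, otherwise move to a neighbour."""
    if rng.random() < 0.6:
        return state
    if state == 0:
        return 1
    if state == N_STATES - 1:
        return N_STATES - 2
    return state + rng.choice([-1, 1])


def q_learning(steps: int = 50_000, alpha: float = 0.1, gamma: float = 0.5,
               epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    state = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(N_ACTIONS)  # explore
        else:
            action = max(range(N_ACTIONS), key=lambda a: q[state][a])  # exploit
        r = reward(state, action)
        s_next = next_state(state, rng)
        # Move Q(s, a) toward the bootstrapped target r + gamma * max_a' Q(s', a').
        target = r + gamma * max(q[s_next])
        q[state][action] += alpha * (target - q[state][action])
        state = s_next
    return q


q = q_learning()
policy = [max(range(N_ACTIONS), key=lambda a: q[s][a]) for s in range(N_STATES)]
print("greedy action per channel state:", policy)
```

Deep reinforcement learning replaces the table `q` with a neural network, which is what makes the approach feasible when the state (for example, raw channel measurements) is too large to enumerate.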

However, reinforcement learning requires an environment in which the software agent is placed during training. Simulators are a good option because of their low implementation cost. For example, consider an agent trained to control physical-medium parameters instead of having them set manually, e.g., by fine-tuning rules and thresholds. After training, the agent must be validated in a higher-fidelity setting, for example a prototype that represents a real scenario. Finally, the reinforcement learning agent can be deployed as part of a software-driven solution in the real scenario. These steps are necessary to avoid the drawbacks of deploying an untrained agent in an operational 5G network, a cost operators cannot afford.
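The staged path described above (train in simulation, validate on a higher-fidelity setup, then deploy) can be sketched as follows. To keep it short, the agent is a single-state (multi-armed bandit) simplification of reinforcement learning that chooses a transmit power level; the utility model, the two environments, the hand-tuned baseline, and all numeric values are hypothetical and only stand in for a real simulator and prototype.

```python
import math
import random
from statistics import mean
from typing import Sequence

# Hypothetical setting (not from the reviewed works): the agent picks a
# transmit power level; utility = achievable rate minus an energy cost.
LEVELS = [0.5, 1.0, 2.0, 4.0, 8.0]
ENERGY_COST = 0.5


def utility(power: float, snr_per_unit: float, noise_std: float, rng: random.Random) -> float:
    """Noisy utility: log2(1 + SNR) - cost * power."""
    return math.log2(1.0 + snr_per_unit * power) - ENERGY_COST * power + rng.gauss(0.0, noise_std)


def simulator(power: float, rng: random.Random) -> float:
    """Stage 1: cheap, simplified channel model used for training."""
    return utility(power, snr_per_unit=2.0, noise_std=0.1, rng=rng)


def prototype(power: float, rng: random.Random) -> float:
    """Stage 2: higher-fidelity testbed with a different channel and more noise."""
    return utility(power, snr_per_unit=1.5, noise_std=0.3, rng=rng)


def train_bandit(levels: Sequence[float], steps: int, epsilon: float, rng: random.Random) -> float:
    """Epsilon-greedy with sample-average value estimates (single-state RL)."""
    counts = [0] * len(levels)
    values = [0.0] * len(levels)
    for _ in range(steps):
        if rng.random() < epsilon:
            i = rng.randrange(len(levels))  # explore
        else:
            i = max(range(len(levels)), key=lambda j: values[j])  # exploit
        r = simulator(levels[i], rng)
        counts[i] += 1
        values[i] += (r - values[i]) / counts[i]  # incremental mean
    return levels[max(range(len(levels)), key=lambda j: values[j])]


def validate(power: float, baseline_power: float, trials: int, rng: random.Random) -> bool:
    """Approve deployment only if the learned setting beats the baseline on the prototype."""
    learned = mean(prototype(power, rng) for _ in range(trials))
    baseline = mean(prototype(baseline_power, rng) for _ in range(trials))
    return learned > baseline


rng = random.Random(42)
chosen = train_bandit(LEVELS, steps=5_000, epsilon=0.1, rng=rng)
deploy = validate(chosen, baseline_power=4.0, trials=500, rng=rng)
print(f"learned power level: {chosen}, deploy to live network: {deploy}")
```

The prototype deliberately uses a different channel model than the simulator, so the validation gate checks that the learned setting still beats the hand-tuned baseline under model mismatch before it reaches live traffic.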

Conclusions

This work presented a systematic review of the use of deep learning models to solve 5G-related problems. As reported in this review, 5G stands to benefit from deep learning. Although these models remove some of the traditional modeling complexity, developers need to strike the right balance between performance and level of abstraction: more detailed models are not necessarily more powerful, and the added complexity often cannot be justified.

The review has also shown that the deep learning techniques in use span a wide range of possibilities, so developers must carefully choose the right strategy for a given problem. We also found that many works developed hybrid approaches in an attempt to cover a whole problem. In 5G, deep learning techniques are also often combined with optimization algorithms, such as genetic algorithms, to produce optimized solutions.

Overall, the use of deep learning in 5G has already produced many important contributions, and we expect these to evolve further in the near future, despite the many limitations identified in this review.

The full article is available at https://doi.org/10.3390/a13090208.

References

- Taras Maksymyuk et al.; Deep Learning Based Massive MIMO Beamforming for 5G Mobile Network. In Proceedings of the 2018 IEEE 4th International Symposium on Wireless Systems within the International Conferences on Intelligent Data Acquisition and Advanced Computing Systems (IDAACS-SWS); IEEE, 2018.

- Carlos Hernan Tobar Arteaga; Faiber Botina Anacona; Kelly Tatiana Tobar Ortega; Oscar Mauricio Caicedo Rendon; A Scaling Mechanism for an Evolved Packet Core Based on Network Functions Virtualization. IEEE Transactions on Network and Service Management 2020, 17, 779-792, 10.1109/tnsm.2019.2961988.

- Ruichun Gu; Junxing Zhang; GANSlicing: A GAN-Based Software Defined Mobile Network Slicing Scheme for IoT Applications. In Proceedings of ICC 2019-2019 IEEE International Conference on Communications (ICC); IEEE, 2019.

- Chuanting Zhang; Haixia Zhang; Dongfeng Yuan; Minggao Zhang; Citywide Cellular Traffic Prediction Based on Densely Connected Convolutional Neural Networks. IEEE Communications Letters 2018, 22, 1656-1659, 10.1109/lcomm.2018.2841832.

- Changqing Luo; Jinlong Ji; Qianlong Wang; Xuhui Chen; Pan Li; Channel State Information Prediction for 5G Wireless Communications: A Deep Learning Approach. IEEE Transactions on Network Science and Engineering 2018, 7, 227-236, 10.1109/tnse.2018.2848960.

- Samuel Joseph; Rakesh Misra; Sachin Katti; Towards Self-Driving Radios: Physical-Layer Control Using Deep Reinforcement Learning. In Proceedings of the 20th International Workshop on Mobile Computing Systems and Applications, 2019.

- Ian Goodfellow; Yoshua Bengio; Aaron Courville; Deep Learning; MIT Press: Cambridge, 2016; p. 1.

- Yann LeCun; Yoshua Bengio; Geoffrey Hinton; Deep learning. Nature 2015, 521, 436-444, 10.1038/nature14539.

- Bilal Hussain; Qinghe Du; Sihai Zhang; Ali Imran; Qammer Hussain Abbasi; Mobile Edge Computing-Based Data-Driven Deep Learning Framework for Anomaly Detection. IEEE Access 2019, 7, 137656-137667, 10.1109/access.2019.2942485.