Please note this is a comparison between Version 2 by Jessie Wu and Version 1 by Antonio Jorge Forte.

Pain is a complex and subjective experience, and traditional methods of pain assessment can be limited by factors such as self-report bias and observer variability. AI-based voice analysis can be an effective tool for pain detection in adult patients with various types of pain, including chronic and acute pain.

- pain

- biomarkers

- artificial intelligence

- voice

- machine learning

- vocal biomarkers

- natural language processing

- pain assessment

- voice analysis

1. Background

Pain is a significant public health problem in the United States, and its subjective nature makes effective pain management challenging [1][2][3]. The International Association for the Study of Pain (IASP) defines pain as “an unpleasant sensory and emotional experience associated with, or resembling that associated with, actual or potential tissue damage” [4][5][6]. The previous definition was revised in light of the many variables that affect how intense one’s painful episodes are, including past painful experiences, cultural and social contexts, individual pain tolerance, gender, age, and mental or emotional state [2][7].

Despite a thorough understanding of the pathophysiological processes underlying the physical pain response and the technological advances to date, pain is often poorly managed. Because pain is currently assessed subjectively, the associated biases can lead to misjudged pain levels, which increases unnecessary costs and risks. In addition, poor pain relief can result in emotional distress and is linked to several consequences, including chronic pain [8][9][10].

Self-reports and observations form the basis of well-defined instruments for measuring pain and pain-related factors. Visual analog scales (VAS), the McGill Pain Questionnaire, and the numeric rating scales (NRS) are examples of patient-reported outcome measures (PROs, self-reports, or PROMs), which are frequently considered “the gold standard” for measuring acute and chronic pain. As these strategies depend on patients’ accounts, they are exclusively applicable to individuals with no verbal or cognitive impairments [1][6][11][12]. Because of this, all of these established techniques are inapplicable to newborns, delirious, sedated, and ventilated patients, or individuals with dementia or developmental and intellectual disorders [13]. Such patients are entirely dependent on others’ awareness of nonverbal pain cues. In light of this, observational pain scales are advised for use among adults in critical conditions when self-reported pain measurements are not feasible. Even so, the reliability and validity of these instruments are still limited, because even qualified raters cannot ensure an unbiased judgment [5][14][15][16][17][18][19].

Automatic pain recognition has transitioned from being a theory to becoming a highly important area of study in the last decade [10]. As a result, a few studies have concentrated on utilizing artificial intelligence (AI) to identify or classify pain levels using audio inputs.

2. Artificial Intelligence Techniques Used in Pain Detection

Artificial intelligence (AI), now viewed as a branch of engineering, describes a system’s ability to mimic human behavior and exhibit intelligence. The adoption of low-cost, high-performance graphics processing units (GPUs) and tensor processing units (TPUs), quicker cloud computing platforms with a high digital data storage capacity, and model training with cost-effective infrastructure for AI applications have all contributed to AI’s unprecedented processing power [20][21][22][23].

There are two main categories of AI applications in the field of medicine: physical and virtual. The virtual subfield involves machine learning (ML), natural language processing (NLP), and deep learning (DL). On the other hand, the physical subfield of AI in medicine involves medical equipment and care bots (intelligent robots), which assist in delivering medical care [24][25][26][27].

Because of their cutting-edge performance in tasks such as image classification, DL algorithms have become increasingly prominent in the past ten years. NLP refers to the ability of a machine to comprehend text and speech, and it has many practical applications, including speech recognition and sentiment analysis.

Machine learning algorithms may be classified into three categories: unsupervised (capability to recognize patterns), supervised (classification and prediction algorithms based on previous data), and reinforcement learning (the use of reward and punishment patterns to build a practical plan for a specific problem space). ML has been applied to a variety of medical fields, including pain management [25][27][28].

To establish an automated pain evaluation system, it is essential to document the pertinent input data channels. Modality is the term used to describe the behavioral or physiological sources of information. The principal behavioral modalities are auditory, body language, tactile, and facial expressions [29][30].

The availability of a few databases with precise and representative data linked to pain has allowed for recent developments in the field of automatic pain assessment [31], with most works focusing on the modeling of facial expressions [7].

Numerous databases have been established by pooling data from diverse modalities acquired from distinct cohorts of healthy individuals and patients. Most studies use the following publicly available databases: UNBC-McMaster, BioVid Heat Pain, MIntPAIN, iCOPE, iCOPEvid, NPAD-I, APN-db, EmoPain, Emobase 2010, SenseEmotion, and X-ITE.

The BioVid Heat Pain Database is the second most frequently used dataset for pain detection, after the UNBC-McMaster Shoulder Pain Expression Archive Database. BioVid contains video recordings of 90 healthy people subjected to heat pain applied to the forearm. UNBC-McMaster is made up of 200 videos of participants’ faces as they experience pain through physical manipulation of the shoulder [29][32][33][34].

Voice, meanwhile, has thus far received little consideration. Out of all the publicly available databases, BioVid Heat Pain, SenseEmotion, X-ITE, and Emobase use audio as one of their modalities. To fully leverage the potential of these databases, it is imperative to employ deep learning algorithms. Systems built on deep learning operate in two stages: training and inference. During training, the system is presented with a large dataset so as to teach it to recognize patterns and make predictions. Then, the trained model is used for inference, creating predictions based on the new data [29][32][33][35].
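The two stages described above can be illustrated with a deliberately small sketch. It is not a deep learning system and does not reproduce any method from the cited studies: it assumes a single-feature logistic model, and the data, the `train` and `infer` function names, and the idea of one normalized acoustic feature per recording are hypothetical choices made only to show how training differs from inference.

```python
import math


def sigmoid(z):
    """Logistic function mapping any real number to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train(features, labels, epochs=2000, lr=0.5):
    """Training stage: fit a weight and a bias by gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(features, labels):
            error = sigmoid(w * x + b) - y  # prediction minus target
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def infer(model, x):
    """Inference stage: apply the already trained parameters to new data."""
    w, b = model
    return sigmoid(w * x + b)


# Hypothetical data: one normalized acoustic feature per recording,
# labeled 1 for "pain" and 0 for "no pain". Values are made up.
train_x = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
train_y = [0, 0, 0, 1, 1, 1]

model = train(train_x, train_y)   # stage 1: learn from labeled examples
p_low = infer(model, 0.15)        # stage 2: predict on unseen inputs
p_high = infer(model, 0.85)
print(f"p(pain | 0.15) = {p_low:.3f}, p(pain | 0.85) = {p_high:.3f}")
```

The parameters are updated only inside `train`. `infer` reuses them unchanged, which is why inference is typically much cheaper than training.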

3. Artificial Intelligence Models Used in Pain Detection from Voice

Multiple artificial neurons are merged to create an artificial neural network (ANN). These artificial neurons imitate the behavior and structure of biological neurons. Furthermore, to enhance the efficiency and precision of the ANN, the neurons are organized into layers for ease of manipulation and precise mathematical representation.

The operation of artificial neurons is governed by three fundamental principles: multiplication, summation, and activation. Initially, each input value is multiplied by a distinctive weight. Subsequently, a summation function aggregates all the weighted inputs and the bias. Finally, at the neuron's output, a transfer (activation) function is applied to this sum to produce the output value [36][37].
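As a minimal sketch of these three steps, the following code implements a single artificial neuron. The logistic (sigmoid) transfer function is an assumption chosen for illustration, since the text does not name a specific one, and the input values, weights, and bias are arbitrary.

```python
import math


def sigmoid(z):
    """Logistic transfer function, assumed here purely for illustration."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    """Single artificial neuron: multiply, sum, then activate."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Multiplication: each input is scaled by its own weight.
    weighted = [x * w for x, w in zip(inputs, weights)]
    # Summation: the weighted inputs are added together with the bias.
    z = sum(weighted) + bias
    # Activation: the transfer function maps the sum to the output.
    return activation(z)


# Arbitrary example values (not taken from any study).
output = neuron([0.5, -1.0, 2.0], [0.4, 0.3, 0.1], bias=0.1)
print(round(output, 4))
```

Here the weighted sum is 0.2 - 0.3 + 0.2 + 0.1 = 0.2, so the neuron returns the sigmoid of 0.2, roughly 0.55.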

The topology of an ANN refers to the way in which different artificial neurons are coupled with one another [36]. Different ANN topologies are appropriate for addressing different issues.

The most critical topologies are as follows:

- (1) Recurrent Artificial Neural Networks (RNNs).

- (2) Feed-Forward Artificial Neural Networks (FNNs).

- (3) Convolutional Neural Networks (CNNs): These use multiple layers to automatically learn features from the input data.

- (4) Long Short-Term Memory networks (LSTMs): These can handle vanishing and exploding gradients, which are common problems in the training of RNNs.

- (5) Multitask Neural Network (MT-NN): This employs the sharing of representations across associated tasks to yield a more advanced generalization model.

Other types include Bi-directional Artificial Neural Networks (Bi-ANN) and Self-Organizing Maps (SOM) [36][37][38].

An artificial neural network can solve a problem once its topology has been selected and it has been tuned to learn the right behavior [36][37].
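The difference between a feed-forward and a recurrent topology can be sketched with single scalar units. This is an illustrative toy under assumed weights and a tanh activation, not an architecture from the cited works: the feed-forward unit sees only the current input, whereas the recurrent unit also receives its own previous output.

```python
import math


def feed_forward_step(x, w_in, bias):
    """Feed-forward unit: the output depends only on the current input."""
    return math.tanh(w_in * x + bias)


def recurrent_step(x, h_prev, w_in, w_rec, bias):
    """Recurrent unit: the output also depends on the previous hidden state."""
    return math.tanh(w_in * x + w_rec * h_prev + bias)


def run_recurrent(sequence, w_in, w_rec, bias):
    """Process a sequence step by step, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = recurrent_step(x, h, w_in, w_rec, bias)
        states.append(h)
    return states


# A short input sequence: one active frame followed by silence.
frames = [1.0, 0.0, 0.0]

ff = [feed_forward_step(x, w_in=1.0, bias=0.0) for x in frames]
rnn = run_recurrent(frames, w_in=1.0, w_rec=0.5, bias=0.0)

print("feed-forward:", [round(v, 3) for v in ff])
print("recurrent:   ", [round(v, 3) for v in rnn])
```

The feed-forward outputs return to zero as soon as the input does, while the recurrent outputs decay gradually because each step feeds the previous state back in. This memory of earlier frames is what makes recurrent topologies a natural fit for sequential signals such as audio.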

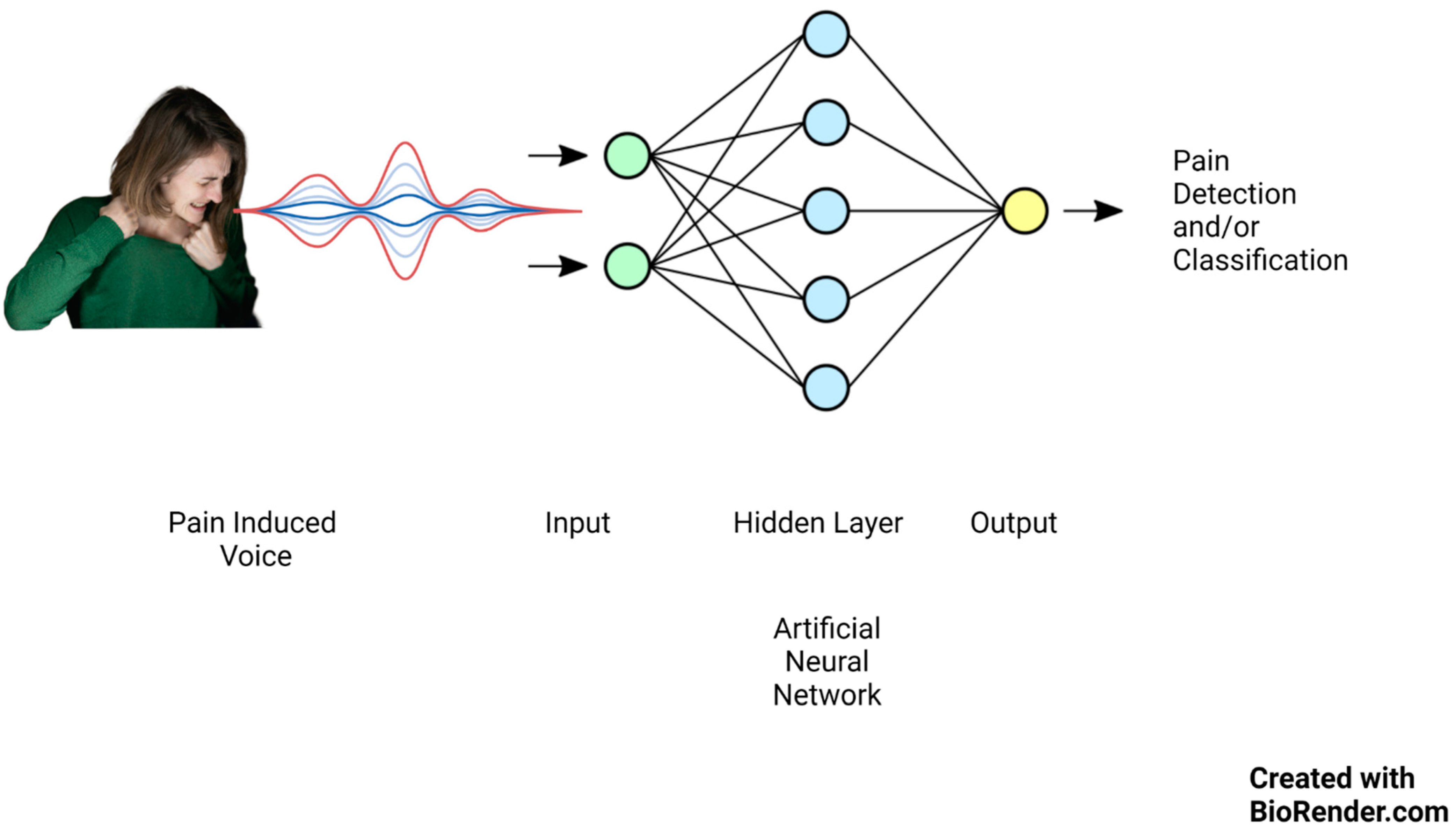

Lately, there has been significant focus on artificial intelligence algorithms in sound event classification and voice recognition, as shown in Figure 1.

Figure 1. Artificial neural network mechanism of action on pain-induced vocalization.

Keyword spotting (KWS), wake-up word (WUW), and speech command recognition (SCR) are three essential techniques in speech processing that enable machines to recognize spoken words and respond accordingly.

Keyword identification technology is an automated approach to recognizing specific keywords within a continuous stream of speech and vocalization.

KWS systems are less reliant on high-quality audio inputs. They are created to be cheap and flexible and to run accurately and reliably on low-resource gadgets such as embedded edge devices [39][40][41][42].
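One simple way to picture keyword spotting in a continuous stream is as thresholding a smoothed sequence of per-frame keyword scores. The sketch below assumes that some upstream acoustic model (not shown, and hypothetical here) already outputs a score between 0 and 1 for each audio frame. The window size, threshold, and score values are illustrative assumptions rather than settings from the cited systems.

```python
def smooth(scores, window):
    """Moving average over the current frame and up to window - 1 earlier frames."""
    smoothed = []
    for i in range(len(scores)):
        start = max(0, i - window + 1)
        chunk = scores[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def spot_keyword(scores, window=3, threshold=0.6):
    """Return the frame indices at which a keyword detection begins."""
    detections = []
    active = False
    for i, s in enumerate(smooth(scores, window)):
        if s >= threshold and not active:
            detections.append(i)  # rising edge: a new detection starts
            active = True
        elif s < threshold:
            active = False        # score dropped: ready for the next keyword
    return detections


# Hypothetical per-frame keyword scores from an upstream model.
frame_scores = [0.1, 0.1, 0.9, 0.9, 0.9, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.2]
hits = spot_keyword(frame_scores)
print(hits)
```

Smoothing suppresses isolated spikes in the scores, and reporting only the rising edge avoids counting one spoken keyword several times. Both steps are cheap, in keeping with the low-resource setting described above.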

Owing to developments in signal processing and machine learning methods, researchers have begun to create algorithms that automate pain level assessment from speech. For example, Tsai et al. [14] embedded stacked bottleneck vocal features in an LSTM architecture to detect pain, using prosodic signals from a subset of an emergency triage dataset. Later, Li et al. [7] introduced age and gender factors into a variational acoustic model.

References

- Keskinarkaus, A.; Yang, R.; Fylakis, A.; Mostafa, S.E.; Hautala, A.; Hu, Y.; Peng, J.; Zhao, G.; Seppänen, T.; Karppinen, J. Pain fingerprinting using multimodal sensing: Pilot study. Multimed. Tools Appl. 2021, 81, 5717–5742.

- Tsai, F.-S.; Weng, Y.-M.; Ng, C.-J.; Lee, C.-C. Pain versus Affect? An Investigation in the Relationship between Observed Emotional States and Self-Reported Pain. In Proceedings of the 2019 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Lanzhou, China, 18–21 November 2019.

- Duca, L.M.; Helmick, C.G.; Barbour, K.E.; Nahin, R.L.; Von Korff, M.; Murphy, L.B.; Theis, K.; Guglielmo, D.; Dahlhamer, J.; Porter, L.; et al. A Review of Potential National Chronic Pain Surveillance Systems in the United States. J. Pain 2022, 23, 1492–1509.

- Shu, L.; Xie, J.; Yang, M.; Li, Z.; Li, Z.; Liao, D.; Xu, X.; Yang, X. A Review of Emotion Recognition Using Physiological Signals. Sensors 2018, 18, 2074.

- Nazari, R.; Sharif, S.P.; Allen, K.; Nia, H.S.; Yee, B.-L.; Yaghoobzadeh, A. Behavioral Pain Indicators in Patients with Traumatic Brain Injury Admitted to an Intensive Care Unit. J. Caring Sci. 2018, 7, 197.

- Berger, S.E.; Baria, A.T. Assessing Pain Research: A Narrative Review of Emerging Pain Methods, Their Technosocial Implications, and Opportunities for Multidisciplinary Approaches. Front. Pain Res. 2022, 3, 896276.

- Li, J.-L.; Weng, Y.-M.; Ng, C.-J.; Lee, C.-C. Learning Conditional Acoustic Latent Representation with Gender and Age Attributes for Automatic Pain Level Recognition. In Proceedings of the Interspeech 2018, Hyderabad, India, 2–6 September 2018.

- Han, J.S.; Bird, G.C.; Li, W.; Jones, J.; Neugebauer, V. Computerized analysis of audible and ultrasonic vocalizations of rats as a standardized measure of pain-related behavior. J. Neurosci. Methods 2005, 141, 261–269.

- Ren, Z.; Cummins, N.; Han, J.; Schnieder, S.; Krajewski, J.; Schuller, B. Evaluation of the pain level from speech: Introducing a novel pain database and benchmarks. In Proceedings of the 13th ITG-Symposium, Oldenburg, Germany, 10–12 October 2018. Speech Communication.

- Werner, P.; Lopez-Martinez, D.; Walter, S.; Al-Hamadi, A.; Gruss, S.; Picard, R.W. Automatic Recognition Methods Supporting Pain Assessment: A Survey. IEEE Trans. Affect. Comput. 2019, 13, 530–552.

- Breau, L.M.; McGrath, P.; Camfield, C.; Rosmus, C.; Finley, G.A. Preliminary validation of an observational checklist for persons with cognitive impairments and inability to communicate verbally. Dev. Med. Child Neurol. 2000, 42, 609–616.

- Gruss, S.; Geiger, M.; Werner, P.; Wilhelm, O.; Traue, H.C.; Al-Hamadi, A.; Walter, S. Multi-modal signals for analyzing pain responses to thermal and electrical stimuli. JoVE (J. Vis. Exp.) 2019, 146, e59057.

- Takai, Y.; Yamamoto-Mitani, N.; Ko, A.; Heilemann, M.V. Differences in Pain Measures by Mini-Mental State Examination Scores of Residents in Aged Care Facilities: Examining the Usability of the Abbey Pain Scale–Japanese Version. Pain Manag. Nurs. 2014, 15, 236–245.

- Tsai, F.-S.; Weng, Y.-M.; Ng, C.-J.; Lee, C.-C. Embedding stacked bottleneck vocal features in a LSTM architecture for automatic pain level classification during emergency triage. In Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), San Antonio, TX, USA, 23–26 October 2017.

- Helmer, L.M.; Weijenberg, R.A.; de Vries, R.; Achterberg, W.P.; Lautenbacher, S.; Sampson, E.L.; Lobbezoo, F. Crying out in pain—A systematic review into the validity of vocalization as an indicator for pain. Eur. J. Pain 2020, 24, 1703–1715.

- Roulin, M.-J.; Ramelet, A.-S. Generating and Selecting Pain Indicators for Brain-Injured Critical Care Patients. Pain Manag. Nurs. 2015, 16, 221–232.

- Manfredi, P.L.; Breuer, B.; Meier, D.E.; Libow, L. Pain Assessment in Elderly Patients with Severe Dementia. J. Pain Symptom Manag. 2003, 25, 48–52.

- Hong, H.-T.; Li, J.-L.; Weng, Y.-M.; Ng, C.-J.; Lee, C.-C. Investigating the Variability of Voice Quality and Pain Levels as a Function of Multiple Clinical Parameters. Age 2019, 762, 6.

- Lotan, M.; Icht, M. Diagnosing Pain in Individuals with Intellectual and Developmental Disabilities: Current State and Novel Technological Solutions. Diagnostics 2023, 13, 401.

- Nagireddi, J.N.; Vyas, A.K.; Sanapati, M.R.; Soin, A.; Manchikanti, L. The Analysis of Pain Research through the Lens of Artificial Intelligence and Machine Learning. Pain Physician 2022, 25, e211.

- Mundial, F.E.; Schwab, K. The Fourth Industrial Revolution: What it means, how to respond. In Proceedings of the 2016 World Economic Forum, Dubai, United Arab Emirates, 11–12 November 2016.

- House of Lords Select Committee A. AI in the UK: Ready, Willing and Able; House of Lords: London, UK, 2018; p. 36.

- De Neufville, R.; Baum, S.D. Collective action on artificial intelligence: A primer and review. Technol. Soc. 2021, 66, 101649.

- Cornet, G. Chapter 4. Robot companions and ethics: A pragmatic approach of ethical design. J. Int. Bioéth. 2013, 24, 49–58.

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metabolism 2017, 69, S36–S40.

- Jung, W.; Lee, K.E.; Suh, B.J.; Seok, H.; Lee, D.W. Deep learning for osteoarthritis classification in temporomandibular joint. Oral Dis. 2023, 29, 1050–1059.

- Liu, Z.; He, M.; Jiang, Z.; Wu, Z.; Dai, H.; Zhang, L.; Luo, S.; Han, T.; Li, X.; Jiang, X.; et al. Survey on natural language processing in medical image analysis. Zhong Nan Da Xue Xue Bao Yi Xue Ban 2022, 47, 981–993.

- Theofilatos, K.; Pavlopoulou, N.; Papasavvas, C.; Likothanassis, S.; Dimitrakopoulos, C.; Georgopoulos, E.; Moschopoulos, C.; Mavroudi, S. Predicting protein complexes from weighted protein–protein interaction graphs with a novel unsupervised methodology: Evolutionary enhanced Markov clustering. Artif. Intell. Med. 2015, 63, 181–189.

- Gkikas, S.; Tsiknakis, M. Automatic assessment of pain based on deep learning methods: A systematic review. Comput. Methods Programs Biomed. 2023, 231, 107365.

- Qin, K.; Chen, W.; Cui, J.; Zeng, X.; Li, Y.; Li, Y.; You, X. The influence of time structure on prediction motion in visual and auditory modalities. Atten. Percept. Psychophys. 2022, 84, 1994–2001.

- Thiam, P.; Kessler, V.; Amirian, M.; Bellmann, P.; Layher, G.; Zhang, Y.; Velana, M.; Gruss, S.; Walter, S.; Traue, H.C.; et al. Multi-Modal Pain Intensity Recognition Based on the SenseEmotion Database. IEEE Trans. Affect. Comput. 2019, 12, 743–760.

- Walter, S.; Gruss, S.; Ehleiter, H.; Tan, J.; Traue, H.C.; Crawcour, S.; Werner, P.; Al-Hamadi, A.; Andrade, A.O. The biovid heat pain database data for the advancement and systematic validation of an automated pain recognition system. In Proceedings of the 2013 IEEE International Conference on Cybernetics (CYBCO), Lausanne, Switzerland, 13–15 June 2013.

- Lucey, P.; Cohn, J.F.; Prkachin, K.M.; Solomon, P.E.; Matthews, I. Painful data: The UNBC-McMaster shoulder pain expression archive database. In Proceedings of the 2011 IEEE International Conference on Automatic Face & Gesture Recognition (FG), Santa Barbara, CA, USA, 21–23 March 2011.

- Rodriguez, P.; Cucurull, G.; Gonzalez, J.; Gonfaus, J.M.; Nasrollahi, K.; Moeslund, T.B.; Roca, F.X. Deep Pain: Exploiting Long Short-Term Memory Networks for Facial Expression Classification. IEEE Trans. Cybern. 2022, 52, 3314–3324.

- Gouverneur, P.; Li, F.; Shirahama, K.; Luebke, L.; Adamczyk, W.M.; Szikszay, T.M.; Luedtke, K.; Grzegorzek, M. Explainable Artificial Intelligence (XAI) in Pain Research: Understanding the Role of Electrodermal Activity for Automated Pain Recognition. Sensors 2023, 23, 1959.

- Krenker, A.; Bešter, J.; Kos, A. Introduction to the artificial neural networks. In Artificial Neural Networks: Methodological Advances and Biomedical Applications; InTech: Rijeka, Croatia, 2011; pp. 1–18.

- O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

- Tsai, S.-T.; Fields, E.; Xu, Y.; Kuo, E.-J.; Tiwary, P. Path sampling of recurrent neural networks by incorporating known physics. Nat. Commun. 2022, 13, 7231.

- Mohan, H.M.; Anitha, S. Real Time Audio-Based Distress Signal Detection as Vital Signs of Myocardial Infarction Using Convolutional Neural Networks. J. Adv. Inf. Technol. 2022, 13, 106–116.

- Zehetner, A.; Hagmüller, M.; Pernkopf, F. Wake-up-word spotting for mobile systems. In Proceedings of the 2014 22nd European Signal Processing Conference (EUSIPCO), Lisbon, Portugal, 1–5 September 2014.

- Hou, J.; Xie, L.; Zhang, S. Two-stage streaming keyword detection and localization with multi-scale depthwise temporal convolution. Neural Netw. 2022, 150, 28–42.

- Qin, H.; Ma, X.; Ding, Y.; Li, X.; Zhang, Y.; Ma, Z.; Wang, J.; Luo, J.; Liu, X. BiFSMNv2: Pushing Binary Neural Networks for Keyword Spotting to Real-Network Performance. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–13.
