Please note this is a comparison between Version 1 by Shuran Zheng and Version 4 by Jessie Wu.

Abstract

The Simultaneous Localization and Mapping (SLAM) technique has achieved astonishing progress over the last few decades and has generated considerable interest in the autonomous driving community. With its conceptual roots in navigation and mapping, SLAM outperforms some traditional positioning and localization techniques since it can support more reliable and robust localization, planning, and controlling to meet some key criteria for autonomous driving. In this study the authors first give an overview of the different SLAM implementation approaches and then discuss the applications of SLAM for autonomous driving with respect to different driving scenarios, vehicle system components and the characteristics of the SLAM approaches. The authors then discuss some challenging issues and current solutions when applying SLAM for autonomous driving. Some quantitative quality analysis means to evaluate the characteristics and performance of SLAM systems and to monitor the risk in SLAM estimation are reviewed. In addition, this study describes a real-world road test to demonstrate a multi-sensor-based modernized SLAM procedure for autonomous driving. The numerical results show that a high-precision 3D point cloud map can be generated by the SLAM procedure with the integration of Lidar and GNSS/INS. An online localization solution with four–five cm accuracy can be achieved based on this pre-generated map and online Lidar scan matching with a tightly fused inertial system.

- Simultaneous Localization and Mapping

- autonomous driving

- localization

- high definition map

1. Introduction

DAutonomous (also callepending on the different characteristics of Simultaneous Localization and Mapping (SLAM) techniques, there could be different applications ford self-driving, driverless, or robotic) vehicle operation is a significant academic as well as an industrial research topic. It is predicted that fully autonomous vehicles will become an important part of total vehicle sales in the next decades. The promotion of autonomous driving. One classification of the applications is whether they are offline or online. A map satisfying a high-performance requirement is typically generated offline, such as the High Definitionvehicles draws attention to the many advantages, such as service for disabled or elderly persons, reduction in driver stress and costs, reduction in road accidents, elimination of the need for conventional public transit services, etc. (HD) map [1][2].

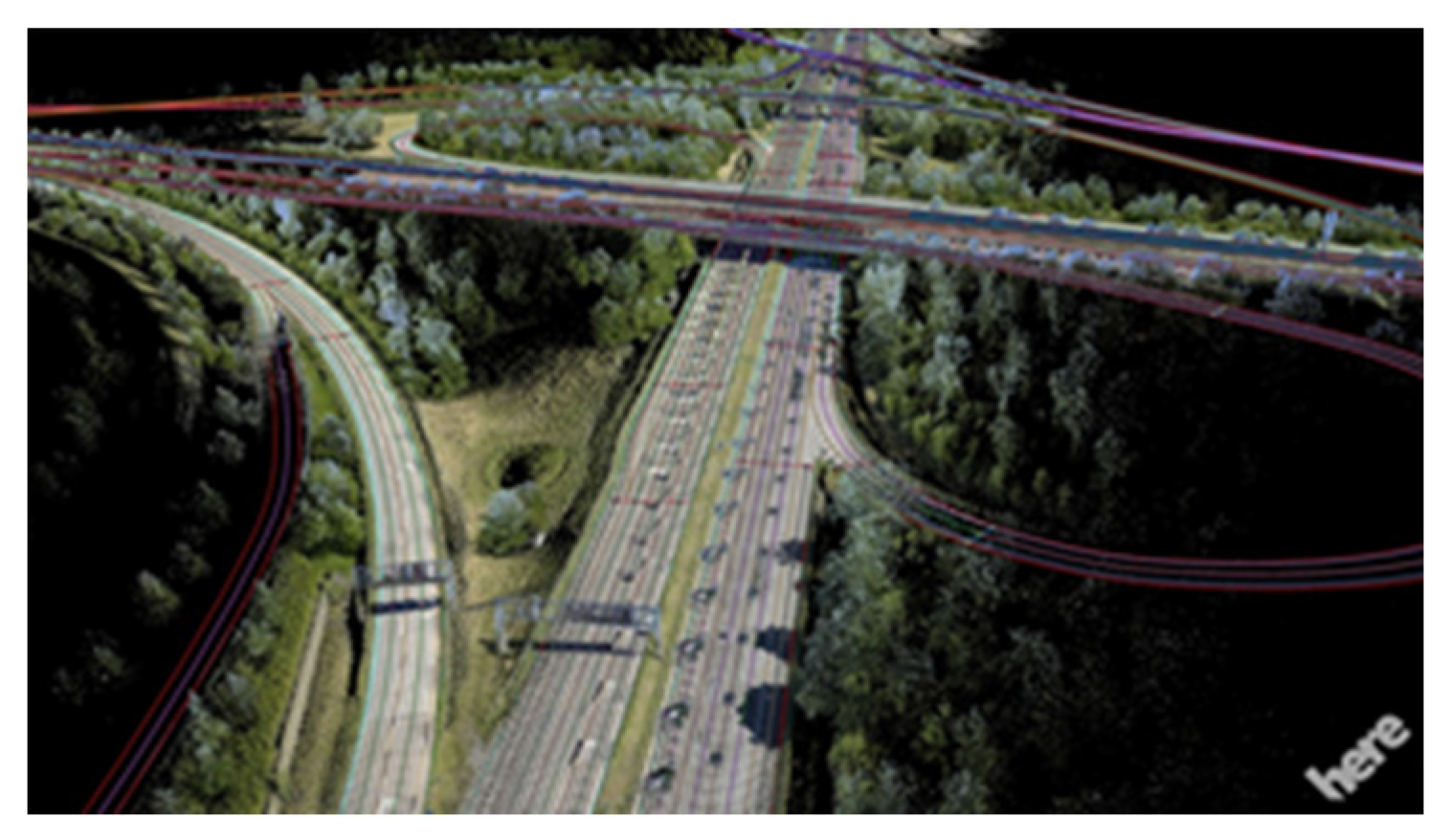

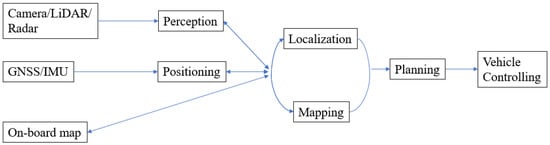

A Ftypical autonomor this kind of 3D point cloud map, an offline mapus vehicle system contains four key parts: localization, perception, planning, and controlling (Figure 1). generaPosition ing is the process ensures the accuracy and reliability of the map. Such maps can be pre-generated to support the real-time operations of autonomous vehicles.

2. High Definition Map Generation and Updating

Aof obtaining a (moving or static) object’s coordinates with respect to a given coordinate system. The coordinate system may be a local coordinate system or a geodetic datum such as WGS84. Localization is a process of estated earlier, SLAM can be used to generate digital maps used for autonomous driving, such as the HD mapimating the carrier’s pose (position and attitude) in relation to a reference frame or a map. The perception system monitors the road environment around the host vehicle and [1].identifies Duintereste to the stringent requirements, high quality sensors are usedd objects such as pedestrians, other vehicles, traffic lights, signage, etc.

Figure 1. Functional components of an autonomous driving system.

By Lidar is one of the core sensors for automated cars as it can etermining the coordinates of objects in the surrounding environment a map can be generate high-density 3D point clouds. Higd. This process is known as Mapping.

Path-end GNSS and INS technology are also used to provide accurate position information. Cameras can provideplanning is the step that utilizes localization, mapping, and perception information that is similar to the information detected by human eyes. The fusion of sensor data and analysis of road informo determine the optimal path in subsequent driving epochs, guiding the automated vehicle from one location to generate HD maps needs considerable computational power, which is not feasible in current onboard vehicleanother location. This plan is then converted into action using the controlling systems. Ther components, e.g., brake control before the HD map is buidetected traffic lights, etc.

All t-up offline, using techniques such as optimization-based SLAM. The offline map creationhese parts are closely related. The location information for both vehicle and road entities can be performobtained by drivcombining the road network several times to collect information, and then all the collectedposition, perception, and map information. In contrast, localization and mapping can be used to support better perceptive sensor informon. Accurate localization and posierception information is processed together to improve the accuracy of the finalessential for correct planning and controlling.

Tthere road environment and road rules may change, for instance, the speed limit may be reduced due to road work,are some key requirements that need to be considered for the localization and perception steps. The first is accuracy. For autonomous driving, the information about where the road infrastructure may be changed due to building developments and where the vehicle is within the lane supports the planning and controlling steps. To realize these, and so on. Therefore the HD map needs frequent updates. Such updates can utilize the online data collected from any autonomous car. For example, the data is transmitted to central (cloud) computers where the updateto ensure vehicle safety, there is a stringent requirement for position estimation at the lane level, or even the “where-in-lane” level (i.e., the sub-lane level). Recognition range is important because the planning and controlling steps need enough processing time for the vehicle to react [3]. cRomputations are performed. Other cars can receive such cloud-based updates and make a timely adjustment tobustness means the localization and perception should be robust to any changes while driving, such as driving plans. Jo et al. [3] pscenarioposed a SLAM change update (SLAMCU) algorithm, utilizing a Rao–Blackwellized PF apps (urban, highway, tunnel, rural, etc.), lighting conditions, weather, etc.

Troach for onlineditional vehicle posilocalization and (new) map state estimation. In the work of [4], a nperception techniques cannot mew feature layer of HD maps can be generated using Graph SLAM when a vehicle is temporarily stopped or in a parking lot. The new feature layer from one vehicle can then be uploadt all of the aforementioned requirements. For instance, GNSS error occurs as the signals may be distorted, or even blocked, by trees, urban canyons, tunnels, etc. Often an inertial navigation system (INS) is used to the map cloud and integrated with that from other vehicles into a new feature layer in the map cloud, thus enabling more precise and robust vehicle localization. In the work of Zhang et al.support navigation during GNSS signal outages, to continue providing position, velocity, and altitude information. However, inertial measurement bias needs frequently estimated corrections or calibration, which is best achieved using GNSS measurements. Nevertheless, an [5], integreal-time semantic segmentation and Visual SLAM were combined to generate semantic point cloud data ted GNSS/INS system is still not sufficient since highly automated driving requires not only positioning information of the host vehicle, but also the spatial characteristics of the roadobjects in the surrounding environment, which was then matched with a pre-constructed HD map to confirm map elements that have not changed, and generate new elements when appearing. Hence perceptive sensors, such as Lidar and Cameras, are often used for both localization and perception. Lidar can acquire a 3D point cloud directly and map the environment, with the aid of GNSS and INS, to an accuracy that can reach the centimeter level in urban road driving conditions [4]. However, thue high cos facilitating crowdsource updates of HD maps.

3. Small Local Map Generation

SLAMt has limited the commercial adoption of Lidar systems cain also be used for small local areas. One example is within parking areas. The driving speed in a parking lot is low, therefore the vision technique will be more robustvehicles. Furthermore, its accuracy is influenced by weather (such as rain) and lighting conditions. Compared to Lidar, Camera systems have lower accuracy but are also affected by numerous error sources [5][6]. Neverthan in other high-speed driving scenarios. The parking area could be unknown (public parking lot or garage), or known (home zone)–both caseseless, they are much cheaper, smaller in size, require less maintenance, and use less energy. Vision-based systems can provide abundant environment information, similar to what human eyes can perceive, and the data can benefit from SLAM. Since SLAM can be used fused with other sensors to determine the location of detected features.

A map with rich rout GNSS signals, it is suitable for vehicles in indoor or underground parking areas, using just the perceptive sensor and odometry measurements (velocity, turn angle) or IMU measuad environment information is essential for the aforementioned sensors to achieve accurate and robust localization and perception. Pre-stored road information makes autonomous driving robust to the changing environment and road dynamics. The recognition range requirements. For unknown public parking areas, the posi can be satisfied since an onboard map can provide timely information of the car and the obstacles, such as pillars, sidewalls, etc., can be estimated at the same time, guiding the parking system. For home zone parking, the pre-generated map and a frequent parking trajectory can be storen the road network. Map-based localization and navigation have been studied using different types of map information. Google Map is one example as it provides worldwide map information including images, topographic details, and satellite images [7], and within the automate is available via mobile phone and vehicle system. Each time the car returns home, re-localization using the stored map can be carried out by matching detapps. However, the use of maps will be limited by the accuracy of the maps, and in some selected features with the map. The frequenareas the map’s resolution may be inadequate. In [8], the trajectory could be used for the planning and controlling steps.

Aauthors considered low-accuracy maps for navigation by combining data from other sensors. They detected moving objects using approach thatLidar data and utilizes multi-level surface (MLS) maps to locate the vehicle, and to calculate and plan the vehicle path within indoor parking areas wad a GNSS/INS system with a coarse open-source GIS map. Their results show their fusion technique can successfully detect and track moving objects. A precise curb-map-based localization method that uses a 3D-Lidar sensor and a high-precision map is proposed in [69]. InHowever, this rearch, graph-based SLAM was used for mapping, method will fail when curb information is lacking, or obstructed.

Recently, so-canlled the MLS map is then used to plan a global path from the start to the destination,“high-definition” (HD) maps have received considerable interest in the context of autonomous driving since they contain very accurate, and large volumes of, road network information [10]. aAccordind to robustly localize the vehicle with laser range measurements. In theg to some major players in the commercial HD map market, 10–20 cm accuracy has been achieved work of [11][712], and grid map and an Extended Kalman Filter (EKF) SLAM algorithm were used with W-band radar for autonomous back-in parking. In this research, an efficient EKF SLAM algorithm was proposed to enable real-time processing. In [8]it is predicted that in the next generation of HD maps, a few centimeters of accuracy will be reached. Such maps contain considerable information on road features, not only the static road entities and road geometry (curvature, grades, etc.), but also traffic management information such as traffic signs, traffic lighe authors proposed an around-view monitor (AVM)/ Lidar sensor fusion method to recognizts, speed limits, road markings, and so on. The autonomous car can use the HD map to precisely locate the parkinghost-car within the road lane and to provide rapid loop closing performance. The above studies have demonstrated that both filter-based SLAM and optimizatiestimate the relative location of the car with respect to road objects by matching the landmarks which are recognized by onboard sensors with pre-stored information within the HD map.

Therefon-bre, mased SLAM can be used tops, especially HD maps, play several roles in support efficient and accurate vehicle parking assistance (local area mapping and localization), even without GNSS. In the work of Qin et al.of autonomous driving and may be able to meet the stringent requirements of accuracy, precision, recognition ranging, robustness, and information richness. However, the application of the “map” for autonomous driving is also facilitated by [9],techniques pose graph optimuch as Simultaneous Localization is performed so as to achieve an optimized trajectory and a global map of a parking lot, with semantic features such as guide signs, parking lines, andand Mapping (SLAM). SLAM is a process by which a moving platform builds a map of the environment and uses that map to deduce its location at the same time. SLAM, which is widely used in the robotic field, has been demonstrated [13][14] as being apeed bumps. These kinds of features are more (long-term) stable and robust than traditional geometrical feaplicable for autonomous vehicle operations as it can support not only accurate map generation but also online localization within a previously generated map.

Wituh appropres, especially in underground parking environments. An EKF was then used to complete the localization system for autonomous iate sensor information (perception data, absolute and dead reckoning position information), a high-density and accurate map can be generated offline by SLAM. When driving, the self-driving.

4. Localization within the Existing Map

In mcap-based localization, ar can locate itself within the pre-stored map by matching method isthe sensor data to the map. SLAM can also be used to match “live” data with map information, using methodsaddress the problem of DATMO (detection and tracking of moving objects) su[15] which ais Iterative Closest Point (ICP), Normal Distribution Transform (NDT), and othersimportant for detecting pedestrians or other moving objects. [1][10].As Tthese algorithms can be linked to the SLAM problem since SLAM executes loop closing and re-localization using similar methods. For a SLAM problem, the ability to recognize a previously mapped object or feature an static parts of the environment are localized and mapped by SLAM, the dynamic components can concurrently be detected and tracked relative to the static objects or features. However, SLAM also has some challenging issues when applied to autonomous driving applications. For instance, “loop closure” can be used to relocatduce the vehicle accumulated bias within the environment is essential for coSLAM estimation in indoor or urban scenarios, but it is not normally applicable to highway scenarios.This paper will recting the mapsview some [11].key Ttechereforeniques for SLAM, the reuse of a pre-generated map toapplication of SLAM for autonomous driving, and suitable SLAM techniques related to the applications. lSection 2 gives a brief introducalize the vehicle can be consition to the principles and characteristics of some key SLAM techniques. Section 3 descred an extension of aibes some potential applications of SLAM algorithm. In other words, the pre-generated and stored mapfor autonomous driving. Some challenging issues in applying the SLAM technique for autonomous driving are discussed in canSection 4. beA treated as a type of “real-world road test to show the performance of a multi-sensor” to support-based SLAM procedure for autonomous driving is described in Section 5. The lcocalizationnclusions are given in Section 6.
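To make the idea of matching “live” data against a stored map more concrete, the following is a minimal sketch of point-to-point ICP in 2D, assuming NumPy and a brute-force nearest-neighbour search. It is a generic textbook form of the technique, not the implementation used in any of the cited works; the function names (`best_rigid_transform`, `icp`), the toy L-shaped map, and the offsets are all illustrative assumptions.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t with R @ src_i + t ~= dst_i (SVD-based)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)   # 2x2 cross-covariance of centred points
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:              # guard against a reflection solution
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(scan, map_pts, iters=20, tol=1e-9):
    """Align a live scan to stored map points; returns the accumulated (R, t)."""
    R_total, t_total = np.eye(2), np.zeros(2)
    cur = scan.copy()
    prev_err = np.inf
    for _ in range(iters):
        # Data association: each scan point is paired with its closest map point.
        dists = np.linalg.norm(cur[:, None, :] - map_pts[None, :, :], axis=2)
        idx = dists.argmin(axis=1)
        # Best rigid motion for the current pairing, then apply it to the scan.
        R, t = best_rigid_transform(cur, map_pts[idx])
        cur = cur @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
        err = np.mean(np.linalg.norm(cur - map_pts[idx], axis=1))
        if abs(prev_err - err) < tol:     # stop once the residual no longer changes
            break
        prev_err = err
    return R_total, t_total


# Toy example (hypothetical data): an L-shaped "map" and a scan of it taken
# from a slightly rotated and shifted vehicle pose.
theta = 0.02
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta),  np.cos(theta)]])
t_true = np.array([0.2, -0.1])
map_pts = np.array([[i, 0.0] for i in range(6)] + [[0.0, j] for j in range(1, 5)])
scan = (map_pts - t_true) @ R_true        # row-vector form of R_true.T @ (m - t_true)

R_est, t_est = icp(scan, map_pts)
print("estimated rotation:\n", R_est)
print("estimated translation:", t_est)
```

The pose offset here is kept well below half the point spacing, so the nearest-neighbour pairing is correct from the first iteration. With larger initial errors ICP can converge to a wrong local minimum, which is one reason practical systems seed the matching with a GNSS/INS or odometry prediction.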

References

- Liu, R.; Wang, J.; Zhang, B. High definition map for automated driving, overview and analysis. J. Navig. 2020, 73, 324–341. Litman, T. Autonomous Vehicle Implementation Predictions: Implications for Transport Planning. In Proceedings of the 2015 Transportation Research Board Annual Meeting, Washington, DC, USA, 11–15 January 2015.

- HERE. HERE HD Live Map—The Most Intelligent Vehicle Sensor. 2017. Available online: https://here.com/en/products-services/products/here-hd-live-map (accessed on 19 March 2017). Katrakazas, C.; Quddus, M.; Chen, W.H.; Deka, L. Real-time motion planning methods for autonomous on-road driving: State-of-the-art and future research directions. Transp. Res. C-EMER 2015, 60, 416–442.

- Jo, K.; Kim, C.; Sunwoo, M. Simultaneous localization and map change update for the high definition map-based autonomous driving car. Sensors 2018, 18, 3145. Seif, H.G.; Hu, X. Autonomous driving in the iCity- HD maps as a key challenge of the automotive industry. Engineering 2016, 2, 159–162.

- Kim, C.; Cho, S.; Sunwoo, M.; Jo, K. Crowd-sourced mapping of new feature layer for high-definition map. Sensors 2018, 18, 1472. Suganuma, N.; Yamamto, D.; Yoneda, K. Localization for autonomous vehicle on urban roads. J. Adv. Control Autom. Robot. 2015, 1, 47–53.

- Zhang, P.; Zhang, M.; Liu, J. Real-Time HD Map Change Detection for Crowdsourcing Update Based on Mid-to-High-End Sensors. Sensors 2021, 21, 2477. Fuentes-Pacheco, J.; Ruiz-Ascencio, J.; Rendon-Mancha, J.M. Visual simultaneous localization and mapping: A survey. Artif. Intell. Rev. 2015, 43, 55–81.

- Kummerle, R.; Hahnel, D.; Dolgov, D.; Thrun, S.; Burgard, W. Autonomous driving in a multi-level parking structure. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3395–3400. Pupilli, M.; Calway, A. Real-time visual SLAM with resilience to erratic motion. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), 2006, New York, NY, USA, 17–22 June 2006; pp. 1244–1249.

- Lee, H.; Chun, J.; Jeon, K. Autonomous back-in parking based on occupancy grid map and EKF SLAM with W-band radar. In Proceedings of the 2018 International Conference on Radar (RADAR), Brisbane, QLD, Australia, 27–31 August 2018; pp. 1–4. He, L.; Lai, Z. The study and implementation of mobile GPS navigation system based on Google maps. In Proceedings of the International Conference on Computer and Information Application (ICCIA), Tianjin, China, 3–5 December 2010; pp. 87–90.

- Im, G.; Kim, M.; Park, J. Parking line based SLAM approach using AVM/LiDAR sensor fusion for rapid and accurate loop closing and parking space detection. Sensors 2019, 19, 4811. Hosseinyalamdary, S.; Balazadegan, Y.; Toth, C. Tracking 3D moving objects based on GPS/IMU navigation solution, Laser Scanner Point Cloud and GIS Data. ISPRS Int. J. Geo.-Inf. 2015, 4, 1301–1316.

- Qin, T.; Chen, T.; Chen, Y.; Su, Q. AVP-SLAM: Semantic Visual Mapping and Localization for Autonomous Vehicles in the Parking Lot. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5939–5945. Wang, L.; Zheng, Y.; Wang, J. Map-based Localization method for autonomous vehicle using 3D-LiDAR. IFAC Pap. 2017, 50, 278–281.

- Zheng, S.; Wang, J. High definition map based vehicle localization for highly automated driving. In Proceedings of the 2017 International Conference on Localization and GNSS (ICL-GNSS), Nottingham, UK, 27–29 June 2017; pp. 1–8. Liu, R.; Wang, J.; Zhang, B. High definition map for automated driving, overview and analysis. J. Navig. 2020, 73, 324–341.

- Bresson, G.; Alsayed, Z.; Yu, L.; Glaser, S. Simultaneous localization and mapping: A survey of current trends in autonomous driving. IEEE Trans. Intell. Veh. 2017, 2, 194–220. HERE. HERE HD Live Map—The Most Intelligent Vehicle Sensor. 2017. Available online: https://here.com/en/products-services/products/here-hd-live-map (accessed on 19 March 2017).

- TomTom. 2017, RoadDNA, Robust and Scalable Localization Technology. Available online: http://download.tomtom.com/open/banners/RoadDNA-Product-Info-Sheet-1.pdf (accessed on 25 May 2019).

- Bresson, G.; Alsayed, Z.; Yu, L.; Glaser, S. Simultaneous localization and mapping: A survey of current trends in autonomous driving. IEEE Trans. Intell. Veh. 2017, 2, 194–220.

- Kuutti, S.; Fallah, S.; Katsaros, K.; Dianati, M.; Mccullough, F.; Mouzakitis, A. A survey of the state-of-the-art localization techniques and their potentials for autonomous vehicle applications. IEEE Internet Things 2018, 5, 829–846.

- Wang, C.C.; Thorpe, C.; Thrun, S.; Hebert, M.; Durrant-Whyte, H. Simultaneous Localization, Mapping and Moving Object Tracking. Int. J. Robot. Res. 2007, 26, 889–916.
