Several LC-MS-based proteomics strategies are widely used to study the proteome of biological systems in medical research. They include top-down proteomics, i.e., the analysis of intact proteins (e.g., KRAS proteoforms in colorectal cancer cases); targeted proteomics, used to verify, validate, and absolutely quantify candidate cancer biomarkers; and bottom-up or shotgun proteomics, used to study whole proteomes [2][3][4][5][6][7]. The latter approach is widely used to study cohorts of patients with cancer and other diseases. Shotgun proteomics workflows comprise several steps: selection of the sample type, assessment of the sample size, sample processing, data acquisition on the mass spectrometer, data cleaning and statistics, data interpretation and visualization, and machine learning. Unlike other omics technologies, LC-MS-based proteomics workflows have little protocol standardization; therefore, each project is carried out according to an experimental design agreed upon in advance, whose complexity depends on the number and nature of the samples, the quantification method, the enrichment of PTMs, and the bioinformatics analyses.

2. LC-MS-Based Proteomics Strategies from Sample Selection to Data Acquisition in Cancer Research: Steps and Main Considerations

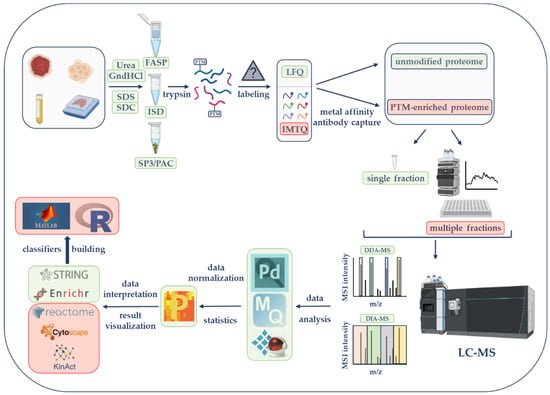

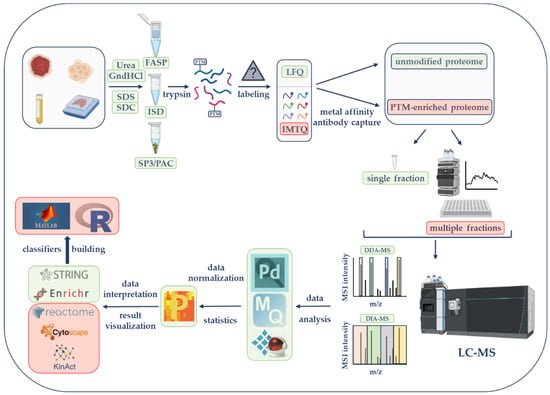

Each step of an LC-MS-based proteomics workflow represents an opportunity to maximize proteome coverage and obtain the most successful findings. Therefore, all possible approaches at each step must be carefully considered in order to create the most productive workflow. Here, the core steps are described, and simple and efficient tools to augment the quality and quantity of MS-based data are proposed (Figure 1).

Figure 1. Liquid chromatography–mass spectrometry (LC-MS)-based proteomics workflow. Initial steps consist of sample lysis and solubilization in the presence of chaotropic agents (urea or guanidine hydrochloride, GndHCl) or detergents (e.g., sodium dodecyl sulfate, SDS; sodium deoxycholate, SDC). Samples are further processed by filter-aided sample preparation (FASP), in-solution digestion (ISD), single-pot, solid-phase-enhanced sample preparation (SP3), or protein aggregation capture (PAC) before trypsin digestion. Depending on the selected quantification approach, peptides are kept unlabeled (for label-free quantification, LFQ) or labeled with tandem mass tags (TMTs) for isobaric mass tag quantification (IMTQ). A small portion of the sample is used to characterize the unmodified, so-called global, proteome, while the rest is used for the enrichment of posttranslational modifications (PTMs). Unmodified and modified samples can be analyzed as a single fraction or as multiple fractions after chromatographic fractionation. Peptides are run on a mass spectrometer with data-dependent acquisition (DDA) or data-independent acquisition (DIA) methods. MS data are analyzed with commercial or publicly available software, followed by several bioinformatics tools for gene ontology enrichment, protein network, and PTM characterization studies. These and other software tools and online applications are described in Table 1 of the original paper. The final step involves the use of several artificial intelligence tools for classification modeling. The steps illustrated in this figure represent the most efficient strategies according to the researchers’ experience. Steps framed with a green rectangle correspond to basic global proteomics workflows, while those framed with a light-red rectangle are used by experienced researchers or by those seeking PTM information. The figure was created with features obtained from BioRender (https://biorender.com/ (accessed on 25 May 2022)).

2.1. Sample Type Selection and Cohort Size

LC-MS-based proteomics can analyze any type of oncological sample from which proteins can be extracted. These include fresh-frozen tissue or cells, formalin-fixed paraffin-embedded (FFPE) tissue, blood fractions (plasma or serum), feces, and other biological fluids such as urine, saliva, buccal swabs, and cerebrospinal fluid. While it might not be possible to select a sample type in some retrospective projects because of material availability, it is becoming easier to obtain different sample types (e.g., tissue and plasma) from the same patient thanks to the standard operating procedures (SOPs) that new biobanking policies are establishing in prospective studies [8][9]. In fact, the development of and compliance with SOPs that include detailed criteria for proper sample collection (e.g., reagents and chemicals added; duration of the procedure) and storage (e.g., addition of cryoprotectants; storage temperature and acceptable duration) have become essential to guarantee sample quality and reduce variability in the project data. However, more effort is required to develop global SOPs that can facilitate sample sharing among different research groups and hospital biobanks.

Besides sample availability, three main factors determine the choice of sample type for proteomics research. The first factor is the tumor type and location. Biofluids in closer contact with tumors are probably a better source for potential biomarkers.

The second factor is the researcher’s skills along with the equipment available in the laboratory. The sample must be optimally processed in order to obtain the highest number of identified proteins and accurate quantitative values. Thus, while sample preparation for LC-MS analysis of leukemic blasts can involve uncomplicated procedures [10][11][12][13], FFPE tissue and plasma (key sample types in cancer proteomics) require more complex protocols: FFPE tissue requires the reversal of chemical crosslinking, removal of reagents, and protein extraction, whereas plasma requires effective depletion of the most abundant proteins [14][15][16]. Recently, a protocol that combines tissue disruption by ultrasonication, heat-induced antigen retrieval, and two alternative methods for efficient detergent removal has enabled satisfactory quantitative proteomic analysis of limited amounts of FFPE material [17]. Currently, plasma researchers mainly use columns to selectively deplete the most abundant plasma proteins [18]. However, issues of reproducibility and the indirect removal of relevant proteins have been reported [19][20]. As an alternative, the use of nanoparticles with different surface chemistries has enabled the identification of ~4000 plasma proteins [21][22]. Nonetheless, the cost of this procedure, which is only available on a robotic system, becomes especially high in discovery studies.

The last factor to consider is the number of study subjects and samples needed to achieve an acceptable statistical power, typically 80%. Levin demonstrated that, for a comparison of two sample groups to be powered at 80% to detect a fold change of 1.5 for all proteins, the minimum sample size was 60 per group [23]; however, Nakayasu et al. found that the number of biological replicates required for a given power depends on the variability [24]. The total variability of a study is the sum of its biological and technical variability. Moreover, the study design (i.e., the number of biological replicates and the number of groups) depends on the heterogeneity within and between groups. Therefore, it is important to select samples that are homogeneous within a group during the study design, and it is desirable that the groups to be compared are as different as possible. Furthermore, the biological variability in a study is highly dependent on the sample origin; e.g., cancer cells are expected to show less variability than tumor tissue. The lower the variability in a study, the higher its statistical power and, as a result, the larger the number of proteins with small differences that will be detected as statistically significant.
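The interplay between variability, fold change, and sample size described above can be illustrated with the textbook normal-approximation formula for a two-sided, two-group comparison of means on the log2 scale. This is a minimal sketch, not the method used by Levin or Nakayasu et al.; the function names, the Bonferroni correction over `n_tests` proteins, and the example standard deviations are assumptions chosen for illustration.

```python
from math import ceil, log2, sqrt
from statistics import NormalDist


def total_sd(biological_sd: float, technical_sd: float) -> float:
    """Combine biological and technical SDs; variances of independent sources add."""
    return sqrt(biological_sd ** 2 + technical_sd ** 2)


def samples_per_group(fold_change: float, sd_log2: float, alpha: float = 0.05,
                      power: float = 0.80, n_tests: int = 1) -> int:
    """Approximate samples per group for a two-sided, two-group comparison.

    Assumes normally distributed log2 intensities with the same SD in both
    groups; alpha is Bonferroni-adjusted when n_tests proteins are tested.
    """
    z = NormalDist()                                 # standard normal distribution
    z_alpha = z.inv_cdf(1 - (alpha / n_tests) / 2)   # two-sided critical value
    z_beta = z.inv_cdf(power)                        # quantile for the target power
    delta = log2(fold_change)                        # effect size on the log2 scale
    return ceil(2 * ((z_alpha + z_beta) * sd_log2 / delta) ** 2)


# Hypothetical SDs in log2 units: biological 0.4, technical 0.3
sd = total_sd(0.4, 0.3)                              # about 0.5
print(samples_per_group(1.5, sd))                    # one protein tested
print(samples_per_group(1.5, sd, n_tests=3000))      # corrected for 3000 proteins
print(samples_per_group(1.5, 2 * sd))                # doubled variability
```

Because the required size scales with the square of the SD, doubling the variability roughly quadruples the group size, which is one way to see why less variable sample sources (e.g., cells rather than tumor tissue) need smaller cohorts.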

The statistical power of previously published LC-MS-based proteomics studies was rarely reported. However, current journal practices and policies promote the inclusion of detailed descriptions of the experimental design, including the power of the study.

2.2. Sample Preparation Strategies

The choice of a sample preparation methodology is a key step in any LC-MS-based proteomics workflow [25][26]. Only unbiased preparation approaches that yield a high number of identified and quantified proteins can provide satisfactory descriptions of the proteomes under study. Most sample processing methods for LC-MS analysis can be categorized as in-solution (ISD), filter-based, or bead-based methods (Figure 1). While ISD protocols extract proteomes by adding concentrated solutions of chaotropic agents, such as urea and guanidine hydrochloride (GndHCl), or of detergents, such as sodium deoxycholate (SDC) [27][28], filter-based or bead-based workflows allow protein extraction with detergents such as sodium dodecyl sulfate (SDS) or SDC, followed by digestion in ammonium bicarbonate buffer after detergent removal. Other buffers, such as HEPES and triethylammonium bicarbonate (TEAB), are also used during digestion and are compatible with tandem mass tag (TMT) labeling for relative quantification (see Section 2.3).

Although classical ISD protocols are used less frequently, recent attractive ISD solutions, such as microreactor tips with on-column TMT labeling [29] and SimPLIT, an SDC-based ISD with TMT labeling in a 96-well plate format [30], have been presented as efficient, fast, and low-cost approaches for the digestion of fluorescence-activated cell sorting (FACS)-sorted samples and global proteomics samples, respectively.

The first sample preparation and digestion methodology for MS-based proteomics using spin filters with a ≥3 kDa molecular weight cutoff membrane was introduced nearly two decades ago [31]. However, this method did not become popular in the proteomics community until it was presented as filter-aided sample preparation (FASP), which incorporated a high concentration of urea to successfully remove SDS [32]. Since then, FASP in combination with StageTip-based fractionation, as well as multi-enzyme digestion FASP protocols, has been extensively used for in-depth proteome analysis [33][34][35]. More recently, magnetic bead-based sample preparation approaches, such as single-pot, solid-phase-enhanced sample preparation (SP3) and protein aggregation capture (PAC), were introduced. SP3 uses carboxylate-modified hydrophilic beads that bind proteins nonselectively through ethanol-driven solvation capture. It is compatible with most common chemical agents used to facilitate cell or tissue lysis, such as detergents, chaotropes, salts, and organic solvents [36]. As the entire SP3 procedure takes place in a single sample tube and takes little time compared to other procedures [37], it is not surprising that SP3 technology is becoming increasingly popular among new and experienced MS-based proteomics researchers [38][39]. PAC, which exploits the inherent instability of denatured proteins for their non-specific immobilization on microparticles by aggregation capture, was shown to be more efficient than ISD and FASP procedures in the preparation of phosphopeptides and peptides from tissue and secretome samples [40]. Both protocols have also been successfully implemented on automated devices [41][42][43][44].

To secure optimal sample preparation protocols for LC-MS-based proteomic studies aimed at the discovery of acute myeloid leukemia (AML) biomarkers, the authors’ research group has been testing novel techniques over the past few years. The group started by evaluating ISD and FASP proteomic workflows with leukemic blast samples isolated from peripheral blood [13]. With two different quantitative approaches, label-free (LF) quantification and stable isotope labeling by amino acids in cell culture (SILAC), FASP workflows produced the highest number of quantified proteins with a reduced number of missed cleavages. However, when fractionation with mixed-mode styrene-divinylbenzene reverse-phase sulfonate plugs was applied in both the FASP and ISD workflows, using one protease (trypsin) and two proteases (Lys-C and trypsin) at the digestion step, respectively, approximately 2200 proteins were quantified with an Orbitrap Elite mass spectrometer (Thermo Scientific, Waltham, MA, USA).

Because of the long processing time of the FASP procedure, the group then compared the performance of the ISD method, using GndHCl in the lysis buffer and two proteases, with that of the SP3 strategy, using lysis buffers containing SDS or GndHCl and one protease, on HeLa cell and human plasma samples [16]. The results showed that the SP3 protocol, with either buffer, achieved the highest number of LF-quantified proteins in HeLa cells (5895–6131 without peptide fractionation; 7817–8136 with high pH reversed-phase LC fractionation) and plasma samples (397–411 without depletion and fractionation steps; 1397 after Top12 abundant protein depletion and high pH reversed-phase LC fractionation). Therefore, the SP3 protocol with an SDS-based lysis buffer has since been used for the proteomic analysis of AML samples [11][12].

Thus, the SP3 procedure is recommended, as it represents a very robust and efficient processing tool for both concentrated and diluted protein materials [38][45][46][47]. To facilitate large studies, automation in a 96-well format (e.g., KingFisher™ Flex, Thermo Fisher Scientific, Waltham, MA, USA) has been shown to greatly improve the reproducibility of bead-based sample preparation protocols [41][48].

2.3. Quantification Strategies

Quantitative LC-MS-based proteomics experiments may or may not (as in the label-free quantification (LFQ) approach) involve specific mass tags that are recognized by the instrument; these tags are usually introduced into proteins metabolically or into peptides by chemical means [49]. SILAC uses the cell’s own metabolism to incorporate isotopically labeled amino acids into its proteome, which can then be mixed with the proteome of unlabeled cells [50][51]. Thus, differences in protein expression can be analyzed by comparing the abundance of the labeled versus unlabeled proteins. Chemical derivatization methodologies include isotope-coded affinity tags (ICATs), dimethyl labeling, and isobaric mass tags, among others [52][53][54][55][56]. Isobaric tags for relative and absolute quantification (iTRAQ), which consist of a reporter group, a balance group, and a peptide-reactive group, are used to quantify up to eight peptide samples [57]. When the samples are pooled and analyzed simultaneously, the same peptide from the different samples appears at the same mass in the MS1 scan. However, when the peptides are fragmented at the MS2 level, they yield peptide fragments, which provide amino acid sequence information, and tag fragments, i.e., reporter ions. The ratios of these reporter ions represent the proportions of that peptide in each of the eight samples [57].
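The reporter-ion arithmetic can be sketched as follows. The channel labels use the nominal iTRAQ 8-plex reporter m/z values (113–119 and 121); the intensities and function names are hypothetical, and real pipelines additionally correct for the isotopic impurities of the reagents, which this sketch omits.

```python
# Nominal iTRAQ 8-plex reporter ion m/z values used here as channel labels
ITRAQ8_REPORTERS = (113, 114, 115, 116, 117, 118, 119, 121)


def reporter_proportions(intensities: dict[int, float]) -> dict[int, float]:
    """Share of the summed reporter-ion signal contributed by each channel."""
    total = sum(intensities.values())
    if total <= 0:
        raise ValueError("no reporter-ion signal")
    return {channel: value / total for channel, value in intensities.items()}


def ratios_to_reference(intensities: dict[int, float], reference: int) -> dict[int, float]:
    """Ratio of every channel to a chosen reference channel."""
    ref = intensities[reference]
    if ref <= 0:
        raise ValueError("reference channel has no signal")
    return {channel: value / ref for channel, value in intensities.items()}


# Hypothetical reporter intensities from one fragmented peptide (one PSM)
psm = dict(zip(ITRAQ8_REPORTERS, (100.0, 200.0, 100.0, 100.0, 400.0, 100.0, 100.0, 100.0)))
shares = reporter_proportions(psm)
ratios = ratios_to_reference(psm, reference=113)
print(shares[117], ratios[117])
```

Proportions are convenient for reading off each sample’s share of a peptide, while ratios to a reference channel are the form usually carried into protein-level summaries.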

LFQ was introduced early in the past decade as an alternative to expensive and time-consuming stable isotope-based labeling methods. LFQ is based on the intensities obtained from the extracted ion chromatograms (XICs) of MS1 signals or on spectral counting of the precursors, whereas peptide identification is carried out, as described for isobaric tag quantification, from the peptide fragment ions at the MS2 level [58]. It requires initial measurement of the sample concentration under consistent conditions and strict adherence to the sample preparation workflow, including fractionation to resolve peptides and thereby increase the coverage of complex proteomes. LFQ has become widely employed in global proteomics and phosphoproteomics thanks to algorithms such as MaxLFQ, which handle fraction-dependent normalization, calculate pairwise sample protein ratios from peptide XIC ratios, and transfer peptide identifications from one run to unidentified peptides in other runs by matching their mass and retention time (i.e., the “match-between-runs” (MBR) feature) [59]. MBR can thus significantly increase the number of annotated identifications and provide more data for downstream protein quantification [60]. Recently, MBR has also been applied to TMT quantification, using three-dimensional MS1 features to transfer identifications from identified to unidentified MS2 spectra between LC-MS runs so that reporter ion intensities in otherwise unidentified spectra can be used for quantification [61].
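The core idea behind MaxLFQ, deriving a protein profile from pairwise median peptide ratios, can be sketched in a few lines. This is a simplified illustration, not the MaxQuant implementation: it handles a single protein, assumes every pair of samples shares at least `min_shared` peptides (so the least-squares problem has the closed-form solution used below), and ignores fraction-level normalization and MBR. The function name and example intensities are hypothetical.

```python
from itertools import combinations
from math import exp, log
from statistics import median
from typing import Optional, Sequence


def maxlfq_profile(peptides: Sequence[Sequence[Optional[float]]],
                   min_shared: int = 2) -> list[float]:
    """Estimate one protein's intensity in each sample.

    peptides: one row per peptide, one XIC intensity per sample (None = missing).
    """
    n = len(peptides[0])
    r = [[0.0] * n for _ in range(n)]  # median log-ratio of sample j over sample k
    for j, k in combinations(range(n), 2):
        log_ratios = [log(row[j]) - log(row[k]) for row in peptides if row[j] and row[k]]
        if len(log_ratios) < min_shared:
            raise ValueError(f"samples {j} and {k} share fewer than {min_shared} peptides")
        r[j][k] = median(log_ratios)
        r[k][j] = -r[j][k]
    # Least squares: minimize sum over pairs of (x_j - x_k - r_jk)^2 with sum(x) = 0.
    # With every pairwise ratio available, the solution is the row mean of r.
    x = [sum(r[j]) / n for j in range(n)]
    # Rescale so that the profile sums to the protein's total peptide intensity.
    total = sum(v for row in peptides for v in row if v)
    raw = [exp(v) for v in x]
    scale = total / sum(raw)
    return [scale * v for v in raw]


# Hypothetical protein: three peptides measured in three runs, one value missing
profile = maxlfq_profile([
    [100.0, 200.0, 400.0],
    [10.0, 20.0, 40.0],
    [50.0, None, 200.0],
])
print([round(v, 1) for v in profile])
```

Using ratios rather than summed intensities means that the peptide missing from the second run does not drag that run’s estimate down.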

The TMT labeling system is used at the peptide level and consists of mass tagging reagents of the same nominal mass. Similar to iTRAQ labels, these tags are composed of an amine-reactive group, a spacer arm, and a mass reporter that is used for MS2 quantification. Commercial TMT kits (Thermo Fisher Scientific) contain 6, 10, 11, 16, or 18 labels (also called channels). Large sample cohorts can be accurately quantified across several experiment sets when each set includes a reference channel, comprising a small aliquot of every sample, that serves as a normalization bridge between sets. Despite the cost of the tags, more and more proteomics researchers are using the TMT labeling approach, since several optimized TMT labeling protocols covering important issues such as the peptide:tag ratio and the reaction buffer have recently been published, in addition to simplified commercial and free software workflows [62][63][64].
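The reference-channel bridge can be sketched as log2 ratios of each sample channel to the reference channel of its own set, followed by median centering of each channel to remove residual loading differences. The data layout, channel names, and the median-centering step are assumptions for illustration; published workflows differ in details such as protein rollup and the handling of missing values.

```python
from math import log2
from statistics import median


def bridge_normalize(plexes: dict[str, dict[str, dict[str, float]]],
                     reference: str = "ref", center: bool = True) -> dict[str, dict[str, float]]:
    """Return log2(sample / reference) per protein for every sample in every set.

    plexes: set id -> channel name -> protein -> reporter intensity.
    Each set must contain a `reference` channel made from a pooled aliquot of all samples.
    """
    out: dict[str, dict[str, float]] = {}
    for plex_id, channels in plexes.items():
        ref = channels[reference]
        for sample, proteins in channels.items():
            if sample == reference:
                continue
            # Ratio to the bridge channel of the same set cancels set-specific effects.
            ratios = {prot: log2(val / ref[prot]) for prot, val in proteins.items()
                      if val > 0 and ref.get(prot, 0) > 0}
            if center and ratios:
                shift = median(ratios.values())  # channel-wide loading offset
                ratios = {prot: v - shift for prot, v in ratios.items()}
            out[f"{plex_id}:{sample}"] = ratios
    return out


# Hypothetical example: S2 was loaded twice as much as S1, in a different set
plexes = {
    "set1": {"ref": {"A": 100.0, "B": 100.0, "C": 100.0},
             "S1": {"A": 200.0, "B": 100.0, "C": 50.0}},
    "set2": {"ref": {"A": 50.0, "B": 50.0, "C": 50.0},
             "S2": {"A": 200.0, "B": 100.0, "C": 50.0}},
}
result = bridge_normalize(plexes)
print(result["set1:S1"], result["set2:S2"])
```

After bridging, samples from different sets share a common scale (log2 ratio to the pooled reference), so they can be compared directly.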

2.4. PTM Enrichments

The study of protein regulation by covalent modifications (PTMs) is necessary to understand the complexity and functionality of proteomes in cancer development [65]. PTMs that shift the mass of a peptide can be identified and quantified with LC-MS technology. Because many PTMs are present at substoichiometric levels, their study involves enrichment procedures that remove unmodified peptides.

Phosphopeptide enrichment has classically been performed using metal oxide affinity chromatography (MOAC) with titanium dioxide beads, immobilized metal affinity chromatography (IMAC) with iron affinity gel, and sequential elution from IMAC (SIMAC) with a combination of both reagents [66][67][68]. Using the IMAC protocol, the authors’ group constructed a dataset comprising more than 12,000 quantified class I phosphorylation sites (i.e., site localization probability ≥ 0.75) from approximately 3000 proteins in an AML cohort of 41 patients [69]. Moreover, in the last few years the enrichment procedure has been considerably simplified by the use of magnetic materials (e.g., MagReSyn Ti-IMAC HP beads from ReSyn Biosciences) [70].
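Applying the class I criterion mentioned above can be sketched as a filter on per-residue localization probabilities. The annotated-sequence format (a probability in parentheses after each candidate residue, modeled on MaxQuant-style probability columns) and the function names are assumptions for illustration.

```python
import re

# One residue, optionally followed by a localization probability in parentheses
_SITE = re.compile(r"([A-Z])(?:\(([0-9.]+)\))?")


def site_probabilities(annotated: str) -> list[tuple[int, str, float]]:
    """Parse 'AS(0.8)T(0.2)PEPK' into (position, residue, probability) tuples."""
    sites = []
    for position, (residue, prob) in enumerate(_SITE.findall(annotated), start=1):
        if prob:  # only candidate residues carry a probability
            sites.append((position, residue, float(prob)))
    return sites


def class_i_sites(annotated: str, threshold: float = 0.75) -> list[tuple[int, str, float]]:
    """Keep only sites whose localization probability is >= threshold (class I)."""
    return [site for site in site_probabilities(annotated) if site[2] >= threshold]


print(class_i_sites("AS(0.8)T(0.2)PEPK"))  # [(2, 'S', 0.8)]
```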

2.5. Peptide Fractionation to Increase Proteome Coverage

Peptide fractionation is a necessary step before LC-MS analysis to achieve maximal proteome coverage in samples from complex organisms. The most popular fractionation techniques are based on peptide properties such as charge, polarity, and hydrophobicity [71]. Strong cation exchange, strong anion exchange, and mixed-mode methodologies have been widely used as disks packed into pipette tips or in the in-StageTip format [13][35][72]. However, to produce more fractions and take advantage of the increasing sensitivity of the latest generation of mass spectrometers, offline high pH reversed-phase chromatography using C18 sorbents proved to be an excellent strategy, quantifying up to 8434 mouse protein groups and 16,152 localized class I phosphosites when 46 and 12 TMT-labeled peptide fractions were analyzed with 30 min and 60 min elution gradients, respectively [42]. Using the same number of peptide fractions and LC gradient lengths, 11,292 protein groups and 30,304 localized class I phosphosites were identified in HeLa lysates with an LFQ strategy [73].

Alternatively, high-resolution isoelectric focusing (HiRIEF) applied at the (iTRAQ-labeled) peptide level in the 3.7–5.0 pH range identified 13,078 human and 10,637 mouse proteins when the 72 fractions obtained from the strip were analyzed with a 50 min gradient [74]. In a recent study, the analysis of TMT-labeled peptides from 141 non-small-cell lung cancer tumor samples that were fractionated on two strips (pH 3.7–4.9 and pH 3–10) and analyzed with a 60 min elution gradient quantified 13,975 proteins [75]. However, HiRIEF with two pH-range strips (2.5–3.7; 3–10) did not appear to perform as efficiently in a cell-cycle arrest study, which identified 19,075 localized class I phosphosites from a total of 132 TMT-labeled fractions analyzed with a 50 min elution gradient [76].

All things considered, the choice of peptide fractionation method depends on the number of fractions that can be affordably analyzed, i.e., on the available MS time and the proteome depth sought.
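The MS-time side of this trade-off is simple arithmetic: number of fractions multiplied by run length. The sketch below reproduces the gradient-only instrument time implied by the studies cited above; the function name is illustrative, and the optional per-run overhead (loading, washing, equilibration) is a hypothetical parameter, since the cited studies’ overheads are not stated here.

```python
def ms_hours(n_fractions: int, gradient_min: float, overhead_min: float = 0.0) -> float:
    """Instrument hours needed to run one fractionated sample (or one TMT set)."""
    return n_fractions * (gradient_min + overhead_min) / 60


# Gradient time only, using the fraction numbers and gradients cited in the text
print(ms_hours(46, 30))    # 23.0  high pH RP, TMT proteome
print(ms_hours(12, 60))    # 12.0  high pH RP, TMT phosphoproteome
print(ms_hours(72, 50))    # 60.0  HiRIEF, pH 3.7-5.0
print(ms_hours(132, 50))   # 110.0 HiRIEF, two strips
# Hypothetical 15 min overhead per run
print(ms_hours(46, 30, overhead_min=15))  # 34.5
```

In a label-free study this time is multiplied by the number of samples, whereas in a TMT study it is spent once per multiplexed set.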

2.6. MS Methods for Data Acquisition

LC-MS-based proteomics basically employs two MS data acquisition strategies, data-dependent acquisition (DDA) and data-independent acquisition (DIA), for global proteomics studies.

In DDA mode, the mass spectrometer alternates between full-scan spectral acquisition at the MS1 level and sequential MS2 analysis of MS1 precursors selected according to their charge state (i.e., ≥2) and relatively high intensity. Although this acquisition mode can be used for LF or TMT-labeled samples, it introduces an abundance bias into precursor sampling, as well as variability between both biological and technical replicates. To alleviate these inherent DDA effects, two tools have been widely used: dynamic exclusion, in which the instrument adds the most intense masses to a temporary exclusion list, typically for 30–60 s, so that lower-abundance peptides can be sequenced; and the MBR software feature described above [59][77].
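The interplay between intensity-based precursor selection and dynamic exclusion can be sketched as a simplified TopN cycle. The class, function, and parameter names and the defaults (the 10 ppm matching tolerance, a 30 s exclusion window) are illustrative assumptions; real instrument methods also apply isolation widths, intensity thresholds, and other filters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Precursor:
    mz: float
    charge: int
    intensity: float


def select_topn(time_s, precursors, exclusion, top_n=10, min_charge=2,
                exclusion_s=30.0, tol_ppm=10.0):
    """Pick up to top_n of the most intense eligible MS1 precursors for MS2.

    exclusion is a list of (mz, expiry_time_s) pairs that is updated in place.
    """
    # Release masses whose exclusion period has ended.
    exclusion[:] = [(mz, until) for mz, until in exclusion if until > time_s]

    def is_excluded(mz):
        return any(abs(mz - ex_mz) / ex_mz * 1e6 <= tol_ppm for ex_mz, _ in exclusion)

    eligible = [p for p in precursors if p.charge >= min_charge and not is_excluded(p.mz)]
    picked = sorted(eligible, key=lambda p: p.intensity, reverse=True)[:top_n]
    # Picked masses are excluded for exclusion_s seconds so less abundant ions get sampled.
    exclusion.extend((p.mz, time_s + exclusion_s) for p in picked)
    return picked


ms1 = [Precursor(500.0, 2, 1e6), Precursor(600.0, 2, 5e5),
       Precursor(700.0, 3, 1e5), Precursor(800.0, 1, 2e6)]  # 800.0 is singly charged
excl = []
for t in (0.0, 1.0, 31.0):
    print(t, [p.mz for p in select_topn(t, ms1, excl, top_n=2)])
```

In this toy run the singly charged ion is never selected despite being the most intense, the low-abundance ion at m/z 700 is only sampled because the two dominant ions are excluded, and both become eligible again once their 30 s window expires.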

However, the development of new publicly available and commercial software solutions has encouraged the introduction and establishment of the DIA strategy in many proteomics platforms. In DIA mode, all MS1 precursors within an m/z range of interest are sequentially isolated and fragmented at the MS2 level using isolation windows of different widths. DIA thus potentially offers deeper data coverage, decreasing the need for offline fractionation. As DIA does not suffer from the stochastic peptide identification inherent to DDA, cross-sample comparisons in large cohorts are much easier. Because of the complex deconvolution of the fragmentation spectra, DIA is currently used for LF and SILAC-spiked samples only. Originally, spectral libraries derived experimentally from DDA runs were necessary to facilitate DIA spectral deconvolution. However, some current DIA applications that are discussed below (see Table 1) allow spectral analysis without such libraries.
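One way to obtain isolation windows of different widths is to place window edges so that each window contains roughly the same number of precursors, giving narrow windows where precursors are dense and wide windows where they are sparse. The sketch below assumes that precursor m/z values from a preliminary run are available; the function name and inputs are hypothetical, and practical schemes typically add small window overlaps and instrument-specific constraints.

```python
def variable_windows(precursor_mz, n_windows, mz_min, mz_max):
    """Split [mz_min, mz_max] into n_windows holding ~equal numbers of precursors."""
    mzs = sorted(m for m in precursor_mz if mz_min <= m <= mz_max)
    if len(mzs) < n_windows:
        raise ValueError("need at least one precursor per window")
    edges = [mz_min]
    for i in range(1, n_windows):
        idx = round(i * len(mzs) / n_windows)
        # Place the boundary halfway between neighbouring precursors.
        edges.append((mzs[idx - 1] + mzs[idx]) / 2)
    edges.append(mz_max)
    return list(zip(edges[:-1], edges[1:]))


# Hypothetical precursors crowded at low m/z and sparse at high m/z
print(variable_windows([410, 420, 430, 440, 900, 950], 3, 400, 1000))
# [(400, 425.0), (425.0, 670.0), (670.0, 1000)]
```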

Recent reports have shown that the TMT–DDA methodology provides an excellent workflow to study proteomes and phosphoproteomes in depth. A TMT-based quantitative proteomic profiling of human monocyte-derived macrophages and foam cells identified 5146 proteins, among which 1515 and 182 were differentially expressed in macrophages versus monocytes and in foam cells versus macrophages, respectively [78]. A quantitative proteomic and phosphoproteomic analysis based on three TMT 11-plex sets of human post-mortem cortex across asymptomatic Alzheimer’s disease, symptomatic Alzheimer’s disease, and healthy individuals identified 11,378 protein groups and 51,736 phosphopeptides [79]. However, DIA-based approaches, which require neither expensive labels nor time-consuming fractionation steps, have become a powerful alternative for both proteomic and phosphoproteomic characterization [41][70][80]. A recent study using DIA with parallel accumulation–serial fragmentation (PASEF, a mass spectrometry technique that enables hundreds of MS/MS events per second at full sensitivity) identified over 7700 proteins in HeLa cells in 44 min with quadruplicate single-shot injections and over 35,000 phosphosites after stimulation with epidermal growth factor in triplicate 31 min runs [81].

When TMT quantification is preferred, synchronous precursor selection (SPS) MS3 technology on Orbitrap Tribrid mass spectrometers can be used to obtain higher quantitative accuracy than MS2 acquisition provides, because it reduces the ratio compression caused by co-isolated interfering ions. Moreover, a real-time search (RTS) step between the MS2 and MS3 scans, which triggers an MS3 scan only if the MS2 spectrum yields a positive peptide identification, can be enabled to increase the data acquisition rate and match the number of peptide identifications usually observed with MS2 acquisition [82][83][84].
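The RTS gating logic can be sketched as follows: each MS2 spectrum is searched on the fly, and the slower SPS-MS3 scan is spent only on spectra that return a peptide match. The search function, the scan identifiers, and the per-scan durations are hypothetical placeholders, not instrument specifications.

```python
from typing import Callable, Optional


def acquire(ms2_spectra, search: Callable[[str], Optional[str]], use_rts: bool = True,
            ms2_ms: float = 20.0, ms3_ms: float = 80.0):
    """Simulate MS2 -> (optional real-time search) -> SPS-MS3 decisions.

    Returns the (spectrum, peptide) pairs that received an MS3 scan and the
    total scan time in milliseconds. Without RTS, every MS2 scan triggers an
    MS3 scan and identification happens only after acquisition (peptide=None).
    """
    ms3_scans = []
    elapsed = 0.0
    for spectrum in ms2_spectra:
        elapsed += ms2_ms
        peptide = search(spectrum) if use_rts else None
        if not use_rts or peptide is not None:
            ms3_scans.append((spectrum, peptide))
            elapsed += ms3_ms
    return ms3_scans, elapsed


hits = {"scan1": "PEPTIDEK"}          # hypothetical real-time search results
scans = ["scan1", "scan2", "scan3"]
print(acquire(scans, hits.get))                 # MS3 only for the identified scan
print(acquire(scans, hits.get, use_rts=False))  # MS3 after every MS2 scan
```

With these made-up timings, gating cuts the time for three scans from 300 ms to 140 ms; freeing time in this way is what allows RTS to raise the effective scan rate.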