
Please note this is a comparison between Version 2 by Jessie Wu and Version 1 by ayesha hameed.

Human–robot collaboration is an innovative area that aims to create an environment in which humans and robots can collaborate safely and efficiently to accomplish a specific task. Collaborative robots cooperate with humans to assist them in simple-to-complex tasks in several fields, including industry, education, agriculture, healthcare services, security, and space exploration. These robots play a vital role in the Industry 4.0 revolution, which defines new standards for manufacturing and for the organization of production. Incorporating collaborative robots into the workspace improves efficiency, but it also introduces several safety risks.

- collaborative control

- robots

- architectures

1. Human–Robot Collaboration

1.1. Human–Robot Interaction

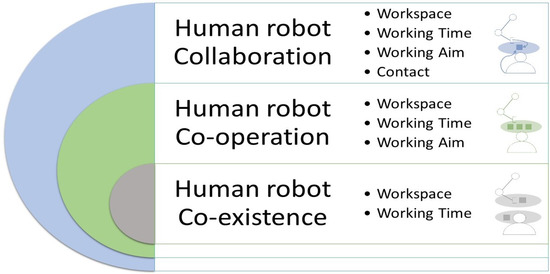

The human–robot interactions are divided into three subcategories: (i) Human–robot co-existence; (ii) Human–robot cooperation; and (iii) Human–robot collaboration. This classification is based on four criteria: (i) workspace; (ii) working time; (iii) working aim or task; and (iv) the existence of contact (contactless or with-contact).

The workspace is the working area surrounding humans and robots in which each can perform its tasks, as shown in Figure 1. The working time is the time during which a human works in the collaborative workspace. Humans and robots interact in a workspace to achieve either a common goal or distinct goals. If the two entities share the workspace and act simultaneously, the interaction is known as human–robot (HR) coexistence [13][1]. HR cooperation refers to interaction in which humans and robots work simultaneously towards the same aim in a shared workspace. HR collaboration, in turn, covers scenarios in which there is direct contact between humans and robots to accomplish the shared aim or goal. Examples of these three interactions are classical industrial robots, cooperative robots, and collaborative robots, respectively.

Figure 1. Classification of human–robot interactions.

Note that the term HR collaboration is defined inconsistently in the literature [14,15][2][3]. In Figure 1, HR collaboration is the final category of HR interaction: a human and a robot execute the same task together, and the action of one has an immediate impact on the other.

1.2. Human–Robot Collaboration Types

Human–robot collaboration is an advanced capability that allows robots to execute challenging tasks involving human interaction in two ways: (i) physical collaboration; and (ii) contactless collaboration [14][2]. Physical collaboration entails direct physical contact, in which the human hand exerts forces on the robot’s end-effector. These forces/torques are used to assist or predict the robot’s motion accordingly [16][4]. Contactless collaboration, by contrast, involves no physical interaction; it is carried out through direct (speech or gestures) or indirect (eye gaze direction, intention recognition, or facial expressions) communication [15][3]. In both types of collaboration, the cognitive skills and decision-making abilities of the human operator are combined with the robot’s ability to perform the job repetitively and more precisely.

Contactless collaboration faces several issues, e.g., communication channel delay, input actuator saturation, bounded inputs and outputs, and data transmission delay in bilateral teleoperation systems. Various control methods have been reported in the literature to deal with these issues, such as output feedback control [17][5], fuzzy control [18][6], adaptive robust control [19][7], model predictive control [20][8], and sliding mode control [21,22][9][10]. This survey, however, focuses on the critical issues faced by collaborative robots during physical HR collaboration. The key challenges in this regard include the prediction of human intentions, motion synchronization in the presence of human-caused disturbances, and human safety for efficient physical HR interaction. The following section introduces the different robotic operations of a collaborative robot during HR collaboration.

1.3. Collaborative Robotic Operations

The technical specification ISO/TS 15066 describes four operating modes for collaborative robots to ensure human safety: (1) power and force limiting; (2) speed and separation monitoring; (3) safety-rated monitored stop; and (4) hand guiding [23,24][11][12]. In these modes, collaborative robots work with a human operator in a way that depends on the application. Table 1 compares the four modes of collaborative operation in terms of human input, speed, techniques, and torques for safe HR collaboration.

Table 1. Types of collaborative robotic operations.

| Robotic Operation | Human Input | Speed | Techniques | Torques |
|---|---|---|---|---|
| Power and force limiting | Application-dependent | Maximum determined speed to limit forces | The robot cannot exert excessive power or force | Maximum determined torques |
| Speed and separation monitoring | No human control in the collaborative workspace | Safety-rated monitored speed | Limited contact between robot and human | Necessary to establish a minimum separation distance and to execute the application |
| Hand guiding | Emergency stop | Safety-rated monitored speed | Motion controlled by direct operator input | Operator input |
| Safety-rated monitored stop | Operator has no control | Speed is zero when a human is in the collaborative workspace | Robotic operation stops if a human is present | Gravity and load compensation only |
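For speed and separation monitoring, ISO/TS 15066 expresses the minimum protective separation distance as a sum of terms: the distance the human travels during the robot's reaction and stopping time, the distance the robot travels during its reaction time, the robot's stopping distance, an intrusion distance, and the position uncertainties of the human and the robot. The Python sketch below assumes constant speeds over the reaction and stopping intervals (the standard integrates the speeds over time); the function names and the numbers used with them are hypothetical and only illustrate the structure of the check.

```python
def protective_separation_distance(
    v_h: float,  # human approach speed toward the robot [m/s]
    v_r: float,  # robot speed toward the human [m/s]
    t_r: float,  # robot system reaction time [s]
    t_s: float,  # robot stopping time [s]
    s_s: float,  # robot stopping distance [m]
    c: float,    # intrusion distance [m]
    z_d: float,  # position uncertainty of the human (sensing) [m]
    z_r: float,  # position uncertainty of the robot [m]
) -> float:
    """Constant-speed simplification of the protective separation distance."""
    s_h = v_h * (t_r + t_s)  # human motion while the robot reacts and stops
    s_r = v_r * t_r          # robot motion during its reaction time
    return s_h + s_r + s_s + c + z_d + z_r


def must_stop(current_distance: float, required_distance: float) -> bool:
    """Request a protective stop when the measured separation is too small."""
    return current_distance < required_distance
```

With the illustrative values v_h = 1.6 m/s, v_r = 0.5 m/s, t_r = 0.1 s, t_s = 0.3 s, s_s = 0.15 m, c = 0.1 m, z_d = 0.05 m, and z_r = 0.02 m, the required separation is about 1.01 m, so a human measured at 0.8 m would trigger a stop.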

2. Control Design of Human–Robot Collaboration

2.1. Collaborative Control System Architectures

When designing a controller for human–robot collaboration, two essential factors must be considered for integrating humans into an autonomous control system: adjustable autonomy and mixed initiative. Together, they switch the control of tasks between an operator and an automated system in response to the changing demands of the robotic system. This survey considers an industrial collaborative-assembly scenario in which a collaborative manipulator is in direct physical collaboration with a human operator.
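Adjustable autonomy can be implemented as an arbitration step that blends human and autonomous commands according to an autonomy level. The Python sketch below shows one simple, hypothetical way to do this: linear blending with an autonomy level clamped to [0, 1], where either party can shift authority toward itself (a basic form of mixed initiative). The class name, the update rule, and the step size are assumptions for illustration, not a method from the cited works.

```python
from dataclasses import dataclass


@dataclass
class AutonomyArbiter:
    """Hypothetical arbiter shifting control authority between human and automation."""
    alpha: float = 0.5  # autonomy level: 1.0 = fully autonomous, 0.0 = fully manual
    step: float = 0.1   # change in authority per update

    def update(self, human_requests_control: bool, robot_confident: bool) -> None:
        # A human request takes priority; otherwise a confident planner regains authority.
        if human_requests_control:
            self.alpha -= self.step
        elif robot_confident:
            self.alpha += self.step
        self.alpha = min(1.0, max(0.0, self.alpha))  # keep the level in [0, 1]

    def blend(self, u_human: list[float], u_auto: list[float]) -> list[float]:
        # Linear blending of the two command vectors according to the autonomy level.
        return [self.alpha * a + (1.0 - self.alpha) * h for h, a in zip(u_human, u_auto)]
```

Linear blending keeps the commanded motion continuous while authority shifts, which is why it is a common starting point; more elaborate arbitration schemes adapt the autonomy level from estimated human intent.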

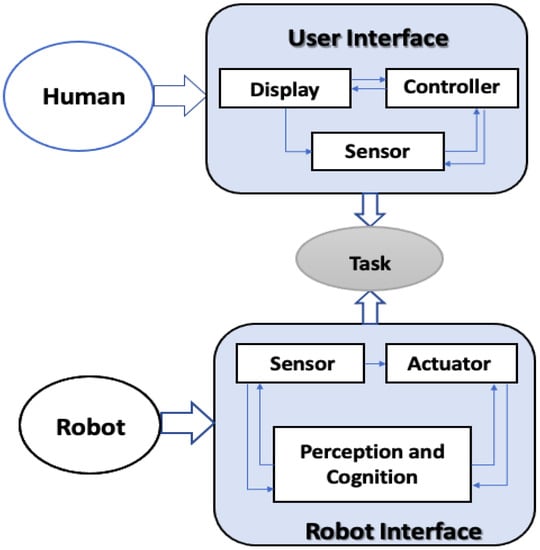

Control architectures ranging from simple to complex have been presented in the literature. The collaborative control architecture presents a systematic view of the interaction between humans and robots at both the low level (sensors, actuators) and the high level (perception and cognition), as shown in Figure 2 [25,26][13][14].

Figure 2. Block diagram of the collaborative control architecture.

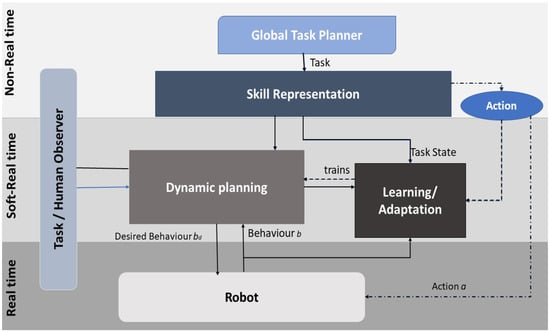

Another control architecture, presented in [27,28][15][16], offers a more comprehensive view of interaction control in human–robot collaboration. This framework addresses the complex requirements and diverse methods for interaction control, motion planning, and interaction planning. It differs from a classical control architecture because it includes planning for safe collaboration [29][17]. The architecture is composed of three abstraction layers (Figure 3).

Figure 3. Interactive collaborative control framework.

2.1.1. Non-Real-Time Layer

The non-real-time layer is the highest abstraction layer of the architecture; it plans the global task of the robot offline, based on a set of skills. The task planner creates the skill states needed to accomplish the respective global tasks/actions. It generates the task state and sends the initial desired behavior to the lower layers. Each skill state holds information about the current task and the action to perform; for example, the robot’s job may be to grasp an object or to hand it over. Examples of non-real-time control architectures are presented in [30,31][18][19].
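The offline expansion of a global task into skill states can be sketched as follows. The skill names (other than grasping and handing over, which the text mentions), the skill library, and the rule that the hand-over skill starts with a compliant behavior are hypothetical choices for illustration; they are not taken from the cited architectures.

```python
from dataclasses import dataclass
from enum import Enum


class Skill(Enum):
    # Hypothetical skill set built around the grasp and hand-over examples.
    REACH = "reach"
    GRASP = "grasp"
    HAND_OVER = "hand_over"
    RETREAT = "retreat"


@dataclass(frozen=True)
class SkillState:
    task: str              # global task this skill state belongs to
    skill: Skill           # action to perform in this state
    desired_behavior: str  # initial desired behavior sent to the lower layers


# Assumed offline skill library: global task name -> ordered skills.
SKILL_LIBRARY = {
    "hand_over_part": [Skill.REACH, Skill.GRASP, Skill.HAND_OVER, Skill.RETREAT],
}


def plan_global_task(task: str) -> list[SkillState]:
    """Expand a global task into skill states before execution (non-real-time)."""
    skills = SKILL_LIBRARY[task]
    # The hand-over involves contact with the human, so it starts compliant.
    return [
        SkillState(task, s, "compliant" if s is Skill.HAND_OVER else "nominal")
        for s in skills
    ]
```

Because this planning runs offline, it can be arbitrarily slow; the layers below are responsible for adapting the resulting plan at run time.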

2.1.2. Soft Real-Time Layer

The second abstraction layer dynamically executes and modifies the global plan by choosing the best action given the current task state, behavior state, human state, and environmental state. This dynamic planning unit is followed by a learning and adaptation unit. The unit converts the global task planning information into the corresponding dynamic planning language. The planning unit translates the robot’s desired actions into safe, task-consistent actions, which can instantaneously alter the global task plan. Hence, the primary task of this layer is to turn the pre-planned course into safe and consistent actions using predictions of human intentions. Examples of soft real-time architectures are described in detail in [32,33,34,35,36][20][21][22][23][24].
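A minimal sketch of the kind of rule the dynamic planning unit might apply when turning a pre-planned action into a safe, task-consistent one is shown below. The distance thresholds, the speed-scaling factors, and the "wait" action are assumptions for illustration; the learning and adaptation unit is omitted entirely.

```python
from dataclasses import dataclass


@dataclass
class HumanState:
    distance_m: float             # current human-robot distance [m]
    predicted_reach_target: bool  # human predicted to reach into the robot's path


@dataclass
class Action:
    name: str
    speed_scale: float  # fraction of the nominal planned speed


def adapt_action(planned: Action, human: HumanState,
                 slow_zone_m: float = 1.0, stop_zone_m: float = 0.3) -> Action:
    """Modify a pre-planned action using the human state (hypothetical rules)."""
    if human.distance_m < stop_zone_m:
        return Action("wait", 0.0)  # pause rather than move toward the human
    if human.predicted_reach_target:
        # Anticipate the predicted human motion by slowing down strongly.
        return Action(planned.name, min(planned.speed_scale, 0.25))
    if human.distance_m < slow_zone_m:
        return Action(planned.name, min(planned.speed_scale, 0.5))
    return planned  # no change to the global plan
```

Checking the closest zone first makes the most conservative rule win whenever several conditions hold at once.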

2.1.3. Real-Time Layer

The real-time layer is the bottom, low-level control layer: the desired action ($a_d$) and behavior ($b_d$) are forwarded directly to the robot for task execution. The desired behavior $b_d$ can be altered by reflex behaviors when accidents or collisions occur. The control layer reports the currently active action ($a$) and behavior ($b$) back to the dynamic planning layer so that it can respond accordingly.
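The interplay between the desired action $a_d$, the desired behavior $b_d$, a reflex override, and the feedback $(a, b)$ can be sketched as one control cycle. The threshold test on estimated external joint torques and the behavior name `reflex_stop` are assumptions for illustration; actual collision detection and reaction strategies are considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    active_action: str    # a: currently active action, reported upward
    active_behavior: str  # b: currently active behavior, reported upward


def real_time_step(a_d: str, b_d: str, external_torque: list[float],
                   torque_threshold: float) -> Feedback:
    """One control cycle: execute (a_d, b_d) unless a reflex overrides the behavior."""
    collision = any(abs(t) > torque_threshold for t in external_torque)
    # Reflex: on a detected collision, replace the desired behavior with a safe one.
    behavior = "reflex_stop" if collision else b_d
    # The robot would execute a_d with the selected behavior at this point.
    return Feedback(active_action=a_d, active_behavior=behavior)
```

The reflex acts within the same cycle instead of waiting for the soft real-time layer, and the returned feedback tells that layer the plan has been overridden.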

Human interaction in this control architecture is observed at several levels of abstraction. The human observer state in the second (soft real-time) layer gathers all human-related information and knowledge, which can then be used for planning in the lower layer. This control architecture ensures human safety during physical interaction in interactive and cooperative tasks with a collaborative robot [37][25]. The following subsection highlights the key challenges considered in our literature review.

2.2. Controller Challenges

2.2.1. Estimation of Human Intention

The first challenge in HR collaboration is the accurate estimation of human intention for controller design. Such an estimate allows the system to select the correct dynamic plan and to anticipate the appropriate safety behavior. The goal is to provide the robot with an estimate of human intention that is easy to interpret, so that humans and robots can participate safely in the collaborative task. In the real-time control layer, the interaction is two-way: the human’s ability to anticipate the robot’s movement is as important as the robot’s ability to anticipate human behavior. Predicting human actions involves two parts: (1) predicting the next action; and (2) predicting the action time. Human motion prediction relies on predicting the desired tasks (manipulation, navigation) and the characteristics of human motion. Conversely, through explicit or implicit cues, the robot can make its intended goals/tasks clearer to co-located humans, helping them select safe actions and motions.
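The two prediction parts, the next action and its timing, can be illustrated with a deliberately simple model. The sketch below assumes a first-order Markov model over observed action sequences and uses the mean observed duration of the predicted action as a crude timing estimate; the class, the action names, and the durations are hypothetical, and practical systems typically use richer probabilistic or learned models.

```python
from collections import Counter, defaultdict
from statistics import mean


class NextActionPredictor:
    """Hypothetical first-order Markov model of a human operator's actions."""

    def __init__(self) -> None:
        # transitions[a][b] counts how often action b directly followed action a
        self.transitions: dict[str, Counter] = defaultdict(Counter)
        # durations[a] stores every observed duration of action a (seconds)
        self.durations: dict[str, list[float]] = defaultdict(list)

    def observe(self, sequence: list[tuple[str, float]]) -> None:
        """Record one demonstration given as (action, duration) pairs."""
        for (action, _), (next_action, _) in zip(sequence, sequence[1:]):
            self.transitions[action][next_action] += 1
        for action, duration in sequence:
            self.durations[action].append(duration)

    def predict(self, current: str) -> tuple[str, float]:
        """Return the most frequent next action and its mean observed duration."""
        counts = self.transitions.get(current)
        if not counts:
            raise KeyError(f"no transitions observed after {current!r}")
        next_action, _ = counts.most_common(1)[0]
        return next_action, mean(self.durations[next_action])
```

For example, after three demonstrations in which "place" was followed twice by "screw" (lasting 5.0 s and 6.0 s) and once by "inspect", `predict("place")` returns `("screw", 5.5)`.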

2.2.2. Safety

Industrial collaborative robots can work with humans and perform operations beside them. These robots can move their arms and bodies and may handle dangerous or sharp objects. Such situations demand specific procedures to ensure human safety during collaborative tasks, an important and emerging issue in the field of human–robot collaboration. The problem can be tackled using a collision model of a robot with $n$ joints in which a particular link collides with a human [38][26].

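The following equation relates the linear and angular velocity vectors of the contact point to the joint angular velocities $\dot{q}$:

$$\dot{x}_c = \begin{bmatrix} v_c \\ \omega_c \end{bmatrix} = \begin{bmatrix} J_{c,\mathrm{lin}}(q) \\ J_{c,\mathrm{ang}}(q) \end{bmatrix} \dot{q} = J_c(q)\,\dot{q}$$

where $x_c$ is the state (pose) vector of the contact point, $v_c$ is the linear velocity vector and $\omega_c$ is the angular velocity vector of the affected robot link at the collision contact point, and $J_c(q)$ is the contact Jacobian. In case of a collision, the robot dynamics can be represented as

$$M(q)\,\ddot{q} + C(q,\dot{q})\,\dot{q} + g(q) + \tau_f = \tau + \tau_{ext}$$

where $M(q)$ is the joint-space inertia matrix, $C(q,\dot{q})\,\dot{q}$ is the vector of Coriolis and centrifugal torques, $g(q)$ is the gravity vector, $\tau_f$ is the dissipative friction torque, $\tau$ is the motor torque, and $\tau_{ext}$ is the external joint torque caused by the external contact force (wrench) $F_{ext}$ during the collision, expressed as

$$\tau_{ext} = J_c^T(q)\,F_{ext}$$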
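To make the two Jacobian mappings concrete, the Python sketch below evaluates $\dot{x}_c = J_c(q)\dot{q}$ and $\tau_{ext} = J_c^T(q)F_{ext}$ for a hypothetical two-link planar arm whose contact point is the tip of link 2. The arm, its link lengths, and the reduced planar wrench $[F_x, F_y, M_z]$ are assumptions chosen for illustration; a real collaborative manipulator would use its full spatial Jacobian.

```python
import math

Matrix = list[list[float]]


def planar_contact_jacobian(q1: float, q2: float, l1: float, l2: float) -> Matrix:
    """J_c(q) of a 2-link planar arm with the contact at the tip of link 2."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [
        [-l1 * s1 - l2 * s12, -l2 * s12],  # v_x row (J_c,lin)
        [l1 * c1 + l2 * c12, l2 * c12],    # v_y row (J_c,lin)
        [1.0, 1.0],                        # omega_z row (J_c,ang): both joints turn about z
    ]


def contact_twist(J_c: Matrix, q_dot: list[float]) -> list[float]:
    """x_c_dot = [v_c; omega_c] = J_c(q) q_dot."""
    return [sum(j * qd for j, qd in zip(row, q_dot)) for row in J_c]


def external_joint_torque(J_c: Matrix, F_ext: list[float]) -> list[float]:
    """tau_ext = J_c(q)^T F_ext, with F_ext = [F_x, F_y, M_z] at the contact point."""
    n_joints = len(J_c[0])
    return [sum(J_c[r][i] * F_ext[r] for r in range(len(J_c))) for i in range(n_joints)]
```

For example, at $q = (0, \pi/2)$ with unit link lengths, the joint velocity $(1, 0)$ gives a contact twist of approximately $(-1, 1, 1)$, and a 10 N force along $y$ maps to joint torques of approximately $(10, 0)$ N·m.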

Then, the effective mass of a robot can be estimated as

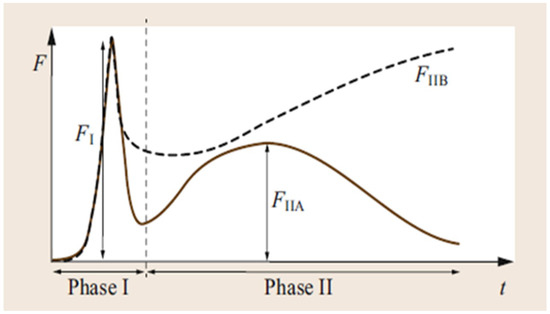

where Λv(q) is the Cartesian kinetic energy matrix. When the collision occurs, an important entity is a force observed at the contact point. This force is characterized in two phases, as shown in Figure 4. In phase I, FI represents a short impulsive force. In phase II, two types of forces come into play in case there is quasi-static contact. If the human is not clamped, then this force is called a pushing force FIIa. When a human is clamped, the force is called a crushing force FIIb. The mathematical modeling of robotic systems that includes kinematic modeling and dynamic modeling is a precursor for control design. The comprehensive dynamic modeling of point contact between the human hand and robotic arm is described in [39,40,41,42][27][28][29][30].

mu=[uTΛv(q)−1u]−1

Figure 4. Force profiles of collision occurrence.
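The effective-mass expression $m_u = [u^T \Lambda_v(q)^{-1} u]^{-1}$ can be evaluated numerically once the translational contact Jacobian and the joint-space inertia matrix are known. The sketch below is restricted to two joints and two Cartesian directions so that it stays dependency-free; the helper names are hypothetical, and the example inputs describe an assumed two-link planar arm rather than any robot from the cited works.

```python
import math

Matrix = list[list[float]]


def inv2(A: Matrix) -> Matrix:
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A: Matrix) -> Matrix:
    return [list(col) for col in zip(*A)]


def effective_mass(J_v: Matrix, M: Matrix, u: list[float]) -> float:
    """m_u = [u^T Lambda_v^{-1} u]^{-1}, with Lambda_v^{-1} = J_v M^{-1} J_v^T."""
    lam_inv = matmul(matmul(J_v, inv2(M)), transpose(J_v))
    norm = math.hypot(*u)
    u = [x / norm for x in u]  # make sure u is a unit vector
    quad = sum(u[i] * lam_inv[i][j] * u[j] for i in range(2) for j in range(2))
    return 1.0 / quad
```

For a two-link planar arm with unit link lengths, unit point masses at the link tips, and $q = (0, \pi/2)$, the inertia matrix is $M = [[3, 1], [1, 1]]$ and $J_{c,\mathrm{lin}} = [[-1, -1], [1, 0]]$, which gives an effective mass of 1 kg along $x$ but 2 kg along $y$: the same configuration presents a different mass depending on the collision direction.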

2.2.3. Human-Caused Disturbances

Unpredictable disturbances caused by human motion can significantly degrade the performance of robot control. To ensure stable and robust human–robot collaborative assembly manipulation, these human-caused disturbances need to be minimized.
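One simple way to reduce high-frequency components of the human input (for example, hand tremor or jerky motion) is to low-pass filter the measured interaction force before it enters a compliance controller. The sketch below assumes a first-order discrete low-pass filter followed by a damping-only admittance law $v = F/D$; the cutoff frequency, damping value, and names are hypothetical, and this is not presented as the suppression approach of any work cited here.

```python
import math


class LowPassForceFilter:
    """First-order discrete low-pass filter for a measured human force."""

    def __init__(self, cutoff_hz: float, dt: float) -> None:
        tau = 1.0 / (2.0 * math.pi * cutoff_hz)  # filter time constant [s]
        self.alpha = dt / (tau + dt)             # smoothing factor in (0, 1)
        self.state = 0.0                         # filtered force estimate [N]

    def __call__(self, force: float) -> float:
        # Move the estimate a fraction alpha of the way toward the new sample.
        self.state += self.alpha * (force - self.state)
        return self.state


def admittance_velocity(force: float, damping: float) -> float:
    """Damping-only admittance: commanded velocity v = F / D."""
    return force / damping
```

A constant human push passes through almost unchanged after the transient, whereas a rapidly alternating force is strongly attenuated. The price is added lag, so the cutoff frequency trades disturbance rejection against responsiveness to intentional guidance.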

References

- Schmidtler, J.; Knott, V.; Hölzel, C.; Bengler, K. Human Centered Assistance Applications for the working environment of the future. Occup. Ergon. 2015, 12, 83–95.

- Matheson, E.; Minto, R.; Zampieri, E.G.G.; Faccio, M.; Rosati, G. Human–Robot Collaboration in Manufacturing Applications: A Review. Robotics 2019, 8, 100.

- Wang, L.; Gao, R.; Váncza, J.; Krüger, J.; Wang, X.; Makris, S.; Chryssolouris, G. Symbiotic human–robot collaborative assembly. CIRP Ann. 2019, 68, 701–726.

- Al-Yacoub, A.; Zhao, Y.; Eaton, W.; Goh, Y.; Lohse, N. Improving human robot collaboration through Force/Torque based learning for object manipulation. Robot. Comput.-Integr. Manuf. 2021, 69, 102111.

- Rahman, S.M.; Wang, Y. Mutual trust-based subtask allocation for human–robot collaboration in flexible lightweight assembly in manufacturing. Mechatronics 2018, 54, 94–109.

- Jiang, J.; Huang, Z.; Bi, Z.; Ma, X.; Yu, G. State-of-the-Art control strategies for robotic PiH assembly. Robot. Comput.-Integr. Manuf. 2020, 65, 101894.

- Cheng, C.; Liu, S.; Wu, H.; Zhang, Y. Neural network–based direct adaptive robust control of unknown MIMO nonlinear systems using state observer. Int. J. Adapt. Control Signal Process. 2020, 34, 1–14.

- Li, S.; Wang, H.; Zhang, S. Human-Robot Collaborative Manipulation with the Suppression of Human-caused Disturbance. J. Intell. Robot. Syst. 2021, 102, 1–11.

- Chen, Z.; Huang, F.; Chen, W.; Zhang, J.; Sun, W.; Chen, J.; Gu, J.; Zhu, S. RBFNN-Based Adaptive Sliding Mode Control Design for Delayed Nonlinear Multilateral Telerobotic System With Cooperative Manipulation. IEEE Trans. Ind. Inform. 2020, 16, 1236–1247.

- Abadi, A.S.S.; Hosseinabadi, P.A.; Mekhilef, S. Fuzzy adaptive fixed-time sliding mode control with state observer for a class of high-order mismatched uncertain systems. Int. J. Control Autom. Syst. 2020, 18, 2492–2508.

- Scholtz, J. Theory and evaluation of human robot interactions. In Proceedings of the 36th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 6–9 January 2003; p. 10.

- Cherubini, A.; Passama, R.; Meline, A.; Crosnier, A.; Fraisse, P. Multimodal control for human–robot cooperation. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2202–2207.

- Hua, C.; Yang, Y.; Liu, P.X. Output-feedback adaptive control of networked teleoperation system with time-varying delay and bounded inputs. IEEE/ASME Trans. Mechatron. 2014, 20, 2009–2020.

- Zhai, D.H.; Xia, Y. Adaptive fuzzy control of multilateral asymmetric teleoperation for coordinated multiple mobile manipulators. IEEE Trans. Fuzzy Syst. 2015, 24, 57–70.

- Chen, Z.; Huang, F.; Sun, W.; Gu, J.; Yao, B. RBF-neural-network-based adaptive robust control for nonlinear bilateral teleoperation manipulators with uncertainty and time delay. IEEE/ASME Trans. Mechatron. 2019, 25, 906–918.

- Rosenstrauch, M.J.; Krüger, J. Safe human–robot-collaboration-introduction and experiment using ISO/TS 15066. In Proceedings of the 3rd International Conference on Control, Automation and Robotics (ICCAR), Nagoya, Japan, 24–26 April 2017; pp. 740–744.

- Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on human–robot collaboration in industrial settings: Safety, intuitive interfaces and applications. Mechatronics 2018, 55, 248–266.

- Asfour, T.; Kaul, L.; Wächter, M.; Ottenhaus, S.; Weiner, P.; Rader, S.; Grimm, R.; Zhou, Y.; Grotz, M.; Paus, F.; et al. ARMAR-6: A Collaborative Humanoid Robot for Industrial Environments. In Proceedings of the 2018 IEEE-RAS 18th International Conference on Humanoid Robots (Humanoids), Beijing, China, 6–9 November 2018; pp. 447–454.

- Tang, L.; Jiang, Y.; Lou, J. Reliability architecture for collaborative robot control systems in complex environments. Int. J. Adv. Robot. Syst. 2016, 13, 17.

- Ye, Y.; Li, P.; Li, Z.; Xie, F.; Liu, X.J.; Liu, J. Real-Time Design Based on PREEMPT_RT and Timing Analysis of Collaborative Robot Control System. In Proceedings of the Intelligent Robotics and Applications; Liu, X.J., Nie, Z., Yu, J., Xie, F., Song, R., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 596–606.

- Dumonteil, G.; Manfredi, G.; Devy, M.; Confetti, A.; Sidobre, D. Reactive planning on a collaborative robot for industrial applications. In Proceedings of the 2015 12th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Colmar, France, 21–23 July 2015; Volume 2, pp. 450–457.

- Parusel, S.; Haddadin, S.; Albu-Schäffer, A. Modular state-based behavior control for safe human-robot interaction: A lightweight control architecture for a lightweight robot. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 4298–4305.

- Xi, Q.; Zheng, C.W.; Yao, M.Y.; Kou, W.; Kuang, S.L. Design of a Real-time Robot Control System oriented for Human-Robot Cooperation. In Proceedings of the 2021 International Conference on Artificial Intelligence and Electromechanical Automation (AIEA), Guangzhou, China, 14–16 May 2021; pp. 23–29.

- Gambao, E.; Hernando, M.; Surdilovic, D. A new generation of collaborative robots for material handling. In Proceedings of the International Symposium on Automation and Robotics in Construction; IAARC Publications: Eindhoven, The Netherlands, 2012; Volume 29, p. 1.

- Fong, T.; Thorpe, C.; Baur, C. Advanced Interfaces for Vehicle Teleoperation: Collaborative Control, Sensor Fusion Displays, and Remote Driving Tools. Auton. Robot. 2001, 11, 77–85.

- Haddadin, S.; Croft, E. Physical human-robot interaction. In Springer Handbook of Robotics; Springer: Cham, Switzerland, 2016; pp. 1835–1874.

- Skrinjar, L.; Slavič, J.; Boltežar, M. A review of continuous contact-force models in multibody dynamics. Int. J. Mech. Sci. 2018, 145, 171–187.

- Ahmadizadeh, M.; Shafei, A.; Fooladi, M. Dynamic analysis of multiple inclined and frictional impact-contacts in multi-branch robotic systems. Appl. Math. Model. 2021, 91, 24–42.

- Korayem, M.; Shafei, A.; Seidi, E. Symbolic derivation of governing equations for dual-arm mobile manipulators used in fruit-picking and the pruning of tall trees. Comput. Electron. Agric. 2014, 105, 95–102.

- Shafei, A.; Shafei, H. Planar multibranch open-loop robotic manipulators subjected to ground collision. J. Comput. Nonlinear Dyn. 2017, 12, 06100.
