Chest X-ray radiography (CXR) is among the most frequently used medical imaging modalities and is of great value in detecting multiple life-threatening diseases. Radiologists visually inspect CXR images for signs of disease. However, many thoracic diseases share very similar radiographic patterns, which makes diagnosis prone to human error and misdiagnosis. Machine learning (ML) and deep learning (DL) provide techniques that make this task faster and more efficient, and numerous studies on the diagnosis of various diseases have demonstrated their potential.

- radiography

- chest X-ray

- computer-aided detection

- machine learning

- deep learning

- deep convolutional neural networks

1. Introduction

2. Datasets

In the medical field, there are several imaging technologies, including ultrasound, CT (computed tomography), MRI (magnetic resonance imaging), and X-ray imaging. Radiologists use these images to examine organs and detect abnormalities [8]. Detecting diseases from CXR images is a difficult task for radiologists and sometimes leads to misdiagnosis. Computer-aided detection (CAD) systems can assist with this task, but they require a large amount of data for training and testing. CAD systems in medical image analysis are usually trained and tested on a collection of data called a dataset, which is generally composed of images and additional information called metadata (e.g., patient age, race, sex, and insurance type). Hospitals, universities, and laboratories in different countries have used several approaches to collect patient data [9]. The collection of medical datasets aims to advance research on disease detection. DL techniques have proved their efficiency and ability to detect many dangerous diseases using different datasets [10][11]. In many studies, these techniques have achieved expert-level performance on clinical tasks [12][13].

3. Image Preprocessing Techniques

Preprocessing of X-ray images improves their quality by converting them from their original form into a more usable and informative one. Most CXR images are produced in DICOM (Digital Imaging and Communications in Medicine) format with a large set of metadata, which makes them difficult to interpret for experts outside the field of radiology [14]. For use in other areas such as computer vision, DICOM images are usually converted to PNG or JPEG format using algorithms that compress the images while preserving their important information. This process has two main steps. The first is de-identifying patient information (privacy protection). The second is converting the DICOM images into PNG, JPEG, or another format.

Typical X-ray images measure about 3000 × 2000 pixels, which requires substantial computational resources if they are used at their original size. Radiological images must therefore be resized without losing the essential information they contain. Most datasets provide resized images. For example, the Indiana dataset contains CXR images resized to 512 × 512 pixels [15], and the ChestX-ray8 dataset contains images resized to 1024 × 1024 pixels [16].

Datasets are often imbalanced or contain low-quality images with noise and unwanted regions. When developing a CAD system, image preprocessing techniques play a crucial role in enhancing image quality. They help remove irrelevant data, extract meaningful information, and make the region of interest (ROI) clearer. As a result, they improve the performance of CAD systems and reduce their error rate. Preprocessing techniques applied to CXR images include augmentation, enhancement, segmentation, and bone suppression.

3.1. Augmentation

Training a deep convolutional neural network (DCNN) on an imbalanced dataset often leads to overfitting. The model then fails to generalize to new samples and does not provide the desired results. To cope with this, the number of samples in the dataset can be increased by making slight adjustments to existing images. These adjustments use position-based augmentation (cropping, rotating, scaling, flipping, padding, and elastic deformations) and color-based augmentation (hue, brightness, and contrast).

3.2. Enhancement

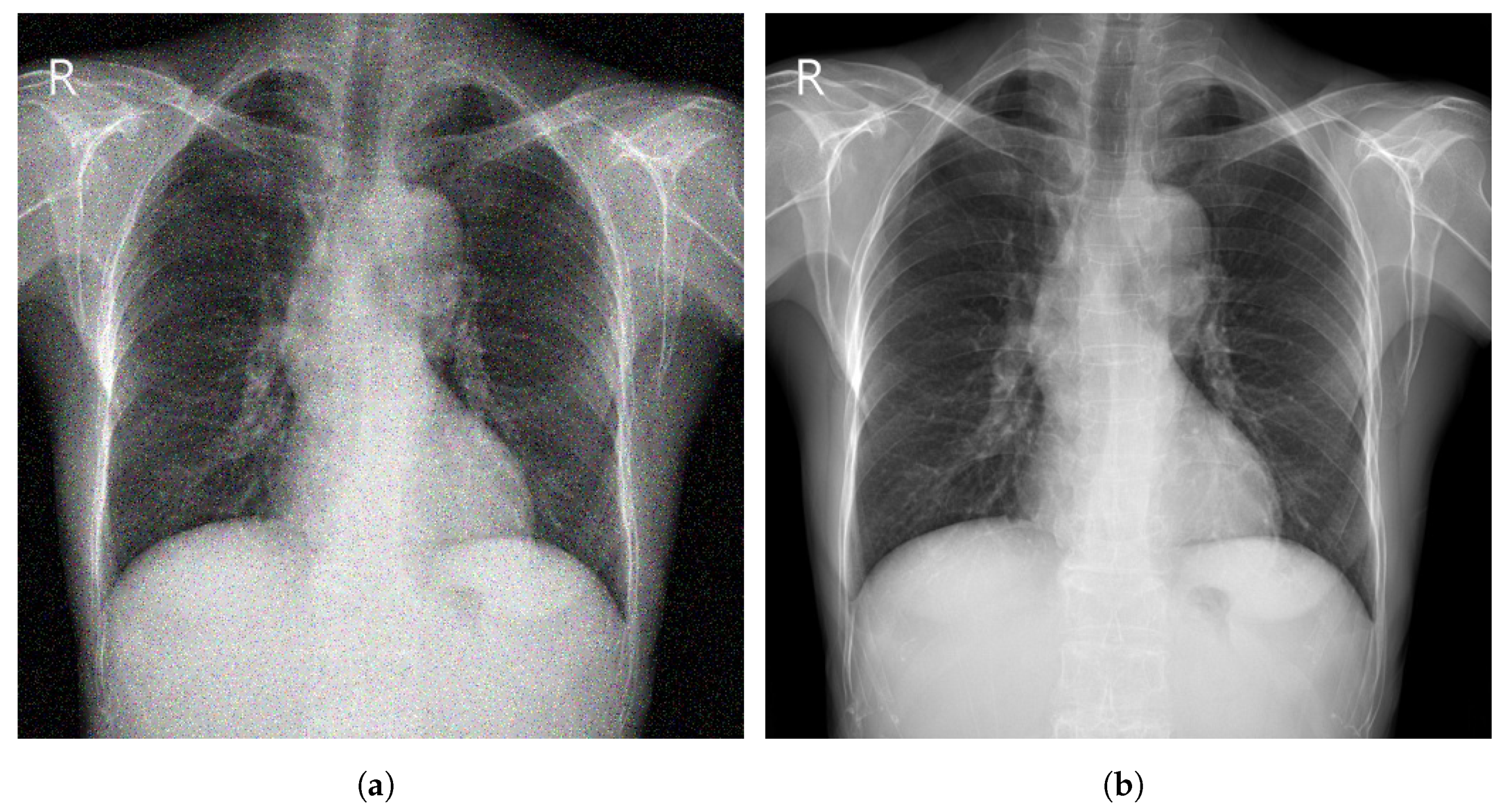

Image enhancement techniques are generally used to make the information in images easier to interpret. For CXR images, these techniques provide better image quality to human readers (radiologists) as well as to automated systems [17]. To improve the quality of a CXR image, multiple parameters can be considered, including contrast, brightness, noise suppression, feature edges, and edge sharpness. Different methods address these parameters, including histogram equalization (HE) [18], high- and low-pass filtering [19], and unsharp masking [20]. Figure 1 depicts an example of enhancement applied to a CXR image.
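Two of the enhancement methods named above, global histogram equalization and unsharp masking, can be sketched in a few lines. In unsharp masking, the difference between an image and its low-pass-filtered copy acts as a high-pass component that is added back to sharpen edges. The sketch makes several assumptions: an 8-bit grayscale image stored as a 2D Python list, a 3 × 3 box blur as the low-pass filter, and the `amount` parameter, all of which are illustrative choices. CLAHE, the adaptive variant discussed in [18], is not shown.

```python
"""Global histogram equalization and unsharp masking on an 8-bit image.

Illustrative sketch on a 2D list of ints in [0, 255]; the 3x3 box blur and
the `amount` parameter are assumptions, not settings from the cited studies.
"""


def histogram_equalize(img, levels=256):
    """Global HE: map each grey level through the normalized cumulative histogram."""
    flat = [v for row in img for v in row]
    hist = [0] * levels
    for v in flat:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    n = len(flat)
    cdf_min = next(c for c in cdf if c > 0)  # count at the darkest occupied level
    if n == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in img]
    lut = [max(0, round((c - cdf_min) * (levels - 1) / (n - cdf_min))) for c in cdf]
    return [[lut[v] for v in row] for row in img]


def box_blur(img):
    """3x3 mean filter with edge replication (a simple low-pass filter)."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            vals = [img[min(max(r + i, 0), h - 1)][min(max(c + j, 0), w - 1)]
                    for i in (-1, 0, 1) for j in (-1, 0, 1)]
            row.append(sum(vals) / 9)
        out.append(row)
    return out


def unsharp_mask(img, amount=1.0):
    """Sharpen: original + amount * (original - low-pass), clipped to [0, 255]."""
    blurred = box_blur(img)
    return [[min(255, max(0, round(v + amount * (v - b)))) for v, b in zip(row, brow)]
            for row, brow in zip(img, blurred)]


if __name__ == "__main__":
    print(histogram_equalize([[52, 55], [61, 59]]))  # [[0, 85], [255, 170]]
    print(unsharp_mask([[0, 0, 90]]))                # [[0, 0, 120]]
```

In the usage lines, equalization spreads four closely spaced grey levels across the full 0 to 255 range. Unsharp masking brightens the bright side of an edge and darkens the dark side, with the result clipped at 0.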

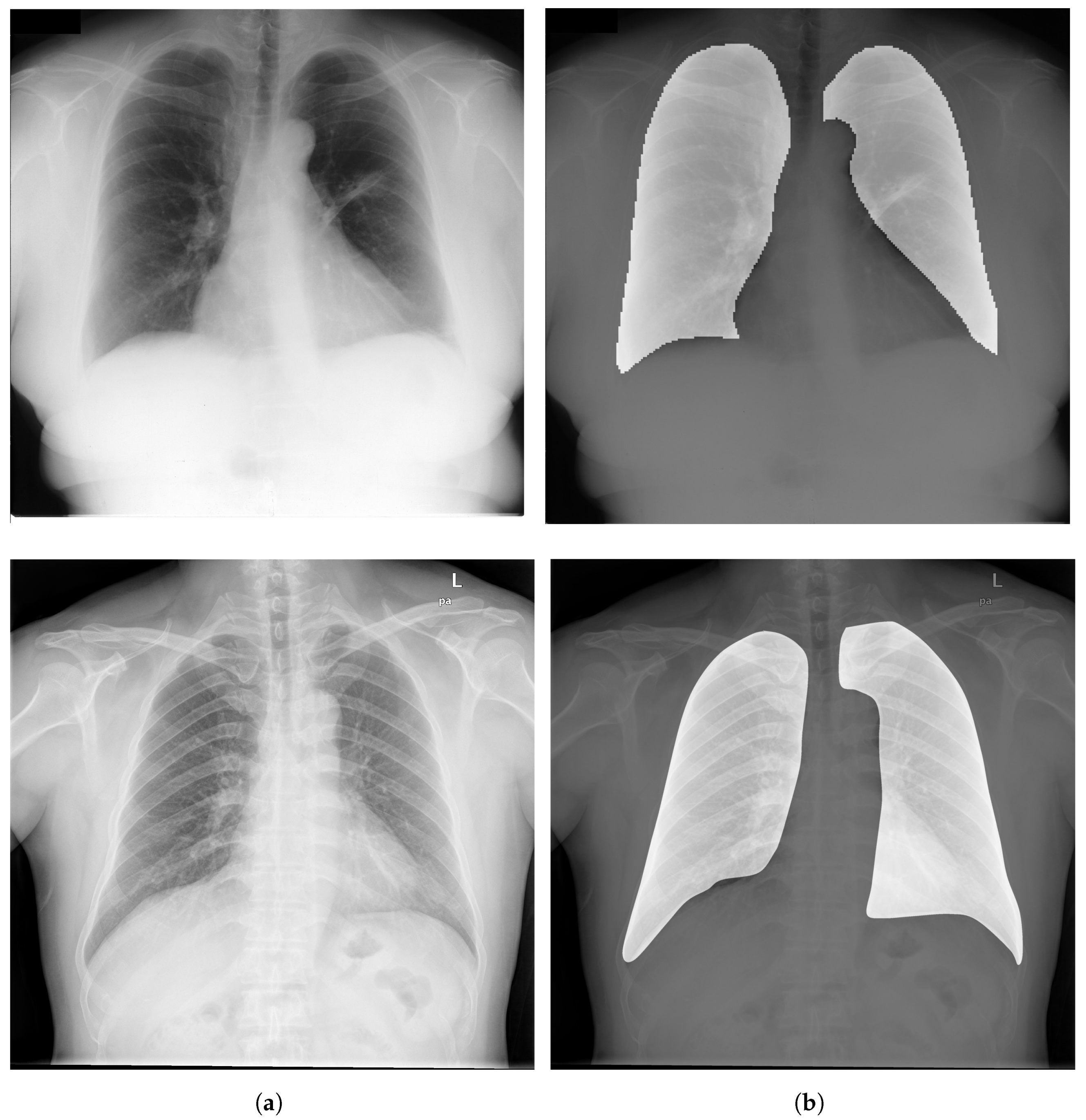

3.3. Segmentation

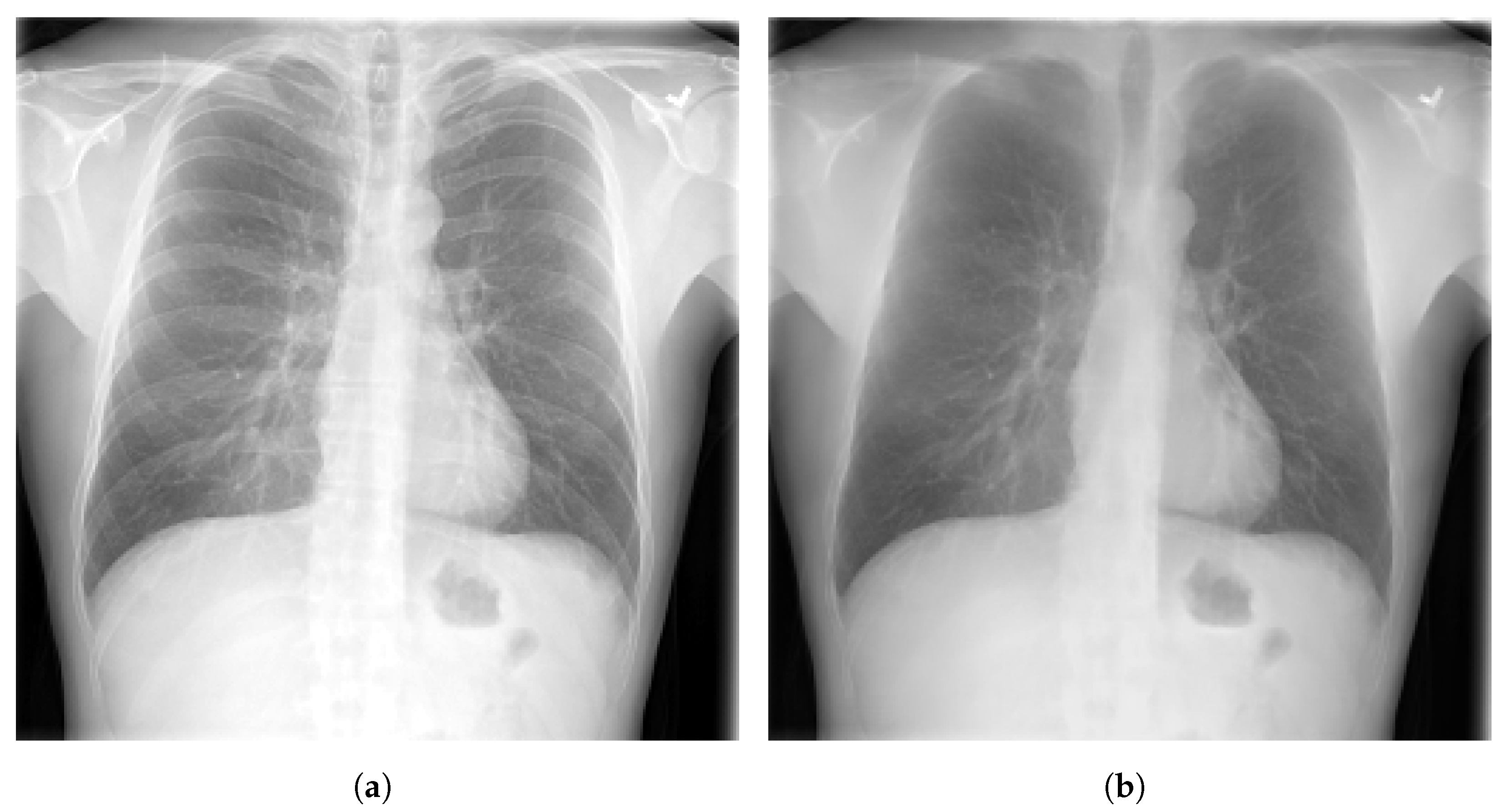

3.4. Bone Suppression

3.5. Evaluation Metrics

4. Deep Learning for Chest Disease Detection Using CXR Images

Several CAD systems have been developed to detect chest diseases using different techniques. Early diagnosis of thoracic conditions improves the chances of overcoming the disease, since diseases such as TB, pneumonia, and COVID-19 become more serious and severe at an advanced stage. Three main types of abnormalities can be observed in CXR images: (1) texture abnormalities, characterized by diffuse changes in the appearance and structure of an area; (2) focal abnormalities, which occur as isolated density changes; and (3) shape abnormalities, in which the anomaly changes the outline of the normal morphology.

4.1. Pneumonia Detection

Ma and Lv [22] proposed a Swin transformer model for feature extraction, with a fully connected layer for classifying pneumonia in CXR images. The model was evaluated against DCNN models on two datasets (Pediatric-CXR and ChestX-ray8). Image enhancement and data augmentation improved the performance of the proposed model, which achieved an ACC of 97.20% on Pediatric-CXR and 87.30% on ChestX-ray8.

Singh et al. [23] proposed an attention-based DCNN model to classify CXR images into two classes (normal or pneumonia). ResNet50 with attention achieved the best results, with an ACC of 95.73% on images from the Pediatric-CXR dataset.

Darapaneni et al. [24] implemented two DCNN models with transfer learning (ResNet-50 and Inception-V4) for binary classification of pneumonia cases, using CXR images from the RSNA-Pneumonia-CXR dataset. The best-performing model was Inception-V4, with a validation ACC of 94.00%, outperforming ResNet-50, which achieved a validation ACC of 90.00%.

Rajpurkar et al. [25] developed CheXNet, a 121-layer convolutional network that detects pneumonia and localizes the lung areas showing its presence. The model was trained on ChestX-ray14 and fine-tuned by replacing the final fully connected layer with one that has a single output, followed by a nonlinear sigmoid activation function. The network weights were initialized with weights pretrained on ImageNet. CheXNet achieved an AUC of 76.80%.
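A small pure-Python sketch can illustrate the CheXNet modification described above, a final fully connected layer with a single output followed by a sigmoid. It also shows how the ACC and AUC figures quoted in this subsection are computed. It is not the authors' code, and several parts are assumptions:

- The feature maps, weights, and bias are hypothetical toy values. DenseNet-121, the 121-layer backbone of CheXNet, actually produces 1024 pooled features.
- Binary cross-entropy is assumed as the training loss.
- The 0.5 threshold used for ACC is a common default rather than a reported setting.

```python
"""Sketch of a single-output sigmoid head plus the ACC and AUC metrics.

Assumptions (illustration only): toy feature maps and weights, binary
cross-entropy as the loss, and a 0.5 decision threshold for accuracy.
"""
import math


def global_avg_pool(feature_maps):
    """Average each 2D feature map to one value (one feature per map)."""
    return [sum(sum(row) for row in fm) / (len(fm) * len(fm[0])) for fm in feature_maps]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def single_output_head(features, weights, bias):
    """Fully connected layer with one output, followed by a sigmoid probability."""
    return sigmoid(sum(f * w for f, w in zip(features, weights)) + bias)


def bce(p, y, eps=1e-7):
    """Binary cross-entropy for a label y in {0, 1}; p is clipped to avoid log(0)."""
    p = min(max(p, eps), 1 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def accuracy(probs, labels, threshold=0.5):
    """Fraction of images whose thresholded prediction matches the label."""
    return sum((p >= threshold) == (y == 1) for p, y in zip(probs, labels)) / len(labels)


def auc(probs, labels):
    """ROC AUC as P(score of a positive > score of a negative), ties count 1/2."""
    pos = [p for p, y in zip(probs, labels) if y == 1]
    neg = [p for p, y in zip(probs, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    pairs = [1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg]
    return sum(pairs) / len(pairs)


if __name__ == "__main__":
    maps = [[[1.0, 3.0], [2.0, 2.0]], [[0.0, 0.0], [0.0, 4.0]]]
    feats = global_avg_pool(maps)                        # [2.0, 1.0]
    print(single_output_head(feats, [0.5, -1.0], 0.0))   # 0.5
    print(auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0]))       # 0.75
```

The pairwise AUC is quadratic in the number of images and is written for clarity. Library implementations typically obtain the same value by sorting the scores.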

4.2. Pulmonary Nodule Detection

According to the WHO, lung cancer is one of the most dangerous diseases. It is the most frequent cancer in men and the third most frequent in women [26]. Lung cancer manifests as lung nodules, and early diagnosis of these nodules is highly effective in treating lung cancer before it becomes life-threatening.

4.3. Tuberculosis Detection

According to the WHO, TB is among the top 10 causes of death worldwide. It is the second leading infectious cause of death after COVID-19, ahead of HIV/AIDS. In 2020, around 10 million people (including 1.1 million children) fell ill with TB. It killed 1.4 million people in 2019 and 1.5 million people in 2020. TB is caused by the bacillus Mycobacterium tuberculosis, which spreads when people who are sick with TB expel bacteria into the air (e.g., by coughing or sneezing). The disease typically affects the lungs [28]. TB diagnosis and treatment saved an estimated 66 million lives between 2000 and 2020.

The variety of manifestations of pulmonary TB on CXR images makes its detection challenging, yet DL has proved highly effective for TB detection and classification. Iqbal et al. [29] proposed an efficient DL network named TBXNet for TB detection. TBXNet is built from five dual convolution blocks with 32, 64, 128, 256, and 512 filters, respectively. The dual convolution blocks are merged with a pre-trained layer in the fusion layer of the architecture, and the pre-trained layer transfers pre-trained knowledge into the fusion layer. TBXNet obtained an ACC of 99.17%. All experiments were performed using image data from three sources:

- the Montgomery dataset;
- a labeled dataset created by institutes under the Ministry of Health of the Republic of Belarus;
- a labeled dataset obtained from a publicly available Kaggle repository.

4.4. COVID-19 Detection

Jawahar et al. [30] presented a DL model called CovMnet to classify CXR images as normal or COVID-19. The layers in CovMnet include a convolution layer with a ReLU activation function and a MaxPooling layer. The output of the last convolutional layer is flattened and fed to four fully connected (dense) layers, together with activation and dropout layers. Experiments were carried out for deep feature extraction, fine-tuning of CNN (convolutional neural network) hyperparameters, and end-to-end training of four variants of the proposed CovMnet model. CovMnet achieved an ACC of 97.40%. All experiments were performed using CXR images from the Pediatric-CXR dataset.

4.5. Multiple Disease Detection

In some cases, a patient may suffer from more than one disease at the same time, which puts their life at higher risk. It may be difficult for radiologists to detect more than one pathology in a CXR image because different diseases show similar signs. In such situations, further details and additional examinations may be needed. To address this challenge, several DL systems have been developed using different algorithms.

For instance, Majdi et al. [31] proposed a fine-tuned DenseNet-121 to classify CXR images for pulmonary nodules and cardiomegaly, using images from the CheXpert dataset. The model obtained an AUC of 73.00% for pulmonary nodule detection and 92.00% for cardiomegaly detection.

Bar et al. [32] employed a DL technique to detect pleural effusion and cardiomegaly and to classify images as normal or abnormal. Their method combined features extracted by a DCNN model with low-level features. Preprocessing techniques were applied to the dataset, which contains 93 CXR images collected from Sheba Medical Center. They attained an AUC of 93.00% for pleural effusion, 89.00% for cardiomegaly, and 79.00% for normal versus abnormal cases.

Cicero et al. [33] used a GoogLeNet model to classify frontal chest radiographs into normal, consolidation, cardiomegaly, pulmonary edema, pneumothorax, and pleural effusion. GoogLeNet achieved AUC scores of 86.80% for edema, 96.20% for pleural effusion, 86.10% for pneumothorax, 96.40% for normal, 87.50% for cardiomegaly, and 85.00% for consolidation. The study showed that a DCNN model can achieve high performance even when trained on a modest-sized medical dataset.

Wang et al. [16] used a weakly supervised method for the classification and localization of eight chest diseases in the ChestX-ray8 dataset.

References

- Abiyev, R.; Ma’aitah, M.K.S. Deep Convolutional Neural Networks for Chest Diseases Detection. J. Healthc. Eng. 2018, 2018, 4168538.

- Radiological Society of North America. X-ray Radiography-Chest. Available online: https://www.radiologyinfo.org/en/info.cfm?pg=chestrad (accessed on 1 November 2022).

- U.S. Food and Drug Administration. Medical X-ray Imaging. Available online: https://www.fda.gov/radiation-emitting-products/medical-imaging/medical-x-ray-imaging (accessed on 1 November 2022).

- Avni, U.; Greenspan, H.; Konen, E.; Sharon, M.; Goldberger, J. X-ray Categorization and Retrieval on the Organ and Pathology Level, Using Patch-Based Visual Words. IEEE Trans. Med. Imaging 2011, 30, 733–746.

- Jaeger, S.; Karargyris, A.; Candemir, S.; Folio, L.; Siegelman, J.; Callaghan, F.; Xue, Z.; Palaniappan, K.; Singh, R.; Antani, S.; et al. Automatic Tuberculosis Screening Using Chest Radiographs. IEEE Trans. Med. Imaging 2014, 33, 233–245.

- Pattrapisetwong, P.; Chiracharit, W. Automatic lung segmentation in chest radiographs using shadow filter and multilevel thresholding. In Proceedings of the International Computer Science and Engineering Conference (ICSEC), Chiang Mai, Thailand, 14–17 December 2016; pp. 1–6.

- Piccialli, F.; Di Somma, V.; Giampaolo, F.; Cuomo, S.; Fortino, G. A survey on deep learning in medicine: Why, how and when? Inf. Fusion 2021, 66, 111–137.

- Elangovan, A.; Jeyaseelan, T. Medical imaging modalities: A survey. In Proceedings of the International Conference on Emerging Trends in Engineering, Technology and Science (ICETETS), Pudukkottai, India, 24–26 February 2016; pp. 1–4.

- Saczynski, J.; McManus, D.; Goldberg, R. Commonly Used Data-collection Approaches in Clinical Research. Am. J. Med. 2013, 126, 946–950.

- Horng, S.; Liao, R.; Wang, X.; Dalal, S.; Golland, P.; Berkowitz, S. Deep learning to quantify pulmonary edema in chest radiographs. Radiol. Artif. Intell. 2021, 3, e190228.

- Tolkachev, A.; Sirazitdinov, I.; Kholiavchenko, M.; Mustafaev, T.; Ibragimov, B. Deep learning for diagnosis and segmentation of pneumothorax: The results on the kaggle competition and validation against radiologists. IEEE J. Biomed. Health Inform. 2021, 25, 1660–1672.

- Cha, M.J.; Chung, M.J.; Lee, J.H.; Lee, K.S. Performance of deep learning model in detecting operable lung cancer with chest radiographs. J. Thorac. Imaging 2019, 34, 86–91.

- Schultheiss, M.; Schober, S.; Lodde, M.; Bodden, J.; Aichele, J.; Müller-Leisse, C.; Renger, B.; Pfeiffer, F.; Pfeiffer, D. A robust convolutional neural network for lung nodule detection in the presence of foreign bodies. Sci. Rep. 2020, 10, 12987.

- Johnson, A.; Pollard, T.; Berkowitz, S.; Greenbaum, N.; Lungren, M.; Deng, C.y.; Mark, R.; Horng, S. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci. Data 2019, 6, 317.

- Demner-Fushman, D.; Kohli, M.; Rosenman, M.; Shooshan, S.; Rodriguez, L.; Antani, S.; Thoma, G.; McDonald, C. Preparing a collection of radiology examinations for distribution and retrieval. J. Am. Med. Inform. Assoc. 2016, 23, 304–310.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.

- SK, S.; Naveen, N. Algorithm for pre-processing chest-x-ray using multi-level enhancement operation. In Proceedings of the International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), Chennai, India, 23–25 March 2016; pp. 2182–2186.

- Reza, A. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 38, 35–44.

- Agaian, S.; Panetta, K.; Grigoryan, A. Transform-based image enhancement algorithms with performance measure. IEEE Trans. Image Process. 2001, 10, 367–382.

- Chen, S.; Cai, Y. Enhancement of chest radiograph in emergency intensive care unit by means of reverse anisotropic diffusion-based unsharp masking model. Diagnostics 2019, 9, 45.

- Stanford ML Group. CheXpert: A Large Chest X-ray Dataset and Competition. Available online: https://stanfordmlgroup.github.io/competitions/chexpert/ (accessed on 1 November 2022).

- Ma, Y.; Lv, W. Identification of Pneumonia in Chest X-ray Image Based on Transformer. Int. J. Antennas Propag. 2022, 2022, 5072666.

- Singh, S.; Rawat, S.; Gupta, M.; Tripathi, B.; Alanzi, F.; Majumdar, A.; Khuwuthyakorn, P.; Thinnukool, O. Deep Attention Network for Pneumonia Detection Using Chest X-ray Images. Comput. Mater. Contin. 2022, 74, 1673–1691.

- Darapaneni, N.; Ranjan, A.; Bright, D.; Trivedi, D.; Kumar, K.; Kumar, V.; Paduri, A.R. Pneumonia Detection in Chest X-rays using Neural Networks. arXiv 2022, arXiv:2204.03618.

- Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K. CheXNet: Radiologist-level pneumonia detection on chest X-rays with deep learning. arXiv 2017, arXiv:1711.05225.

- World Health Organization. World Cancer Report. Available online: https://www.who.int/cancer/publications/WRC_2014/en/ (accessed on 1 November 2022).

- Sim, Y.; Chung, M.J.; Kotter, E.; Yune, S.; Kim, M.; Do, S.; Han, K.; Kim, H.; Yang, S.; Lee, D.J.; et al. Deep Convolutional Neural Network–based Software Improves Radiologist Detection of Malignant Lung Nodules on Chest Radiographs. Radiology 2020, 294, 199–209.

- World Health Organization. Tuberculosis. Available online: https://www.who.int/news-room/fact-sheets/detail/tuberculosis (accessed on 1 November 2022).

- Iqbal, A.; Usman, M.; Ahmed, Z. An efficient deep learning-based framework for tuberculosis detection using chest X-ray images. Tuberculosis 2022, 136, 102234.

- Jawahar, M.; Anbarasi, J.; Ravi, V.; Jayachandran, P.; Jasmine, G.; Manikandan, R.; Sekaran, R.; Kannan, S. CovMnet-Deep Learning Model for classifying Coronavirus (COVID-19). Health Technol. 2022, 12, 1009–1024.

- Majdi, M.; Salman, K.; Morris, M.; Merchant, N.; Rodriguez, J. Deep learning classification of chest X-ray images. In Proceedings of the Southwest Symposium on Image Analysis and Interpretation (SSIAI), Albuquerque, NM, USA, 29–31 March 2020; pp. 116–119.

- Bar, Y.; Diamant, I.; Wolf, L.; Greenspan, H. Deep learning with non-medical training used for chest pathology identification. In Medical Imaging 2015: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2015; pp. 215–221.

- Cicero, M.; Bilbily, A.; Colak, E.; Dowdell, T.; Gray, B.; Perampaladas, K.; Barfett, J. Training and validating a deep convolutional neural network for computer-aided detection and classification of abnormalities on frontal chest radiographs. Investig. Radiol. 2017, 52, 281–287.