Virtual humans are widely employed in various industries, including personal assistance, intelligent customer service, and online education, thanks to the rapid development of artificial intelligence. An anthropomorphic digital human can quickly engage with people and enhance user experience in human–computer interaction.

- talking-head generation

- virtual human generation

- human–computer interaction

1. Introduction

2. Human–Computer Interaction System Architecture

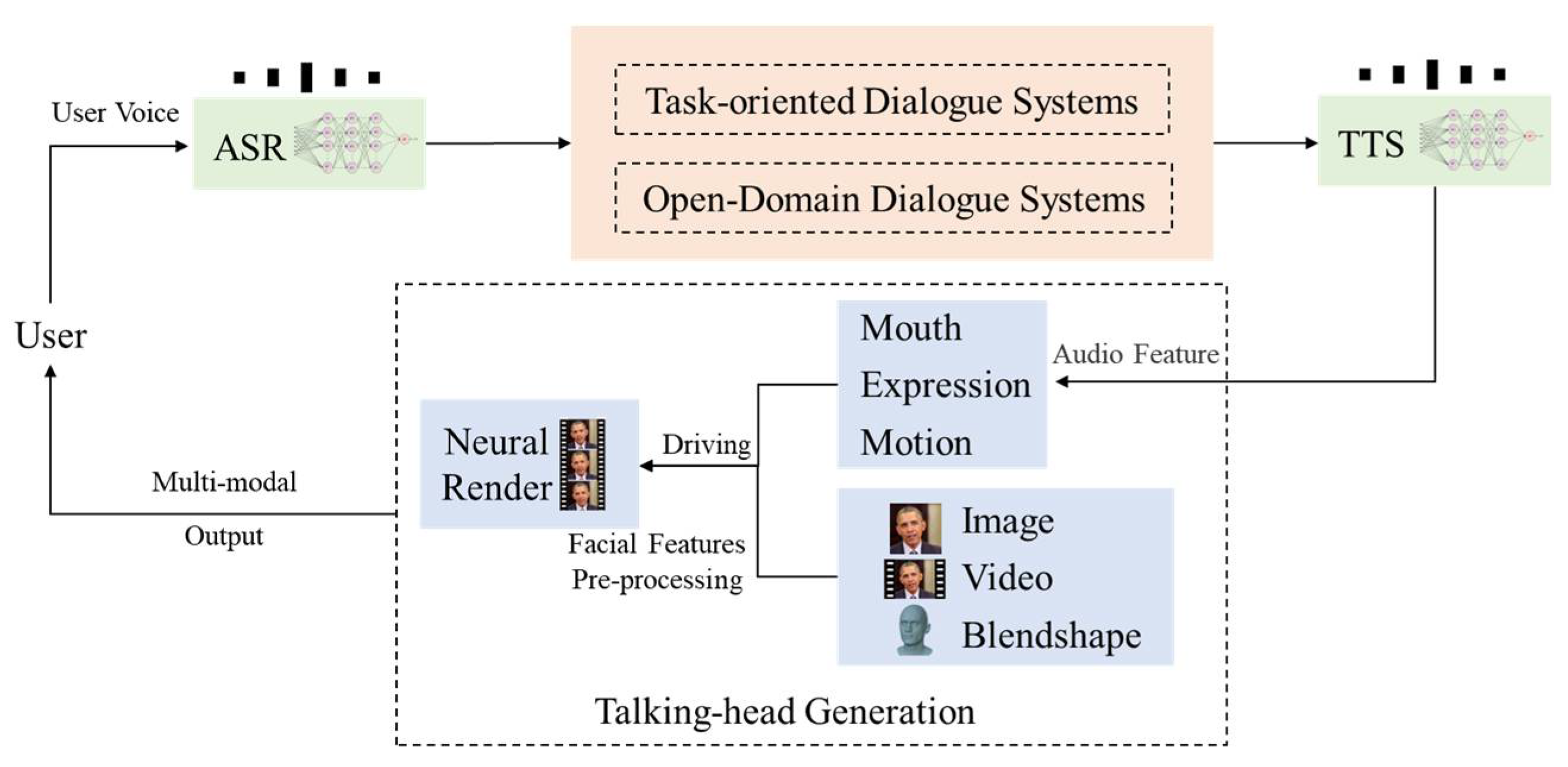

Based on artificial intelligence technologies, such as natural language processing, voice, and image processing, the system pursues multimodal interaction with low-latency, high-fidelity anthropomorphic virtual humans. As shown in Figure 1, the system is mainly composed of four modules: (1) the automatic speech recognition (ASR) module converts the voice information input by the user into text; (2) the dialogue system (DS) takes the text output by the ASR module as input; (3) the text-to-speech (TTS) module converts the text output by the DS into realistic speech; (4) the talking-head generation module preprocesses a picture, video, or blendshape as the model input to extract its facial features. The model then maps the lower-dimensional voice signal produced by the TTS module to higher-dimensional video signals, including the mouth, expression, motion, etc. Finally, the rendering system fuses the features, produces the multimodal output video, and displays it on the user side.
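The four-module flow described above can be sketched as a chain of callables. This is a minimal illustration, not the system's actual implementation: `run_turn`, `TurnResult`, and the four `fake_*` stages are hypothetical names, and the stages are trivial stand-ins for real ASR, DS, TTS, and talking-head models. Each stage is timed separately so that the end-to-end delay can be read off as the sum of the per-stage times.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TurnResult:
    """Outputs of one interaction turn plus per-stage timings in seconds."""
    user_text: str
    reply_text: str
    reply_audio: bytes
    video_frames: List[bytes]
    timings: Dict[str, float] = field(default_factory=dict)

    @property
    def total_latency(self) -> float:
        # End-to-end delay is the sum of the per-stage delays.
        return sum(self.timings.values())


def run_turn(
    user_audio: bytes,
    asr: Callable[[bytes], str],
    dialogue: Callable[[str], str],
    tts: Callable[[str], bytes],
    talking_head: Callable[[bytes], List[bytes]],
) -> TurnResult:
    """Run ASR -> DS -> TTS -> talking-head generation for one user utterance."""
    timings: Dict[str, float] = {}

    def timed(name, fn, arg):
        start = time.perf_counter()
        out = fn(arg)
        timings[name] = time.perf_counter() - start
        return out

    user_text = timed("asr", asr, user_audio)
    reply_text = timed("dialogue", dialogue, user_text)
    reply_audio = timed("tts", tts, reply_text)
    frames = timed("talking_head", talking_head, reply_audio)
    return TurnResult(user_text, reply_text, reply_audio, frames, timings)


# Hypothetical placeholder stages; real models would replace these.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_dialogue(text: str) -> str:
    return "You said: " + text


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


def fake_talking_head(audio: bytes) -> List[bytes]:
    # One dummy "frame" per 4 bytes of audio.
    return [audio[i:i + 4] for i in range(0, len(audio), 4)]


result = run_turn(b"hello", fake_asr, fake_dialogue, fake_tts, fake_talking_head)
print(result.reply_text, len(result.video_frames), result.timings)
```

Passing the stages in as callables keeps the modules swappable, for example replacing a local model with a commercial API, without touching the control flow.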

2.1. Speech Module

The ASR and TTS of the speech module correspond to the human hearing and language functions, respectively. After decades of research, speech recognition and text-to-speech synthesis have been widely used in various commercial products. The researchers use PaddleSpeech [6], an open-source toolkit from Baidu. It can complete both ASR and TTS tasks, which greatly reduces the complexity of model deployment and enables better collaboration with other modules. In addition, the researchers can also choose API services provided by commercial companies, such as Baidu, Sogou, iFLYTEK, etc.

2.2. Dialogue System Module

The dialogue system module needs to support multiple rounds of dialogue. The system needs to answer domain-specific questions and meet users’ need to chat. As shown in Figure 1, after the user’s voice passes through the ASR, the question is passed to the dialogue module. The dialogue module must retrieve or generate matching answers from the knowledge base according to the user’s question. However, it is impossible to rely entirely on the model to generate answers in domain-specific multi-turn dialogue. In some scenarios, to better account for context, the preceding turns are aggregated to identify the user’s intent, and the answer is returned in QA form.

2.3. Talking-Head Generation

The facial appearance data of the talking-head generation module mainly comes from real-person photos, videos, or blendshape character model coefficients. Taking video as an example, the researchers first preprocess the facial appearance data, then map the audio signal from the TTS in Figure 1 to higher-dimensional signals such as lip shape, facial expression, and facial action, and finally use a neural network model to render the video and output multimodal video data.

In human–computer interaction, a timely response can improve user experience. However, the time delay of the whole system is equal to the sum of the time consumed by each data processing module. Among them, the voice module and the dialogue module are already widely used in commercial products and can meet the real-time requirements of human–computer interaction. At present, it takes a long time for the talking-head generation model to render and output multimodal video. Therefore, it is necessary to improve the data processing efficiency of the talking-head generation model, reduce the rendering time of the multimodal video, and thereby reduce the response time of the human–computer interaction system. Although virtual humans have achieved low-latency response in some commercial products such as JD’s ViDA-MAN [7], the long production cycle, high cost, and poor portability are also problems that cannot be ignored.

3. Talking-Head Generation

Talking-head video generation, i.e., lip motion sequence generation, aims to synthesize lip motion sequences corresponding to the driving source (a segment of audio or text). Beyond synthesizing the lip motion, the video synthesis of the talking head also needs to consider facial attributes, such as facial expressions and head movements. In the early talking-head video generation methods, researchers mainly used cross-modal retrieval [3] and HMM-based methods [8] to realize the dynamic mapping of driving sources to lip motion data. However, these methods have relatively high requirements on the application environment of the model, visual phoneme annotation, etc. Thies et al. [3] introduced an image-based lip-motion synthesis method, which generates a realistic oral cavity by retrieving and selecting the optimal lip shape from offline samples. However, because the method relies on retrieval over a text–morpheme–morpheme map, it does not truly take into account the contextual information of the speech. Zhang et al. [9] introduced key pose interpolation and smoothing modules to synthesize pose sequences based on cross-modal retrieval and used a GAN model to generate videos. Recently, the rapid development of deep learning technology has provided technical support for talking-head video generation and promoted the vigorous development of talking-head video generation methods. Table 1 summarizes the representative works on talking-head video generation.

- (1) 2D-based methods.

- (2) 3D-based methods. Most current methods reconstruct 3D models directly from training videos. NVP (neural voice puppetry) designed Audio2ExpressionNet and a 3D model that is independent of identity. NeRF (neural radiance fields) [33,34,35,36], which models an implicit representation with an MLP, can store 3D spatial coordinates and appearance information and can be used for high-resolution scenes. To reduce information loss, AD-NeRF [29] trains two NeRFs (one for the head and one for the torso) to drive the rendering of talking-head synthesis and obtains good visual effects. In practical application scenarios, many models need to accept arbitrary identities and speech as input. Prajwal et al. [28,37] take any unidentified video and arbitrary speech as input to synthesize unrestricted talking-head video.

Table 1. The main models of talking-head generation in recent years. ID: the model can be one of three types: identity-dependent (D), identity-independent (I), and hybrid (H). Driving data: audio (A), text (T), and video (V).

| Key Idea | Driving Factor | ID (D/I/H) | 2D/3D Model |
|---|---|---|---|
| Audio-to-mouth editing to video | A | D | 3D |
| From audio and emotion-state to 3D vertices | A | D | 3D |
| Text-to-audio-to-mouth key-points to video | T | D | 2D |
| Joint embedding of audio and identity features | A | I | 2D |
| DVP: parameter replacement and facial reenactment with cGAN to video | V | I | 3D |
| Audio-driven GAN | A | I | 2D |
| Joint embedding of person-id and word-id features | V or A | I | 2D |
| From audio to facial landmarks to video synthesis | A | I | 2D |
| From text or audio feature to facial landmarks to video synthesis | A and T | I | 2D |
| VOCA: from audio to FLAME head model with facial motions | A | I | 3D |
| 3D reconstruction and parameter recombination | T | D | 3D |
| Audio-driven landmark prediction | A | I | 2D |
| Wav2Lip: audio-driven, based GAN lip-sync discriminator | A | I | 2D |
| NVP: from the fusion of audio expression feature extraction and intermediate 3D model to video | A | H | 3D |
| AD-NeRF: Audio-to-video generation via two individual neural radiance fields | A | D | 3D |
| TE: text-driven to video generation combine phoneme alignment, viseme search and parameter blending | T | D | 3D |
| FaceFormer: Audio-to-3D Mesh to video | A | I | 3D |
| A unified framework for visual-to-speech recognition and audio-to-video synthesis | A | I | 3D |
| Text2Video: GAN+phoneme-pose dictionary | T | I | 3D |
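The three axes used in Table 1 (driving data, identity type, and 2D/3D model) lend themselves to a small record type that can be filtered when choosing a method for a given application. The sketch below is illustrative only: `Method`, `METHODS`, and `select` are hypothetical names, and only a handful of rows from Table 1 are encoded.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Method:
    key_idea: str
    driving: str   # "A" audio, "T" text, "V" video, written as in Table 1
    identity: str  # "D" dependent, "I" independent, "H" hybrid
    model: str     # "2D" or "3D"


# A few rows copied from Table 1 (not the full table).
METHODS = [
    Method("Wav2Lip: audio-driven, based GAN lip-sync discriminator", "A", "I", "2D"),
    Method("VOCA: from audio to FLAME head model with facial motions", "A", "I", "3D"),
    Method("AD-NeRF: Audio-to-video generation via two individual neural radiance fields", "A", "D", "3D"),
    Method("NVP: from the fusion of audio expression feature extraction and intermediate 3D model to video", "A", "H", "3D"),
    Method("Text2Video: GAN+phoneme-pose dictionary", "T", "I", "3D"),
    Method("DVP: parameter replacement and facial reenactment with cGAN to video", "V", "I", "3D"),
]


def select(
    methods: List[Method],
    driving: Optional[str] = None,
    identity: Optional[str] = None,
    model: Optional[str] = None,
) -> List[Method]:
    """Return the methods that match every criterion that is not None."""
    return [
        m for m in methods
        if (driving is None or driving in m.driving)
        and (identity is None or m.identity == identity)
        and (model is None or m.model == model)
    ]


# Audio-driven, identity-independent, 3D methods among the rows encoded above.
audio_generic_3d = select(METHODS, driving="A", identity="I", model="3D")
for m in audio_generic_3d:
    print(m.key_idea)
```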

4. Datasets and Evaluation Metrics

4.1. Datasets

In the era of artificial intelligence, datasets are an important part of deep learning models. At the same time, datasets also promote the solution of complex problems in the field of virtual human synthesis. However, in practical applications, high-quality annotated datasets are scarce and cannot meet the training needs of the speech synthesis model. Moreover, many institutions and researchers are affected by the ethical issues of deepfake technology, which increases the difficulty of obtaining some datasets. For example, for some datasets only researchers, teachers, and engineers from universities, research institutions, and enterprises are allowed to apply, and students are prohibited from applying. In Table 2, the researchers briefly highlight the datasets commonly used by most researchers, including statistics and download links.

The GRID [38] dataset was recorded in a laboratory setting with 34 volunteers, which is relatively few for a large dataset, but each volunteer spoke 1000 phrases for a total of 34,000 utterance instances. The phrase composition of the dataset follows fixed rules: each phrase contains six words, one randomly selected from each of six word categories. The six categories are “command”, “color”, “preposition”, “letter”, “number”, and “adverb”. The dataset is available at https://spandh.dcs.shef.ac.uk//gridcorpus/ (accessed on 30 December 2022).

LRW [39], known for various speaking styles and head poses, is an English-speaking video dataset collected from BBC programs with over 1000 speakers. The vocabulary size is 500 words, and each video is 1.16 s long (29 frames), containing the target word and its context. The dataset is available at https://www.robots.ox.ac.uk/~vgg/data/lip_reading/lrw1.html (accessed on 30 December 2022).

LRW-1000 [40] is a Mandarin video dataset collected from over 2000 subjects. The purpose of the dataset is to cover the natural variation of different speech modalities and imaging conditions to incorporate the challenges encountered in real-world applications. There are large variations in the number of samples in each category, video resolution, lighting conditions, and attributes such as speaker pose, age, gender, and makeup. Note: the official URL (http://vipl.ict.ac.cn/en/view_database.php?id=13, accessed on 30 December 2022) is no longer available. Details about the data are on the paper page, and the agreement file can be downloaded from https://github.com/VIPL-Audio-Visual-Speech-Understanding/AVSU-VIPL (accessed on 30 December 2022) if you plan to use this dataset for your research.

ObamaSet [15] is a specific audio-visual dataset that focuses on analyzing the visual speech of former US President Barack Obama. All video samples are collected from his weekly address footage. Unlike previous datasets, it only focuses on Barack Obama and does not provide any human annotations. The dataset is available at https://github.com/supasorn/synthesizing_obama_network_training (accessed on 30 December 2022).

VoxCeleb2 [41] is extracted from YouTube videos and includes the video URLs and discourse timestamps. It is currently the largest public audio-visual dataset. Although this dataset is intended for speaker recognition tasks, it can also be used to train a talking-head generation model. However, users must apply for download permission, which is required to prevent misuse of the dataset. The URL for the permit application is https://www.robots.ox.ac.uk/~vgg/data/voxceleb/ (accessed on 30 December 2022). Because the dataset is huge, it requires more than 300 GB of storage space and supporting download tools. The download method is available at https://github.com/walkoncross/voxceleb2-download (accessed on 30 December 2022).
VOCASET [18] is a 4D-face dataset with approximately 29 min of 4D face scans recorded at 60 fps and synchronized audio from 12 speakers (six women and six men). As a representative high-quality 4D face audio-visual dataset, VOCASET greatly facilitates the research of 3D VSG. The dataset is available at https://voca.is.tue.mpg.de/ (accessed on 30 December 2022).

MEAD [42], the Multi-View Emotional Audio-Visual Dataset, is a large-scale, high-quality emotional audio-video dataset. Unlike previous datasets, it focuses on facial generation for natural emotional speech and takes into account multiple emotional states (eight different emotions on three intensity levels). The dataset is available at https://wywu.github.io/projects/MEAD/MEAD.html (accessed on 30 December 2022).

HDTF [43], the High-definition Talking-Face Dataset, is a large in-the-wild high-resolution audio-visual dataset. It consists of approximately 362 different videos totaling 15.8 h. The resolution of the original video is 720 P or 1080 P. Each cropped video is resized to 512 × 512. The dataset is available at https://github.com/MRzzm/HDTF (accessed on 30 December 2022).

Table 2. Summary of talking-head video datasets.

| Dataset Name | Year | Hours | Image Size, FPS | Speaker | Sentence | Head Movement | Environment |
|---|---|---|---|---|---|---|---|
| GRID | 2006 | 27.5 | 720 × 576, 25 | 33 | 33 k | N | Lab |
| LRW | 2017 | 173 | 256 × 256, 25 | 1 k+ | 539 k | N | TV |
| ObamaSet | 2017 | 14 | N/A | 1 | N/A | Y | TV |
| VoxCeleb2 | 2018 | 2.4 k | N/A, 25 | 6.1 k+ | 153.5 k | Y | TV |
| LRW-1000 | 2019 | 57 | N/A | 2 k+ | 718 k | Y | TV |
| VOCASET | 2019 | N/A | 5023 vertices, 60 | 12 | 255 | Y | Lab |
| MEAD | 2020 | 39 | 1920 × 1080, 30 | 60 | 20 | Y | Lab |
| HDTF | 2021 | 15.8 | N/A | 362 | 10 k | Y | TV |
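Several of the dataset figures quoted in this section can be cross-checked with simple arithmetic, which is useful when planning storage or training time. The sketch below uses only numbers stated in the text and in Table 2. The helper names `clip_seconds` and `total_hours` are hypothetical, and treating the 539 k LRW entries as clips of 29 frames each is an assumption made for the check.

```python
def clip_seconds(frames: int, fps: float) -> float:
    """Duration of a clip with the given frame count and frame rate."""
    return frames / fps


def total_hours(num_clips: int, seconds_per_clip: float) -> float:
    """Total duration in hours of num_clips clips of equal length."""
    return num_clips * seconds_per_clip / 3600.0


# LRW: 29 frames at 25 fps gives the 1.16 s clip length quoted in the text.
lrw_clip = clip_seconds(frames=29, fps=25)

# LRW: assuming 539 k clips of that length, the corpus is roughly 173 hours,
# in line with the Hours column of Table 2.
lrw_hours = total_hours(539_000, lrw_clip)

# GRID: 34 volunteers x 1000 phrases each.
grid_utterances = 34 * 1000

# HDTF: 15.8 hours spread over about 362 videos, average length in minutes.
hdtf_avg_minutes = 15.8 * 60 / 362

print(f"LRW clip length: {lrw_clip:.2f} s")
print(f"LRW total: {lrw_hours:.1f} h")
print(f"GRID utterances: {grid_utterances}")
print(f"HDTF average video length: {hdtf_avg_minutes:.2f} min")
```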

4.2. Evaluation Metrics

The evaluation of talking-head video generation is an open problem that requires assessing the generated results from both objective and subjective aspects. Chen et al. [44] reviewed several state-of-the-art talking-head generation methods and designed a unified benchmark based on their properties. Subjective evaluation is often used to compare the visual quality and sensory effects of the generated content, such as whether the lip movements are in sync with the audio. Due to the high cost of subjective evaluation, many researchers have attempted to quantitatively evaluate generated content using objective metrics [28,31,32,45]. Based on quantitative evaluations of model performance, these metrics can be classified into three types: visual quality, audio-visual semantic consistency, and real-time performance.

Visual quality. Reconstruction error measures (e.g., mean squared error) are a natural way to evaluate the quality of generated video frames. However, the reconstruction error only focuses on pixel alignment and ignores the global visual quality. Therefore, existing works typically employ the peak signal-to-noise ratio (PSNR), structural similarity index metric (SSIM) [32,46], and learned perceptual image patch similarity (LPIPS) [47] to evaluate the global visual quality of generated images. Since metrics such as PSNR and SSIM cannot explain human perception well, and LPIPS is closer to human perception in visual similarity judgment, it is recommended to use LPIPS to evaluate the visual quality of generated content quantitatively. More recently, Prajwal et al. [28] introduced the Fréchet inception distance (FID) [48] to measure the distance between synthetic and real data distributions, as FID is more consistent with human perception assessments.

Audio-visual semantic consistency. The semantic consistency of the generated video and the driving source mainly includes audio-visual synchronization and speech consistency. For audio-visual synchronization, the landmark distance (LMD) [23] computes the Euclidean distance of lip region landmarks between the synthetic video frame and the ground truth frame. Another synchronization evaluation metric uses SyncNet [49] to predict the offset between generated frames and the ground truth. For phonetic coherence, Chen et al. [47] proposed a synchronization evaluation metric, the Lip-Reading Similarity Distance (LRSD), which can evaluate semantically synchronized lip movements.

Real-time performance. The length of time to generate the talking-head video is an important indicator for existing models. In the practical application of human–computer interaction, people are very sensitive to factors such as waiting time and video quality. Therefore, the model should generate the video as quickly as possible without sacrificing visual quality or the coherence of audio-visual semantics. NVIDIA [17] uses a deep neural network to map low-dimensional speech waveform data to high-dimensional facial 3D vertex coordinates and uses traditional motion capture technology to obtain high-quality video animation data to train the model.

Delayed Talking-Head Synthesis Model. Ye et al. [50] presented a novel, fully convolutional network with dynamic convolution kernels (DCKs) for the multimodal task of audio-driven talking-face video generation. Due to the simple yet effective network architecture and the video pre-processing, there is a significant improvement in the real-time performance of talking-head generation. Lu et al. [51] present a live system that generates personalized photorealistic talking-head animation driven only by audio signals at over 30 fps. However, many studies ignore real-time performance, and it should be considered a critical evaluation metric in the future.

Human–computer interaction is a direction for the future development of virtual humans. Unlike one-way information output, a digital human needs to have multimodal information such as natural language, facial expression, and natural human-like gestures. Meanwhile, it also needs to be able to return high-quality video shortly after a given voice request [7,52]. However, in generating high-quality and low-latency digital human video, many researchers do not take real-time performance as an evaluation index of the model. Hence, many models generate videos too slowly to meet application requirements. For example, to generate 256 × 256 resolution facial video without background, ATVGnet [10] takes 1.07 s, You Said That [12] takes 14.13 s, X2Face [53] takes 15.10 s, DAVS [13] takes 17.05 s, and 1280 × 720 resolution video with background takes longer. Although it only takes 3.83 s for Wav2Lip [28] to synthesize a video with a background, the definition of the lower part of the face is lower than that of other areas [50]. Many studies have attempted to establish a new evaluation benchmark and proposed more than a dozen evaluation metrics for virtual human video generation. Therefore, the existing evaluation metrics for virtual human video generation are not uniform. In addition to objective indicators, there are also subjective indicators, such as user research.
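The three families of objective metrics discussed in this section (visual quality, audio-visual synchronization, and real-time performance) can each be illustrated with a few lines of code. The sketch below is a simplified, dependency-free illustration rather than any benchmark's reference implementation: images are plain nested lists of grayscale values, landmarks are (x, y) tuples, and the function names `mse`, `psnr`, `lmd`, and `frames_per_second` are chosen here for illustration. SSIM, LPIPS, FID, and SyncNet-based scores require dedicated models or libraries and are not covered.

```python
import math
import time
from typing import Callable, List, Sequence, Tuple

Image = Sequence[Sequence[float]]          # grayscale image as rows of pixel values
Landmarks = Sequence[Tuple[float, float]]  # (x, y) lip landmarks for one frame


def mse(a: Image, b: Image) -> float:
    """Mean squared error between two images of the same size."""
    total, count = 0.0, 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += (pa - pb) ** 2
            count += 1
    return total / count


def psnr(a: Image, b: Image, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(a, b)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)


def lmd(pred: Sequence[Landmarks], truth: Sequence[Landmarks]) -> float:
    """Landmark distance: mean Euclidean distance over all frames and landmarks."""
    dists = [
        math.dist(p, t)
        for frame_p, frame_t in zip(pred, truth)
        for p, t in zip(frame_p, frame_t)
    ]
    return sum(dists) / len(dists)


def frames_per_second(generate: Callable[[], List[object]]) -> float:
    """Throughput of a frame generator measured with a wall-clock timer."""
    start = time.perf_counter()
    frames = generate()
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else math.inf


# Toy inputs: a 2 x 2 image with one pixel off by 10, and two lip landmarks.
real = [[0, 0], [0, 0]]
fake = [[0, 0], [0, 10]]
print(f"MSE = {mse(real, fake):.1f}, PSNR = {psnr(real, fake):.2f} dB")
print(f"LMD = {lmd([[(0, 0), (3, 4)]], [[(0, 0), (0, 0)]]):.2f}")
fps = frames_per_second(lambda: [b"frame"] * 30)  # stand-in for a real generator
```

In a real evaluation, the timing helper would wrap the full talking-head generation call, so that the reported throughput can be compared against an interactive target such as the 30 fps figure mentioned above.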

References

- Garrido, P.; Valgaerts, L.; Sarmadi, H.; Steiner, I.; Varanasi, K.; Perez, P.; Theobalt, C. Vdub: Modifying face video of actors for plausible visual alignment to a dubbed audio track. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2015; Volume 34, pp. 193–204.

- Garrido, P.; Valgaerts, L.; Rehmsen, O.; Thormahlen, T.; Perez, P.; Theobalt, C. Automatic face reenactment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 23–28 June 2014; pp. 4217–4224.

- Thies, J.; Zollhofer, M.; Stamminger, M.; Theobalt, C.; Nießner, M. Face2face: Real-time face capture and reenactment of rgb videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2387–2395.

- Bregler, C.; Covell, M.; Slaney, M. Video rewrite: Driving visual speech with audio. In Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 3–8 August 1997; pp. 353–360.

- Xie, L.; Liu, Z.Q. Realistic mouth-synching for speech-driven talking face using articulatory modelling. IEEE Trans. Multimed. 2007, 9, 500–510.

- Zhang, H.; Yuan, T.; Chen, J.; Li, X.; Zheng, R.; Huang, Y.; Chen, X.; Gong, E.; Chen, Z.; Hu, X.; et al. PaddleSpeech: An Easy-to-Use All-in-One Speech Toolkit. arXiv 2022, arXiv:2205.12007.

- Shen, T.; Zuo, J.; Shi, F.; Zhang, J.; Jiang, L.; Chen, M.; Zhang, Z.; Zhang, W.; He, X.; Mei, T. ViDA-MAN: Visual Dialog with Digital Humans. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 2789–2791.

- Sheng, C.; Kuang, G.; Bai, L.; Hou, C.; Guo, Y.; Xu, X.; Pietikäinen, M.; Liu, L. Deep Learning for Visual Speech Analysis: A Survey. arXiv 2022, arXiv:2205.10839.

- Zhang, S.; Yuan, J.; Liao, M.; Zhang, L. Text2video: Text-Driven Talking-Head Video Synthesis with Personalized Phoneme-Pose Dictionary. In ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); IEEE: Manhattan, NY, USA, 2022; pp. 2659–2663.

- Chen, L.; Maddox, R.K.; Duan, Z.; Xu, C. Hierarchical cross-modal talking face generation with dynamic pixel-wise loss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7832–7841.

- Chung, J.S.; Jamaludin, A.; Zisserman, A. You said that? arXiv 2017, arXiv:1705.02966.

- Jamaludin, A.; Chung, J.S.; Zisserman, A. You said that? Synthesising talking faces from audio. Int. J. Comput. Vis. 2019, 127, 1767–1779.

- Zhou, H.; Liu, Y.; Liu, Z.; Luo, P.; Wang, X. Talking face generation by adversarially disentangled audio-visual representation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 8–12 October 2019; 33, pp. 9299–9306.

- Song, Y.; Zhu, J.; Li, D.; Wang, X.; Qi, H. Talking face generation by conditional recurrent adversarial network. arXiv 2018, arXiv:1804.04786.

- Suwajanakorn, S.; Seitz, S.M.; Kemelmacher-Shlizerman, I. Synthesizing obama: Learning lip sync from audio. ACM Trans. Graph. (ToG) 2017, 36, 1–13.

- Kumar, R.; Sotelo, J.; Kumar, K.; de Brébisson, A.; Bengio, Y. Obamanet: Photo-realistic lip-sync from text. arXiv 2017, arXiv:1801.01442.

- Karras, T.; Aila, T.; Laine, S.; Herva, A.; Lehtinen, J. Audio-driven facial animation by joint end-to-end learning of pose and emotion. ACM Trans. Graph. (ToG) 2017, 36, 1–12.

- Cudeiro, D.; Bolkart, T.; Laidlaw, C.; Ranjan, A.; Black, M.J. Capture, learning, and synthesis of 3D speaking styles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 10101–10111.

- Fried, O.; Tewari, A.; Zollhöfer, M.; Finkelstein, A.; Shechtman, E.; Goldman, D.B.; Genova, K.; Jin, Z.; Theobalt, C.; Agrawala, M. Text-based editing of talking-head video. ACM Trans. Graph. (ToG) 2019, 38, 1–14.

- Thies, J.; Elgharib, M.; Tewari, A.; Theobalt, C.; Nießner, M. Neural voice puppetry: Audio-driven facial reenactment. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 716–731.

- Li, T.; Bolkart, T.; Black, M.J.; Li, H.; Romero, J. Learning a model of facial shape and expression from 4D scans. ACM Trans. Graph. 2017, 36, 194-1.

- Richard, A.; Zollhöfer, M.; Wen, Y.; De la Torre, F.; Sheikh, Y. Meshtalk: 3d face animation from speech using cross-modality disentanglement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 1173–1182.

- Chen, L.; Li, Z.; Maddox, R.K.; Duan, Z.; Xu, C. Lip movements generation at a glance. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 520–535.

- Kim, H.; Garrido, P.; Tewari, A.; Xu, W.; Thies, J.; Niessner, M.; Pérez, P.; Richardt, C.; Zollhöfer, M.; Theobalt, C. Deep video portraits. ACM Trans. Graph. (ToG) 2018, 37(4), 1–14.

- Vougioukas, K.; Petridis, S.; Pantic, M. End-to-end speech-driven facial animation with temporal gans. arXiv 2018, arXiv:1805.09313.

- Yu, L.; Yu, J.; Ling, Q. Mining audio, text and visual information for talking face generation. In Proceedings of the 2019 IEEE International Conference on Data Mining (ICDM), Beijing, China, 8–11 November 2019; IEEE: Manhattan, NY, USA, 2019; pp. 787–795.

- Zhou, Y.; Han, X.; Shechtman, E.; Echevarria, J.; Kalogerakis, E.; Li, D. MakeItTalk: Speaker-aware talking-head animation. ACM Trans. Graph. (ToG) 2020, 39, 1–15.

- Prajwal, K.R.; Mukhopadhyay, R.; Namboodiri, V.P.; Jawahar, C.V. A lip sync expert is all you need for speech to lip generation in the wild. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 484–492.

- Guo, Y.; Chen, K.; Liang, S.; Liu, Y.J.; Bao, H.; Zhang, J. Ad-nerf: Audio driven neural radiance fields for talking head synthesis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021.

- Li, L.; Wang, S.; Zhang, Z.; Ding, Y.; Zheng, Y.; Yu, X.; Fan, C. Write-a-speaker: Text-based emotional and rhythmic talking-head generation. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, Canada, 2–8 February 2021; pp. 1911–1920.

- Fan, Y.; Lin, Z.; Saito, J.; Wang, W.; Komura, T. FaceFormer: Speech-Driven 3D Facial Animation with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 18770–18780.

- Yang, C.C.; Fan, W.C.; Yang, C.F.; Wang, Y.C.F. Cross-Modal Mutual Learning for Audio-Visual Speech Recognition and Manipulation. In Proceedings of the 36th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 22 February–1 March 2022; Volume 22.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. Nerf: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

- Garbin, S.J.; Kowalski, M.; Johnson, M.; Shotton, J.; Valentin, J. Fastnerf: High-fidelity neural rendering at 200fps. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 14346–14355.

- Müller, T.; Evans, A.; Schied, C.; Keller, A. Instant neural graphics primitives with a multiresolution hash encoding. arXiv 2022, arXiv:2201.05989.

- Li, R.; Tancik, M.; Kanazawa, A. NerfAcc: A General NeRF Acceleration Toolbox. arXiv 2022, arXiv:2210.04847.

- KR, P.; Mukhopadhyay, R.; Philip, J.; Jha, A.; Namboodiri, V.; Jawahar, C.V. Towards automatic face-to-face translation. In Proceedings of the 27th ACM international conference on multimedia, Nice, France, 21–25 October 2019; pp. 1428–1436.

- Cooke, M.; Barker, J.; Cunningham, S.; Shao, X. An audio-visual corpus for speech perception and automatic speech recognition. J. Acoust. Soc. Am. 2006, 120, 2421–2424.

- Chung, J.S.; Zisserman, A. Lip reading in the wild. In Asian Conference on Computer Vision; Springer: Cham, Switzerland, 2017; pp. 87–103.

- Yang, S.; Zhang, Y.; Feng, D.; Yang, M.; Wang, C.; Xiao, J.; Long, K.; Shan, S.; Chen, X. LRW-1000: A naturally-distributed large-scale benchmark for lip reading in the wild. In 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019); IEEE: Manhattan, NY, USA, 2019; pp. 1–8.

- Chung, J.S.; Nagrani, A.; Zisserman, A. Voxceleb2: Deep speaker recognition. arXiv 2018, arXiv:1806.05622.

- Wang, K.; Wu, Q.; Song, L.; Yang, Z.; Wu, W.; Qian, C.; He, R.; Qiao, Y.; Loy, C.C. Mead: A large-scale audio-visual dataset for emotional talking-face generation. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 700–717.

- Zhang, Z.; Li, L.; Ding, Y.; Fan, C. Flow-guided one-shot talking face generation with a high-resolution audio-visual dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3661–3670.

- Chen, L.; Cui, G.; Kou, Z.; Zheng, H.; Xu, C. What comprises a good talking-head video generation? A survey and benchmark. arXiv 2020, arXiv:2005.03201.

- Ji, X.; Zhou, H.; Wang, K.; Wu, W.; Loy, C.C.; Cao, X.; Xu, F. Audio-driven emotional video portraits. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14080–14089.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. Gans trained by a two time-scale update rule converge to a local nash equilibrium. Adv. Neural Inf. Process. Syst. 2017, 30, 6626–6637.

- Chung, J.S.; Zisserman, A. Out of time: Automated lip sync in the wild. In Asian Conference on Computer Vision; Springer: Cham, Switzerland, 2017; pp. 251–263.

- Ye, Z.; Xia, M.; Yi, R.; Zhang, J.; Lai, Y.K.; Huang, X.; Zhang, G.; Liu, Y.J. Audio-driven talking face video generation with dynamic convolution kernels. IEEE Trans. Multimed. 2022.

- Lu, Y.; Chai, J.; Cao, X. Live speech portraits: Real-time photorealistic talking-head animation. ACM Trans. Graph. (TOG) 2021, 40, 1–17.

- Zhen, R.; Song, W.; Cao, J. Research on the Application of Virtual Human Synthesis Technology in Human-Computer Interaction. In 2022 IEEE/ACIS 22nd International Conference on Computer and Information Science (ICIS); IEEE: Manhattan, NY, USA, 2022; pp. 199–204.

- Wiles, O.; Koepke, A.; Zisserman, A. X2face: A network for controlling face generation using images, audio, and pose codes. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–17 September 2018; pp. 670–686.