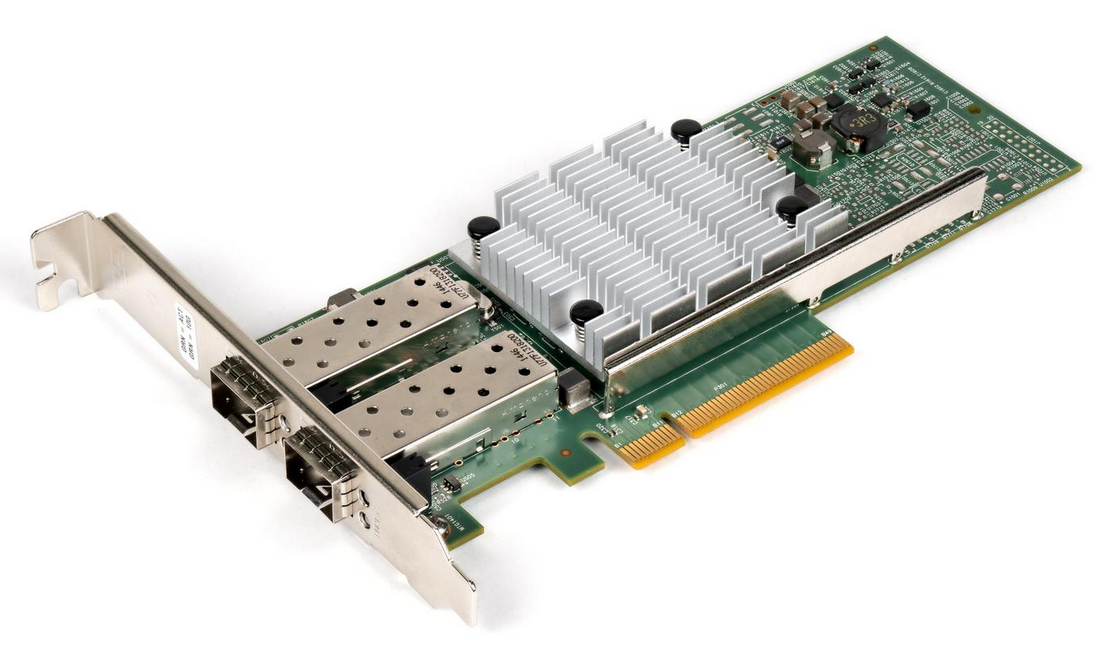

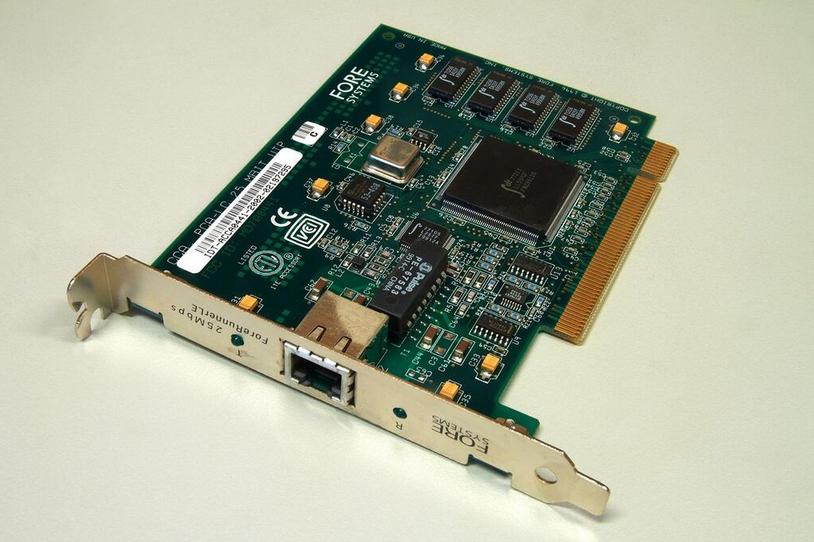

A network interface controller (NIC, also known as a network interface card, network adapter, LAN adapter or physical network interface, and by similar terms) is a computer hardware component that connects a computer to a computer network. Early network interface controllers were commonly implemented on expansion cards that plugged into a computer bus. The low cost and ubiquity of the Ethernet standard mean that most newer computers have a network interface built into the motherboard or contained in a USB-connected dongle. Modern network interface controllers offer advanced features such as interrupt and DMA interfaces to the host processors, support for multiple receive and transmit queues, partitioning into multiple logical interfaces, and on-controller network traffic processing such as the TCP offload engine.

1. Purpose

The network controller implements the electronic circuitry required to communicate using a specific physical layer and data link layer standard such as Ethernet or Wi-Fi.[1] This provides a base for a full network protocol stack, allowing communication among computers on the same local area network (LAN) and large-scale network communications through routable protocols, such as Internet Protocol (IP).

The NIC allows computers to communicate over a computer network, either by using cables or wirelessly. The NIC is both a physical layer and data link layer device, as it provides physical access to a networking medium and, for IEEE 802 and similar networks, provides a low-level addressing system through the use of MAC addresses that are uniquely assigned to network interfaces.
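As a rough illustration of the addressing described above, the following Python sketch parses a MAC address and reads the two flag bits carried in its first octet. The function names `parse_mac` and `describe_mac` are hypothetical and chosen for this example; the bit positions follow the usual IEEE 802 convention, in which the least significant bit of the first octet marks a group (multicast) address and the next bit marks a locally administered address.

```python
def parse_mac(text: str) -> bytes:
    """Parse 'aa:bb:cc:dd:ee:ff' or 'aa-bb-cc-dd-ee-ff' into 6 raw bytes."""
    parts = text.replace("-", ":").split(":")
    if len(parts) != 6:
        raise ValueError(f"expected 6 octets, got {len(parts)}")
    # bytes() rejects values outside 0..255, so malformed octets raise ValueError.
    return bytes(int(part, 16) for part in parts)


def describe_mac(text: str) -> dict:
    """Report the properties encoded in a MAC address (illustrative helper)."""
    mac = parse_mac(text)
    first_octet = mac[0]
    return {
        # First three octets: the organizationally unique identifier (OUI).
        "oui": mac[:3].hex(":"),
        # Bit 0 of the first octet: 1 = group (multicast or broadcast) address.
        "multicast": bool(first_octet & 0x01),
        # Bit 1 of the first octet: 1 = locally administered, 0 = globally unique.
        "locally_administered": bool(first_octet & 0x02),
        "broadcast": mac == b"\xff" * 6,
    }


if __name__ == "__main__":
    for address in ("00:1b:21:3c:4d:5e", "02:00:00:00:00:01", "ff:ff:ff:ff:ff:ff"):
        print(address, describe_mac(address))
```

The example addresses are arbitrary values picked for the demonstration, not addresses taken from any particular device.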

2. Implementation

Network controllers were originally implemented as expansion cards that plugged into a computer bus. The low cost and ubiquity of the Ethernet standard mean that most new computers have a network interface controller built into the motherboard. Newer server motherboards may have multiple network interfaces built in. The Ethernet capabilities are either integrated into the motherboard chipset or implemented via a low-cost dedicated Ethernet chip. A separate network card is typically no longer required unless additional independent network connections are needed or some non-Ethernet type of network is used. A general trend in computer hardware is towards integrating the various components of a system on a single chip, and this trend also applies to network interface controllers.

An Ethernet network controller typically has an 8P8C socket where the network cable is connected. Older NICs also supplied BNC or AUI connections. Ethernet network controllers typically support 10 Mbit/s Ethernet, 100 Mbit/s Ethernet, and 1000 Mbit/s Ethernet varieties. Such controllers are designated as 10/100/1000, meaning that they can support data rates of 10, 100 or 1000 Mbit/s. 10 Gigabit Ethernet NICs are also available and, as of November 2014, were beginning to appear on computer motherboards.[2][3]
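To give a sense of what these data rates mean for the host, the sketch below estimates how many frames per second a controller may have to handle at each speed. It assumes standard Ethernet framing figures that are not stated in this article: 8 bytes of preamble and start-of-frame delimiter plus a 12-byte interframe gap per frame, a 64-byte minimum frame and a 1518-byte maximum untagged frame. The function name `frames_per_second` is hypothetical.

```python
# Per-frame overhead on the wire that is not part of the frame itself
# (assumed standard Ethernet values, not taken from the article).
PREAMBLE_AND_SFD_BYTES = 8
INTERFRAME_GAP_BYTES = 12


def frames_per_second(rate_mbit: int, frame_bytes: int) -> float:
    """Upper bound on frames per second at a given line rate and frame size."""
    wire_bits = (frame_bytes + PREAMBLE_AND_SFD_BYTES + INTERFRAME_GAP_BYTES) * 8
    return rate_mbit * 1_000_000 / wire_bits


if __name__ == "__main__":
    for rate in (10, 100, 1000):
        smallest = frames_per_second(rate, 64)    # minimum-size frames
        largest = frames_per_second(rate, 1518)   # maximum-size untagged frames
        print(f"{rate:>4} Mbit/s: {smallest:>12,.0f} fps (64 B), "
              f"{largest:>10,.0f} fps (1518 B)")
```

At 1000 Mbit/s with minimum-size frames this works out to roughly 1.49 million frames per second, which is one reason the way a NIC signals and transfers packets matters for host load.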

Modular designs like SFP and SFP+ are highly popular, especially for fiber-optic communication. These define a standard receptacle for media-dependent transceivers, so users can easily adapt the network interface to their needs.

LEDs adjacent to or integrated into the network connector inform the user of whether the network is connected, and when data activity occurs.

The NIC may use one or more of the following techniques to indicate the availability of packets to transfer:

- Polling is where the CPU examines the status of the peripheral under program control.

- Interrupt-driven I/O is where the peripheral alerts the CPU that it is ready to transfer data.
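The difference between the two signalling techniques can be sketched with a toy model in Python. Everything here is hypothetical (the `ToyNic` class, its `on_packet` hook and the `poll_once` function); it models only who takes the initiative, not real hardware or any driver API.

```python
from collections import deque


class ToyNic:
    """A toy stand-in for a NIC with a small receive queue."""

    def __init__(self):
        self.rx = deque()
        self.on_packet = None  # interrupt handler, if one is registered

    def rx_ready(self) -> bool:
        """Status check that a polling CPU would perform."""
        return bool(self.rx)

    def wire_receive(self, packet: bytes) -> None:
        """A packet arrives from the network."""
        self.rx.append(packet)
        if self.on_packet is not None:
            self.on_packet()  # "raise the interrupt": the device alerts the CPU


def poll_once(nic: ToyNic, handled: list) -> None:
    """Polling: the CPU checks the status itself and drains what is ready."""
    while nic.rx_ready():
        handled.append(nic.rx.popleft())


if __name__ == "__main__":
    # Polling: the packet waits in the NIC until the CPU next looks.
    polled_nic, polled = ToyNic(), []
    polled_nic.wire_receive(b"first")
    print("before poll:", polled)
    poll_once(polled_nic, polled)
    print("after poll: ", polled)

    # Interrupt-driven: the handler runs as soon as the packet arrives.
    irq_nic, interrupted = ToyNic(), []
    irq_nic.on_packet = lambda: interrupted.append(irq_nic.rx.popleft())
    irq_nic.wire_receive(b"second")
    print("via interrupt:", interrupted)
```

In the polling case the CPU spends time checking even when nothing has arrived; in the interrupt-driven case it is disturbed once per notification, which can itself become costly at high packet rates.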

NICs may use one or more of the following techniques to transfer packet data:

- Programmed input/output, where the CPU itself moves the data between the NIC and memory.

- Direct memory access (DMA), where a device other than the CPU assumes control of the system bus to move data between the NIC and memory. This removes load from the CPU but requires more logic on the card. In addition, a packet buffer on the NIC may not be required and latency can be reduced.
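One common way to organize DMA transfers is a ring of descriptors in host memory, each pointing at a buffer the device may fill. The Python sketch below models that idea under stated assumptions: the class names `RxDescriptor` and `RxRing`, the 2048-byte buffer size and the `done` flag are all hypothetical, and the "device" is just a method call rather than real hardware.

```python
from dataclasses import dataclass, field

BUF_SIZE = 2048  # assumed per-descriptor buffer size, chosen for illustration


@dataclass
class RxDescriptor:
    buffer: bytearray = field(default_factory=lambda: bytearray(BUF_SIZE))
    length: int = 0
    done: bool = False  # set by the device once data is in `buffer`


class RxRing:
    """Toy receive ring shared between a 'device' and a 'driver'."""

    def __init__(self, size: int = 4):
        self.slots = [RxDescriptor() for _ in range(size)]
        self.head = 0  # next slot the device will fill
        self.tail = 0  # next slot the driver will read

    def device_write(self, packet: bytes) -> bool:
        """Device side: place a packet in host memory without CPU copying."""
        slot = self.slots[self.head]
        if slot.done or len(packet) > BUF_SIZE:
            return False  # ring full or packet too large: the packet is dropped
        slot.buffer[:len(packet)] = packet
        slot.length = len(packet)
        slot.done = True
        self.head = (self.head + 1) % len(self.slots)
        return True

    def driver_reap(self) -> list:
        """Driver side: collect completed packets and hand the slots back."""
        packets = []
        while self.slots[self.tail].done:
            slot = self.slots[self.tail]
            packets.append(bytes(slot.buffer[:slot.length]))
            slot.done = False
            self.tail = (self.tail + 1) % len(self.slots)
        return packets


if __name__ == "__main__":
    ring = RxRing(size=2)
    print(ring.device_write(b"one"), ring.device_write(b"two"))
    print(ring.device_write(b"three"))  # False: ring is full until reaped
    print(ring.driver_reap())
```

With programmed input/output the CPU would instead execute the copy loop itself for every packet, which is the load that DMA removes.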

3. Performance and Advanced Functionality

Multiqueue NICs provide multiple transmit and receive queues, allowing packets received by the NIC to be assigned to one of its receive queues. The NIC may distribute incoming traffic between the receive queues using a hash function. Each receive queue is assigned to a separate interrupt; by routing each of those interrupts to different CPUs or CPU cores, processing of the interrupt requests triggered by the network traffic received by a single NIC can be distributed, improving performance.[5][6]
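The hash-based distribution described above can be sketched as follows. This is a minimal model, not any vendor's implementation: real controllers often use a Toeplitz hash, whereas this sketch substitutes `zlib.crc32` as a stand-in, and the 128-entry indirection table, the queue count and the function names are assumptions made for the example.

```python
import socket
import struct
import zlib

NUM_QUEUES = 4  # assumed number of receive queues
# Indirection table: hash bucket -> receive queue (filled round-robin here).
INDIRECTION = [bucket % NUM_QUEUES for bucket in range(128)]


def flow_key(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> bytes:
    """Pack the IPv4 addresses and ports that identify a flow."""
    return (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
            + struct.pack("!HH", src_port, dst_port))


def rx_queue_for(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> int:
    """Pick a receive queue; CRC32 stands in for the hardware hash."""
    flow_hash = zlib.crc32(flow_key(src_ip, src_port, dst_ip, dst_port))
    return INDIRECTION[flow_hash % len(INDIRECTION)]


if __name__ == "__main__":
    for port in range(40000, 40008):
        queue = rx_queue_for("192.0.2.10", port, "192.0.2.20", 80)
        print(f"client port {port} -> rx queue {queue}")
```

Because the queue depends only on the flow's addresses and ports, all packets of one flow land in the same queue and are handled in order, while different flows spread across queues and therefore across CPUs.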

The hardware-based distribution of the interrupts, described above, is referred to as receive-side scaling (RSS).[7]:82 Purely software implementations also exist, such as receive packet steering (RPS) and receive flow steering (RFS).[5] Further performance improvements can be achieved by routing the interrupt requests to the CPUs or cores executing the applications that are the ultimate destinations for the network packets that generated the interrupts. This technique improves locality of reference and results in higher overall performance, reduced latency and better hardware utilization because of the higher utilization of CPU caches and fewer required context switches. Examples of such implementations are RFS[5] and Intel Flow Director.[7]:98,99[8][9][10]
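The idea of steering packets toward the CPU that runs the consuming application can be modelled loosely as a lookup table keyed by flow hash. The sketch below is an illustration under assumptions, not the Linux RFS or Intel Flow Director implementation: the names `flow_table`, `note_app_cpu` and `steer` are hypothetical, and `zlib.crc32` again stands in for whatever hash a real system uses.

```python
import zlib

NUM_CPUS = 4     # assumed number of CPUs
flow_table = {}  # flow hash -> CPU where the consuming application last ran


def flow_hash(flow: tuple) -> int:
    """Hash a (src_ip, src_port, dst_ip, dst_port) tuple (stand-in hash)."""
    return zlib.crc32(repr(flow).encode())


def note_app_cpu(flow: tuple, cpu: int) -> None:
    """Record that the application handled this flow while running on `cpu`."""
    flow_table[flow_hash(flow)] = cpu


def steer(flow: tuple) -> int:
    """Prefer the application's CPU; otherwise fall back to plain hashing."""
    key = flow_hash(flow)
    return flow_table.get(key, key % NUM_CPUS)


if __name__ == "__main__":
    flow = ("192.0.2.10", 40000, "192.0.2.20", 80)
    print("before the application is seen:", steer(flow))
    note_app_cpu(flow, 3)
    print("after the application ran on CPU 3:", steer(flow))
```

Handling the packet on the CPU that will consume it keeps the packet data in that CPU's caches, which is the locality benefit the paragraph above describes.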

With multi-queue NICs, additional performance improvements can be achieved by distributing outgoing traffic among different transmit queues. By assigning different transmit queues to different CPUs or CPU cores, internal operating system contentions can be avoided. This approach is usually referred to as transmit packet steering (XPS).[5]
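On Linux, the mapping from CPUs to transmit queues is expressed as a hexadecimal CPU bitmap written to `/sys/class/net/<dev>/queues/tx-<n>/xps_cpus`, as described in the kernel's scaling documentation.[5] The sketch below only computes such bitmaps and does not write to sysfs; the helper names `cpu_mask` and `xps_plan`, the round-robin assignment policy and the device name `eth0` are assumptions for illustration, and the plain hexadecimal format shown is intended for systems with at most 32 CPUs.

```python
def cpu_mask(cpus) -> str:
    """Hex bitmap with bit n set for CPU n (intended for up to 32 CPUs)."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return format(mask, "x")


def xps_plan(num_cpus: int, num_queues: int) -> dict:
    """Assign CPUs to transmit queues round-robin; return queue -> hex mask."""
    assignment = {queue: [] for queue in range(num_queues)}
    for cpu in range(num_cpus):
        assignment[cpu % num_queues].append(cpu)
    return {queue: cpu_mask(cpus) for queue, cpus in assignment.items()}


if __name__ == "__main__":
    device = "eth0"  # hypothetical interface name
    for queue, mask in xps_plan(num_cpus=8, num_queues=4).items():
        # Shown for reference only; nothing is written to the system here.
        print(f"/sys/class/net/{device}/queues/tx-{queue}/xps_cpus <- {mask}")
```

Giving each CPU its own transmit queue, or sharing a queue among only a few CPUs, reduces the chance that several CPUs compete for the same queue lock.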

Some products feature NIC partitioning (NPAR, also known as port partitioning) that uses SR-IOV virtualization to divide a single 10 Gigabit Ethernet NIC into multiple discrete virtual NICs with dedicated bandwidth, which are presented to the firmware and operating system as separate PCI device functions.[11][12]

TCP offload engine is a technology used in some NICs to offload processing of the entire TCP/IP stack to the network controller. It is primarily used with high-speed network interfaces, such as Gigabit Ethernet and 10 Gigabit Ethernet, for which the processing overhead of the network stack becomes significant.[13]

Some NICs offer integrated field-programmable gate arrays (FPGAs) for user-programmable processing of network traffic before it reaches the host computer, allowing for significantly reduced latencies in time-sensitive workloads.[14] Moreover, some NICs offer complete low-latency TCP/IP stacks running on integrated FPGAs in combination with userspace libraries that intercept networking operations usually performed by the operating system kernel; Solarflare's open-source OpenOnload network stack that runs on Linux is an example. This kind of functionality is usually referred to as user-level networking.[15][16][17]

References

- [1] Although other network technologies exist, Ethernet (IEEE 802.3) and Wi-Fi (IEEE 802.11) have achieved near-ubiquity as LAN technologies since the mid-1990s.

- [2] Jim O'Reilly (2014-01-22). "Will 2014 Be The Year Of 10 Gigabit Ethernet?". Network Computing. http://www.networkcomputing.com/networking/will-2014-be-the--year-of-10-gigabit-ethernet/a/d-id/1234640?.

- [3] "Breaking Speed Limits with ASRock X99 WS-E/10G and Intel 10G BASE-T LANs". 24 November 2014. http://www.asrock.com/news/index.asp?id=2517.

- [4] "Intel 82574 Gigabit Ethernet Controller Family Datasheet". Intel. June 2014. p. 1. http://www.intel.com/content/dam/doc/datasheet/82574l-gbe-controller-datasheet.pdf.

- [5] "Linux kernel documentation: Documentation/networking/scaling.txt". kernel.org. May 9, 2014. https://www.kernel.org/doc/Documentation/networking/scaling.txt.

- [6] "Intel Ethernet Controller i210 Family Product Brief". Intel. 2012. http://www.mouser.com/pdfdocs/i210brief.pdf.

- [7] "Intel Look Inside: Intel Ethernet". Xeon E5 v3 (Grantley) Launch. Intel. November 27, 2014. http://www.intel.com/content/dam/technology-provider/secure/us/en/documents/product-marketing-information/tst-grantley-launch-presentation-2014.pdf.

- [8] "Linux kernel documentation: Documentation/networking/ixgbe.txt". kernel.org. December 15, 2014. https://www.kernel.org/doc/Documentation/networking/ixgbe.txt.

- [9] "Intel Ethernet Flow Director". Intel. February 16, 2015. http://www.intel.com/content/www/us/en/ethernet-controllers/ethernet-flow-director-video.html.

- [10] "Introduction to Intel Ethernet Flow Director and Memcached Performance". Intel. October 14, 2014. http://www.intel.com/content/dam/www/public/us/en/documents/white-papers/intel-ethernet-flow-director.pdf.

- [11] "Enhancing Scalability Through Network Interface Card Partitioning". Dell. April 2011. http://www.dell.com/downloads/global/products/pedge/en/Dell-Broadcom-NPAR-White-Paper.pdf.

- [12] "An Introduction to Intel Flexible Port Partitioning Using SR-IOV Technology". Intel. September 2011. http://www.intel.com/content/dam/www/public/us/en/documents/solution-briefs/10-gbe-ethernet-flexible-port-partitioning-brief.pdf.

- [13] Jonathan Corbet (August 1, 2007). "Large receive offload". LWN.net. https://lwn.net/Articles/243949/.

- [14] "High Performance Solutions for Cyber Security". New Wave DV. http://newwavedv.com/markets/defense/cyber-security/.

- [15] Timothy Prickett Morgan (2012-02-08). "Solarflare turns network adapters into servers: When a CPU just isn't fast enough". https://www.theregister.co.uk/2012/02/08/solarflare_application_onload_engine/.

- [16] "OpenOnload". 2013-12-03. http://www.openonload.org/.

- [17] "OpenOnload: A user-level network stack". 2008-03-21. http://www.openonload.org/openonload-google-talk.pdf.