
Please note this is a comparison between Version 2 by Catherine Yang and Version 1 by chen zhong.

As one of the critical state parameters of the battery management system, the state of charge (SOC) of lithium batteries provides an essential reference for battery safety management, charge/discharge control, and the energy management of electric vehicles (EVs). Deep learning methods for Li-ion battery SOC estimation build a model that approximates the relationship between input data (voltage, current, temperature, power, capacity, etc.) and output data (SOC) from the available data. According to their neural network structure, these methods can be classified as single, hybrid, or trans structures.

- electric vehicles

- review

- SOC estimation

- deep learning

1. Single Structure

The single structure uses only one deep learning structure to estimate SOC. This section covers the multi-layer perceptron (MLP) type, the convolutional type, and the recurrent type.

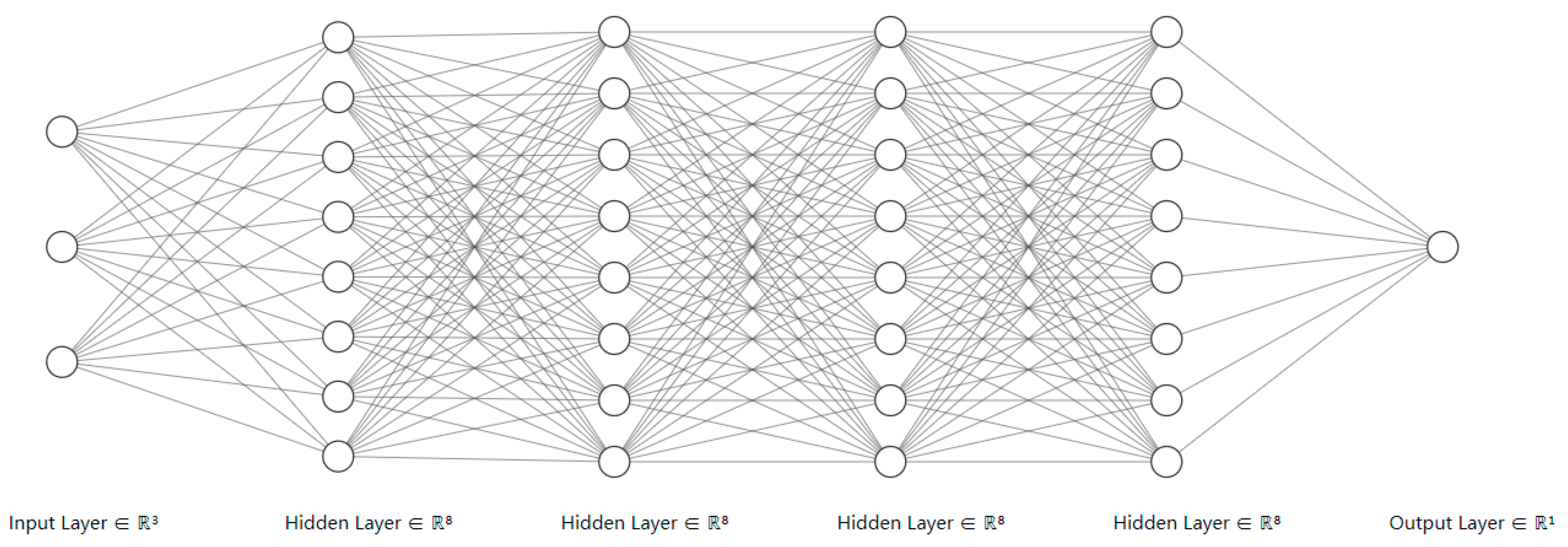

1.1. MLP Type—DNN

The deep neural network (DNN) evolved from the multi-layer perceptron (MLP), also known as an artificial neural network, as computing power improved and the number of trainable parameters increased. Its advantages are that it places no restriction on the dimensionality of the input and adapts well to the data. In theory, a three-layer perceptron can approximate any continuous nonlinear function. Its disadvantage is that it over-fits easily [1] when the network has a very large number of parameters. Figure 1 shows the structure of a deep neural network with four hidden layers, each containing eight neurons.

Figure 1. Deep neural network with four hidden layers.
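To make the layer structure concrete, here is a minimal NumPy sketch of a forward pass through a network shaped like the one in Figure 1 (four hidden layers of eight neurons). Several details are assumptions for illustration:

- the ReLU hidden activations;
- the sigmoid output;
- He initialization;
- the three inputs (voltage, current, temperature), taken to be normalized to [0, 1].

The weights are untrained, so the outputs only become meaningful SOC values once the network is fitted to data.

```python
import numpy as np


def init_dnn(n_inputs, hidden_sizes, seed=0):
    """Create (W, b) pairs for a fully connected network with a single output neuron."""
    rng = np.random.default_rng(seed)
    sizes = [n_inputs, *hidden_sizes, 1]
    params = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        W = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))  # He initialization
        b = np.zeros(fan_out)
        params.append((W, b))
    return params


def dnn_forward(params, x):
    """Map a batch of inputs, shape (batch, n_inputs), to SOC estimates in [0, 1]."""
    h = x
    for W, b in params[:-1]:
        h = np.maximum(0.0, h @ W + b)  # ReLU hidden layers
    W_out, b_out = params[-1]
    z = h @ W_out + b_out
    return (1.0 / (1.0 + np.exp(-z))).ravel()  # sigmoid squashes the output into [0, 1]


# Four hidden layers with eight neurons each; inputs are assumed to be
# [voltage, current, temperature], already normalized to [0, 1].
params = init_dnn(n_inputs=3, hidden_sizes=[8, 8, 8, 8])
x = np.array([[0.80, 0.35, 0.50],
              [0.55, 0.60, 0.20]])
soc = dnn_forward(params, x)
print(soc)
```

Even this small network has 32 + 3 × 72 + 9 = 257 trainable weights and biases. Parameter counts grow quickly with width and depth, which is why over-fitting [1] becomes a concern for larger DNNs.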

Chemali et al. [2] used a DNN to train an SOC estimation model. They tested it on a Panasonic 18650 lithium battery under different temperatures and driving cycles [3]:

- seven fully discharged datasets were selected for training;
- “US06” and “HWFET” were used for validation;
- the test set consisted of data recorded under a temperature varying from 10 to 25 °C.

The inputs were current, voltage, average voltage, and average current, and the model was verified separately at each temperature. On the test set, compared with four other methods, the lowest RMS error obtained was 0.78%.

Shrivastava et al. [4] tested a Panasonic 18650 lithium battery, using “DST, FUDS, US06” as the training and validation datasets and “WLTP” as the test set. The inputs were voltage, current, and temperature. The model was compared with the SVR (Support Vector Regression) method, and the RMS error of the DNN was significantly smaller than that of SVR.

How et al. [5] used the INR lithium battery data from the CALCE dataset [6] to train an SOC model, with “DST” as the training dataset, “FUDS, BJDST, and US06” as the test datasets, and current, temperature, and voltage as inputs. After training, the model was tested on the “DST” test dataset and compared with five methods, giving an RMS error of 3.68%.

Kashkooli et al. [7] tested eight commercial 15 Ah lithium battery cells cycled at various constant charge/discharge rates, conducting tests at one-month intervals over a period of 10 months. The measurement data were randomly divided into three groups: 70% for training, 15% for cross-validation, and 15% for testing. The test MSE of the DNN was 0.0247%.
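The studies above report accuracy as RMS error (RMSE), MAE, or MSE. Kashkooli et al. use a random 70/15/15 split, and several studies feed averaged voltage and current as extra inputs. The pure-Python sketch below shows one way to compute these quantities. The trailing-window form of the average, the window length, and the fixed random seed are assumptions; the cited papers may define them differently. When SOC is expressed in percent, RMSE and MAE are in percentage points, while MSE is in squared percentage points.

```python
import math
import random


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root-mean-square error (the 'RMS error' quoted in many SOC papers)."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def trailing_average(values, window):
    """Average of the most recent `window` samples (fewer at the start of the record)."""
    out, running = [], 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]
        out.append(running / min(i + 1, window))
    return out


def random_split(n_samples, fractions=(0.70, 0.15, 0.15), seed=0):
    """Shuffle sample indices and cut them into train / validation / test groups."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    n_train = round(fractions[0] * n_samples)
    n_val = round(fractions[1] * n_samples)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


soc_true = [50.0, 60.0, 70.0]   # SOC in percent (illustrative values)
soc_pred = [51.0, 59.0, 72.0]
print(mse(soc_true, soc_pred), rmse(soc_true, soc_pred), mae(soc_true, soc_pred))
print(trailing_average([1.0, 2.0, 3.0, 4.0], window=2))
train, val, test = random_split(100)
print(len(train), len(val), len(test))
```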

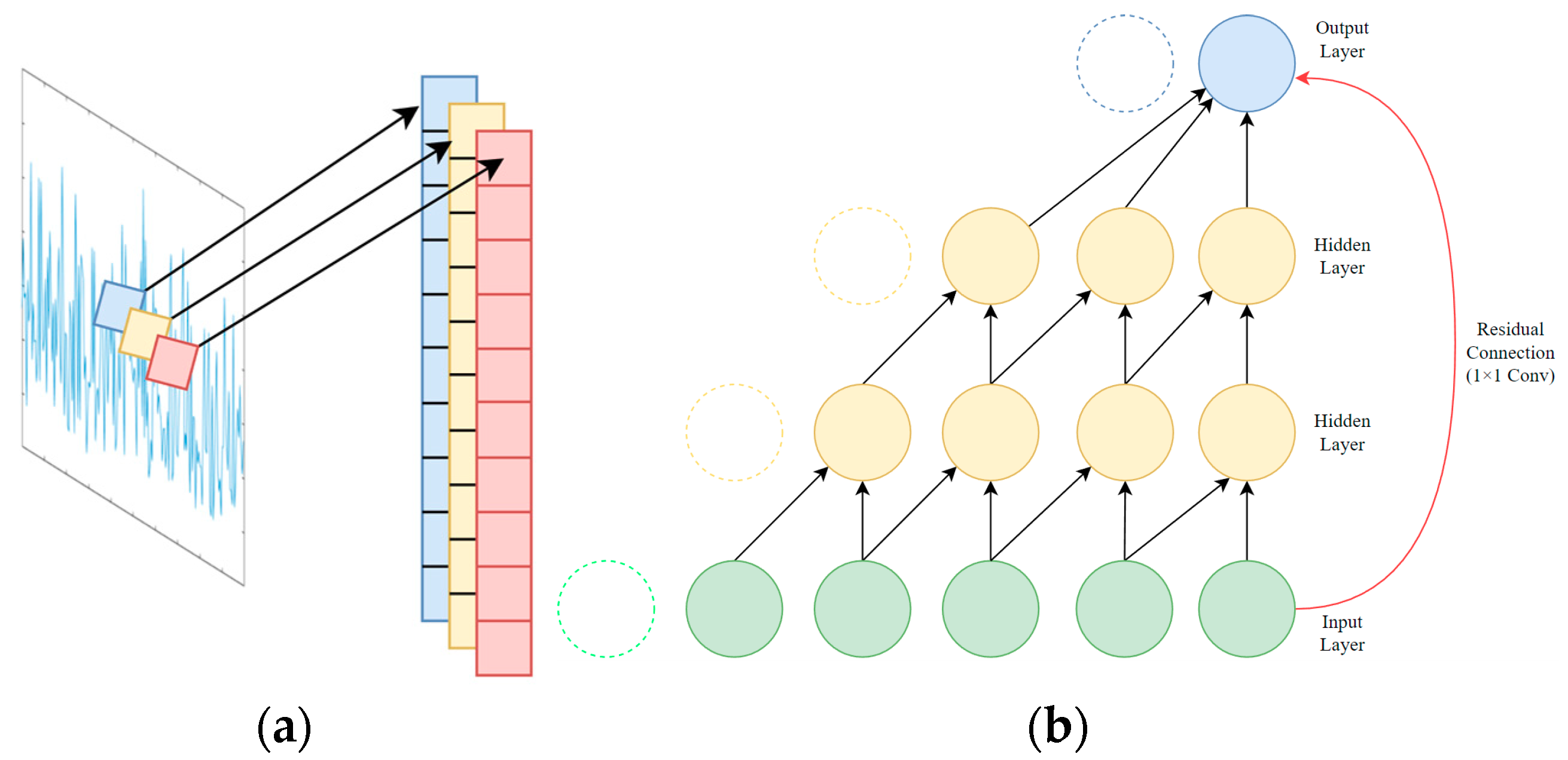

1.2. Convolutional Type—TCN

Convolutional-type neural networks used for Li-ion battery SOC estimation are mainly variants of convolutional neural networks (CNNs) [8] adapted to time-series data. These variants are one-dimensional convolutional neural networks (1D-CNNs) [9] and temporal convolutional networks (TCNs) [10].

The primary benefit of a 1D-CNN is that it can extract features from and classify one-dimensional signal data at a low computational cost. In recent years, it has frequently been employed in real-time monitoring tasks such as fault prediction and classification. SOC estimation, however, is a regression problem, and 1D-CNN models are less accurate in regression than in classification. They are therefore typically employed as a feature extraction layer in conjunction with other networks.

TCNs have two main benefits. Dilated causal convolutions enlarge the receptive field, extending the range over which features are extracted. Residual connections [11] mitigate gradient problems in deep networks. Together, these allow models with more parameters and higher accuracy to be trained. Schematic diagrams of the convolutional networks are shown in Figure 2.

Figure 2. Convolutional Type: (a) 1D-CNN schematic; (b) dilated causal convolution and residual connection in TCN.
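A dilated causal convolution computes y[t] = Σ_j k[j]·x[t − j·d], so each output depends only on present and past samples. Stacking layers with dilations 1, 2, 4, … grows the receptive field exponentially. The single-channel NumPy sketch below illustrates this together with an identity residual connection. It is a simplification: real TCNs use many channels and learned kernels, and the kernel values here are arbitrary placeholders.

```python
import numpy as np


def dilated_causal_conv1d(x, kernel, dilation):
    """y[t] = sum_j kernel[j] * x[t - j*dilation]; samples before t = 0 count as zero."""
    x = np.asarray(x, dtype=float)
    pad = (len(kernel) - 1) * dilation
    x_padded = np.concatenate([np.zeros(pad), x])  # left padding only, so no future leakage
    y = np.zeros_like(x)
    for t in range(len(x)):
        for j, w in enumerate(kernel):
            y[t] += w * x_padded[pad + t - j * dilation]
    return y


def residual_tcn(x, kernel, dilations):
    """Stack of single-channel residual blocks: h <- h + ReLU(conv(h))."""
    h = np.asarray(x, dtype=float)
    for d in dilations:
        h = h + np.maximum(0.0, dilated_causal_conv1d(h, kernel, d))
    return h


def receptive_field(kernel_size, dilations):
    """Number of past-and-present input samples one output can see."""
    return 1 + (kernel_size - 1) * sum(dilations)


impulse = np.zeros(40)
impulse[10] = 1.0
y = residual_tcn(impulse, kernel=[0.5, 0.5], dilations=[1, 2, 4, 8])
print(receptive_field(2, [1, 2, 4, 8]))   # 16: each output sees 16 samples
print(np.nonzero(y)[0].min())             # 10: nothing leaks backward in time
```

Doubling the dilation at each layer lets a TCN cover long drive-cycle histories with few layers. With kernel size 2, ten such layers would already span 1 + (2^10 − 1) = 1024 samples.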

Hannan et al. [12] built a multi-layer feedforward network of temporal convolutional layers and tuned its learning rate with an optimization algorithm. They used “Cycle 1–Cycle 4, Cycle NN, UDDS, LA92” from the dataset [3] as the training set and “US06, HWFET” as the test set. The test MSE was 0.85%, and the model was compared with four other models.

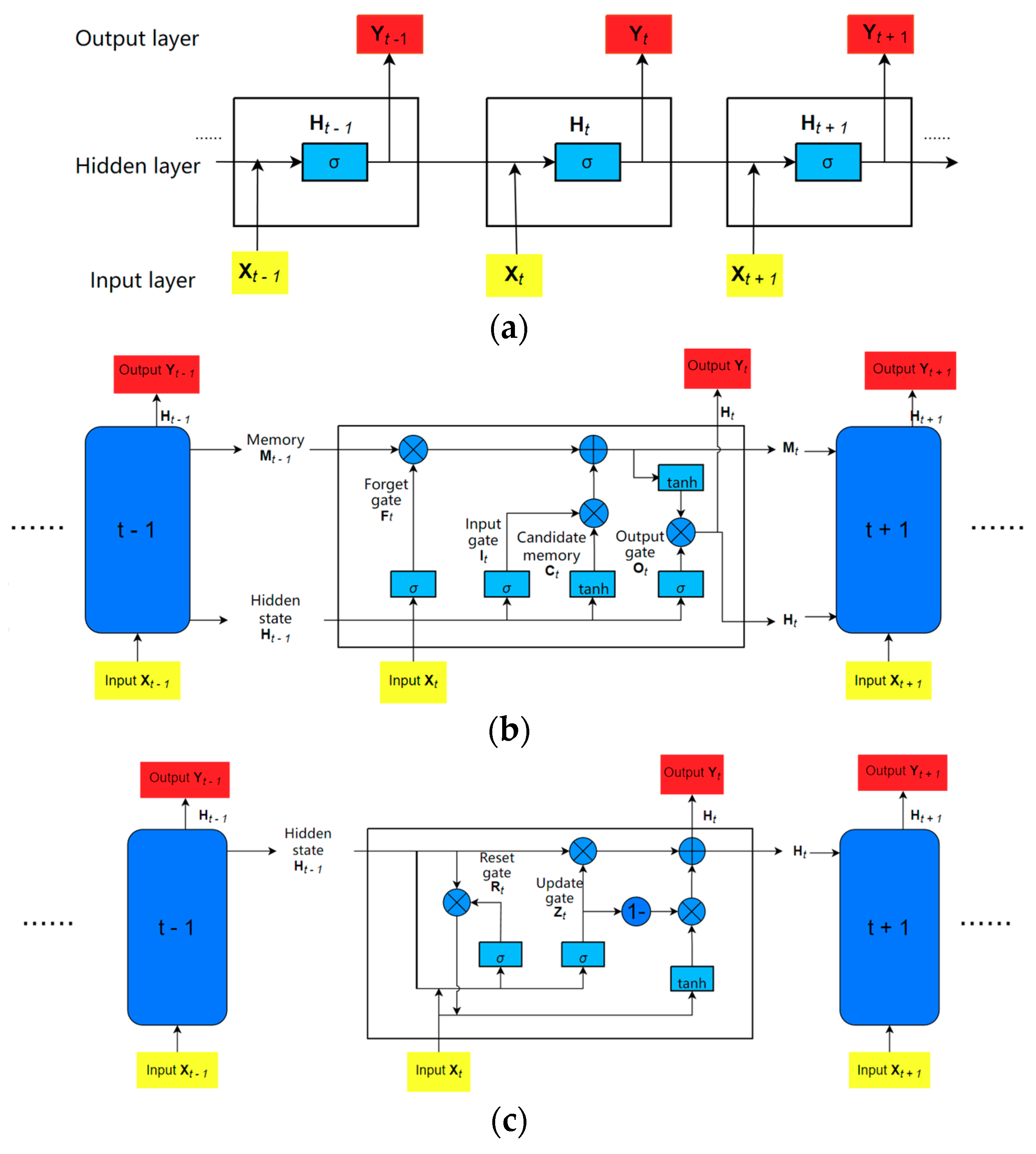

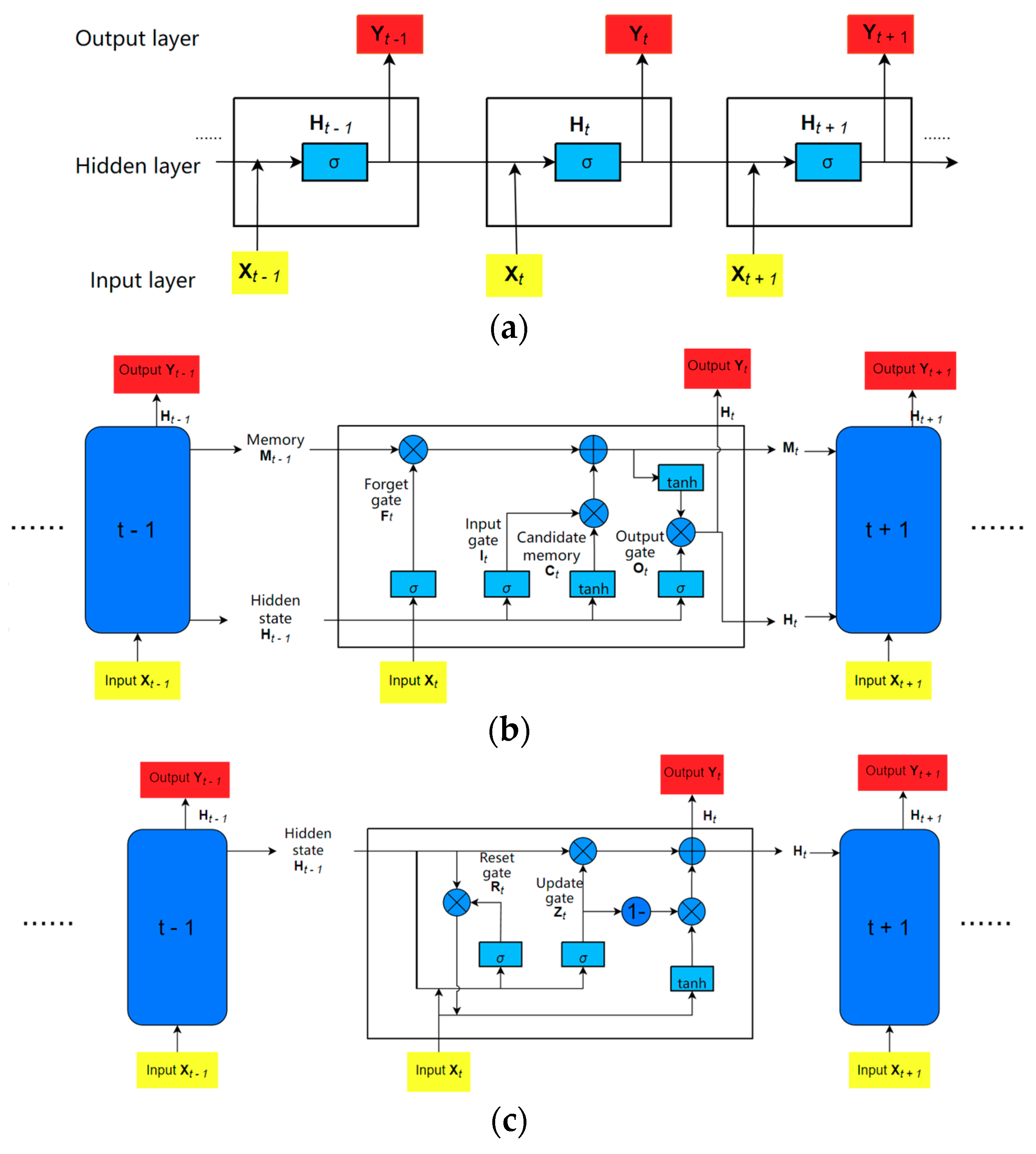

1.3. Recurrent Type—LSTM

As shown in Figure 3, the recurrent type mainly includes the recurrent neural network (RNN), long short-term memory (LSTM) [13], and the gated recurrent unit (GRU) [14]. Plain RNNs suffer from exploding or vanishing gradients as the number of parameters grows. LSTM was created to alleviate this gradient problem, and GRU followed with fewer parameters than LSTM. At present, LSTM is the most widely used recurrent network for lithium battery SOC estimation, followed by GRU, while the plain RNN is generally not used directly [15].

The benefit of a recurrent neural network is that it feeds its previous output back as input at the next step, thus exploiting the temporal relationships among the input variables. However, because it operates in one direction and computes over historical data step by step, it takes longer to train than networks that can run in parallel.

Figure 3. Recurrent Type: (a) recurrent neural network; (b) long short-term memory neural network; (c) gated recurrent unit.

Chemali et al. [16] adopted LSTM to train lithium battery SOC models under fixed and varying ambient temperatures using the dataset [6]:

- Fixed-ambient-temperature model: the training dataset included data from eight mixed drive cycles, two discharge test cases served as the validation dataset, and the charging test case served as the test dataset.
- Varying-ambient-temperature model: the training dataset of 27 drive cycles comprised three sets of nine drive cycles recorded at 0 °C, 10 °C, and 25 °C, and the test dataset contained data from another mixed drive cycle.

Both models used voltage, current, and temperature as inputs. The model achieved its lowest MAE of 0.573% at 10 °C and an MAE of 1.606% with the ambient temperature varying from 10 to 25 °C.

Cui et al. [17] used LSTM with an encoder–decoder [18] structure on the dataset [19]. The inputs were I_t, V_t, I_avg, and V_avg. The test result on US06 was an RMSE of 0.56% and an MAE of 0.46%, which outperformed the LSTM-only and GRU-only models in that paper.

Wong et al. [20] used an LSTM structure, with the undisclosed ‘UNIBO Power-tools Dataset’ as the training dataset and the dataset [21] as the test dataset. The input variables were current, voltage, and temperature, and the MAE was 1.17% at 25 °C.

Du et al. [22] tested two LR1865SK Li-ion battery cells at room temperature and used the dataset in [23] as a comparative case to test the LSTM-trained model. The input variables were current, voltage, temperature, cycles, energy, power, and time, and the average MAE was 0.872%.

Yang et al. [24] used LSTM to build a lithium battery SOC estimation model. The data were obtained from an A123 18650 lithium battery under three drive cycles, i.e., DST, US06, and FUDS, and the input vectors were current, voltage, and temperature. In addition, the model's robustness was tested with an unknown initial state of the battery, using the unscented Kalman filter (UKF) [25] for comparison. The test results showed that the RMS error of LSTM was significantly smaller than that of UKF.
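The gating equations behind the LSTM and GRU cells of Figure 3 can be sketched in NumPy as follows. This shows one common formulation: a single bias vector per gate, gate order i, f, g, o for LSTM and r, z, n for GRU. Frameworks differ in such details, and the weights below are random placeholders rather than a trained SOC model. The parameter count makes concrete the statement that GRU has fewer parameters than LSTM: three gate blocks instead of four.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h, c, W, U, b):
    """One LSTM step. W: (4H, D), U: (4H, H), b: (4H,), gate blocks ordered i, f, g, o."""
    i, f, g, o = np.split(W @ x + U @ h + b, 4)
    i, f, o, g = sigmoid(i), sigmoid(f), sigmoid(o), np.tanh(g)
    c_new = f * c + i * g          # keep part of the old memory, write the new candidate
    h_new = o * np.tanh(c_new)     # output gate decides what the next step sees
    return h_new, c_new


def gru_step(x, h, W, U, b):
    """One GRU step. W: (3H, D), U: (3H, H), b: (3H,), gate blocks ordered r, z, n."""
    Wr, Wz, Wn = np.split(W, 3)
    Ur, Uz, Un = np.split(U, 3)
    br, bz, bn = np.split(b, 3)
    r = sigmoid(Wr @ x + Ur @ h + br)          # reset gate
    z = sigmoid(Wz @ x + Uz @ h + bz)          # update gate
    n = np.tanh(Wn @ x + r * (Un @ h) + bn)    # candidate hidden state
    return (1.0 - z) * n + z * h


def n_params(cell, input_size, hidden_size):
    """Weights + biases of one layer, with one bias vector per gate block."""
    blocks = {"lstm": 4, "gru": 3}[cell]
    return blocks * (hidden_size * (input_size + hidden_size) + hidden_size)


rng = np.random.default_rng(0)
D, H, T = 3, 16, 50                        # inputs: voltage, current, temperature
seq = rng.uniform(size=(T, D))             # placeholder normalized measurements
W_l, U_l, b_l = rng.normal(0, 0.1, (4 * H, D)), rng.normal(0, 0.1, (4 * H, H)), np.zeros(4 * H)
W_g, U_g, b_g = rng.normal(0, 0.1, (3 * H, D)), rng.normal(0, 0.1, (3 * H, H)), np.zeros(3 * H)

h_l, c_l, h_g = np.zeros(H), np.zeros(H), np.zeros(H)
for x_t in seq:                            # sequential loop: step t needs the state from t-1
    h_l, c_l = lstm_step(x_t, h_l, c_l, W_l, U_l, b_l)
    h_g = gru_step(x_t, h_g, W_g, U_g, b_g)

print(n_params("lstm", D, H), n_params("gru", D, H))
```

The loop over `seq` is also why recurrent models train more slowly than convolutional ones: step t cannot start until step t − 1 has finished. In an SOC model, a linear output layer applied to the final hidden state would produce the estimate.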

1.4. Recurrent Type—GRU

Yang et al. [26] trained a GRU model on data from three LiNiMnCoO2 batteries tested under the DST and FUDS drive cycles, with current, voltage, and temperature as input vectors. The trained model was then tested on a dataset from a battery of another material, giving a maximum RMS error of 3.5%.

The studies [27][28][29] all used GRU as the network for model training. They used the INR 18650-20R and A123 18650 lithium battery data from the CALCE dataset [6], with voltage, current, and temperature as inputs, and the RMS errors they obtained on the test datasets did not differ significantly.

Yang et al. [30] tested an 18650 Li-ion battery cell and used GRU with an encoder–decoder structure, with current, voltage, and temperature as input vectors. They compared it with LSTM, GRU, and a sequence-to-sequence structure. The MAE of their proposed network was lower than that of the other methods under three different drive cycles and temperatures.

2. Hybrid Structure

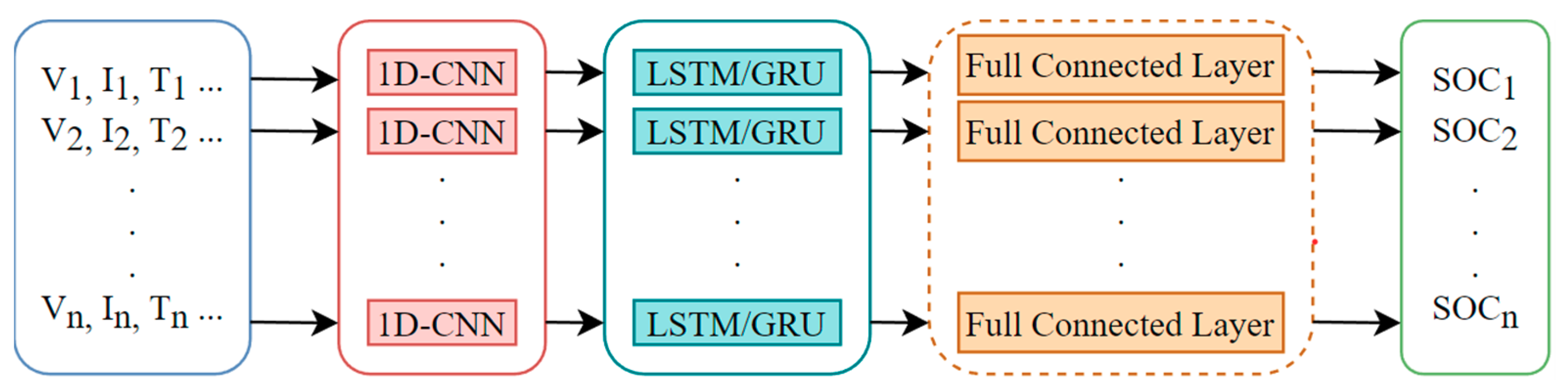

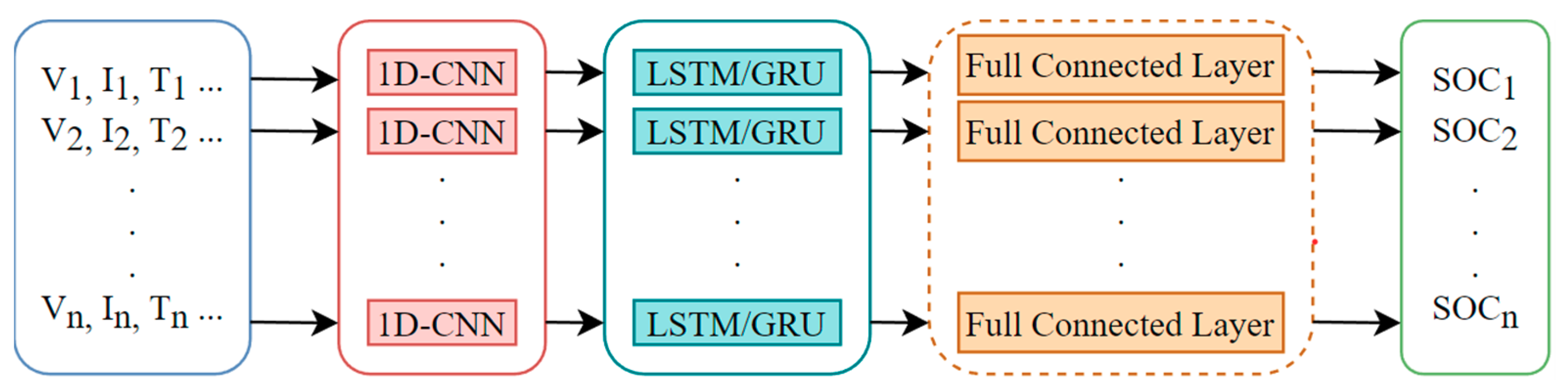

The main idea of a hybrid neural network for lithium battery SOC estimation is to improve the prediction accuracy of the model by combining the advantages of different types of neural networks. The architecture currently common for this problem has two parts:

- a 1D-CNN as a feature extraction layer, which extracts deeper features from the input data;
- a recurrent neural network (most often LSTM or GRU) as a model-building layer, which constructs the mapping between the input variables and SOC.

Some researchers also add a fully connected (FC) layer before the final output layer to improve the accuracy of the model. The 1D-CNN + X + Y architecture for lithium battery SOC estimation is depicted in Figure 4.

Figure 4. 1D-CNN + X + Y architecture diagram.
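A minimal NumPy forward pass for one instance of this pattern, with X = GRU and Y = FC, might look like the sketch below. The following choices are assumptions for illustration, not the configuration of any cited paper:

- the causal padding;
- the ReLU after the convolution;
- the layer sizes;
- the random placeholder weights.

A trained model would learn all of these weights jointly.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv1d_relu(x, kernels, bias):
    """Causal 1D convolution over time. x: (T, C_in), kernels: (C_out, K, C_in) -> (T, C_out)."""
    T, c_in = x.shape
    c_out, k, _ = kernels.shape
    x_pad = np.vstack([np.zeros((k - 1, c_in)), x])   # left-pad so step t sees x[t-k+1 .. t]
    out = np.empty((T, c_out))
    for t in range(T):
        window = x_pad[t:t + k]                        # (K, C_in)
        out[t] = np.tensordot(kernels, window, axes=([1, 2], [0, 1])) + bias
    return np.maximum(0.0, out)


def gru_step(x, h, W, U, b):
    """One GRU step with gate blocks ordered r, z, n (one common formulation)."""
    Wr, Wz, Wn = np.split(W, 3)
    Ur, Uz, Un = np.split(U, 3)
    br, bz, bn = np.split(b, 3)
    r = sigmoid(Wr @ x + Ur @ h + br)
    z = sigmoid(Wz @ x + Uz @ h + bz)
    n = np.tanh(Wn @ x + r * (Un @ h) + bn)
    return (1.0 - z) * n + z * h


def init_params(c_in=3, c_out=8, k=5, hidden=16, seed=0):
    """Random placeholder weights; a real model would learn them from data."""
    rng = np.random.default_rng(seed)
    return {
        "conv_k": rng.normal(0, 0.2, (c_out, k, c_in)), "conv_b": np.zeros(c_out),
        "gru_W": rng.normal(0, 0.2, (3 * hidden, c_out)),
        "gru_U": rng.normal(0, 0.2, (3 * hidden, hidden)), "gru_b": np.zeros(3 * hidden),
        "fc_w": rng.normal(0, 0.2, hidden), "fc_b": 0.0,
    }


def hybrid_forward(x, p):
    """1D-CNN features -> GRU over time -> FC layer on the last hidden state -> SOC."""
    feats = conv1d_relu(x, p["conv_k"], p["conv_b"])
    h = np.zeros(p["gru_U"].shape[1])
    for f_t in feats:
        h = gru_step(f_t, h, p["gru_W"], p["gru_U"], p["gru_b"])
    return float(sigmoid(p["fc_w"] @ h + p["fc_b"]))


params = init_params()
window = np.random.default_rng(1).uniform(size=(100, 3))  # 100 steps of normalized V, I, T
print(hybrid_forward(window, params))
```

Swapping `gru_step` for an LSTM step, or dropping the FC layer, gives the other combinations discussed below.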

2.1. 1D-CNN + LSTM

Song et al. [31] used a “1D-CNN + LSTM” combination to build a model with voltage, current, temperature, average voltage, and average current as inputs. The dataset was obtained by testing a 1.1 Ah A123 18650 lithium battery at seven different temperatures under the US06 and FUDS drive cycles. On the test dataset, the error of the “1D-CNN + LSTM” method was significantly smaller than that of the standalone 1D-CNN and LSTM methods.

2.2. 1D-CNN + GRU + FC

Huang et al. [32] used a “1D-CNN + GRU + FC” architecture with voltage, current, and temperature as inputs. The dataset was obtained from a BAK 18650 lithium battery at seven different temperatures under the DST and FUDS drive cycles. Compared with single-network methods such as RNN and GRU and with a support vector machine, it achieved the lowest RMS error.

2.3. NN + Filter Algorithm

The NN + filter algorithm type combines a neural network with a filter algorithm to improve Li-ion battery SOC estimation performance. Figure 5 shows an example of this structure: the combination of LSTM and an adaptive H-infinity filter, described in more detail in [33].

Figure 5. A case of the NN + filter algorithm architecture diagram (LSTM + AHIF, reprinted with permission from Ref. [33]. Copyright 2021, Elsevier.).

Yang et al. [34] tried to combine the advantages of LSTM and UKF. They trained an LSTM network offline to obtain a pre-trained model. Real-time data, after normalization, were then input into both the UKF and the pre-trained model, and the UKF filtered out noise to improve the model's performance.

Subsequently, other combinations of LSTM and filtering algorithms appeared:

- “LSTM + CKF (Cubature Kalman Filter)” [35];
- “LSTM + EKF (Extended Kalman Filter)” [36];
- “LSTM + AHIF (Adaptive H-infinity Filter)” [33].

On their test datasets, these models performed better than models trained with LSTM alone.
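One common way to arrange such a combination is to let Coulomb counting propagate SOC between samples and to treat the pre-trained network's SOC output as a noisy measurement that corrects it. The pure-Python sketch below makes two substitutions: a scalar linear Kalman filter stands in for the UKF, CKF, EKF, or AHIF used in the cited works, and a synthetic noisy signal stands in for a real LSTM output. The noise variances `q` and `r`, the sign convention (positive current = discharge), and the cell capacity are assumptions.

```python
import random


def kf_fuse_soc(currents, nn_soc, dt, capacity_ah, soc0, p0=0.1, q=1e-6, r=4e-4, eta=1.0):
    """Fuse Coulomb counting (process model) with network SOC outputs (measurements)."""
    soc, p = soc0, p0
    estimates = []
    for i_k, z_k in zip(currents, nn_soc):
        # Predict: Coulomb counting, positive current = discharge (assumed convention)
        soc -= eta * i_k * dt / (3600.0 * capacity_ah)
        p += q
        # Update: blend in the network output according to the Kalman gain
        gain = p / (p + r)
        soc += gain * (z_k - soc)
        p *= 1.0 - gain
        estimates.append(soc)
    return estimates


def rms(errors):
    return (sum(e * e for e in errors) / len(errors)) ** 0.5


# Synthetic example: 1 A constant discharge of a hypothetical 2 Ah cell for one hour, 1 s steps
n, dt, cap = 3600, 1.0, 2.0
current = [1.0] * n
soc_true = [1.0 - (k + 1) * dt / (3600.0 * cap) for k in range(n)]
rng = random.Random(0)
nn_out = [s + rng.gauss(0.0, 0.02) for s in soc_true]   # stand-in for a noisy LSTM output

fused = kf_fuse_soc(current, nn_out, dt, cap, soc0=0.8)  # deliberately wrong initial SOC
raw_err = rms([a - b for a, b in zip(nn_out, soc_true)])
fused_err = rms([a - b for a, b in zip(fused, soc_true)])
print(raw_err, fused_err)
```

Because `r` is much larger than `q` here, the filter trusts the integrated current between samples and uses the network mainly to correct drift and the wrong initial SOC. This mirrors the noise-suppression role described above.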

3. Trans Structure

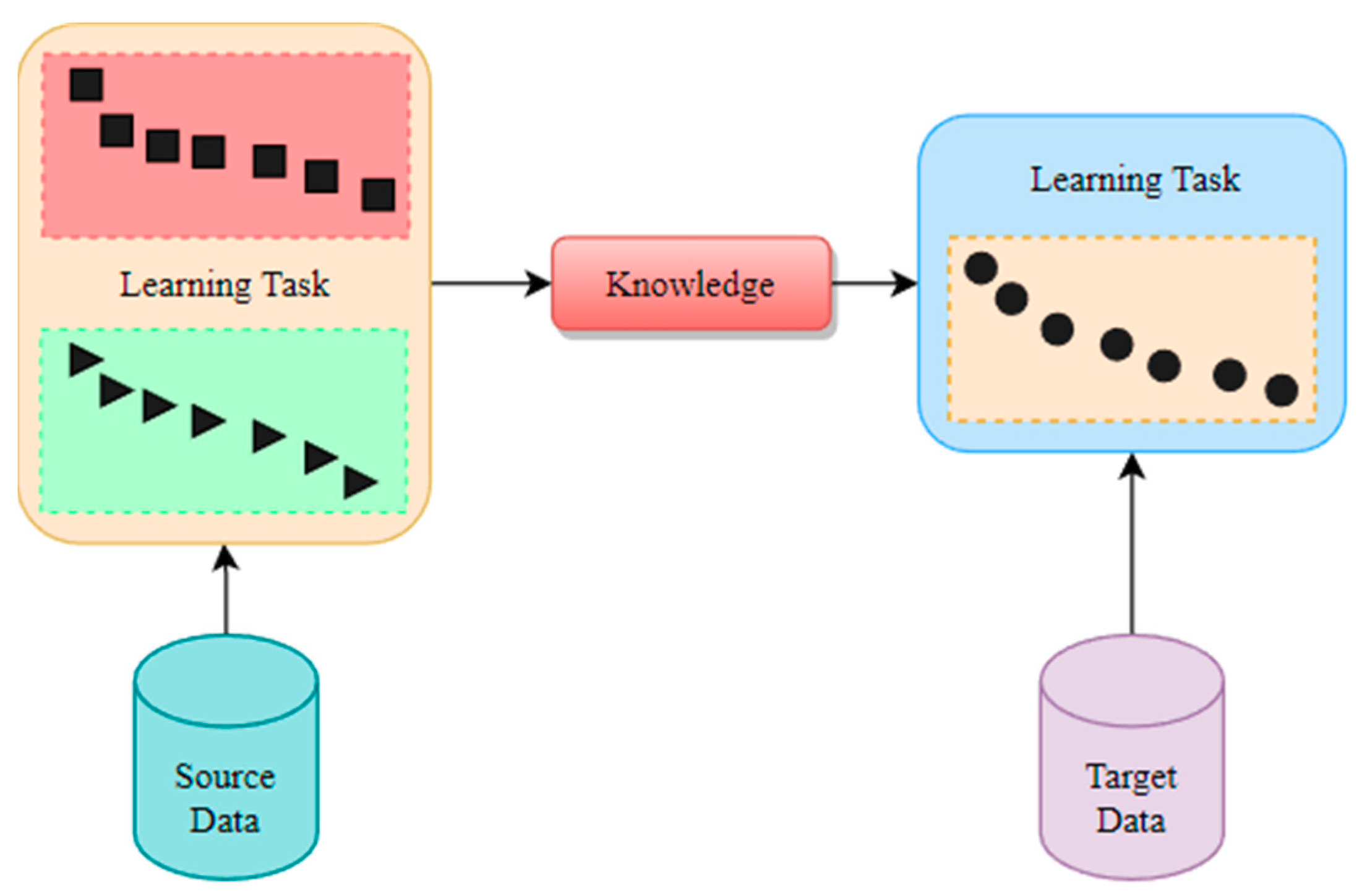

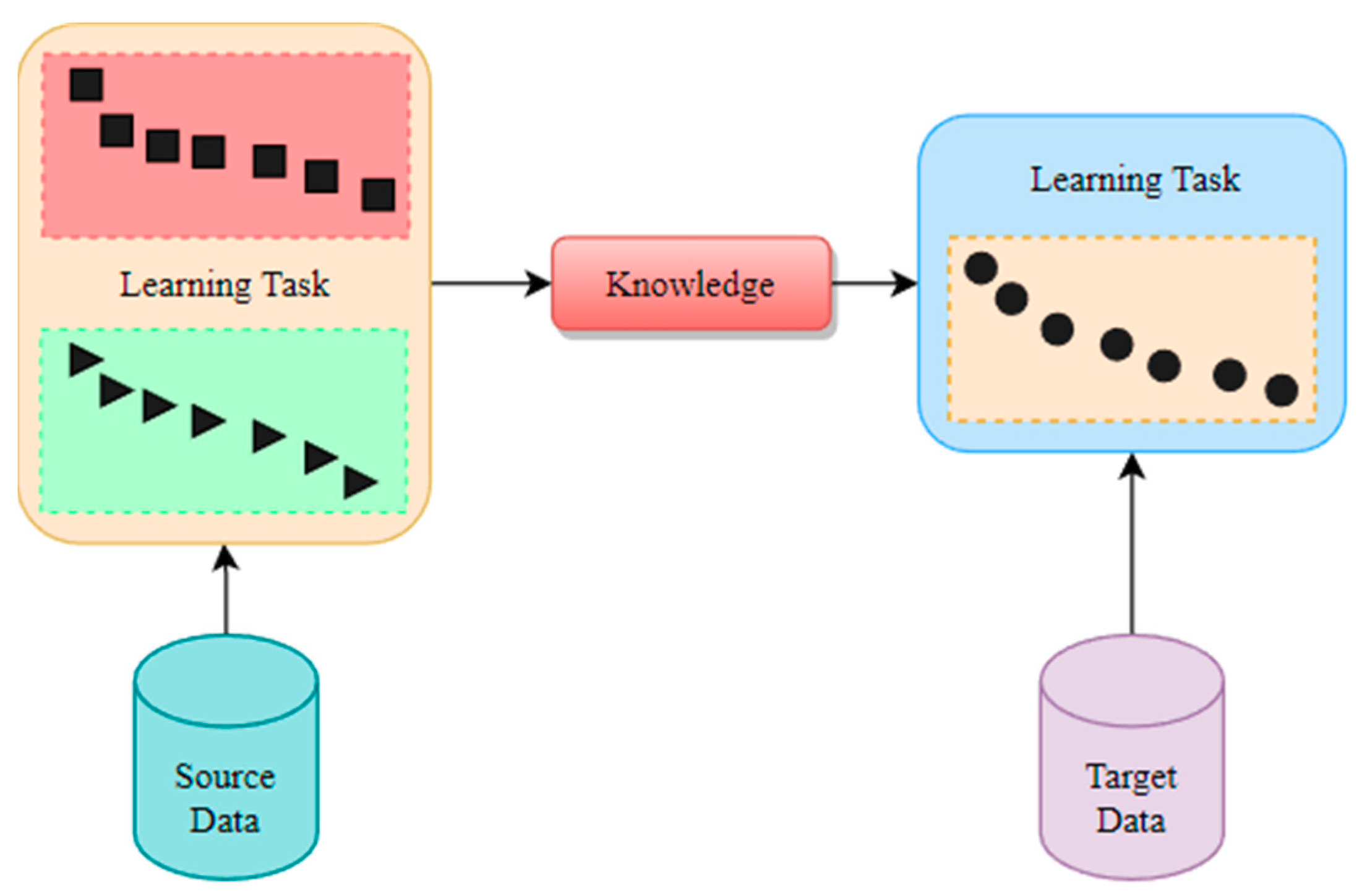

The trans structure is mainly used to transfer knowledge from source data to target data. This section covers transfer learning and transformers.

3.1. Transfer Learning

As depicted in Figure 6, knowledge learned from a task trained on source data is reused for the learning task on target data, which can improve the robustness of the model and achieve higher performance. Some researchers have applied transfer learning to enhance the performance of SOC estimation.

Figure 6. Schematic of transfer learning.

Bian et al. [37] added a fully connected layer after a bidirectional LSTM, with voltage, current, and temperature as inputs. Three lithium battery datasets were used: the A123 18650 and INR 18650-20R data from the CALCE dataset [6] as the target datasets and the Panasonic lithium battery dataset [3] as the pre-training dataset. They then used transfer learning to transfer features from the model trained on the pre-training dataset to the model trained on the target dataset. Compared with single-network methods such as RNN, LSTM, and GRU, the transfer learning model achieved the lowest RMS error.

Liu et al. [38] applied TCN to two different types of lithium battery data. They transferred a model trained for SOC estimation on one battery, as a pre-trained model, to another battery dataset by transfer learning [39]. The workflow was as follows:

1. The pre-trained model was trained on “US06, HWFET, UDDS, LA92, Cycle NN” at 25 °C, 10 °C, and 0 °C from the dataset [3] and tested on “Cycle 1–Cycle 4”, with current, voltage, and temperature as input vectors.
2. The model trained at 25 °C was transferred to the new battery's SOC model as a pre-trained model.
3. The new model was trained on data measured under two mixed driving cycles in the dataset [40] and tested on “US06, HWFET, UDDS, LA92” from the same dataset [40].

The resulting RMS error ranged from 0.36% to 1.02%.
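Below is a minimal NumPy sketch of one common transfer-learning recipe: freeze the feature layers and re-fit only the output layer on scarce target data. Everything here is hypothetical, including the one-input "battery" relations and the sample counts. Above all, the hidden layer uses fixed random values standing in for weights that would in practice be learned on the source dataset. The cited works transfer trained BiLSTM or TCN weights and may fine-tune more than the last layer.

```python
import numpy as np


def hidden_features(v, W1, b1):
    """Frozen hidden layer: tanh units plus a constant column that acts as the output bias."""
    h = np.tanh(np.outer(v, W1) + b1)                 # (n_samples, n_hidden)
    return np.hstack([h, np.ones((len(v), 1))])


def fit_output_layer(phi, y, lam=1e-3):
    """Ridge regression for the linear output layer; hidden weights are not touched."""
    n = phi.shape[1]
    return np.linalg.solve(phi.T @ phi + lam * np.eye(n), phi.T @ y)


def rmse(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))


def source_soc(v):                    # hypothetical source-battery relation
    return v


def target_soc(v):                    # hypothetical target-battery relation
    return 0.8 * v + 0.1


rng = np.random.default_rng(0)
W1 = rng.normal(0.0, 3.0, size=50)    # stand-in for source-trained hidden weights
b1 = rng.uniform(-3.0, 3.0, size=50)

# Source domain: plenty of labelled data
v_src = rng.uniform(0.0, 1.0, 2000)
w_src = fit_output_layer(hidden_features(v_src, W1, b1), source_soc(v_src))

# Target domain: only 20 labelled samples; reuse the frozen layer, re-fit the head
v_tgt = np.linspace(0.0, 1.0, 20)
w_tgt = fit_output_layer(hidden_features(v_tgt, W1, b1), target_soc(v_tgt))

v_test = np.linspace(0.0, 1.0, 200)
phi_test = hidden_features(v_test, W1, b1)
err_src = rmse(phi_test @ w_src, target_soc(v_test))
err_tgt = rmse(phi_test @ w_tgt, target_soc(v_test))
print(err_src, err_tgt)
```

Re-fitting only the head needs far fewer target samples than training the whole network. This is the practical appeal of reusing a model pre-trained on a well-characterized battery.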

3.2. Transformer

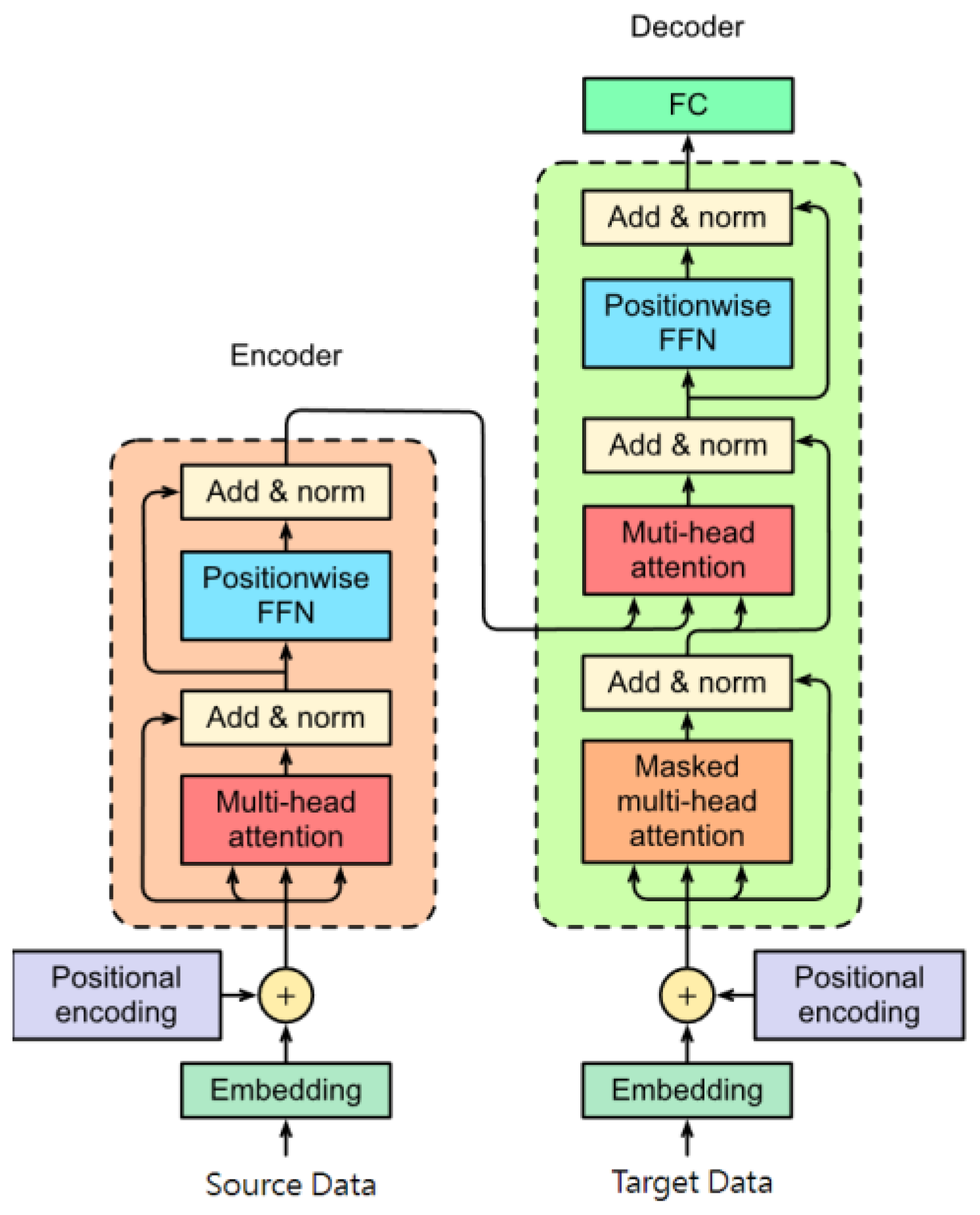

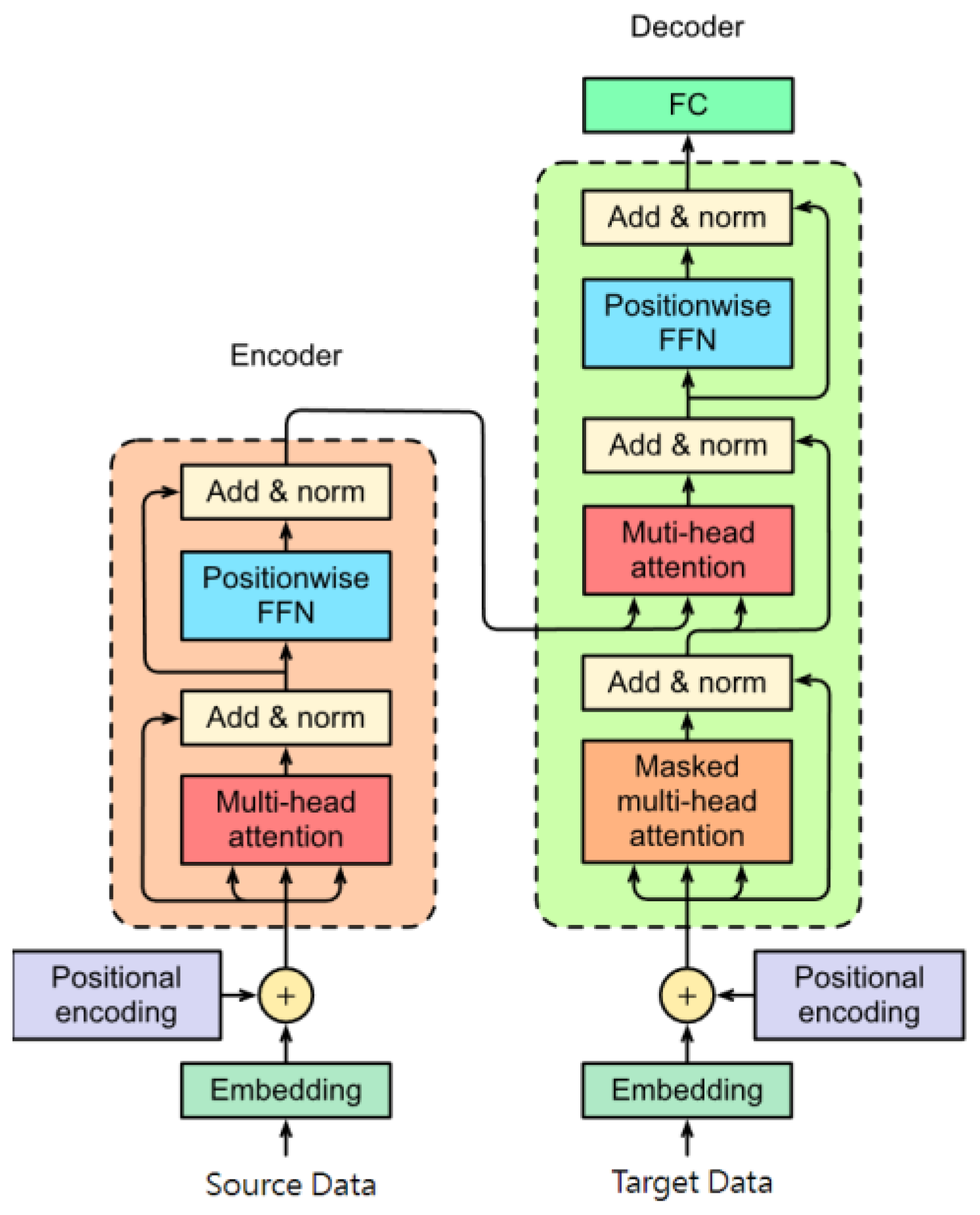

The transformer [41] is based on an encoder–decoder structure and an attention mechanism, namely multi-head attention, which strengthens the modeling of connections and relations within the data. Transformers have therefore been applied in natural language processing, image detection, segmentation, and other tasks. In recent years, some researchers have tried transformer-based structures for SOC estimation. The diagram of the transformer is shown in Figure 7.

Figure 7. Structure of the transformer.

Hannan et al. [42] used a structure based on the transformer encoder [41] to estimate SOC, with the dataset from [21] and current, voltage, and temperature as input variables. Compared with DNN, LSTM, GRU, and other deep learning methods, its test performance was an RMSE of 1.19% and an MAE of 0.65%.

Shen et al. [43] used two encoders and one decoder of the transformer, with current–temperature and voltage–temperature sequences as input variables. The dataset was obtained from [6], with ‘DST’ and ‘FUDS’ used for training and ‘US06’ for testing. They further added a closed loop to improve SOC estimation performance. Compared with LSTM and LSTM + UKF, their proposed method achieved a lower RMSE.
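The core operation of the transformer in Figure 7, multi-head scaled dot-product self-attention, can be sketched in NumPy as below for a window of battery measurements. This is an encoder-style fragment only: positional encoding, the feed-forward sublayer, residual connections, and layer normalization are omitted. The input projection, head count, and random weights are placeholders rather than the settings used by Hannan et al. or Shen et al.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, Wq, Wk, Wv, Wo, n_heads):
    """x: (T, d_model); each W: (d_model, d_model). Returns the output and attention weights."""
    T, d_model = x.shape
    assert d_model % n_heads == 0, "d_model must be divisible by n_heads"
    d_head = d_model // n_heads

    def split_heads(m):                        # (T, d_model) -> (n_heads, T, d_head)
        return m.reshape(T, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ Wq), split_heads(x @ Wk), split_heads(x @ Wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (n_heads, T, T)
    weights = softmax(scores, axis=-1)                    # each row sums to 1
    heads = weights @ v                                   # (n_heads, T, d_head)
    concat = heads.transpose(1, 0, 2).reshape(T, d_model)
    return concat @ Wo, weights


rng = np.random.default_rng(0)
n_steps, d_model, n_heads = 10, 8, 2
measurements = rng.uniform(size=(n_steps, 3))             # normalized voltage, current, temperature
W_in = rng.normal(0, 0.5, (3, d_model))                   # placeholder input embedding
Wq, Wk, Wv, Wo = (rng.normal(0, 0.5, (d_model, d_model)) for _ in range(4))

out, attn = multi_head_self_attention(measurements @ W_in, Wq, Wk, Wv, Wo, n_heads)
w_head = rng.normal(0, 0.5, d_model)                      # placeholder regression head
soc = 1.0 / (1.0 + np.exp(-(out[-1] @ w_head)))           # SOC read from the last time step
print(out.shape, attn.shape, soc)
```

Every time step attends to every other step in one matrix product. Unlike the recurrent loop, the whole window is therefore processed in parallel.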

References

- Hawkins, D.M. The problem of overfitting. J. Chem. Inf. Comput. Sci. 2004, 44, 1–12.

- Chemali, E.; Kollmeyer, P.J.; Preindl, M.; Emadi, A. State-of-charge estimation of Li-ion batteries using deep neural networks: A machine learning approach. J. Power Sources 2018, 400, 242–255.

- Kollmeyer, P. Panasonic 18650PF Li-ion Battery Data; Elsevier: Madison, WI, USA, 2018; Volume 51.

- Shrivastava, P.; Soon, T.K.; Bin Idris, M.Y.; Mekhilef, S.; Adnan, S.B.R.S. Lithium-ion battery state of energy estimation using deep neural network and support vector regression. In Proceedings of the 2021 IEEE 12th Energy Conversion Congress & Exposition-Asia (ECCE-Asia), Singapore, 24–27 May 2021; pp. 2175–2180.

- How, D.N.T.; Hannan, M.A.; Lipu, M.S.H.; Sahari, K.S.M.; Ker, P.J.; Muttaqi, K.M. State-of-Charge Estimation of Li-Ion Battery in Electric Vehicles: A Deep Neural Network Approach. IEEE Trans. Ind. Appl. 2020, 56, 5565–5574.

- CALCE. Center for Advanced Life Cycle Engineering Battery Research Group. Available online: https://web.calce.umd.edu/batteries/data.htm (accessed on 30 September 2021).

- Kashkooli, A.G.; Fathiannasab, H.; Mao, Z.; Chen, Z. Application of Artificial Intelligence to State-of-Charge and State-of-Health Estimation of Calendar-Aged Lithium-Ion Pouch Cells. J. Electrochem. Soc. 2019, 166, A605–A615.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Kiranyaz, S.; Ince, T.; Hamila, R.; Gabbouj, M. Convolutional neural networks for patient-specific ECG classification. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 2608–2611.

- Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Hannan, M.A.; How, D.N.T.; Lipu, M.S.H.; Ker, P.J.; Dong, Z.Y.; Mansur, M.; Blaabjerg, F. SOC Estimation of Li-ion Batteries with Learning Rate-Optimized Deep Fully Convolutional Network. IEEE Trans. Power Electron. 2020, 36, 7349–7353.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Cho, K.; van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1724–1734.

- Chaoui, H.; Ibe-Ekeocha, C.C. State of Charge and State of Health Estimation for Lithium Batteries Using Recurrent Neural Networks. IEEE Trans. Veh. Technol. 2017, 66, 8773–8783.

- Chemali, E.; Kollmeyer, P.J.; Preindl, M.; Ahmed, R.; Emadi, A. Long Short-Term Memory Networks for Accurate State-of-Charge Estimation of Li-ion Batteries. IEEE Trans. Ind. Electron. 2018, 65, 6730–6739.

- Cui, S.; Yong, X.; Kim, S.; Hong, S.; Joe, I. An LSTM-Based Encoder-Decoder Model for State-of-Charge Estimation of Lithium-Ion Batteries. In Computer Science On-line Conference 2020; Springer: Cham, Switzerland, 2020; pp. 178–188.

- Andréasson, J.; Straight, S.D.; Moore, T.A.; Moore, A.L.; Gust, D. Molecular all-photonic encoder−Decoder. J. Am. Chem. Soc. 2008, 130, 11122–11128.

- Shi, W.; Jiang, J.; Zhang, W.; Li, X.; Jiang, J. A Study on the Driving Cycle for the Life Test of Traction Battery in Electric Buses. Automot. Eng. 2013, 35, 138–142.

- Wong, K.L.; Bosello, M.; Tse, R.; Falcomer, C.; Rossi, C.; Pau, G. Li-Ion Batteries State-of-Charge Estimation Using Deep LSTM at Various Battery Specifications and Discharge Cycles. In Proceedings of the GoodIT ’21: Conference on Information Technology for Social Good, Rome, Italy, 9–11 September 2021; pp. 85–90.

- Kollmeyer, P.; Vidal, C.; Naguib, M.; Skells, M. Lg 18650HG2 li-Ion Battery Data and Example Deep Neural Network xEV SOC Estimator Script; Elsevier: Madison, WI, USA, 2020; Volume 3.

- Du, Z.; Zuo, L.; Li, J.; Liu, Y.; Shen, H.T. Data-Driven Estimation of Remaining Useful Lifetime and State of Charge for Lithium-Ion Battery. IEEE Trans. Transp. Electrif. 2021, 8, 356–367.

- Saha, B.; Goebel, K. NASA Ames Prognostics Data Repository; NASA Ames: Moffett Field, CA, USA, 2007. Available online: http://ti.arc.nasa.gov/project/prognostic-data-repository (accessed on 30 September 2021).

- Yang, F.; Song, X.; Xu, F.; Tsui, K.-L. State-of-Charge Estimation of Lithium-Ion Batteries via Long Short-Term Memory Network. IEEE Access 2019, 7, 53792–53799.

- Wan, E.A.; Van Der Merwe, R. The unscented Kalman filter for nonlinear estimation. In Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No. 00EX373), Lake Louise, AB, Canada, 4 October 2000; pp. 153–158.

- Yang, F.; Li, W.; Li, C.; Miao, Q. State-of-charge estimation of lithium-ion batteries based on gated recurrent neural network. Energy 2019, 175, 66–75.

- Hannan, M.A.; How, D.N.T.; Bin Mansor, M.; Lipu, M.S.H.; Ker, P.J.; Muttaqi, K.M. State-of-Charge Estimation of Li-ion Battery Using Gated Recurrent Unit with One-Cycle Learning Rate Policy. IEEE Trans. Ind. Appl. 2021, 57, 2964–2971.

- Javid, G.; Basset, M.; Abdeslam, D.O. Adaptive online gated recurrent unit for lithium-ion battery SOC estimation. In Proceedings of the IECON 2020 the 46th Annual Conference of the IEEE Industrial Electronics Society, Singapore, 18–21 October 2020; pp. 3583–3587.

- Xiao, B.; Liu, Y. Accurate State-of-Charge Estimation Approach for Lithium-Ion Batteries by Gated Recurrent Unit with Ensemble Optimizer. IEEE Access 2019, 7, 54192–54202.

- Yang, K.; Tang, Y.; Zhang, S.; Zhang, Z. A deep learning approach to state of charge estimation of lithium-ion batteries based on dual-stage attention mechanism. Energy 2022, 244, 123233.

- Song, X.; Yang, F.; Wang, D.; Tsui, K.-L. Combined CNN-LSTM Network for State-of-Charge Estimation of Lithium-Ion Batteries. IEEE Access 2019, 7, 88894–88902.

- Huang, Z.; Yang, F.; Xu, F.; Song, X.; Tsui, K.-L. Convolutional Gated Recurrent Unit–Recurrent Neural Network for State-of-Charge Estimation of Lithium-Ion Batteries. IEEE Access 2019, 7, 93139–93149.

- Chen, Z.; Zhao, H.; Shu, X.; Zhang, Y.; Shen, J.; Liu, Y. Synthetic state of charge estimation for lithium-ion batteries based on long short-term memory network modeling and adaptive H-Infinity filter. Energy 2021, 228, 120630.

- Yang, F.; Zhang, S.; Li, W.; Miao, Q. State-of-charge estimation of lithium-ion batteries using LSTM and UKF. Energy 2020, 201, 117664.

- Shu, X.; Li, G.; Zhang, Y.; Shen, S.; Chen, Z.; Liu, Y. Stage of Charge Estimation of Lithium-Ion Battery Packs Based on Improved Cubature Kalman Filter with Long Short-Term Memory Model. IEEE Trans. Transp. Electrif. 2020, 7, 1271–1284.

- Ni, Z.; Yang, Y.; Xiu, X. Battery State of Charge Estimation Using Long Short-Term Memory Network and Extended Kalman Filter. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; pp. 5778–5783.

- Bian, C.; Yang, S.; Miao, Q. Cross-Domain State-of-Charge Estimation of Li-Ion Batteries Based on Deep Transfer Neural Network with Multiscale Distribution Adaptation. IEEE Trans. Transp. Electrif. 2020, 7, 1260–1270.

- Liu, Y.; Li, J.; Zhang, G.; Hua, B.; Xiong, N. State of Charge Estimation of Lithium-Ion Batteries Based on Temporal Convolutional Network and Transfer Learning. IEEE Access 2021, 9, 34177–34187.

- Bengio, Y. Deep learning of representations for unsupervised and transfer learning. Proc. ICML Workshop Unsupervised Transf. Learn. 2012, 27, 17–36.

- Kollmeyer, P.; Skells, M. Turnigy Graphene 5000mAh 65C li-Ion Battery Data; Elsevier: Madison, WI, USA, 2020; Volume 1.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–13.

- Hannan, M.A.; How, D.N.T.; Lipu, M.S.H.; Mansor, M.; Ker, P.J.; Dong, Z.Y.; Sahari, K.S.M.; Tiong, S.K.; Muttaqi, K.M.; Mahlia, T.M.I.; et al. Deep learning approach towards accurate state of charge estimation for lithium-ion batteries using self-supervised transformer model. Sci. Rep. 2021, 11, 19541.

- Shen, H.; Zhou, X.; Wang, Z.; Wang, J. State of charge estimation for lithium-ion battery using Transformer with immersion and invariance adaptive observer. J. Energy Storage 2021, 45, 103768.
