Smartphones are increasingly used for purposes that have nothing to do with phone calls or simple data transfers. One example is the recognition of human activity, which is relevant to many applications in medical diagnosis, elderly assistance, indoor localization, and navigation. The information captured by the inertial sensors of the phone (accelerometer, gyroscope, and magnetometer) can be analyzed to determine the activity performed by the person carrying the device and, in particular, whether that person is walking. Nevertheless, developing a standalone application able to detect the walking activity using only the data provided by these inertial sensors is a complex task. The complexity lies in hardware disparity, noise in the data and, above all, the many movements that the smartphone can experience and that have nothing to do with the physical displacement of its owner. In this work, we explore and compare several approaches for identifying the walking activity. We categorize them into two main groups: the first uses features extracted from the inertial data, whereas the second analyzes the characteristic shape of the time series formed by the sensor readings. Because public datasets of smartphone inertial data for human activity recognition under unconstrained conditions are lacking, we collected data from 77 different people who were not connected to this research. Using this dataset, which we published online, we performed an extensive experimental validation and comparison of our proposals.

- walking recognition

- smartphones

- time series classification

Our society is increasingly surrounded by devices (smartphones, tablets, wearables, “things” from the Internet of Things (IoT), etc.) that are rapidly transforming us, changing the way we live and interact with each other. The gradual incorporation of new sensors into these devices provides significant opportunities for the development of applications that use these sensors in an ever-increasing number of domains: healthcare, sport, education, leisure, social interaction, etc. In particular, the inertial sensors of smartphones are being used to monitor human activity and, more specifically, the action of walking. Knowing whether the user is walking or not is very valuable for many applications, such as medicine (detection of certain pathologies) [[1]], biometric identification (recognition of the owner of the device based on his or her characteristic gait) [[2][3][4][5]], elderly assistance [[6]], emergency services [[7]], monitoring systems [[8]] and pedestrian indoor localization [[9][10][11]].

In the particular case of pedestrian indoor localization, recognizing the activity of walking using inertial sensors is essential, since other alternatives such as the Global Navigation Satellite System (GNSS) do not work indoors. Although other sensor modalities, such as infrared, ultrasound, magnetic field, WiFi or Bluetooth [[12][13][14]], have been used to detect the displacement of a person indoors, combining the information provided by these sensors with the recognition of walking based on the accelerometer, magnetometer and gyroscope, i.e., the inertial measurement unit (IMU), has proved to be the best option to significantly increase the performance of indoor localization.

Other sensors and processing strategies have also been applied to identify the activity of walking, such as cameras for visual odometry or pressure sensors attached to the shoes [[9]]. These approaches involve additional hardware attached to the body (foot, arm, trunk, etc. [[10][11][15]]), so that their processing is simpler and the outcome more reliable. However, the placement of sensors on the body or clothing greatly restricts their applicability. Instead, using the inertial sensors that the vast majority of smartphones already have (accelerometer, gyroscope and magnetometer) is much more attractive, since they impose fewer restrictions and most people already carry this kind of device.

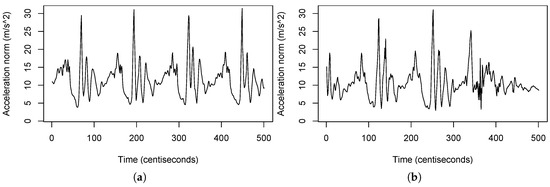

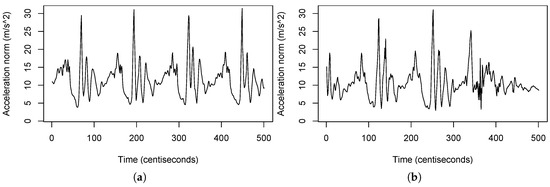

One of the biggest advantages of using the inertial sensors of the smartphone for walking recognition is that this kind of system requires very little physical infrastructure to function. Nevertheless, achieving a robust recognition system for this task is more complex than it might seem. It is relatively easy to detect the walking activity, and even to count the number of steps taken, when a person walks under ideal conditions, carrying the device in the palm of the hand, facing upwards and without moving it. However, the situation becomes more complicated in real life, since the orientation of the smartphone with respect to the user, as well as its placement (hand, pocket, ear, bag, etc.), can constantly change as the person moves [[16]]. Getting a robust classification regardless of the device carrying mode and its orientation is challenging. These devices experience a large variety of motions that produce different patterns in the signal. Frequently, we obtain similar patterns for movements or actions that have nothing to do with walking, which makes the recognition of this activity a complex task. Figure 1 illustrates the complexity of this problem with a real example. It shows the norm of the acceleration experienced by a mobile phone while its owner performs two different activities. The person and the device are the same in both cases. Figure 1a shows the signal obtained when the person is walking with the mobile in the pocket. The impacts of the feet touching the floor are reflected in the signal as local maxima. Identifying and counting these peaks, for example by applying a simple peak-valley algorithm [[17]], would make it easy to count the number of steps. Figure 1b shows the acceleration experienced by the mobile when the user is standing still with the mobile in his/her hand, without walking, but gesticulating with the arms in a natural way. Unfortunately, in this case, the peaks of the signal do not correspond to steps, so the aforementioned peak-valley algorithm would produce false positives.

Figure 1. Norm of the acceleration experienced by a mobile phone when its owner is walking (a), and not walking, but gesticulating with the mobile in his/her hand (b).
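The failure mode discussed around Figure 1 can be reproduced with a minimal sketch. The code below is not the peak-valley algorithm of [[17]]; it is a simplified stand-in that counts local maxima above a threshold. The sampling rate, amplitudes, frequencies, threshold and function names are all hypothetical, and the two signals are synthetic sinusoids rather than recorded data.

```python
import numpy as np

def acceleration_norm(acc):
    """Euclidean norm of each 3-axis accelerometer sample (array of shape n x 3)."""
    return np.linalg.norm(np.asarray(acc, dtype=float), axis=1)

def count_peaks(signal, threshold, min_distance):
    """Count local maxima above `threshold` that are at least
    `min_distance` samples after the previously accepted peak."""
    peaks = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]
        if is_local_max and signal[i] > threshold:
            if not peaks or i - peaks[-1] >= min_distance:
                peaks.append(i)
    return len(peaks)

# Synthetic example (assumed values, not measurements).
fs = 50                      # hypothetical sampling rate in Hz
t = np.arange(10 * fs) / fs  # 10 seconds of samples
g = 9.81                     # gravity, m/s^2
zeros = np.zeros_like(t)

# "Walking": two foot impacts per second, modelled as a 2 Hz oscillation.
walking_acc = np.column_stack([zeros, zeros, g + 3.0 * np.sin(2 * np.pi * 2.0 * t)])
# "Gesticulating": arm swings at 1.5 Hz while standing still (no steps).
gesture_acc = np.column_stack([zeros, zeros, g + 3.0 * np.sin(2 * np.pi * 1.5 * t)])

steps_walking = count_peaks(acceleration_norm(walking_acc), g + 1.5, fs // 4)
steps_gesturing = count_peaks(acceleration_norm(gesture_acc), g + 1.5, fs // 4)

print("peaks counted while walking:", steps_walking)
print("peaks counted while gesticulating:", steps_gesturing)
```

Both signals yield a non-zero count, although only the first contains steps. A counter that looks only at peaks in the norm cannot tell the two situations apart, which is why a classifier that first decides whether the user is walking is needed.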

In this work, we carried out an exhaustive analysis of the problem of walking recognition in mobile devices and developed different methodologies to solve it. We conducted an extensive review of the state of the art, and we explored and compared several approaches, based on different machine learning techniques, that allow a robust detection of the walking activity in mobile phones. We categorize these approaches into two main groups: (1) feature-based classification and (2) shape-based classification.
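To make the distinction between the two groups concrete, the sketch below contrasts them on a single window of the acceleration signal. It is only an illustration under stated assumptions: the three statistics used as features and the use of dynamic time warping (DTW) as the shape comparison are common choices picked here for the example, not necessarily the ones evaluated in this work, and all names and values are hypothetical.

```python
import numpy as np

def window_features(window):
    """Feature-based view: summarize a window with a few statistics
    (mean, standard deviation, peak-to-peak range) for a classifier."""
    window = np.asarray(window, dtype=float)
    return np.array([window.mean(), window.std(), window.max() - window.min()])

def dtw_distance(a, b):
    """Shape-based view: dynamic time warping distance between two series.
    Small values mean the series have a similar shape, even if one is
    slightly shifted or stretched in time."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i, j] = d + min(cost[i - 1, j],      # a[i-1] reuses b[j-1]
                                 cost[i, j - 1],      # b[j-1] reuses a[i-1]
                                 cost[i - 1, j - 1])  # one-to-one match
    return cost[n, m]

# Synthetic one-second windows at a hypothetical 50 Hz sampling rate.
t = np.arange(50) / 50
template = np.sin(2 * np.pi * 2.0 * t)           # reference "walking" shape
shifted = np.sin(2 * np.pi * 2.0 * (t - 0.04))   # same shape, slightly delayed
flat = np.zeros_like(t)                          # device at rest

features = window_features(shifted)              # input to a feature-based classifier
d_similar = dtw_distance(template, shifted)      # input to a shape-based comparison
d_different = dtw_distance(template, flat)
```

In the feature-based view, each window is reduced to a fixed-length vector that a standard classifier can consume. In the shape-based view, the raw series itself is compared against reference patterns, so `d_similar` comes out smaller than `d_different`.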

References

- Saibal Dutta; Amitava Chatterjee; Sugata Munshi; An automated hierarchical gait pattern identification tool employing cross-correlation-based feature extraction and recurrent neural network based classification. Expert Systems 2009, 26, 202-217, 10.1111/j.1468-0394.2009.00479.x.

- Hong Lu; Jonathan Huang; Tanwistha Saha; Lama Nachman; Unobtrusive gait verification for mobile phones. the 2014 ACM International Symposium 2014, 91-98, 10.1145/2634317.2642868.

- Yanzhi Ren; Yingying Chen; Mooi Choo Chuah; Jie Yang; User Verification Leveraging Gait Recognition For Smartphone Enabled Mobile Healthcare Systems. IEEE Transactions on Mobile Computing 2014, 14, 1-1, 10.1109/tmc.2014.2365185.

- Thiago Teixeira; Deokwoo Jung; Gershon Dublon; Andreas Savvides; PEM-ID: Identifying people by gait-matching using cameras and wearable accelerometers. 2009 Third ACM/IEEE International Conference on Distributed Smart Cameras (ICDSC) 2009, 1-8, 10.1109/icdsc.2009.5289412.

- Fernando E. Casado; Carlos V Regueiro; Roberto Iglesias; Xose M. Pardo; Eric López; Automatic Selection of User Samples for a Non-collaborative Face Verification System. Advances in Intelligent Systems and Computing 2017, 693, 555-566, 10.1007/978-3-319-70833-1_45.

- Zhu, C.; Sheng, W. Recognizing human daily activity using a single inertial sensor. In Proceedings of the 2010 8th World Congress on Intelligent Control and Automation (WCICA), Citeseer, Jinan, China, 7–9 July 2010; pp. 282–287.

- Alberto Olivares; Javier Ramirez; Juan-Manuel Gorriz; Gonzalo Olivares; Miguel Damas; Detection of (In)activity Periods in Human Body Motion Using Inertial Sensors: A Comparative Study. Sensors 2012, 12, 5791-5814, 10.3390/s120505791.

- Merryn J Mathie; Adelle Coster; Nigel Hamilton Lovell; Branko G. Celler; Accelerometry: providing an integrated, practical method for long-term, ambulatory monitoring of human movement. Physiological Measurement 2004, 25, R1-R20, 10.1088/0967-3334/25/2/r01.

- Robert Harle; A Survey of Indoor Inertial Positioning Systems for Pedestrians. IEEE Communications Surveys & Tutorials 2013, 15, 1281-1293, 10.1109/SURV.2012.121912.00075.

- Masakatsu Kourogi; Tomoya Ishikawa; Takeshi Kurata; A method of pedestrian dead reckoning using action recognition. IEEE/ION Position, Location and Navigation Symposium 2010, 85-89, 10.1109/plans.2010.5507239.

- Harshvardhan Vathsangam; Adar Emken; Donna Spruijt-Metz; Gaurav S. Sukhatme; Toward free-living walking speed estimation using Gaussian Process-based Regression with on-body accelerometers and gyroscopes. Proceedings of the 4th International ICST Conference on Pervasive Computing Technologies for Healthcare 2010, 10.4108/icst.pervasivehealth2010.8786.

- Jens Einsiedler; Ilja Radusch; Katinka Wolter; Vehicle indoor positioning: A survey. 2017 14th Workshop on Positioning, Navigation and Communications (WPNC) 2017, 1-6, 10.1109/wpnc.2017.8250068.

- Cliff Randell; Henk Muller; Low Cost Indoor Positioning System. Computer Vision 2001, 2201, 42-48, 10.1007/3-540-45427-6_5.

- Habib Mohammed Hussien; Yalemzewed Negash Shiferaw; Negassa Basha Teshale; Survey on Indoor Positioning Techniques and Systems. Simulation Tools and Techniques 2018, 46-55, 10.1007/978-3-319-95153-9_5.

- Steinhoff, U.; Schiele, B. Dead reckoning from the pocket-an experimental study. In Proceedings of the 2010 IEEE International Conference on Pervasive Computing and Communications (PerCom), Mannheim, Germany, 29 March–2 April 2010; pp. 162–170.

- Yang, J.; Lu, H.; Liu, Z.; Boda, P.P. Physical activity recognition with mobile phones: Challenges, methods, and applications. In Multimedia Interaction and Intelligent User Interfaces: Principles, Methods and Applications; Springer: London, UK, 2010; pp. 185–213.

- Li, F.; Zhao, C.; Ding, G.; Gong, J.; Liu, C.; Zhao, F. A reliable and accurate indoor localization method using phone inertial sensors. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing (UbiComp '12), Pittsburgh, PA, USA, 5–8 September 2012; pp. 421–430.