Artificial intelligence (AI), and more specifically machine learning, is one of the key contributing factors that have helped realize home energy management systems (HEMS) today. Reinforcement learning (RL) is a class of machine learning algorithms that is making deep inroads in energy management in smart homes. This machine learning paradigm allows an algorithmic entity, called an agent, to make sequences of decisions and implement actions from experience in the same manner as a human being. This article surveys the application of RL in smart home energy management. Applications have been divided into five broad categories, and the RL approach applied in each case has been investigated separately in terms of building types, objective functions, and algorithm classes.

- home energy management systems (HEMS)

- reinforcement learning (RL)

- deep neural network (DNN)

- Q-value

- policy gradient

- natural gradient

- actor–critic

- residential

- commercial

- academic

1. Introduction

2. Home Energy Management Systems

HEMS refers to a slew of automation techniques that can respond, without the need for human intervention, to continuously or periodically changing conditions inside the home or building, as well as to relevant external conditions. This section addresses the enabling technologies that make this an attainable goal.

2.1. Networking and Communication

All HEMS devices must be able to exchange data with each other using the same communication protocol. HEMS provides the occupants with tools to monitor, manage, and control all activities within the system. Advances in technology, specifically in IoT-enabled devices and wireless communication protocols such as ZigBee, Wi-Fi, and Z-Wave, have made HEMS feasible [54][55]. These smart devices are connected through a home area network (HAN) and/or to the internet, i.e., a wide area network (WAN). The choice of communication protocol for home automation is an open question and depends, to a large extent, on the user’s requirements. If the aim is to automate a small set of home appliances with easy, plug-and-play installation, Wi-Fi is the appropriate choice. However, for more extensive automation involving tens to hundreds of smart devices, Wi-Fi is no longer optimal: it suffers from scalability and signal interference issues and, more importantly, its relatively high energy consumption makes it unsuitable for battery-powered devices. Under these circumstances, ZigBee and Z-Wave are more appropriate [56]. These two protocols dominate today’s home automation market and share many features. Both use RF communication and offer two-way communication, and both enjoy well-established commercial relationships with various companies, with tens of hundreds of smart devices using one of them. Z-Wave is superior to ZigBee in terms of transmission range (120 m with three devices working as repeaters vs. 60 m with two devices working as repeaters) and again holds the advantage in inter-brand operability. However, ZigBee is more competitive in terms of data rate and the number of connected devices.

Z-Wave was created specifically for home automation, while ZigBee is used in a wider range of settings such as industry, research, health care, and home automation [57]. The study in [58] foresees that ZigBee is most likely to become the standard communication protocol for HEMS. However, given the many factors involved, it is difficult to tell with certainty whether this forecast will materialize, and an alternative communication protocol may yet emerge. HEMS requires this level of connectivity to access the electricity price from the smart grid through the smart meter and to control all of the system’s elements accordingly (e.g., turning the TV on or off, adjusting thermostat settings, and timing battery charging and discharging). In some scenarios, HEMS uses forecasted electricity prices to schedule shiftable loads (e.g., washing machine, dryer, electric vehicle charging) [54].

2.2. Sensors and Controller Platforms

HEMS consists of smart appliances equipped with sensors. These IoT-enabled devices communicate with the controller by sending and receiving data, and they use their built-in sensors to collect information from the environment and/or about their own electricity usage. The smart meter gathers information on the consumers’ total consumption across the appliances, the peak load period, and the electricity price from the smart grid. The controller can be a physical computer located on the premises that is capable of running complex algorithms. An alternative approach is to leverage the cloud services that cloud computing firms make available to consumers. The controller gathers information from the following sources: (i) the energy grid through the smart meter, which includes the power supply status and electricity price, (ii) the status of renewable energy and the energy storage systems, (iii) the electricity usage of each smart device at home, and (iv) the outside environment. It then processes all the data through a computational algorithm to determine a specific action for each device in the system [5].

2.3. Control Algorithms
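As a rough illustration of how a controller might turn the four input sources listed in Section 2.2 into one action per device, the following minimal sketch uses crisp if-then-else rules of the knowledge-based kind. It is not taken from any surveyed paper: the `Observation` fields, the `control_step` function, the price threshold, and the action names are all hypothetical choices made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    """One snapshot of the four input sources (all field names are illustrative)."""
    price_per_kwh: float      # (i) electricity price from the smart meter
    grid_available: bool      # (i) power supply status
    pv_output_kw: float       # (ii) renewable generation
    battery_soc: float        # (ii) storage state of charge, 0.0 to 1.0
    shiftable_load_kw: dict = field(default_factory=dict)  # (iii) per-device usage
    outdoor_temp_c: float = 20.0  # (iv) outside environment (unused by these simple rules)


def control_step(obs, price_threshold=0.20, min_soc=0.2):
    """Return one action per device from crisp if-then-else rules (assumed thresholds)."""
    surplus_kw = obs.pv_output_kw - sum(obs.shiftable_load_kw.values())
    expensive = obs.price_per_kwh > price_threshold
    actions = {}

    # Battery rule: store surplus solar; discharge when the grid is costly or down.
    if surplus_kw > 0 and obs.battery_soc < 1.0:
        actions["battery"] = "charge"
    elif (expensive or not obs.grid_available) and obs.battery_soc > min_soc:
        actions["battery"] = "discharge"
    else:
        actions["battery"] = "idle"

    # Shiftable-load rule: defer while grid power is expensive and solar cannot cover it.
    for name in obs.shiftable_load_kw:
        actions[name] = "defer" if expensive and surplus_kw <= 0 else "run"
    return actions
```

Because every threshold is fixed by hand, a controller of this kind cannot adapt to data, which is the limitation that motivates the learning-based methods.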

AI and machine learning methods are making deep inroads into HEMS [10][59]. The HEMS algorithms incorporated into the controller may take the form of simple knowledge-based systems. These approaches embody a set of if-then-else rules, which may be crisp or fuzzy. However, because they rely on a fixed set of rules, such methods may not be of much practical use in real-time controllers. Moreover, they cannot effectively leverage the large amount of data available today [5]. Although it is possible to impart a certain degree of trainability to fuzzy systems, the structural bottleneck of consolidating all inputs using only conjunctions (and) and disjunctions (or) persists. Numerical optimization constitutes another class of computational methods for the smart home controller. These methods entail an objective function that is to be either minimized (e.g., cost) or maximized (e.g., occupant comfort), together with a set of constraints imposed by the underlying physical HEMS appliances and their limitations. Owing to its simplicity, linear programming is a popular choice within this class of algorithms. More recently, game theoretic approaches have emerged as an alternative for various HEMS optimization problems [5]. In recent years, artificial intelligence and machine learning, and more specifically deep learning techniques, have become popular for HEMS applications. Deep learning takes advantage of all the available data to train a neural network that predicts outputs and controls the connected devices. It is particularly helpful for forecasting the weather, load, and electricity price. Furthermore, it handles non-linearities without resorting to explicit mathematical models. Since 2013, significant efforts have been directed at using deep neural networks within an RL framework [60][61], and these have met with much success.

3. Use of Reinforcement Learning in Home Energy Management Systems
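For readers new to RL, the following minimal sketch shows tabular Q-learning on a toy shiftable-load problem: an appliance cycle must run once within a short horizon, and the agent decides each hour whether to run now or wait. Everything here is an assumption made for illustration (the hourly prices, the `step` environment, the hyperparameters); it does not reproduce the method of any surveyed paper, most of which use deep neural networks in place of the table.

```python
import random

PRICES = [0.30, 0.25, 0.10, 0.20]  # hypothetical hourly prices, $/kWh
WAIT, RUN = 0, 1


def step(hour, action):
    """Toy environment: returns (reward, next_hour, done).
    The cycle is forced to run at the last hour if it has not run earlier."""
    last_hour = len(PRICES) - 1
    if action == RUN or hour == last_hour:
        return -PRICES[hour], hour, True   # reward is the negative energy price
    return 0.0, hour + 1, False


def train(episodes=2000, alpha=0.1, gamma=1.0, epsilon=0.2, seed=0):
    """Learn a Q-table q[hour][action] with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in PRICES]
    for _ in range(episodes):
        hour, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice((WAIT, RUN))
            else:
                action = max((WAIT, RUN), key=lambda a: q[hour][a])
            reward, nxt, done = step(hour, action)
            # Q-learning target: immediate reward plus best estimated future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[hour][action] += alpha * (target - q[hour][action])
            hour = nxt
    return q


def greedy_run_hour(q):
    """Follow the greedy policy and return the hour at which the cycle runs."""
    hour = 0
    while True:
        action = max((WAIT, RUN), key=lambda a: q[hour][a])
        _, nxt, done = step(hour, action)
        if done:
            return hour
        hour = nxt
```

With these toy prices, calling `greedy_run_hour(train())` should pick the cheapest slot (index 2), since waiting costs nothing and the agent learns that the value of waiting equals the best price still ahead.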

This section addresses aspects of the survey on the use of RL approaches for various HEMS applications. All articles covered in this survey appeared in established technical journals and were published or made available online within the past five years.

3.1. Application Classes

In this entry, all applications were divided into five classes as in Figure 1 below.

- (i)

-

Heating, Ventilation and Air Conditioning, Fans and Water Heaters: Heating, ventilation, and air conditioning (HVAC) systems alone are responsible for about half of the total electricity consumption [48][62][63][64][65]. In this survey, HVAC, fans and water heaters (WH) have been placed under a single category. Effective control of these loads is a major research topic in HEMS.

- (ii)

-

Electric Vehicles, Energy Storage, and Renewable Generation: The charging of electric vehicles (EVs) and energy storage (ES) devices, i.e., batteries are studied in the literature as in [66][67]. Wherever applicable, EV and ES must be charged in coordination with renewable generation (RG) such as solar panels and wind turbines. The aim is to make decisions in order to save energy costs, while addressing comfort and other consumer requirements. Thus, EV, ES, and RG have been placed under a single class for the purpose of this survey.

- (iii)

-

Other Loads: Suitable scheduling of several home appliances such as dishwasher, washing machine, etc., can be achieved through HEMS to save energy usage or cost. Lighting schedules are important in buildings with large occupancy. These loads have been lumped into a single class.

- (iv)

-

Demand Response: With the rapid proliferation of green energies into homes and buildings, and these sources merged into the grid, demand response (DR) has acquired much research significance in HEMS. DR programs help in load balancing, by scheduling and/or controlling shiftable loads and in incentivizing participants [68][69] to do so through HEMS. RL for DR is one of the classes in this survey.

- (v)

-

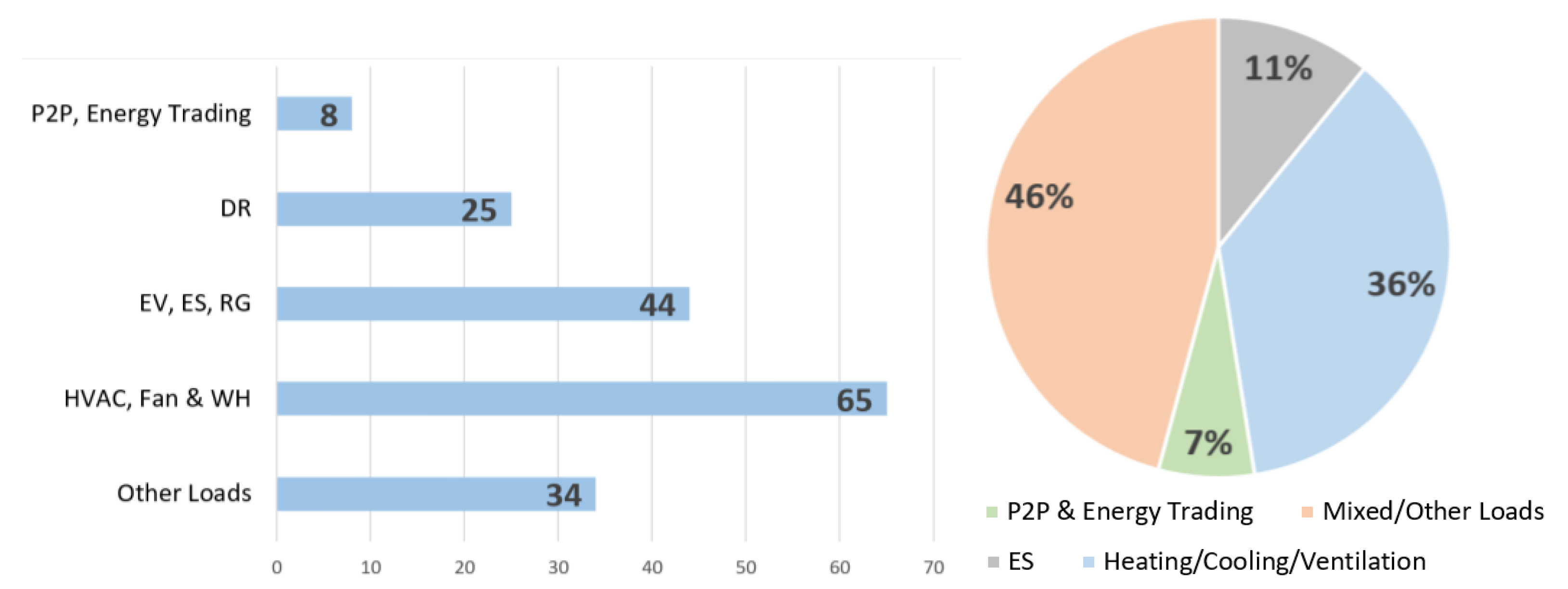

Peer-to-Peer Trading: Home energy management has been used to maximize the profit for the prosumers by trading the electricity with each other directly in peer-to-peer (P2P) trading or indirectly through a third party as in [70]. Currently, theoretical research on automated trading is receiving significant attention. P2P trading is the fifth and final application category to have been considered in this survey.Figure 3 shows the number of research articles that applied RL to each class. Note that a significant proportion of these papers addressed more than one class. More than third of the papers researchers reviewed focused only on HVAC, fans and water heaters. Just above 10% of the papers studied RL control for the energy storage (ES) systems. Only 7% of the papers focused on the energy trading. However, most of the papers (46%) are targeting more than one object. These results are shown in Figure 3.

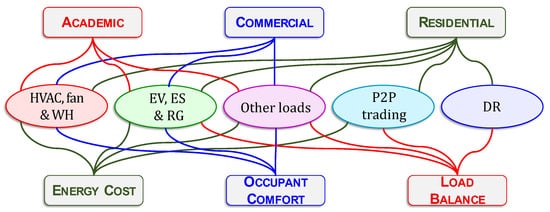

Figure 2. Building Types and Objectives. The building type and the RL’s objective of each application class. Note that the links are based on the existing literature covered in the survey. The absence of a link does not necessarily imply that the building type/objective cannot be used for the application class.

Figure 3. Application Classes. The total number of articles in each application class (left), as well as their corresponding proportions (right).

3.2. Objectives and Building Types

Within these HEMS applications, RL has been applied in several ways. It has been used to reduce energy consumption within residential units and buildings [71]. It has also been used to achieve a higher comfort level for the occupants [72]. In operations at the interface between the residential units and the energy grid, RL has been applied to maximize prosumers’ profit in energy trading as well as for load balancing. For the purposes of this survey, the objectives are broken down into three types, as listed below.

- (i) Energy Cost: the cost incurred by the consumer in using any electrical device; in most cases it is proportional to the device’s energy consumption. In this entry, the terms ‘cost’ and ‘consumption’ are used interchangeably.

- (ii) Occupant Comfort: the main factor affecting the occupant’s comfort is thermal comfort, which depends mainly on the room temperature and humidity.

- (iii) Load Balance: Power supply companies try to achieve load balance by reducing the power consumption of consumers at peak periods to match the station power supply. Consumers are motivated to participate in such programs by price incentives.
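The three objectives above are often combined into a single scalar reward for the RL agent. The sketch below shows one possible weighted-sum form; it is an assumption for illustration only, not the reward used in any specific surveyed paper. The function name, the weights, the comfort band, and the peak limit are all hypothetical.

```python
def reward(energy_kwh, price_per_kwh, indoor_temp_c, load_kw,
           setpoint_c=22.0, comfort_band_c=1.0, peak_limit_kw=5.0,
           w_cost=1.0, w_comfort=0.5, w_peak=0.5):
    """Negative weighted sum of the three penalty terms (higher is better)."""
    # (i) Energy cost: energy used in this time step times the current price.
    cost = energy_kwh * price_per_kwh
    # (ii) Occupant comfort: degrees by which the temperature leaves the comfort band.
    discomfort = max(0.0, abs(indoor_temp_c - setpoint_c) - comfort_band_c)
    # (iii) Load balance: power drawn above an assumed peak limit.
    peak_excess = max(0.0, load_kw - peak_limit_kw)
    return -(w_cost * cost + w_comfort * discomfort + w_peak * peak_excess)
```

For example, `reward(2.0, 0.25, 22.5, 3.0)` incurs only the energy-cost term, while a call with a temperature of 25 °C and a 6 kW load is additionally penalized for discomfort and for exceeding the peak limit. The relative weights encode the trade-off between the objectives and would need to be tuned per application.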

Building types are likewise grouped into three categories.

- (i) Residential: for the purpose of this survey, individual homes, residential communities, and apartment complexes fall under this type of building.

- (ii) Commercial: these buildings include offices, office complexes, shops, malls, hotels, and industrial buildings.

- (iii) Academic: academic buildings range from schools, university classrooms and buildings, and research laboratories up to entire campuses.

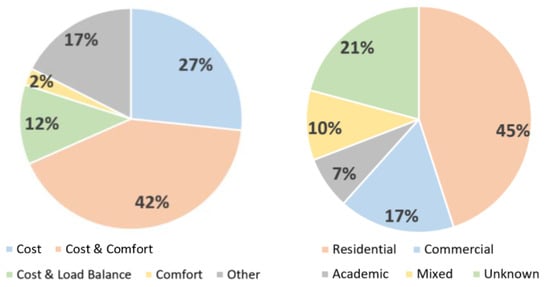

Figure 4. Objectives and Building Types. Proportions of articles in each objective (left) and building type (right).

3.3. Deployment, Multi-Agents, and Discretization

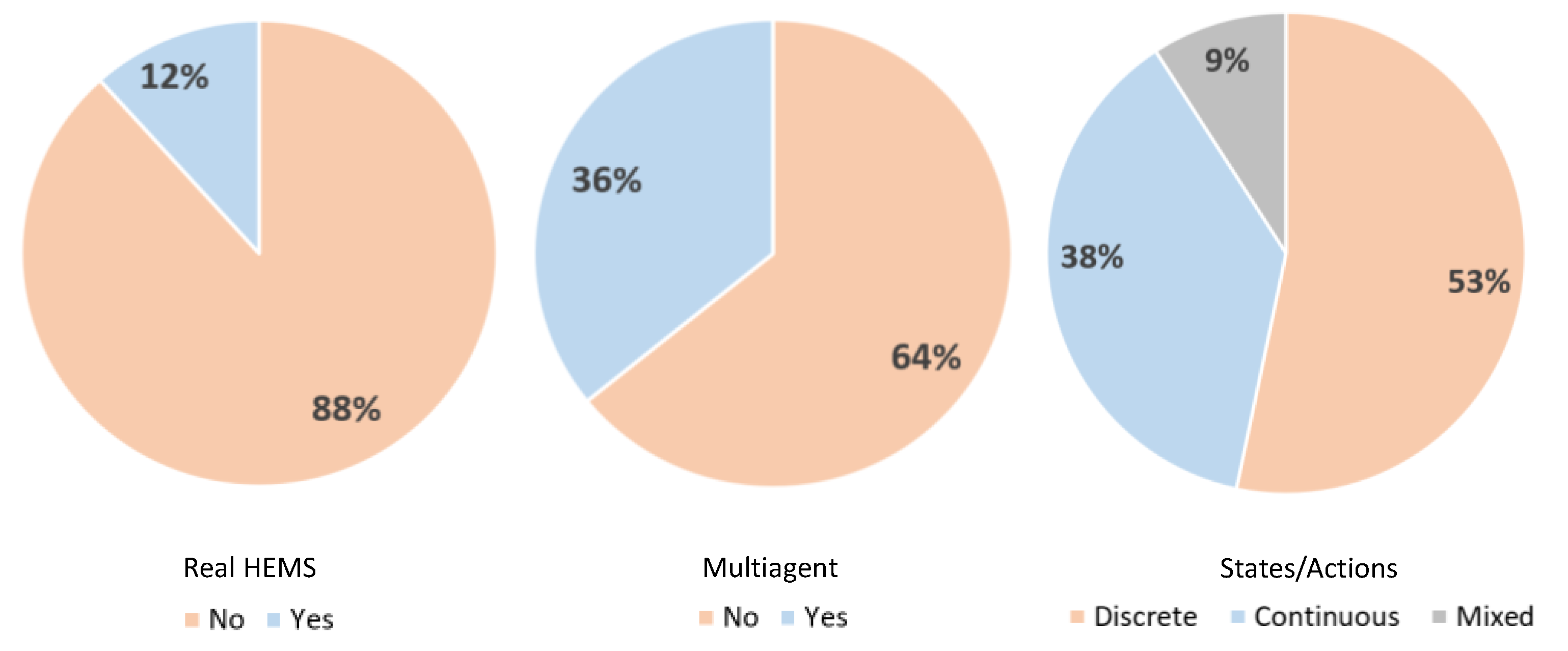

The proportion of research articles in which RL was actually deployed in the real world was also examined. Only 12% of the articles report results where RL was used with a real HEMS. This is consistent with an earlier survey [49], where the proportion was 11%. The results are shown in Figure 5.

Figure 5. Real-World, Multi-Agents, and Discretization. Proportions of articles deployed in real world HEMS (left), using multi-agents (middle), and whether the states/actions are discrete or continuous (right).
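To make the discrete-versus-continuous distinction concrete: tabular RL methods need a finite set of states, so a continuous measurement such as room temperature must first be mapped to a bin index, whereas methods based on neural networks can consume the raw value. The sketch below illustrates such a mapping; the bin edges and the function name are hypothetical.

```python
from bisect import bisect_right

TEMP_EDGES_C = [18.0, 20.0, 22.0, 24.0, 26.0]  # hypothetical bin edges in degrees Celsius


def discretize_temperature(temp_c):
    """Map a continuous temperature to a bin index in 0..len(TEMP_EDGES_C).

    Index 0 means below the first edge; the last index means at or above the last edge.
    """
    return bisect_right(TEMP_EDGES_C, temp_c)
```

Finer bins preserve more information but enlarge the state table, which is one reason continuous state and action spaces are usually handled with function approximation instead.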

References

- U.S. Energy Information Administration. Electricity Explained: Use of Electricity. 14 May 2021. Available online: www.eia.gov/energyexplained/electricity/use-of-electricity.php (accessed on 10 April 2022).

- Center for Sustainable Systems. U.S. Energy System Factsheet. Pub. No. CSS03-11; Center for Sustainable Systems, University of Michigan: Ann Arbor, MI, USA, 2021; Available online: https://css.umich.edu/publications/factsheets/energy/us-energy-system-factsheet (accessed on 10 April 2022).

- Shakeri, M.; Shayestegan, M.; Abunima, H.; Reza, S.S.; Akhtaruzzaman, M.; Alamoud, A.; Sopian, K.; Amin, N. An intelligent system architecture in home energy management systems (HEMS) for efficient demand response in smart grid. Energy Build. 2017, 138, 154–164.

- Leitão, J.; Gil, P.; Ribeiro, B.; Cardoso, A. A survey on home energy management. IEEE Access 2020, 8, 5699–5722.

- Shareef, H.; Ahmed, M.S.; Mohamed, A.; Al Hassan, E. Review on Home Energy Management System Considering Demand Responses, Smart Technologies, and Intelligent Controllers. IEEE Access 2018, 6, 24498–24509.

- Mahapatra, B.; Nayyar, A. Home energy management system (HEMS): Concept, architecture, infrastructure, challenges and energy management schemes. Energy Syst. 2019, 13, 643–669.

- Dileep, G. A survey on smart grid technologies and applications. Renew. Energy 2020, 146, 2589–2625.

- Zafar, U.; Bayhan, S.; Sanfilippo, A. Home energy management system concepts, configurations, and technologies for the smart grid. IEEE Access 2020, 8, 119271–119286.

- Alanne, K.; Sierla, S. An overview of machine learning applications for smart buildings. Sustain. Cities Soc. 2022, 76, 103445.

- Aguilar, J.; Garces-Jimenez, A.; R-Moreno, M.D.; García, R. A systematic literature review on the use of artificial intelligence in energy self-management in smart buildings. Renew. Sustain. Energy Rev. 2021, 151, 111530.

- Himeur, Y.; Ghanem, K.; Alsalemi, A.; Bensaali, F.; Amira, A. Artificial intelligence based anomaly detection of energy consumption in buildings: A review, current trends and new perspectives. Appl. Energy 2021, 287, 116601.

- Barto, A.G.; Sutton, R.S.; Anderson, C.W. Neuronlike elements that can solve difficult learning control problems. IEEE Trans. Syst. Man Cybern. 1983, 13, 835–846.

- Tesauro, G. TD-Gammon, a self-teaching backgammon program, achieves master-level play. Neural Comput. 1994, 6, 215–219.

- Peters, J.; Schaal, S. Reinforcement learning of motor skills with policy gradients. Neural Netw. 2008, 21, 682–697.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the game of Go without human knowledge. Nature 2017, 550, 354–359.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. A brief survey of deep reinforcement learning. IEEE Signal Process. Mag. 2017, 34, 26–38.

- François-Lavet, V.; Henderson, P.; Islam, R.; Bellemare, M.G.; Pineau, J. An introduction to deep reinforcement learning. Found. Trends Mach. Learn. 2018, 11, 219–354.

- Silver, D.; Singh, S.; Precup, D.; Sutton, R.S. Reward is enough. Artif. Intell. 2021, 299, 103535.

- Goertzel, B. Artificial General Intelligence; Pennachin, C., Ed.; Springer: New York, NY, USA, 2007; Volume 2.

- Zhang, T.; Mo, H. Reinforcement learning for robot research: A comprehensive review and open issues. Int. J. Adv. Robot. Syst. 2021, 18, 17298814211007305.

- Bhagat, S.; Banerjee, H.; Tse, Z.T.H.; Ren, H. Deep reinforcement learning for soft, flexible robots: Brief review with impending challenges. Robotics 2019, 8, 4.

- Lee, C.; An, D. AI-Based Posture Control Algorithm for a 7-DOF Robot Manipulator. Machines 2022, 10, 651.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A survey on civil applications and key research challenges. IEEE Access 2019, 7, 48572–48634.

- Zeng, F.; Wang, C.; Ge, S.S. A survey on visual navigation for artificial agents with deep reinforcement learning. IEEE Access 2020, 8, 135426–135442.

- Sun, H.; Zhang, W.; Yu, R.; Zhang, Y. Motion planning for mobile robots-focusing on deep reinforcement learning: A systematic review. IEEE Access 2021, 9, 69061–69081.

- Luong, N.C.; Hoang, D.T.; Gong, S.; Niyato, D.; Wang, P.; Liang, Y.-C.; Kim, D.I. Applications of deep reinforcement learning in communications and networking: A survey. IEEE Commun. Surv. Tutor. 2019, 21, 3133–3174.

- Zhang, G.; Li, Y.; Niu, Y.; Zhou, Q. Anti-jamming path selection method in a wireless communication network based on Dyna-Q. Electronics 2022, 11, 2397.

- Zhang, Y.; Zhu, J.; Wang, H.; Shen, X.; Wang, B.; Dong, Y. Deep reinforcement learning-based adaptive modulation for underwater acoustic communication with outdated channel state information. Remote Sens. 2022, 14, 3947.

- Ullah, Z.; Al-Turjman, F.; Mostarda, L. Cognition in UAV-aided 5G and beyond communications: A survey. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 872–891.

- Nguyen, T.T.; Reddi, V.J. Deep reinforcement learning for cyber security. arXiv 2019, arXiv:1906.05799.

- Alavizadeh, H.; Alavizadeh, H.; Jang-Jaccard, J. Deep Q-Learning Based Reinforcement Learning Approach for Network Intrusion Detection. Computers 2022, 11, 41.

- Jin, Z.; Zhang, S.; Hu, Y.; Zhang, Y.; Sun, C. Security state estimation for cyber-physical systems against DoS attacks via reinforcement learning and game theory. Actuators 2022, 11, 192.

- Zhu, H.; Cao, Y.; Wang, W.; Jiang, T.; Jin, S. Deep reinforcement learning for mobile edge caching: Review, new features, and open issues. IEEE Netw. 2018, 32, 50–57.

- Liu, Y.; Wu, F.; Lyu, C.; Li, S.; Ye, J.; Qu, X. Deep dispatching: A deep reinforcement learning approach for vehicle dispatching on online ride-hailing platform. Transp. Res. Part E Logist. Transp. Rev. 2022, 161, 102694.

- Liu, S.; See, K.C.; Ngiam, K.Y.; Celi, L.A.; Sun, X.; Feng, M. Reinforcement learning for clinical decision support in critical care: Comprehensive review. J. Med. Internet Res. 2020, 22, e18477.

- Elavarasan, D.; Vincent, P.M.D. Crop yield prediction using deep reinforcement learning model for sustainable agrarian applications. IEEE Access 2020, 8, 86886–86901.

- Garnier, P.; Viquerat, J.; Rabault, J.; Larcher, A.; Kuhnle, A.; Hachem, E. A review on deep reinforcement learning for fluid mechanics. Comput. Fluids 2021, 225, 104973.

- Cheng, L.-C.; Huang, Y.-H.; Hsieh, M.-H.; Wu, M.-E. A novel trading strategy framework based on reinforcement deep learning for financial market predictions. Mathematics 2021, 9, 3094.

- Kim, S.-H.; Park, D.-Y.; Lee, K.-H. Hybrid deep reinforcement learning for pairs trading. Appl. Sci. 2022, 12, 944.

- Zhu, T.; Zhu, W. Quantitative trading through random perturbation Q-network with nonlinear transaction costs. Stats 2022, 5, 546–560.

- Zhang, D.; Han, X.; Deng, C. Review on the research and practice of deep learning and reinforcement learning in smart grids. CSEE J. Power Energy Syst. 2018, 4, 362–370.

- Zhang, Z.; Zhang, D.; Qiu, R.C. Deep reinforcement learning for power system applications: An overview. CSEE J. Power Energy Syst. 2020, 6, 213–225.

- Jogunola, O.; Adebisi, B.; Ikpehai, A.; Popoola, S.I.; Gui, G.; Gacanin, H.; Ci, S. Consensus algorithms and deep reinforcement learning in energy market: A review. IEEE Internet Things J. 2021, 8, 4211–4227.

- Perera, A.T.D.; Kamalaruban, P. Applications of reinforcement learning in energy systems. Renew. Sustain. Energy Rev. 2021, 137, 110618.

- Chen, X.; Qu, G.; Tang, Y.; Low, S.; Li, N. Reinforcement learning for selective key applications in power systems: Recent advances and future challenges. IEEE Trans. Smart Grid 2022, 13, 2935–2958.

- Mason, K.; Grijalva, S. A review of reinforcement learning for autonomous building energy management. Comput. Electr. Eng. 2019, 78, 300–312.

- Wang, Z.; Hong, T. Reinforcement learning for building controls: The opportunities and challenges. Appl. Energy 2020, 269, 115036.

- Han, M.; May, R.; Zhang, X.; Wang, X.; Pan, S.; Yan, D.; Jin, Y.; Xu, L. A review of reinforcement learning methodologies for controlling occupant comfort in buildings. Sustain. Cities Soc. 2019, 51, 101748–101762.

- Yu, L.; Qin, S.; Zhang, M.; Shen, C.; Jiang, T.; Guan, X. A review of deep reinforcement learning for smart building energy management. IEEE Internet Things J. 2021, 8, 12046–12063.

- Zhang, H.; Seal, S.; Wu, D.; Bouffard, F.; Boulet, B. Building energy management with reinforcement learning and model predictive control: A survey. IEEE Access 2022, 10, 27853–27862.

- Vázquez-Canteli, J.R.; Nagy, Z. Reinforcement learning for demand response: A review of algorithms and modeling techniques. Appl. Energy 2019, 235, 1072–1089.

- Ali, H.O.; Ouassaid, M.; Maaroufi, M. Chapter 24: Optimal appliance management system with renewable energy integration for smart homes. Renew. Energy Syst. 2021, 533–552.

- Sharda, S.; Singh, M.; Sharma, K. Demand side management through load shifting in IoT based HEMS: Overview, challenges and opportunities. Sustain. Cities Soc. 2021, 65, 102517.

- Danbatta, S.J.; Varol, A. Comparison of Zigbee, Z-Wave, Wi-Fi, and Bluetooth wireless technologies used in home automation. In Proceedings of the 7th International Symposium on Digital Forensics and Security (ISDFS), Barcelos, Portugal, 10–12 June 2019; pp. 1–5.

- Withanage, C.; Ashok, R.; Yuen, C.; Otto, K. A comparison of the popular home automation technologies. In Proceedings of the 2014 IEEE Innovative Smart Grid Technologies - Asia (ISGT ASIA), Kuala Lumpur, Malaysia, 20–23 May 2014; pp. 600–605.

- Van de Kaa, G.; Stoccuto, S.; Calderón, C.V. A battle over smart standards: Compatibility, governance, and innovation in home energy management systems and smart meters in the Netherlands. Energy Res. Soc. Sci. 2021, 82, 102302.

- Rajasekhar, B.; Tushar, W.; Lork, C.; Zhou, Y.; Yuen, C.; Pindoriya, N.M.; Wood, K.L. A survey of computational intelligence techniques for air-conditioners energy management. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 4, 555–570.

- Huang, C.; Zhang, H.; Wang, L.; Luo, X.; Song, Y. Mixed deep reinforcement learning considering discrete-continuous hybrid action space for smart home energy Management. J. Mod. Power Syst. Clean Energy 2022, 10, 743–754.

- Yu, L.; Xie, W.; Xie, D.; Zou, Y.; Zhang, D.; Sun, Z.; Zhang, L.; Zhang, Y.; Jiang, T. Deep reinforcement learning for smart home energy management. IEEE Internet Things J. 2020, 7, 2751–2762.

- Esrafilian-Najafabadi, M.; Haghighat, F. Occupancy-based HVAC control systems in buildings: A state-of-the-art review. Build. Environ. 2021, 197, 107810.

- Jia, L.; Wei, S.; Liu, J. A review of optimization approaches for controlling water-cooled central cooling systems. Build. Environ. 2021, 203, 108100.

- Yu, L.; Sun, Y.; Xu, Z.; Shen, C.; Yue, D.; Jiang, T.; Guan, X. Multi-Agent Deep Reinforcement Learning for HVAC Control in Commercial Buildings. IEEE Trans. Smart Grid 2021, 12, 407–419.

- Noye, S.; Martinez, R.M.; Carnieletto, L.; de Carli, M.; Aguirre, A.C. A review of advanced ground source heat pump control: Artificial intelligence for autonomous and adaptive control. Renew. Sustain. Energy Rev. 2022, 153, 111685.

- Paraskevas, A.; Aletras, D.; Chrysopoulos, A.; Marinopoulos, A.; Doukas, D.I. Optimal Management for EV Charging Stations: A Win–Win Strategy for Different Stakeholders Using Constrained Deep Q-Learning. Energies 2022, 15, 2323.

- Ren, M.; Liu, X.; Yang, Z.; Zhang, J.; Guo, Y.; Jia, Y. A novel forecasting based scheduling method for household energy management system based on deep reinforcement learning. Sustain. Cities Soc. 2022, 76, 103207.

- Alfaverh, F.; Denaï, M.; Sun, Y. Demand Response Strategy Based on Reinforcement Learning and Fuzzy Reasoning for Home Energy Management. IEEE Access 2020, 8, 39310–39321.

- Antonopoulos, I.; Robu, V.; Couraud, B.; Kirli, D.; Norbu, S.; Kiprakis, A.; Flynn, D.; Elizondo-Gonzalez, S.; Wattam, S. Artificial intelligence and machine learning approaches to energy demand-side response: A systematic review. Renew. Sustain. Energy Rev. 2020, 130, 109899.

- Chen, T.; Su, W. Indirect Customer-to-Customer Energy Trading with Reinforcement Learning. IEEE Trans. Smart Grid 2019, 10, 4338–4348.

- Bourdeau, M.; Zhai, X.q.; Nefzaoui, E.; Guo, X.; Chatellier, P. Modeling and forecasting building energy consumption: A review of data-driven techniques. Sustain. Cities Soc. 2019, 48, 101533.

- Ma, N.; Aviv, D.; Guo, H.; Braham, W.W. Measuring the right factors: A review of variables and models for thermal comfort and indoor air quality. Renew. Sustain. Energy Rev. 2021, 135, 110436.