1. Introduction

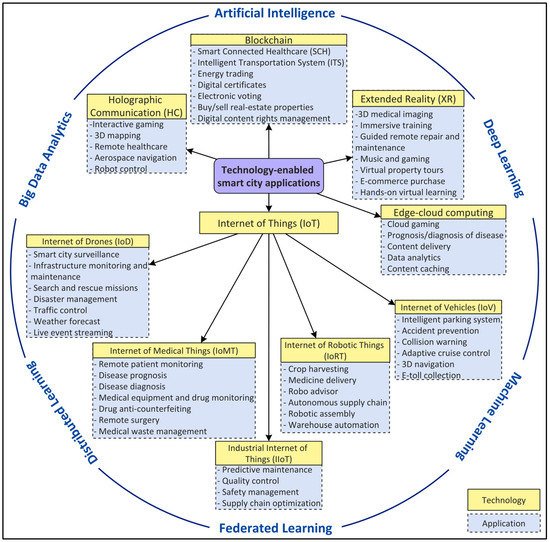

With the prominence of connected smart cities and the recent emergence of a smart city’s mobile applications and their building blocks architecture in terms of Internet of Things (IoT)

[1], Artificial Intelligence (AI)

[2], federated and distributed learning

[3], big data analytics

[4], blockchain

[5], and edge-cloud computing

[6], the implementation of a new generation of networks has been prompted. While optimization strategies at the application level along with a fast network, such as the currently in-deployment 5G networks, play an important role, this is not enough for AI-based distributed, dynamic, contextual, and secure smart city applications enabled by emergent technologies

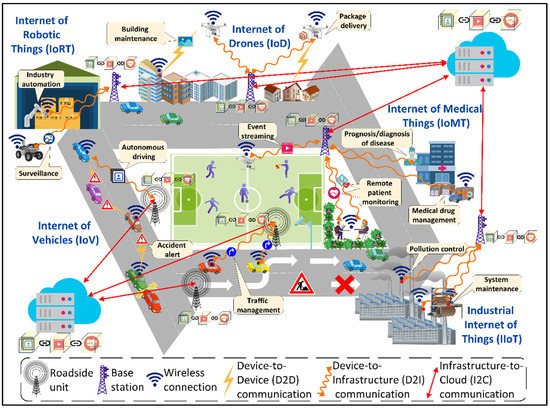

[7]. As shown in

Figure 1, these applications include, but are not limited to, autonomous driving, accident prevention and traffic management enabled by the Internet of Vehicles (IoV), remote patient monitoring, medical drug supply chain management, the prognosis/diagnosis of diseases empowered by the Internet of Medical Things (IoMT), industry automation and surveillance using the Internet of Robotic Things (IoRT), building maintenance and package delivery enabled by the Internet of Drones (IoD), system maintenance and pollution control enabled by the Industrial Internet of Things (IIoT), interactive gaming and aerospace navigation using Holographic Communication (HC), immersive training and guided repair enabled by Extended Reality (XR), intelligent transportation systems and smart connected healthcare using blockchain, and data analytics empowered by edge-cloud computing. These applications and their rigorous support for meeting requirements in terms of the quality of services (QoS) and dependability to satisfy the Service-Level-Agreement (SLA) for the end users

[8][9][10][11][8,9,10,11] have been a driving force for the evolution of networks.

Figure 1. A view of the smart city digital ecosystem.

The energy consumption of the smart cities’ digital ecosystem serving these applications is a major issue that causes environmental threats and increases electricity bills, requiring immediate sustainable remedies [12]. Estimates show that cloud data centers, considered the backbone of smart cities, will be responsible for 4.5% of the total global energy consumption by 2025 [13]. The average electricity cost for powering a data center could be as high as $3 million per year [14]. Furthermore, it is predicted that by 2040, Information and Communications Technology (ICT) will be responsible for 14% of global carbon emissions [15]. Consequently, the underlying communication networks should focus on supporting efficient, dependable, and secure smart city applications while considering the critical requirements of privacy, energy efficiency, high data rates, and ultra-low latencies for those applications.

2. Evolution of Wireless Communication Technology (1G–6G)

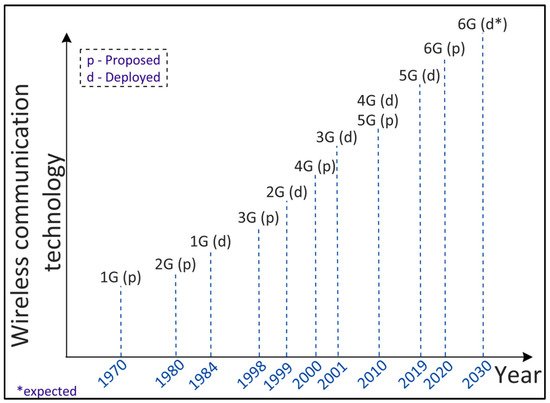

Wireless communication technology has evolved over the years to provide high-speed, reliable, and secure communication. Figure 2 shows the evolution of wireless network development from 1G to 6G, including the year in which each network generation was proposed and the year in which it was deployed. In the following, we explain each generation along with its applications and shortcomings.

Figure 2. Evolution of wireless communication technology from 1G to 6G.

2.1. First Generation (1G) Technology

The first generation (1G) communication system was introduced in 1978 in the United States based on the Advanced Mobile Phone System (AMPS) [16]. The AMPS is an analog cellular system allocated 50 MHz of bandwidth within the 824–894 MHz frequency range [17]. The bandwidth in the AMPS is divided into sub-channels of 30 kHz, and Frequency Division Multiple Access (FDMA) assigns these sub-channels to different users so that they can send data simultaneously. In 1979, 1G was commercially launched in Japan by the Nippon Telegraph and Telephone (NTT) DoCoMo Company. In 1981, the Nordic Mobile Telephone (NMT) standard for 1G was developed by Nordic countries such as Norway, Denmark, Finland, and Sweden, and was also adopted in Switzerland. In 1983, the AMPS was commercially launched in the United States and was later used in Australia. The Total Access Communication Systems (TACS) standard was introduced in the United Kingdom for 1G [18]. First generation technology supported voice calls with up to 2.4 Kbps of bandwidth within one country. However, the underlying technology could not handle international voice and conference calls or other applications such as messaging services, emails, and access to information over a mobile wireless network. In addition, being an analog system, 1G suffered from bad voice quality and poor handoff reliability. Furthermore, 1G offered little security. To overcome these shortcomings, the 2G network generation was introduced.
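The AMPS figures quoted above allow a quick back-of-the-envelope estimate of how many FDMA voice channels fit in the allocation. The following is a minimal sketch that assumes the 50 MHz is split evenly between uplink and downlink and that each call occupies one 30 kHz sub-channel in each direction; the function name and the even split are illustrative assumptions, not details taken from the cited sources.

```python
def fdma_channel_count(total_bandwidth_hz: int, channel_width_hz: int, duplex: bool = True) -> int:
    """Estimate the number of FDMA channels that fit in an allocation.

    If duplex is True, half of the bandwidth is assumed to carry the uplink
    and half the downlink, so a call needs one sub-channel in each half.
    """
    usable_hz = total_bandwidth_hz // 2 if duplex else total_bandwidth_hz
    return usable_hz // channel_width_hz


# Figures from the text: 50 MHz allocation, 30 kHz sub-channels.
amps_channels = fdma_channel_count(50_000_000, 30_000)
print(amps_channels)
```

Under these assumptions the division gives an upper bound on simultaneous calls per allocation; deployed systems typically reserve part of the spectrum for control and guard purposes, so the usable count is somewhat lower.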

2.2. Second Generation (2G) Technology

To enable applications such as international voice calls, messaging, and access to information over a wireless network, which require a high data transfer rate, and to make communication more secure, second-generation (2G) wireless technology was designed in the 1980s and introduced in 1991 in Finland under the Global System for Mobile Communications (GSM) standard [19]. The analog system of 1G was replaced by a digital system that enabled the encryption of voice calls and thus provided security. The GSM uses Time Division Multiple Access (TDMA), in which each network user is allocated the channel bandwidth in time slots [20]. The GSM operates in the 900 MHz and 1800 MHz frequency bands, except in America, where it operates in the 1900 MHz band. TDMA was later used by other digital standards such as the Digital AMPS (D-AMPS) in the United States and the Personal Digital Cellular (PDC) in Japan. As an alternative to TDMA, Code Division Multiple Access (CDMA) was introduced in the United States with the IS-95 standard [16], which allowed multiple network users to transmit data simultaneously using uniquely assigned code sequences. In addition to international roaming voice calls, 2G supported conference calls, a call hold facility, short message services (SMS), and multimedia message services (MMS) with a data rate of up to 9.6 Kbps.
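The idea behind CDMA, several users transmitting at the same time and being separated by their code sequences, can be illustrated with a toy example. The sketch below uses hypothetical length-4 Walsh (Hadamard) codes and a noise-free channel; it is not a model of IS-95 itself, whose code lengths and signal processing are not described in this text.

```python
import numpy as np

# Hypothetical length-4 Walsh codes; the rows are mutually orthogonal.
WALSH = np.array([
    [1,  1,  1,  1],
    [1, -1,  1, -1],
    [1,  1, -1, -1],
    [1, -1, -1,  1],
])


def spread(bits, code):
    """Map bits {0, 1} to symbols {-1, +1} and multiply each symbol by the code."""
    symbols = 2 * np.asarray(bits) - 1
    return np.kron(symbols, code)


def despread(signal, code):
    """Correlate each code-length chunk with the user's code and decide the bit."""
    chips = signal.reshape(-1, len(code))
    correlation = chips @ code
    return (correlation > 0).astype(int)


user_a_bits = [1, 0, 1]
user_b_bits = [0, 0, 1]

# Both users transmit simultaneously; the channel simply adds their signals.
channel = spread(user_a_bits, WALSH[1]) + spread(user_b_bits, WALSH[2])

recovered_a = despread(channel, WALSH[1])
recovered_b = despread(channel, WALSH[2])
print(recovered_a, recovered_b)
```

Because the codes are orthogonal, correlating the summed signal with one user's code cancels the other user's contribution, which is what lets each receiver recover its own bits.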

The continuous evolution of the GSM technology led to the development of the General Packet Radio Service (GPRS), referred to as 2.5G, which implemented packet switching in addition to circuit switching. GPRS has provided additional services such as Wireless Application Protocol (WAP) access and internet communication such as e-mail and World Wide Web (WWW) access [21]. It provides data rates of up to 115 Kbps [22]. GPRS further evolved to the Enhanced Data Rates for GSM Evolution (EDGE), providing higher data rates.

2.3. Third Generation (3G) Technology

The Third Generation Partnership Project (3GPP) was formed in 1998 to provide a standardized frequency across the globe for mobile networking, enabling high data rate services such as video calls, navigation, and interactive gaming. Third generation (3G) technology is based on the International Mobile Telecommunications (IMT)-2000 standard and was first made available in Japan by NTT DoCoMo in 2001. The IMT-2000 focused on providing a wider coverage area, improving the QoS, and making services available to users irrespective of their location [23]. The IMT-2000 required a minimum speed of 200 Kbps for a network to qualify as 3G. Third generation technology introduced wireless services such as video conferencing and video downloading with an increased data transmission rate at a lower cost. It increased the efficiency of the frequency spectrum by improving the audio compression during a call, allowing more simultaneous calls in the same frequency range. Third generation technology evolved between 2000 and 2010 to provide Universal Mobile Telecommunications System (UMTS)-based networks with higher data rates and capacities. In particular, High-Speed Downlink Packet Access (HSDPA) was deployed (also referred to as 3.5G), which is a packet-based data service providing downlink data rates of 8–10 Mbps. To provide services for applications that require high data rates such as interactive gaming, High-Speed Uplink Packet Access (HSUPA) was introduced (referred to as 3.75G), which enabled an uplink data transmission speed of 1.4–5.8 Mbps. However, IP telephony, 3D videos, and High Definition (HD) mobile TV were not supported by the 3G technology, leading to the foundation of the 4G network generation.

2.4. Fourth Generation (4G) Technology

To transmit data, voice, multimedia, and internet services at a higher rate, quality, and security at a low cost, the Fourth Generation (4G) was initiated in the late 2000s as an all-IP-based network system. Fourth Generation technology was first used commercially in Norway in 2009 after its successful field trial in Japan in 2005. It aimed to provide peak data rates of up to 1 Gbps at low mobility and 100 Mbps at high mobility and is based on Long-Term Evolution (LTE) and Worldwide Interoperability for Microwave Access (WiMAX) technologies. The LTE standard was further enhanced to LTE-Advanced Pro (referred to as 4.5G) to increase mobile broadband and connectivity performance [16]. However, 4G was not capable of operating applications that require image processing, such as machine vision, smart connected cars, and augmented reality, giving rise to the 5G network generation.

2.5. Fifth Generation (5G) Technology

To obtain a consistent QoS, low end-to-end latency, reduced cost, and massive device connectivity, the Fifth Generation (5G) communication technology was established to support applications such as Augmented Reality (AR), home and industrial automation, and machine vision. Fifth Generation technology was first offered in South Korea in 2019. It provides a data rate of 20 Gbps in the downlink and 10 Gbps in the uplink, and aims to support three generic services: Enhanced Mobile Broadband (eMBB), Massive Machine-type Communications (mMTC), and Ultra-Reliable Low-Latency Communications (URLLC) [24]. eMBB aims to deliver peak download speeds of over 10 Gbps to support applications such as Ultra-High Definition (UHD) videos and AR. mMTC defines the requirement to support one million low-powered economical devices per km² with a battery life of up to 10 years. It can support applications such as smart homes and industrial automation that involve several sensors, controllers, and actuators. URLLC sets the requirement of high reliability (99.99%), extremely low latencies (<1 ms), and support for low data rates (bps/Kbps) to support applications such as social messaging services, traffic lights, self-driving cars, and smart healthcare. In 2020, Huawei, as part of the 5.5G vision, proposed three additional sets of services: Uplink-centric Broadband Communication (UCBC), Real-Time Broadband Communication (RTBC), and Harmonized Communication and Sensing (HCS) [25]. UCBC aims to increase the uplink bandwidth 10-fold to support applications involving machine vision. For AR, Virtual Reality (VR), and Extended Reality (XR) applications, RTBC would provision large bandwidth and low latency services with a certain level of reliability. HCS focuses on offering communication and sensing functionalities for connected cars and drone scenarios. However, with the emergence of an interactive and connected IoT, communicating IoV, and holographic applications, the 5G networks could not manage the stringent computing and communication requirements of those applications. Consequently, the 6G network generation was instigated.

2.6. Sixth Generation (6G) Technology

The Sixth Generation (6G) technology was envisioned in 2019–2020 to transform the “Internet of Everything” into an “Intelligent Internet of Everything” with more stringent requirements in terms of a high data rate, high energy efficiency, massive low-latency control, high reliability, connected intelligence with Machine Learning (ML) and Deep Learning (DL), and very broad frequency bands [26]. Three new application services are proposed: Computation Oriented Communications (COC), Contextually Agile eMBB Communications (CAeC), and Event-Defined uRLLC (EDuRLLC) [26]. COC will enable the flexible selection of resources from the rate-latency-reliability space depending on the available communication resources to achieve a certain level of computational accuracy for learning approaches. CAeC will provision eMBB services that adapt to the network congestion, traffic, topology, users’ mobility, and social networking context. 6G-EDuRLLC targets the 5G-URLLC applications that will operate in emergency or extreme situations with spatial-temporal device densities, traffic patterns, and infrastructure availability.

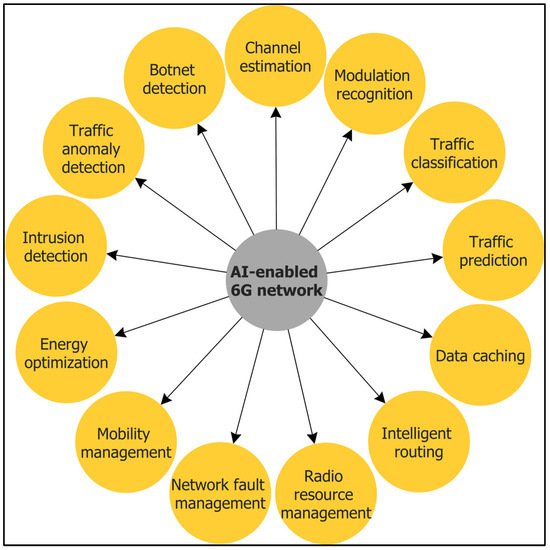

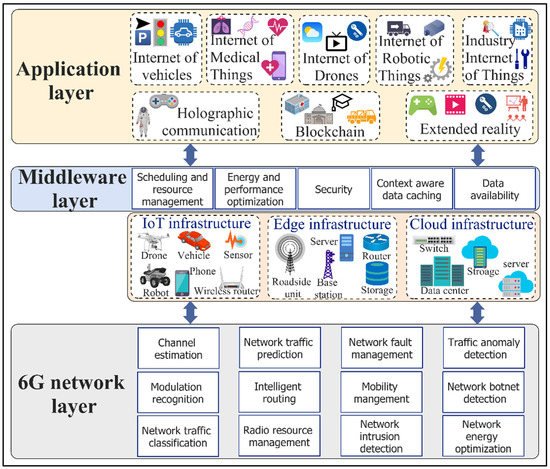

3. Artificial Intelligence (AI)-Enabled 6G Networks

The disruptive emergence of highly distributed smart city mobile applications [27][28][29], such as the IoV, IoMT, IoD, IoRT, IIoT, and 3D virtual reality, together with their stringent QoS requirements and the need for service providers to satisfy SLAs, has been a driving force for 6G. In addition, many of those applications are driven by AI and big data, making it challenging if not impossible for 5G to satisfy those requirements. Therefore, 6G must provide battery-free device capabilities, very high data rates, a very high energy efficiency, massive low-latency control, very broad frequency bands, and ubiquitous broadband-global network coverage beyond what 5G LTE can offer. To achieve that level of efficiency, in contrast to 5G, 6G needs to be equipped with context-aware algorithms to optimize its architecture, protocols, and operations. For this purpose, 6G will infuse connected intelligence in its design by integrating communication, computing, and storage infrastructure from the edges to the cloud and core infrastructure. Supporting a wide range of applications that are demanding in terms of low latency, high reliability, security, and execution time requires an AI-enabled optimization for 6G [26]. Traditional approaches using statistical analysis based on prior knowledge and experiences via the deployment of the Software Defined Network (SDN) [26] will no longer be effective due to the time that elapses between analysis and decision making. Consequently, ML and DL algorithms are used to solve several issues in networking, such as caching and data offloading [30]. In this section, we present AI-enabled 6G network protocols and mechanisms (Figure 3) and the self-learning ML/DL models they employ.

Figure 3. Artificial Intelligence (AI)-enabled 6G networks.

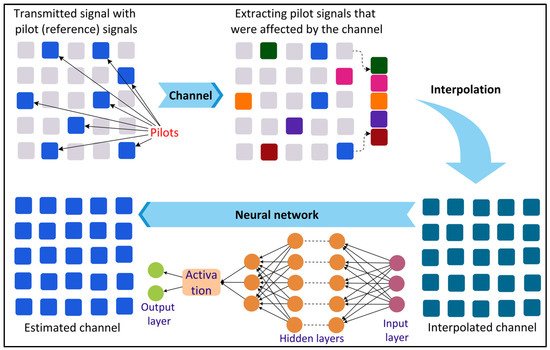

3.1. Channel Estimation

To fulfill the demanding requirements of smart city applications in terms of high data rate (Tbps), low latency (order of 0.1–1 ms), and high reliability (order of 10⁻⁹) [31], the 6G radio access will be enabled by emerging technologies such as Terahertz communication [32], visible-light communication, ultra-massive multiple-input multiple-output (MIMO) [33], and large intelligent surfaces [34]. These technologies will increase the complexity of the radio communication channels, making efficient channel estimation challenging using the traditional mathematical approaches. The wireless communication channel attenuates the transmitted signal, shifts its phase, and adds noise to it. In this context, channel estimation can be defined as the process of estimating the characteristics of the communication channel so that the transmitted information can be recovered despite the channel effect. To increase the performance and capacity of 6G communication, precise and real-time channel estimation becomes crucial. Recently, DL has gained wide attention for precise channel estimation. Figure 4 shows a DL-based channel estimation process where the signal is first transmitted along with some pilot (reference) signals. The effects of the channel on the pilot signals are then extracted. The channel characteristics are then estimated by a DL method using the interpolated channel.

Figure 4. Deep Learning (DL)-based channel estimation.
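The pilot-based front end of the process described above can be sketched with NumPy. This is a minimal illustration, assuming a single-antenna OFDM symbol with 64 subcarriers, known pilot symbols, a least-squares estimate at the pilot positions, and linear interpolation in between; all names and parameter values are hypothetical. The DL stage that would refine the interpolated channel is deliberately left out.

```python
import numpy as np


def ls_pilot_estimate(received, transmitted, pilot_idx):
    """Least-squares channel estimate at the pilot subcarriers (Y / X)."""
    return received[pilot_idx] / transmitted[pilot_idx]


def interpolate_channel(pilot_estimates, pilot_idx, n_subcarriers):
    """Linearly interpolate real and imaginary parts over all subcarriers."""
    k = np.arange(n_subcarriers)
    real = np.interp(k, pilot_idx, pilot_estimates.real)
    imag = np.interp(k, pilot_idx, pilot_estimates.imag)
    return real + 1j * imag


rng = np.random.default_rng(0)
n_subcarriers = 64
# Assumed pilot layout: every 8th subcarrier plus the last one.
pilot_idx = np.append(np.arange(0, n_subcarriers, 8), n_subcarriers - 1)

# Synthetic 3-tap channel and its frequency response (illustrative only).
taps = rng.normal(size=3) + 1j * rng.normal(size=3)
true_channel = np.fft.fft(taps, n_subcarriers)

# For simplicity every subcarrier carries the known symbol 1 + 0j.
transmitted = np.ones(n_subcarriers, dtype=complex)
noise = 0.01 * (rng.normal(size=n_subcarriers) + 1j * rng.normal(size=n_subcarriers))
received = true_channel * transmitted + noise

pilot_estimates = ls_pilot_estimate(received, transmitted, pilot_idx)
channel_estimate = interpolate_channel(pilot_estimates, pilot_idx, n_subcarriers)

# In a DL-based estimator, channel_estimate would be the input to a trained network.
print(np.mean(np.abs(channel_estimate - true_channel) ** 2))
```

The interpolated estimate is exact only at the pilot positions; the error between pilots is what a learned model would be trained to reduce.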

Ye et al. [35] proposed a Deep Neural Network (DNN)-based approach for channel estimation and symbol detection in an Orthogonal Frequency Division Multiplexing (OFDM) system. The DNN model is trained offline using OFDM samples generated using different information sequences under distinct channel conditions. The model is then used to recover the transmitted information without explicitly estimating the channel characteristics. Gao et al. [36] proposed a Convolutional Neural Network (CNN)-based channel estimation framework for massive MIMO systems. The authors used one-dimensional convolution to shape the input data. Each convolutional block consists of a batch normalization layer to avoid gradient explosions [37] and a Rectified Linear Unit (ReLU) activation function.

3.2. Modulation Recognition

2.2. Modulation Recognition

With the increasing data traffic in smart cities, different modulation methods are employed in a communication system for efficient and effective data transmission by modulating the transmitted signal. In this context, modulation recognition aims to identify the modulation information of the signals under a noisy interference environment

[38][44]. Modulation recognition aids in signal demodulation and decoding for applications such as interference identification, spectrum monitoring, cognitive radio, threat assessment, and signal recognition. The conventional decision-theory-based and statistical-pattern-recognition-based methods for modulation recognition become computationally expensive and time consuming for smart city applications

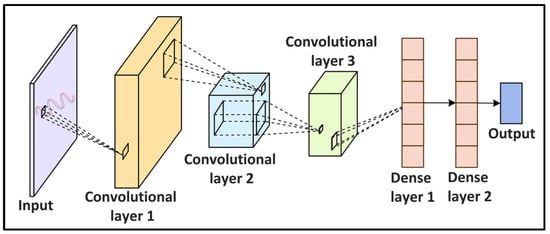

[38][44]. DL can be used as an alternative to improve the accuracy and efficiency of modulation recognition as shown in

Figure 5. Zhang et al.

[38][44] investigated the applicability of a CNN and Long Short-Term Memory (LSTM) for modulation recognition as the former is good for the automatic feature extraction of spatial data and the latter performs well for sequential data. Yang et al.

[39][45] proposed the use of CNN and Recurrent Neural Networks (RNNs) for modulation recognition over additive white Gaussian noise and Rayleigh fading channels. The

resea

rcheuthors found that DL algorithms perform modulation recognition more accurately compared to ML algorithms such as the Support Vector Machine (SVM). To ensure the privacy and security of the transmitted data, Shi et al.

[40][46] proposed a CNN-based federated learning approach with differential privacy for modulation recognition.

Figure 5. Convolutional Neural Network (CNN)-based modulation recognition in networking.

3.3. Traffic Classification

2.3. Traffic Classification

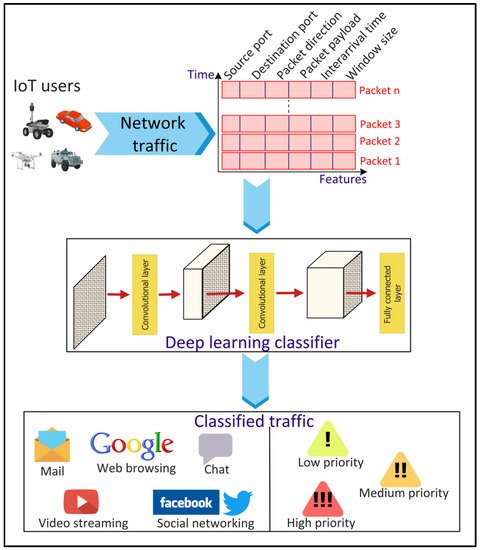

The categorization of network traffic into different classes, referred to as traffic classification, is important to ensure the QoS, control pricing, resource management, and security of smart city applications. The simplest method for traffic classification involves mapping the applications’ traffic to port numbers

[41][47]. However, this technique provides an inaccurate classification since several applications use dynamic port numbers. Payload-based methods are alternatives to the port-based techniques where the traffic is classified by examining the packet payload

[41][47]. However, the traffic payload cannot be accessed in scenarios where the packets are encrypted due to privacy and security concerns. Consequently, ML/DL-based methods can be used to address the issues of the conventional methods (

Figure 6). Ren, Gu, and Wei

[42][48] proposed a Tree-RNN to classify network traffic into 12 different classes. The proposed DL model consists of a tree structure that divides the large classification problem into smaller ones, with each class represented by a tree node. Lopez-Martin et al.

[43][49] proposed a hybrid RNN- and CNN-based network to classify traffic from IoT devices and services. CNN layers extract complex network traffic features automatically from the input data, eliminating the feature selection process used in the classical ML approaches.

Figure 6. Deep Learning (DL)-based network traffic classification for Internet of Things (IoT) applications.
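The port-based baseline discussed above, and its main weakness, can be shown in a few lines. The sketch below maps a handful of well-known destination ports to application labels; the table is a small illustrative subset and the function name is hypothetical.

```python
# Small illustrative subset of well-known destination ports.
WELL_KNOWN_PORTS = {
    22: "ssh",
    25: "smtp",
    53: "dns",
    80: "http",
    443: "https",
}


def classify_by_port(dst_port: int) -> str:
    """Return the application label for a destination port, or 'unknown'."""
    return WELL_KNOWN_PORTS.get(dst_port, "unknown")


# The last flow uses a dynamically chosen port, so the lookup cannot label it.
flows = [443, 53, 51413]
labels = [classify_by_port(port) for port in flows]
print(labels)
```

Any application that picks its port at run time falls into the "unknown" bucket, which is the gap that payload-based and ML/DL-based classifiers aim to close.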

3.4. Traffic Prediction

2.4. Traffic Prediction

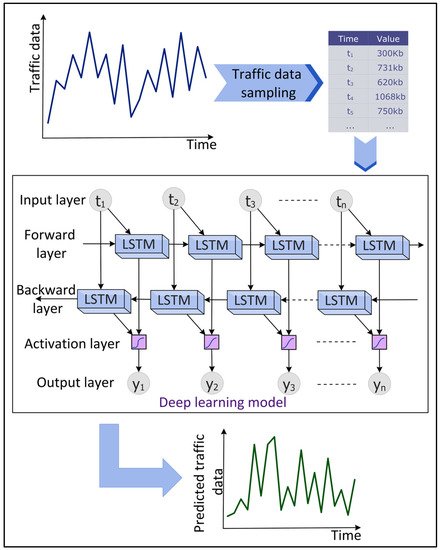

Network traffic predictions focus on predicting future traffic using previous traffic data. This aids in proactively managing the network and computing resources, improving the QoS, making the network operations cost-effective, and detecting anomalies in the data traffic. DL has shown potential in predicting network traffic accurately in real time.

Figure 7 shows an overview of the DL-based predictions of the network traffic data. Vinayakumar et al.

[44][50] evaluated the performance of different RNNs, namely the simple RNN, Long Short-Term Memory (LSTM), Gated Recurrent Unit (GRU), Identity Recurrent Unit (IRNN), and Feed-forward Neural Network (FNN) to predict network traffic using a GEANT backbone networks dataset. The experimental results showed that LSTM predicts the network traffic with the least MSE. Aloraifan, Ahmad, and Alrashed

[45][51] used Bi-directional LSTM (Bi-LSTM) and Bi-directional GRU (Bi-GRU) to predict the network traffic matrix. To increase the prediction accuracy, the

resea

rcheuthors combined a CNN with Bi-LSTM or Bi-GRU. The

researcheauthors found that the prediction performance of DL algorithms depends on the configuration of the neural network parameters.

Figure 7. Deep Learning (DL)-based time series prediction of network traffic data.
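Predicting future traffic from previous traffic is usually framed as supervised learning over sliding windows, with the MSE as the score. The sketch below shows that framing on a made-up traffic series and evaluates a naive persistence baseline (the forecast equals the last observed value); the series, the window length, and the function names are illustrative assumptions, and a recurrent model such as an LSTM would take the place of the baseline.

```python
import numpy as np


def make_windows(series, window):
    """Turn a 1-D series into (inputs, targets) pairs for one-step-ahead prediction."""
    series = np.asarray(series, dtype=float)
    inputs = np.stack([series[i:i + window] for i in range(len(series) - window)])
    targets = series[window:]
    return inputs, targets


def mse(predictions, targets):
    """Mean squared error between predictions and targets."""
    return float(np.mean((np.asarray(predictions) - np.asarray(targets)) ** 2))


# Made-up traffic volumes per time step (arbitrary units).
traffic = [10, 12, 15, 14, 18, 21, 19, 23]
X, y = make_windows(traffic, window=3)

# Persistence baseline: predict that the next value equals the last observed one.
persistence_forecast = X[:, -1]
baseline_mse = mse(persistence_forecast, y)
print(X.shape, baseline_mse)
```

A learned model is only useful if its MSE on held-out windows is lower than that of such a trivial baseline.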

3.5. Data Caching

2.5. Data Caching

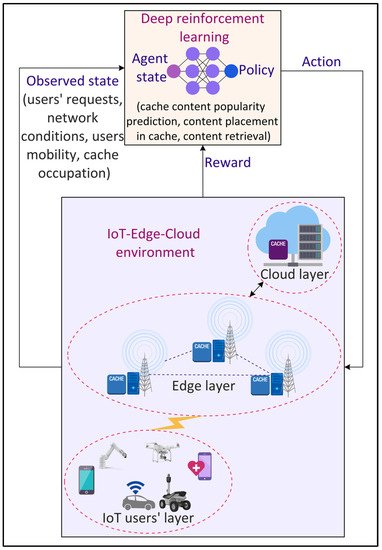

Internet data traffic is exploding at a rapid pace with the increasing popularity and demand of different smart city applications such as infotainment, AV, VR, interactive gaming, and XR. Consequently, it becomes challenging to accommodate these data in terms of storage and transmission for applications that require an ultra-low latency such as autonomous vehicles and smart healthcare. To address this challenge, edge caching

[46][52] is seen to be a potential solution that provides storage facilities to the IoT data at the edge of the network, i.e., in proximity to the mobile devices. This enables IoT applications to retrieve data in real time from the edge resources, eliminating backhaul link communication. Consequently, edge caching reduces data transmission time and energy. However, determining the optimal cache content and cache placement strategies in a dynamic network is challenging

[47][53]. DL can be effective in designing optimal caching strategies, as shown in

Figure 8. Jiang et al.

[48][54] proposed a distributed deep Q-learning-based caching mechanism to improve the edge caching efficiency in terms of cache hit rate. The mechanism involves the prediction of users’ preferences offline followed by the online prediction of content popularity. However, due to the limited caching resources of the edge nodes and spatial-temporal content demands from the mobile users, cooperative edge caching schemes are required. Zhang et al.

[49][55] proposed a DRL-based cooperative edge caching approach that enables the communication between distributed edge servers to enlarge the size of the cache data. However, cooperative schemes often collect and analyze the data at a centralized server. The sharing of sensitive data, such as users’ preferences and content popularity, among different edge and cloud servers, raises privacy concerns. To tackle this challenge, federated learning (FL) can be a promising solution in which learning models to predict content popularity are trained locally at the IoT devices for cooperative caching

[49][55].

Figure 8. Deep Reinforcement Learning (DRL)-based data caching in the Internet of Things (IoT) environment.
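The cache hit rate used to evaluate caching strategies is simple to compute once a cache content policy is fixed. The sketch below is not the deep Q-learning mechanism from the cited work; as a stand-in, it assumes content popularity is estimated by counting past requests, fills a small edge cache with the most requested items, and measures the hit rate on later requests. All names and data are hypothetical.

```python
from collections import Counter


def most_popular(history, capacity):
    """Pick the `capacity` most frequently requested items as cache content."""
    return {item for item, _ in Counter(history).most_common(capacity)}


def cache_hit_rate(cache, requests):
    """Fraction of requests that can be served from the cache."""
    if not requests:
        return 0.0
    hits = sum(1 for item in requests if item in cache)
    return hits / len(requests)


# Past requests observed at an edge node (made-up content identifiers).
history = ["a", "b", "a", "c", "a", "b", "d"]
cache = most_popular(history, capacity=2)

# Later requests; "e" was never seen before and therefore misses.
future_requests = ["a", "b", "e", "a"]
hit_rate = cache_hit_rate(cache, future_requests)
print(cache, hit_rate)
```

A learning-based policy replaces the frequency count with a popularity prediction, but it is scored with the same hit-rate measure.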

3.6. Intelligent Routing

2.6. Intelligent Routing

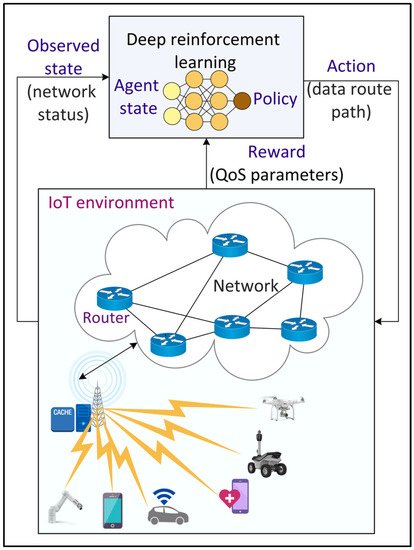

To manage the network traffic efficiently and to fulfill the QoS requirements of 6G applications, several routing strategies have been developed. However, the traditional routing protocols developed using meta-heuristic approaches become computationally expensive with increasing traffic variability. Consequently, ML and DL-based approaches have been proposed to address the shortcomings of the traditional methods.

Figure 9 shows an example of DL-based intelligent routing in an IoT environment. Tang et al.

[50][56] proposed a real-time deep CNN-based routing algorithm in a wireless mesh network backbone. A CNN model with two convolutional layers and two fully connected layers is trained periodically using the continuous stream of network data. Liu et al.

[51][57] proposed a DRL-based routing in software-defined data center networks by recombining network resources (such as cache and bandwidth) based on their effectiveness in reducing delay and then using DRL for routing with the recombined state. The employed DNN model within DRL consists of two fully connected hidden layers with 30 neurons each. The CNN model in the actor and critic networks of DRL consists of two max pooling layers, three convolutional layers with eight filters, and one fully connected layer with 30 neurons. A Relu activation function is employed in all the layers.

Figure 9. Deep Reinforcement Learning (DRL)-based intelligent routing in the Internet of Things (IoT) environment.

3.7. Radio Resource Management

In future 6G networks, the density of small-cell networks will increase drastically. Consequently, radio resource management has emerged for the system-level management of co-channel interference, radio resources, and other radio transmission characteristics in a wireless communication system to utilize the radio spectrum efficiently. With the increasing dynamicity and complexity of network generations towards 6G, the traditional heuristic-based approaches for radio resource management become inaccurate. ML/DL-based approaches are explored as an alternative solution. Shen et al. [52] proposed graph neural networks for radio resource management in a large-scale network by modeling the wireless network as a wireless channel graph and then formulating the resource management as a graph optimization problem. The neural network consisted of three layers and was trained with an Adam optimizer and a learning rate of 0.001. Zhang et al. [53] proposed a DNN framework for radio resource management to minimize the energy consumption of the network constrained by power limitation, interference limitation, and the QoS. A DNN model with three layers, 800 neurons per layer, a 0.01 learning rate, and an Adam optimizer is employed for a power optimization scheme, whereas a DNN model with four layers, 80 neurons per layer, a 0.05 learning rate, and an RMSProp optimizer is used for sub-channel allocation.

3.8. Network Fault Management

In network management, fault management aims to detect, predict, and eliminate malfunctions in the communication network. The integration of newly emerging technologies and paradigms in 6G networks makes the network more complex, heterogeneous, and dynamic. Consequently, fault management becomes more challenging in 6G networks. ML/DL approaches have been studied recently for efficient fault management. Regin, Rajest, and Singh [54] proposed a Naïve Bayes and CNN-based algorithm for fault detection over a wireless sensor network in a distributed manner. The results show that the proposed approach accurately detects faults and is energy efficient. Regarding fault diagnosis, the authors of [55] implemented an FNN for fault detection and classification in wireless sensor networks. The DL model was tuned using a hybrid gravitational search and particle swarm optimization algorithm. Kumar et al. [56] studied the feasibility of ML and DL approaches for fault prediction on a cellular network. The results showed that a DNN with an autoencoder (AE) predicts the network fault with the highest accuracy compared to autoregressive neural networks and the SVM.

3.9. Mobility Management

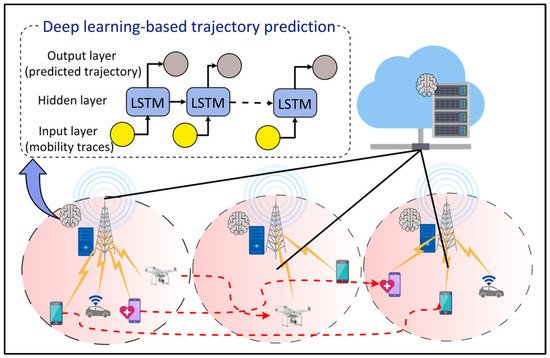

2.9. Mobility Management

Sixth Generation networks will serve a spectrum of mobile applications such as the IoV, IoRT, and IoMT that require low latency and highly reliable services. To guarantee the QoS for these applications while improving the resource utilization and network bottleneck, it becomes crucial to learn and predict users’ movements. DL-based approaches can be an alternative solution, as shown in

Figure 10. Zhao et al.

[57][63] proposed a mobile user trajectory prediction algorithm by combining LSTM with RL. LSTM is used to predict the trajectories of mobile users, whereas RL is used to improve the model training time of LSTM by finding the most accurate neural network architecture for the given problem without human intervention. An initial learning rate of 0.002 is selected for LSTM and a Q-learning rate and discount factor of 0.001 and 1 are used for RL. Klus et al.

[58][64] proposed ANN models for cell-level and beam-level mobility management optimization in the wireless network. The results showed that DL-based approaches outperform the conventional 3GPP approach for mobility management.

Figure 10. Deep Learning (DL)-based mobility management for Internet of Things (IoT) devices and users.
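To make the quoted Q-learning hyperparameters concrete, the sketch below applies one standard tabular Q-learning update with a learning rate of 0.001 and a discount factor of 1. The state and action spaces, the reward, and the function name are hypothetical placeholders; the cited work uses RL to search over neural network architectures, which is not reproduced here.

```python
import numpy as np


def q_update(q_table, state, action, reward, next_state, alpha=0.001, gamma=1.0):
    """Apply one tabular Q-learning update in place and return the table.

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    td_target = reward + gamma * np.max(q_table[next_state])
    q_table[state, action] += alpha * (td_target - q_table[state, action])
    return q_table


# Hypothetical problem with 3 states and 2 actions, all values initialised to 0.
q = np.zeros((3, 2))
q = q_update(q, state=0, action=1, reward=1.0, next_state=2)
print(q[0, 1])
```

With such a small learning rate each experience moves the estimate only slightly, and a discount factor of 1 means future rewards count as much as immediate ones.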

3.10. Energy Optimization

With 6G networks providing efficient connectivity to a wide range of IoT applications, the number of IoT devices is expected to increase dramatically. The data transfer, storage, and analysis from these devices will increase the energy consumption of the network. Recently, ML/DL approaches have shown potential for saving energy in wireless networks. Wei et al. [59] proposed actor-critic RL for scheduling users’ requests and allocating resources in heterogeneous cellular networks to minimize the energy consumption of the overall network. Continuous stochastic actions are generated by the actor part using a Gaussian distribution. The critic part estimates the performance of the policy and aids the actor in learning the gradient of the policy using compatible function approximation. Kong and Panaitopol [60] proposed an online RL algorithm to dynamically activate and deactivate the resources at the base station depending on the network traffic. The online RL algorithm eliminates the need for a separate model training process.
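The actor step described above, sampling a continuous action from a Gaussian and nudging the policy using feedback from the critic, can be sketched as follows. This is a simplified illustration with a scalar action and a fixed standard deviation; the critic is replaced by a hypothetical scalar feedback value, and the compatible function approximation used in the cited work is not implemented.

```python
import numpy as np


def sample_action(mean, std, rng):
    """Draw a continuous stochastic action from a Gaussian policy."""
    return rng.normal(mean, std)


def log_prob_grad_mean(action, mean, std):
    """Gradient of log N(action | mean, std^2) with respect to the mean."""
    return (action - mean) / std ** 2


rng = np.random.default_rng(0)
mean, std = 0.5, 0.2           # hypothetical policy parameters
action = sample_action(mean, std, rng)

critic_feedback = 1.0          # hypothetical critic signal (e.g., a TD error)
step_size = 0.01

# Policy-gradient step on the mean: move towards actions the critic rated well.
new_mean = mean + step_size * critic_feedback * log_prob_grad_mean(action, mean, std)
print(action, new_mean)
```

With positive feedback the mean shifts towards the sampled action; with negative feedback it shifts away from it.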

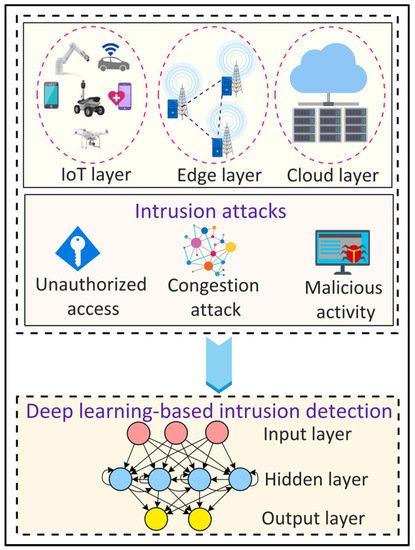

3.11. Intrusion Detection

The evolving smart city applications running on the underlying 6G networks require high reliability and high security. In this context, intrusion detection can be used to identify unauthorized access and malicious activities in smart city applications. Figure 11 shows a DL-based approach for intrusion detection in the IoT environment. Sharifi et al. [61] proposed an intrusion detection system using a combined K-Nearest Neighbor (KNN) and K-means algorithm. The proposed system employs principal component analysis for feature extraction and then uses a K-means algorithm to cluster the data. The clustered data are then classified using KNN. Yin et al. [62] proposed an RNN-based approach for binary and multi-class intrusion detection. For binary class intrusion detection, an RNN model with 80 hidden nodes and a learning rate of 0.1 provides the highest accuracy. For multi-class intrusion detection, an RNN model with 80 hidden nodes and a 0.5 learning rate yields the highest accuracy. The results show that DL approaches are better than ML approaches for intrusion detection.

Figure 11.

Deep Learning (DL)-based intrusion detection in Internet of Things (IoT) environments.

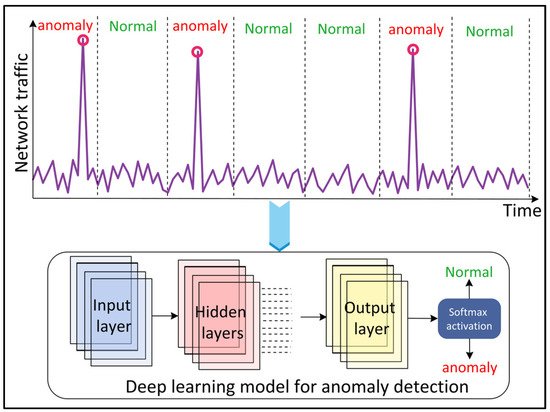

3.12. Traffic Anomaly Detection

Network traffic anomalies refer to unusual changes in the traffic such as a transient change in users’ requests, port scans, and flash crowds. The detection of such anomalies is important for the security of the network and reliable services. DL approaches have recently gained popularity for traffic anomaly detection in complex, dynamic, and heterogeneous wireless networks (

Figure 12). Kim and Cho

[63][69] proposed a C-LSTM neural network to model the spatial-temporal traffic data information and to detect an anomaly. The CNN layer in the model reduces the variation in the information, the LSTM layer models the temporal information, and the DNN layer is used to map the data onto a separable space. The tanh activation function is employed in all the layers except the LSTM output layer, which uses softmax activation. Naseer et al.

[64][70] evaluated the performance of ML and DL models for anomaly detection. The authors implemented extreme learning machine, nearest neighbor, decision tree, random forest, SVM, Naïve Bayes, quadratic discriminant analysis, Multilayer Perceptron (MLP), LSTM, RNN, AE, and CNN models. The results showed that DCNN and LSTM detect anomalies with the highest accuracy.

Figure 12.

Deep Learning (DL)-based network traffic anomaly detection.

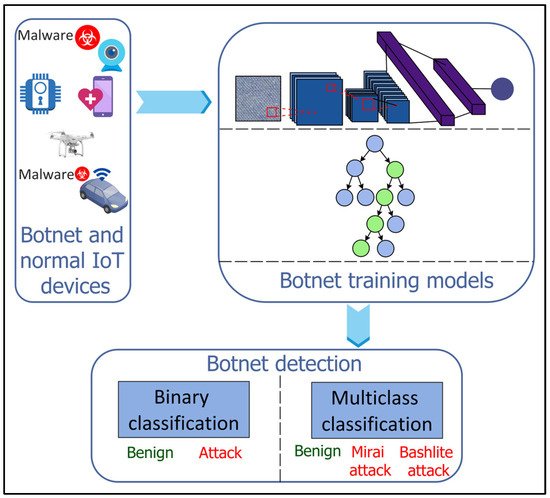

3.13. Botnet Detection

The ever-growing IoT network in smart cities suffers from botnet attacks, in which a large number of IoT devices are infected by malware to execute repetitive and malicious activities and launch cyber-attacks such as Denial of Service (DoS), Distributed DoS (DDoS), or data theft against critical smart city infrastructure

[65][66][71,72]. For efficient and reliable botnet detection, ML and DL approaches have been proposed as potential solutions in the literature (

Figure 13). Injadat et al.

[67][73] proposed a combined Bayesian Optimization Gaussian Process (BO-GP) algorithm and Decision Tree (DT) classifier for detecting botnet attacks on the IoT devices. Popoola et al.

[68][74] proposed a DL-based botnet detection system for resource-constrained IoT devices. The dimensionality of the large volume of network traffic data is reduced using an LSTM autoencoder (LAE) with a ReLU activation function and a learning rate of 0.001. A deep Bi-LSTM model, with six input neurons, four dense hidden layers, and an output layer, is then used for botnet detection on the low-dimensional feature set. A ReLU activation function is employed in the input and hidden layers, whereas sigmoid and softmax activation functions are used at the output layer for binary and multiclass classification, respectively.
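To make the role of the three activation functions mentioned above concrete, the following sketch implements them with the Python standard library only. It does not reproduce the model of Popoola et al.; the example logits are invented, and the function names are assumptions of this illustration.

```python
import math


def relu(x):
    """ReLU: passes positive values through and clips negatives to zero."""
    return max(0.0, x)


def sigmoid(x):
    """Sigmoid: maps a single logit to a probability, suitable for a binary output."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(logits):
    """Softmax: maps a vector of logits to class probabilities that sum to one."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Binary case (botnet vs. benign): one output neuron with sigmoid.
binary_probability = sigmoid(1.5)

# Multiclass case (hypothetical attack types): one output neuron per class with softmax.
class_probabilities = softmax([2.0, 1.0, 0.1])

print(binary_probability)
print(class_probabilities)
```

The sigmoid output can be thresholded to obtain a binary decision, whereas the softmax output is typically read by taking the class with the largest probability.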

Figure 13.

Machine Learning (ML)- and Deep Learning (DL)-based botnet detection for Internet of Things (IoT).

4. Taxonomy of Technology-Enabled Smart City Applications in 6G Networks

In this section, we present a taxonomy of smart city applications for next-generation 6G networks, as shown in Figure 14. We base the taxonomy on the underlying technologies, i.e., IoT, HC, blockchain, XR, and edge-cloud computing, used by those applications empowered by AI, ML/DL, federated and distributed learning, and big data analytics paradigms. In the following, we describe the technologies along with the requirements, in terms of the network characteristics, of the applications using them.

Figure 14.

Taxonomy of smart city applications in 6G based on underlying technologies.

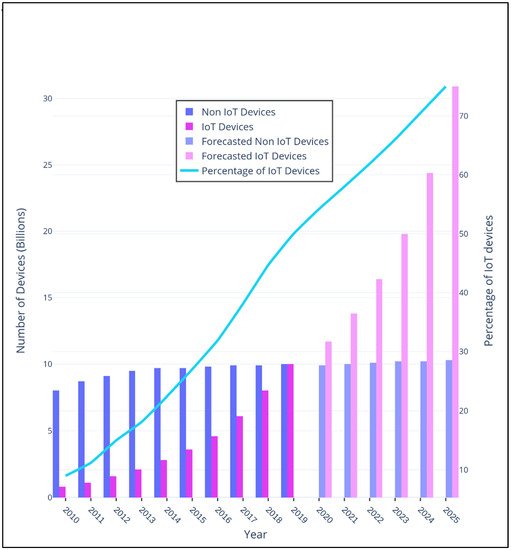

4.1. Internet of Things (IoT)

The IoT is a network of connected devices, sensors, and users using internet technologies that can self-organize, sense and collect data, analyze the stored information, and react to the dynamic environment

[69][75]. The number of connected devices is expected to reach more than 30 billion by 2025 which will be more than 70% of the non-IoT devices.

Figure 15 shows the growth of IoT and non-IoT devices over the years. The IoT can be further classified based on its application domains such as the IoV, IoMT, IoRT, IoD, and IIoT.

Figure 15.

Growth of Internet of Things (IoT) and non-IoT devices from 2010 to 2025.

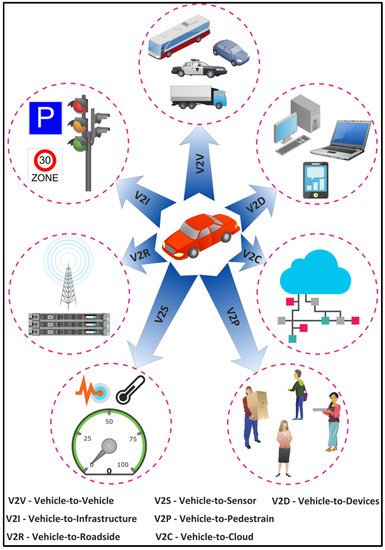

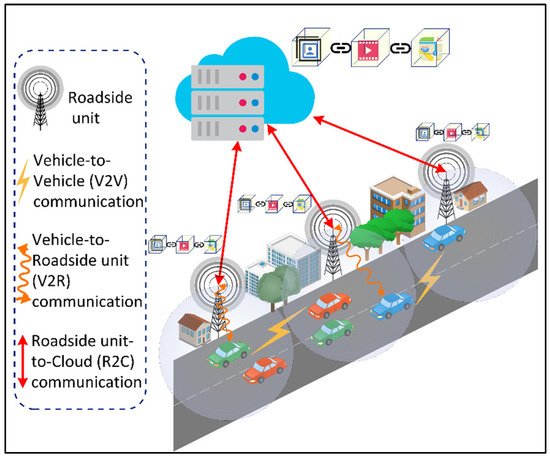

4.1.1. Internet of Vehicles (IoV)

The IoV is a distributed network of mobile vehicles that have sensing, computing, and Internet Protocol (IP)-based communication capabilities

[70][76]. The global IoV market is projected to reach $208,107 million by 2024 from $66,075 million in 2017, with a CAGR of 18% between 2018 and 2024

[71][77]. The IoV network interconnects vehicles with pedestrians and urban infrastructure facilities such as the cloud and Roadside Units (RSUs). The IoV includes six types of communications for vehicles to receive and transmit data as shown in

Figure 16: (1) Vehicle-to-Vehicle (V2V), (2) Vehicle-to-Infrastructure (V2I), (3) Vehicle-to-Roadside (V2R), (4) Vehicle-to-Sensors (V2S), (5) Vehicle-to-Cloud (V2C), and (6) Vehicle-to-Pedestrian (V2P). Several vehicular applications have been developed for the IoV such as an intelligent parking system, real-time navigation, traffic and accident alert, facial recognition for autonomous driving, cooperative adaptive cruise control, and traffic signal violations. These applications have strict data rate and latency requirements that should be supported by the underlying 6G networks. For instance, autonomous driving involving multiple sensors may require a total data rate of 1 Gbps for V2V and V2X communications

[72][78]. Furthermore, it requires a reliability of 99.999%

[73][79], which cannot be obtained with the existing wireless communication systems. In addition, vehicular applications such as infotainment, e-toll collection, collision warning, autocruise, AR map navigation, and co-operative stability control have stringent latency requirements of 500 ms, 200 ms, 100 ms, 20 ms, 5 ms, and 1 ms, respectively

[74][80]. The 6G networks should consider the issues of the limited spectrum, high latency, and low reliability prevailing in the current vehicular standards, i.e., IEEE 802.11p

[75][81].
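The latency requirements listed above lend themselves to a simple lookup. The sketch below encodes the six values quoted in the text and reports which vehicular applications a given end-to-end latency could support; the dictionary and function names are assumptions of this example, and the check ignores data rate and reliability.

```python
# Latency requirements in milliseconds, as quoted in the text above.
LATENCY_REQUIREMENT_MS = {
    "infotainment": 500,
    "e-toll collection": 200,
    "collision warning": 100,
    "autocruise": 20,
    "AR map navigation": 5,
    "co-operative stability control": 1,
}


def supported_applications(network_latency_ms):
    """Return the applications whose latency requirement the network latency satisfies."""
    return [
        app
        for app, limit_ms in LATENCY_REQUIREMENT_MS.items()
        if network_latency_ms <= limit_ms
    ]


# A hypothetical 10 ms end-to-end latency covers the four least demanding applications.
print(supported_applications(10))
```

Under this reading, only a network delivering an end-to-end latency of 1 ms or less would support all six applications.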

Figure 16.

Types of communications on the Internet of Vehicles (IoV).

4.1.2. Internet of Medical Things (IoMT)

The IoMT is a distributed network of bio-medical sensors and devices that acquire, process, and transmit the bio-medical signals of patients. It integrates the communication protocol of the IoT with medical devices to enable remote patient monitoring and treatment. Its global market is expected to reach $172.4 billion by 2030 from $39.3 billion in 2020, at a CAGR of 15.9% from 2021 to 2030

[76][82]. The IoMT has several applications, such as the monitoring of patients with chronic diseases, monitoring of elderly people, disease prognosis and diagnosis, medical equipment and drug monitoring, drug anti-counterfeiting, and medical waste management. In the context of a pandemic such as COVID-19, the IoMT can be used for the detection, tracking, and monitoring of infected individuals and the prediction of infections

[77][83]. The IoMT applications require ultra-low latency and high reliability for scenarios such as remote surgery. The tactile and haptic internet is the backbone for such scenarios, whose requirements are not completely fulfilled by the current wireless systems

[78][84]. The tactile internet requires an end-to-end latency of the order of 1 ms and haptic feedback requires a latency of sub-milliseconds

[79][80][85,86].
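The market projections quoted in this section rely on the compound annual growth rate (CAGR). The sketch below applies the standard CAGR formula to the IoMT figures given above ($39.3 billion in 2020 and $172.4 billion in 2030); the function names and the choice of ten compounding periods are assumptions of this example.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value reached after compounding a yearly growth rate."""
    return start_value * (1 + rate) ** years


# IoMT market figures quoted in the text, in billions of US dollars.
iomt_rate = cagr(39.3, 172.4, 10)
print(f"Implied CAGR: {iomt_rate:.1%}")
print(f"Projection at 15.9% over 10 years: {project(39.3, 0.159, 10):.1f}")
```

With ten compounding periods, the implied rate is close to the 15.9% stated in the text.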

4.1.3. Internet of Robotic Things (IoRT)

The IoRT is a distributed network of intelligent robot devices that can monitor events, integrate sensors’ data from multiple heterogeneous sources, and use local/distributed intelligence to take actions

[81][87]. The IoRT market is expected to reach $1.44 billion by 2022, growing at a CAGR of 29.7% from 2016 to 2022

[82][88]. The IoRT has applications in several domains such as agriculture, construction, logistics, transportation, banking, healthcare, home automation, and industrial automation

[83][89]. Robotics and automation require control in real time to avoid oscillatory movements, with a maximum tolerable latency of 100 µs and round-trip times of 1 ms

[84][17]. Moreover, industrial robotic automation requires a reliability of 99.999%

[73][79].

4.1.4. Internet of Drones (IoD)

The IoD is a network of coordinated drones with communication capabilities among themselves, pedestrians, and ground infrastructure

[85][90]. The global drone market is expected to reach $43.4 billion by 2027 with a CAGR of 12.56% between 2022 and 2027

[86][91]. The IoD applications include smart city surveillance, infrastructure monitoring and maintenance, search and rescue missions at the sites of natural/man-made hazards, logistics, traffic control, weather forecasts, disaster management, and live streaming of events

[87][92]. These applications require a tactile and haptic internet with ultra-low latency, high data rates, and high reliability.

4.1.5. Industrial Internet of Things (IIoT)

The IIoT refers to a network of connected machines and devices in the industry for machine-to-machine (M2M) and machine-to-human (M2H) communications

[88][93]. The IIoT market is expected to reach $197 billion by 2023 from $115 billion in 2016 with a CAGR of 7.5% from 2017 to 2023

[89][94]. Applications involve predictive maintenance, quality control, safety management, and supply chain optimization. The IIoT sensors and devices are often placed in noisy environments to support mission-critical safety applications. These applications have stringent latency and reliability requirements for proper control decisions

[90][95]. In some cases, the IIoT may require a reliability of 99.99999%

[91][96] as information loss could be catastrophic in some scenarios such as nuclear energy plants.
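To give a sense of scale for the reliability figures quoted in this section (99.999% and 99.99999%), the sketch below converts a reliability percentage into an expected number of failed messages. It assumes that reliability denotes the probability that an individual message is delivered successfully and that failures are independent; this interpretation and the function names are assumptions of the example.

```python
def failure_probability(reliability_percent):
    """Probability that a single message is not delivered successfully."""
    return 1 - reliability_percent / 100


def expected_failures(reliability_percent, messages):
    """Expected number of failed messages out of a given number sent."""
    return failure_probability(reliability_percent) * messages


for reliability in (99.999, 99.99999):
    failures = expected_failures(reliability, 1_000_000)
    print(f"{reliability}% -> about {failures:.2f} failed messages per million")
```

Under these assumptions, moving from 99.999% to 99.99999% reduces the expected failures per million messages by a factor of one hundred.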

4.2. Holographic Communication (HC)

HC is the next evolution of 3D videos and images that will capture data from multiple sources, providing end users with an immersive 3D experience. The global holographic display market size is projected to reach $11.65 billion by 2030 from $1.13 billion in 2020, i.e., a CAGR of 29.1% from 2021 to 2030

[92][97]. It requires very high data rates and ultra-low latency. The bandwidth requirement for a human-sized hologram after data compression varies from tens of Mbps to 4.3 Tbps

[93][94][98,99]. However, a high level of compression to reduce the bandwidth requirements will lead to a high latency. To have a seamless 3D experience, holograms require a latency of sub-milliseconds

[84][95][17,100]. Consequently, there is a tradeoff between the level of compression, computation bandwidth, and latency, which needs to be optimized by the network

[96][101]. Furthermore, the network should have high resilience in the case of HC to maintain a high QoS by assuring reliability and reducing jitter, packet loss, and latency. Considering the security requirements for HC in applications such as smart healthcare (remote surgery), the network must be secured.

4.3. Extended Reality (XR)

Extended reality (XR) technologies involve AR, Mixed Reality (MR), and VR applications. The current wireless communication technologies are unable to provide an immersive XR experience for users of these applications, such as 3D medical imaging, surgical training, immersive gaming, guided remote repair and maintenance, virtual property tours, e-commerce purchase, hands-on virtual learning, and virtual field trips for students. This is due to the inability of the currently deployed 5G technology to deliver ultra-low latencies and very high data rates

[97][102]. These XR applications are highly demanding in terms of communication and computation due to the incorporation of perceptual needs (human senses, physiology, and cognition). The envisioned 6G networks should ensure the Quality-of-Physical-Experience (QoPE)

[98][103] for these XR applications by providing URLLC and eMBB services.

4.4. Blockchain

Blockchain is a decentralized peer-to-peer technology that eliminates the need for a centralized third party

[99][104]. Each event in the network is recorded in a ledger that is replicated and synchronized among all network participants. A participant in the network owns a public–private key pair

[100][105], which enables authentication

[101][102][106,107] and allows transaction validation. The consensus, cryptographic, provenance, and finality characteristics of blockchain provide security, privacy, immutability, transparency, and traceability. Blockchain has shown potential in several applications including healthcare

[103][104][105][106][107][108,109,110,111,112], transportation

[108][109][113,114], energy

[110][111][115,116], education

[112][113][117,118], and governance

[114][115][119,120].

Figure 17 shows a blockchain-based integrated IoV-edge-cloud computing system, where the ledger is replicated at the edge and cloud servers. The events for different vehicular applications such as autonomous driving, infotainment, and real-time navigation are recorded as transactions in the ledger. The consensus protocol and replication of the ledger involved in blockchain require high bandwidth, reliable connection, and low-latency communications between multiple nodes to reduce communication overhead

[116][117][118][121,122,123]. It requires a synergistic aggregation of URLLC and mMTC to provide ultra-low latency, reliability, and scalability.
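The way events are recorded as linked transactions can be illustrated with a minimal hash-chained ledger. The sketch below is a simplification under stated assumptions: it has no consensus protocol, no digital signatures, and no network replication, and the event fields and function names are hypothetical. It only shows why tampering with a recorded event becomes detectable once each block stores the hash of its predecessor.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder predecessor hash for the first block


def block_hash(index, event, prev_hash):
    """SHA-256 digest over the block contents, serialised deterministically."""
    payload = json.dumps(
        {"index": index, "event": event, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(ledger, event):
    """Append an event as a new block linked to the hash of the previous block."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS_HASH
    index = len(ledger)
    ledger.append(
        {
            "index": index,
            "event": event,
            "prev_hash": prev_hash,
            "hash": block_hash(index, event, prev_hash),
        }
    )


def is_valid(ledger):
    """Check that every block links to its predecessor and matches its own hash."""
    prev_hash = GENESIS_HASH
    for position, block in enumerate(ledger):
        if block["index"] != position or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["event"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical vehicular events recorded as transactions.
ledger = []
append_event(ledger, {"vehicle": "V1", "type": "navigation_update"})
append_event(ledger, {"vehicle": "V2", "type": "collision_warning"})

print(is_valid(ledger))
```

A replica held at an edge or cloud server could run the same validity check on its copy; any modified event would change the block hash and break the chain.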

Figure 17.

Blockchain-enabled Integrated Internet of Vehicles (IoV)-Edge-Cloud environment.

4.5. Edge-Cloud Computing

Cloud computing is a technological paradigm that enables on-demand access to a shared pool of configurable computing, storage, and network resources over the internet

[119][124]. It is based on a pay-per-use model and can be provisioned with minimal management effort. With the emergence of the IoT and big data analytics applications in various domains such as healthcare

[120][121][122][125,126,127], education

[123][124][125][128,129,130], transportation

[126][131], banking

[127][128][132,133], energy utilities

[129][130][134,135], and entertainment

[131][132][136,137], cloud computing provides a sandbox for data processing and storage, enabling the deployment of compute-intensive smart city applications

[133][138]. However, considering the distance between the IoT devices and the remote cloud servers, the latency requirements of time-critical applications may be violated. Consequently, mobile edge computing has been introduced, which provides computing and storage resources close to the IoT devices. For applications with low latency requirements, the service request can be directed to the nearest edge computing site. However, the computing capabilities of edge servers are low compared to the remote cloud servers, leading to high processing times for compute-intensive applications. Thus, an integrated edge-cloud computing system is often used to handle compute-intensive and/or time-critical applications

[134][135][139,140]. However, the underlying 6G networks should consider the energy efficiency

[136][137][138][139][140][141][142][143][141,142,143,144,145,146,147,148], optimal resource provisioning and scheduling

[144][145][146][147][149,150,151,152], and context-aware application partitioning

[148][149][150][151][152][153][154][155][156][157][158][159][153,154,155,156,157,158,159,160,161,162,163,164] requirements of this integrated system.
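The edge-versus-cloud trade-off described above can be sketched as a small placement decision. In the example below, the completion time of a request is approximated as the network round-trip time plus the processing time, and the request is sent to the fastest site that meets its deadline. The cost model, the numeric values for the edge and cloud sites, and all names are assumptions of this illustration and ignore queueing, energy, and data transfer size.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    round_trip_ms: float   # network delay between the device and the site
    cycles_per_ms: float   # processing capacity of the site


def completion_time_ms(site, task_cycles):
    """Approximate completion time: network round trip plus processing time."""
    return site.round_trip_ms + task_cycles / site.cycles_per_ms


def choose_site(sites, task_cycles, deadline_ms):
    """Pick the fastest site that meets the deadline, or None if no site does."""
    feasible = [s for s in sites if completion_time_ms(s, task_cycles) <= deadline_ms]
    if not feasible:
        return None
    return min(feasible, key=lambda s: completion_time_ms(s, task_cycles))


# Hypothetical sites: the edge is close but slow, the cloud is far but fast.
edge = Site("edge", round_trip_ms=2.0, cycles_per_ms=1e6)
cloud = Site("cloud", round_trip_ms=50.0, cycles_per_ms=1e7)

light_task = choose_site([edge, cloud], task_cycles=1e6, deadline_ms=10.0)
heavy_task = choose_site([edge, cloud], task_cycles=1e9, deadline_ms=200.0)

print(light_task.name if light_task else "no feasible site")
print(heavy_task.name if heavy_task else "no feasible site")
```

With these assumed numbers, the light, time-critical task fits the edge, whereas the compute-intensive task finishes sooner in the cloud despite the longer network delay.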

In summary, smart city applications have stringent requirements in terms of ultra-low latency, high data rate, reliability, energy efficiency, and security, which should be fulfilled by the next-generation 6G networks. These applications, underpinned by emerging technologies such as the IoT, HC, blockchain, XR, and edge-cloud computing, offer considerable potential for unprecedented services to citizens. However, smart urbanism

[160][165] is seen as critical for the success of a smart city. Governments should put in place plans to address some issues inherent to the deployment of AI-sensing and data-driven smart applications. In particular, privacy concerns should be addressed as personal data are collected continuously. In addition, people’s fear that this paradigm shift to smart cities may generate unemployment in some professions should be dealt with. Furthermore, worries about losing face-to-face social interactions with government entities, which would rely on sensing and digital devices to collect personal data for improving services, have to be taken care of. Consequently, smart urbanism advocates incremental changes to cities rather than a massive one.

5. Future Directions

While the 5G mobile communication networks are just starting to be deployed, there are already plans to design new 6G networks. This is because of the proliferation of diversified smart city applications that are extensively distributed and more intelligent than ever before, thanks to the emergence of AI, big data analytics, federated and distributed learning, the IoT, edge-cloud computing, and blockchain. The currently in-deployment 5G networks will not be capable of meeting the heterogeneous and stringent requirements of these applications, in terms of efficiency, real-time operation, and reliability, with ever-increasing traffic demands. For instance, 5G is incapable of delivering ultra-low latencies and high data rates for holographic applications that demand data rates of up to 4.3 Tbps. In contrast to the previous generation networks, 6G is expected to support numerous connected and intelligent applications with stringent requirements in terms of high data rates, high energy efficiency, ultra-low latencies, and very broad frequency bands. Considering the requirements of 6G networks and smart city applications, AI will be the dominant enabler in the network, middleware, and application layers, as shown in Figure 18. Current research focuses on self-learning 6G networks, AI-enabled middleware, or AI applications in smart cities’ digital ecosystems. AI with self-learning capabilities empowers 6G networks to be intelligent, agile, flexible, and adaptive by providing functionalities for channel estimation, modulation recognition, network traffic classification and prediction, intelligent routing, radio resource management, fault management, network energy optimization, and intrusion, botnet, and traffic anomaly detection.
Furthermore, at the middleware layer, AI can aid in the scheduling of smart city applications’ requests, computing resource management, computation and communication energy optimization, application performance optimization, context-aware data caching, and fault tolerance and data availability. In regard to smart city applications, AI can benefit the evolving applications within emerging technological paradigms such as the IoV, IoMT, IoD, IoRT, IIoT, HC, XR, cloud computing, edge-cloud computing, and blockchain.

Figure 18.

AI-enabled smart city applications in self-learning 6G networks.

Research in the following directions is required for the realization of AI-enabled smart city applications in self-learning 6G networks.

In 6G networks, a massive amount of data will be generated from the network, middleware, and application layers. The dynamic environment requires ongoing updates of the AI learning models’ parameters. In 6G networks where ultra-low latencies are a key requirement, tuning the parameters using traditional grid search or meta-heuristic approaches may introduce a computational overhead, degrading the performance of smart city applications and the underlying 6G networks. Consequently, there is a need for automated AI frameworks that select the optimal model parameters based on the application context and network dynamics.

The self-learning 6G networks in the smart city digital ecosystem will comprise numerous AI models at the network, middleware, and application layers. The output of the learning models from the application and middleware layers should be fed as the input to the learning models at the network layer in a dynamic environment. The high flexibility and scalability of the AI learning frameworks are crucial for supporting a high number of interactions between the learning models at different layers and providing dependable services in real time. Consequently, further research is required on how to integrate dependable, flexible, and scalable learning frameworks for smart city applications in 6G networks.

In 6G networks, ensuring the accuracy of the AI models that process high-dimensional dynamic data at the network, middleware, and application layers is crucial. However, these AI models, deep learning and meta-heuristics in particular, have high computational complexity and require a long time to converge. This hinders the deployment of applications with ultra-low latency requirements such as robotics and automation, collision warning in the IoV, and AR map navigation. Furthermore, computationally expensive AI models have a high energy consumption. Consequently, further research is required on how to design efficient AI approaches that improve computation efficiency and reduce energy consumption.