Ship detection and tracking have attracted considerable attention in remote sensing because of their potential in military applications and the analysis of port activity. Compared with vehicle targets, ship targets vary widely in size, and the tracking background is usually water, which may limit the performance of tracking methods. The water background looks very similar across adjacent frames, so background analysis yields little useful motion information. Tracking algorithms such as optical flow-based trackers and offline tracking methods are therefore poorly suited to ship tracking, and several novel models have been proposed to track ships in satellite videos.

- satellite video

- traffic target tracking

- ship tracking

1. Introduction

Object tracking is an active research topic in computer vision and remote sensing. It typically employs a bounding box that locks onto the region of interest (ROI) when only the initial state of the target (in a video frame) is available [1][2]. Thanks to the development of satellite imaging technology, various satellites with advanced onboard cameras have been launched to obtain very high resolution (VHR) satellite videos for military and civilian applications. Compared to traditional target tracking methods, satellite video target tracking is more efficient in motion analysis and object surveillance. It has shown great potential in applications such as defence and security surveillance [3], monitoring and protecting sea ice [4], fighting wildfires [5], and monitoring city traffic [6], which traditional target tracking cannot readily serve.

Recent research has shown increasing interest in traditional video-based target tracking, and numerous algorithms have been proposed for accurate tracking in computer vision. These methods can be divided into two categories: those based on generative models [7][8][9][10] and those based on discriminative models [11][12][13][14][15][16][17]. Generative model-based tracking can be viewed as a search problem: the object area in the current frame is modeled, and the most similar region in the next frame is chosen as the predicted location. In contrast, discriminative models treat object tracking as a binary classification problem that separates the target from the background, and they have attracted much attention due to their efficiency and robustness [18].
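The search-based view of generative tracking can be illustrated with a minimal sketch. It is not taken from any of the cited methods: it assumes grayscale frames stored as NumPy arrays, uses the sum of squared differences (SSD) as the similarity measure, and restricts the search to a small window around the previous location. The function name `track_by_template_search` and the `radius` parameter are hypothetical and chosen only for this example.

```python
import numpy as np


def track_by_template_search(frame, template, prev_xy, radius=8):
    """Return the (x, y) top-left corner of the patch in `frame` most similar to `template`.

    Only candidate positions within `radius` pixels of `prev_xy` are examined.
    Similarity is measured by the sum of squared differences (lower is better).
    """
    template = template.astype(np.float64)
    th, tw = template.shape
    fh, fw = frame.shape
    px, py = prev_xy
    best_xy, best_ssd = prev_xy, np.inf
    # Exhaustively scan the search window, clipped to the frame boundaries.
    for y in range(max(0, py - radius), min(fh - th, py + radius) + 1):
        for x in range(max(0, px - radius), min(fw - tw, px + radius) + 1):
            patch = frame[y:y + th, x:x + tw].astype(np.float64)
            ssd = np.sum((patch - template) ** 2)
            if ssd < best_ssd:
                best_xy, best_ssd = (x, y), ssd
    return best_xy


# Synthetic example: a textured 3x5 "ship" on a uniform (water-like) background.
ship = np.arange(1, 16, dtype=np.float64).reshape(3, 5)
frame0 = np.zeros((32, 32))
frame0[12:15, 10:15] = ship          # ship at x=10, y=12 in the first frame
frame1 = np.zeros((32, 32))
frame1[14:17, 13:18] = ship          # ship moved to x=13, y=14 in the next frame

template = frame0[12:15, 10:15]      # appearance model taken from the first frame
new_xy = track_by_template_search(frame1, template, prev_xy=(10, 12))
print(new_xy)
```

A discriminative tracker would instead train a classifier to separate target patches from background patches and pick the candidate with the highest target score; the exhaustive loop above is kept only for clarity and would normally be replaced by a faster matching routine.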

| Target | Method | Ref. | Year | Description |

|---|---|---|---|---|

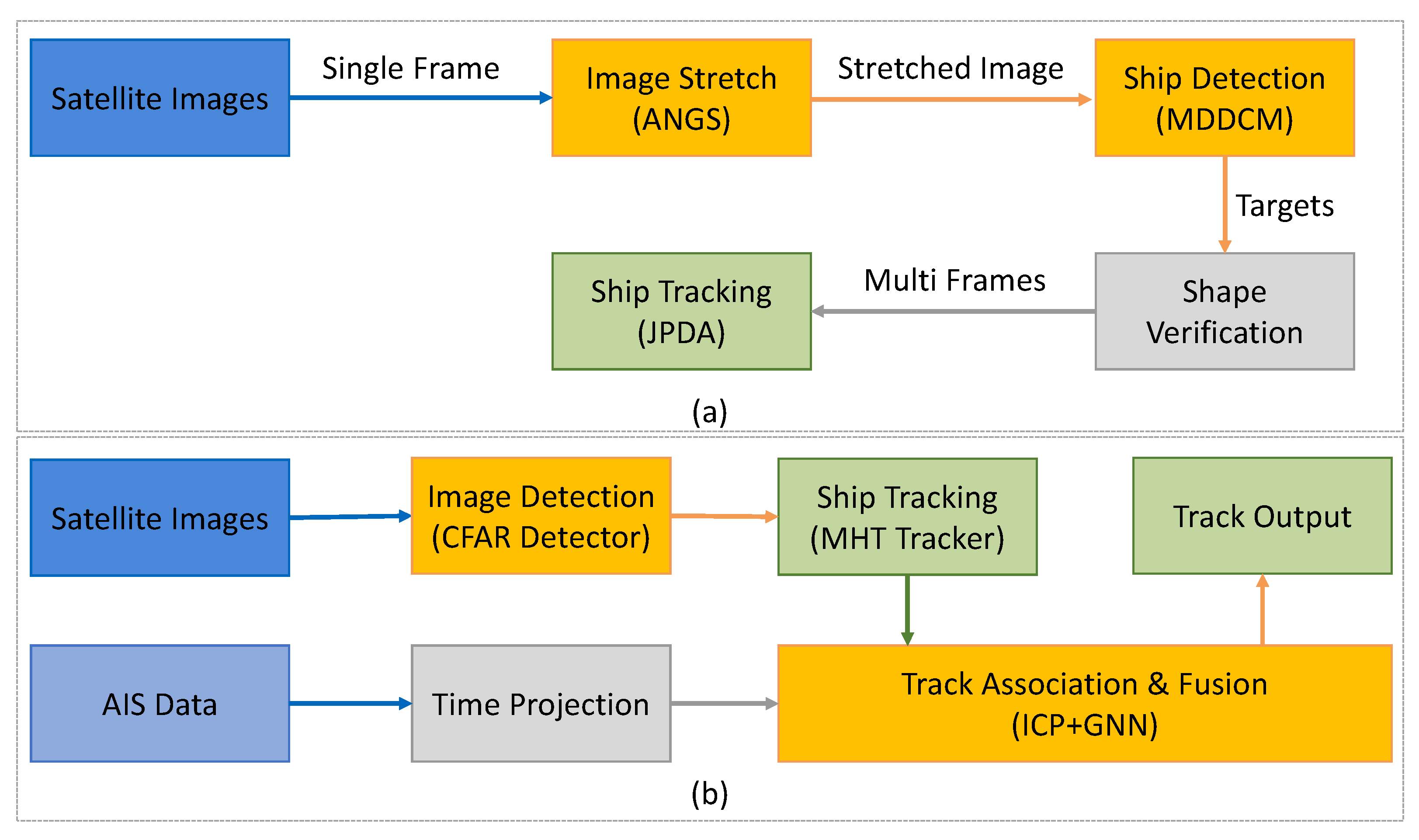

| Ship | Image-based | [19] | 2019 | Automatic detection and tracking for moving ships |

| [21] | 2021 | Framework consists of ANGS, MDDCM, JPDA | ||

| [22] | 2022 | Mutual convolution SN with hierarchical double regression | ||

| Multi-modality | [23] | 2010 | Ship detection and tracking using AIS and SAR data | |

| [20] | 2018 | Track-level fusion for noncooperative ship tracking | ||

| [24] | 2018 | Integrate sequential imagery with AIS data | ||

| [25] | 2021 | Integrate satellite sequential imagery with ship location information |

2. Image-Based Tracking Methods

3. Multi-Modality Based Tracking Methods

References

- Yilmaz, A.; Javed, O.; Shah, M. Object tracking: A survey. ACM Comput. Surv. (CSUR) 2006, 38, 13.

- Jiao, L.; Zhang, R.; Liu, F.; Yang, S.; Hou, B.; Li, L.; Tang, X. New Generation Deep Learning for Video Object Detection: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–21.

- Melillos, G.; Themistocleous, K.; Papadavid, G.; Agapiou, A.; Prodromou, M.; Michaelides, S.; Hadjimitsis, D.G. Integrated use of field spectroscopy and satellite remote sensing for defence and security applications in Cyprus. In Proceedings of the Fourth International Conference on Remote Sensing and Geoinformation of the Environment (RSCy2016), Paphos, Cyprus, 4–8 April 2016; Volume 9688, pp. 127–135.

- Xian, Y.; Petrou, Z.I.; Tian, Y.; Meier, W.N. Super-resolved fine-scale sea ice motion tracking. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5427–5439.

- Bailon-Ruiz, R.; Lacroix, S. Wildfire remote sensing with UAVs: A review from the autonomy point of view. In Proceedings of the 2020 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020; pp. 412–420.

- Du, B.; Sun, Y.; Cai, S.; Wu, C.; Du, Q. Object tracking in satellite videos by fusing the kernel correlation filter and the three-frame-difference algorithm. IEEE Geosci. Remote Sens. Lett. 2017, 15, 168–172.

- Xing, X.; Yongjie, Y.; Huang, X. Real-time object tracking based on optical flow. In Proceedings of the 2021 International Conference on Computer, Control and Robotics (ICCCR), Shanghai, China, 8–10 January 2021; pp. 315–318.

- Panetta, K.; Kezebou, L.; Oludare, V.; Agaian, S. Comprehensive underwater object tracking benchmark dataset and underwater image enhancement with GAN. IEEE J. Ocean. Eng. 2021, 47, 59–75.

- Yu, H.; Li, G.; Su, L.; Zhong, B.; Yao, H.; Huang, Q. Conditional GAN based individual and global motion fusion for multiple object tracking in UAV videos. Pattern Recognit. Lett. 2020, 131, 219–226.

- Acharya, D.; Ramezani, M.; Khoshelham, K.; Winter, S. BIM-Tracker: A model-based visual tracking approach for indoor localisation using a 3D building model. ISPRS J. Photogramm. Remote Sens. 2019, 150, 157–171.

- Zhao, C.; Liu, H.; Su, N.; Wang, L.; Yan, Y. RANet: A Reliability-Guided Aggregation Network for Hyperspectral and RGB Fusion Tracking. Remote Sens. 2022, 14, 2765.

- Wilson, D.; Alshaabi, T.; Van Oort, C.; Zhang, X.; Nelson, J.; Wshah, S. Object Tracking and Geo-Localization from Street Images. Remote Sens. 2022, 14, 2575.

- Klinger, T.; Rottensteiner, F.; Heipke, C. Probabilistic multi-person localisation and tracking in image sequences. ISPRS J. Photogramm. Remote Sens. 2017, 127, 73–88.

- Zhang, X.; Xia, G.S.; Lu, Q.; Shen, W.; Zhang, L. Visual object tracking by correlation filters and online learning. ISPRS J. Photogramm. Remote Sens. 2018, 140, 77–89.

- Liu, S.; Liu, D.; Srivastava, G.; Połap, D.; Woźniak, M. Overview and methods of correlation filter algorithms in object tracking. Complex Intell. Syst. 2021, 7, 1895–1917.

- Du, S.; Wang, S. An overview of correlation-filter-based object tracking. IEEE Trans. Comput. Soc. Syst. 2021, 9, 18–31.

- Xu, T.; Feng, Z.; Wu, X.J.; Kittler, J. Adaptive channel selection for robust visual object tracking with discriminative correlation filters. Int. J. Comput. Vis. 2021, 129, 1359–1375.

- Lyu, Y.; Yang, M.Y.; Vosselman, G.; Xia, G.S. Video object detection with a convolutional regression tracker. ISPRS J. Photogramm. Remote Sens. 2021, 176, 139–150.

- Li, H.; Chen, L.; Li, F.; Huang, M. Ship detection and tracking method for satellite video based on multiscale saliency and surrounding contrast analysis. J. Appl. Remote Sens. 2019, 13, 026511.

- Liu, Y.; Yao, L.; Xiong, W.; Zhou, Z. GF-4 Satellite and automatic identification system data fusion for ship tracking. IEEE Geosci. Remote Sens. Lett. 2018, 16, 281–285.

- Yu, W.; You, H.; Lv, P.; Hu, Y.; Han, B. A Moving Ship Detection and Tracking Method Based on Optical Remote Sensing Images from the Geostationary Satellite. Sensors 2021, 21, 7547.

- Bai, Y.; Lv, J.; Wang, C.; Geng, Y. Ship tracking method for resisting similar shape information under satellite videos. J. Appl. Remote Sens. 2022, 16, 026517.

- Gurgel, K.W.; Schlick, T.; Horstmann, J.; Maresca, S. Evaluation of an HF-radar ship detection and tracking algorithm by comparison to AIS and SAR data. In Proceedings of the 2010 International WaterSide Security Conference, Carrara, Italy, 3–5 November 2010; pp. 1–6.

- Yao, L.; Liu, Y.; He, Y. A Novel ship-tracking method for GF-4 satellite sequential images. Sensors 2018, 18, 2007.

- Shand, L.; Larson, K.M.; Staid, A.; Gray, S.; Roesler, E.L.; Lyons, D. An efficient approach for tracking the aerosol-cloud interactions formed by ship emissions using GOES-R satellite imagery and AIS ship tracking information. arXiv 2021, arXiv:2108.05882.

- Wang, D.; He, H. Observation capability and application prospect of GF-4 satellite. In Proceedings of the 3rd International Symposium of Space Optical Instruments and Applications, Beijing, China, 26–29 June 2016; pp. 393–401.