Automated emotion recognition (AEE) is an important issue in various fields of activity that use human emotional reactions as a signal for marketing, technical equipment, or human-robot interaction. This paper analyzes a broad body of scientific research and technical papers on sensor use, covering methods that have been implemented or researched. It covers several classes of sensors for detecting human emotions and measuring their intensity: contactless methods, and contact and skin-penetrating electrodes. The analysis identifies applicable methods for each type of emotion and its intensity, and proposes a classification of them. The proposed classification of emotion sensors shows the area of application and the expected outcome of each method, as well as its limitations. This paper should be of interest to researchers who need to evaluate and analyze human emotions and must choose a suitable method for their purposes or find an alternative solution.

- emotion sensing

- mechatronics

- machine learning

With the rapid increase in the use of smart technologies in society and the development of industry, the need for technologies capable of assessing the needs of a potential customer and choosing the most appropriate solution for them is increasing dramatically. Automated emotion evaluation (AEE) is particularly important in areas such as robotics [1], marketing [2], education [3], and the entertainment industry [4]. AEE is applied to achieve various goals:

(i) in robotics: to design smart collaborative or service robots which can interact with humans [5–7];

(ii) in marketing: to create specialized adverts based on the emotional state of the potential customer [8–10];

(iii) in education: to improve learning processes, knowledge transfer, and perception methodologies [11–13];

(iv) in the entertainment industry: to propose the most appropriate entertainment for the target audience [14–17].

Numerous attempts to classify emotions and to set boundaries between emotions, affect, and mood are presented in the scientific literature [18–21]. From the perspective of automated emotion recognition and evaluation, the most convenient classification is presented in [3,22]. According to the latter classification, the main terms are defined as follows:

(i) “emotion” is a response of the organism to a particular stimulus (person, situation or event). It is usually an intense, short-duration experience, and the person is typically well aware of it;

(ii) “affect” is a result of the effect caused by emotion and includes their dynamic interaction;

(iii) “feeling” is always experienced in relation to a particular object of which the person is aware; its duration depends on the length of time that the representation of the object remains active in the person’s mind;

(iv) “mood” tends to be subtler, longer lasting, less intense, and more in the background, but it can shift a person’s affective state in a positive or negative direction.

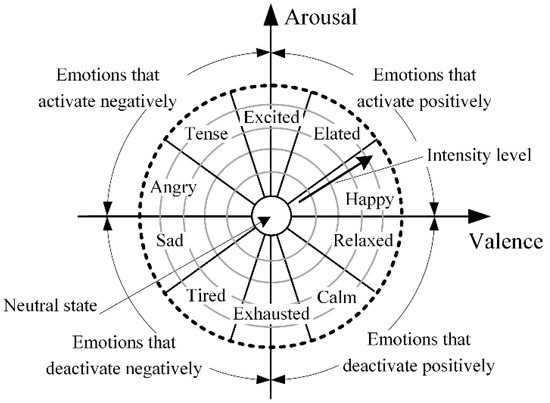

According to the research by Feidakis, Daradoumis and Caballé [21], which presents a classification of emotions based on fundamental models, there are 66 emotions, which can be divided into two groups: ten basic emotions (anger, anticipation, distrust, fear, happiness, joy, love, sadness, surprise, trust) and 56 secondary emotions. Evaluating such a large number of emotions is extremely difficult, especially if automated recognition and evaluation is required. Moreover, similar emotions can have overlapping measured parameters. To handle this issue, the majority of emotion evaluation studies focus on other classifications [3,21], which include dimensions of emotions, in most cases valence (negative/positive) and arousal (high/low activation) [23–25], and analyze only basic emotions, which can be defined more easily. Most studies use variations of Russell’s circumplex model of emotions (Figure 1), which distributes basic emotions in a two-dimensional space with respect to valence and arousal. Such an approach allows a desired emotion to be defined and its intensity evaluated by analyzing just two dimensions.

Figure 1. Russell’s circumplex model of emotions.
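As an illustration of how two dimensions can be enough to name a coarse emotional region and estimate its intensity, the following minimal sketch places a valence-arousal pair on the plane. It is not taken from the reviewed studies: the function name `locate`, the scaling of both inputs to [-1, 1], the use of Euclidean distance from the neutral origin as intensity, and the example emotions attached to each quadrant are all assumptions made for this example.

```python
import math
from typing import NamedTuple


class AffectPoint(NamedTuple):
    quadrant: str      # coarse region of the valence-arousal plane
    intensity: float   # distance from the neutral origin, clipped to [0, 1]
    angle_deg: float   # direction in the plane; 0 deg = pure positive valence


# Illustrative labels only (assumption): the exact placement of named
# emotions on the circumplex differs between studies.
QUADRANTS = {
    (True, True): "positive valence / high arousal (e.g. happy, excited)",
    (False, True): "negative valence / high arousal (e.g. angry, afraid)",
    (False, False): "negative valence / low arousal (e.g. sad, bored)",
    (True, False): "positive valence / low arousal (e.g. calm, relaxed)",
}


def locate(valence: float, arousal: float) -> AffectPoint:
    """Place a (valence, arousal) pair on the two-dimensional plane.

    Both inputs are assumed to be pre-scaled to the range [-1, 1].
    """
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal are assumed to lie in [-1, 1]")
    # Intensity as Euclidean distance from the origin (an assumption).
    intensity = min(1.0, math.hypot(valence, arousal))
    # Angle measured counter-clockwise from the positive valence axis.
    angle_deg = math.degrees(math.atan2(arousal, valence)) % 360.0
    quadrant = QUADRANTS[(valence >= 0.0, arousal >= 0.0)]
    return AffectPoint(quadrant, intensity, angle_deg)


if __name__ == "__main__":
    print(locate(0.6, 0.8))
    print(locate(-0.5, -0.5))
```

In practice the valence and arousal values would come from a model trained on sensor data; the sketch only shows the final geometric step, in which the angle selects the emotional region and the radius serves as a rough intensity estimate.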

Using the model described above, the classification and evaluation of emotions becomes clearer, but many issues related to the assessment of emotions remain, especially the selection of measurement and result evaluation methods and of measurement hardware and software. Moreover, emotion recognition and evaluation is complicated by its interdisciplinary nature: emotion recognition and strength evaluation are the subject of psychology, the measurement and evaluation of human body parameters relate to medical sciences and measurement engineering, and sensor data analysis and the resulting solutions are the subject of mechatronics.

This review focuses on the hardware and methods used for automated emotion recognition that are applicable to machine learning procedures based on the analysis of experimental data, and to automated solutions based on the results of these analyses. This study also analyzes the idea of humanizing the Internet of Things and affective computing systems, which has been validated by systems developed by the authors of this research [26–29].

Intelligent machines with empathy for humans have the potential to make the world a better place. The IoT field is progressing in its understanding of human emotions thanks to achievements in human emotion recognition (sensors and methods), computer vision, speech recognition, deep learning, and related technologies [30].

References

- Rattanyu, K.; Ohkura, M.; Mizukawa, M. Emotion Monitoring from Physiological Signals for Service Robots in the Living Space. In Proceedings of the ICCAS 2010, Gyeonggi-do, Korea, 27–30 October 2010; pp. 580–583.

- Kris Byron; Sophia Terranova; Stephen Nowicki; Nonverbal Emotion Recognition and Salespersons: Linking Ability to Perceived and Actual Success. Journal of Applied Social Psychology 2007, 37, 2600-2619, 10.1111/j.1559-1816.2007.00272.x.

- Feidakis, M.; Daradoumis, T.; Caballe, S. Emotion Measurement in Intelligent Tutoring Systems: What, When and How to Measure. In Proceedings of the 2011 Third International Conference on Intelligent Networking and Collaborative Systems, IEEE, Fukuoka, Japan, 30 November–2 December 2011; pp. 807–812.

- Mandryk, R.L.; Atkins, M.S.; Inkpen, K.M. A continuous and objective evaluation of emotional experience with interactive play environments. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’06), Montréal, QC, Canada, 22–27 April 2006; ACM Press: New York, NY, USA, 2006; p. 1027.

- Stefan Sosnowski; Ansgar Bittermann; Kolja Kühnlenz; Martin Buss; Design and Evaluation of Emotion-Display EDDIE. 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems 2006, 3113-3118, 10.1109/iros.2006.282330.

- Tetsuya Ogata; Shigeki Sugano; Emotional Communication between Humans and the Autonomous Robot WAMOEBA-2 (Waseda Amoeba) which has the Emotion Model. Transactions of the Japan Society of Mechanical Engineers Series C 1999, 65, 1900-1906, 10.1299/kikaic.65.1900.

- María Malfaz; Miguel A. Salichs; A new architecture for autonomous robots based on emotions. IFAC Proceedings Volumes 2004, 37, 805-809, 10.1016/s1474-6670(17)32079-7.

- Delkhoon, M.A.; Lotfizadeh, F. An Investigation on the Effect of Gender on Emotional Responses and Purchasing Intention Due to Advertisements. UCT J. Soc. Sci. Humanit. Res. 2014, 2, 6–11.

- Jaiteg Singh; Gaurav Goyal; Rupali Gill; Use of neurometrics to choose optimal advertisement method for omnichannel business. Enterprise Information Systems 2019, 14, 243-265, 10.1080/17517575.2019.1640392.

- Susan A. Andrzejewski; Jeffrey S. Podoshen; The Influence of Cognitive Load on Nonverbal Accuracy of Caucasian and African-American Targets: Implications for Ad Processing. Journal of International Consumer Marketing 2016, 29, 1-8, 10.1080/08961530.2016.1245120.

- D’Mello, S.K.; Craig, S.D.; Gholson, B.; Franklin, S.; Picard, R.W.; Graesser, A.C. Integrating Affect Sensors in an Intelligent Tutoring System. In Proceedings of the 2005 International Conference on Intelligent User Interfaces, San Diego, CA, USA, 10–13 January 2005.

- Woolf, B.P.; Arroyo, I.; Cooper, D.; Burleson, W.; Muldner, K. Affective Tutors: Automatic Detection of and Response to Student Emotion; Springer: Berlin/Heidelberg, Germany, 2010; pp. 207–227.

- Stefano Scotti; Maurizio Mauri; Riccardo Barbieri; Bassam Jawad; Sergio Cerutti; Luca Mainardi; Emery N. Brown; Marco A. Villamira; Automatic Quantitative Evaluation of Emotions in E-learning Applications. 2006 International Conference of the IEEE Engineering in Medicine and Biology Society 2006, 1359-1362, 10.1109/iembs.2006.4397663.

- Agata Kolakowska; Agnieszka Landowska; Mariusz Szwoch; Wioleta Szwoch; Michał Wróbel; Emotion recognition and its application in software engineering. 2013 6th International Conference on Human System Interactions (HSI) 2013, 532-539, 10.1109/hsi.2013.6577877.

- Fu Guo; Wei Lin Liu; Yaqin Cao; Fan Tao Liu; Mei Lin Li; Optimization Design of a Webpage Based on Kansei Engineering. Human Factors and Ergonomics in Manufacturing & Service Industries 2015, 26, 110-126, 10.1002/hfm.20617.

- Georgios N. Yannakakis; J. Hallam; Real-Time Game Adaptation for Optimizing Player Satisfaction. IEEE Transactions on Computational Intelligence and AI in Games 2009, 1, 121-133, 10.1109/TCIAIG.2009.2024533.

- Julien Fleureau; Philippe Guillotel; Quan Huynh-Thu; Physiological-Based Affect Event Detector for Entertainment Video Applications. IEEE Transactions on Affective Computing 2012, 3, 379-385, 10.1109/T-AFFC.2012.2.

- Keith Oatley; P. N. Johnson-Laird; Towards a Cognitive Theory of Emotions. Cognition and Emotion 1987, 1, 29-50, 10.1080/02699938708408362.

- James M. Jasper; Social Movement Theory Today: Toward a Theory of Action? Sociology Compass 2010, 4, 965-976, 10.1111/j.1751-9020.2010.00329.x.

- Jeffrey A. Gray; On the classification of the emotions. Behavioral and Brain Sciences 1982, 5, 431-432, 10.1017/s0140525x00012851.

- Michalis Feidakis; Thanasis Daradoumis; Santi Caballé; Endowing e-Learning Systems with Emotion Awareness. 2011 Third International Conference on Intelligent Networking and Collaborative Systems 2011, 68-75, 10.1109/incos.2011.83.

- Université de Montréal; Presses de l’Université de Montréal. Interaction of Emotion and Cognition in the Processing of Textual Material; Presses de l’Université de Montréal: Québec, QC, Canada, 1966; Volume 52.

- James A. Russell; A circumplex model of affect. Journal of Personality and Social Psychology 1980, 39, 1161-1178, 10.1037/h0077714.

- Csikszentmihalyi, M. Flow and the Foundations of Positive Psychology: The collected works of Mihaly Csikszentmihalyi; Springer: Dordrecht, The Netherlands, 2014; ISBN 9401790884.

- Kaklauskas, A. Biometric and Intelligent Decision Making Support; Springer: Cham, Switzerland, 2015; Volume 81, ISBN 978-3-319-13658-5.

- A. Kaklauskas; A. Kuzminske; Edmundas K. Zavadskas; A. Daniunas; G. Kaklauskas; M. Seniut; J. Raistenskis; A. Safonov; R. Kliukas; Algirdas Juozapaitis; A. Radzeviciene; R. Cerkauskiene; Affective Tutoring System for Built Environment Management. Computers & Education 2015, 82, 202-216, 10.1016/j.compedu.2014.11.016.

- Artūras Kaklauskas; D. Jokubauskas; J. Cerkauskas; G. Dzemyda; Ieva Ubarte; D. Skirmantas; A. Podviezko; I. Simkute; Affective analytics of demonstration sites. Engineering Applications of Artificial Intelligence 2019, 81, 346-372, 10.1016/j.engappai.2019.03.001.

- Artūras Kaklauskas; E.K. Zavadskas; D. Bardauskiene; J. Cerkauskas; I. Ubarte; M. Seniut; G. Dzemyda; M. Kaklauskaite; I. Vinogradova; A. Velykorusova; An Affect-Based Built Environment Video Analytics. Automation in Construction 2019, 106, 102888, 10.1016/j.autcon.2019.102888.

- Emotion-Sensing Technology in the Internet of Things. Available online: https://onix-systems.com/blog/emotion-sensing-technology-in-the-internet-of-things (accessed on 30 December 2019).

- Wallbott, H.G.; Scherer, K.R. Assessing emotion by questionnaire. In The Measurement of Emotions; Academic Press: Cambridge, MA, USA, 1989; pp. 55–82. ISBN 9780125587044.