
Please note this is a comparison between Version 1 by Andreas Holzinger and Version 2 by Catherine Yang.

The main impetus for the global efforts toward digital transformation in almost all areas of our daily lives is the great success of artificial intelligence (AI), and in particular of its workhorse, statistical machine learning (ML). The intelligent analysis, modeling, and management of agricultural and forest ecosystems, and of the use and protection of soils, already play important roles in securing our planet for future generations and will become indispensable in the future. The use of AI in areas that matter to human life (agriculture, forestry, climate, health, etc.) has increased the need for trustworthy AI, which has two main components: explainability and robustness.

- machine learning

- artificial intelligence

- human-centered AI

1. Artificial Intelligence

Artificial intelligence is one of the oldest fields of computer science and was extremely popular in its early days in the 1950s. However, its requirements quickly reached the limits of the computing power of the digital computers of the time. This made AI interesting in theory but a failure in practice, and especially economically, which inevitably led to a decline in interest in AI in the 1980s. AI became very popular again only about a decade ago, driven by the tremendous successes of data-driven statistical machine learning.

Artificial neural networks have their origins in the artificial neurons [1][7] developed by McCulloch and Pitts (1943). Today’s neural networks consist of many layers with an enormous number of connections. They use a special form of compositionality in which features in one layer are combined in many different ways to produce more abstract features in the next layer [2][8]. The success of such AI, referred to as “deep learning”, has only been made possible by the computing power available today. However, the increasing complexity of deep learning models has made their results harder to comprehend. This lack of comprehensibility matters most when such AI systems are used in areas that affect human life [3][9].
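The following minimal sketch illustrates both the McCulloch–Pitts unit and layer-wise compositionality. It assumes binary inputs, hand-set weights, and a hard threshold, so nothing is learned. Single units compute OR, AND, and NAND. Composing them in two layers yields XOR, which no single threshold unit can compute. Negative weights stand in for the original model's inhibitory inputs, which is a simplification.

```python
from itertools import product


def mp_neuron(inputs, weights, threshold):
    """Threshold unit in the spirit of McCulloch and Pitts (1943): output 1 if the
    weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# First-layer units: each computes one simple logical feature.
def or_unit(a, b):
    return mp_neuron((a, b), (1, 1), threshold=1)


def and_unit(a, b):
    return mp_neuron((a, b), (1, 1), threshold=2)


def nand_unit(a, b):
    # Negative weights model inhibition (a simplification of the original model).
    return mp_neuron((a, b), (-1, -1), threshold=-1)


def xor_net(a, b):
    """Second layer combines the OR and NAND features into a more abstract one."""
    return and_unit(or_unit(a, b), nand_unit(a, b))


for a, b in product((0, 1), repeat=2):
    print(a, b, xor_net(a, b))
```

The hidden features (OR, NAND) are simple. Only their combination in the next layer expresses the more abstract relation "exactly one input is active".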

Many fundamental AI concepts date back to the middle of the last century. Their current success is actually based on a combination of three factors: (1) powerful, low-cost, and widely available digital computing technology, (2) scalable statistical machine learning methods (e.g., deep learning), and (3) the explosive growth of available datasets.

To date, AI has reached a level of popularity and maturity that has let it permeate nearly all industries and application areas. Owing to its undeniable potential to benefit humanity and the environment, it is the main driver of the current digital transformation. AI can help find new solutions to our society’s most pressing challenges in virtually all areas of life: from agriculture and forest ecosystems, which affect our entire planet, to the health of every individual.

For all its benefits, the large-scale adoption of AI technologies also holds enormous potential for new, unforeseen threats. Therefore, all stakeholders (governments, policymakers, and industry, along with academia) must ensure that AI is developed with knowledge and consideration of these potential threats. The security, traceability, transparency, explainability, validity, and verifiability of AI applications must be ensured at all times [3][9]. But how is AI actually defined today, what is trustworthy AI, and what is human-centered AI?

For trustworthy AI, it is imperative to include ethical and legal aspects. This is a cross-disciplinary goal, because all trustworthy AI solutions must be not only ethically responsible but also legally compliant [4][12]. Dimensions of trustworthiness for AI include security, safety, fairness, accountability (traceability, replicability), auditability (verifiability, checkability), and, most importantly, robustness and explainability; see [5][13].

We define human-centered AI (HCAI) as a synergistic approach that aligns AI solutions with human values, ethical principles, and legal requirements to ensure safety and security, enabling trustworthy AI. This HCAI concept is now widely supported by renowned institutions (Stanford Human-Centered AI Institute, Berkeley Center for Human-Compatible AI, Cambridge Leverhulme Center for the Future of Intelligence, Chicago Human AI Lab, Utrecht Human-centered AI, Sydney Human-Centered AI Lab) and by world-leading experts such as Ben Shneiderman, Fei-Fei Li, Joseph A. Konstan, Stephen Yang, and Christopher Manning, to name a few [6][14].

Including a human-in-the-loop in interactive machine learning [7][15] is not only helpful for improving the performance of AI algorithms. It is also highly desirable for countering earlier fears and anxieties that artificial intelligence will automate everything, replace and displace humans, and push them into passive roles [8][16].

In addition, integrating a human-in-the-loop (expert-in-the-loop) has many other advantages: Human experts excel at certain tasks by thinking multimodally and embedding new information in a conceptual knowledge space shaped by individual experience and prior knowledge. Farmers and foresters can build on an enormous amount of prior knowledge.

2. State-of-the-Art AI Technologies

2.1. Classification of AI Technologies

Today, AI can be successfully applied in virtually all application areas [9][55]. Driven by the need to conserve resources and the demand for sustainability, precision concepts similar to precision medicine are gaining attention. These include a wide range of information technologies that are already used in many agricultural and forestry operations worldwide [10][11][12][13][56,57,58,59]. In this context, satellite technology, geographic information systems (GIS), and remote sensing are also very important for improving all functions and services of the agricultural and forestry sectors [14][60]. Available tools include mobile applications [15][61], a variety of smart sensors [16][62], drones (unmanned aerial vehicles, UAVs) [17][63], cloud computing [18][64], the Internet of Things (IoT) [19][65], and blockchain technologies [20][66]. An increasingly important and often underappreciated issue is the supply of energy, which makes alternative low-energy approaches imperative [21][67]. Together, these technologies make it possible to process information about the state of the soil, plants, weather, or animals in a shared network in quasi-real time and to make it available for further processes regardless of location. As a result, today’s agricultural and forestry systems are being extended to include other relevant processes and services. Additional datasets are also being created that provide quantitative and qualitative information along the entire value chain for plant production, animal husbandry products, and food safety (“from farm to fork”). In the following, we present a concrete application example for each of our four AI classes: autonomous, automated, assisted, and augmenting AI systems.

2.2. Autonomous AI Systems

“Full automation is one of the hottest topics in AI and could lead to fully driverless cars in less than a decade”, stated a 2015 Nature article [22][68]. Fully autonomous vehicles are indeed the most popular example of AI. They are also easy to describe, because the Society of Automotive Engineers (SAE) has provided very descriptive definitions of levels of automation in its standards. Levels of automation emerged as a way to represent gradations or categories of autonomy and to distinguish between tasks for machines and tasks for humans. In a recent paper, however, Hopkins and Schwanen (2021) [23][69] argued that the current discourse on automated vehicles is underpinned by a technology-centered logic dominated by AI proponents, and they point to the benefits of a stronger human-centered perspective. Compared to car driving, however, the processes in agriculture and forestry are disproportionately more complex. Agricultural and forestry systems include virtually all processes for the production of the f5 (food, feed, fiber, fire, fuel). These production processes take place both indoors (buildings and facilities for people, livestock, post-harvest, and machinery) and outdoors, and they face great diversity in terms of soil, plants, animals, and people. The time scales of the process phenomena vary over an extremely wide range, from milliseconds (e.g., moving machinery) to many years (e.g., the growth of trees and changes in soil).

2.2.1. In Agriculture

A major problem in agriculture has always been weed control. Throughout their life cycle, crops and weeds compete with each other for soil nutrients, soil water, and sunlight. If weeds are left uncontrolled, their growth can reduce both crop yield and crop quality. Several studies have shown that these effects can be significant, ranging from 48 to 71% depending on the crop [24][70]. In certain cases, crop damage can be so severe that the entire yield is unmarketable [25][71]. Weed control is therefore a necessity. Furthermore, with ever-increasing crop production, the demand for process optimization (reducing energy losses, herbicide use, and manual labor) is becoming more and more urgent. To meet these requirements, traditional methods of weed control need to change. One possible way is to introduce systems that significantly reduce human labor, herbicide use, and mechanical soil treatment by intervening only in specific areas where and when intervention is needed. This approach is known as smart farming or Agriculture 4.0. Such systems should perform agricultural tasks autonomously, i.e., without human intervention. They rely entirely on their own subsystems to collect data, navigate through the field, detect plants and weeds, and perform the required operation based on the collected data [26][72]. These systems are known as autonomous agricultural robot systems. Each autonomous agricultural robotic system, e.g., an autonomous robot for weed control, consists of four main subsystems: steering/machine vision, weed detection, mapping, and precision weed control [26][72]. Most agricultural robots are developed for outdoor use, though some of them can operate indoors [27][73].
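To make the weed detection subsystem more concrete, here is a minimal, hypothetical sketch of two building blocks often used in camera-based plant detection:

- separating vegetation from soil with the excess green index (ExG = 2g − r − b, computed on chromaticity-normalized RGB values);
- measuring a simple shape feature of the resulting plant mask, namely the diameter of the largest inscribed circle, which is small for narrow, grass-like leaves.

The pixel colors, the ExG threshold, the diameter cut-off, and the narrow-leaf rule are illustrative assumptions, not parameters of any cited system. The sketch also treats the whole mask as one plant instead of separating individual plants.

```python
import math

SOIL = (120, 90, 60)   # hypothetical soil pixel (R, G, B)
LEAF = (60, 160, 50)   # hypothetical leaf pixel (R, G, B)


def excess_green(pixel):
    """ExG = 2g - r - b on chromaticity-normalized values (r + g + b = 1)."""
    R, G, B = pixel
    total = R + G + B
    if total == 0:
        return 0.0
    r, g, b = R / total, G / total, B / total
    return 2 * g - r - b


def vegetation_mask(image, threshold=0.1):
    """True where ExG exceeds a (hypothetical) threshold, i.e. likely plant pixels."""
    return [[excess_green(px) > threshold for px in row] for row in image]


def inscribed_circle_diameter(mask):
    """Approximate diameter (pixels) of the largest circle inside the plant mask.

    For every plant pixel, take the distance to the nearest non-plant pixel
    (pixels beyond the image border count as non-plant); twice the largest
    such distance approximates the diameter. Brute force, toy images only.
    """
    h, w = len(mask), len(mask[0])
    background = [(y, x) for y in range(h) for x in range(w) if not mask[y][x]]
    best = 0.0
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            d = min(y + 1, x + 1, h - y, w - x)  # distance to just outside the image
            for by, bx in background:
                d = min(d, math.hypot(y - by, x - bx))
            best = max(best, d)
    return 2 * best


def looks_narrow_leaved(mask, max_diameter=3.0):
    """Hypothetical rule: a small inscribed circle suggests a narrow, grass-like leaf."""
    return inscribed_circle_diameter(mask) <= max_diameter


def toy_image(h, w, leaf_rows, leaf_cols):
    """Synthetic image: a rectangular 'leaf' on soil."""
    return [[LEAF if (y in leaf_rows and x in leaf_cols) else SOIL for x in range(w)]
            for y in range(h)]


narrow = vegetation_mask(toy_image(10, 10, range(1, 9), range(4, 6)))  # 8 x 2 strip
broad = vegetation_mask(toy_image(10, 10, range(2, 8), range(2, 8)))   # 6 x 6 blob
print(inscribed_circle_diameter(narrow), looks_narrow_leaved(narrow))  # 2.0 True
print(inscribed_circle_diameter(broad), looks_narrow_leaved(broad))    # 6.0 False
```

A practical system would use an efficient distance transform, segment individual plants, and combine several color, shape, and texture features rather than a single threshold.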
Precise navigation of these devices is provided by global navigation satellite systems (GNSS) combined with real-time kinematics (RTK) [28][29][74,75]. Under certain conditions, however, localization accuracy may fall below the required thresholds. Autonomous robotic systems must then rely on machine vision and indoor positioning systems, such as adaptive Monte Carlo localization and laser scanners [30][76]. Both technologies are widely used and commercially available. Within the row, weeds are mainly controlled by four conventional methods: electric, chemical, thermal, and mechanical weed control. Currently, weed detection and identification is the most challenging issue. Several studies have addressed it, reporting detection accuracies from 60 to 90% under ideal test conditions [26][72]. Thanks to extensive remote sensing technologies and data processing software, weed maps have become a reality and, together with machine vision, a powerful tool for weed detection.

Some of the most representative autonomous agricultural robotic systems are the following. Astrand et al. (2002) [31][77] developed a robotic system for weed control in sugar beets. The robot used two vision systems, one for crop guidance and one for detection, and a hoe for weed removal. A front camera with a near-infrared filter, which detected two rows at a range of 5 m, was used for row detection and navigation. A second, color camera mounted inside the robot was used for weed detection. Initial trials showed that color-based plant detection was feasible and that the robot’s subsystems could work together. The BoniRob autonomous multipurpose robotic platform (see Figure 12) has a wavelength-matched illumination system for capturing high-resolution image data. It was used for weed detection and precision spraying [32][78] and for ground intervention measurements [33][79]. BoniRob navigated autonomously along crop rows using 3D laser scans or GNSS.

Lamm et al. (2002) developed a robotic system for weed control in cotton fields that distinguishes weeds from cotton plants and applies herbicides precisely. A machine vision algorithm determined the diameter of the inscribed leaf circle to identify the plant species. Field tests showed a spray efficiency of 88.8% [34][80]. Similarly to Astrand et al. (2002) [31][77], Blasco et al. (2002) [35][81] developed a weeding robot with two separate machine vision systems: one for in-row navigation and the other for weed detection. Precise targeted weeding was performed with an end-effector that delivered an electrical discharge [35][81]. Bawden et al. (2017) [36][82] developed an autonomous robot platform with a heterogeneous weeding array. Its weeding mechanism combines machine vision for weed detection and classification with a weeding array that uses both precise spraying and hoeing to destroy weeds [36][82].

These examples show that robotic technologies are changing current practices in agricultural technology, particularly in autonomous weed control. The steady increase in research and development in this area will inevitably have a significant impact on traditional agricultural practices.

2.2.2. In Forestry

Timber harvesting is physically demanding and risky, as forest workers often work manually and are exposed to heavy and fast-moving objects such as trees, logs, and dangerous machinery. Over time, timber harvesting has become more mechanized to increase worker safety, productivity, and environmental sustainability. In the context of increasing productivity through machine use, Ringdahl (2011) [37][83] found that human operators can become a bottleneck because they cannot work as fast as the machines’ potential capacity allows. In trafficable terrain, harvesters and forwarders represent the highest level of mechanization, yet they are still essentially controlled manually by human operators using joysticks. One way to overcome this human limitation of machine capacity is to change forest working methods so that human activities are reduced to a minimum or are no longer required, as in autonomous vehicles [38][84]. Autonomous robotic systems are already being used in controlled workspaces such as factories and in simple agricultural environments. In more complex environments such as forests, however, autonomous machines are still at the research and development stage. One of the biggest challenges is on-the-fly navigation in the forest. The most common approach for autonomous navigation in open terrain, as in agriculture, is based on GNSS. However, absorption of the GNSS signal by the forest canopy leads to position errors that can reach 50 m or more, which requires solutions independent of the GNSS signal [39][85]. Besides localizing the forest machine’s own position, autonomous navigation in forests must also take into account the complex terrain and obstacles such as understory and, above all, trees. In recent years, remote sensing methods have increasingly been developed to generate digital twins of forests based on terrestrial, airborne, or spaceborne sensor technologies.
Gollob et al. (2020) [40][86] showed that personal laser scanning (PLS) can capture and automatically map terrain information, individual tree parameters, and entire stands in a highly efficient way. These data can serve as a navigation basis for autonomous forest machines and for optimized operational harvest planning [39][85]. Rossmann (2010) [39][85] showed that an initial guess of the forest machine’s position can be made using an “imprecise” GNSS sensor. 2D laser scanners or stereo cameras on the forest machine (e.g., [41][42][43][87,88,89]) can detect tree positions in the immediate neighborhood of the machine (local tree pattern). The position of the forest machine can then be determined efficiently and precisely by tree pattern matching between the stand map (global tree pattern, e.g., from PLS) and the local tree pattern [39][85]. The initial GNSS guess of the machine position makes the pattern matching more time-efficient. Apart from navigation, research is also being done on the type of locomotion of autonomous forest machines. Machines that seemed futuristic just a few years ago are already in use or available as prototypes. For example, New Zealand scientists and engineers used the concept of animals moving slowly from branch to branch to build a tree-to-tree locomotion machine (swinging machine) [38][84] (see Figure 23). To date, the swinging harvester has been radio-controlled. Nevertheless, given the navigation and sensor fusion challenges outlined above, the path toward an autonomous, soil-conserving forestry machine is mapped out.

Figure 23.

Tree-to-tree robot (image taken by SCION NZ Forest Research Institute, used with permission from Richard Parker).
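The tree pattern matching described for Rossmann (2010) can be illustrated with a deliberately simple sketch. It makes three assumptions:

- a stand map of tree positions in a local metric frame;
- tree positions detected in the machine frame;
- a GNSS guess that only bounds the search window and is not trusted as the answer.

A brute-force grid search over position and heading then picks the pose whose transformed local pattern best fits the stand map. This is a stand-in for illustration, not Rossmann's algorithm. The map coordinates, the 0.5 m / 30° grid, the square ±6 m window, and the 2 m distance cap are hypothetical.

```python
import math


def to_world(pose, local_point):
    """Transform a point from the machine frame into the stand-map frame."""
    x, y, heading_deg = pose
    t = math.radians(heading_deg)
    dx, dy = local_point
    return (x + math.cos(t) * dx - math.sin(t) * dy,
            y + math.sin(t) * dx + math.cos(t) * dy)


def to_local(pose, map_point):
    """Inverse of to_world: express a stand-map point in the machine frame."""
    x, y, heading_deg = pose
    t = math.radians(heading_deg)
    wx, wy = map_point[0] - x, map_point[1] - y
    return (math.cos(t) * wx + math.sin(t) * wy,
            -math.sin(t) * wx + math.cos(t) * wy)


def match_score(pose, local_trees, stand_map, cap=2.0):
    """Sum of squared distances from each observed tree to its nearest mapped tree.
    Distances are capped so that one unmatched tree cannot dominate the score."""
    score = 0.0
    for p in local_trees:
        wx, wy = to_world(pose, p)
        d = min(math.hypot(wx - tx, wy - ty) for tx, ty in stand_map)
        score += min(d, cap) ** 2
    return score


def localize(gnss_guess, local_trees, stand_map, radius=6.0, step=0.5,
             headings=range(0, 360, 30)):
    """Exhaustive search in a square window around the GNSS guess."""
    gx, gy = gnss_guess
    n = int(radius / step)
    best_pose, best_score = None, math.inf
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            for h in headings:
                pose = (gx + i * step, gy + j * step, h)
                s = match_score(pose, local_trees, stand_map)
                if s < best_score:
                    best_pose, best_score = pose, s
    return best_pose, best_score


# Hypothetical stand map (m), e.g., tree positions derived from a PLS survey.
STAND_MAP = [(3.2, 4.1), (7.9, 2.5), (12.4, 8.8), (16.1, 3.3), (18.7, 12.6),
             (22.3, 17.9), (25.8, 11.4), (19.4, 20.2), (14.6, 16.7),
             (27.5, 22.1), (9.3, 14.2), (30.1, 6.8)]

# Simulate the local tree pattern a scanner would see from the (unknown) true pose.
true_pose = (20.0, 15.0, 30)
observed = [to_local(true_pose, t) for t in STAND_MAP
            if math.dist(t, true_pose[:2]) < 8.0]

# The GNSS guess is 5 m off here; the +/-6 m window still contains the true pose.
pose, score = localize((24.0, 12.0), observed, STAND_MAP)
print(pose, round(score, 6))
```

The number of candidate positions grows with the square of the window size. A better GNSS guess, and therefore a smaller window, directly shortens the search, which mirrors the role the initial guess plays in making the matching more time-efficient. A practical system would more likely refine the pose with a point-set registration method rather than exhaustive search.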

2.3. Automated AI Systems

2.3.1. In Agriculture

As previously mentioned, autonomous AI systems are relatively advanced and under constant development, and these developments and upgrades make the machines more efficient. In addition, more and more systems in modern tractors and harvesters are becoming fully automated to minimize the operator’s workload. The two most important domains of automation are situation awareness and process monitoring. For example, machine vision guidance systems are already widely used in modern tractors and harvesters. They allow the machine to align itself automatically with the harvest line without the operator’s help, so that the operator can focus on other processes in the meantime [44][90]. Infrared 3D camera systems on harvesters continuously monitor and control bin placement, allowing the operator to focus on the harvesting process [45][91]. Process monitoring is particularly pronounced in harvesting operations, where speed must be constantly controlled and adjusted according to the operation being performed [46][92]. The precise application of fertilizers and herbicides is also usually monitored and controlled automatically throughout the process. For this purpose, position data from a GNSS-based guidance system and readings from real-time sensors (e.g., the Claas Crop Sensor [47][93]) are exchanged among the tractor, the application device, and the task controller via the terminal. This practice has been established for some time [48][94].

2.3.2. In Forestry

Cable-yarding technologies are the basis for efficient and safe timber harvesting on steep slopes. To guarantee low harvesting costs and low environmental impact on the remaining trees and soil, the machine position and cable road must be carefully planned. For this planning, usually only imprecise information about the terrain and the forest stands is available. Collecting stand and terrain information with traditional measuring devices such as calipers, hypsometers, and theodolites would be labor-intensive, time-consuming, and prone to various errors, and thus limited in spatial and temporal extent [49][95]. Consequently, the cable road layout is still determined by experts based on rules of thumb and empirical knowledge [50][96]; such rules are formulated, for example, in Heinimann (2003) [51][97]. Automatic (optimization) methods to solve this problem have already been formulated in countries such as the USA and Chile [52][53][54][98,99,100]. However, these optimization and planning methods are largely based on the assumption of clear-cutting and do not use modern sensor technology to capture individual tree and terrain data. High-resolution 3D data in the form of a digital twin of the forest, combined with well-known optimization functions and expert knowledge, are a key factor in optimizing timber harvest planning. In this way, automatically optimized cable road planning can help to minimize the environmental impact and the costs of cable yarding (e.g., [50][55][96,101]). In cable yarding, other examples of automation are also already being used in practice. Most cable yarding systems follow a typical sequence of work phases: traveling out unloaded, accumulating the load, traveling in loaded, and dropping the load at the landing. Two of these phases, unloaded and loaded travel, have been automated, so that the operator can meanwhile work with an integrated processor [42][88]. Pierzchała et al. (2018) [56][102] developed a method for automatically recognizing cable yarding work phases using multiple sensors on the carriage and the tower yarder. Further automation steps in cable yarding are conceivable in the future. For example, the carriage could be equipped with additional sensors for orientation, such as laser scanners or stereo cameras.

2.4. Assisted AI Systems

2.4.1. In Agriculture

Assisted AI systems in agriculture overlap strongly with automated AI systems. In agricultural applications, machines can perform certain repetitive tasks independently, without human intervention. In the decision-making loop, however, humans make the final decisions [57][103]. For example, a wide variety of non-invasive sensors in fruit and vegetable processing (e.g., drying processes) can be combined with AI technologies. Such combinations can be used to control drying processes and changes in the shape of fruits and vegetables, and to predict optimum drying process parameters [58][104]. Several systems, e.g., situation awareness systems such as machine vision guidance systems, perform their work automatically but can still be manually overridden by an operator [59][105]. One example is the precision application of fertilizer and pesticides, where sprayers can also work in fully manual mode [44][90]. In modern tractors, advanced steering control systems can adjust steering performance to suit current conditions [60][106]. Furthermore, these technologies can improve fuel efficiency [61][107].

2.4.2. In Forestry

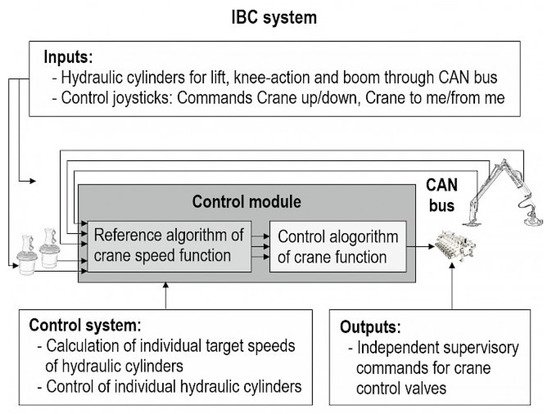

Operating forestry cranes productively and with a low impact on the environment requires a lot of knowledge and experience. Trained forestry machine operators are essential for efficient timber production, in particular to reduce harvesting damage to the remaining trees and to reduce machine downtime. Ovaskainen et al. (2004) [62][108] showed that the productivity of trained cut-to-length (CTL) harvester operators varies by about 40% under similar forest stand conditions. The study hypothesizes that these efficiency differences are related to aspects of the operators’ crane experience, namely deliberate practice of motor skills, situational awareness, and visual perception. The state of the art in crane control is the use of two analog joysticks, operated with both hands and/or the fingers. The joysticks provide electrical or hydraulic signals that control the flow rate of the hydraulic system and thus enable precise movement of the individual hydraulic cylinders. Motor learning is the key to smooth crane movements and harvester head control. Forest machine operators make approximately 4000 control inputs per hour, many of which are repeated again and again but must always be applied in a targeted manner [63][109]. Purfürst (2010) [64][110] found that learning to operate a harvester took 9 months on average. Furthermore, the operator must also master decision making and planning to achieve an appropriate level of performance. In summary, harvester and forwarder operation, especially crane operation, is ergonomically, motorically, and cognitively very demanding. To ease this burden, existing forestry machines are constantly being improved. Modern sensors (e.g., for diameter and length measurement), combined with intelligent data processing, can assist certain operations. One example is processing stems into logs by automatically moving the harvester head to predetermined positions depending on the stem shape and log quality.
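Deciding where to cut a stem into logs (bucking) can be framed as an optimization over cut positions. The sketch below solves it with dynamic programming under several assumptions, all labeled in the code:

- a linear taper model;
- a made-up assortment and price table;
- Huber's volume formula;
- a cut resolution of 1 dm.

It is a textbook illustration of the optimization idea, not the bucking algorithm of any harvester manufacturer.

```python
import math

# Hypothetical assortment table: (name, length in dm, minimum top diameter in cm, price per m^3)
ASSORTMENTS = [
    ("sawlog", 50, 20.0, 90.0),
    ("sawlog", 40, 20.0, 85.0),
    ("pulpwood", 30, 8.0, 35.0),
]


def diameter_cm(z_dm, butt_diam_cm=40.0, taper_cm_per_m=1.5):
    """Hypothetical linear taper model: stem diameter (cm) z_dm decimetres above the butt."""
    return butt_diam_cm - taper_cm_per_m * z_dm / 10.0


def log_value(start_dm, length_dm, price_per_m3):
    """Value of one log; volume from Huber's formula (mid-length cross-section x length)."""
    mid_diam_m = diameter_cm(start_dm + length_dm / 2) / 100.0
    volume_m3 = math.pi / 4 * mid_diam_m ** 2 * (length_dm / 10.0)
    return volume_m3 * price_per_m3


def buck(stem_length_dm, assortments=ASSORTMENTS):
    """Choose cut positions (1 dm resolution) that maximize total log value.

    best[p] is the highest value obtainable from position p up to the top.
    At each p we either discard 1 dm as waste or cut any feasible log.
    """
    n = stem_length_dm
    best = [0.0] * (n + 1)
    choice = [None] * (n + 1)  # None = waste, otherwise (name, length_dm)
    for p in range(n - 1, -1, -1):
        best[p], choice[p] = best[p + 1], None
        for name, length, min_top, price in assortments:
            end = p + length
            if end > n or diameter_cm(end) < min_top:
                continue  # log would exceed the stem or be too thin at its top end
            value = log_value(p, length, price) + best[end]
            if value > best[p]:
                best[p], choice[p] = value, (name, length)
    # Walk from the butt and collect the chosen cuts.
    logs, p = [], 0
    while p < n:
        if choice[p] is None:
            p += 1
        else:
            name, length = choice[p]
            logs.append((name, p, length))
            p += length
    return best[0], logs


total, logs = buck(180)  # hypothetical 18 m stem
for name, start_dm, length_dm in logs:
    print(f"{name}: from {start_dm / 10:.1f} m, length {length_dm / 10:.1f} m")
print(f"total value: {total:.2f}")
```

In an assisted system, the taper would come from the harvester's own diameter and length measurements rather than a fixed model. The resulting cut positions are the kind of predetermined positions to which the harvester head can be moved automatically.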
Such assistance reduces the workload of the harvester operator and, at the same time, optimizes the profit on the timber. Other good examples of how intelligent assistance can make work easier and more efficient for the machine operator are the intelligent crane control systems from John Deere Forestry Oy (IBC: Intelligent Boom Control [65][111], see Figure 34), Komatsu (Smart Crane [66][112]), and Palfinger (Smart Control [67][113]). In such systems, the crane is controlled via the crane tip (harvester head or grapple), and algorithms automatically coordinate the crane’s movements. The operator therefore does not control individual rams but only needs to concentrate on the crane tip and steer it with the joysticks. The system also dampens the movements of the cylinders and stops jerky load thrusts in the end positions, enabling jerk-free operation. This smart control reduces the operator’s fatigue, making them more productive overall. Manner et al. (2019) [68][114] compared IBC with conventional crane control. With IBC, the forwarder’s machine working time was 5.2% shorter during loading and 7.9% shorter during unloading. It has also been shown that such smart crane control systems make it much easier to learn how to operate harvesters or forwarders [42][69][88,115].

2.5. Augmenting AI Systems

2.5.1. In Agriculture

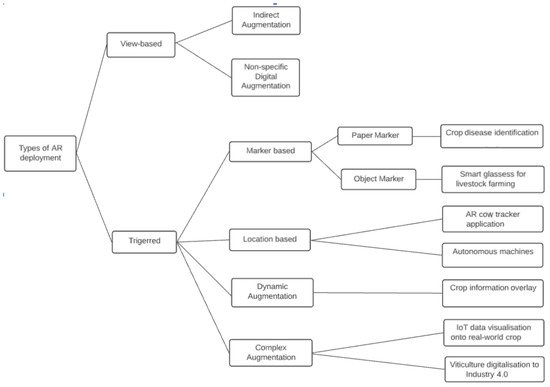

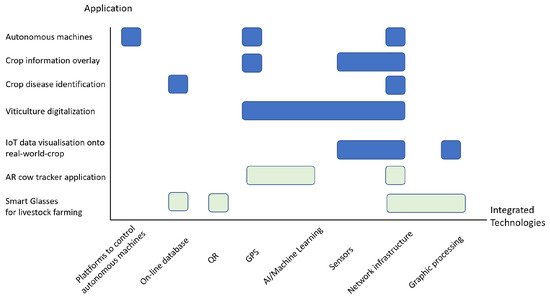

A useful taxonomy of augmented reality (AR) applications is given by Hurst et al. (2021) [71][117] (see Figure 45 and Figure 56). It distinguishes (1) marker-based, (2) markerless (location-based), (3) dynamic augmentation, and (4) complex augmentation, subdivided by AR type. Based on these four classes, there are many potential applications of AR in agriculture.

Figure 45. Types of AR deployment within crop and livestock management. Please refer to the excellent overview by Hurst et al. (2021) [71].


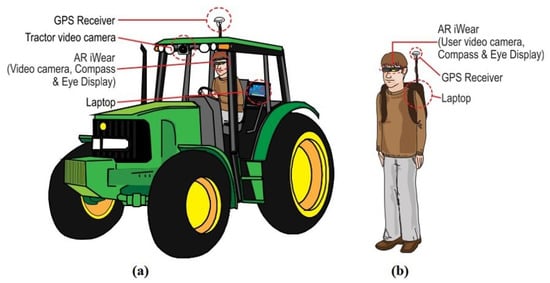

Figure 67.

AR-based positioning assist system: (a) tractor mounted, (