
Please note this is a comparison between Version 2 by Rita Xu and Version 1 by Iulian Ogrezeanu.

The industrial environment has gone through a fourth revolution, also called “Industry 4.0”, whose main aspect is digitalization. Devices employed in industrial processes are connected to a network called the industrial Internet of things (IIOT). Because IIOT allows manufacturers to track every device, failures have become easier to prevent or to resolve quickly. In particular, the large amount of available data has enabled the use of artificial intelligence (AI) algorithms to improve industrial applications in many ways (e.g., failure detection, process optimization, and abnormality detection).

- artificial intelligence

- industrial applications

- privacy preservation

1. Introduction

Industry 4.0 [1] has introduced advanced technology in manufacturing to make it more client-driven and customizable, leading manufacturers to strive for continuous improvement in quality and productivity. To achieve smart manufacturing, which can accommodate variable product demand, intelligent systems were introduced in industrial units.

Recent developments in Internet of things (IOT) [2], Cyber-Physical Production Systems (CPPS) [3], and big data [4] led to major improvements in productivity, quality, and monitoring of industrial processes. Artificial intelligence (AI) plays an important role in industry, as more and more manufacturers are implementing AI in their processes.

Developed and employed to perform tasks that normally require human discernment, AI is currently a popular topic. Because it can interpret data to solve complex problems [5], AI is also a good fit for factories [6]. It enables industrial systems to process data, perceive their environment, and learn from experience, becoming better at a task by dealing with it and its data repeatedly.

Artificial intelligence [7] is a subject that has preoccupied researchers almost since computers were invented. AI includes every algorithm that enables machines to perform tasks that require discernment, rather than simply applying a formula or following strict rule-based logic. Thus, if researchers provide an AI algorithm with a dataset of inputs and corresponding outputs, the algorithm can derive a logic that maps the inputs to the outputs. In contrast, in classic programming, humans provide the logic. Of course, in many situations AI is not necessary (e.g., if the problem can be solved through a mathematical formula). In the last two decades, thanks to the increase in computational power, AI has become very popular and has been used in several domains (medicine, marketing, industry, etc.), with various subdomains of algorithms such as machine learning (ML) [8] and deep learning (DL).
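The contrast between classic programming and learning a logic from input/output pairs can be illustrated with a minimal Python sketch. It is a hypothetical example, not taken from the cited works: an ordinary least-squares fit stands in for "an AI algorithm", and the temperature-conversion rule and all function names are illustrative assumptions.

```python
# Hypothetical sketch: "classic programming" vs. "learning the logic from data".
# Ordinary least squares stands in for a learning algorithm; it is a
# deliberately minimal illustration, not a production ML method.


def classic_rule(temperature_c):
    # Classic programming: a human writes the logic explicitly
    # (here, a Celsius-to-Fahrenheit conversion).
    return 1.8 * temperature_c + 32.0


def learn_linear_mapping(xs, ys):
    """Fit y = a*x + b by ordinary least squares and return (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


# Learning: only example inputs and outputs are provided; the algorithm
# derives the mapping. The outputs are generated from the known rule purely
# for illustration; in practice they would be recorded data.
inputs = [-10.0, 0.0, 25.0, 40.0, 100.0]
outputs = [classic_rule(x) for x in inputs]
a, b = learn_linear_mapping(inputs, outputs)
print(f"learned mapping: y = {a:.2f} * x + {b:.2f}")  # y = 1.80 * x + 32.00
```

The learner never sees the formula in `classic_rule`; it recovers the same logic from the examples alone, which is the sense in which AI "yields a logic" rather than being given one.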

Machine learning is a popular subdomain of AI, composed of statistical algorithms that learn from data to create mathematical models for intelligent systems. Today, recommendation systems use ML to suggest items that users may like on the basis of their preferences (e.g., music, ads, and shopping). In the medical environment, ML models help clinicians during diagnostic processes (decision support systems) [9].

Deep learning [10] is a widely used family of learning algorithms that rely on neural networks [11] with more than one hidden layer of neurons. Neural networks are inspired by the human brain: their computational units (named neurons) are interconnected and exchange information to extract features from input data, thus enabling the mapping of input data to output data. However, artificial neural networks do not work in the same way as biological ones, because their neurons exchange information using real numbers, whereas biological neurons exchange information through electrical impulses. Neurons are organized in layers; the first and last layers, named the input layer and output layer, are those that interact with the external environment, while the intermediate layers are called hidden layers.
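The layered structure described above can be sketched as a forward pass in plain Python. The layer sizes, the ReLU activation, and the weight values are illustrative assumptions; in a real network the weights are learned from data rather than written by hand.

```python
def relu(values):
    # Rectified linear activation, applied element-wise.
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    # Each neuron j outputs a weighted sum of all inputs plus a bias;
    # weights[j] holds the incoming weights of neuron j.
    return [sum(w * x for w, x in zip(w_row, inputs)) + b
            for w_row, b in zip(weights, biases)]


def forward(x, layers):
    """Propagate x through the hidden layers (ReLU) and a linear output layer."""
    activation = x  # the input layer simply holds the input values
    for i, (weights, biases) in enumerate(layers):
        z = dense(activation, weights, biases)
        is_output_layer = i == len(layers) - 1
        activation = z if is_output_layer else relu(z)
    return activation


# Hypothetical network: 2 inputs -> 3 hidden -> 2 hidden -> 1 output.
# The weights are arbitrary illustrative values, not trained parameters.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer 1
    ([[0.2, 0.5, 0.3], [0.7, -0.1, 0.4]], [0.05, 0.0]),          # hidden layer 2
    ([[1.0, -1.0]], [0.2]),                                      # output layer
]
output = forward([1.0, 2.0], layers)
print(output)  # approximately [0.68]
```

The real numbers passed between `dense` calls are the "information" that artificial neurons exchange; having two hidden layers is what makes this a (very small) deep network.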

Multiple review papers have described the many ways in which AI is employed in manufacturing. In [12], Sharma et al. presented a theoretical framework for machine learning in manufacturing, intended to guide researchers conducting studies in this field, and pointed to several review papers that targeted the use of ML in the industrial environment. Rai et al. [13] discussed the use of AI in the context of the fourth industrial revolution. To highlight the potential advantages and flaws of using AI in industry, Bertolini et al. [14] reviewed the literature and classified research on the basis of the algorithm and application domain. Sarker [15] reviewed the use of machine learning in real-world applications such as cybersecurity, agriculture, smart cities, and healthcare. In [16], Rao summarized the use of AI in different domains such as healthcare and travel.

In [17], four important challenges were identified: data availability, data quality, cybersecurity and privacy preservation, and interpretability/explainability. While the first two have been extensively discussed in the past and are well known, in this paper, the researchers focus on topics related to the last two challenges.

AI/ML relies extensively on existing and future data to deliver accurate and reliable results, yet collecting large volumes of data for centralized processing raises severe privacy concerns. The first of the two challenges addressed here therefore stems from the fact that, although industrial data are abundant, they are hard to circulate and access due to privacy and intellectual property (IP) constraints, which also hinders the development of computer-based solutions. Industrial AI systems are difficult to realize because the data needed to develop and train them exist but are not accessible. Moreover, if training datasets lack diversity, algorithms may be biased or skewed toward certain types of data/events [18].

Secondly, AI algorithms should be explainable and interpretable. ML algorithms are generally associated with the concept of a ‘black box’, i.e., the rationale for how the outputs are inferred from the input data is unclear [19]. Algorithmic decisions should, however, ideally provide some form of explainability [20]. In general, explanations concern the attribution of the worth of input features toward the final model predictions, whereas interpretability refers to the deterministic propagation of information from the input to the response function.
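One standard way to attribute the worth of input features toward a single prediction is the Shapley value from cooperative game theory, on which Shapley additive explanations [23] are built. The sketch below computes exact Shapley values by enumerating all feature coalitions. The model, its feature names, and the convention of replacing absent features with a baseline value are illustrative assumptions; the SHAP method itself uses approximations and other conventions.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attributions for one prediction model(x).

    Features outside a coalition are replaced by their baseline value
    (one common convention among several). The cost grows as 2^n, so
    exact enumeration is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        masked = [x[k] if k in coalition else baseline[k] for k in range(n)]
        return model(masked)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical "black-box" model with an interaction between two features.
def model(features):
    temperature, vibration, load = features
    return 2.0 * temperature + 0.5 * vibration * load


x = [3.0, 4.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)  # approximately [6.0, 2.0, 2.0]
# Efficiency property: the attributions sum to model(x) - model(baseline).
print(sum(phi), model(x) - model(baseline))
```

The additive term is credited entirely to `temperature`, while the interaction term is split equally between `vibration` and `load`, and the attributions add up to the prediction relative to the baseline.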

ML is usually regarded as a ‘black box’: once a model is trained, its logic for determining the outputs from the inputs is not directly available, and further experiments and methods are needed to understand how the trained model analyzes and processes the data. For stakeholders, however, it is important to understand how and why a solution is being proposed. Hence, explainable AI, with its interpretability tools, is key. Model-agnostic methods [21] have been studied in past research with good results; most of this work targeted local interpretable model-agnostic explanations (LIME) [22] and Shapley additive explanations (SHAP) [23]. An important advantage of these methods is that they are compatible with a multitude of ML models. On the other hand, model-specific interpretation methods [24] have the disadvantage of being compatible only with specific model types.
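The model-agnostic idea behind LIME [22] can be sketched as follows: perturb the input around the instance of interest, query the black-box model, weight each sample by its proximity, and fit a simple linear surrogate whose slopes serve as the local explanation. This is a simplified, hypothetical sketch: the Gaussian sampling, the kernel width, the use of plain weighted least squares, and the example model are assumptions, and the reference LIME implementation differs in several details (e.g., interpretable feature representations and regularized fitting).

```python
import math
import random


def solve(a, b):
    """Solve a @ beta = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    beta = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * beta[c] for c in range(r + 1, n))
        beta[r] = (m[r][n] - tail) / m[r][r]
    return beta


def lime_style_explanation(model, x, n_samples=500, scale=0.1,
                           kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x; return its slopes."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y,
    # with a leading column of ones for the intercept.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]    # perturbed sample
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)   # proximity kernel
        row = [1.0] + z
        y = model(z)                                    # black-box query only
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    beta = solve(xtwx, xtwy)
    return beta[1:]  # drop the intercept, keep per-feature slopes


def black_box(features):
    # Hypothetical nonlinear model; the explainer never looks inside it.
    return math.sin(features[0]) + features[1] ** 2


slopes = lime_style_explanation(black_box, [0.0, 1.0])
print(slopes)  # roughly [1.0, 2.0], the model's local sensitivity at this point
```

Because the explainer only calls `model(z)`, the same function works unchanged for any model type, which is the compatibility advantage of model-agnostic methods mentioned above.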

2. Privacy Preservation in Industrial AI Applications

2.1. State of the Art in Privacy-Preserving AI

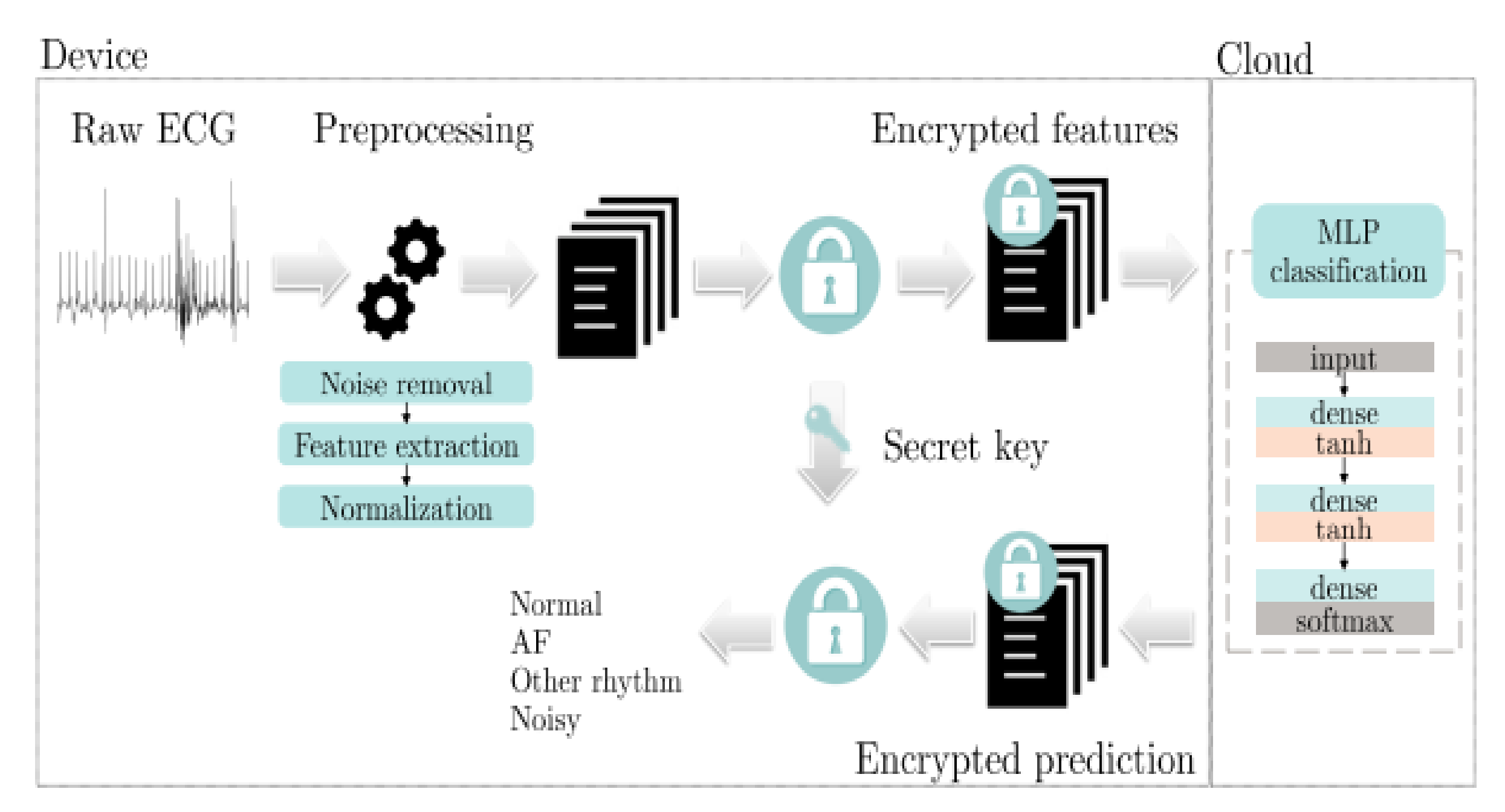

One of the most used solutions in privacy-preserving AI is homomorphic encryption (HE). HE allows users to perform computations on encrypted data, yielding results that are also encrypted; once decrypted, these results are identical to those obtained by performing the same operations on unencrypted data. This type of encryption is particularly valuable when sensitive data (e.g., healthcare data) must be processed. Homomorphic encryption was introduced and developed independently from AI, but its large computational overhead limits its real-world usage. Since inference with a trained AI model is typically fast, i.e., its computational cost is small, extending AI with HE allows for privacy-preserving data processing while still obtaining results in a reasonable amount of time. One of the first notable approaches to using homomorphic encryption with neural networks was proposed by Orlandi et al. [25], who developed a method for processing encrypted data with a neural network that protects not only the data but also the neural network itself (weight values and hyperparameters). Figure 1 illustrates the encrypted data exchange between server and client.
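The homomorphic property can be illustrated with a toy version of the Paillier cryptosystem, an additively homomorphic scheme chosen here only for brevity; the cited works are not claimed to use this exact construction. In the sketch, a client encrypts its inputs, a server computes the weighted sum of a single neuron directly on the ciphertexts, and only the client can decrypt the result. The tiny primes, the integer encoding of inputs and weights, and all names are assumptions for illustration: the parameters are far too small to be secure, and nonlinear activations cannot be evaluated with an additive scheme alone.

```python
import math
import random

# Toy Paillier key (insecure: real deployments use primes of ~1024+ bits).
P, Q = 1009, 1013
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)  # modular inverse; valid because the generator is g = N + 1
_rng = random.SystemRandom()


def encrypt(m):
    """Encrypt an integer 0 <= m < N with fresh randomness r."""
    while True:
        r = _rng.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N2) * pow(r, N, N2)) % N2


def decrypt(c):
    u = pow(c, LAM, N2)
    return ((u - 1) // N * MU) % N


def add_encrypted(c1, c2):
    return (c1 * c2) % N2  # Enc(a) * Enc(b) decrypts to a + b


def scale_encrypted(c, k):
    return pow(c, k, N2)  # Enc(a) ** k decrypts to k * a


# Client: encrypts its (non-negative, integer-encoded) input features.
x = [3, 5, 2]
encrypted_x = [encrypt(v) for v in x]

# Server: evaluates a neuron's weighted sum on ciphertexts with plaintext weights,
# never seeing the client's inputs.
weights, bias = [4, 1, 7], 10
acc = encrypt(bias)
for c, w in zip(encrypted_x, weights):
    acc = add_encrypted(acc, scale_encrypted(c, w))

# Client: decrypts and obtains 4*3 + 1*5 + 7*2 + 10.
print(decrypt(acc))  # 41
```

Each ciphertext is randomized, so encrypting the same value twice gives different ciphertexts, yet the decrypted weighted sum matches the plaintext computation exactly.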

2.2. Review of Privacy-Preserving AI in Industrial Applications

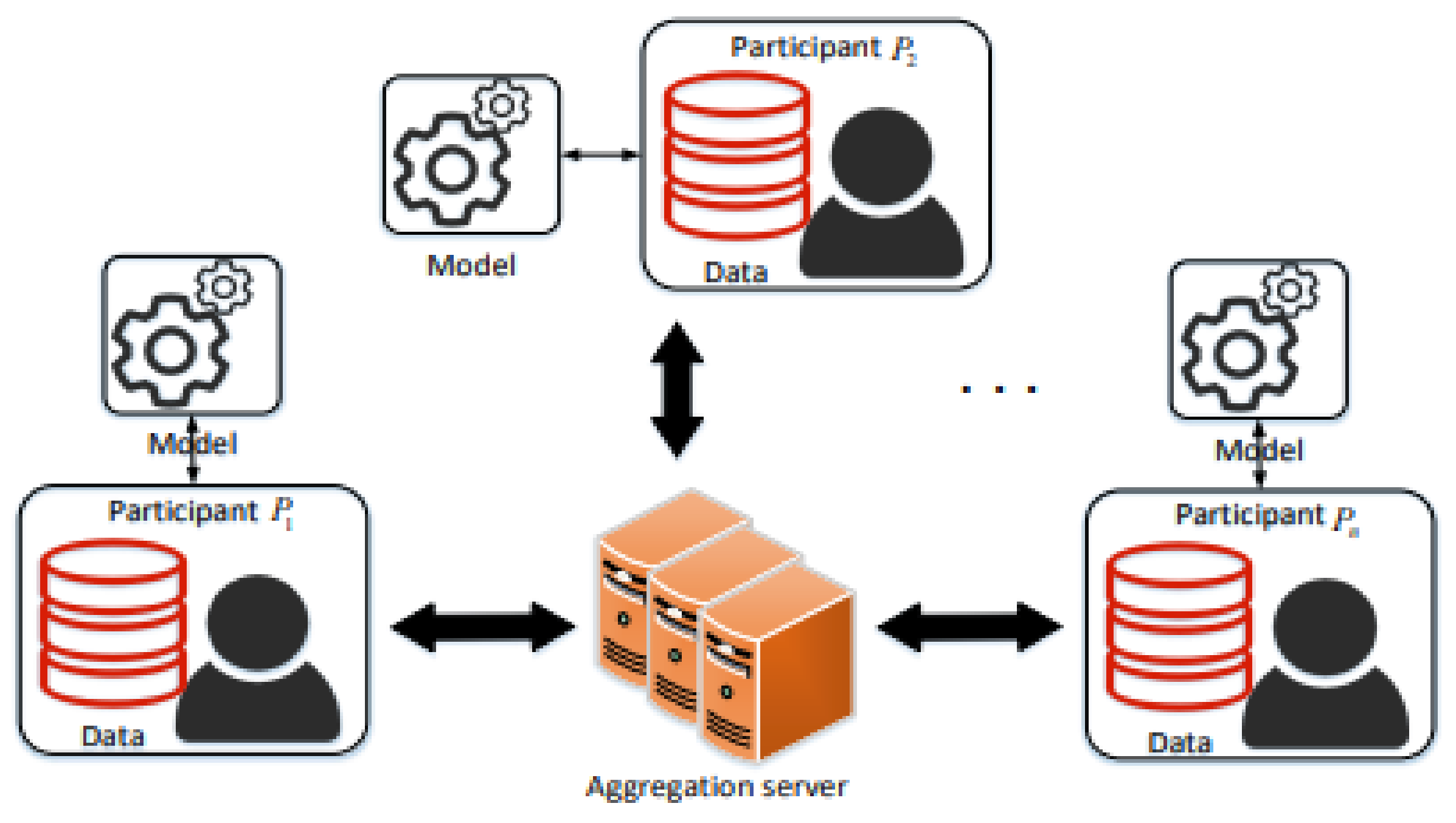

In this section, the researchers focus on studies that targeted privacy-preserving artificial intelligence in industrial applications. Some of the main subjects of industry-oriented research are the industrial Internet of things (IIOT) and Industry 4.0. A method termed verifiable federated learning (VFL) was proposed by Fu et al. [37] for privacy preservation in industrial IOT; it employs federated learning while protecting the gradients shared by participants and allowing the correctness of the aggregated gradients to be verified. Figure 2 illustrates the proposed federated learning framework.

Figure 2. Federated learning framework proposed by Fu et al.
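The core federated learning loop can be sketched in a few lines: each client computes a gradient on its own private data, only the gradients are sent to the server, and the server averages them (weighted by local sample counts) to update a shared model. This is a minimal, hypothetical FedSGD-style sketch for a linear model; it is not the VFL scheme of Fu et al. [37], and it includes none of VFL's protection or verification of the shared gradients. The datasets, learning rate, and number of rounds are illustrative assumptions.

```python
# Minimal federated sketch: clients share gradients, never their raw data.
# Plain gradient averaging for y_hat = w*x + b; no encryption or verification.


def local_gradient(model, data):
    """Mean-squared-error gradient on one client's private data."""
    w, b = model
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return grad_w, grad_b, n


def federated_round(model, clients, lr=0.1):
    """Server step: average client gradients weighted by sample count."""
    updates = [local_gradient(model, data) for data in clients]
    total = sum(n for _, _, n in updates)
    avg_w = sum(gw * n for gw, _, n in updates) / total
    avg_b = sum(gb * n for _, gb, n in updates) / total
    w, b = model
    return w - lr * avg_w, b - lr * avg_b


# Hypothetical private datasets of two industrial sites, both following y = 2x + 1.
clients = [
    [(-2.0, -3.0), (-1.0, -1.0), (0.0, 1.0)],
    [(1.0, 3.0), (2.0, 5.0)],
]
model = (0.0, 0.0)
for _ in range(200):
    model = federated_round(model, clients)
print(model)  # approximately (2.0, 1.0)
```

With sample-count weighting, the averaged gradient equals the gradient on the pooled data, so the shared model converges as if trained centrally. Shared gradients can still leak information about the training data, which is why schemes such as VFL add protection on top of this basic loop.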

References

- Ghobakhloo, M. Industry 4.0, digitization, and opportunities for sustainability. J. Clean Prod. 2020, 252, 119869.

- Kumar, S.; Tiwari, P.; Zymbler, M. Internet of Things is a revolutionary approach for future technology enhancement: A review. J. Big Data 2019, 6, 111.

- Cardin, O. Classification of cyber-physical production systems applications: Proposition of an analysis framework. Comput. Ind. 2019, 104, 11–21.

- Wang, J.; Yang, Y.; Wang, T.; Sherratt, R.S.; Zhang, J. Big data service architecture: A survey. J. Internet Technol. 2020, 21, 393–405.

- Chen, Z.; Ye, R. Principles of Creative Problem Solving in AI Systems. Sci. Educ. 2022, 31, 555–557.

- Fahle, S.; Prinz, C.; Kuhlenkotter, B. Systematic review on machine learning (ML) methods for manufacturing processes–Identifying artificial intelligence (AI) methods for field application. Procedia CIRP 2020, 93, 413–418.

- Zhang, C.; Lu, Y. Study on artificial intelligence: The state of the art and future prospects. J. Ind. Inf. Integr. 2021, 23, 100224.

- Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A Survey on Bias and Fairness in Machine Learning. ACM Comput. Surv. 2022, 54, 1–35.

- Varghese, J.; Kleine, M.; Gessner, S.I.; Sandmann, S.; Dugas, M. Effects of computerized decision support system implementations on patient outcomes in inpatient care: A systematic review. J. Am. Med. Inform. Assn. 2018, 25, 593–602.

- Kotsiopoulos, T.; Sarigiannidis, P.; Ioannidis, D.; Tzovaras, D. Machine Learning and Deep Learning in smart manufacturing: The Smart Grid paradigm. Comput. Sci. Rev. 2021, 40, 100341.

- Abiodun, O.I.; Jantan, A.; Omolara, A.E.; Dada, K.V.; Mohamed, N.A.; Arshad, H. State-of-the-art in artificial neural network applications: A survey. Heliyon 2018, 4, e00938.

- Sharma, A.; Zhang, Z.; Rai, R. The interpretive model of manufacturing: A theoretical framework and research agenda for machine learning in manufacturing. Int. J. Prod. Res. 2021, 59, 4960–4994.

- Rai, R.; Tiwari, M.K.; Ivanov, D.; Dolgui, A. Machine learning in manufacturing and industry 4.0 applications. Int. J. Prod. Res. 2021, 59, 4773–4778.

- Bertolini, M.; Mezzogori, D.; Neroni, M.; Zammori, F. Machine Learning for industrial applications: A comprehensive literature review. Expert Syst. Appl. 2021, 175, 114820.

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160.

- Rao, N.T. A review on industrial applications of machine learning. Int. J. Disast. Recov. Bus. Cont. 2018, 9, 1–9.

- Peres, R.S.; Jia, X.; Lee, J.; Sun, K.; Colombo, A.W.; Barata, J. Industrial Artificial Intelligence in Industry 4.0–Systematic Review, Challenges and Outlook. IEEE Access 2020, 8, 220121–220139.

- Challen, R.; Denny, J.; Pitt, M.; Gompels, L.; Edwards, T.; Tsaneva-Atanasova, K. Artificial intelligence, bias and clinical safety. BMJ Qual. Saf. 2019, 28, 231–237.

- Rai, A. Explainable AI: From black box to glass box. Acad. Mark. Sci. Rev. 2020, 48, 137–141.

- Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A review of Machine Learning Interpretability Methods. Entropy 2020, 23, 18.

- Messalas, A.; Kanellopoulos, Y.; Makris, C. Model-Agnostic Interpretability with Shapley Values. In Proceedings of the 10th International Conference on Information, Intelligence, Systems and Applications (IISA 2019), Patras, Greece, 15–17 July 2019; pp. 1–7.

- Palatnik de Sousa, I.; Maria Bernardes Rebuzzi Vellasco, M.; Costa da Silva, E. Local Interpretable Model-Agnostic Explanations for Classification of Lymph Node Metastases. Sensors 2019, 19, 2969.

- Antwarg, L.; Miller, R.M.; Shapira, B.; Rokach, L. Explaining anomalies detected by autoencoders using Shapley Additive Explanations. Expert Syst. Appl. 2021, 186, 115736.

- Liang, Y.; Li, S.; Yan, C.; Li, M.; Jiang, C. Explaining the black-box model: A survey of local interpretation methods for deep neural networks. Neurocomputing 2019, 419, 168–182.

- Orlandi, C.; Piva, A.; Barni, M. Oblivious Neural Network Computing via Homomorphic Encryption. EURASIP J. Inf. Secur. 2007, 2007, 1–11.

- Vizitiu, A.; Nita, C.I.; Toev, R.M.; Suditu, T.; Suciu, C.; Itu, L.M. Framework for Privacy-Preserving Wearable Health Data Analysis: Proof-of-Concept Study for Atrial Fibrillation Detection. Appl. Sci. 2021, 11, 9049.

- Sun, X.; Zhang, P.; Liu, J.K.; Yu, J.; Xie, W. Private Machine Learning Classification Based on Fully Homomorphic Encryption. IEEE Trans. Emerg. Top. Comput. 2020, 8, 352–364.

- Aslett, L.J.; Esperança, P.M.; Holmes, C.C. A review of homomorphic encryption and software tools for encrypted statistical machine learning. arXiv 2015, arXiv:1508.06574.

- Takabi, H.; Hesamifard, E.; Ghasemi, M. Privacy preserving multi-party machine learning with homomorphic encryption. In Proceedings of the 30th Annual Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 5–10 December 2016.

- Li, J.; Kuang, X.; Lin, S.; Ma, X.; Tang, Y. Privacy preservation for machine learning training and classification based on homomorphic encryption schemes. Inf. Sci. 2020, 526, 166–179.

- Wood, A.; Najarian, K.; Kahrobaei, D. Homomorphic Encryption for Machine Learning in Medicine and Bioinformatics. ACM Comput. Surv. 2021, 53, 1–35.

- Fang, H.; Qian, Q. Privacy Preserving Machine Learning with Homomorphic Encryption and Federated Learning. Future Internet 2021, 13, 94.

- Khan, L.U.; Saad, W.; Han, Z.; Hossain, E.; Hong, C.S. Federated Learning for Internet of Things: Recent Advances, Taxonomy, and Open Challenges. IEEE Commun. Surv. Tutor. 2021, 23, 1759–1799.

- Oh, S.J.; Schiele, B.; Fritz, M. Towards Reverse-Engineering Black-Box Neural Networks. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, 1st ed.; Samek, W., Montavon, G., Vedaldi, A., Hansen, L.K., Müller, K.R., Eds.; Springer: Cham, Switzerland, 2019; Volume 11700, pp. 121–144. ISBN 978-3-030-28954-6.

- Google Cloud. Accelerate Your Transformation with Google Cloud. 2022. Available online: https://cloud.google.com/ (accessed on 10 March 2022).

- Azure Machine Learning. An Enterprise-Grade Service for the End-to-End Machine Learning Lifecycle. 2022. Available online: https://azure.microsoft.com/en-us/services/machine-learning/ (accessed on 10 March 2022).

- Fu, A.; Zhang, X.; Xiong, N.; Gao, Y.; Wang, H.; Zhang, J. VFL: A Verifiable Federated Learning with Privacy-Preserving for Big Data in Industrial IoT. IEEE Trans. Industr. Inform. 2020, 18, 3316–3326.

- Girka, A.; Terziyan, V.; Gavriushenko, M.; Gontarenko, A. Anonymization as homeomorphic data space transformation for privacy-preserving deep learning. Procedia Comput. Sci. 2021, 180, 867–876.

- Zhao, Y.; Zhao, J.; Jiang, L.; Tan, R.; Niyato, D.; Li, Z.; Lyu, L.; Liu, Y. Privacy-Preserving Blockchain-Based Federated Learning for IoT Devices. IEEE Internet Things J. 2021, 8, 1817–1829.

- Gonçalves, C.; Bessa, R.J.; Pinson, P. Privacy-Preserving Distributed Learning for Renewable Energy Forecasting. IEEE Trans. Sustain. Energy 2021, 12, 1777–1787.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

- Li, Y.; Li, J.; Wang, Y. Privacy-Preserving Spatiotemporal Scenario Generation of Renewable Energies: A Federated Deep Generative Learning Approach. IEEE Trans. Industr. Inform. 2021, 18, 2310–2320.

- Kaggle. Casting Product Image Data for Quality Inspection–Dataset. Available online: https://www.kaggle.com/datasets/ravirajsinh45/real-life-industrial-dataset-of-casting-product (accessed on 20 May 2022).

- Popescu, A.B.; Taca, I.A.; Vizitiu, A.; Nita, C.I.; Suciu, C.; Itu, L.M.; Scafa-Udriste, A. Obfuscation Algorithm for Privacy-Preserving Deep Learning-Based Medical Image Analysis. Appl. Sci. 2022, 12, 3997.
