Please note this is a comparison between Version 1 by Ali Kashif Bashir and Version 2 by Camila Xu.

Modern datacenters are reinforcing their computational power and energy efficiency by integrating field programmable gate arrays (FPGAs). The sustainability of this large-scale integration depends on enabling multi-tenant FPGAs. This requirement amplifies the importance of the communication architecture and of a virtualization method with the required features to meet this ambitious objective.

- FPGA virtualization

- datacenters

- network on chip

1. Introduction

Today, datacenters are equipped with heterogeneous computing resources that range from Central Processing Units (CPUs), Graphics Processing Units (GPUs), and Networks on Chip (NoCs) to Field Programmable Gate Arrays (FPGAs), each suited to a certain type of operation, as concluded by Escobar et al. in [1]. They all provide scalability and parallelism and hence open new fronts for the existing body of knowledge in algorithmic optimization, computer architecture, micro-architecture, and platform-based design methods [2]. FPGAs are considered a competitive computational resource for two reasons: higher performance and lower power consumption. The cost of electrical power in datacenters is significant, as it accounts for roughly half of the lifetime cost, as concluded in [3]. This factor alone motivates companies to deploy FPGAs in datacenters and urges the scientific community to exploit High-Performance Reconfigurable Computing (HRC).

Both industry and academia have incorporated FPGAs to accelerate large-scale datacenter services; Microsoft's Catapult is one such example [4]. Putnam et al. chose FPGAs over GPUs because of power demand. The flagship project accelerated the Bing search engine by 95% compared to a software-only solution, at the cost of 10% additional power.
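A back-of-the-envelope calculation makes the power argument concrete. Assuming that "accelerated by 95%" denotes 1.95 times the throughput of the software-only solution, and that the 10% additional power applies to the same servers running the same workload, the implied improvement in performance per watt is:

```latex
\frac{(\text{throughput}/\text{power})_{\text{FPGA}}}{(\text{throughput}/\text{power})_{\text{software}}}
  = \frac{1.95}{1.10} \approx 1.77
```

That is, roughly 77% more work per unit of energy under these assumptions.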

The deployment of FPGAs in datacenters will be neither sustainable nor economical without realizing the multi-tenancy feature of virtualization across multiple FPGAs. To achieve this ambitious goal, the scientific community needs to master two crafts: an interconnect solution, preferably a Network on Chip (NoC), as the communication architecture, and an improved virtualization method with all the features of an operating system. Gathering the state of the art in a survey can foster development in this area and direct researchers toward more focused and challenging problems. Two excellent surveys exist: [5], in 2004, categorized FPGA virtualization as temporal partitioning, virtualized execution, and virtual machines, while [6], fourteen years later in 2018, classified it by abstraction level to accommodate future changes. However, neither fully explores the communication architecture or the interconnect possibilities. To address this gap, we present an improved survey on FPGA virtualization that covers network-on-chip evaluation choices as a means to explore the communication architecture, together with commentary on the nomenclature of the existing body of knowledge. We revisit the network-on-chip evaluation platforms to highlight their importance compared to bus-based architectures, and we extend our review from the acceleration of a standalone FPGA to FPGAs connected as computational resources in a heterogeneous environment. We attempt to create a synergy by combining three domains, helping designers choose the right communication architecture for the right virtualization technique and, finally, share their work in the right language; only then can multi-tenant FPGAs in datacenters be realized.

Progress in this area has stagnated for lack of a standard nomenclature. We recommend that the scientific community adopt a unified nomenclature to improve the clarity and precision of communication and thereby advance the knowledge base. We also recommend that this area be referred to as High-Performance Reconfigurable Computing (HRC) in the literature. Moreover, it has been observed that computer science terminology conveys the concepts more effectively, since virtualization in FPGAs is comparable to an operating system on CPUs.

There are four different types of network: Direct Mapping on a Single FPGA, Direct Mapping on Multiple FPGAs, Fast Prototyping, and Virtualization. The choice of network type affects the accuracy and resource utilization of the evaluation. Traffic on the network can be generated in two ways: synthetic and application-specific. Synthetic traffic is a form of load testing that evaluates overall performance, but it fails to predict performance under real traffic flows. Application-specific traffic, on the other hand, reproduces the behavior of real traffic flows; it is difficult to acquire but gives more accurate results. These patterns can be acquired through traces, statistical methods, or by executing application cores. Because traces comprise millions of packets, their size becomes a limiting factor, and running application cores to generate traffic is also resource-expensive.
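The contrast between synthetic and trace-based traffic can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions: nodes of a k×k mesh are numbered row-major, "uniform random" and "transpose" are two classic synthetic patterns from the NoC literature (the text above does not name specific patterns), and the `cycle src dst` trace format is hypothetical. Note how the trace is streamed rather than loaded, which is one way to cope with traces of millions of packets.

```python
import random
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass(frozen=True)
class Packet:
    cycle: int  # injection cycle
    src: int    # source node id (row-major index in a k x k mesh)
    dst: int    # destination node id


def uniform_random(k: int, rate: float, cycles: int, seed: int = 0) -> List[Packet]:
    """Synthetic traffic: each node injects with probability `rate` per cycle
    to a destination drawn uniformly from all other nodes."""
    rng = random.Random(seed)
    n = k * k
    packets = []
    for cycle in range(cycles):
        for src in range(n):
            if rng.random() < rate:
                dst = rng.randrange(n - 1)
                if dst >= src:  # shift past the source so that dst != src
                    dst += 1
                packets.append(Packet(cycle, src, dst))
    return packets


def transpose(k: int, cycles: int) -> List[Packet]:
    """Synthetic traffic: node (x, y) sends one packet per cycle to node (y, x);
    nodes on the diagonal stay silent."""
    packets = []
    for cycle in range(cycles):
        for y in range(k):
            for x in range(k):
                if x != y:
                    packets.append(Packet(cycle, y * k + x, x * k + y))
    return packets


def replay_trace(lines: Iterable[str]) -> Iterator[Packet]:
    """Application-specific traffic: replay a (hypothetical) 'cycle src dst'
    trace lazily, so memory stays flat even for very large traces."""
    for line in lines:
        if line.strip() and not line.startswith("#"):
            cycle, src, dst = map(int, line.split())
            yield Packet(cycle, src, dst)
```

For example, `transpose(4, 10)` yields 120 packets (12 off-diagonal nodes over 10 cycles), while `replay_trace(open(path))` would stream a recorded trace one line at a time.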

Table 2 lists some FPGA-based NoC evaluation tools, describing each architecture by network type, traffic type, number of routers, target board, and execution frequency, while hiding the complexity of the NoC designs. The number of routers in a NoC depends on the network type: architectures with relatively more routers belong to the second group of network types, namely fast prototyping and virtualization. We have used the direct mapping network type in our previous works because of its relatively high execution frequency [23][24].
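The observation about router counts can be checked against the network type and router columns of Table 2. The Python sketch below simply transcribes those two columns (the variable and function names are illustrative) and reports the largest NoC per network type.

```python
from collections import defaultdict

# (network type, number of routers), transcribed row by row from Table 2
TABLE_2 = [
    ("Fast Prototyping", 49),
    ("Direct Mapping", 25),
    ("Virtualization", 256),
    ("Fast Prototyping", 576),
    ("Virtualization", 64),
    ("Virtualization", 16),
    ("Direct Mapping", 36),
    ("Direct Mapping", 9),
    ("Multiple FPGA", 18),
    ("Virtualization", 1024),
    ("Direct Mapping", 64),
    ("Direct Mapping", 16),
    ("Direct Mapping", 16),
    ("Direct Mapping", 16),
]


def largest_noc_per_type(rows):
    """Return {network type: maximum router count} over the given rows."""
    best = defaultdict(int)
    for net_type, routers in rows:
        best[net_type] = max(best[net_type], routers)
    return dict(best)


for net_type, routers in sorted(largest_noc_per_type(TABLE_2).items(),
                                key=lambda kv: kv[1], reverse=True):
    print(f"{net_type:16s} {routers:5d}")
```

The largest entries are Virtualization (1024 routers) and Fast Prototyping (576), while Direct Mapping tops out at 64, consistent with the trade-off of capacity against execution frequency described above.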

[43] and an area optimization technique [43][44].

Virtualization plays a role comparable to that of an operating system in a computer, but the term is used with different meanings in this area because of the non-uniform nomenclature discussed earlier. Yet the universal concept of an abstraction layer remains unchanged: a layer that hides the underlying complexity of the computing machine from the user, where the computing machine is not a traditional one but an FPGA. Many virtualization architectures have been proposed to meet the requirements of diverse applications. In 2004, a survey categorized virtualization architectures into three broad categories: temporal partitioning, virtualized execution, and virtual machines (overlays) [5]. Since then, no serious effort on the classification of virtualization was recorded until 2018, when Vaishnav et al. [6] classified virtualization architectures by abstraction level. This much-needed classification is adopted as is to discuss the works in this survey. We reiterate it, together with representative works, in Table 3, and the works are discussed under the same abstract classification.

These evaluation platforms help designers arrive at a design-specific communication architecture that meets most of the requirement specifications of a given application. However, they take comparatively long to synthesize a change, whereas a simulator can accommodate the same change in much less time. Designers offer dynamic reconfiguration as a remedy for this limitation, but simulators are still the first choice of many entry-level researchers. However, the choice of NoC for realizing future datacenters with multi-tenant multi-FPGA systems is yet to be explored. Linking several computational nodes is complicated and affects the performance of the overall system. Although a NoC is not the only choice for communication within an FPGA or among multiple FPGAs, it offers a competitive and promising solution. Other solutions include the traditional bus, a bus combined with a soft shell, different types of soft NoCs, and hard NoCs. Many comparative studies have evaluated these choices on parameters such as usable bandwidth, area consumption, latency, wire requirements, and routing congestion. The way a NoC is generated also affects performance, so designers must be careful when choosing a NoC or an alternative for their design.
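To make the bandwidth side of the bus-versus-NoC comparison concrete, the following deliberately idealized Python model contrasts one shared bus with a k×k mesh using dimension-ordered (XY) routing. Its assumptions are ours, not the surveyed studies': single-flit packets, uniform random traffic, ideal flow control, and every link (like the bus) carrying one packet per cycle; area, wiring, and router latency are ignored, so the numbers show trends only. The mesh figure comes from a channel-load analysis: the busiest link saturates first.

```python
from collections import Counter
from itertools import product


def xy_route(src, dst):
    """Yield the directed links ((x, y), (x', y')) of an XY route:
    move along x first, then along y."""
    x, y = src
    while x != dst[0]:
        nx = x + (1 if dst[0] > x else -1)
        yield (x, y), (nx, y)
        x = nx
    while y != dst[1]:
        ny = y + (1 if dst[1] > y else -1)
        yield (x, y), (x, ny)
        y = ny


def mesh_vs_bus(k):
    """Idealized whole-system saturation throughput (packets/cycle) under
    uniform random traffic: k x k XY mesh versus a single shared bus."""
    if k < 2:
        raise ValueError("need at least a 2 x 2 mesh")
    nodes = list(product(range(k), repeat=2))
    n = len(nodes)
    load = Counter()  # number of source-destination pairs crossing each link
    hops = 0
    for src in nodes:
        for dst in nodes:
            if src == dst:
                continue
            for link in xy_route(src, dst):
                load[link] += 1
                hops += 1
    avg_hops = hops / (n * (n - 1))
    # Each node injects lam packets/cycle spread over n - 1 destinations, so a
    # link crossed by c pairs carries lam * c / (n - 1) packets/cycle.
    # Saturation occurs when the busiest link reaches 1 packet/cycle.
    lam_sat = (n - 1) / max(load.values())
    return {"avg_hops": avg_hops, "mesh": n * lam_sat, "bus": 1.0}
```

For a 4×4 mesh the sketch reports an average of about 2.67 hops and an idealized 15 packets per cycle, against 1 for the shared bus; the extra hops are the latency price paid for that parallelism.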

Virtualization has many features, such as management, scheduling, adaptability, segregation, scalability, performance overhead, availability, programmability, time-to-market, and security, but the most important one within the scope of this research is multi-tenancy, because it is essential for a sustainable and economically viable deployment in datacenters. An FPGA has two types of fabric: reconfigurable and non-reconfigurable. Virtualization of the non-reconfigurable fabric is the same as that of a CPU, but there are several variations when it comes to virtualizing the reconfigurable fabric.
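One common way to share the reconfigurable fabric among tenants is to divide the device into partially reconfigurable regions and assign each tenant its own region(s). The Python sketch below is hypothetical: the class names, the fixed pool of equally sized regions, and the first-fit policy are illustrative assumptions, not a method taken from the surveyed works. It only shows the bookkeeping a virtualization layer needs in order to allocate regions and keep tenants isolated; no bitstream is actually programmed.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Region:
    """A partially reconfigurable region (hypothetical abstraction)."""
    rid: int
    tenant: Optional[str] = None
    bitstream: Optional[str] = None


@dataclass
class VirtualFPGAManager:
    """Hypothetical hypervisor-style allocator mapping tenants to the
    regions of one physical FPGA and refusing cross-tenant access."""
    num_regions: int
    regions: List[Region] = field(init=False)

    def __post_init__(self) -> None:
        self.regions = [Region(i) for i in range(self.num_regions)]

    def allocate(self, tenant: str, bitstream: str) -> int:
        """First fit: hand the tenant the first free region and record its
        partial bitstream (recorded only, not programmed)."""
        for region in self.regions:
            if region.tenant is None:
                region.tenant, region.bitstream = tenant, bitstream
                return region.rid
        raise RuntimeError("no free region: the request must wait or be rescheduled")

    def access(self, tenant: str, rid: int) -> Optional[str]:
        """Isolation check: a tenant may only touch regions it owns."""
        region = self.regions[rid]
        if region.tenant != tenant:
            raise PermissionError(f"{tenant} does not own region {rid}")
        return region.bitstream

    def release(self, tenant: str, rid: int) -> None:
        """Free a region after the same ownership check as access()."""
        self.access(tenant, rid)
        self.regions[rid] = Region(rid)

    def occupancy(self) -> Dict[str, List[int]]:
        """Map each active tenant to the region ids it currently holds."""
        owners: Dict[str, List[int]] = {}
        for region in self.regions:
            if region.tenant is not None:
                owners.setdefault(region.tenant, []).append(region.rid)
        return owners
```

With `VirtualFPGAManager(2)`, tenants "A" and "B" receive regions 0 and 1; a third tenant is refused until a region is released, and `access("B", 0)` raises `PermissionError`. A real system would add scheduling, memory and I/O isolation, and actual partial reconfiguration.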

2. Revisiting the Nomenclature

The applications of FPGAs as a computing resource are diverse and include data analytics, financial computing, and cloud computing. This broad range of applications in different areas requires efficient application and resource management, which lays the foundation for virtualizing the FPGA as a potential resource. The nomenclature varies widely because the researchers contributing to this area come from different backgrounds. There are many examples in the literature where similar concepts or architectures are described using different names or terms. There is also an abundance of jargon and acronyms, which confuse researchers rather than enhance their understanding. Table 1 identifies and lists non-standard terms in the literature from the last decade.

Table 1.

Non-Standard Nomenclature Present in Literature.

| Year | Non-Standard Term(s) in Published Literature |
|---|---|
| 2010 | RAMPSoC in [7] |
| 2011 | Lightweight IP (LwIP) in [8] |
| 2012 | ASIF (Application Specific FPGA) in [9] |
| 2013 | sAES (FPGA based data protection system) in [10] |
| 2014 | PFC (FPGA cloud for privacy preserving computation) in [11] |
| 2015 | CPU-Cache-FPGA in [12] |
| 2016 | HwAcc (Hardware accelerators), RIPaaS and RRaaS in [13] |
| 2017 | FPGA as a Service (FaaS) and Secure FaaS in [14] |
| 2018 | ACCLOUD (Accelerated CLOUD) in [15], FPGAVirt in [16] |
| 2019 | vFPGA-based CCMs (Custom Computing Machines) in [17] |

3. Revisiting the Network on Chip Evaluation Tools

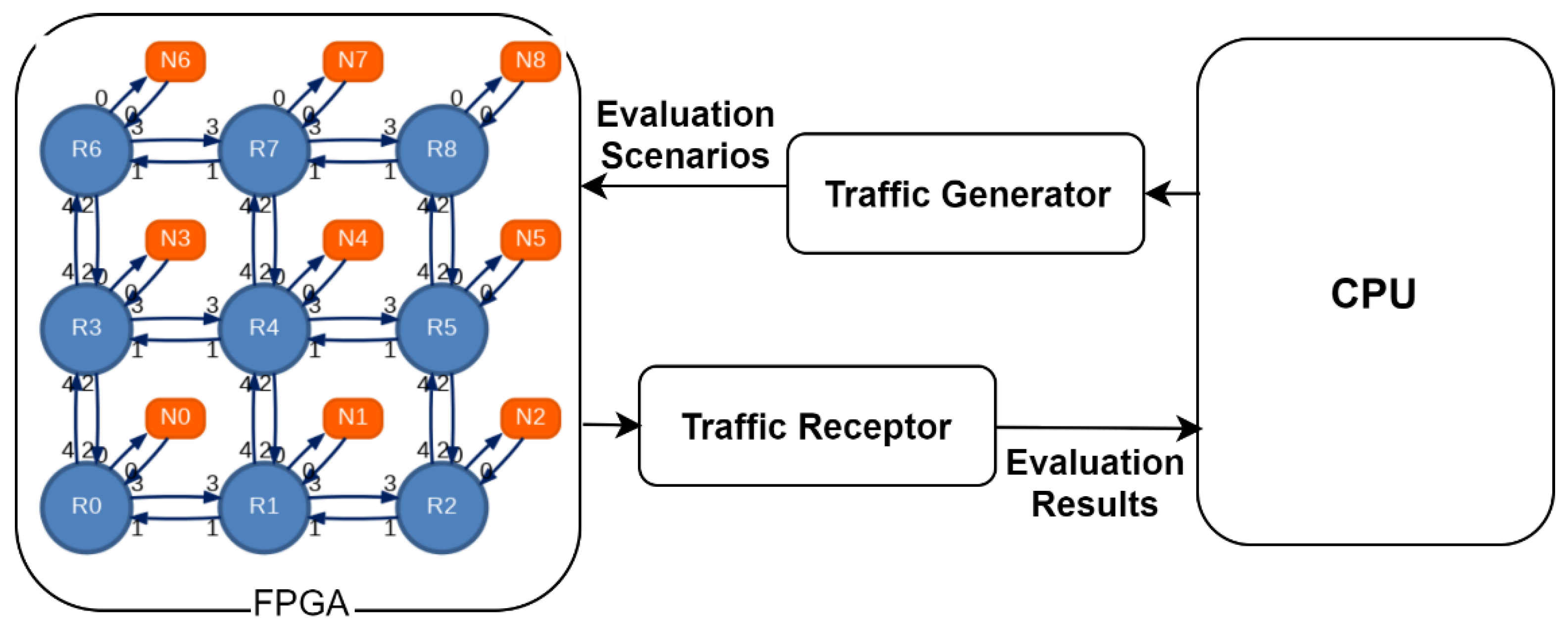

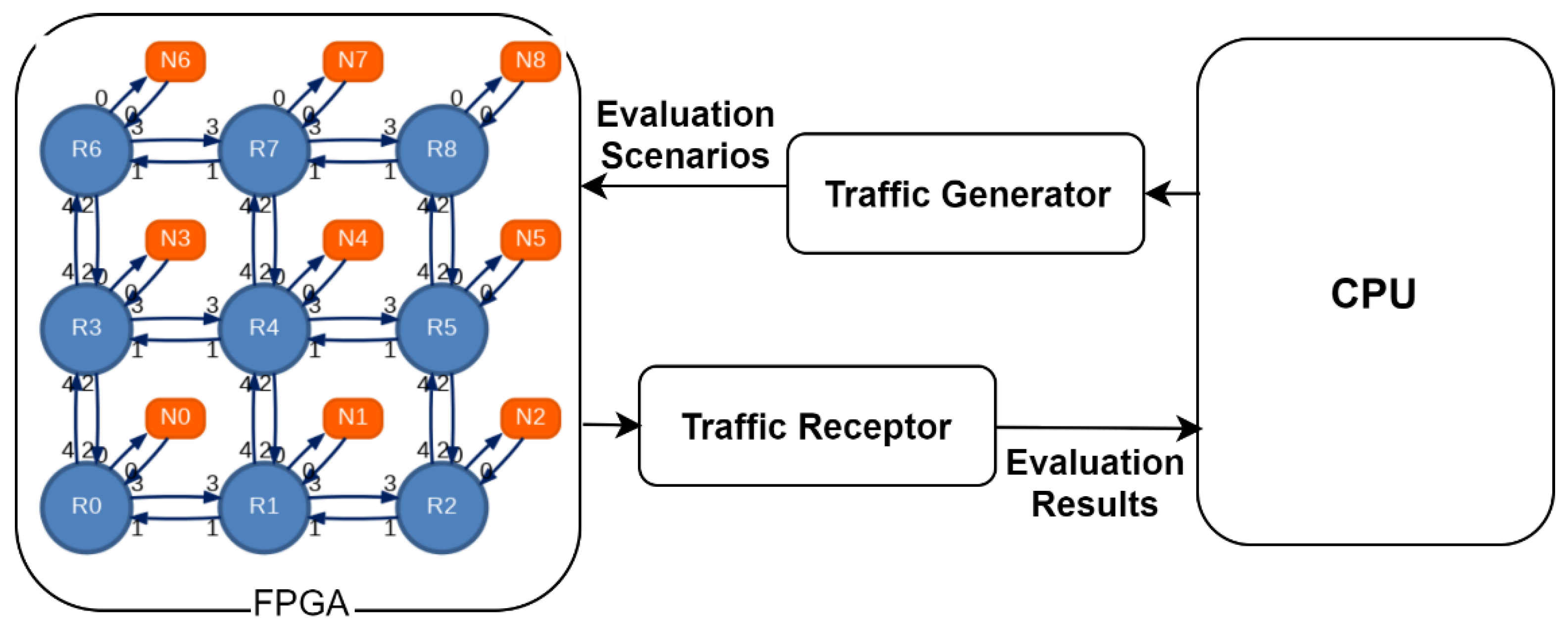

Data transfers in most high-performance architectures are limited by the memory hierarchy and the communication architecture, as summarized in [18][19]. Exploiting the communication architecture suggests the use of a NoC, an effective replacement for buses or dedicated links in a system with a large number of processing cores [20][21]. A NoC has several tunable parameters, such as network architecture, routing algorithm, network topology, and flow control. Today, no System on Chip (SoC) is complete without a NoC, owing to the high communication bandwidth and low latency it promises compared to alternative communication architectures. Given the complexity of NoCs, researchers rely heavily on automated evaluation tools, with which performance and power can be assessed early in the design. Figure 1 describes a typical NoC evaluation cycle, with the FPGA connected to a Central Processing Unit (CPU). Traffic scenarios are produced by a traffic generator and sent to the NoC that resides in the FPGA, and the evaluation results are collected through traffic receptors. Tools for FPGA-based NoC prototyping are architecturally diverse. De Lima et al. [22] identified an architectural model comprising three layers: network, traffic, and management.

Figure 1. Generic Architecture of Networks on Chip (NoC) Evaluation on Field Programmable Gate Arrays (FPGA)(s).
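The evaluation cycle of Figure 1 (traffic generator, NoC on the FPGA, traffic receptor) can be mimicked in software to see what the receptor measures. The Python sketch below is a zero-load model under our own assumptions: a k×k mesh with minimal XY routing, no contention, one packet injected per cycle, and hypothetical delay parameters `router_delay` and `link_delay`. The function names mirror the blocks of Figure 1 and are not the interface of any tool in Table 2.

```python
import random
from statistics import mean
from typing import Dict, List, Tuple

Packet = Tuple[int, Tuple[int, int], Tuple[int, int]]  # (inject cycle, src, dst)


def traffic_generator(k: int, packets: int, seed: int = 0) -> List[Packet]:
    """Uniform random source/destination pairs, one packet per cycle."""
    rng = random.Random(seed)
    out = []
    for cycle in range(packets):
        src = (rng.randrange(k), rng.randrange(k))
        dst = src
        while dst == src:  # redraw until the destination differs
            dst = (rng.randrange(k), rng.randrange(k))
        out.append((cycle, src, dst))
    return out


def noc(packet: Packet, router_delay: int = 3, link_delay: int = 1) -> int:
    """Zero-load NoC model: a minimal XY route crosses |dx| + |dy| links and
    |dx| + |dy| + 1 routers. Returns the arrival cycle."""
    cycle, (sx, sy), (dx, dy) = packet
    hops = abs(dx - sx) + abs(dy - sy)
    return cycle + hops * link_delay + (hops + 1) * router_delay


def traffic_receptor(sent: List[Packet], arrivals: List[int]) -> Dict[str, float]:
    """Collect per-packet latency statistics, as the receptors in Figure 1 do."""
    latencies = [arr - pkt[0] for pkt, arr in zip(sent, arrivals)]
    return {"packets": len(latencies), "avg": mean(latencies), "max": max(latencies)}


if __name__ == "__main__":
    sent = traffic_generator(k=4, packets=1000)
    print(traffic_receptor(sent, [noc(p) for p in sent]))
```

In a real FPGA-based tool the `noc` step is the synthesized network itself, which is what captures contention and flow-control effects that this zero-load model deliberately omits.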

Table 2.

NoC Evaluation Tools Based on FPGA(s).

| Year | Network Type | Traffic Type | Number of Routers | Target Board | Execution Frequency (MHz) | Reference |
|---|---|---|---|---|---|---|
| | Fast Prototyping | Synthetic | 49 | Virtex 6 | 50 | [27] |
| 2011 | Direct Mapping | Real: Traces based | 25 | Virtex 5 | - | [28] |
| 2011 | Virtualization | Real: Traces based | 256 | Virtex 6 | 152 | [29] |
| 2011 | Fast Prototyping | Synthetic | 576 | Virtex 6 | 300 | [30] |
| 2011 | Virtualization | Real: App. Cores | 64 | Virtex 2 | - | [31] |
| 2011 | Virtualization | Real: App. Cores | 16 | Virtex 5 | 3, 15 | [32] |
| 2011 | Direct Mapping | Synthetic | 36 | Virtex 5 | - | [33] |
| 2012 | Direct Mapping | Real: App. Cores | 9 | Virtex 5 | - | [34] |
| 2013 | Multiple FPGA | Synthetic | 18 | Virtex 5 × 2 | - | [35] |
| 2014 | Virtualization | Synthetic | 1024 | Virtex 7 | 42 | [36] |
| 2015 | Direct Mapping | Synthetic | 64 | Virtex 6 | 50 | [37] |
| 2016 | Direct Mapping | Synthetic | 16 | Virtex 6 | 250 | [13] |
| 2017 | Direct Mapping | Synthetic | 16 | Virtex 6 | 250 | [23] |
| 2018 | Direct Mapping | Synthetic | 16 | Virtex 6 | 250 | [24] |

Table 3.

| Abstract Classification | Sub-Class | Work Examples (References) |
|---|---|---|
| Resource Level | Overlays | [44][45][46][47][48][49][50][51][52][53][54][55][56][57][58][59][60][61][62][63][64][65][66][67][68][69][70][71][72][73] |
| | Input Output (I/O) Virtualization | [4][74][75][76][77][78][79] |
| Node Level | Virtual Machine Monitors | [80][81][82][83] |
| | Shells | [4][40][74][75][76][77][84][85][86][87][88][89][90][91][92][93][94][95][96] |
| | Scheduling | [81][88][97][98][99][100][101][102][103][104][105][106][107][108][109][110][111] |
| Multi Node Level | Custom Clusters | [112][113][114][115][116] |
| | Frameworks | [76][102][117][118][119][120][121][122] |
| | Cloud Services | [4][39][123][124][125][126][127][128][129] |