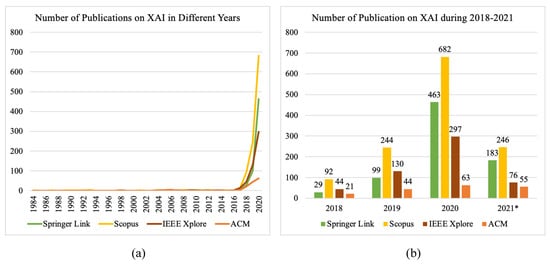

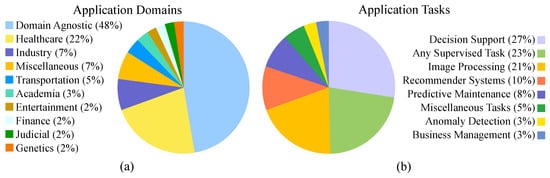

Artificial intelligence (AI) and machine learning (ML) have improved dramatically in recent years and are now employed in almost every application domain to build automated or semi-automated systems. Many of the resulting models are highly accurate but offer little explainability or interpretability. To make such systems more acceptable to humans, explainable artificial intelligence (XAI) has grown significantly over the last couple of years. Current research trends indicate that XAI methods are mostly developed for safety-critical domains worldwide, that deep learning and ensemble models are exploited more than other types of AI/ML models, that visual explanations are more acceptable to end-users, and that robust evaluation metrics are being developed to assess the quality of explanations.

- explainable artificial intelligence

- explainability

1. Introduction

2. Theoretical Background

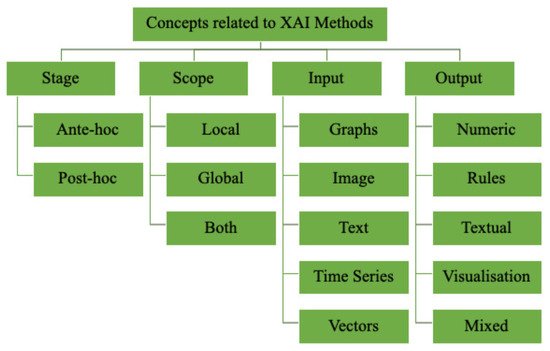

2.1. Stage of Explainability

- Ante hoc methods generally build explanations for decisions into the model from the very beginning of training, while still aiming for optimal performance. They are mostly used to generate explanations for transparent models, such as fuzzy models and tree-based models.

- Post hoc methods comprise the base model and an external or surrogate model. The base model remains unchanged, and the external model mimics its behaviour to generate an explanation for users. These methods are generally applied to models whose inference mechanism is opaque to users, e.g., support vector machines and neural networks. Post hoc methods are further divided into two categories: model-agnostic and model-specific. Model-agnostic methods apply to any AI/ML model, whereas model-specific methods are confined to particular models.
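For the ante hoc case, where the explanation comes from a transparent model itself, a minimal sketch is a one-level decision tree (a "decision stump"). The sketch assumes binary 0/1 labels, numeric features, and a hypothetical toy dataset; `DecisionStump`, `fit_stump`, and `majority_label` are illustrative names, not a library API. The point is that the learned rule is the explanation: no second model is needed to interpret it.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class DecisionStump:
    """A one-level decision tree: transparent by construction."""
    feature: int      # index of the feature tested at the root
    threshold: float  # split point chosen during training
    left_label: int   # predicted class when x[feature] <= threshold
    right_label: int  # predicted class when x[feature] > threshold

    def predict(self, x: Sequence[float]) -> int:
        return self.left_label if x[self.feature] <= self.threshold else self.right_label

    def explain(self, names: Sequence[str]) -> str:
        # The explanation is read directly off the trained model itself.
        return (f"if {names[self.feature]} <= {self.threshold:g} "
                f"then class {self.left_label} else class {self.right_label}")


def majority_label(labels: List[int]) -> int:
    """Majority class for binary 0/1 labels (ties go to 0)."""
    return int(2 * sum(labels) > len(labels))


def fit_stump(X: List[List[float]], y: List[int]) -> Optional[DecisionStump]:
    """Exhaustively pick the feature/threshold split with the fewest training errors."""
    best, best_errors = None, len(y) + 1
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X})
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            ll, rl = majority_label(left), majority_label(right)
            errors = sum(lab != ll for lab in left) + sum(lab != rl for lab in right)
            if errors < best_errors:
                best, best_errors = DecisionStump(f, t, ll, rl), errors
    return best


# Hypothetical toy data: [income, debt] -> approve (1) / reject (0).
names = ["income", "debt"]
X = [[20, 5], [25, 8], [30, 2], [45, 6], [50, 1], [60, 7]]
y = [0, 0, 0, 1, 1, 1]

stump = fit_stump(X, y)
print(stump.explain(names))  # if income <= 37.5 then class 0 else class 1
```

Deeper trees or fuzzy rule bases follow the same principle; a stump simply keeps the rule short enough to read at a glance.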
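The post hoc surrogate idea can be illustrated with a global surrogate. The sketch below is a hedged illustration, not a reference implementation: it assumes inputs in the unit square, uses a single threshold rule as the transparent surrogate, and treats two hypothetical functions (`nonlinear_scorer` and `voting_ensemble`) as stand-ins for opaque base models such as a neural network or an ensemble. The hypothetical `global_surrogate` helper queries each base model only through its predictions, leaves it unchanged, and reports fidelity, i.e., the fraction of sampled inputs on which the surrogate agrees with the base model.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

BlackBox = Callable[[Sequence[float]], int]


@dataclass
class Rule:
    """Transparent surrogate: one threshold test on one feature."""
    feature: int
    threshold: float
    left_label: int
    right_label: int

    def predict(self, x: Sequence[float]) -> int:
        return self.left_label if x[self.feature] <= self.threshold else self.right_label


def fit_rule(X: List[List[float]], y: List[int]) -> Rule:
    """Pick the single threshold rule that best reproduces the 0/1 labels y."""
    best, best_err = None, len(y) + 1
    for f in range(len(X[0])):
        vals = sorted({row[f] for row in X})
        for lo, hi in zip(vals, vals[1:]):
            t = (lo + hi) / 2
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            ll = int(2 * sum(left) > len(left))    # majority label on each side
            rl = int(2 * sum(right) > len(right))
            err = sum(lab != ll for lab in left) + sum(lab != rl for lab in right)
            if err < best_err:
                best, best_err = Rule(f, t, ll, rl), err
    return best


def global_surrogate(black_box: BlackBox, n_features: int,
                     n_samples: int = 300, seed: int = 0) -> Tuple[Rule, float]:
    """Fit a transparent mimic using only the black box's predictions."""
    rng = random.Random(seed)
    X = [[rng.random() for _ in range(n_features)] for _ in range(n_samples)]
    y = [black_box(x) for x in X]  # the base model is queried, never modified
    surrogate = fit_rule(X, y)
    fidelity = sum(surrogate.predict(x) == t for x, t in zip(X, y)) / n_samples
    return surrogate, fidelity


# Two hypothetical "opaque" base models; the surrogate code never inspects them.
def nonlinear_scorer(x: Sequence[float]) -> int:
    return int(x[0] ** 2 + 0.1 * x[1] > 0.5)


def voting_ensemble(x: Sequence[float]) -> int:
    votes = [x[1] > 0.4, x[1] > 0.5, x[1] > 0.6]
    return int(sum(votes) >= 2)


for model in (nonlinear_scorer, voting_ensemble):
    rule, fid = global_surrogate(model, n_features=2)
    print(model.__name__, rule, f"fidelity={fid:.2f}")
```

Because nothing in `global_surrogate` depends on the base model's internals, the same routine serves both models, which is what makes it model-agnostic; a model-specific method would instead exploit a particular model's structure, such as a network's weights or gradients. Fidelity below 1.0 (as for `nonlinear_scorer`, whose boundary is curved) signals that the surrogate's explanation only approximates the base model.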

2.2. Scope of Explainability

2.3. Input and Output
