
Please note this is a comparison between Version 2 by Camila Xu and Version 1 by Vladimir Tadic.

The ZED depth sensor is considered one of the world's fastest depth cameras. It is composed of stereo cameras with dual 4-megapixel RGB sensors.

- ZED depth camera

- stereo vision

- image processing

- computer vision

- depth image

1. ZED Depth Sensors

The ZED depth camera is a passive depth ranging device: it contains no IR laser projector or other active ranging component. It uses a binocular camera to capture 3D scene data, measures the disparity of objects with a stereo matching algorithm, and finally calculates the depth map from the sensor parameters [1][2][3][4][5].
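The last step of that pipeline, turning a disparity into a depth, can be illustrated with the standard pinhole relation for a rectified stereo pair, Z = f·B/d. The sketch below is not the ZED SDK's implementation; the function name and the 700 px focal length are hypothetical, and only the 12 cm baseline is taken from this entry.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Assumed values: the 0.12 m baseline is quoted in this entry; the focal
# length of 700 px is a hypothetical stand-in for the calibrated value.
FOCAL_PX = 700.0
BASELINE_M = 0.12

for d in (84.0, 42.0, 8.4):
    z = depth_from_disparity(d, FOCAL_PX, BASELINE_M)
    print(f"disparity {d:5.1f} px -> depth {z:.2f} m")
```

Because depth is inversely proportional to disparity, distant objects produce small disparities, which is why the calibrated focal length and baseline matter so much at long range.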

The ZED depth sensor is composed of stereo cameras with a video resolution of 2560 × 1440 pixels (2K) and dual 4-megapixel RGB sensors. The two RGB cameras are mounted at a fixed baseline of 12 cm. This baseline allows the ZED camera to generate a depth image up to 20 m (40 m is the maximum distance with the updated firmware, according to the Stereolabs datasheet) [1]. The camera has a USB 3.0 port that supports the USB video device class (UVC) and is backward compatible with the USB 2.0 standard. It should be noted that the ZED 3D depth sensor is optimized for real-time depth calculation using NVIDIA Compute Unified Device Architecture (CUDA) technology [1]. Therefore, a compatible NVIDIA graphics processing unit (GPU) and appropriate computer hardware are required to use it [1][2][3].

The ZED sensor uses wide-angle optical lenses with a FOV of 110°, and it can stream an uncompressed video signal at a rate of up to 100 fps in Wide Video Graphics Array (WVGA) format. The depth image is provided with a 32-bit resolution. Hence, the camera gives a very accurate and precise depth image that describes the depth differences, i.e., the different distances from the plane of the camera. Right and left video frames are synchronized and streamed as a single uncompressed video frame in the side-by-side format. Various configurations and capturing parameters of the on-board image signal processor, such as brightness, saturation, resolution, and contrast, can be adjusted through the SDK provided by Stereolabs [1]. Furthermore, ZED devices support several software packages, called “wrappers,” such as ROS, MATLAB, and Python. All these software packages allow the modification of different parameters depending on the user requirements, such as the image quality, depth quality, sensing mode, topic names, and frame rate [1][2][3][4][5]. Figure 1 shows the ZED depth sensors [1].
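The side-by-side format mentioned above means that one streamed frame holds both views next to each other. A minimal sketch of separating them is given below; it models a frame as a list of pixel rows and assumes the left view occupies the left half of the frame. The function name is hypothetical and this is not an SDK call.

```python
def split_side_by_side(frame):
    """Split a side-by-side stereo frame (list of pixel rows) into two views."""
    if not frame:
        raise ValueError("empty frame")
    width = len(frame[0])
    if width % 2 != 0:
        raise ValueError("side-by-side frame width must be even")
    half = width // 2
    # Assumption: the left view is stored in the left half of each row.
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return left, right


# Toy 2-row frame; the 'L'/'R' tags stand in for pixel values.
frame = [
    ["L0", "L1", "R0", "R1"],
    ["L2", "L3", "R2", "R3"],
]
left, right = split_side_by_side(frame)
print(left)
print(right)
```

With real image arrays the same idea is a single slice along the width axis; the list version is used here only to keep the sketch dependency-free.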

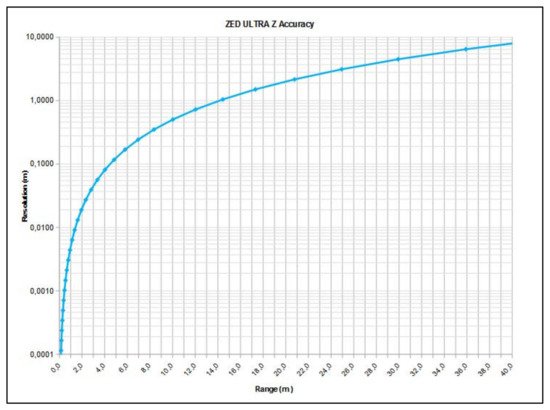

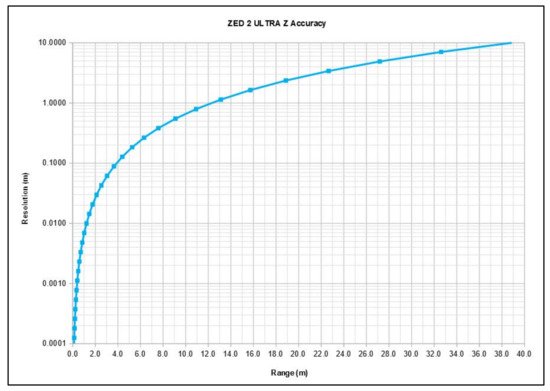

Figure 2 presents the accuracy graph of the ZED depth sensor as a function of the distance of an object from the depth camera. As shown, the depth resolution, i.e., the depth precision, degrades with increasing distance [1].
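The trend in the accuracy graph follows from stereo geometry: differentiating Z = f·B/d gives a depth step of roughly ΔZ ≈ Z²·Δd/(f·B) for a disparity step Δd, so the error grows with the square of the distance. The sketch below only illustrates that scaling; the focal length (700 px) and disparity step (0.1 px) are hypothetical values and the output is not meant to reproduce the manufacturer's graph.

```python
def depth_resolution(depth_m: float, focal_px: float, baseline_m: float,
                     disparity_step_px: float) -> float:
    """Approximate depth step: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_step_px / (focal_px * baseline_m)


# Assumed values: baseline from this entry; focal length and the smallest
# resolvable disparity step are hypothetical placeholders.
FOCAL_PX = 700.0
BASELINE_M = 0.12
DISPARITY_STEP_PX = 0.1

for z in (1.0, 5.0, 10.0, 20.0):
    dz = depth_resolution(z, FOCAL_PX, BASELINE_M, DISPARITY_STEP_PX)
    print(f"distance {z:5.1f} m -> depth step ~ {dz * 100:.2f} cm")
```

Doubling the distance quadruples the depth step, whatever the specific camera constants are.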

Finally, it should be remarked that the ZED depth sensor comes with a unique factory calibration file, which is downloaded automatically. The recommendation is to use the Stereolabs factory settings, but users can also calibrate the ZED sensor with the ZED SDK software package [1][2][3][4][5].

The new ZED 2i depth camera has some similarities and shares some properties with the previous version of the ZED depth camera. However, the new ZED 2i sensor includes several significant improvements.

2. Working Principle

According to the manufacturer, the ZED 2i is the first stereo depth camera that uses artificial neural networks (ANNs) to reproduce human vision, bringing stereo perception to a new level [1]. It contains a neural engine that significantly improves the captured depth image or depth video stream. This ANN is connected to the image DSP, and the two contribute jointly to creating the best possible depth map [1]. Furthermore, the ZED 2i camera has a built-in object detection algorithm [1]. This algorithm detects objects with spatial context. It combines artificial intelligence with 3D localization to create next-generation spatial awareness [1]. There is also a built-in skeleton tracking option that uses 18 body key points for the tracking application. The algorithm detects and tracks human body skeletons in real time. The tracking result is displayed via a bounding box, and the algorithm works up to a range of 10 m [1].

Next, the ZED 2i camera has an enhanced positional tracking algorithm, a significant improvement for robotic applications [1][2][3][4][5][6]. This benefit arises from the wide 120° FOV, an advanced sensor stack, and thermal calibration, which together greatly improve positional tracking precision and accuracy [1]. The ZED 2i depth camera has a built-in inertial measurement unit, barometer, temperature sensor, and magnetometer. These sensors make multi-sensor capture easy and accurate. They are factory calibrated on nine axes with robotic arms [1]. The data rates of the position sensors, i.e., the barometer and magnetometer, are 25 Hz/50 Hz. The built-in motion sensors, i.e., the accelerometer and gyroscope, contribute significantly to the development of robotic applications since no sensor other than the ZED 2i camera itself needs to be installed. The data rate of these sensors is 400 Hz. The thermal sensors monitor the temperature and compensate for the drifts caused by heating. In this way, real-time synchronized inertial, elevation, and magnetic field data are collected along with the image and depth data. These sensors also contribute to accurate and precise depth sensing.

The all-aluminum frame reduces the camera heating that induces changes in focal length and motion sensor biases [1]. The aluminum case dissipates the internal heat generated by the electronic components more effectively, which reduces the internal temperature of the depth camera. Additionally, case deformations cannot affect the depth measurement because the lenses do not move. Furthermore, the software is factory calibrated to use the temperature information provided by the internal sensors and modify the data accordingly [1]. All the mentioned motion and positional features indicate that the ZED 2i depth camera is extremely suitable for the development of autonomous and industrial robotic applications [6][7][8][9][10][11][12][13][14][15][16][17][18][19][20][21][22][23][24][25].
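Because the motion sensors run at 400 Hz while the barometer and magnetometer run at 25 Hz/50 Hz, combining their readings requires aligning samples in time. The sketch below shows one common approach, nearest-timestamp matching, on synthetic timestamps. It is an illustration only: the function name, the 700 µs offset, and the timestamps are hypothetical, and the ZED SDK's own synchronization mechanism is not shown here.

```python
from bisect import bisect_left


def nearest_index(sorted_times, t):
    """Index of the entry in sorted_times closest to t (ties go to the earlier one)."""
    i = bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    before, after = sorted_times[i - 1], sorted_times[i]
    return i if (after - t) < (t - before) else i - 1


# Synthetic timestamps in microseconds over one second:
# 400 Hz motion data -> 2500 us period; 50 Hz magnetometer -> 20000 us period.
imu_times = [k * 2500 for k in range(400)]
mag_times = [700 + k * 20000 for k in range(50)]  # hypothetical 700 us offset

pairs = [(t, imu_times[nearest_index(imu_times, t)]) for t in mag_times]
worst = max(abs(t_mag - t_imu) for t_mag, t_imu in pairs)
print(f"worst-case misalignment: {worst} us")
```

With a 400 Hz reference stream, the nearest sample is never more than half a period (1250 µs) away from any slower-sensor timestamp that falls inside the recorded interval.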

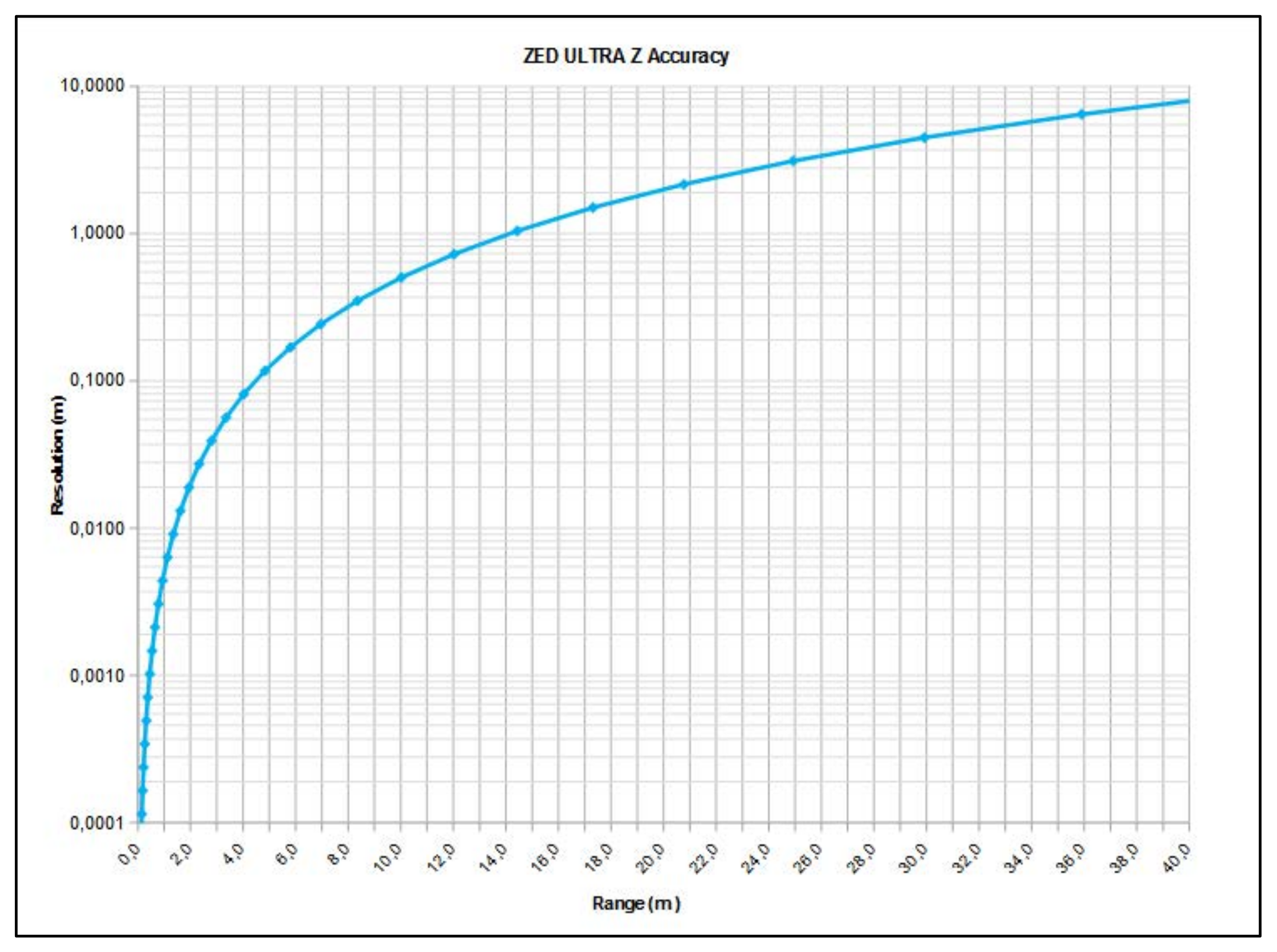

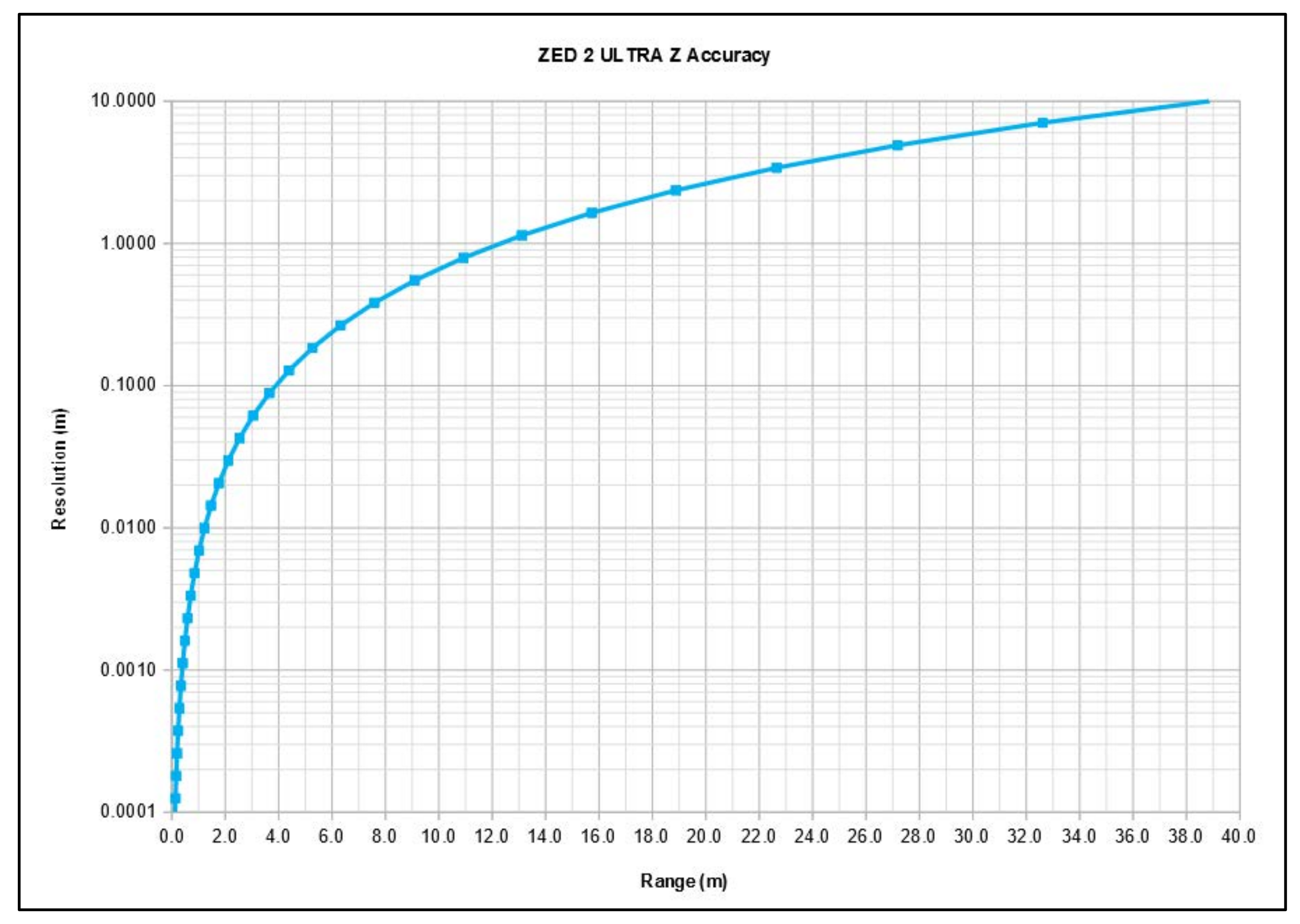

Figure 3 shows the accuracy graph of the ZED 2i depth sensor as a function of the distance of an object from the depth camera. As shown, the depth resolution, i.e., the depth precision, decreases with increasing distance [1]. However, at the 1 m range, for instance, the accuracy is better than that of the previous ZED shown in Figure 2.

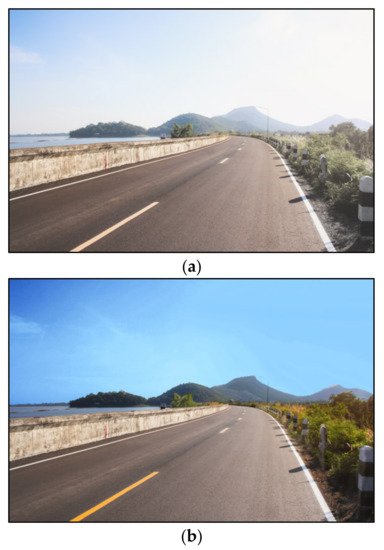

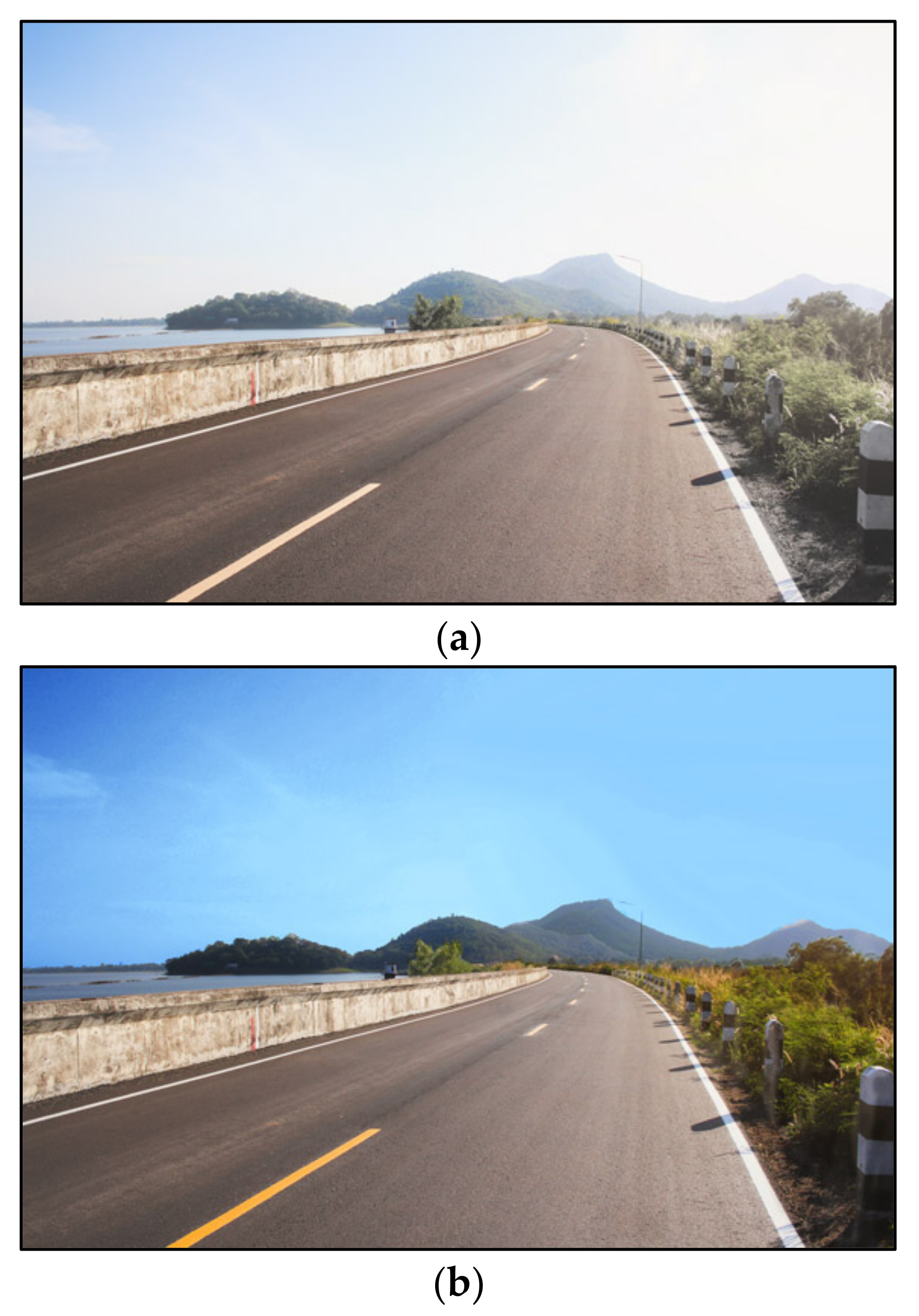

One of the most important improvements in the ZED 2i is the inclusion of new ultra-sharp eight-element all-glass lenses able to capture video and depth up to a 120° FOV, with optically corrected distortion and a wider ƒ/1.8 aperture, which allows capturing 40% more light [1]. Furthermore, the ZED 2i has an optional built-in polarizing filter, which gives the highest possible image quality in various outdoor applications. This filter helps to reduce glare and reflections and increases the color depth and quality of the captured images [1]. The effect of the polarizer can be seen in Figure 4.

Figure 4. Effect of the polarizer on a scene: (a) without polarizer, (b) with polarizer.

The ZED 2i stereo camera also has two lens options. It is possible to choose between a 2.1 mm lens for a wide FOV or a 4 mm lens for increased depth and image quality at long range, according to the manufacturer [1]. However, the 4 mm lens option entails delays in delivery [1]. These features matter for every camera, not only depth cameras, because the lenses, aperture, and available light significantly affect image quality. They enable high-quality depth images to be obtained, upon which promising robotic vision applications can be developed [26][27][28][29][30][31][32][33][34][35][36][37][38][39][40][41][42][43][44]. According to Stereolabs [1], the main features of the ZED cameras are listed in Table 1.

| Features | ZED | ZED 2i |
|---|---|---|
| Size and weight | Dimensions: 175 × 30 × 33 mm | Dimensions: 175 × 30 × 33 mm |
| | Weight: 159 g | Weight: 166 g |
| Depth | Range: 1–20 m | Range: 0.3–20 m |
| | Format: 32 bits | Format: 32 bits |
| | Baseline: 120 mm | Baseline: 120 mm |
| Image sensors | Size: 1/3” | Size: 1/3” |
| | Format: 16:9 | Format: 16:9 |
| | Pixel size: 2 µm | Pixel size: 2 µm |
| Lens | Field of view: 110° | Field of view: 120° |
| | Six-element all-glass dual lens | Wide-angle eight-element all-glass dual lens with optically corrected distortion |
| | f/2.0 aperture | f/1.8 aperture |
| Individual image and depth resolution in pixels | HD2K: 2208 × 1242 (15 fps) | HD2K: 2208 × 1242 (15 fps) |
| | HD1080: 1920 × 1080 (30, 15 fps) | HD1080: 1920 × 1080 (30, 15 fps) |
| | HD720: 1280 × 720 (60, 30, 15 fps) | HD720: 1280 × 720 (60, 30, 15 fps) |
| | WVGA: 672 × 376 (100, 60, 30, 15 fps) | WVGA: 672 × 376 (100, 60, 30, 15 fps) |
| Connectivity and working temperature | USB 3.0 (5 V/380 mA) | USB 3.0 (5 V/380 mA) |
| | 0 °C to +45 °C | −10 °C to +45 °C |
| SDK system minimal requirements | Windows or Linux | Windows or Linux |
| | Dual-core 2.3 GHz CPU | Dual-core 2.3 GHz CPU |
| | 4 GB RAM | 4 GB RAM |
| | Nvidia GPU with compute capability > 3.0 | Nvidia GPU with compute capability > 3.0 |
| Additional sensors | - | Accelerometer |
| | | Gyroscope |
| | | Barometer |
| | | Magnetometer |
| | | Temperature sensor |
| Software enhancements | - | Depth Perception with Neural Engine |
| | | Built-in Object Detection |

Furthermore, the ZED 2i has an Ingress Protection 66 (IP66)-rated enclosure that is highly resistant to humidity, water, and dust, since the ZED 2i depth sensor is designed for outdoor applications and challenging industrial and agricultural environments [1]. The original ZED camera, like the D415 and D435 RealSense cameras, is mainly designed for indoor applications [45]. Moreover, the ZED 2i has multiple mounting options and a flat bottom, and it can be easily integrated into any system and environment [1].

Since the ZED 2i can be cloud-connected, there is an option to monitor and control the camera remotely. Using a dedicated cloud platform, capturing and analyzing the 3D data of the depth image is possible from anywhere in the world [1]. It is also possible to monitor live video streams, remotely control the cameras, deploy applications, and collect data.

Finally, it should be mentioned that Stereolabs also provides a ZED 2 depth camera [1]. The main features and the accuracy graph of the ZED 2i are the same as those of the ZED 2, as the internal sensor stack and the lens configuration of the two sensors are the same [1]. The only differences are the external enclosure of the ZED 2i, which is IP66-rated and hence more robust, and the option of a built-in polarizing filter [1].

References

- ZED Product Portfolio. Stereolabs Product Portfolio and Specifications; Revision 1; Stereolabs: Orsay, France, 2022.

- Tadic, V.; Odry, A.; Burkus, E.; Kecskes, I.; Kiraly, Z.; Klincsik, M.; Sari, Z.; Vizvari, Z.; Toth, A.; Odry, P. Painting Path Planning for a Painting Robot with a RealSense Depth Sensor. Appl. Sci. 2021, 11, 1467.

- Tadic, V.; Odry, A.; Burkus, E.; Kecskes, I.; Kiraly, Z.; Odry, P. Edge-preserving Filtering and Fuzzy Image Enhancement in Depth Images Captured by Realsense Cameras in Robotic Applications. Adv. Electr. Comput. Eng. 2020, 20, 83–92.

- Tadic, V.; Burkus, E.; Odry, A.; Kecskes, I.; Kiraly, Z.; Odry, P. Effects of the post-processing on depth value accuracy of the images captured by RealSense cameras. Contemp. Eng. Sci. 2020, 13, 149–156.

- Tadic, V.; Odry, A.; Burkus, E.; Kecskes, I.; Kiraly, Z.; Vizvari, Z.; Toth, A.; Odry, P. Application of the ZED Depth Sensor for Painting Robot Vision System Development. IEEE Access 2021, 9, 117845–117859.

- Ortiz, L.E.; Cabrera, V.E.; Goncalves, L. Depth Data Error Modeling of the ZED 3D Vision Sensor from Stereolabs. ELCVIA Electron. Lett. Comput. Vis. Image Anal. 2018, 17, 1–15.

- Jauregui, J.C.; Resendiz, J.R.; Thenozhi, S.; Szalay, T.; Jacso, A.; Takacs, M. Frequency and Time-Frequency Analysis of Cutting Force and Vibration Signals for Tool Condition Monitoring. IEEE Access 2018, 6, 6400–6410.

- Padilla-Garcia, E.A.; Rodriguez-Angeles, A.; Resendiz, J.R.; Cruz-Villar, C.A. Concurrent Optimization for Selection and Control of AC Servomotors on the Powertrain of Industrial Robots. IEEE Access 2018, 6, 27923–27938.

- Martínez-Prado, M.A.; Rodríguez-Reséndiz, J.; Gómez-Loenzo, R.-A.; Herrera-Ruiz, G.; Franco-Gasca, L.-A. An FPGA-Based Open Architecture Industrial Robot Controller. IEEE Access 2018, 6, 13407–13417.

- Flacco, F.; Kröger, T.; De Luca, A.; Khatib, O. A Depth Space Approach to Human-Robot Collision Avoidance. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012.

- Saxena, A.; Chung, S.H.; Ng, A.Y. 3-D Depth Reconstruction from a Single Still Image. Int. J. Comput. Vis. 2007, 76, 53–69.

- Sterzentsenko, V.; Karakottas, A.; Papachristou, A.; Zioulis, N.; Doumanoglou, A.; Zarpalas, D.; Daras, P. A Low-Cost, Flexible and Portable Volumetric Capturing System; IEEE: Piscataway, NJ, USA, 2018; pp. 200–207.

- Carey, N.; Nagpal, R.; Werfel, J. Fast, accurate, small-scale 3D scene capture using a low-cost depth sensor. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 1268–1276.

- Labbé, M.; Michaud, F. RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2018, 36, 416–446.

- Labbé, M.; Michaud, F. Long-term online multi-session graph-based SPLAM with memory management. Auton. Robot. 2017, 42, 1133–1150.

- Labbé, M.; Michaud, F. Memory management for real-time appearance-based loop closure detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011.

- Labbe, M.; Michaud, F. Online Global Loop Closure Detection for Largescale Multisession Graph Based Slam; IEEE: Piscataway, NJ, USA, 2014; pp. 2661–2666.

- Labbé, M.; Michaud, F. Appearance-Based Loop Closure Detection for Online Large-Scale and Long-Term Operation. IEEE Trans. Robot. 2013, 29, 734–745.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

- Derpanis, K.G. Overview of the RANSAC Algorithm; Version 1.2; Computer Science Department, University of Toronto: Toronto, ON, Canada, 2010.

- Rusu, R.B.; Marton, Z.C.; Blodow, N.; Dolha, M.; Beetz, M. Towards 3D Point cloud based object maps for household environments. Robot. Auton. Syst. 2008, 56, 927–941.

- Li, X.; Guo, W.; Li, M.; Sun, L. Combining Two Point Clouds Generated from Depth Camera; IEEE: Piscataway, NJ, USA, 2013; pp. 2620–2625.

- El-Sayed, E.; Abdel-Kader, R.F.; Nashaat, H.; Marei, M. Plane detection in 3D point cloud using oc-tree-balanced density down-sampling and iterative adaptive plane extraction. IET Image Process. 2018, 12, 1595–1605.

- Gallo, O.; Manduchi, R.; Rafii, A. CC-RANSAC: Fitting planes in the presence of multiple surfaces in range data. Pattern Recognit. Lett. 2011, 32, 403–410.

- Mufti, F.; Mahony, R.; Heinzmann, J. Spatio-Temporal RANSAC for Robust Estimation of Ground Plane in Video Range Images for Automotive Applications; IEEE: Piscataway, NJ, USA, 2008; pp. 1142–1148.

- Nurunnabi, A.; West, G.; Belton, D. Outlier detection and robust normal-curvature estimation in mobile laser scanning 3D point cloud data. Pattern Recognit. 2015, 48, 1404–1419.

- Prakash, S.; Kumar, M.V.; Ram, R.S.; Zivkovic, M.; Bacanin, N.; Antonijevic, M. Hybrid GLFIL Enhancement and Encoder Animal Migration Classification for Breast Cancer Detection. Comput. Syst. Sci. Eng. 2022, 41, 735–749.

- Li, Y.; Li, W.; Darwish, W.; Tang, S.; Hu, Y.; Chen, W. Improving Plane Fitting Accuracy with Rigorous Error Models of Structured Light-Based RGB-D Sensors. Remote Sens. 2020, 12, 320.

- Schwarze, T.; Lauer, M. Wall Estimation from Stereo Vision in Urban Street Canyons; IEEE: Piscataway, NJ, USA, 2013; pp. 83–90.

- Xu, M.; Lu, J. Distributed RANSAC for the robust estimation of three-dimensional reconstruction. IET Comput. Vis. 2012, 6, 324–333.

- Kovacs, L.; Kertesz, G. Hungarian Traffic Sign Detection and Classification using Semi-Supervised Learning; IEEE: Piscataway, NJ, USA, 2021; pp. 000437–000442.

- Zhou, S.; Kang, F.; Li, W.; Kan, J.; Zheng, Y.; He, G. Extracting Diameter at Breast Height with a Handheld Mobile LiDAR System in an Outdoor Environment. Sensors 2019, 19, 3212.

- Deschaud, J.E.; Goulette, F. A Fast and Accurate Plane Detection Algorithm for Large Noisy Point Clouds Using Filtered Normals and Voxel Growing. In 3DPVT; Hal Archives-Ouvertes: Paris, France, 2010.

- Najdataei, H.; Nikolakopoulos, Y.; Gulisano, V.; Papatriantafilou, M. Continuous and Parallel LiDAR Point-Cloud Clustering; IEEE: Piscataway, NJ, USA, 2018; pp. 671–684.

- Sproull, R.F. Refinements to nearest-neighbor searching in k-dimensional trees. Algorithmica 1991, 6, 579–589.

- Tadic, V.; Odry, A.; Kecskes, I.; Burkus, E.; Kiraly, Z.; Odry, P. Application of Intel RealSense Cameras for Depth Image Generation in Robotics. WSEAS Trans. Comput. 2019, 18, 2224–2872.

- Aghi, D.; Mazzia, V.; Chiaberge, M. Local Motion Planner for Autonomous Navigation in Vineyards with a RGB-D Camera-Based Algorithm and Deep Learning Synergy. Machines 2020, 8, 27.

- Yow, K.-C.; Kim, I. General Moving Object Localization from a Single Flying Camera. Appl. Sci. 2020, 10, 6945.

- Qi, X.; Wang, W.; Liao, Z.; Zhang, X.; Yang, D.; Wei, R. Object Semantic Grid Mapping with 2D LiDAR and RGB-D Camera for Domestic Robot Navigation. Appl. Sci. 2020, 10, 5782.

- Kang, X.; Li, J.; Fan, X.; Wan, W. Real-Time RGB-D Simultaneous Localization and Mapping Guided by Terrestrial LiDAR Point Cloud for Indoor 3-D Reconstruction and Camera Pose Estimation. Appl. Sci. 2019, 9, 3264.

- Tadic, V.; Odry, A.; Toth, A.; Vizvari, Z.; Odry, P. Fuzzified Circular Gabor Filter for Circular and Near-Circular Object Detection. IEEE Access 2020, 8, 96706–96713.

- Odry, Á.; Kecskes, I.; Sarcevic, P.; Vizvari, Z.; Toth, A.; Odry, P. A Novel Fuzzy-Adaptive Extended Kalman Filter for Real-Time Attitude Estimation of Mobile Robots. Sensors 2020, 20, 803.

- Chen, Y.; Zhou, W. Hybrid-Attention Network for RGB-D Salient Object Detection. Appl. Sci. 2020, 10, 5806.

- Shang, D.; Wang, Y.; Yang, Z.; Wang, J.; Liu, Y. Study on Comprehensive Calibration and Image Sieving for Coal-Gangue Separation Parallel Robot. Appl. Sci. 2020, 10, 7059.

- Tadic, V. Intel RealSense D400 Series Product Family Datasheet; Document Number: 337029-005; New Technologies Group, Intel Corporation: Santa Clara, CA, USA, 2019.
