A convolutional neural network (CNN) is a deep learning algorithm architecture created based on a 1962 study investigating the visual process of feline brains, and it has been applied in a wide range of areas, from autonomous vehicles to medical diagnoses. Since 2017, many studies applying deep learning-based diagnostics in the field of orthopedics have demonstrated outstanding performance.

- artificial intelligence

- orthopedics

- neural network

- deep learning

1. Introduction

A convolutional neural network (CNN) is a deep learning algorithm architecture created based on a 1962 study investigating the visual process of feline brains, and it has been applied in a wide range of areas, from autonomous vehicles to medical diagnoses [1].

A traditional CNN consists of an input layer that receives the input information, hidden layers that transform the information received from the input layer (filtering) and emphasize its most prominent features (pooling), and an output layer that synthesizes the result and outputs it.
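As a minimal sketch of the filtering and pooling steps described above (not any particular published architecture), the following NumPy code applies one hand-written 3 × 3 edge filter to a toy image and then max-pools the result. The function names, the toy image and the kernel values are illustrative assumptions; a trained CNN learns its filter weights from data and stacks many such layers.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products.

    This is cross-correlation, which is what deep learning libraries
    conventionally call "convolution".
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    trimmed = feature_map[:h, :w]
    return trimmed.reshape(h // size, size, w // size, size).max(axis=(1, 3))

# Toy 6x6 "radiograph": dark left half, bright right half (one vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge filter (assumption for illustration only).
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

filtered = np.maximum(conv2d_valid(image, kernel), 0.0)  # filtering + ReLU
pooled = max_pool2d(filtered, size=2)                    # pooling

print(filtered)  # 4x4 map, non-zero only where the window spans the edge
print(pooled)    # 2x2 map that keeps the strongest response per window
```

The filtered map responds only where the window straddles the edge, and pooling halves the resolution while retaining that response.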

According to the universal approximation theorem, even a neural network with a shallow hidden layer can in principle represent a wide range of nonlinear classification functions, and pioneering studies have shown that classification and detection improve as the layers constituting the neural network become deeper (deep neural network) [2]. Since 2012, the use of deep neural networks has rapidly improved the performance of deep learning in image analysis, including medical image analysis; on benchmark image classification, the error rate fell from approximately 25% in 2011 to 3.6% in 2015.
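For reference, one common form of the universal approximation theorem can be stated as follows. The notation ($f$, $K$, $\sigma$, $N$, $\alpha_i$, $w_i$, $b_i$) is introduced here for illustration, and the exact conditions on the activation function vary between versions of the theorem. For a continuous function $f$ on a compact set $K \subset \mathbb{R}^n$, a suitable (for example, sigmoidal) activation $\sigma$ and any tolerance $\varepsilon > 0$, there exist a width $N$ and parameters $\alpha_i, b_i \in \mathbb{R}$, $w_i \in \mathbb{R}^n$ such that

$$
\sup_{x \in K} \left| f(x) - \sum_{i=1}^{N} \alpha_i \, \sigma\!\left(w_i^{\top} x + b_i\right) \right| < \varepsilon .
$$

The statement guarantees only that such a single-hidden-layer network exists. It says nothing about how large $N$ must be or how to find the parameters by training, which is consistent with deeper networks performing better in practice.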

CNN models have been developed along pipelines for classification and detection [3], and improved CNNs show excellent judgment, essentially giving the computer a new visual organ. CNNs have therefore been expected to be used for medical diagnosis. However, a CNN does not provide any information on the basis of its decision. Therefore, even if a CNN shows excellent diagnostic ability, its use can be discussed only within a limited scope in medicine, where the basis for a judgment is important [4].

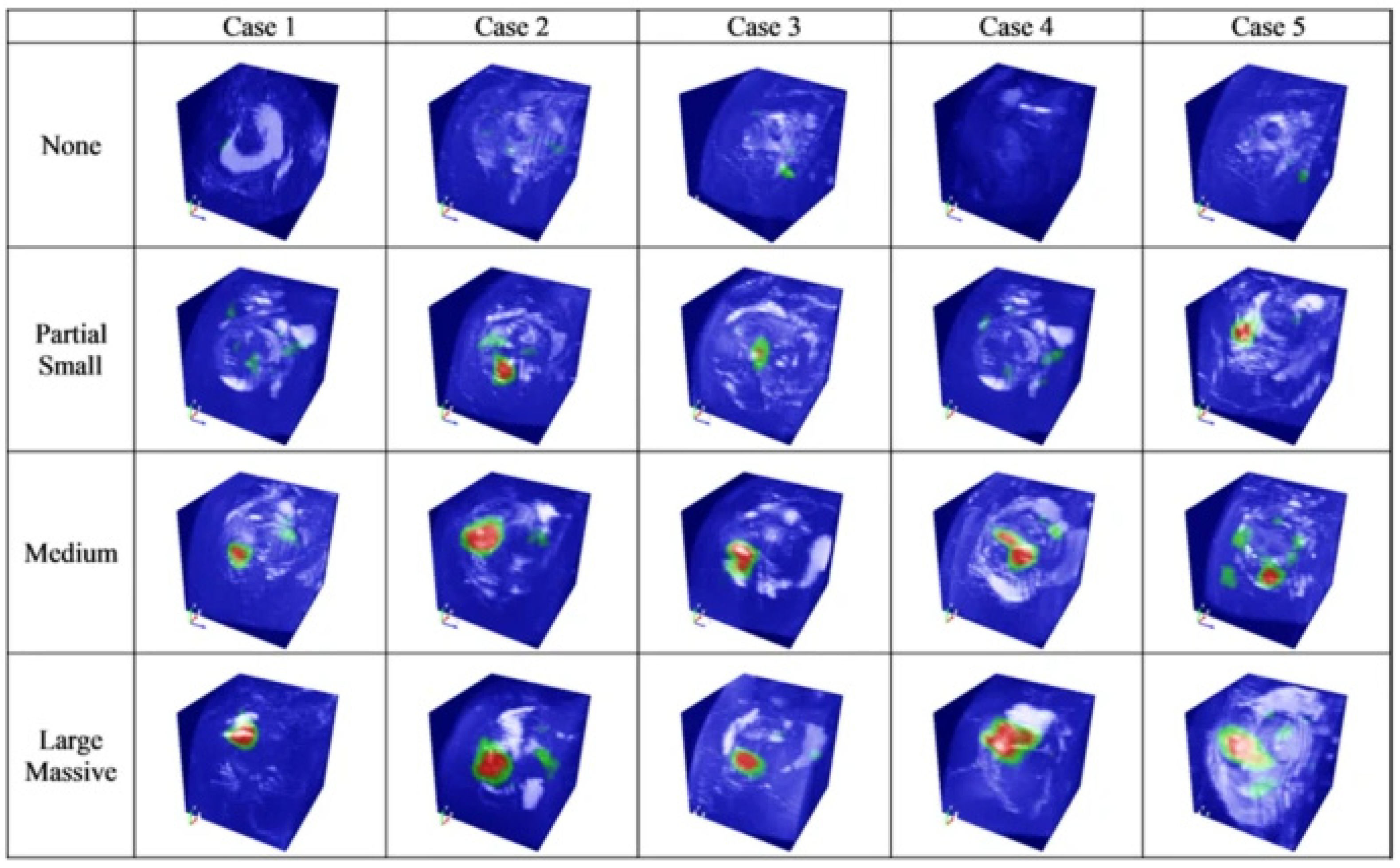

This has been pointed out as a technical limitation that reduces the effectiveness of CNNs in fields other than medicine as well [5]. Researchers have dubbed this limitation the “black box” problem and have worked to develop “explainable artificial intelligence (XAI)” to look inside the box [6]. The term “explainability” is used interchangeably with “understandability”, “comprehensibility” and “interpretability”. XAI should improve explainability without degrading the classification or prediction performance of the model in any way. Various strategies and suitable CNN architectures have been proposed to implement an appropriate XAI [7]. Although the black box nature of deep learning has not been completely resolved, there have been some notable achievements [8]. One of these came in 2016, when Zhou et al. introduced class activation mapping, a method that shows how a CNN arrives at a decision [9]; this method is widely used in the field of medical artificial intelligence (Figure 1) [10].

Figure 1. Image highlighting the location and size of a rotator cuff tear through a class activation map (CAM) [10].
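The idea behind a class activation map can be sketched in a few lines of NumPy, assuming a network whose last convolutional layer is followed by global average pooling and a single linear classification layer. The map for a class is then the sum of the final feature maps, each weighted by that class's weight. The feature maps and class weights below are made-up values for illustration and do not come from any trained model.

```python
import numpy as np

def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps, rescaled to [0, 1].

    feature_maps:  array of shape (K, H, W), outputs of the last conv layer
    class_weights: array of shape (K,), linear-layer weights for one class
    """
    cam = np.tensordot(class_weights, feature_maps, axes=([0], [0]))  # (H, W)
    cam = cam - cam.min()
    peak = cam.max()
    return cam / peak if peak > 0 else cam

rng = np.random.default_rng(0)
feature_maps = rng.random((4, 7, 7)) * 0.1        # K = 4 channels, weak background
feature_maps[2, 4:6, 1:3] += 1.0                  # channel 2 fires in one region
class_weights = np.array([0.1, 0.0, 0.9, -0.2])   # hypothetical weights for "tear"

cam = class_activation_map(feature_maps, class_weights)
peak_row, peak_col = np.unravel_index(np.argmax(cam), cam.shape)
print(peak_row, peak_col)  # falls inside the region where channel 2 is active
```

In practice the low-resolution map is upsampled to the input size and overlaid on the image as a heat map. Before rescaling, the spatial mean of the map equals the class score (up to the bias term), which is why the highlighted regions can be read as the evidence for the decision.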

In a similar context, there have been attempts to improve explainability by modifying existing CNN architectures [11]. Kim et al. modified U-Net, a CNN architecture well suited to image segmentation, to increase its explainability. They presented an interpretable version of U-Net (SAU-Net) that uses an attention module in the decoder [12].

Against this background, studies introducing CNN models for diagnosing and classifying diseases using deep learning have been published in various fields of medicine, including ophthalmology and dermatology [13][14].

2. Deep Learning for Fractures

Fractures are the ailments most familiar to orthopedists and the orthopedic area in which deep learning methods were first applied. In 2018, Chung et al. published a CNN model for diagnosing and classifying proximal humerus fractures. Three specialists labeled 1891 anteroposterior shoulder radiographs as normal shoulders (n = 515) or one of 4 proximal humerus fracture types (greater tuberosity: 346; surgical neck: 514; 3-part: 269; and 4-part: 247) [15]. After labeling, a CNN model (ResNet-152) was trained on a dataset created by augmenting the labeled data. The CNN model distinguished normal shoulders from proximal humerus fractures with 96% accuracy, higher than that of general orthopedists (92.8%). For classifying the fracture types, the model showed a top-1 accuracy of 65–86% and an area under the curve (AUC) of 0.90–0.98. A more recent paper reported improved classification accuracy: in 2020, Demir et al. introduced a deep learning model that diagnoses and classifies humerus fractures using the exemplar pyramid method, a novel, stable feature extraction approach, with a classification accuracy of 99.12% [16].
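The two metrics reported throughout this entry, top-1 accuracy and AUC, can be computed as in the following sketch. The scores and labels are invented toy values, not data from any of the cited studies, and the function names are illustrative. The AUC is computed here through its rank interpretation: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case.

```python
import numpy as np

def top1_accuracy(scores, labels):
    """Fraction of samples whose highest-scoring class equals the true label."""
    return float(np.mean(np.argmax(scores, axis=1) == labels))

def binary_auc(pos_scores, labels):
    """AUC as P(score of a positive > score of a negative); ties count half."""
    pos = pos_scores[labels == 1]
    neg = pos_scores[labels == 0]
    diff = pos[:, None] - neg[None, :]          # all positive/negative pairs
    wins = np.sum(diff > 0) + 0.5 * np.sum(diff == 0)
    return float(wins / (len(pos) * len(neg)))

# Multiclass toy example: 4 radiographs, 3 hypothetical fracture types.
class_scores = np.array([[0.7, 0.2, 0.1],
                         [0.1, 0.6, 0.3],
                         [0.3, 0.4, 0.3],
                         [0.2, 0.2, 0.6]])
class_labels = np.array([0, 1, 2, 2])
acc = top1_accuracy(class_scores, class_labels)

# Binary toy example: predicted fracture probability, 1 = fracture, 0 = normal.
fracture_scores = np.array([0.9, 0.8, 0.4, 0.5, 0.1])
fracture_labels = np.array([1, 1, 1, 0, 0])
auc = binary_auc(fracture_scores, fracture_labels)

print(acc, auc)
```

Unlike accuracy, the AUC does not depend on a single decision threshold, which is one reason studies often report both.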

Urakawa et al. trained the VGG-16 CNN model using plain hip radiographs (1773 intertrochanteric hip fracture images and 1573 normal hip images) and showed an accuracy of 95.5% [17]. Yamada et al. trained a CNN model (Xception architecture) on 3123 anteroposterior and lateral hip radiographs, and the trained model classified fractures with 98% accuracy, which was higher than that of orthopedists (92.2%) [18].

For the hip, as with the shoulder, there have been attempts to classify fractures by training a CNN model. Lee et al. introduced a CNN model based on GoogLeNet-inception v3 and trained it on 786 anteroposterior plain pelvic radiographs [19]. The model classified proximal femur fractures into type A (trochanteric region), type B (femur neck) and type C (femoral head) according to the AO/OTA classification with an overall accuracy of 86.8%, a reasonable result. Lind et al. trained a ResNet-based CNN with 6768 anteroposterior and lateral knee radiographs [20]. The trained CNN model classified knee radiographs according to the AO/OTA classification system and classified proximal tibia fractures, patellar fractures and distal femur fractures with AUCs of 0.87, 0.89 and 0.89, respectively.

The trained CNNs diagnosed and classified fractures at a relatively high level in the large joints of the shoulder, knee and hip. By contrast, CNN models trained to diagnose and classify fractures in small joints or axial joints showed a relatively low AUC and accuracy. Farda et al. trained a PCANet-based CNN model that classifies calcaneal fractures according to the Sanders classification, using a computed tomography dataset of 5534 samples [21]. The trained CNN model showed 72% accuracy. In addition, Ozkaya et al. trained a CNN model based on ResNet50 with 390 anteroposterior wrist radiographs [22]. The AUC of the trained CNN was 0.84, a relatively satisfactory result, but it was lower than that of experienced orthopedists.

Attempts have also been made to diagnose compression fractures of the spine using a trained CNN. The results differed markedly depending on the type of data used for training. Chen et al. trained a ResNet-based CNN model using plain spine X-rays, and the trained CNN showed an accuracy of 73.59% [23]. By contrast, Yabu et al. presented a CNN model that used magnetic resonance images as the training data. This model showed a higher accuracy (88%) than that of the surgeons [24].

In summary, fracture diagnosis using artificial intelligence showed a high level of accuracy. The trained CNN model conducted fracture diagnosis (binary classification) with a higher accuracy than fracture classification (multiclass classification), and this gap is expected to decrease as more advanced CNN models are developed.

In classifying fractures, small and axial joints showed a lower accuracy than large joints. This may be a limitation of a CNN-based approach, which makes judgments by recognizing the contrast information (e.g., normal margin of the cortical bone and the fracture line or normal joint line) and spatial information of the images. The authors believe that this limitation can be overcome using more powerful CNN models.

Most studies on the diagnosis and classification of fractures using deep learning have focused on osteoporotic fractures, and studies on fractures that occur less frequently are relatively scarce [25]. This may be because osteoporotic fractures account for a high proportion of all fractures, so sufficient data are available for training a CNN model, and because their fracture patterns are relatively standardized, which makes them suitable for fracture classification.

3. Deep Learning for Osteoarthritis and Prediction of Arthroplasty Implants

Osteoarthritis is as familiar to orthopedists as fractures. Therefore, several attempts have been made to diagnose and classify osteoarthritis using deep learning algorithms. Xue et al. trained a CNN model based on VGG-16 with 420 plain hip X-rays [26]. This is one of the earliest studies to apply deep learning methods to the orthopedic field, and the trained model diagnosed hip osteoarthritis with an accuracy of 92.8%. Ureten et al. also presented a model for diagnosing hip osteoarthritis using a similar research design, showing an accuracy of 90.2% [27].

Tiulpin et al. trained a CNN model to classify knee osteoarthritis according to the Kellgren–Lawrence grading scale using a Siamese classification CNN [28]. The model trained using plain knee X-rays showed a multiclass accuracy of 66.7%. In addition, Swiecicki et al. trained a Faster R-CNN using plain and lateral knee X-rays from the Multicenter Osteoarthritis Study dataset [29]. The multiclass accuracy of this model was 71.9%, which showed improved performance compared with the previous study conducted by Tiulpin et al.

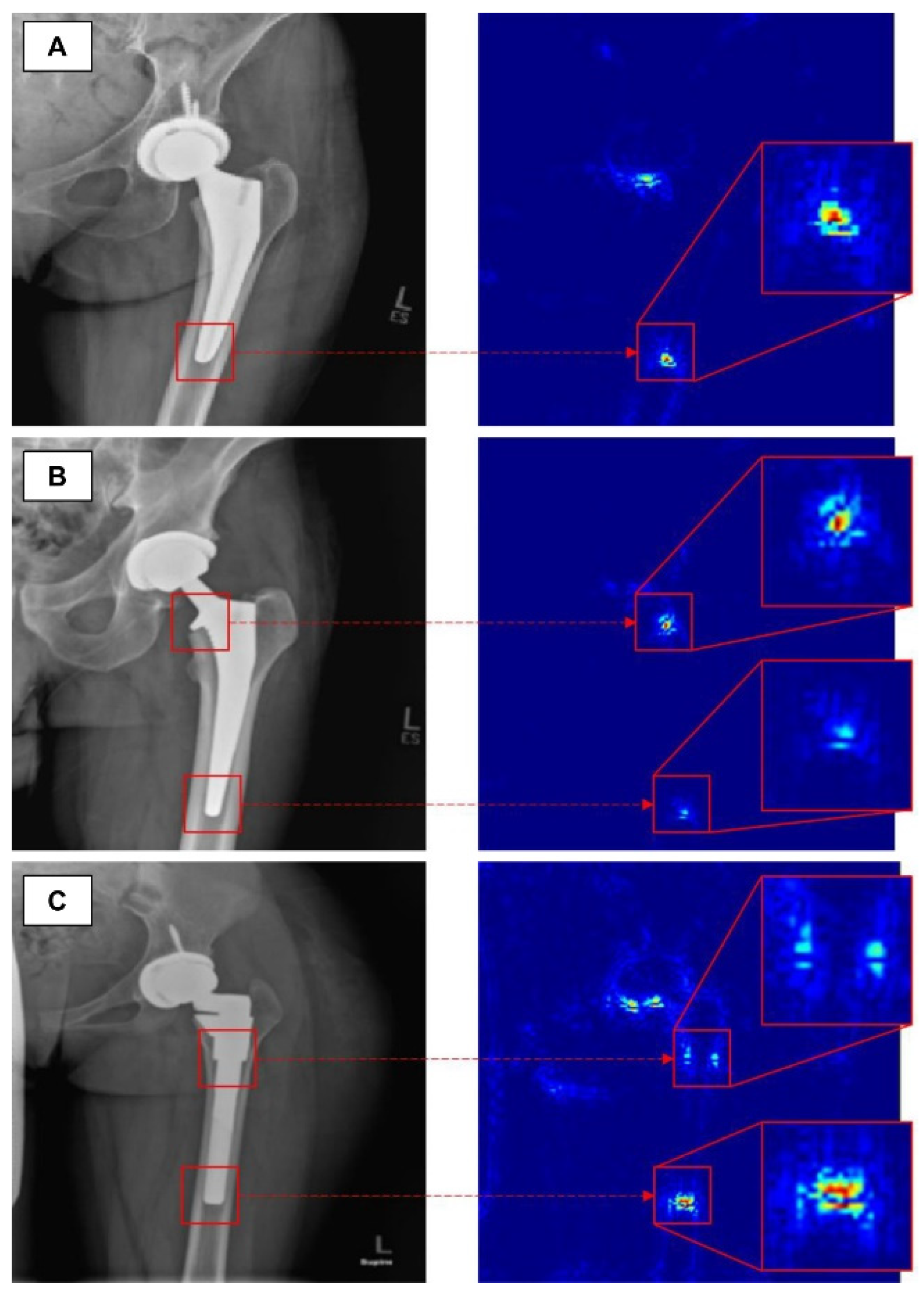

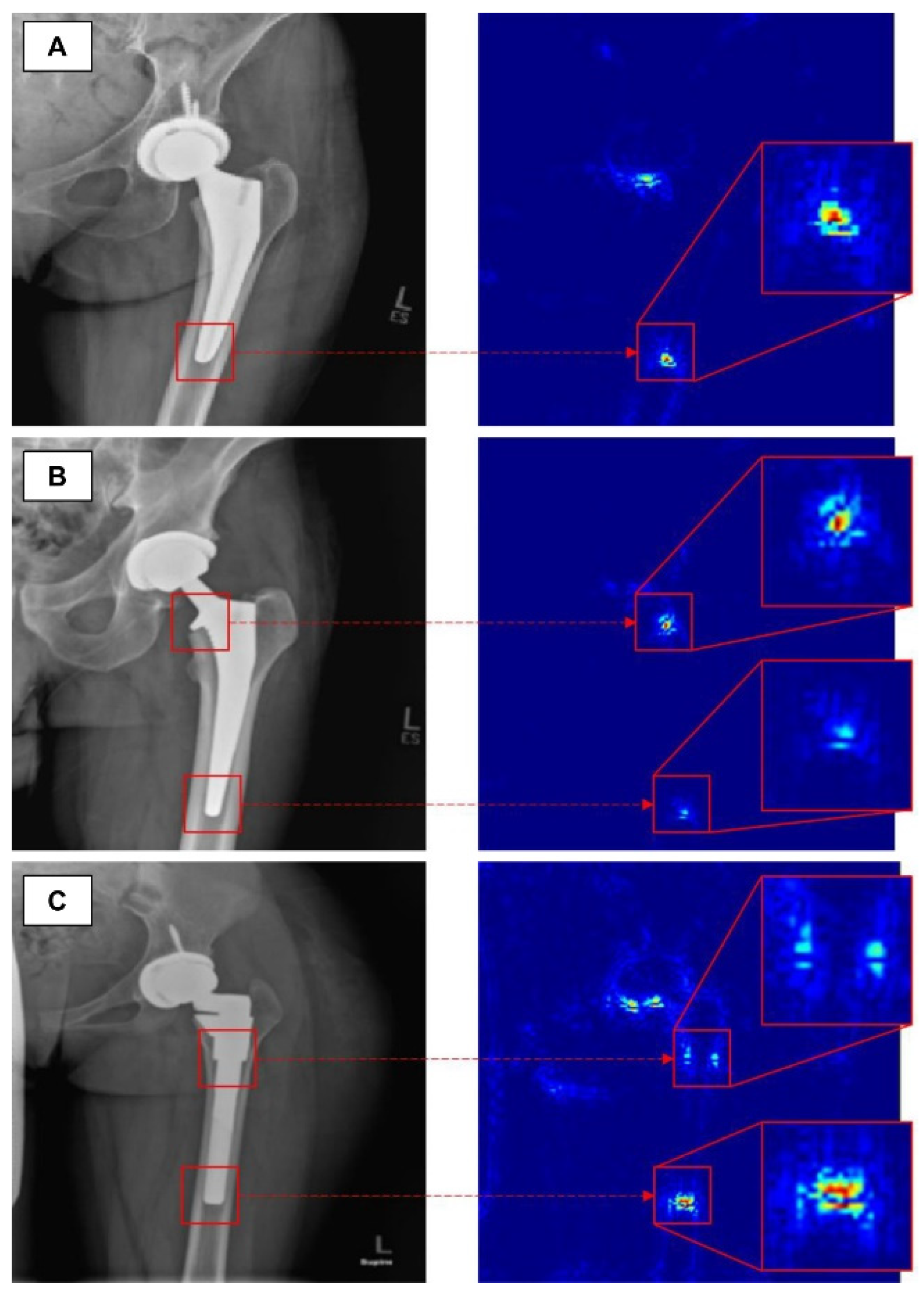

Advanced osteoarthritis of the hip or knee often requires arthroplasty. Several studies have introduced deep learning models that classify the arthroplasty implants used in patients. Karnuta et al. trained an InceptionV3-based CNN model using anteroposterior knee X-rays showing nine different implant models [30]. The trained model showed an accuracy of 99% and an AUC of 0.99, classifying the implant models at an almost perfect level. Similar attempts were made for the hip joint. Borjali et al. created a CNN model trained on 252 plain hip X-rays containing 3 different implant designs, and this model classified implants with 100% accuracy (Figure 2) [31]. Kang et al. also developed a CNN model trained on 170 plain hip X-rays containing 29 different implant designs. This model also showed a high level of performance, with an AUC of 0.99 [32].

Figure 2. The figure shows how a trained convolutional neural network classifies total hip replacement implants of different designs in A, B and C [31].

By contrast, models classifying shoulder arthroplasty implants showed a relatively low accuracy. Urban et al. developed a CNN model trained on 597 plain shoulder X-rays with 16 different implant designs, showing an accuracy of 80% [33]. In addition, Sultan et al. proposed a model for classifying the different designs of four manufacturers using modified ResNet and DenseNet, showing an accuracy of 85.9% [34].

In summary, as with deep learning for fractures, binary classification of osteoarthritis has a higher accuracy than multiclass classification. In particular, CNN-based models for identifying arthroplasty implants of the hip or knee show a high accuracy. This may be because, unlike human bone, implant designs are highly standardized, with clear margins on X-rays that provide clear contrast information to the CNN model. However, the classification of shoulder arthroplasty implants shows a lower level of accuracy. This may be because an anteroposterior X-ray of the shoulder can show a wider range of positions than an anteroposterior radiograph of the knee or hip.

4. Deep Learning for Joint-Specific Soft Tissue Disease

Among deep learning approaches, algorithms specialized for detection based on learned images and algorithms that perform segmentation by analyzing features differ structurally and have developed into different areas of application [3]. Segmentation in particular is technically difficult because it must preserve spatial information, which is easily lost in the output-layer process that synthesizes the results of the trained CNN model [35]. Recent studies have attempted to overcome this limitation through techniques such as semantic segmentation based on fully convolutional networks (FCNs).

These differences in deep learning algorithms also affect the use of deep learning in the orthopedic field. The deep learning-based studies introduced above diagnose and classify diseases based on X-ray images, for which a CNN model specialized for segmentation is not always required [36]. By contrast, for diseases that are diagnosed and classified on ultrasound or MRI, a satisfactory level of accuracy can be obtained only with a CNN model specialized for segmentation. For example, to diagnose rotator cuff tears, it is more appropriate to infer a tear from the outline of the normal rotator cuff (segmentation) than to diagnose it by specifying the location where the tear occurred (regional detection).

Therefore, CNN models for diagnosing soft tissue disease in the orthopedic field have mainly been published after 2018, when segmentation technology began to mature. Kim et al. trained a CNN model using a shoulder MRI dataset of 240 patients. The trained model identified the muscle region of the rotator cuff with an accuracy of 99.9% and graded fatty infiltration at a high level [37]. Taghizadeh et al. conducted a similar study using shoulder computed tomography scans of 103 patients as the dataset. The trained CNN model measured fatty infiltration with an accuracy of 91% [38].

Medina et al. introduced a model that segments the rotator cuff muscles with 98% accuracy, using a CNN trained on the shoulder MRIs of 258 patients [39]. Furthermore, Shim et al. introduced a model for evaluating the presence and size of rotator cuff tears by training a Voxception-ResNet (VRN)-based CNN with 2124 shoulder MRIs. The trained CNN model diagnosed and classified rotator cuff tears with accuracies of 92.5% and 76.5%, respectively [10]. In addition, Lee et al. developed a new deep learning architecture using an integrated positive loss function and a pre-trained encoder. With this architecture, the location of a rotator cuff tear can be determined relatively accurately, even from imbalanced and noisy ultrasound images [40].
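To illustrate why class imbalance matters for the loss function, the sketch below uses a generic class-weighted binary cross-entropy. This is a common textbook technique and is not the loss proposed by Lee et al.; the function name, the toy labels and the weighting rule (negatives divided by positives) are assumptions made for illustration.

```python
import numpy as np

def weighted_bce(probs, labels, pos_weight=1.0):
    """Mean binary cross-entropy with positive-class terms scaled by pos_weight."""
    probs = np.clip(probs, 1e-7, 1 - 1e-7)   # avoid log(0)
    loss = -(pos_weight * labels * np.log(probs)
             + (1 - labels) * np.log(1 - probs))
    return float(loss.mean())

# Toy case: 1 "tear" pixel among 10, and a model that predicts 0.1 everywhere,
# that is, it effectively ignores the rare positive class.
labels = np.array([1, 0, 0, 0, 0, 0, 0, 0, 0, 0], dtype=float)
probs = np.full(10, 0.1)

plain = weighted_bce(probs, labels)                       # unweighted loss
ratio = (labels == 0).sum() / (labels == 1).sum()         # 9 negatives per positive
balanced = weighted_bce(probs, labels, pos_weight=ratio)  # reweighted loss

print(plain, balanced)
```

With the unweighted loss, missing the single positive pixel costs little because the nine easy negatives dominate the mean. Weighting the positive term makes the same mistake several times more expensive, which pushes training toward detecting the rare class.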

Recent studies have also proposed CNN models for diagnosing meniscal tears, cartilage lesions and anterior cruciate ligament (ACL) ruptures in the knee joint. Couteaux et al. trained a Mask-RCNN with 1828 T2-weighted 2D Fast Spin-Echo images to distinguish the torn part of the meniscus from the normal area and to classify tears according to their location [41]. This model diagnosed and classified meniscal tears with an AUC of 0.91. Roblot et al. proposed a model that diagnoses meniscal tears in a similar way, detecting them with an AUC of 0.94 [42].

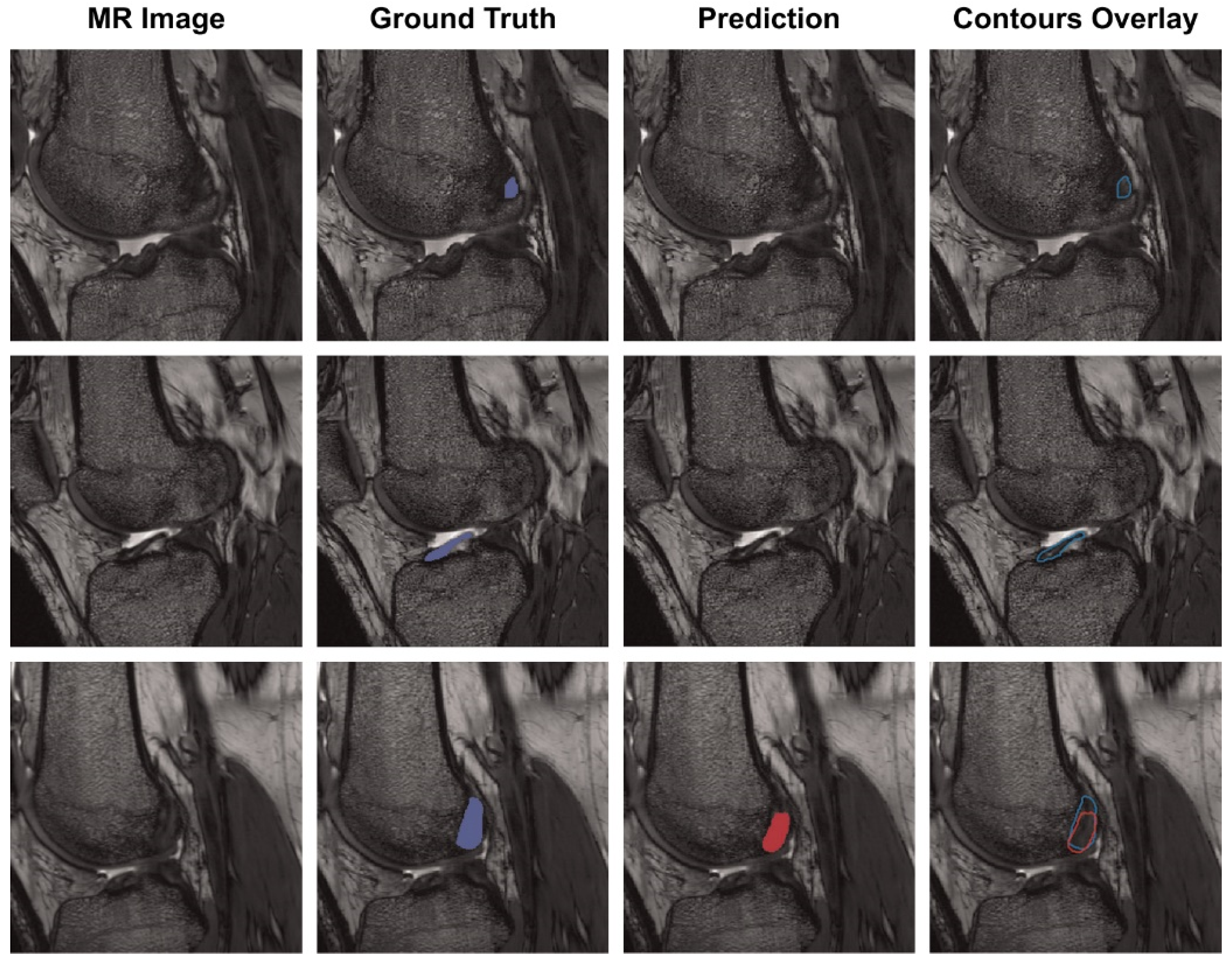

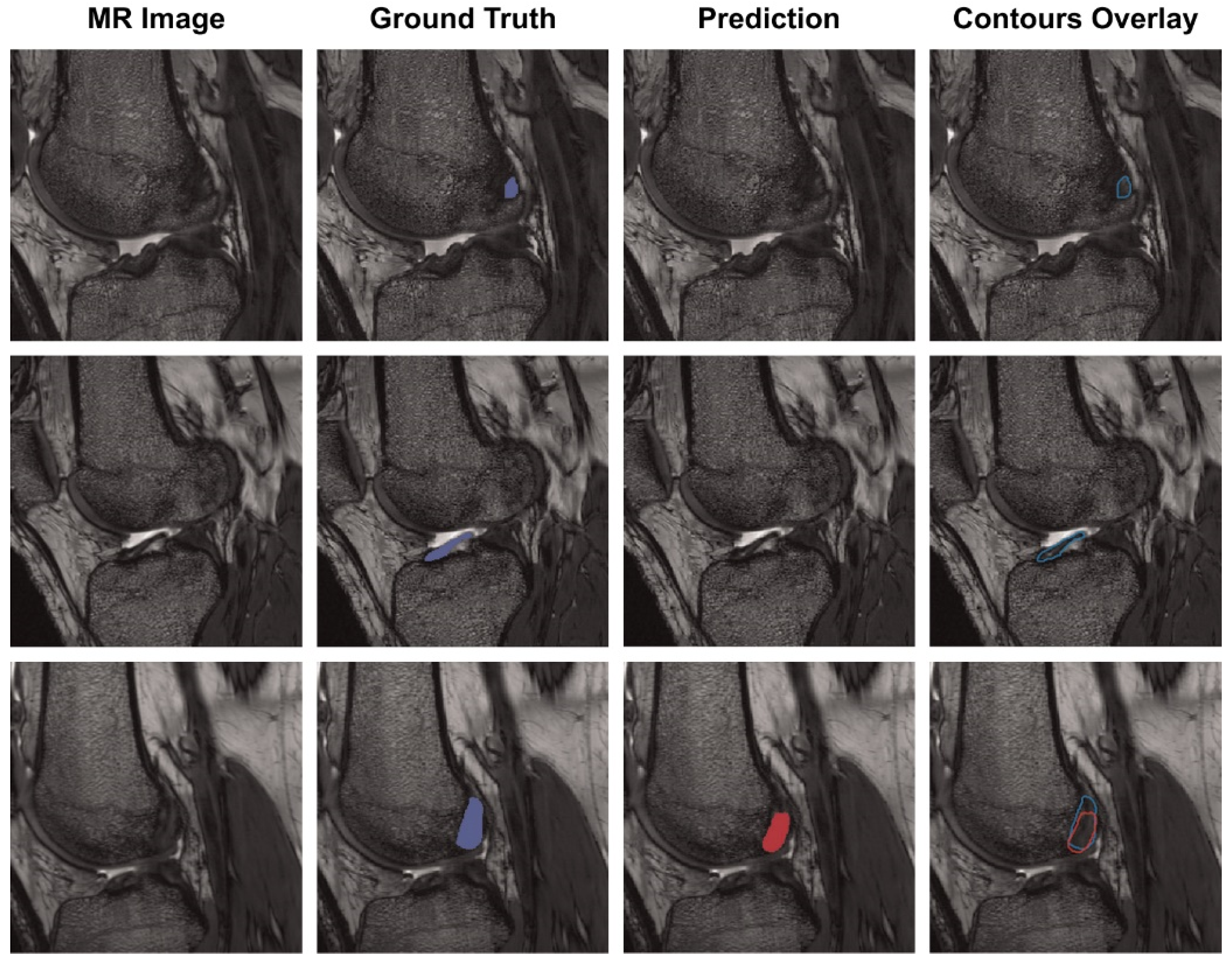

Chang et al. presented a model for diagnosing complete ACL tears by training a U-Net-based CNN using 320 coronal proton density-weighted 2D Fast Spin-Echo images, demonstrating an AUC of 0.97 [43]. In addition, Flannery et al. trained a modified U-Net-based CNN and evaluated the level of segmentation of the model. The segmentation level suggested by the trained model did not show a statistically significant difference from the ground truth (the value actually suggested by an expert) (Figure 3) [44].

Figure 3. Each row shows one MR slice. The columns show the unsegmented slice (MR Image), the expert segmentation (Ground Truth), the segmentation predicted by the trained CNN model (Prediction) and an overlay of the manual and predicted segmentations (Contours Overlay) [44].
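Agreement between a predicted segmentation and an expert's ground truth is often summarized with an overlap score. The sketch below uses the Dice coefficient as one common choice. It is given as an illustration of how such a comparison can be quantified and is not necessarily the measure used in the cited studies. The masks are toy arrays, and the function name is hypothetical.

```python
import numpy as np

def dice_coefficient(pred_mask, true_mask):
    """Dice = 2 * |A and B| / (|A| + |B|); 1.0 means perfect overlap."""
    pred = np.asarray(pred_mask).astype(bool)
    true = np.asarray(true_mask).astype(bool)
    total = pred.sum() + true.sum()
    if total == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return float(2.0 * np.logical_and(pred, true).sum() / total)

# Ground truth: a 4x4 square on an 8x8 slice.
true_mask = np.zeros((8, 8), dtype=int)
true_mask[2:6, 2:6] = 1

# Prediction: the same square shifted one pixel to the right.
pred_mask = np.zeros((8, 8), dtype=int)
pred_mask[2:6, 3:7] = 1

dice = dice_coefficient(pred_mask, true_mask)
print(dice)  # 12 overlapping pixels out of 16 + 16 -> 0.75
```

A one-pixel shift of a small structure already lowers the score noticeably, which is one reason segmenting thin structures such as ligaments is demanding.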

References

- Hubel, D.H.; Wiesel, T.N. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 1962, 160, 106–154.

- Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

- Zhao, Z.-Q.; Zheng, P.; Xu, S.-T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Wang, F.; Casalino, L.P.; Khullar, D. Deep learning in medicine—Promise, progress, and challenges. JAMA Intern. Med. 2019, 179, 293–294.

- Budd, S.; Robinson, E.C.; Kainz, B. A survey on active learning and human-in-the-loop deep learning for medical image analysis. Med. Image Anal. 2021, 71, 102062.

- Fujita, H. AI-based computer-aided diagnosis (AI-CAD): The latest review to read first. Radiol. Phys. Technol. 2020, 13, 6–19.

- Castiglioni, I.; Rundo, L.; Codari, M.; Leo, G.D.; Salvatore, C.; Interlenghi, M.; Gallivanone, F.; Cozzi, A.; D’Amico, N.C.; Sardanelli, F. AI applications to medical images: From machine learning to deep learning. Phys. Med. 2021, 83, 9–24.

- Tjoa, E.; Guan, C. A Survey on Explainable Artificial Intelligence (XAI): Toward Medical XAI. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4793–4813.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 30 June 2016; pp. 2921–2929.

- Shim, E.; Kim, J.Y.; Yoon, J.P.; Ki, S.-Y.; Lho, T.; Kim, Y.; Chung, S.W. Automated rotator cuff tear classification using 3D convolutional neural network. Sci. Rep. 2020, 10, 1–9.

- Singh, A.; Sengupta, S.; Lakshminarayanan, V. Explainable Deep Learning Models in Medical Image Analysis. J. Imaging 2020, 6, 52.

- Kim, B.; Wattenberg, M.; Gilmer, J.; Cai, C.; Wexler, J.; Viegas, F. Interpretability beyond feature attribution: Quantitative testing with concept activation vectors (tcav). In Proceedings of the 35th International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 2668–2677.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410.

- Bejnordi, B.E.; Veta, M.; Van Diest, P.J.; Van Ginneken, B.; Karssemeijer, N.; Litjens, G.; van der Laak, J.A.W.M.; The CAMELYON16 Consortium. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 2017, 318, 2199–2210.

- Chung, S.W.; Han, S.S.; Lee, J.W.; Oh, K.-S.; Kim, N.R.; Yoon, J.P.; Kim, J.Y.; Moon, S.H.; Kwon, J.; Lee, H.-J.; et al. Automated detection and classification of the proximal humerus fracture by using deep learning algorithm. Acta Orthop. 2018, 89, 468–473.

- Demir, S.; Key, S.; Tuncer, T.; Dogan, S. An exemplar pyramid feature extraction based humerus fracture classification method. Med. Hypotheses 2020, 140, 109663.

- Urakawa, T.; Tanaka, Y.; Goto, S.; Matsuzawa, H.; Watanabe, K.; Endo, N. Detecting intertrochanteric hip fractures with orthopedist-level accuracy using a deep convolutional neural network. Skelet. Radiol. 2019, 48, 239–244.

- Yamada, Y.; Maki, S.; Kishida, S.; Nagai, H.; Arima, J.; Yamakawa, N.; Iijima, Y.; Shiko, Y.; Kawasaki, Y.; Kotani, T.; et al. Automated classification of hip fractures using deep convolutional neural networks with orthopedic surgeon-level accuracy: Ensemble decision-making with antero-posterior and lateral radiographs. Acta Orthop. 2020, 91, 699–704.

- Lee, C.; Jang, J.; Lee, S.; Kim, Y.S.; Jo, H.J.; Kim, Y. Classification of femur fracture in pelvic X-ray images using meta-learned deep neural network. Sci. Rep. 2020, 10, 1–12.

- Lind, A.; Akbarian, E.; Olsson, S.; Nåsell, H.; Sköldenberg, O.; Razavian, A.S.; Gordon, M. Artificial intelligence for the classification of fractures around the knee in adults according to the 2018 AO/OTA classification system. PLoS ONE 2021, 16, e0248809.

- Farda, N.A.; Lai, J.-Y.; Wang, J.-C.; Lee, P.-Y.; Liu, J.-W.; Hsieh, I.-H. Sanders classification of calcaneal fractures in CT images with deep learning and differential data augmentation techniques. Injury 2020, 52, 616–624.

- Ozkaya, E.; Topal, F.E.; Bulut, T.; Gursoy, M.; Ozuysal, M.; Karakaya, Z. Evaluation of an artificial intelligence system for diagnosing scaphoid fracture on direct radiography. Eur. J. Trauma Emerg. Surg. 2020, 1–8.

- Chen, H.-Y.; Hsu, B.W.-Y.; Yin, Y.-K.; Lin, F.-H.; Yang, T.-H.; Yang, R.-S.; Lee, C.-K.; Tseng, V.S. Application of deep learning algorithm to detect and visualize vertebral fractures on plain frontal radiographs. PLoS ONE 2021, 16, e0245992.

- Yabu, A.; Hoshino, M.; Tabuchi, H.; Takahashi, S.; Masumoto, H.; Akada, M.; Morita, S.; Maeno, T.; Iwamae, M.; Inose, H.; et al. Using artificial intelligence to diagnose fresh osteoporotic vertebral fractures on magnetic resonance images. Spine J. 2021, 21, 1652–1658.

- Moon, Y.L.; Jung, S.H.; Choi, G.Y. Evaluation of focal bone mineral density using three-dimensional measurement of Hounsfield units in the proximal humerus. CiSE. 2015, 18, 86–90.

- Xue, Y.; Zhang, R.; Deng, Y.; Chen, K.; Jiang, T. A preliminary examination of the diagnostic value of deep learning in hip osteoarthritis. PLoS ONE 2017, 12, e0178992.

- Üreten, K.; Arslan, T.; Gültekin, K.E.; Demir, A.N.D.; Özer, H.F.; Bilgili, Y. Detection of hip osteoarthritis by using plain pelvic radiographs with deep learning methods. Skelet. Radiol. 2020, 49, 1369–1374.

- Tiulpin, A.; Thevenot, J.; Rahtu, E.; Lehenkari, P.; Saarakkala, S. Automatic Knee Osteoarthritis Diagnosis from Plain Radiographs: A Deep Learning-Based Approach. Sci. Rep. 2018, 8, 1–10.

- Swiecicki, A.; Li, N.; O’Donnell, J.; Said, N.; Yang, J.; Mather, R.C.; Jiranek, D.A.; Mazurowski, M.A. Deep learning-based algorithm for assessment of knee osteoarthritis severity in radiographs matches performance of radiologists. Comput. Biol. Med. 2021, 133, 104334.

- Karnuta, J.M.; Luu, B.C.; Roth, A.L.; Haeberle, H.S.; Chen, A.F.; Iorio, R.; Schaffer, J.L.; Mont, M.A.; Patterson, B.M.; Krebs, V.E.; et al. Artificial Intelligence to Identify Arthroplasty Implants From Radiographs of the Knee. J. Arthroplast. 2021, 36, 935–940.

- Borjali, A.; Chen, A.F.; Muratoglu, O.K.; Morid, M.A.; Varadarajan, K.M. Detecting total hip replacement prosthesis design on plain radiographs using deep convolutional neural network. J. Orthop. Res. 2020, 38, 1465–1471.

- Kang, Y.-J.; Yoo, J.-I.; Cha, Y.-H.; Park, C.H.; Kim, J.-T. Machine learning–based identification of hip arthroplasty designs. J. Orthop. Transl. 2020, 21, 13–17.

- Urban, G.; Porhemmat, S.; Stark, M.; Feeley, B.; Okada, K.; Baldi, P. Classifying shoulder implants in X-ray images using deep learning. Comput. Struct. Biotechnol. J. 2020, 18, 967–972.

- Sultan, H.; Owais, M.; Park, C.; Mahmood, T.; Haider, A.; Park, K.R. Artificial Intelligence-Based Recognition of Different Types of Shoulder Implants in X-ray Scans Based on Dense Residual Ensemble-Network for Personalized Medicine. J. Pers. Med. 2021, 11, 482.

- Guo, Y.; Liu, Y.; Georgiou, T.; Lew, M.S. A review of semantic segmentation using deep neural networks. Int. J. Multimed. Inf. Retr. 2018, 7, 87–93.

- Han, Y.; Wang, G. Skeletal bone age prediction based on a deep residual network with spatial transformer. Comput. Methods Programs Biomed. 2020, 197, 105754.

- Kim, J.Y.; Ro, K.; You, S.; Nam, B.R.; Yook, S.; Park, H.S.; Yoo, J.C.; Park, E.; Cho, K.; Cho, B.H.; et al. Development of an automatic muscle atrophy measuring algorithm to calculate the ratio of supraspinatus in supraspinous fossa using deep learning. Comput. Methods Programs Biomed. 2019, 182, 105063.

- Taghizadeh, E.; Truffer, O.; Becce, F.; Eminian, S.; Gidoin, S.; Terrier, A.; Farron, A.; Büchler, P. Deep learning for the rapid automatic quantification and characterization of rotator cuff muscle degeneration from shoulder CT datasets. Eur. Radiol. 2021, 31, 181–190.

- Medina, G.; Buckless, C.G.; Thomasson, E.; Oh, L.S.; Torriani, M. Deep learning method for segmentation of rotator cuff muscles on MR images. Skelet. Radiol. 2021, 50, 683–692.

- Lee, K.; Kim, J.Y.; Lee, M.H.; Choi, C.-H.; Hwang, J.Y. Imbalanced Loss-Integrated Deep-Learning-Based Ultrasound Image Analysis for Diagnosis of Rotator-Cuff Tear. Sensors 2021, 21, 2214.

- Couteaux, V.; Si-Mohamed, S.; Nempont, O.; Lefevre, T.; Popoff, A.; Pizaine, G.; Villain, N.; Bloch, I.; Cotten, A.; Boussel, L. Automatic knee meniscus tear detection and orientation classification with Mask-RCNN. Diagn. Interv. Imaging 2019, 100, 235–242.

- Roblot, V.; Giret, Y.; Antoun, M.B.; Morillot, C.; Chassin, X.; Cotten, A.; Zerbib, J.; Fournier, L. Artificial intelligence to diagnose meniscus tears on MRI. Diagn. Interv. Imaging 2019, 100, 243–249.

- Chang, P.D.; Wong, T.T.; Rasiej, M.J. Deep Learning for Detection of Complete Anterior Cruciate Ligament Tear. J. Digit. Imaging 2019, 32, 980–986.

- Flannery, S.W.; Kiapour, A.M.; Edgar, D.J.; Murray, M.M.; Fleming, B.C. Automated magnetic resonance image segmentation of the anterior cruciate ligament. J. Orthop. Res. 2021, 39, 831–840.
