
Please note this is a comparison between Version 2 by Dean Liu and Version 1 by George A. Papakostas.

Agricultural machinery, such as tractors, is designed to operate for many hours over large areas and to perform repetitive tasks. The automatic navigation of agricultural vehicles can increase the degree of automation of cultivation tasks, improve navigation precision between crop structures, increase operational safety and reduce human labor and operating costs.

- computer vision

- smart farming

- self-steering tractors

- precision agriculture

- Agriculture 5.0

1. Evolution of Vision-Based Self-Steering Tractors

The rapid development of computers, electronic sensors and computing technologies in the 1980s motivated interest in autonomous vehicle guidance systems. A number of guidance technologies have been proposed, including ultrasonic, optical and mechanical ones [1,2]. Since the early 1990s, GPS, then a relatively new and accurate guidance sensor, has been widely used in numerous agricultural applications towards fully autonomous navigation [3]. However, the high cost of reliable GPS receivers made them prohibitive for many agricultural navigation applications. Alternatively, machine vision technologies based on local optical sensors can be used to guide agricultural vehicles when crop row structures are observable. The camera system can then determine the position of the machinery relative to the crop rows and guide the vehicle between them to perform field operations, while local features help to fine-tune the vehicle's trajectory on-site. This is the main reason why most existing studies on vision-guided tractors focus on structured fields characterized by crop rows. A number of image processing methodologies have been proposed to derive the guidance path from crop row images; yet only a limited number of vision-based guidance systems have been developed for real in-field applications [4].

Machine vision was first introduced for the automatic navigation of tractors and combines in the 1980s. In 1987, Reid and Searcy [5] developed a dynamic thresholding technique to extract path information from field images. Later the same year, the same authors [6] proposed a variation of their previous work, in which the guidance signal was computed by the same algorithm. Additionally, the crop-background distribution was modeled by a bimodal Gaussian distribution function, and run-length encoding was employed to locate the center points of row crop canopy shapes in thresholded images. Billingsley and Schoenfisch [7] designed a vision guidance system to steer a tractor relative to crop rows. The system could detect the end of a row and warn the driver to turn the tractor, after which the tractor could automatically acquire its track in the next row. The system was later refined as technology changed; however, the fundamental principles of the original research have remained the same [8]. Pinto and Reid [9] proposed determining heading angle and offset using principal component analysis in order to visually guide a tractor. The task was addressed as a pose recognition problem, where a pose was defined by the combination of heading angle and offset. In [10], Benson et al. developed a machine vision algorithm for crop edge detection. The algorithm was integrated into a tractor for automated harvesting to locate the field boundaries for guidance. In [11], the same authors automated maize harvesting with a vision-based combine steering system based on fuzzy logic.
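The segmentation step described for Reid and Searcy's second system (a bimodal Gaussian model of crop versus background, followed by run-length encoding of the thresholded image) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the bimodal grey-level distribution is fitted here with a plain expectation-maximization (EM) loop, the threshold is placed where the two weighted Gaussian densities are equal, the input is assumed to be a single-channel image in which crop pixels are brighter than the background, and the helper names (`fit_bimodal_gaussian`, `bimodal_threshold`, `run_centres`) are hypothetical.

```python
import numpy as np


def _log_density(x, w, mu, var):
    """Log of each weighted Gaussian component evaluated at every x (shape n x 2)."""
    x = np.asarray(x, dtype=float)[:, None]
    return np.log(w) - 0.5 * np.log(2 * np.pi * var) - (x - mu) ** 2 / (2 * var)


def fit_bimodal_gaussian(values, iters=50):
    """Fit a two-component 1D Gaussian mixture (background vs crop) with EM."""
    x = np.asarray(values, dtype=float).ravel()
    mu = np.percentile(x, [5, 95]).astype(float)  # start one component in each tail
    var = np.full(2, x.var() + 1e-6)
    w = np.array([0.5, 0.5])
    for _ in range(iters):
        # E-step: responsibilities, computed in log space to avoid underflow.
        log_p = _log_density(x, w, mu, var)
        log_p -= log_p.max(axis=1, keepdims=True)
        resp = np.exp(log_p)
        resp /= resp.sum(axis=1, keepdims=True)
        # M-step: re-estimate mixture weights, means and variances.
        nk = resp.sum(axis=0) + 1e-12
        w = nk / x.size
        mu = (resp * x[:, None]).sum(axis=0) / nk
        var = (resp * (x[:, None] - mu) ** 2).sum(axis=0) / nk + 1e-6
    return w, mu, var


def bimodal_threshold(w, mu, var, n=1001):
    """Grey level between the two means where the weighted densities are equal."""
    order = np.argsort(mu)
    w, mu, var = w[order], mu[order], var[order]
    grid = np.linspace(mu[0], mu[1], n)
    log_p = _log_density(grid, w, mu, var)
    return float(grid[np.argmin(np.abs(log_p[:, 0] - log_p[:, 1]))])


def run_centres(binary_row):
    """Run-length encode one thresholded row; return the centre column of each crop run."""
    row = np.asarray(binary_row, dtype=np.int8)
    edges = np.diff(np.concatenate(([0], row, [0])))
    starts = np.flatnonzero(edges == 1)   # first column of each run
    ends = np.flatnonzero(edges == -1)    # one past the last column of each run
    return [float((s + e - 1) / 2) for s, e in zip(starts, ends)]
```

Each thresholded image row yields one centre point per crop row crossing it; fitting a line through such points over many image rows would then give the row direction from which a guidance signal can be derived.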

In [12], three machine vision guidance algorithms were developed to mimic the perceptive process of a human operator for automated harvesting, both by day and at night, reporting accuracies equivalent to those of a GPS. In [13], a machine vision system was developed for an agricultural small-grain combine harvester. The proposed algorithm used a monochromatic camera to separate the uncut crop rows from the background and to calculate a guidance signal. Keicher and Seufert [14] developed an automatic guidance system for mechanical weeding in crop rows, based on a digital image processing system combined with a dedicated controller and a proportional hydraulic valve. Åstrand and Baerveldt performed extensive research on the vision-based guidance of tractors and developed robust image processing algorithms, integrated with agricultural tractors, to detect the position of crop rows [15]. Søgaard and Olsen [16] developed a method, based on color images of the field surface, to guide a tractor with respect to the crop rows. Láng [17] proposed an automatic steering control system for a plantation tractor based on the direction and distance of the camera relative to the plant stems. Kise et al. [18] presented a row-detection algorithm for a stereovision-based agricultural machinery guidance system; the algorithm performed stereo-image processing, extracted elevation maps and determined navigation points. In [19], Tillett and Hague proposed a computer vision guidance system for cereals mounted on a hoe tractor. In subsequent work [20], they presented a method for locating crop rows in images and tested it for the guidance of a mechanical hoe in winter wheat. Later, they extended the operating range of their tracking system to sugar beet [21]. Subramanian et al. [22] tested machine vision for the guidance of a tractor in a citrus grove alleyway and compared it to a laser radar; both approaches performed similarly in path tracking. An automatic steering rice transplanter based on image-processing self-guidance was presented by Misao and Karahashi [23]; the steering system used a video camera zoom system. Han et al. [24] developed a guidance directrix planner for controlling an agricultural vehicle; the directrix was converted into the desired steering wheel angle for navigation. In [25], Okamoto et al. presented an automatic guidance system based on a crop row sensor consisting of a charge-coupled device (CCD) camera and an image processing algorithm, implemented for the autonomous guidance of a weeding cultivator.

Autonomous tractor steering is the most established of the agricultural navigation technologies; self-steering tractors have been commercially available for about two decades [1,2]. Commercial tractor navigation techniques rely on a fusion of sensors rather than on machine vision alone; therefore, they are beyond the scope of this work.

Although vision-based tractor navigation systems have been developed, their commercial application is still in its early stages due to problems affecting their reliability, as reported subsequently. However, relevant research reveals the potential of vision-based automatic guidance in agricultural machinery; thus, the next decade is expected to be crucial for vision-based self-steering tractors to revolutionize the agricultural sector. A revolution is also expected from the newest trend in agriculture: agricultural robots, namely Agrobots, which claim to replace tractors. Agrobots can navigate autonomously in fields based on the same principles and sensors, and can work at the crop scale with precision and dexterity [26]. However, compared to a tractor, an Agrobot is a delicate, high-cost tool designed for specific tasks. In contrast, a tractor is very durable and sturdy, can operate under adverse weather conditions and is versatile, since it can be fitted with a multitude of tools (topping tools, lawnmowers, sprayers, etc.) for a variety of tasks. Therefore, tractors are key pieces of equipment for all farms, from small to commercial scale, and at present the aim is not to replace them but to upgrade their navigational autonomy.

2. Self-Steering Tractors’ System Architecture

In what follows, the basic modeling of self-steering tractors is presented. A vision-based system architecture is provided, and key elements essential for performing autonomous navigation operations are reviewed.

2.1. Basic Modeling

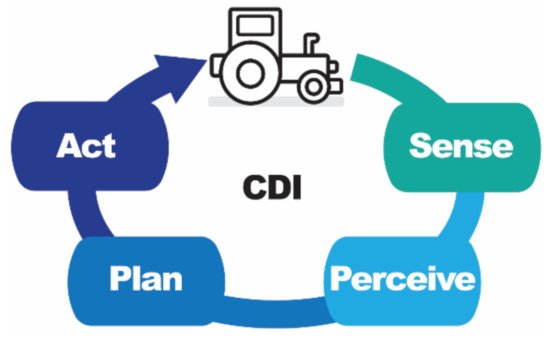

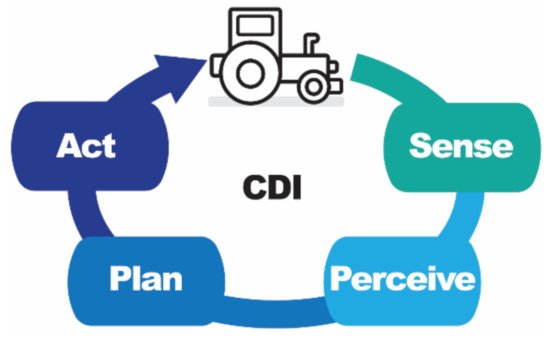

In order to develop autonomous driving machinery, a cyclic flow of information is required, known as the sense-perceive-plan-act (SPPA) cycle [27]. The SPPA cycle connects sensing, perceiving, planning and acting through a closed-loop relation: sensors collect (sense) physical information, the information is received and interpreted (perceive), feasible trajectories for navigation are selected (plan) and the tractor is controlled to follow the selected trajectory (act). Figure 4 illustrates the basic modeling of self-steering tractors.

Figure 4. A basic modeling of self-steering tractors.
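The SPPA cycle described above can be illustrated with a toy closed loop in which each stage is a separate function. Everything in this sketch is an assumption for illustration rather than a model taken from [27]: a straight crop row, a kinematic bicycle model of the tractor, a hypothetical camera ground resolution (`M_PER_PX`), and a lookahead-based heading planner with a proportional steering law.

```python
import math
import random
from dataclasses import dataclass

M_PER_PX = 0.005  # hypothetical ground resolution of the camera [m/pixel]


@dataclass
class TractorState:
    y: float        # lateral offset from the crop-row centre line [m]
    heading: float  # heading relative to the row direction [rad]


def sense(state, rng):
    """Sense: the camera reports the row offset in pixels and the row angle
    in radians, both corrupted by measurement noise."""
    offset_px = (state.y + rng.gauss(0.0, 0.02)) / M_PER_PX
    angle = state.heading + rng.gauss(0.0, 0.002)
    return offset_px, angle


def perceive(offset_px, angle):
    """Perceive: interpret the raw readings as a metric pose relative to the row."""
    return offset_px * M_PER_PX, angle


def plan(offset, heading, lookahead=3.0):
    """Plan: head for a point on the row centre line `lookahead` metres ahead
    and return the heading correction needed to reach it."""
    return math.atan2(-offset, lookahead) - heading


def act(state, heading_error, speed=1.0, wheelbase=2.5, dt=0.1, max_steer=0.6):
    """Act: steer proportionally to the heading error (clamped) and move the
    tractor one time step along a kinematic bicycle model."""
    steer = max(-max_steer, min(max_steer, heading_error))
    heading = state.heading + speed / wheelbase * math.tan(steer) * dt
    y = state.y + speed * math.sin(heading) * dt
    return TractorState(y, heading)


def run_sppa(initial, steps=300, seed=0):
    """Close the loop: sense, perceive, plan and act, repeated every time step."""
    rng = random.Random(seed)
    state = initial
    for _ in range(steps):
        offset, heading = perceive(*sense(state, rng))
        state = act(state, plan(offset, heading))
    return state
```

Starting from `TractorState(y=0.5, heading=0.0)`, the loop steers the simulated tractor back onto the row centre line within a few tens of metres. Replacing one stage, for example `sense` with a GNSS model or `plan` with a path planner, leaves the closed-loop structure unchanged, which is the point of the SPPA decomposition.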

In order to automate the guidance of tractors, two basic elements need to be combined: basic machinery and cognitive driving intelligence (CDI). CDI needs to be integrated into both the hardware and the software for the navigation and control of the platform. Navigation includes localization, mapping and path planning, while control covers the regulation of all steering parameters, e.g., steering rate and angle, speed, etc. CDI is made possible by sensory data from navigation and localization sensors, algorithms for path planning and software for steering control. The basic machinery refers to the tractor to which the CDI is applied. Based on the above and in relation to the SPPA cycle, the basic elements for the automated steering of tractors are sensors for object detection, localization and mapping [28,29,30], path planning algorithms [31], and path tracking and steering control [32].

2.2. Vision-Based Architecture

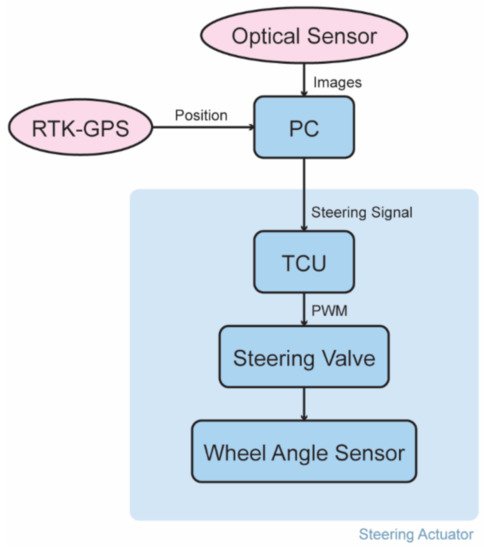

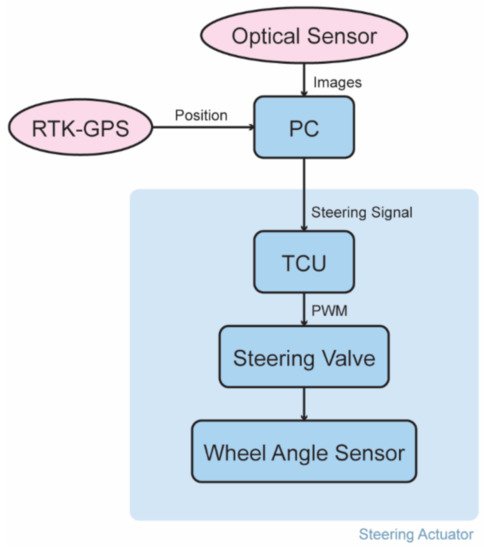

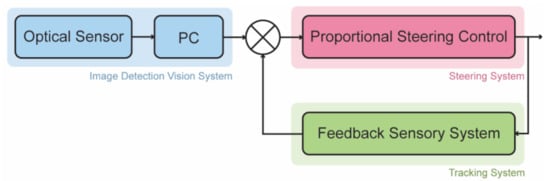

A fundamental vision-based architecture for self-steering tractors is presented in [18]. Figure 5 illustrates the flow diagram of the proposed vision-based navigation system.

Figure 5. A fundamental flow of the vision-based navigation systems of tractors.

The main sensor of the architecture is an optical sensor. The optical sensor captures images that are processed by a computer (PC), which also receives real-time kinematic GPS (RTK-GPS) information and extracts the steering signal. The steering signal is fed to the tractor control unit (TCU), which generates a pulse width modulation (PWM) signal to actuate the steering. The closed loop of the steering actuator consists of an electrohydraulic steering valve and a wheel angle sensor. The system prototype of Figure 5 was installed on a commercial tractor, and a series of self-steering tests were conducted to evaluate the system in the field. The reported root mean square (RMS) error of lateral deviation was less than 0.05 m on both straight and curved rows at speeds of up to 3.0 m/s.
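The inner steering loop described above (steering signal, TCU, PWM, electrohydraulic valve, wheel angle sensor) and the RMS accuracy metric can be sketched as follows. The reference does not specify the TCU's control law, so this is a hypothetical illustration: a proportional controller on wheel-angle error, a duty-cycle convention in which 0.5 means the valve is centred, a valve that turns the wheels at a rate proportional to the duty offset, and illustrative gains. The `rms` helper shows how an RMS lateral deviation figure is computed from sampled deviations.

```python
import math


def steering_pwm_duty(target_deg, measured_deg, kp=0.05, neutral=0.5):
    """Proportional TCU law: wheel-angle error -> PWM duty cycle in [0, 1].
    Hypothetical convention: 0.5 = valve centred, >0.5 steers one way, <0.5 the other."""
    duty = neutral + kp * (target_deg - measured_deg)
    return min(1.0, max(0.0, duty))


def settle_wheel_angle(target_deg, steps=200, dt=0.01, valve_rate=400.0):
    """Toy closed loop: the valve turns the wheels at valve_rate [deg/s] per unit
    of duty offset, and the wheel angle sensor feeds the new angle back each step."""
    angle = 0.0
    for _ in range(steps):
        duty = steering_pwm_duty(target_deg, angle)
        angle += valve_rate * (duty - 0.5) * dt
    return angle


def rms(deviations):
    """Root mean square of sampled lateral deviations [m]."""
    if not deviations:
        raise ValueError("need at least one deviation sample")
    return math.sqrt(sum(d * d for d in deviations) / len(deviations))
```

Clamping the duty cycle to [0, 1] models actuator saturation: a large steering request first slews the wheels at the valve's maximum rate, after which the proportional term settles the remaining error.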

2.3. Path Tracking Control System

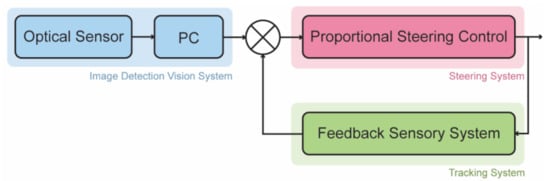

A path-tracking control system basically comprises three main subsystems, as depicted in Figure 6: image detection, tracking and steering control. Images acquired by an optical sensor, i.e., a camera, are sent to a computer for real-time processing. The center line of the crop row is identified, and the navigation path is extracted. The system uses a feedback sensor signal for the proportional control of the vehicle's electrohydraulic steering valve, enabling adaptive path tracking [33].

Figure 6. The basic design of a path tracking control system.
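The path-tracking step described above (identify the crop-row center line, extract the path, steer proportionally) can be sketched as below. Assumptions: crop-row center points have already been detected and projected from image to ground coordinates in the tractor frame (x forward, y to the left, in meters); the line is fitted by ordinary least squares; and the gains `k_offset` and `k_heading` and the 35° steering limit are hypothetical. The controller in [33] is based on binocular vision and may differ.

```python
import math


def fit_centre_line(points):
    """Least-squares line y = a + b*x through crop-row centre points given in
    the tractor frame (x forward, y left, metres). Returns (a, b)."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two centre points")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    if sxx == 0:
        raise ValueError("points must lie at different forward distances")
    sxy = sum((x - mx) * (y - my) for x, y in points)
    b = sxy / sxx
    return my - b * mx, b


def steering_command(points, k_offset=0.5, k_heading=1.0, max_steer=math.radians(35)):
    """Proportional steering angle (rad, positive = left) towards the fitted line."""
    a, b = fit_centre_line(points)
    lateral_offset = a            # row centre line's lateral position at the tractor (x = 0)
    heading_error = math.atan(b)  # row direction relative to the tractor heading
    steer = k_offset * lateral_offset + k_heading * heading_error
    return max(-max_steer, min(max_steer, steer))
```

For example, points on the line y = 0.2 + 0.1x give a 0.2 m offset and a heading error of atan(0.1), so the command turns the tractor left towards the row.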

2.4. Basic Sensors

Sensors record physical data from the environment and convert them into digital measurements that can be processed by the system. Determining the exact position of sensors on a tractor requires knowledge of both the operating characteristics of each sensor (field of view, resolution, range, etc.) and the geometry of the tractor, so that each sensor, placed in an appropriate position onboard the vehicle, can perform at its best [34]. Navigation sensors can be either object sensors or pose sensors. Object sensors are used for the detection and identification of objects in the surrounding environment, while pose sensors are used for the localization of the tractor. Both categories can include active sensors, i.e., sensors that emit energy in order to take measurements, such as LiDAR, radar or ultrasonic sensors, and passive sensors, e.g., optical sensors, GNSS receivers, etc.
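The two classification axes above, object versus pose sensing and active versus passive operation, are independent of each other, which a small data structure makes explicit. The role assigned to each sensor below is an assumption about its typical primary use (cameras and LiDAR, for instance, are also used for localization), and classifying the IMU as passive, because it emits no energy, is likewise an assumption based on the definition above. The helper `select` is hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    OBJECT = "object"  # detects/identifies objects around the tractor
    POSE = "pose"      # localizes the tractor itself


class Energy(Enum):
    ACTIVE = "active"    # emits its own energy and measures the return
    PASSIVE = "passive"  # only receives energy/signals from the environment


@dataclass(frozen=True)
class Sensor:
    name: str
    role: Role
    energy: Energy


# Typical primary role of each sensor (an assumption; roles can overlap in practice).
SENSORS = [
    Sensor("camera", Role.OBJECT, Energy.PASSIVE),
    Sensor("LiDAR", Role.OBJECT, Energy.ACTIVE),
    Sensor("radar", Role.OBJECT, Energy.ACTIVE),
    Sensor("ultrasonic", Role.OBJECT, Energy.ACTIVE),
    Sensor("GNSS", Role.POSE, Energy.PASSIVE),
    Sensor("IMU", Role.POSE, Energy.PASSIVE),
]


def select(role=None, energy=None):
    """Names of the sensors matching the requested role and/or energy type."""
    return [s.name for s in SENSORS
            if (role is None or s.role is role)
            and (energy is None or s.energy is energy)]
```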

Sensory fusion can enhance navigation accuracy. The selection of appropriate sensors depends on a number of factors, such as the sampling rate, the field of view, the reported accuracy, the range, the cost and the overall complexity of the final system. A vision-based system usually combines data from cameras with data acquired from LiDAR and radar scanners, ultrasonic sensors, GPS and an IMU.
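One standard way to see why fusion can improve accuracy is inverse-variance weighting of independent, unbiased estimates of the same quantity. It is shown here as a generic illustration, not as the fusion method of any system cited in this entry: the fused variance is 1 / Σ(1/σᵢ²), which is never larger than the smallest input variance. The readings in the example are hypothetical.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion.
    estimates: iterable of (value, variance) pairs from independent, unbiased
    sensors measuring the same quantity. Returns (fused_value, fused_variance)."""
    pairs = list(estimates)
    if not pairs or any(var <= 0 for _, var in pairs):
        raise ValueError("need at least one estimate, each with positive variance")
    weights = [1.0 / var for _, var in pairs]  # more precise sensors weigh more
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, pairs)) / total
    return value, 1.0 / total


# Hypothetical lateral-offset readings [m]: a GNSS fix (variance 0.04 m^2)
# and a camera estimate (variance 0.01 m^2).
offset, variance = fuse([(0.30, 0.04), (0.20, 0.01)])
```

Here the fused offset is 0.22 m with a variance of 0.008 m², lower than the variance of the more precise camera estimate alone.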

Cameras capture 2D images by collecting light reflected from 3D objects. Images from different perspectives can be combined to reconstruct the geometry of the 3D navigation scene. Image acquisition, however, is affected by noise caused by dynamically changing environmental conditions such as weather and lighting [35]. Thus, a fusion of sensors is required. LiDAR sensors can provide accurate models of the 3D navigation scene and are therefore used in autonomous navigation applications for depth perception. A LiDAR sensor emits laser light, which travels until it bounces off objects and returns to the sensor. The system measures the travel time of the light to calculate distance, resulting in an elevation map of the surrounding environment. Radars are also used for autonomous driving applications [36]. Radars transmit an electromagnetic wave and analyze its reflections, deriving measurements such as range and radial velocity. Similarly to radars, ultrasonic sensors calculate the distance between the source and an object by measuring the time between the transmission of an ultrasonic signal and the reception of its echo. Ultrasonic sensors are commonly used to autonomously locate and navigate a vehicle [37]. GPS and IMU are additional widely used sensors for autonomous navigation systems. GPS, a global navigation satellite system (GNSS), can provide geographic coordinates and time information to a receiver anywhere on Earth, as long as there is an unobstructed line of sight to at least four GPS satellites. The main disadvantage of GPS is that its accuracy can degrade when the signals are blocked or disturbed, for example by buildings, trees or intense atmospheric conditions. Therefore, GPS is usually fused with IMU measurements to ensure signal coverage and precise position tracking. An IMU combines multiple sensors, such as a gyroscope, an accelerometer, a digital compass, a magnetometer, etc. When fused with a high-speed GNSS receiver through sophisticated algorithms, an IMU can deliver reliable navigation and orientation information.
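Two of the principles above can be made concrete. Time-of-flight ranging (LiDAR, ultrasonic) halves the round-trip path of the pulse, and GPS/IMU fusion is commonly performed with a Kalman filter, in which IMU accelerations propagate the state between fixes and each GPS fix corrects it. The sketch below is a minimal one-dimensional illustration with hypothetical noise levels, update rates and a simulated GPS outage (e.g., under tree cover); it is not a production navigation filter, and the names `tof_distance`, `GpsImuKalman1D` and `simulate` are hypothetical.

```python
import random

import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # m/s (LiDAR)
SPEED_OF_SOUND = 343.0          # m/s in air at about 20 °C (ultrasonic; assumption)


def tof_distance(round_trip_s, wave_speed):
    """Time-of-flight range: the pulse goes out and back, so halve the path."""
    return wave_speed * round_trip_s / 2.0


class GpsImuKalman1D:
    """1D Kalman filter with state [position (m), velocity (m/s)].
    IMU acceleration drives the prediction; GPS position fixes correct it."""

    def __init__(self, dt, accel_var, gps_var):
        self.x = np.zeros(2)
        self.P = np.eye(2) * 10.0                      # large initial uncertainty
        self.F = np.array([[1.0, dt], [0.0, 1.0]])     # constant-velocity transition
        self.B = np.array([0.5 * dt**2, dt])           # effect of measured acceleration
        self.Q = np.outer(self.B, self.B) * accel_var  # process noise from IMU noise
        self.H = np.array([[1.0, 0.0]])                # GPS observes position only
        self.R = np.array([[gps_var]])

    def predict(self, accel):
        self.x = self.F @ self.x + self.B * accel
        self.P = self.F @ self.P @ self.F.T + self.Q

    def update(self, gps_pos):
        innovation = gps_pos - (self.H @ self.x)[0]
        S = self.H @ self.P @ self.H.T + self.R        # innovation covariance (1x1)
        K = self.P @ self.H.T / S[0, 0]                # Kalman gain (2x1)
        self.x = self.x + K[:, 0] * innovation
        self.P = (np.eye(2) - K @ self.H) @ self.P


def simulate(steps=400, dt=0.1, seed=1):
    """Constant 0.2 m/s^2 acceleration, IMU at 10 Hz, GPS at 1 Hz with a
    hypothetical outage between t = 20 s and t = 30 s."""
    rng = random.Random(seed)
    kf = GpsImuKalman1D(dt, accel_var=0.01, gps_var=0.25)
    true_pos = true_vel = 0.0
    accel = 0.2
    for k in range(1, steps + 1):
        true_pos += true_vel * dt + 0.5 * accel * dt**2
        true_vel += accel * dt
        kf.predict(accel + rng.gauss(0.0, 0.1))        # noisy IMU reading
        if k % 10 == 0 and not 200 <= k < 300:         # GPS fix available?
            kf.update(true_pos + rng.gauss(0.0, 0.5))  # noisy GPS fix
    return true_pos, float(kf.x[0])
```

During the outage the filter keeps predicting from the IMU alone, so its position error grows slowly; once GPS fixes return, the update step pulls the estimate back towards the measured position.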

References

- Thomasson, J.A.; Baillie, C.P.; Antille, D.L.; Lobsey, C.R.; McCarthy, C.L. Autonomous Technologies in Agricultural Equipment: A Review of the State of the Art. In Proceedings of the 2019 Agricultural Equipment Technology Conference, Louisville, KY, USA, 11–13 February 2019; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2019; pp. 1–17.

- Baillie, C.P.; Lobsey, C.R.; Antille, D.L.; McCarthy, C.L.; Thomasson, J.A. A review of the state of the art in agricultural automation. Part III: Agricultural machinery navigation systems. In Proceedings of the 2018 Detroit, Michigan, 29 July–1 August 2018; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2018; p. 1.

- Schmidt, G.T. GPS Based Navigation Systems in Difficult Environments. Gyroscopy Navig. 2019, 10, 41–53.

- Wilson, J. Guidance of agricultural vehicles—A historical perspective. Comput. Electron. Agric. 2000, 25, 3–9.

- Reid, J.; Searcy, S. Vision-based guidance of an agriculture tractor. IEEE Control Syst. Mag. 1987, 7, 39–43.

- Reid, J.F.; Searcy, S.W. Automatic Tractor Guidance with Computer Vision. In SAE Technical Papers; SAE International: Warrendale, PA, USA, 1987.

- Billingsley, J.; Schoenfisch, M. Vision-guidance of agricultural vehicles. Auton. Robots 1995, 2, 65–76.

- Billingsley, J.; Schoenfisch, M. The successful development of a vision guidance system for agriculture. Comput. Electron. Agric. 1997, 16, 147–163.

- Pinto, F.A.C.; Reid, J.F. Heading angle and offset determination using principal component analysis. In Proceedings of the ASAE Paper, Disney’s Coronado Springs, Orlando, FL, USA, 12–16 July 1998; p. 983113.

- Benson, E.R.; Reid, J.F.; Zhang, Q.; Pinto, F.A.C. An adaptive fuzzy crop edge detection method for machine vision. In Proceedings of the 2000 ASAE Annual International Meeting, Technical Papers: Engineering Solutions for a New Century, Milwaukee, WI, USA, 9–12 July 2000; pp. 49085–49659.

- Benson, E.R.; Reid, J.F.; Zhang, Q. Development of an automated combine guidance system. In Proceedings of the 2000 ASAE Annual International Meeting, Technical Papers: Engineering Solutions for a New Century, Milwaukee, WI, USA, 9–12 July 2000; pp. 1–11.

- Benson, E.R.; Reid, J.F.; Zhang, Q. Machine Vision Based Steering System for Agricultural Combines. In Proceedings of the 2001 Sacramento, Sacramento, CA, USA, 29 July–1 August 2001; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2001.

- Benson, E.R.; Reid, J.F.; Zhang, Q. Machine vision-based guidance system for an agricultural small-grain harvester. Trans. ASAE 2003, 46, 1255–1264.

- Keicher, R.; Seufert, H. Automatic guidance for agricultural vehicles in Europe. Comput. Electron. Agric. 2000, 25, 169–194.

- Åstrand, B.; Baerveldt, A.-J. A vision based row-following system for agricultural field machinery. Mechatronics 2005, 15, 251–269.

- Søgaard, H.T.; Olsen, H.J. Crop row detection for cereal grain. In Precision Agriculture ’99; Sheffield Academic Press: Sheffield, UK, 1999; pp. 181–190. ISBN 1841270423.

- Láng, Z. Image processing based automatic steering control in plantation. VDI Ber. 1998, 1449, 93–98.

- Kise, M.; Zhang, Q.; Rovira Más, F. A Stereovision-Based Crop Row Detection Method for Tractor-automated Guidance. Biosyst. Eng. 2005, 90, 357–367.

- Tillett, N.D.; Hague, T. Computer-Vision-based Hoe Guidance for Cereals—An Initial Trial. J. Agric. Eng. Res. 1999, 74, 225–236.

- Hague, T.; Tillett, N.D. A bandpass filter-based approach to crop row location and tracking. Mechatronics 2001, 11, 1–12.

- Tillett, N.D.; Hague, T.; Miles, S.J. Inter-row vision guidance for mechanical weed control in sugar beet. Comput. Electron. Agric. 2002, 33, 163–177.

- Subramanian, V.; Burks, T.F.; Arroyo, A.A. Development of machine vision and laser radar based autonomous vehicle guidance systems for citrus grove navigation. Comput. Electron. Agric. 2006, 53, 130–143.

- Misao, Y.; Karahashi, M. An image processing based automatic steering rice transplanter (II). In Proceedings of the 2000 ASAE Annual International Meeting, Technical Papers: Engineering Solutions for a New Century, Milwaukee, WI, USA, 9–12 July 2000; pp. 1–5.

- Han, S.; Dickson, M.A.; Ni, B.; Reid, J.F.; Zhang, Q. A Robust Procedure to Obtain a Guidance Directrix for Vision-Based Vehicle Guidance Systems. In Proceedings of the Automation Technology for Off-Road Equipment Proceedings of the 2002 Conference, Chicago, IL, USA, 26–27 July 2002; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2013; p. 317.

- Okamoto, H.; Hamada, K.; Kataoka, T.; Terawaki, M.; Hata, S. Automatic Guidance System with Crop Row Sensor. In Proceedings of the Automation Technology for Off-Road Equipment Proceedings of the 2002 Conference, Chicago, IL, USA, 26–27 July 2002; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2013; p. 307.

- Sparrow, R.; Howard, M. Robots in agriculture: Prospects, impacts, ethics, and policy. Precis. Agric. 2021, 22, 818–833.

- Rowduru, S.; Kumar, N.; Kumar, A. A critical review on automation of steering mechanism of load haul dump machine. Proc. Inst. Mech. Eng. Part I J. Syst. Control Eng. 2020, 234, 160–182.

- Eddine Hadji, S.; Kazi, S.; Howe Hing, T.; Mohamed Ali, M.S. A Review: Simultaneous Localization and Mapping Algorithms. J. Teknol. 2015, 73.

- Rodríguez Flórez, S.A.; Frémont, V.; Bonnifait, P.; Cherfaoui, V. Multi-modal object detection and localization for high integrity driving assistance. Mach. Vis. Appl. 2014, 25, 583–598.

- Jha, H.; Lodhi, V.; Chakravarty, D. Object Detection and Identification Using Vision and Radar Data Fusion System for Ground-Based Navigation. In Proceedings of the 2019 6th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 7–8 March 2019; pp. 590–593.

- Karur, K.; Sharma, N.; Dharmatti, C.; Siegel, J.E. A Survey of Path Planning Algorithms for Mobile Robots. Vehicles 2021, 3, 448–468.

- Ge, J.; Pei, H.; Yao, D.; Zhang, Y. A robust path tracking algorithm for connected and automated vehicles under i-VICS. Transp. Res. Interdiscip. Perspect. 2021, 9, 100314.

- Zhang, S.; Wang, Y.; Zhu, Z.; Li, Z.; Du, Y.; Mao, E. Tractor path tracking control based on binocular vision. Inf. Process. Agric. 2018, 5, 422–432.

- Pajares, G.; García-Santillán, I.; Campos, Y.; Montalvo, M.; Guerrero, J.; Emmi, L.; Romeo, J.; Guijarro, M.; Gonzalez-de-Santos, P. Machine-Vision Systems Selection for Agricultural Vehicles: A Guide. J. Imaging 2016, 2, 34.

- Zhai, Z.; Zhu, Z.; Du, Y.; Song, Z.; Mao, E. Multi-crop-row detection algorithm based on binocular vision. Biosyst. Eng. 2016, 150, 89–103.

- Schouten, G.; Steckel, J. A Biomimetic Radar System for Autonomous Navigation. IEEE Trans. Robot. 2019, 35, 539–548.

- Wang, R.; Chen, L.; Wang, J.; Zhang, P.; Tan, Q.; Pan, D. Research on autonomous navigation of mobile robot based on multi ultrasonic sensor fusion. In Proceedings of the 2018 IEEE 4th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 14–16 December 2018; pp. 720–725.
