Please note this is a comparison between Version 1 by Bingding Huang and Version 3 by Catherine Yang.

The accurate segmentation of lung nodules is challenging because of their small size, especially when they lie at the edge of the lung or near blood vessels. Approaches to lung nodule segmentation are broad and vary in terms of architecture, image pre-processing, and training strategy.

- lung nodule segmentation

- lung cancer

- lung nodule classification

1. General Neural Network Architecture

Most published studies combine a conventional convolutional neural network (CNN) architecture with additional neural network blocks for lung nodule segmentation. U-Net and the fully convolutional network (FCN) are two basic structures that are frequently used. Numerous works have shown that CNN architectures can significantly improve the performance of lung segmentation [1][2][3][4][5][6][7][8][53,54,55,56,57,58,59,60], especially semantic segmentation networks such as FCN [9][61] and U-Net [10][62]. Such networks implement two key steps. First, feature maps are extracted from the image through a down-sampling process that filters out unnecessary information while retaining the important information. Second, the resulting feature maps are enlarged through an up-sampling process to recover a higher-resolution output image.
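The two steps can be illustrated with a toy example. The sketch below is not taken from any of the cited networks: it assumes 2 × 2 max pooling for down-sampling and nearest-neighbor repetition for up-sampling, whereas real FCN and U-Net models also use learned convolutions. The function names `max_pool_2x2` and `upsample_nearest_2x` are hypothetical.

```python
def max_pool_2x2(image):
    """Down-sample a 2D grid by keeping the maximum of each 2x2 block."""
    rows, cols = len(image), len(image[0])
    return [
        [max(image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
         for c in range(0, cols - 1, 2)]
        for r in range(0, rows - 1, 2)
    ]


def upsample_nearest_2x(features):
    """Up-sample a 2D grid by repeating each value in a 2x2 block."""
    out = []
    for row in features:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


# Toy 4x4 "image" (values are arbitrary intensities).
image = [
    [0, 1, 0, 0],
    [2, 9, 0, 1],
    [0, 0, 3, 0],
    [1, 0, 0, 4],
]

pooled = max_pool_2x2(image)            # 2x2 feature map: [[9, 1], [1, 4]]
restored = upsample_nearest_2x(pooled)  # back to 4x4, but coarser than the input
```

The restored grid has the original size but has lost fine detail, which is one reason why U-Net-style models add skip connections between the down-sampling and up-sampling paths.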

Inspired by these networks, many segmentation studies have modified and fine-tuned their models by building on the basic CNN architecture or by changing or adding blocks to it. Huang et al. [5][57] proposed a system with four major modules: candidate nodule detection with Faster R-CNN (faster region-based CNN), candidate merging, false-positive (FP) reduction using a CNN, and nodule segmentation using a customized FCN. Their model was trained and validated on the LIDC-IDRI dataset and achieved an average Dice similarity coefficient (DSC) of 0.793. Tong et al. [7][59] used the U-Net architecture for lung nodule segmentation and improved network performance by combining the U-Net with a residual block. The lung parenchyma was extracted using a morphological method, and the image was cropped to 64 × 64 pixels as the input to the improved network. The proposed model was trained and validated on the LUNA16 dataset and achieved a DSC of 0.736. Usman et al. [4][56] proposed an algorithm that dynamically modifies the region of interest (ROI). This approach used Deep Res-UNet as the foundation for locating the input lung nodule volumes and improving lung nodule segmentation. Their method was divided into two stages. In the first stage, the Deep Res-UNet was trained on, and used for prediction along, the axial axis of the CT images. The second stage then focused on the new ROI in the CT image and used the Deep Res-UNet architecture to train the network along the coronal and sagittal axes. The second stage also integrated the prediction results from the first stage into the final 3D results. Ultimately, the proposed method achieved an average DSC of 87.55%, a sensitivity (SEN) of 91.62%, and a positive predictive value (PPV) of 88.24%.
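The three metrics reported above have standard definitions in terms of true positives (TP), false positives (FP), and false negatives (FN): DSC = 2TP / (2TP + FP + FN), SEN = TP / (TP + FN), and PPV = TP / (TP + FP). The following minimal sketch computes them for flattened binary masks. The helper names and the toy masks are illustrative assumptions, not code or data from the cited studies.

```python
def confusion_counts(pred, truth):
    """Count true positives, false positives, and false negatives."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    return tp, fp, fn


def dice(pred, truth):
    """Dice similarity coefficient; defined as 1.0 when both masks are empty."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def sensitivity(pred, truth):
    """Fraction of nodule voxels that the prediction recovers."""
    tp, _, fn = confusion_counts(pred, truth)
    return tp / (tp + fn) if (tp + fn) else 1.0


def ppv(pred, truth):
    """Fraction of predicted voxels that are truly nodule."""
    tp, fp, _ = confusion_counts(pred, truth)
    return tp / (tp + fp) if (tp + fp) else 1.0


# Toy flattened masks: 1 = nodule voxel, 0 = background.
truth = [0, 1, 1, 1, 1, 0, 0, 0]
pred = [0, 0, 1, 1, 1, 1, 0, 0]

dsc_value = dice(pred, truth)         # 3 TP, 1 FP, 1 FN -> 6 / 8
sen_value = sensitivity(pred, truth)  # 3 / 4
ppv_value = ppv(pred, truth)          # 3 / 4
```

Published results are usually averages of these per-nodule scores over a test set, so the reported figures depend on the evaluation protocol as well as on the model.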

Zhao et al. [8][60] proposed a patch-based 3D U-Net combined with a contextual CNN to automatically segment and classify lung nodules. A 3D U-Net architecture was first used to segment the lung nodules, generative adversarial networks (GANs) [11][63] were then used to enhance the 3D U-Net, and, finally, the contextual CNN was used to reduce FPs in the lung nodule segmentation and to improve the benign and malignant classification. This method achieved good results in segmenting lung nodules and classifying nodule types. Kumar et al. [3][55] used V-Net [12][64] for their lung nodule segmentation model. The proposed architecture adopted a 3D CNN model that used only convolutional layers and omitted the pooling layers. This model was evaluated on the LUNA16 dataset and achieved a DSC of 0.9615. Pezzano et al. [13][65] proposed a lung nodule segmentation network that added Multiple Convolutional Layer (MCL) blocks to the U-Net. Rather than being an end-to-end network, the proposed architecture was divided into two phases. In the first phase, the trained model produced the initial results; in the second phase, a morphological method was used for post-processing in order to highlight the nodules at the lung edges. The proposed architecture was trained and validated on the LIDC-IDRI database and achieved a sensitivity of 85.9%, an intersection over union (IoU) of 76.7%, and an F1-score of 86.1%. Keetha et al. [2][54] proposed a resource-efficient U-Det architecture by integrating U-Net with Bi-FPN (implemented in Efficient-Det). The proposed network was trained and tested on the LUNA16 dataset and achieved an average DSC of 82.82%, an average SEN of 92.25%, and an average PPV of 78.92%.
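IoU and the F1-score are both overlap measures. For a single pair of binary masks, the F1-score coincides with the DSC, and IoU = F1 / (2 − F1). A minimal sketch follows, assuming masks are represented as sets of pixel coordinates; the function name `overlap_scores` and the toy masks are hypothetical.

```python
def overlap_scores(pred_pixels, truth_pixels):
    """Return (IoU, F1) for two masks given as collections of pixel coordinates."""
    pred, truth = set(pred_pixels), set(truth_pixels)
    intersection = len(pred & truth)
    union = len(pred | truth)
    total = len(pred) + len(truth)
    iou = intersection / union if union else 1.0
    f1 = 2 * intersection / total if total else 1.0
    return iou, f1


# Toy masks: a 4x4 ground-truth square and a prediction shifted down by one row.
truth = {(r, c) for r in range(2, 6) for c in range(2, 6)}
pred = {(r, c) for r in range(3, 7) for c in range(2, 6)}

iou, f1 = overlap_scores(pred, truth)  # 12 shared pixels, 20 in the union
iou_from_f1 = f1 / (2 - f1)            # same value as iou for a single mask pair
```

The identity holds for each mask pair individually. Averages of IoU and F1 over many nodules need not satisfy it exactly, because the conversion is nonlinear.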

2. Multiview CNN Architecture

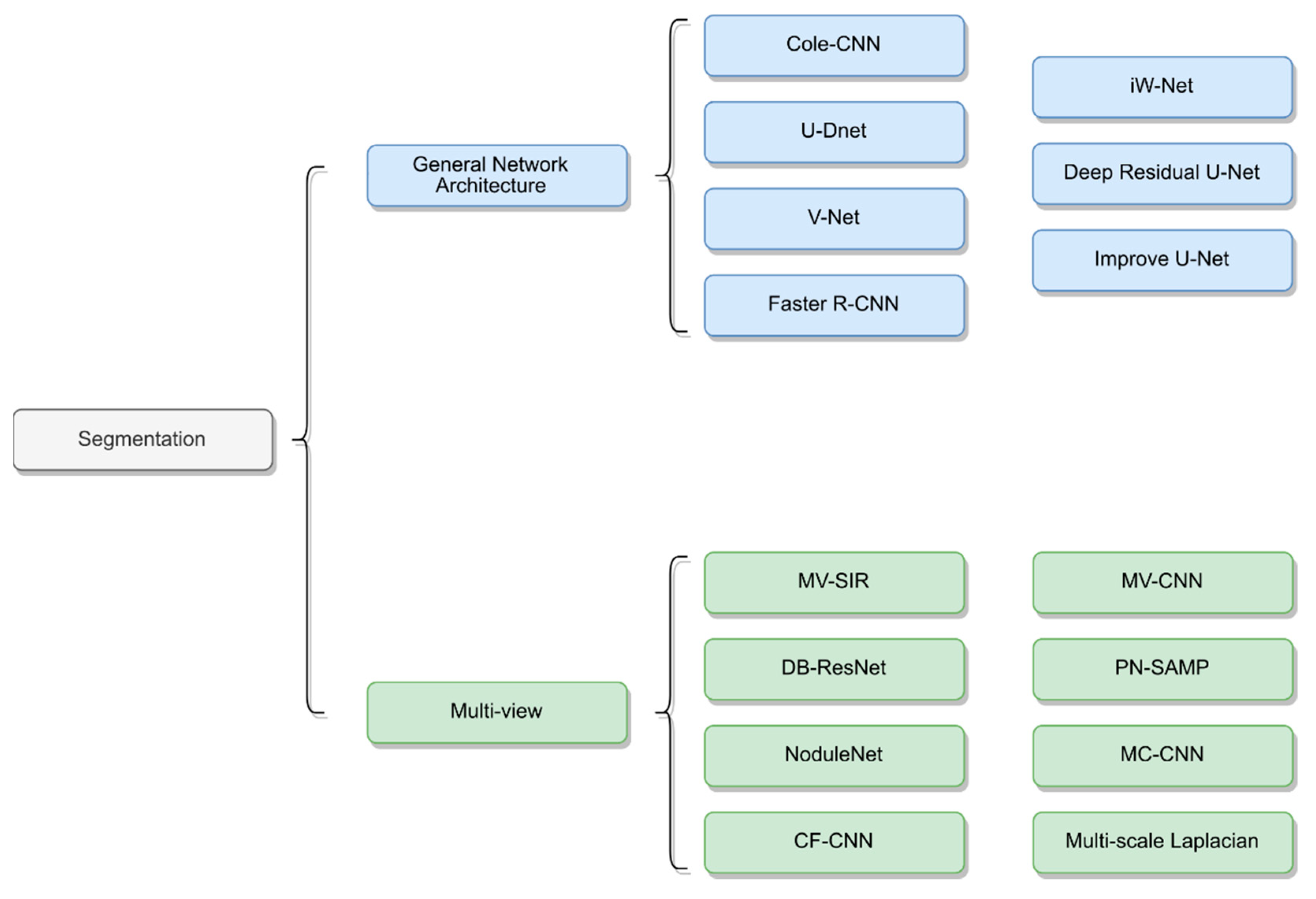

Many research studies have proposed new architectures by taking multiple views of lung nodules as inputs to neural networks to achieve improved results [1][14][15][16][17][18]. These segmentation methods are primarily based on CNN networks and combine multiscale or multiview methods to train the neural networks. The structures of the different networks are depicted in

Many research studies have proposed new architectures by taking multiple views of lung nodules as inputs to neural networks to achieve improved results [53,66,67,68,69,70]. These segmentation methods are primarily based on CNN networks and combine multiscale or multiview methods to train the neural networks. The structures of the different networks are depicted in

Figure 1.

2.

Figure 12.

Network architecture of lung nodule segmentation.

For instance, Zhang et al. [19][71] used a conventional method for nodule segmentation in which a multiscale Laplacian of Gaussian filter detects the nodules. The proposed method was evaluated on the LUNA16 dataset and achieved a detection score of 0.947. Similarly, Shen et al. [18][70] considered different scales at the feature level in a single network and proposed a multicrop CNN (MC-CNN) that automatically extracts salient nodule information by employing a novel multicrop pooling strategy. Dong et al. [15][67] proposed a multiview secondary input residual (MV-SIR) CNN model for 3D lung nodule segmentation. Their approach achieved good results, with an average DSC of 0.926 and a PPV of 0.936.
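The idea behind a multiscale Laplacian of Gaussian (LoG) detector can be sketched in a few lines. The code below is a generic textbook illustration, not the method of Zhang et al.: it assumes a 2D image, a scale-normalized LoG kernel truncated at three standard deviations, and a synthetic bright disk standing in for a nodule. All function names are hypothetical.

```python
import math


def log_kernel(sigma):
    """Scale-normalized 2D Laplacian-of-Gaussian kernel, truncated at 3 sigma."""
    radius = int(math.ceil(3 * sigma))
    kernel = {}
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            u = (dx * dx + dy * dy) / (2.0 * sigma * sigma)
            # LoG = (u - 1) * exp(-u) / (pi * sigma^4); times sigma^2 to normalize scale.
            kernel[(dy, dx)] = (u - 1.0) * math.exp(-u) / (math.pi * sigma ** 2)
    return kernel


def log_response(image, row, col, kernel):
    """Filter response at one pixel; pixels outside the image count as 0."""
    rows, cols = len(image), len(image[0])
    total = 0.0
    for (dy, dx), weight in kernel.items():
        r, c = row + dy, col + dx
        if 0 <= r < rows and 0 <= c < cols:
            total += weight * image[r][c]
    return total


def detect_bright_blob(image, sigmas):
    """Return (row, col, sigma) with the most negative normalized LoG response."""
    best = None
    for sigma in sigmas:
        kernel = log_kernel(sigma)
        for row in range(len(image)):
            for col in range(len(image[0])):
                response = log_response(image, row, col, kernel)
                if best is None or response < best[0]:
                    best = (response, row, col, sigma)
    return best[1], best[2], best[3]


# Synthetic 21x21 image containing one bright disk of radius 3 at the center.
size, center, blob_radius = 21, 10, 3
image = [
    [1.0 if (r - center) ** 2 + (c - center) ** 2 <= blob_radius ** 2 else 0.0
     for c in range(size)]
    for r in range(size)
]

row, col, sigma = detect_bright_blob(image, sigmas=[1.0, 2.0, 3.0])
```

For a bright disk of radius R, the normalized response at the center is strongest when sigma is close to R / √2, so the selected scale also gives a rough estimate of the blob size.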

Cao et al. [20][72] constructed a dual-branch residual network (DB-ResNet) and obtained improved lung nodule segmentation results, with an average SEN of 89.35% and an average DSC of 82.74%. The proposed method employed two newly integrated schemes. First, it used the multiview and multiscale features of different nodules in CT images; second, it combined the intensity features with a CNN. Recently, Wu et al. [1][53] developed an interpretable multitask CNN, joint learning for pulmonary nodule segmentation, attributes, and malignancy prediction (PN-SAMP), based on the U-Net architecture. The model achieved an average DSC of 73.89% and an average SEN of 97.58%. Finally, Wang et al. [17][69] proposed a central-focused CNN (CF-CNN) to segment lung nodules. Their architecture comprised two stages that used the same neural network to extract features and then merge those features. In addition, the authors used central pooling to preserve more of the features of the lung nodules. The model was tested on the LIDC-IDRI dataset and achieved an average DSC of 82.15% and an average SEN of 92.75%.
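Multiview inputs are typically built from 2D slices taken through the same voxel along different anatomical planes. The sketch below shows one way to extract the three orthogonal views from a 3D volume. It assumes the volume is stored as nested lists indexed `volume[z][y][x]`, with z as the axial slice index, and is not the sampling scheme of any specific cited paper.

```python
def orthogonal_views(volume, z, y, x):
    """Return the axial, coronal, and sagittal slices through voxel (z, y, x).

    Assumes volume[z][y][x] indexing, with z as the axial slice index.
    """
    axial = [row[:] for row in volume[z]]                       # fixed z: y by x
    coronal = [plane[y][:] for plane in volume]                 # fixed y: z by x
    sagittal = [[row[x] for row in plane] for plane in volume]  # fixed x: z by y
    return axial, coronal, sagittal


# Toy volume of depth 2, height 3, width 4; each value encodes its own coordinates.
volume = [
    [[100 * z + 10 * y + x for x in range(4)] for y in range(3)]
    for z in range(2)
]

axial, coronal, sagittal = orthogonal_views(volume, z=1, y=2, x=3)
```

In a multiview network, each of the three slices (usually cropped to a patch around the voxel) would feed a separate branch or input channel.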

3. Segmentation Based on Lung Nodule Type

Pulmonary nodules have different types, shapes, and clinical features. Thus, the procedures used to detect nodules, as well as the challenges associated with those procedures, vary from case to case. This section summarizes some of the works that have considered variations based on nodule type. Table 1 lists the related methods and summarizes their key highlights.

Generally, lung nodules that are close to blood vessels and the pleura are the most challenging to detect. Thus, increasing the detail of the nodule boundaries is central to all models. Pezzano et al. [13][65] proposed a network structure based on U-Net to segment lung nodules. The authors developed the multiple convolutional layers (MCL) module to fine-tune the details of the nodule boundaries and post-processed the nodule segmentation results, using morphological methods to strengthen the detail of the edge nodules. Dong et al. [15][67], meanwhile, proposed a model that incorporated features of voxel heterogeneity (VH) and shape heterogeneity (SH). VH reflects differences in gray voxel values, while SH reflects characteristics of the nodule shape. The authors found that VH helps the network learn gray-level information, whereas SH helps it learn boundary information.

In addition, for juxta-pleural and small nodules, Cao et al. [20][72] proposed the DB-ResNet and presented a central intensity-pooling layer (CIP), which preserved the intensity features centered on the target voxel rather than the intensity information. For well-circumscribed nodules, Huang et al. [5][57] proposed a system that included segmentation and classification. In the segmentation step, the authors demonstrated that their model achieved segmentation performance superior to that of other models.

However, the above methods exhibited slightly worse performance for juxta-pleural, juxta-vascular, and ground-glass opacity nodules. To improve performance on these types of nodules, Al-Shabi et al. [21][73] proposed the use of residual blocks with a 3 × 3 kernel size to extract local features and non-local blocks to extract global features. The proposed method avoided a large number of parameters and still performed to a high standard. The LIDC-IDRI dataset was used for training and testing. The proposed model achieved outstanding results compared with DenseNet and ResNet in terms of transfer learning, scoring an AUC of 95.62%. Recently, Aresta et al. [6][58] constructed iW-Net, which combined nodule segmentation with elements of user intervention. The proposed architecture performed well on large nodules without any user intervention. However, when user intervention was incorporated, the quality of the nodule segmentation in non-solid and sub-solid abnormalities improved significantly.

Table 1. Deep learning-based lung nodule segmentation architectures and their key information.

| Study | Year | Architecture | Dataset | Approach | Performance |
|---|---|---|---|---|---|
| Pezzano et al. [13][65] | 2021 | CoLe-CNN | LIDC-IDRI | 2D; based on U-Net; Inception-v4 architecture; mean square error function | F1 = 86.1; IoU = 76.6 |
| Dong et al. [15][67] | 2020 | MV-SIR | LIDC-IDRI | 2D/3D; residual block; secondary input; multiple views; voxel heterogeneity (VH); shape heterogeneity (SH) | ASD = 7.2 ± 3.3; HSD = 129.3 ± 53.3; DSC = 92.6 ± 3.5; PPV = 93.6 ± 2.2; SEN = 98.1 ± 11.3 |
| Keetha et al. [2][54] | 2020 | U-Det | LUNA16 | 2D; based on U-Net; Bi-FPN; Efficient-Det; Mish activation function | DSC = 82.82 ± 11.71; SEN = 92.24 ± 14.14; PPV = 78.92 ± 17.52 |
| Cao et al. [20][72] | 2020 | DB-ResNet | LIDC-IDRI | 2D/3D; ResNet; CIP; multiview; multiscale; central intensity-pooling | DSC = 82.74 ± 10.19; ASD = 19 ± 21; SEN = 89.35 ± 11.79; PPV = 79.64 ± 13.34 |
| Kumar et al. [3][55] | 2020 | V-Net | LUNA16 | 3D; V-Net; PReLU; only fully convolutional layers | DSC = 96.15 |
| Usman et al. [4][56] | 2020 | Adaptive ROI with Multi-view Residual Learning | LIDC-IDRI | 2D/3D; Deep Residual U-Net; adaptive ROI; multiview | SEN = 91.62; PPV = 88.24; DSC = 87.55 |
| Tang et al. [22][74] | 2019 | NoduleNet | LIDC-IDRI | 3D; multitask; residual block; detection, FPR, segmentation; different loss function | DSC = 83.10; CPM = 87.27 |
| Huang et al. [5][57] | 2019 | Faster R-CNN | LUNA16 | 2D; Faster R-CNN; merge overlap; FP reduction; based on FCN | ACC = 91.4; DSC = 79.3 |
| Aresta et al. [6][58] | 2019 | iW-Net | LIDC-IDRI | 3D; based on U-Net; two points in the nodule boundary; no heavy pre-processing steps; augmentation | IoU = 55 |
| Hesamian et al. [23][75] | 2019 | Atrous convolution | LIDC-IDRI | 2D; atrous convolution; residual network; weight loss; normalize to 0, 255 | DSC = 81.24; Precision = 79.75 |
| Liu et al. [24][76] | 2018 | Mask R-CNN | LIDC-IDRI | 2D; backbone: ResNet101, FPN; transfer learning; RPN; FCN | 73.34 mAP; 79.65 mAP |
| Khosravan et al. [25][77] | 2018 | Semi-supervised multitask learning | LUNA16 | 3D; data augmentation; semi-supervised; FP reduction | SEN = 98; DSC = 91 |
| Wu et al. [1][53] | 2018 | PN-SAMP | LIDC-IDRI | 3D; 3D U-Net; WW/WC; Dice coefficient loss; segmentation, classification | DSC = 73.98 |
| Tong et al. [7][59] | 2018 | Improved U-Net network | LUNA16 | 2D; U-Net; modified residual block; obtain lung parenchyma | DSC = 73.6 |
| Zhao et al. [8][60] | 2018 | 3D U-Net and Contextual Convolutional Neural Network | LIDC-IDRI | 3D; 3D U-Net; GAN; morphological methods; residual block; Inception structure | None |
| Wang et al. [14][66] | 2017 | MV-CNN | LIDC-IDRI | 2D/3D; multiview; a multiscale patch strategy | SEN = 83.72; PPV = 77.59; DSC = 77.67 |
| Wang et al. [17][69] | 2017 | CF-CNN | LIDC-IDRI/GDGH | 2D/3D; central pooling; 3D patch; 2D views; a sampling method; two datasets | LIDC: DSC = 82.15 ± 10.76; SEN = 92.75 ± 12.83; PPV = 75.84 ± 13.14. GDGH: DSC = 80.02 ± 11.09; SEN = 83.19 ± 15.22; PPV = 79.30 ± 12.09 |