Alzheimer’s disease (AD) is one of the most devastating brain diseases in the world, especially in more advanced age groups. It is a progressive neurological disease that results in irreversible loss of neurons, particularly in the cortex and hippocampus, which leads to characteristic memory loss and behavioral changes. Communication difficulties (speech and language) are among the symptom groups that most frequently accompany dementia and should therefore be recognized as a central object of study. This recognition aims to provide earlier diagnosis, which makes efforts to delay the evolution of the disease more effective. Speech analysis, in general, is an important source of information encompassing the phonetic, phonological, lexical-semantic, morphosyntactic, and pragmatic levels of language organization [72]. The first signs of cognitive decline are clearly present in the discourse of patients with neurodegenerative disease, so diagnosis via speech analysis is a viable and effective method that may even lead to an earlier and more accurate diagnosis.

- Alzheimer’s disease (AD)

- speech

- classification

1. Speech and Language Impairments in Alzheimer’s Disease

-

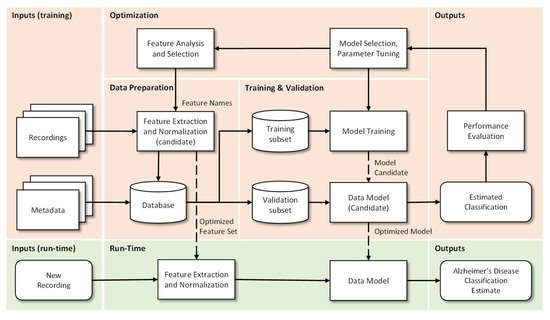

Data Preparation: In this step the extraction, optimization and normalization of features occurs. This consists in the selection of the most significant features (by removal of the non-dominant features) and in the transformation of ranges to similar limits, which will reduce training time and the complexity of the classification models. Metadata are “the data of the data”, more specifically, structured, and organized information on a given object (in this case voice recordings) that allow certain characteristics of it to be known. This metadata together with the results of the pre-processing of the recordings makes the final database. Incorrect or poor-quality data (e.g., outliers, wrong labels, noise, …), if not properly cared for, will lead to under optimized models and to unsatisfactory results. If data is not enough, for example when deep learning algorithms are used, then data augmentation techniques can be useful.

-

Training and Validation: The supporting database is divided into subsets, usually 70–90% for training and 30–10% for testing. The subsets can be randomly generated several times and the results can be averaged for additional confidence in the results, a procedure that is designated by cross-validation. The data model is trained, i.e., the involved parameters are adjusted, by one or many optimizers, and the performance is calculated using the test subset. This step allows categorizing and organizing the data to promote better analysis [18]. When data is not enough, then transfer learning approaches can be used.

-

Optimization: After model evaluation, it is possible to conclude on the parameters that need to be improved, as well as to proceed in a more effective way to the selection of the most interesting and relevant features, so that a new extraction and consequently a new process (iteration) of Training and Validation can be performed.

-

Run-Time: Having concluded the previous points, the system is ready to be deployed and to classify new unseen inputs. More specifically, from the recording of a patient’s voice, to classify it as possible healthy or possible Alzheimer’s patient.
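The Data Preparation and Training and Validation steps above can be illustrated with a minimal sketch. It assumes a nearest-centroid classifier as a stand-in model (it is not one of the models used in the reviewed studies), two invented features per recording, and hypothetical function names. Min-max scaling is fitted on the training subset only, so that the test subset does not influence the normalization.

```python
import random
from statistics import mean


def fit_min_max(rows):
    """Learn the per-feature minimum and range from the training rows only."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    spans = [max(c) - min(c) for c in cols]
    return lows, spans


def apply_min_max(row, lows, spans):
    """Rescale one sample; a constant feature (range 0) maps to 0.0."""
    return [(v - lo) / sp if sp else 0.0 for v, lo, sp in zip(row, lows, spans)]


def fit_centroids(X, y):
    """Training: compute one mean vector (centroid) per class label."""
    groups = {}
    for row, label in zip(X, y):
        groups.setdefault(label, []).append(row)
    return {lab: [mean(col) for col in zip(*rows)] for lab, rows in groups.items()}


def predict(centroids, row):
    """Assign the label of the closest centroid (squared Euclidean distance)."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(row, c))
    return min(centroids, key=lambda lab: sq_dist(centroids[lab]))


def k_fold_indices(n, k, seed=0):
    """Shuffle the indices 0..n-1 and deal them into k disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(X, y, k=5, seed=0):
    """Average test accuracy over k train/test partitions."""
    scores = []
    for fold in k_fold_indices(len(X), k, seed):
        held_out = set(fold)
        train = [i for i in range(len(X)) if i not in held_out]
        lows, spans = fit_min_max([X[i] for i in train])
        model = fit_centroids(
            [apply_min_max(X[i], lows, spans) for i in train],
            [y[i] for i in train],
        )
        hits = sum(
            predict(model, apply_min_max(X[i], lows, spans)) == y[i] for i in fold
        )
        scores.append(hits / len(fold))
    return mean(scores)


# Toy data, invented for illustration: [speech rate (syllables/s), pause ratio].
features = [[4.1, 0.18], [3.9, 0.22], [4.3, 0.15], [4.0, 0.20], [4.2, 0.17],
            [2.8, 0.41], [3.0, 0.38], [2.6, 0.45], [2.9, 0.40], [3.1, 0.36]]
labels = ["HC"] * 5 + ["AD"] * 5

print(f"mean 5-fold accuracy: {cross_validate(features, labels, k=5):.2f}")
```

With real recordings the classes overlap far more than in this toy set, so the averaged score is the quantity of interest rather than any single split.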

Table 1. Language functions in the early and in the moderate to severe stages of AD.

| Function | Early Stages | Moderate to Severe Stages |
|---|---|---|
| Spontaneous speech | Fluent, grammatical | Non-fluent, echolalic |
| Paraphasic errors | Semantic | Semantic and phonetic |
| Repetition | Intact | Very affected |
| Naming objects | Slightly affected | Very affected |
| Understanding the words | Intact | Very affected |
| Syntactical understanding | Intact | Very affected |
| Reading | Intact | Very affected |
| Writing | ±Intact | Very affected |
| Semantic knowledge of words and objects | Difficulties with less used words and objects | Very affected |

2. Speech- and Language-Based Classification of Alzheimer’s Disease

2.1. Machine Learning Pipeline

Speech analysis is potentially a useful, non-invasive, and simple method for the early diagnosis of AD. Automating this process allows fast, accurate, and economical follow-up over time. Initially, speech-based tests for AD detection were performed by linguists. These tests were designed to extract linguistic characteristics from speech or writing samples. More recent studies, however, seek to optimize this task by automating speech recognition from audio recordings [17]. The process can be described in four sequential steps: Data Preparation, Training and Validation, Optimization, and Run-Time.

2.2. Speech and Language Resources

Table 2 presents the main databases referred to in the scientific literature, accompanied by a summary of their characteristics. These resources are crucial for supporting the development of new systems, in particular when deep learning approaches are used. The use of the same databases in different studies, by different researchers, also provides common ground for evaluation and performance comparison.

Table 2. List of databases, with related specifications, containing speech recordings of Alzheimer’s patients. (Table contents are sorted by language, first column, and database name, second column.)

| Language | Database Name | Task | Population: HC M/F | Population: MCI M/F | Population: AD M/F | Availability | Refs. |
|---|---|---|---|---|---|---|---|
| English | DementiaBank (TalkBank) | DF | 99 | - | 169 | Upon request | [20] |
| English | Pitt Corpus | PD | 75/142 | 27/16 | 87/170 | Upon request | [21] |
| English | WRAP | PD | 59/141 | 28/36 | - | Upon request | [22] |
| English | - | PD | 112 | - | 98 | Undefined | [23] |
| French | - | Mixed | 6/9 | 11/12 | 13/13 | Undefined | [24] |
| French | - | VF, PD, SS, Counting | - | 19/25 | 12/15 | Undefined | [25] |
| French | - | VF, Semantics | 5/19 | 23/24 | 8/16 | Undefined | [26] |
| French | - | Reading | 16 | 16 | 16 | Undefined | [27] |
| Greek | - | PD | 16/14 | - | 13/17 | Undefined | [28] |
| Hungarian | BEA | SS | 13/23 | 16/32 | - | Upon request | [6][29] |
| | | | 25 | 25 | 25 | | |
| Italian | - | Mixture | 48 | 48 | - | Undefined | [30] |
| Mandarin | Lu Corpus | PD/SS | 4/6 | - | 6/4 | Upon request | [31] |
| Mandarin | - | PD/SS | 24 | 20 | 20 | Undefined | [32] |
| Portuguese | Cinderella | SS | 20 | 20 | 20 | Undefined | [33] |
| Spanish | AZTITXIKI (AZTIAHO) | SS | 5 | - | 5 | Undefined | [34] |
| Spanish | AZTIAHORE (AZTIAHO) | SS | 11/9 | - | 8/12 | Undefined | [35][36] |
| Spanish | PGA-OREKA | VF | 26/36 | 17/21 | - | Upon request | [35] |
| | Mini-PGA | PD | 4/8 | - | 1/5 | | |
| Spanish | - | Reading | 30/68 | - | 14/33 | Undefined | [37] |
| Swedish | Gothenburg | PD | 13/23 | 15/16 | - | Undefined | [38] |
| Swedish | - | Mixed | 12/14 | 8/21 | - | Upon request | [39] |
| Swedish | - | Reading | 11/19 | 12/13 | - | Undefined | [40] |
| Turkish | - | SS/Interview | 31/20 | - | 18/10 | Undefined | [41] |
| Turkish | - | SS/Interview | 12/15 | - | 17/10 | Undefined | [42] |
| Turkish | - | SS | 12/15 | - | 17/10 | Undefined | [43] |

2.3. Language and Speech Features

As mentioned in Table 1, the most evident speech problems early in AD are related to difficulties in general semantics, that is, in finding words to name objects. As a result, temporal cycles during spontaneous speech production (speech fluency) are affected, which can be detected in the patient’s hesitations and pronunciation [44]. Other speech characteristics affected in AD patients seem to be those related to articulation (speed of language processing), prosody in terms of temporal and acoustic measurements and, eventually, in later phases, phonological fluency [45]. Features can be classified as linear or non-linear, the linear ones being more conventionally used. Linear features can be subdivided into several groups, but these are always closely interconnected. We therefore chose to divide them into two groups, linguistic and acoustic, presented in Table 3 and Table 4.

Table 3. Linguistic features that have been used for AD detection. The features are organized by type. For each feature name, the number of occurrences/usages is provided in parentheses.

| Feature Type | Feature Name |
|---|---|
| Occurrence frequency | Words (3); Verbs (2); Nouns, Predicates (1); Coordinate and Subordinate Phrases (2); Reduced phrases (2); Incomplete Phrases/Ideas (3); Filling words (1); Unique words (2); Revisions/Repetitions (1); Word Replacement (2). |
| Parts of speech ratio | Nouns/Verbs (2); Pronouns/Substantives (1); Determinants/Substantives (2); Type/Token (2); Silence/Speaking (4); Hesitation/Speaking (3). |
| Semantic density | The density of the idea (1); Efficiency of the idea (1); Density of information (2); Density of the sentences (1). |
| POS (Parts-of-Speech) | Text tags (4). |
| Complexity | The entropy of words (1); Honoré’s Statistic (1). |
| Lexical Variation | Variation: nominal (2), adjective (1), modifier (1), adverb (1), verbal (1), word (1); Brunet’s Index (1). |

Table 4. Acoustic features that have been used for AD detection, organized as in Table 3.

| Feature Type | Feature Name |
|---|---|
| Hesitations | Filled Pauses (2); Silent Pauses (4); Long Pauses (3); Short Pauses (3); Voice Breaks (5). |
| Time/Duration | Total speech (3); Speech Rate (3); Speech time (2); Average of syllables (2); Pauses (4); Maximum pause (2). |
| Voice Segments | Period (4); Average duration (4); Accentuation (2). |
| Frequency | Fundamental frequency (8); Short term energy (7); Spectral centroid (1); Autocorrelation (2); Variation of voice frequencies (2). |
| Regularity | Jitter (11); Shimmer (11); Intensity (6); Square Energy Operator (1); Teager-Kaiser Energy Operator (1); Root Mean Square Amplitude (2). |
| Noise | Harmonic-Noise ratio (3); Noise-Harmonic ratio (2). |
| Phonetics | Articulation dynamics (1); The rate of articulation (1); Pause rate (5). |
| Intensity | From the voice segments (1); From the pause segments (1). |
| Timbre | Formant’s Structure (6); Formant’s Frequency (8). |

Table 5. Classification models (the abbreviations are expanded in Section 2.4).

| Model | Characterization | References |
|---|---|---|
| NB | Consists of a network composed of a main node with other associated descending nodes that follow Bayes’ theorem [47]. | [6][23][28][41] |
| SVM | Consists of building the hyperplane with maximum margin capable of optimally separating two classes of a data set [47]. | [6][25][26][27][28][29][38][39][40][41][42][43][48][49] |
| RF | Relies on the creation of a large number of uncorrelated decision trees, each based on a random selection of predictor variables [50]. | [6][48] |
| DT | Consists of building a decision tree where each node specifies a test on an attribute, each branch descending from that node corresponds to one of the possible values for that attribute, and each leaf represents the class label associated with the instance. The instances of the training set are classified by following the path from the root to a leaf, according to the results of the tests along the path [51]. | [27][41][42][43] |
| KNN | Based on the memory principle, in the sense that it stores all cases and classifies new cases based on similarity measures [47]. | [30][34] |
| LDA | A discriminative approach based on the differences between samples of certain groups. Supervised technique whose objective is to maximize the ratio of the variance between groups to the variance within the same group [53]. | [42][43] |
| LR | A model capable of finding an equation that predicts the outcome of a binary variable from one or more predictor variables [52]. | |
| ANN, DNN, CNN, RNN, MLP | Naturally inspired models. Supervised learning approach based on a theory of association (pattern recognition) between cognitive elements [54]. There are many possibilities with different elements, structures, layers, etc. The larger the number of parameters, the larger the dataset must be. | [30][31][34][35][36][40][41] |

Table 6. Testing methods and the studies that used them.

| Model | Method | Reference |
|---|---|---|
| Cross Validation | k-Fold | [28][29][31][34][35][36][40][48] |
| Cross Validation | Leave-pair-out | [39][49] |
| Cross Validation | Leave-one-out | [6][26][38][41][42] |
| Split Evaluation | 90–10% | [40] |
| Split Evaluation | 80–20% | [30] |
| Random Sub-Sampling | - | [25] |
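Returning to the features of Section 2.3: several entries of Tables 3 and 4 reduce to simple counts and ratios. The sketch below computes the Type/Token ratio, Brunet’s Index, Honoré’s Statistic, the Silence/Speaking ratio, and a local jitter or shimmer value. The formulas are the commonly cited definitions (for example, the exponent 0.165 in Brunet’s Index) and are an assumption here, since the reviewed studies may use variants. The function names and the toy inputs are hypothetical.

```python
import math
from collections import Counter


def lexical_features(tokens):
    """Vocabulary-richness measures computed from a list of word tokens."""
    n = len(tokens)                                  # N: total number of tokens
    counts = Counter(tokens)
    v = len(counts)                                  # V: number of unique words (types)
    v1 = sum(1 for c in counts.values() if c == 1)   # V1: words used exactly once
    return {
        "type_token_ratio": v / n,
        # Brunet's index W = N ** (V ** -0.165); lower values suggest a richer vocabulary.
        "brunet_index": n ** (v ** -0.165),
        # Honore's statistic R = 100 * log(N) / (1 - V1 / V); unbounded when V1 == V.
        "honore_statistic": (
            100 * math.log(n) / (1 - v1 / v) if v1 < v else float("inf")
        ),
    }


def silence_to_speech_ratio(segments):
    """segments: (label, duration in seconds) pairs, label 'speech' or 'pause'."""
    pause = sum(d for label, d in segments if label == "pause")
    speech = sum(d for label, d in segments if label == "speech")
    return pause / speech


def relative_perturbation(values):
    """Mean absolute difference of consecutive values divided by their mean.

    Applied to consecutive glottal periods this is local jitter; applied to
    consecutive peak amplitudes it is local shimmer.
    """
    diffs = [abs(a - b) for a, b in zip(values, values[1:])]
    return (sum(diffs) / len(diffs)) / (sum(values) / len(values))


# Toy inputs, invented for illustration.
tokens = "the boy is taking the cookie and the girl is um the girl is laughing".split()
segments = [("speech", 2.0), ("pause", 1.0), ("speech", 4.0), ("pause", 2.0)]
periods = [0.0100, 0.0102, 0.0099, 0.0101]           # seconds per glottal cycle

print(lexical_features(tokens))
print("silence/speaking:", silence_to_speech_ratio(segments))
print("local jitter:", relative_perturbation(periods))
```

In practice the tokens would come from a manual or automatic transcription, and the periods and amplitudes from a pitch-tracking tool. Both steps are outside the scope of this sketch.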

2.4. Classification Models

Classification consists of identifying to which of a given set of categories a new observation belongs, based on a training set of observations whose categories are already known [46]. Thus, after the extraction and selection of the most significant features, these features are passed to a classifier so that the groups of data under study can be classified. When the data distribution or patterns are known, a compatible model (linear, polynomial, exponential, or other) will lead to optimal results. However, machine learning has gained special relevance due to its ability to provide good estimates even when facing unstructured, high-dimensional data. In this context, deep neural networks (DNN) can excel. These are flexible models in which elements, inspired by the anatomy and physiology of the human brain, are combined in large structures with several sequential layers to provide the output. The number of elements per layer, the number of layers, and the behavior of each layer (fully connected, convolutional, recurrent, etc.) are some of the parameters that can be adjusted to fit the network to the data and the problem. In Table 5, some of the most commonly used models are summarized and defined in general terms.

Table 5. NB: Naive Bayes; RF: Random Forest; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; DT: Decision Trees; ANN: Artificial Neural Networks; RNN: Recurrent Neural Network; CNN: Convolutional Neural Networks; MLP: Multilayer Perceptron; KNN: k-Nearest Neighbors; DNN: Deep Neural Networks; LR: Logistic Regression.

2.5. Testing and Performance Indicators
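As a minimal sketch of how one of the models in Table 5 can be tested, the code below evaluates a k-nearest-neighbors classifier (KNN) with leave-one-out cross-validation and reports accuracy, sensitivity, and specificity. The choice of these three indicators, the already normalized toy feature values, and all function names are assumptions made for illustration. They are not taken from the reviewed studies.

```python
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Majority label among the k training samples closest to `query`."""
    order = sorted(
        range(len(train_X)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_X[i], query)),
    )
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def leave_one_out(X, y, k=3):
    """Predict each sample with a model built from all the other samples."""
    preds = []
    for i in range(len(X)):
        rest_X = X[:i] + X[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        preds.append(knn_predict(rest_X, rest_y, X[i], k))
    return preds


def indicators(y_true, y_pred, positive="AD"):
    """Confusion-matrix indicators; assumes both classes occur in y_true."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),   # share of AD samples that are detected
        "specificity": tn / (tn + fp),   # share of HC samples that are cleared
    }


# Toy, pre-normalized features invented for illustration; the fifth HC
# sample is deliberately atypical so that one error appears.
X = [[0.90, 0.10], [0.80, 0.20], [0.85, 0.15], [0.75, 0.10], [0.40, 0.60],
     [0.20, 0.80], [0.10, 0.90], [0.30, 0.70], [0.15, 0.85], [0.25, 0.90]]
y = ["HC"] * 5 + ["AD"] * 5

predictions = leave_one_out(X, y, k=3)
scores = indicators(y, predictions)
print(scores)
```

Reporting sensitivity and specificity alongside accuracy matters when the classes are unbalanced, as several of the databases in Table 2 are.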

To draw conclusions about the efficiency and viability of the adopted classification model, it is necessary to evaluate it. To compare the performance of a given system against other reported systems, it is important to choose a common metric with a well-defined testing method and setup. Otherwise, it will be impossible to understand how a system stands against its competitors. In this sense, Table 6 lists the testing methods used in the reviewed studies.

3. Future Work

As technology evolves, so do the methods of diagnosis and analysis. More and better ways of detecting diseases, and even new diagnostic processes, are appearing. The detection and classification of Alzheimer’s disease, which was usually performed via neurological tests and neuroimaging, is now possible through less invasive and equally efficient methods. The existing models for the detection of AD through speech have been increasing in quantity and in quality, though improvements are still needed. At present, the biggest barriers to the automatic detection of AD are that: (a) most systems are language dependent; (b) the number of samples used per study is very small, so the amount of data on which each system is based is too small for it to achieve optimal performance; (c) system components are not always integrated and may require human intervention; (d) feature sets are not yet fully established, although temporal aspects (total duration, speech rate, articulation rate, among others), pitch, voice periods, and interruptions, when combined with language or linguistic features, can lead to very good results. Additional research is needed to find the optimal combination of parameters and to determine which tasks the (potential) patient should be invited to perform. Future work is therefore envisioned to include the implementation of multilingual or language-independent systems, supported by extensive and diverse databases (which still must be gathered, with a balanced number of male and female speakers, ages, and disease severities), as well as the automation of feature selection and extraction. Better, task-oriented decision models are also required.

References

- Soldan, A.; Gazes, Y.; Stern, Y. Alzheimer’s Disease. In Reference Module in Neuroscience and Biobehavioral Psychology; Elsevier: Amsterdam, The Netherlands, 2017; ISBN 978-0-12-809324-5.

- Nussbaum, R.L.; Ellis, C.E. Alzheimer’s Disease and Parkinson’s Disease. N. Engl. J. Med. 2003, 348, 1356–1364.

- Pulido, M.L.B.; Hernández, J.B.A.; Ballester, M.Á.F.; González, C.M.T.; Mekyska, J.; Smékal, Z. Alzheimer’s Disease and Automatic Speech Analysis: A Review. Expert Syst. Appl. 2020, 150, 113213.

- Logsdon, R.G.; Gibbons, L.E.; McCurry, S.M.; Teri, L. Quality of Life in Alzheimer’s Disease: Patient and Caregiver Reports. J. Ment. Health Aging 1999, 5, 21–32.

- McKhann, G.M.; Knopman, D.S.; Chertkow, H.; Hyman, B.T.; Jack, C.R.; Kawas, C.H.; Klunk, W.E.; Koroshetz, W.J.; Manly, J.J.; Mayeux, R.; et al. The Diagnosis of Dementia Due to Alzheimer’s Disease: Recommendations from the National Institute on Aging-Alzheimer’s Association Workgroups on Diagnostic Guidelines for Alzheimer’s Disease. Alzheimer’s Dement. 2011, 7, 263–269.

- Toth, L.; Hoffmann, I.; Gosztolya, G.; Vincze, V.; Szatloczki, G.; Banreti, Z.; Pakaski, M.; Kalman, J. A Speech Recognition-Based Solution for the Automatic Detection of Mild Cognitive Impairment from Spontaneous Speech. Curr. Alzheimer Res. 2018, 15, 130–138.

- Alberdi, A.; Aztiria, A.; Basarab, A. On the Early Diagnosis of Alzheimer’s Disease from Multimodal Signals: A Survey. Artif. Intell. Med. 2016, 71, 1–29.

- Wang, L.Y.; LaBardi, B.A.; Raskind, M.A.; Peskind, E.R. Chapter 14—Alzheimer’s Disease and Other Neurocognitive Disorders. In Handbook of Mental Health and Aging, 3rd ed.; Hantke, N., Etkin, A., O’Hara, R., Eds.; Academic Press: San Diego, CA, USA, 2020; pp. 161–183. ISBN 978-0-12-800136-3.

- Cacho, J.; Benito-León, J.; García-García, R.; Fernández-Calvo, B.; Vicente-Villardón, J.L.; Mitchell, A.J. Does the Combination of the MMSE and Clock Drawing Test (Mini-Clock) Improve the Detection of Mild Alzheimer’s Disease and Mild Cognitive Impairment? J. Alzheimers Dis. 2010, 22, 889–896.

- Hancock, P.; Larner, A.J. Test Your Memory Test: Diagnostic Utility in a Memory Clinic Population. Int. J. Geriatr. Psychiatry 2011, 26, 976–980.

- Ferris, S.H.; Farlow, M. Language Impairment in Alzheimer’s Disease and Benefits of Acetylcholinesterase Inhibitors. Clin. Interv. Aging 2013, 8, 1007–1014.

- Zhang, Y.; Schuff, N.; Camacho, M.; Chao, L.L.; Fletcher, T.P.; Yaffe, K.; Woolley, S.C.; Madison, C.; Rosen, H.J.; Miller, B.L.; et al. MRI Markers for Mild Cognitive Impairment: Comparisons between White Matter Integrity and Gray Matter Volume Measurements. PLoS ONE 2013, 8, e66367.

- Axer, H.; Klingner, C.M.; Prescher, A. Fiber Anatomy of Dorsal and Ventral Language Streams. Brain Lang. 2013, 127, 192–204.

- Banovic, S.; Zunic, L.J.; Sinanovic, O. Communication Difficulties as a Result of Dementia. Mater. Sociomed 2018, 30, 221–224.

- Soria Lopez, J.A.; González, H.M.; Léger, G.C. Alzheimer’s Disease. Handb Clin. Neurol. 2019, 167, 231–255.

- Szatloczki, G.; Hoffmann, I.; Vincze, V.; Kalman, J.; Pakaski, M. Speaking in Alzheimer’s Disease, Is That an Early Sign? Importance of Changes in Language Abilities in Alzheimer’s Disease. Front. Aging Neurosci. 2015, 7.

- Walker, L., Jr.; Schaffer, J.D. The Art and Science of Machine Intelligence; Springer: Berlin/Heidelberg, Germany, 2020.

- Allen, M.; Cervo, D. Multi-Domain Master Data Management; Elsevier: Amsterdam, The Netherlands, 2015; ISBN 978-0-12-800835-5.

- Braga, D.; Madureira, A.M.; Coelho, L.; Abraham, A. Neurodegenerative Diseases Detection Through Voice Analysis. In Proceedings of the Hybrid Intelligent Systems; Abraham, A., Muhuri, P.K., Muda, A.K., Gandhi, N., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 734, pp. 213–223.

- Boller, F.; Becker, J. Dementiabank Database Guide; University of Pittsburgh: Pittsburgh, PA, USA, 2005.

- Becker, J.T.; Boller, F.; Lopez, O.L.; Saxton, J.; McGonigle, K.L. The Natural History of Alzheimer’s Disease. Description of Study Cohort and Accuracy of Diagnosis. Arch. Neurol. 1994, 51, 585–594.

- Mueller, K.D.; Koscik, R.L.; Hermann, B.P.; Johnson, S.C.; Turkstra, L.S. Declines in Connected Language Are Associated with Very Early Mild Cognitive Impairment: Results from the Wisconsin Registry for Alzheimer’s Prevention. Front. Aging Neurosci. 2018, 9, 1–14.

- Land, W.H.; Schaffer, J.D. A Machine Intelligence Designed Bayesian Network Applied to Alzheimer’s Detection Using Demographics and Speech Data. Procedia Comput. Sci. 2016, 95, 168–174.

- König, A.; Satt, A.; Sorin, A.; Hoory, R.; Toledo-Ronen, O.; Derreumaux, A.; Manera, V.; Verhey, F.; Aalten, P.; Robert, P.H.; et al. Automatic Speech Analysis for the Assessment of Patients with Predementia and Alzheimer’s Disease. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2015, 1, 112–124.

- König, A.; Satt, A.; Sorin, A.; Hoory, R.; Derreumaux, A.; David, R.; Robert, P.H. Use of Speech Analyses within a Mobile Application for the Assessment of Cognitive Impairment in Elderly People. Curr. Alzheimer Res. 2018, 15, 120–129.

- König, A.; Linz, N.; Tröger, J.; Wolters, M.; Alexandersson, J.; Robert, P. Fully Automatic Speech-Based Analysis of the Semantic Verbal Fluency Task. Dement. Geriatr. Cogn. Disord. 2018, 45, 198–209.

- Mirzaei, S.; El Yacoubi, M.; Garcia-Salicetti, S.; Boudy, J.; Kahindo, C.; Cristancho-Lacroix, V.; Kerhervé, H.; Rigaud, A.S. Two-Stage Feature Selection of Voice Parameters for Early Alzheimer’s Disease Prediction. Irbm 2018, 39, 430–435.

- Rentoumi, V.; Paliouras, G.; Danasi, E.; Arfani, D.; Fragkopoulou, K.; Varlokosta, S.; Papadatos, S. Automatic Detection of Linguistic Indicators as a Means of Early Detection of Alzheimer’s Disease and of Related Dementias: A Computational Linguistics Analysis. In Proceedings of the 2017 8th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Debrecen, Hungary, 11–14 September 2017; pp. 33–38.

- Gosztolya, G.; Vincze, V.; Tóth, L.; Pákáski, M.; Kálmán, J.; Hoffmann, I. Identifying Mild Cognitive Impairment and Mild Alzheimer’s Disease Based on Spontaneous Speech Using ASR and Linguistic Features. Comput. Speech Lang. 2019, 53, 181–197.

- Beltrami, D.; Calzà, L.; Gagliardi, G.; Ghidoni, E.; Marcello, N.; Favretti, R.R.; Tamburini, F. Automatic Identification of Mild Cognitive Impairment through the Analysis of Italian Spontaneous Speech Productions. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), Portorož, Slovenia, 23–28 May 2016; European Language Resources Association (ELRA): Portorož, Slovenia, 2016; pp. 2086–2093.

- Chien, Y.-W.; Hong, S.-Y.; Cheah, W.-T.; Yao, L.-H.; Chang, Y.-L.; Fu, L.-C. An Automatic Assessment System for Alzheimer’s Disease Based on Speech Using Feature Sequence Generator and Recurrent Neural Network. Sci. Rep. 2019, 9, 19597.

- Qiao, Y.; Xie, X.-Y.; Lin, G.-Z.; Zou, Y.; Chen, S.-D.; Ren, R.-J.; Wang, G. Computer-Assisted Speech Analysis in Mild Cognitive Impairment and Alzheimer’s Disease: A Pilot Study from Shanghai, China. J. Alzheimer’s Dis. 2020, 75, 211–221.

- Toledo, C.M.; Aluísio, S.M.; dos Santos, L.B.; Brucki, S.M.D.; Trés, E.S.; de Oliveira, M.O.; Mansur, L.L. Analysis of Macrolinguistic Aspects of Narratives from Individuals with Alzheimer’s Disease, Mild Cognitive Impairment, and No Cognitive Impairment. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2018, 10, 31–40.

- López-de-Ipiña, K.; Alonso-Hernández, J.B.; Solé-Casals, J.; Travieso-González, C.M.; Ezeiza, A.; Faúndez-Zanuy, M.; Calvo, P.M.; Beitia, B. Feature Selection for Automatic Analysis of Emotional Response Based on Nonlinear Speech Modeling Suitable for Diagnosis of Alzheimer’s Disease. Neurocomputing 2015, 150, 392–401.

- López-de-Ipiña, K.; Martinez-de-Lizarduy, U.; Calvo, P.M.; Mekyska, J.; Beitia, B.; Barroso, N.; Estanga, A.; Tainta, M.; Ecay-Torres, M. Advances on Automatic Speech Analysis for Early Detection of Alzheimer Disease: A Non-Linear Multi-Task Approach. Curr. Alzheimer Res. 2017, 14, 139–148.

- Solé-Casals, J.; López-de-Ipiña, K.; Eguiraun, H.; Alonso, J.B.; Travieso, C.M.; Ezeiza, A.; Barroso, N.; Ecay-Torres, M.; Martinez-Lage, P.; Beitia, B. Feature Selection for Spontaneous Speech Analysis to Aid in Alzheimer’s Disease Diagnosis: A Fractal Dimension Approach. Comput. Speech Lang. 2015, 30, 43–60.

- Martínez-Sánchez, F.; Meilán, J.J.G.; Carro, J.; Ivanova, O. A Prototype for the Voice Analysis Diagnosis of Alzheimer’s Disease. J. Alzheimer’s Dis. 2018, 64, 473–481.

- Fraser, K.C.; Lundholm Fors, K.; Kokkinakis, D. Multilingual Word Embeddings for the Assessment of Narrative Speech in Mild Cognitive Impairment. Comput. Speech Lang. 2019, 53, 121–139.

- Fraser, K.C.; Lundholm Fors, K.; Eckerström, M.; Öhman, F.; Kokkinakis, D. Predicting MCI Status From Multimodal Language Data Using Cascaded Classifiers. Front. Aging Neurosci. 2019, 11.

- Themistocleous, C.; Eckerström, M.; Kokkinakis, D. Identification of Mild Cognitive Impairment From Speech in Swedish Using Deep Sequential Neural Networks. Front. Neurol. 2018, 9, 1–10.

- Khodabakhsh, A.; Yesil, F.; Guner, E.; Demiroglu, C. Evaluation of Linguistic and Prosodic Features for Detection of Alzheimer’s Disease in Turkish Conversational Speech. Eurasip J. Audio Speech Music Processing 2015, 2015.

- Khodabakhsh, A.; Kuscuoglu, S.; Demiroglu, C. Detection of Alzheimer’s Disease Using Prosodic Cues in Conversational Speech. In Proceedings of the 2014 22nd Signal Processing and Communications Applications Conference (SIU), Trabzon, Turkey, 23–25 April 2014; pp. 1003–1006.

- Khodabakhsh, A.; Demiroglu, C. Analysis of Speech-Based Measures for Detecting and Monitoring Alzheimer’s Disease. In Data Mining in Clinical Medicine; 2015; Volume 1246, pp. 159–173. ISBN 9781493919857.

- Hoffmann, I.; Nemeth, D.; Dye, C.D.; Pákáski, M.; Irinyi, T.; Kálmán, J. Temporal Parameters of Spontaneous Speech in Alzheimer’s Disease. Int J. Speech Lang Pathol 2010, 12, 29–34.

- Horley, K.; Reid, A.; Burnham, D. Emotional Prosody Perception and Production in Dementia of the Alzheimer’s Type. J. Speech Lang. Hear Res. 2010, 53, 1132–1146.

- Kalapatapu, P.; Goli, S.; Arthum, P.; Malapati, A. A Study on Feature Selection and Classification Techniques of Indian Music. Procedia Comput. Sci. 2016, 98, 125–131.

- Yahyaoui, A.; Yahyaoui, I.; Yumuşak, N. Machine Learning Techniques for Data Classification. In Advances in Renewable Energies and Power Technologies; Elsevier: Amsterdam, The Netherlands, 2018; pp. 441–450.

- Hernández-Domínguez, L.; Ratté, S.; Sierra-Martínez, G.; Roche-Bergua, A. Computer-Based Evaluation of Alzheimer’s Disease and Mild Cognitive Impairment Patients during a Picture Description Task. Alzheimers Dement (Amst) 2018, 10, 260–268.

- Orimaye, S.O.; Wong, J.S.M.; Golden, K.J.; Wong, C.P.; Soyiri, I.N. Predicting Probable Alzheimer’s Disease Using Linguistic Deficits and Biomarkers. BMC Bioinform. 2017, 18, 34.

- Carvajal, G.; Maucec, M.; Cullick, S. Components of Artificial Intelligence and Data Analytics. In Intelligent Digital Oil and Gas Fields; Elsevier: Amsterdam, The Netherlands, 2018; pp. 101–148.

- Capozzoli, A.; Cerquitelli, T.; Piscitelli, M.S. Enhancing Energy Efficiency in Buildings through Innovative Data Analytics Technologies. In Pervasive Computing; Elsevier: Amsterdam, The Netherlands, 2016; pp. 353–389.

- Hoffman, J.I.E. Logistic Regression. In Basic Biostatistics for Medical and Biomedical Practitioners; Elsevier: Amsterdam, The Netherlands, 2019; pp. 581–589.

- Stanimirova, I.; Daszykowski, M.; Walczak, B. Robust Methods in Analysis of Multivariate Food Chemistry Data. In Data Handling in Science and Technology; Elsevier: Amsterdam, The Netherlands, 2013; pp. 315–340.

- Siau, K. E-Creativity and E-Innovation. In The International Handbook on Innovation; Elsevier: Amsterdam, The Netherlands, 2003; pp. 258–264.