
Please note this is a comparison between Version 1 by Elisabeth Vogel and Version 2 by Bruce Ren.

Resilience is a feature that is gaining more and more attention in computer science and computer engineering. However, the definition of resilience for the cyber landscape, especially embedded systems, is not yet clear.

- cyber-resilience

- security

- redundancy

- resilience engineering

1. Introduction

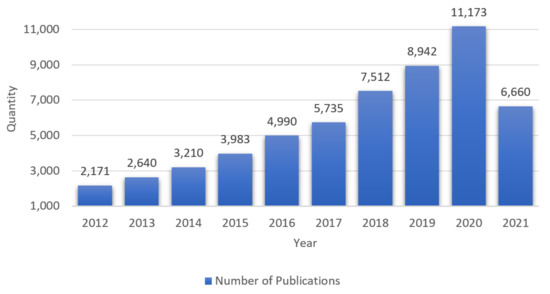

The cyber landscape of the 21st century is constantly growing and becoming increasingly complex, covering areas such as telemedicine and autonomous driving. Our societies, as well as individuals, depend heavily on these systems working correctly around the clock. Coping with the increasing complexity and the unprecedented importance of cyber systems requires new and innovative methods and technologies. The concept of resilience is receiving increasing attention in this respect, which is reflected above all in the steadily growing number of publications on the topic. Figure 1 shows how the number of publications with the keyword Cyber-Resilience has increased since 2012. Besides the attention it receives in science, the concept of resilience has already reached industry. The US streaming provider Netflix is considered a pioneer in the application of resilience in the form of highly redundant infrastructure. However, the principles of resilience are found not only in Netflix's hardware components: the software architecture also applies various methods to increase resilience. The example of Netflix shows how important resilience becomes with increasing complexity [1].

Figure 1. Number of publications with the keyword Cyber-Resilience from 2012 to 2021 (July) [2].

However, the term “resilience” is used in many ways in IT. In some cases, resilience is described as “extreme reliability” [3] or used as a synonym for fault tolerance [4][5]. Anderson [6] extended the definition of fault tolerance by the property of robustness and called the new definition resilience. In recent publications, however, resilience is repeatedly defined as an independent term [7][8]. Publications [9] and [10] add further aspects to the concepts already applied in [7]: in [9], the model of [7] is extended, and in [10], methods used to achieve cyber-resilience are listed as possible measures against cyberattacks (keyword: IT security).

2. Definitions

In the literature of recent years, there have been many definitions of resilience, some of which differ considerably. As described in [4], the content of the definitions strongly depends on the respective fields of application.

Resilience is derived from the Latin resilire, and can be translated as “bouncing back” or “bouncing off”. In essence, the term is used to describe a particular form of resistance.

The use of the term resilience in other disciplines (material science, engineering, psychology, ecology) is described in [11].

Additionally, in computer science, the term resilience has been defined several times from different points of view. As described in [4] for example, resilience is often used as a synonym for fault tolerance. However, recent publications show that this approach has been replaced by the view that resilience is much more than fault tolerance (see [8]).

One of the first definitions was presented in 1976 in [3], and describes the concept of resilience as follows:

“He (remark: the user) should be able to assume that the system will make a “best-effort” to continue service in the event that perfect service cannot be supported; and that the system will not fall apart when he does something he is not supposed to”.
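The two requirements in this definition, continued best-effort service and tolerance of improper use, can be illustrated with a minimal Python sketch. It is not taken from [3]; the wrapper `best_effort` and the services `full_lookup` and `cached_lookup` are hypothetical names chosen only for illustration.

```python
from typing import Callable


def best_effort(primary: Callable[[str], str],
                degraded: Callable[[str], str],
                is_valid: Callable[[str], bool]) -> Callable[[str], str]:
    """Wrap a service so that it degrades instead of failing outright."""
    def service(request: str) -> str:
        # Input the user "is not supposed to" send is rejected, not fatal.
        if not is_valid(request):
            return "rejected: invalid request"
        try:
            return primary(request)
        except Exception:
            # Perfect service cannot be supported: continue with reduced service.
            return degraded(request)
    return service


# Hypothetical services (assumptions for this sketch only).
def full_lookup(key: str) -> str:
    raise ConnectionError("backend unavailable")


def cached_lookup(key: str) -> str:
    return f"stale value for {key}"


lookup = best_effort(full_lookup, cached_lookup, is_valid=lambda k: k.isalnum())
print(lookup("sensor42"))  # served by the degraded path
print(lookup("../etc"))    # rejected without crashing
```

Catching every exception keeps the sketch short; a real system would presumably distinguish recoverable faults from programming errors.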


3. Key Actions and Attributes

Section 2 introduced the key actions anticipation, resistance, recovery, detection and adaptation. These five key actions can be defined as follows. Comparable definitions are listed in [7][9][10][19], for example:

Discussion of the Relationships between Key Actions and Attributes

The attributes are directly related to the key actions. This relationship becomes important when it comes to the concrete design or practical modeling of cyber-resilient systems. In order to design a system to be cyber-resilient, or to make an existing system cyber-resilient, it must first be examined how, for example, the ability to resist can be achieved or improved. At this point, the attributes must be considered. For example, to make a system resistant, methods that increase robustness can be used; methods of reliability and security are also helpful here. Note that combining several different methods can introduce dependencies between them, which must be taken into account. For example, a method that makes a system more adaptable could at the same time reduce its security. Table 23 shows the mapping of the mentioned attributes to the key actions according to our understanding.

Key actions (columns): Anticipation, Resistance, Recovery, Adaptation, Detection

- Robustness: X X

- Reliability: X X

- Adaptability: X X X

- Evolvability: X

- Security: X X X

- Safety: X X X

- Assessability: X

- Integrity: X X
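The reasoning above, selecting methods through the attributes that support a key action and then checking for unwanted dependencies, can be sketched as a small Python model. This is an illustration under stated assumptions, not the authors' procedure: only the resistance entry is taken from the text (robustness, reliability, security), and the method names and their effects are hypothetical.

```python
# Attributes supporting a key action. Only the entry stated in the text is
# modeled: robustness, reliability and security contribute to resistance.
SUPPORTS = {
    "resistance": {"robustness", "reliability", "security"},
}

# Hypothetical methods with assumed effects on attributes
# (+1 improves the attribute, -1 weakens it).
METHODS = {
    "redundant_sensors": {"robustness": +1, "reliability": +1},
    "remote_reconfiguration": {"adaptability": +1, "security": -1},
}


def methods_for(action):
    """Return methods that improve at least one attribute supporting the action."""
    attributes = SUPPORTS.get(action, set())
    return sorted(
        name for name, effects in METHODS.items()
        if any(effects.get(attr, 0) > 0 for attr in attributes)
    )


def side_effects(selected):
    """Map each selected method to the attributes it weakens, if any."""
    result = {}
    for name in selected:
        weakened = sorted(a for a, delta in METHODS[name].items() if delta < 0)
        if weakened:
            result[name] = weakened
    return result


print(methods_for("resistance"))
print(side_effects(["redundant_sensors", "remote_reconfiguration"]))
```

Here `remote_reconfiguration` mirrors the example of a method that increases adaptability while reducing security, so it is reported by `side_effects` and is not offered as a way to improve resistance.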

References

- Tseitlin, A. Resiliency through Failure: Netflix’s Approach to Extreme Availability in the Cloud; 2013. Available online: https://qconnewyork.com/ny2013/node/281.html (accessed on 15 November 2021).

- Web of Science. Available online: https://clarivate.com/webofsciencegroup/solutions/web-of-science/ (accessed on 20 July 2021).

- Alsberg, P.A.; Day, J.D. A Principle for Resilient Sharing of Distributed Resources. In Proceedings of the 2nd International Conference on Software Engineering, San Francisco, CA, USA, 13–15 October 1976; pp. 562–570.

- Meyer, J.F. Defining and evaluating resilience: A performability perspective. In Proceedings of the International workshop on performability modeling of computer and communication systems (PMCCS), Eger, Hungary, 17–18 September 2009; Available online: http://ftp.eecs.umich.edu/people/jfm/PMCCS-9_Slides.pdf (accessed on 15 November 2021).

- Avizienis, A.; Laprie, J.-C.; Randell, B.; Landwehr, C. Basic concepts and taxonomy of dependable and secure computing. IEEE Trans. Dependable Secur. Comput. 2004, 1, 11–33.

- Resilient Computing Systems; Anderson, T. (Ed.) Wiley: New York, NY, USA, 1985; ISBN 0471845183.

- Rosati, J.D.; Touzinsky, K.F.; Lillycrop, W.J. Quantifying coastal system resilience for the US Army Corps of Engineers. Environ. Syst. Decis. 2015, 35, 196–208.

- Ross, R.; Graubart, R.; Bodeau, D.; McQuaid, R. Draft SP 800-160 Vol. 2, Systems Security Engineering: Cyber Resiliency Considerations for the Engineering of Trustworthy Secure Systems. 2018. Available online: https://insidecybersecurity.com/sites/insidecybersecurity.com/files/documents/2018/mar/cs03202018_NIST_Systems_Security.pdf (accessed on 16 April 2020).

- Carias, J.F.; Borges, M.R.S.; Labaka, L.; Arrizabalaga, S.; Hernantes, J. Systematic Approach to Cyber Resilience Operationalization in SMEs. IEEE Access 2020, 8, 174200–174221.

- Hopkins, S.; Kalaimannan, E.; John, C.S. Foundations for Research in Cyber-Physical System Cyber Resilience using State Estimation. In Proceedings of the 2020 SoutheastCon, Raleigh, NC, USA, 28–29 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–2, ISBN 978-1-7281-6861-6.

- Dyka, Z.; Vogel, E.; Kabin, I.; Aftowicz, M.; Klann, D.; Langendörfer, P. Resilience more than the Sum of Security and Dependability: Cognition is what makes the Difference. In Proceedings of the 2019 8th Mediterranean Conference on Embedded Computing (MECO), Budva, Montenegro, 10–14 June 2019; Stojanovic, R., Ed.; IEEE: Piscataway, NJ, USA, 2019; pp. 1–3. ISBN 978-1-7281-1739-3.

- Nygard, M.T. Release It! Design and Deploy Production-Ready Software, 2nd ed. 2018. Available online: https://www.oreilly.com/library/view/release-it-2nd/9781680504552/ (accessed on 15 November 2021). ISBN 9781680502398.

- Laprie, J.-C. From Dependability to Resilience; DSN: Anchorage, AK, USA, 2008; p. 8. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.331.8948&rep=rep1&type=pdf (accessed on 15 November 2021).

- De Florio, V. On the Constituent Attributes of Software and Organizational Resilience. Interdiscip. Sci. Rev. 2013, 38, 122–148.

- Jen, E. Stable or robust? What’s the difference? Complexity 2003, 8, 12–18.

- Clark-Ginsberg, A. What’s the Difference between Reliability and Resilience? 2016. Available online:

- Dyka, Z.; Vogel, E.; Kabin, I.; Klann, D.; Shamilyan, O.; Langendörfer, P. No Resilience without Security. In 2020 9th Mediterranean Conference on Embedded Computing (MECO), Budva, Montenegro, 8–11 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–5. Available online: https://ieeexplore.ieee.org/abstract/document/9134179 (accessed on 14 November 2021).

- Bodeau, D.; Graubart, R.; Picciotto, J.; McQuaid, R. Cyber Resiliency Engineering Framework. 2011. Available online: https://www.mitre.org/sites/default/files/pdf/11_4436.pdf (accessed on 16 April 2020).

- World Economic Forum. A Framework for Assessing Cyber Resilience; World Economic Forum: Geneva, Switzerland, 2016.

- Castano, V.; Schagmayaev, I.; Schagaev, I. Resilient Computer System Design; Springer: Cham, Switzerland, 2015; ISBN 9783319150680.

- Hollnagel, E. Prologue: The scope of resilience engineering. In Resilience Engineering in Practice: A Guidebook; Hollnagel, E., Pariès, J., Woods, D., Wreathall, J., Eds.; Aldershot: Hampshire, UK, 2011; pp. 29–39.
