
Please note this is a comparison between Version 1 by ildar rakhmatulin and Version 3 by Lindsay Dong.

This article discusses the possibility of accurately detecting the position of weeds in real time under real field conditions. It presents detailed recommendations for handling dense scenes and considers ways of increasing both accuracy and FPS.

- deep learning in agriculture

- precision agriculture

- weed detection

- robotic weed control

- machine vision for weed control

1. Introduction

Weeds constitute one of the most devastating constraints for crop production, and efficient weed control is a prerequisite for increasing crop yield and food production for a growing world population [1]. However, weed control may negatively affect the environment [2]. The application of herbicides may pollute the environment because, in most cases, only a tiny proportion of the applied chemicals hits the targets, while most of the herbicide hits the ground and part of it may drift away [2,3]. Mechanical weed control may result in erosion and harm beneficial organisms such as earthworms in the soil and spiders on the soil surface [4,5]. Other weed control methods have their own disadvantages and often affect the environment negatively. Sustainable weed control methods need to be designed to affect only the weed plants and to interfere as little as possible with the surroundings. Weed control could be improved and made more sustainable if weeds were identified and located in real time before any control method is applied.

2. Datasets and Image Pre-Processing

2.1. Datasets for Training Neural Networks

A dataset is needed to train neural networks, and annotating its images is one of the main tasks in developing a computer vision system. Neural networks can be trained on multi-dimensional data and have the potential to model and extract meaning from a wide range of input information, which lets them address complex and challenging image classification and object detection problems. However, image datasets intended for training neural networks should contain a significant number of images and enough variation within the object classes. When an object must be tracked with centimeter accuracy, the dataset must be annotated accordingly, which is highly labor-intensive in terms of time and effort. At the same time, whichever neural network architecture is used, it is advisable to initialize the network with weights from a model already trained on similar or related objects and images (i.e., the transfer learning technique). Transfer learning carries the knowledge (trained weights) of a model trained on a large dataset over to a new dataset with similar objects, or to a smaller training set, where those weights serve as the initialization.

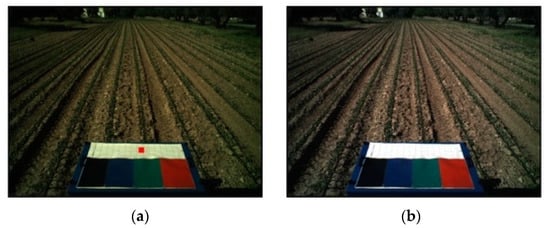

Image preprocessing has a broad scope, with many possible filters and many options for transforming an image. Dyrmann [7] presented a review of various filters for preprocessing but did not give enough examples with images. Preprocessing can also be used in real time. Pajares et al. [8] provided guidance on choosing a vision system for optimal performance under adverse outdoor conditions with large light variability, uneven terrain, and different plant growth conditions. Calibration was used for color balance, especially when the illumination changed (Figure 2). This kind of system can be used in conjunction with DL: because the DL model then only has to be trained for a single illumination condition, the number of images required to train the model is reduced.
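As a rough illustration of color balancing under changing illumination, the sketch below applies the classic grey-world assumption (the average color of a scene is neutral grey). This is a stand-in chosen for brevity, not the calibration procedure of Pajares et al. [8]; the function name `grey_world_balance` and the nested-list image layout are assumptions made for this example.

```python
def grey_world_balance(rgb):
    """Grey-world color balance (illustrative only).

    rgb is an H x W nested list of (r, g, b) tuples with values in 0..255.
    Each channel is scaled so that its mean equals the mean of all channels.
    """
    pixels = [p for row in rgb for p in row]
    n = len(pixels)
    # Mean intensity of each channel over the whole image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    target = sum(means) / 3
    # A channel that is entirely zero is left unchanged (gain 1.0).
    gains = [target / m if m > 0 else 1.0 for m in means]
    return [
        [tuple(min(255, round(p[c] * gains[c])) for c in range(3)) for p in row]
        for row in rgb
    ]


if __name__ == "__main__":
    reddish = [[(100, 50, 50), (200, 100, 100)]]  # image with a red cast
    print(grey_world_balance(reddish))
```

A fixed correction of this kind is cheap enough to run per frame, which is the property the text relies on when it suggests normalizing illumination before the images reach the DL model.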


2.2. Image Preprocessing

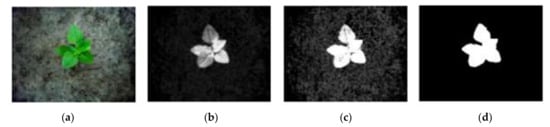

Image preprocessing (e.g., color space transform, rescaling, flipping, rotation, and translation) is a stage of image preparation that focuses on producing accurate data in the format needed for the efficient use of image processing algorithms. Its advantage is that these methods do not require high computing power and can be implemented in real time on single-board computers. Faisal et al. [6] presented a good example of a typical preprocessing pipeline, exploring the use of a support vector machine (SVM) and a Bayesian classifier as ML algorithms for classifying pigweed (Amaranthus retroflexus L.) in images. With sufficiently bright color contrast, this method accurately recognizes the position of the object (Figure 1).

Figure 1. Image processing of pigweed (A. retroflexus): (a) original image, (b) grey-scale image, (c) after sharpening, (d) with noise filter [6].
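The panel sequence in Figure 1 (grey-scale conversion, sharpening, noise filtering) can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation of Faisal et al. [6]: the 3 x 3 sharpening kernel, the 3 x 3 median filter, the BT.601 grey-scale weights, and all function names are assumptions chosen for this example.

```python
from statistics import median

# 3x3 sharpening kernel in row-major order (assumed for this sketch).
SHARPEN = [0, -1, 0, -1, 5, -1, 0, -1, 0]


def to_grey(rgb):
    """Convert an H x W image of (r, g, b) tuples to grey levels (BT.601 weights)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in rgb]


def _window(img, y, x):
    """Return the 3x3 neighbourhood of (y, x), replicating border pixels."""
    h, w = len(img), len(img[0])
    return [img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
            for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def sharpen(img):
    """Apply the sharpening kernel and clip the result to 0..255."""
    return [[min(max(sum(k * p for k, p in zip(SHARPEN, _window(img, y, x))), 0), 255)
             for x in range(len(img[0]))]
            for y in range(len(img))]


def median_filter(img):
    """Replace each pixel by the median of its 3x3 neighbourhood."""
    return [[int(median(_window(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]


def preprocess(rgb):
    """Grey-scale -> sharpen -> noise filter, following the order of Figure 1."""
    return median_filter(sharpen(to_grey(rgb)))
```

Each step touches only a fixed-size neighbourhood per pixel, which is why pipelines of this kind remain feasible in real time on single-board computers.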


2.3. Available Weed Detection Systems

The practical application of machine vision systems is closely related to robotics. Controlling weeds with robotic systems is a practice that has recently gained increasing interest. In robotic systems, the mechanical aspects usually require higher precision; hence, detecting weeds with machine vision becomes even more challenging. Qiu et al. [9] and Ren et al. [10] used popular neural network models, such as R-CNN and VGG16, for weed detection with a robotic system. Asha et al. [11] created a robot for weed control but, after deployment in the field, recognized how challenging accurate weed detection is because of the minor differences in size between the weed and the crop (Figure 3). When the weed was close to a cultivated crop and its leaves (e.g., when they covered each other), the robot had a problem identifying the weeds. After color segmentation, the weed was perceived as a single object together with the crop. Chang et al. [13] and Shinde et al. [14] used neural networks for image segmentation and the subsequent identification of weeds by finding the shapes that represent the weed's structure (Figure 4).

3. Deep Learning for Weed Detection


3.1. The Curse of Dense Scenes in Agricultural Fields

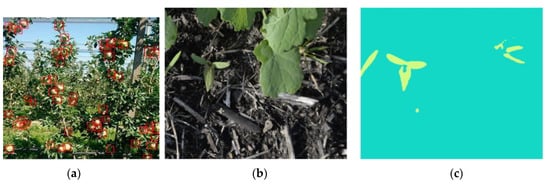

Dense scenes are those with dense vegetation, including both crops and weeds, and heavy occlusion between weeds and crops. Zhang et al. [15] presented a review of DL for dense scenes in agriculture. The purpose of this study is illustrated in Figure 5a, which shows a cluster of fruits on a dense background (including trees from other rows). The authors give several general recommendations, such as increasing the dataset, generating synthetic data, and tuning hyper-parameters. They concluded that it is advisable to continue increasing the depth of neural networks to solve problems with dense scenes. Assad et al. [16] focused on weeds (Figure 5b,c). The authors used semantic segmentation with a ResNet-50-based SegNet model and attained a Frequency-Weighted Intersection Over Union (FWIOU) value of 0.9869. However, they did not consider situations where the weed and the crop come into contact; in such cases, this method will not be efficient.
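For reference, the frequency-weighted IoU reported above is commonly defined from a pixel-level confusion matrix: the IoU of each class is weighted by the share of ground-truth pixels belonging to that class. The sketch below follows that common definition; it is not taken from Assad et al. [16], and the function names and the nested-list label maps are assumptions made for this example.

```python
def confusion_matrix(truth, pred, n_classes):
    """conf[i][j] = number of pixels of true class i predicted as class j."""
    conf = [[0] * n_classes for _ in range(n_classes)]
    for t_row, p_row in zip(truth, pred):
        for t, p in zip(t_row, p_row):
            conf[t][p] += 1
    return conf


def frequency_weighted_iou(conf):
    """Sum over classes of (class pixel frequency) * (class IoU)."""
    total = sum(sum(row) for row in conf)
    score = 0.0
    for i, row in enumerate(conf):
        true_i = sum(row)                    # ground-truth pixels of class i
        pred_i = sum(r[i] for r in conf)     # pixels predicted as class i
        union = true_i + pred_i - conf[i][i]
        if true_i and union:                 # skip classes absent from the ground truth
            score += (true_i / total) * (conf[i][i] / union)
    return score


if __name__ == "__main__":
    truth = [[0, 0], [1, 1]]  # 0 = crop, 1 = weed (hypothetical labels)
    pred = [[0, 1], [1, 1]]
    print(frequency_weighted_iou(confusion_matrix(truth, pred, 2)))
```

Because the weights follow class frequency, a dominant class such as soil or crop can keep the score high even when a small weed class is segmented poorly, so a high FWIOU alone says little about cases where weeds and crops touch.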

Proper annotation of the training dataset is crucial for detecting objects precisely. Bounding box-based detection methods rely on IoU (intersection over union) and NMS (non-maximum suppression) thresholds during model training and inference. Some segmentation methods can be used as well, together with blob detection and thresholding techniques, to identify individual objects in clusters. Identifying individual objects in clusters is a problem in various fields of activity, and much research has been devoted to solving it. Shawky et al. [17] and Zhuoyao et al. [18] considered the problem of dense scenes and concluded that each situation is unique and that it is advisable to combine various convolutional neural networks with each other. However, the result of such a combination is very difficult to predict in advance, and the approach may take a lot of time. Dyrmann et al. [7] considered an object as a combination of different objects, depending on their position, which made the model a little heavier but, at the same time, increased the tracking accuracy. This method is one of the most promising.
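To make the roles of IoU and NMS concrete, the following is a minimal sketch of both for axis-aligned boxes. It shows the standard greedy form of NMS, not the procedure of any particular detector cited here; the box format `(x1, y1, x2, y2)`, the function names, and the default threshold of 0.5 are assumptions made for this example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of the boxes to keep."""
    # Visit boxes from the highest to the lowest confidence score.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))        # overlapping second box is suppressed
    print(nms(boxes, scores, 0.7))   # a looser threshold keeps all three
```

The threshold matters in dense scenes: two neighbouring plants can produce heavily overlapping boxes, so a low NMS threshold may suppress a true detection, while a high one leaves duplicate boxes on a single plant.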