The advances in remote sensing technologies, and hence the fast-growing volume of timely data available at the global scale, offer new opportunities for a variety of applications. Deep learning, having been remarkably successful in dealing with Big Data, is a strong candidate for exploiting the potential of such complex, massive data. However, remote sensing poses specific challenges related to ground truth, resolution, and the nature of the data, which require further effort and adaptation of deep learning techniques.

- remote sensing data

- LULC classification

- machine learning

- deep learning

- convolutional neural networks

- end-to-end learning

1. Introduction

The advances in remote sensing technologies and the resulting significant improvements in the spatial, spectral, and temporal resolution of remotely sensed data are dramatically changing the way we observe the Earth. So are the extraordinary developments in Information and Communication Technologies (ICT) in data storage, transmission, integration, and management capacities. These developments have increased the availability of data and created an unprecedented source of information that gives us a more comprehensive picture of the state of our planet. Such a unique, global, and large dataset offers entirely new opportunities for a variety of applications, but these opportunities come with new challenges for scientists [1].

.[2]

[3]

[4]

[5]

[6], but such monitoring is very expensive and labour-intensive, and it is mostly limited to developed countries. High-resolution remote sensing data available on a continuous temporal basis can make it far more effective to automatically extract on-Earth objects and land covers, map them, and monitor their changes.

Remote sensing data exhibit the characteristics commonly used to describe Big Data: Volume, Variety, Velocity, and Veracity [7]. Special tools and methods are required to mine and extract meaningful information from such data efficiently and to manage their volume. In the last decade, deep learning algorithms have shown promising performance in analysing large datasets. They do so by performing complex abstractions over the data through a hierarchical learning process. Deep learning has been hugely successful with conventional data types (e.g., greyscale and colour images, audio, video, and text). However, remote sensing data still pose new challenges because of their unique characteristics.

[8]

[8] indicate that the expert-free use of deep learning techniques is still questioned. Further challenges include limited resolution, high dimensionality, redundancy within the data, atmospheric and acquisition noise, calibration of spectral bands, and many other source-specific issues. Understanding how deep learning can be advantageous and effective in tackling these challenges requires a deeper look into the current state of the art. Such a look shows how studies have customised and adapted these techniques to fit the remote sensing context. A comprehensive overview of the state of the art of deep learning for remote sensing data is provided by [8].

2. Remote Sensing Data

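Remote sensing data are acquired by different types of sensors, including optical (panchromatic, multispectral, hyperspectral, and thermal) and Synthetic Aperture Radar (SAR) sensors. Among optical data, multispectral images (MSI) record the spectrum in a small number of broad, discrete bands. Hyperspectral images (HSI) instead record it in many narrow, contiguous bands.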

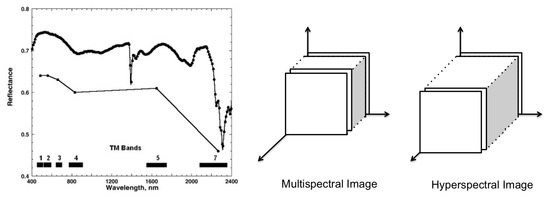

Figure 1. Left [9]; right: a schema of multispectral and hyperspectral images in the spatial-spectral domain.

[10]. The latter is attributed to three causes: the scattering of surrounding objects during the acquisition process, various atmospheric and geometric distortions, and the intra-class variability of similar objects.

Despite these differences in the nature of MSI and HSI, both share a similar 3D cube-shaped structure (Figure 1, right) and are mostly used for similar purposes. LULC classification/segmentation relies on two properties of on-ground regions and items: their morphological characteristics and their material differences. These can be retrieved from the spatial and the spectral information, respectively, and both kinds of information are available in MSI and HSI. The review in [11] covers methodologies for spectral-spatial information fusion in hyperspectral image classification only. In this review, we instead consider both data types as used in the literature for land cover classification with deep learning techniques, focusing on the spectral and/or spatial characteristics of land-cover-correlated pixels.
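The following minimal Python sketch makes the shared cube structure concrete. It stores an image as a rows x cols x bands cube, using nested lists so it needs no third-party libraries. It then shows where each kind of information lives:

- the spectral information is a pixel's vector of band values;
- the spatial information is a neighbourhood window in one band.

The image size, the band counts (4 for an MSI-like cube, 200 for an HSI-like cube) and all helper names are hypothetical, chosen only for illustration.

```python
# Illustrative sketch only: cube sizes, band counts and helper names are hypothetical.

def make_cube(rows, cols, bands, fill=0.0):
    """Return a rows x cols x bands cube as nested lists, indexed cube[r][c][b]."""
    return [[[fill for _ in range(bands)] for _ in range(cols)] for _ in range(rows)]


def shape(cube):
    """(rows, cols, bands) of a nested-list cube."""
    return (len(cube), len(cube[0]), len(cube[0][0]))


def pixel_spectrum(cube, r, c):
    """Spectral information: the vector of band values of a single pixel."""
    return list(cube[r][c])


def spatial_patch(cube, r, c, half, band):
    """Spatial information: a (2*half+1) x (2*half+1) window around (r, c) in one band.

    Pixels outside the image are skipped, so windows at the border are smaller.
    """
    rows, cols = len(cube), len(cube[0])
    return [
        [cube[i][j][band] for j in range(max(0, c - half), min(cols, c + half + 1))]
        for i in range(max(0, r - half), min(rows, r + half + 1))
    ]


msi = make_cube(8, 8, 4)    # MSI-like: a few broad, discrete bands (hypothetical count)
hsi = make_cube(8, 8, 200)  # HSI-like: many narrow, contiguous bands (hypothetical count)

print(shape(msi), shape(hsi))          # (8, 8, 4) (8, 8, 200)
print(len(pixel_spectrum(hsi, 3, 3)))  # 200
patch = spatial_patch(msi, 0, 0, 1, band=2)
print(len(patch), len(patch[0]))       # 2 2 (corner pixel, clipped window)
```

Both data types share the same indexing scheme; only the length of the band axis differs. This is why the same spectral, spatial, or joint spectral-spatial methods can be applied to both.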

3. Machine Learning for Land Use and Land Cover Classification of Remote Sensing Data

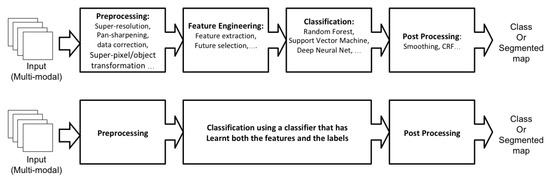

Conventional supervised Land Use and Land Cover (LULC) classification pipelines in machine learning usually include four major steps: (1) pre-processing, (2) feature engineering, (3) classifier training, and (4) post-processing (Figure 2, top). Each of these stages may be composed of a set of sub-tasks. A good breakdown of the whole process into its sub-tasks, with an explicit statement of their assumptions, helps to define standalone sub-problems. These can be studied independently, and their solutions or models can be incorporated into a LULC pipeline to accomplish the targeted classification/segmentation. In recent years, deep learning has grown popular as a very powerful tool for solving different types of AI problems. As a result, we have witnessed a surge in research employing deep learning techniques to tackle these sub-problems.

Figure 2. The machine learning classification frameworks. The upper one shows the common steps of the conventional approaches, and the lower one shows the modern end-to-end structure. In the end-to-end deep learning structure, the feature engineering is replaced with feature learning as a part of the classifier training phase.
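The four conventional stages can be sketched as a toy Python pipeline. Every concrete choice here is an illustrative assumption, not a method from the cited literature:

- clipping band values to [0, 1] stands in for pre-processing;
- NDVI, computed from hypothetical (red, NIR) pixel values, stands in for a hand-crafted feature;
- a nearest-centroid rule stands in for the classifier;
- a 3x3 majority filter stands in for post-processing.

```python
from collections import Counter


def preprocess(image):
    """(1) Pre-processing stand-in: clip every band value to the valid range [0, 1]."""
    return [[tuple(min(max(v, 0.0), 1.0) for v in px) for px in row] for row in image]


def ndvi(px):
    """(2) Hand-crafted feature stand-in: NDVI from a hypothetical (red, nir) pixel."""
    red, nir = px
    return (nir - red) / (nir + red) if nir + red > 0 else 0.0


def extract_features(image):
    return [[ndvi(px) for px in row] for row in image]


def train_nearest_centroid(features, labels):
    """(3) Classifier training stand-in: mean feature value per class."""
    sums, counts = {}, {}
    for f, y in zip(features, labels):
        sums[y] = sums.get(y, 0.0) + f
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, f):
    """Assign the class whose centroid is closest to feature value f."""
    return min(centroids, key=lambda y: abs(f - centroids[y]))


def majority_filter(label_map):
    """(4) Post-processing stand-in: most common label in each pixel's 3x3 window."""
    rows, cols = len(label_map), len(label_map[0])
    out = [row[:] for row in label_map]
    for r in range(rows):
        for c in range(cols):
            window = [label_map[i][j]
                      for i in range(max(0, r - 1), min(rows, r + 2))
                      for j in range(max(0, c - 1), min(cols, c + 2))]
            out[r][c] = Counter(window).most_common(1)[0][0]
    return out


# Hypothetical labelled training pixels and a 3x3 test image of (red, nir) values.
train_px = [(0.05, 0.50), (0.04, 0.40), (0.05, 0.02), (0.06, 0.03)]
train_y = ["veg", "veg", "water", "water"]
image = [
    [(0.05, 0.50), (0.06, 0.45), (0.04, 0.55)],
    [(0.05, 0.48), (0.10, 0.08), (0.05, 0.52)],
    [(0.05, 0.50), (0.06, 0.47), (0.05, 1.20)],  # 1.20 is out of range and gets clipped
]

centroids = train_nearest_centroid(extract_features(preprocess([train_px]))[0], train_y)
raw_map = [[predict(centroids, f) for f in row] for row in extract_features(preprocess(image))]
final_map = majority_filter(raw_map)
print(raw_map[1][1], final_map[1][1])  # water veg
```

The classifier labels the ambiguous centre pixel as water, and the majority filter then relabels it. Removing isolated errors of this kind is what post-processing is for. In the end-to-end structure, the hand-written `ndvi` step would instead be replaced by features learned during classifier training.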

With the increased computational capacity of the new generation of processors, the end-to-end deep learning structure has emerged over the last decade. In this structure, feature engineering is replaced with feature learning as a part of the classifier training phase (Figure 2, bottom). Instead of defining the inner steps of the feature engineering phase, the end-to-end architecture generalises model generation so that feature learning becomes part of it. This improved capacity of deep learning has promoted its use in many research works that apply well-known, off-the-shelf, end-to-end models directly to new data, such as remote sensing data. However, the end-to-end use of deep learning for LULC classification still has open problems, complexities, and efficiency issues. These encourage us to adopt a new approach to investigating the state of the art in deep learning for LULC classification.

3.1. Deep learning architecture

[12]

[13]

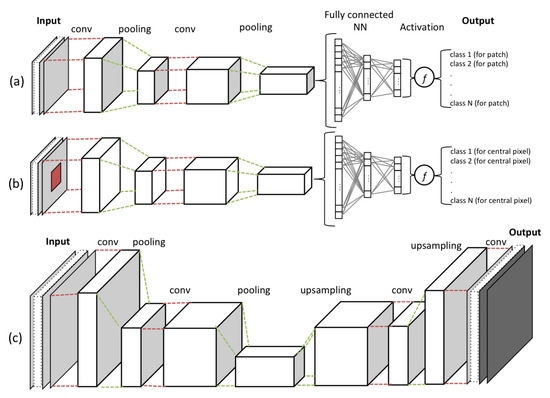

[13]. Each CNN, as shown in Figure 3, contains multiple stages of convolution and pooling, creating a hierarchy of dependent feature maps.

Figure 3. Convolutional neural network structures (panels a, b, and c); (c) an image reconstructive model. The resulting cubes after each layer of convolution and pooling are called feature maps.
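The hierarchy of feature maps can be traced with the usual output-size rule for a convolution or pooling window. Along each spatial axis, out = floor((n + 2p - k) / s) + 1, for input size n, kernel size k, padding p, and stride s. The sketch below applies this rule to a 64 x 64 image with 4 bands, passed through two convolution stages and two pooling stages. The layer sizes and channel counts are assumptions for illustration only.

```python
def conv_out(n, kernel, stride=1, padding=0):
    """Output size along one spatial axis for a convolution or pooling window."""
    return (n + 2 * padding - kernel) // stride + 1


def feature_map_shapes(height, width, channels, stages):
    """Shapes (H, W, C) after each stage; pooling keeps the number of channels."""
    shapes = [(height, width, channels)]
    for kind, kernel, stride, padding, out_channels in stages:
        height = conv_out(height, kernel, stride, padding)
        width = conv_out(width, kernel, stride, padding)
        if kind == "conv":
            channels = out_channels  # one output feature map per filter
        shapes.append((height, width, channels))
    return shapes


# Hypothetical stack: (kind, kernel, stride, padding, output channels)
stages = [
    ("conv", 3, 1, 1, 16),
    ("pool", 2, 2, 0, None),
    ("conv", 3, 1, 1, 32),
    ("pool", 2, 2, 0, None),
]
for s in feature_map_shapes(64, 64, 4, stages):
    print(s)
# prints, one per line: (64, 64, 4) (64, 64, 16) (32, 32, 16) (32, 32, 32) (16, 16, 32)
```

As the stages deepen, each pooling step shrinks the spatial extent while the convolutions increase the number of feature maps. This trade-off is typical of the hierarchy described above.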

At each convolution layer, the feature maps are computed as the weighted sum of patches of the previous layer's feature maps, using a filter with a stack of fixed-size kernels. The result is then passed through a non-linearity using an activation function (e.g., ReLU). In this way, convolution layers detect local correlations within the kernel size. Because the same weights are shared across positions, they detect a pattern regardless of its location within the input data array. The pooling layer reduces the dimension of the resulting feature map by computing the maximum or the average of neighbouring units, which creates invariance to small shifts and distortions. Finally, the stages of convolution and pooling layers are followed by a fully connected neural network and an activation function (e.g., softmax), which are in charge of the classification task within the network.
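The following minimal, dependency-free sketch implements one convolution stage as described above:

- each output value is a weighted sum over a patch of every input channel, with one kernel per channel (the filter's "stack of kernels");
- ReLU is then applied;
- 2x2 max pooling follows.

Like most deep learning libraries, it computes cross-correlation, meaning the kernel is not flipped. The two-band input and the kernel values are made up for the example.

```python
def conv2d(channels, kernels, bias=0.0):
    """One output feature map: 'valid' cross-correlation summed over input channels.

    channels: list of 2D input maps (one per band/channel), all the same size.
    kernels:  list of 2D kernels, one per input channel (the filter's kernel stack).
    """
    kh, kw = len(kernels[0]), len(kernels[0][0])
    h, w = len(channels[0]), len(channels[0][0])
    return [
        [
            bias + sum(
                ch[i + u][j + v] * k[u][v]
                for ch, k in zip(channels, kernels)
                for u in range(kh)
                for v in range(kw)
            )
            for j in range(w - kw + 1)
        ]
        for i in range(h - kh + 1)
    ]


def relu(fmap):
    """Element-wise non-linearity max(0, x)."""
    return [[max(0.0, x) for x in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; incomplete windows at the border are dropped."""
    return [
        [
            max(fmap[i + u][j + v] for u in range(size) for v in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


band0 = [
    [1, 0, 2, 1, 0],
    [0, 3, 1, 0, 2],
    [2, 1, 0, 4, 1],
    [0, 0, 1, 2, 3],
    [1, 2, 0, 1, 0],
]
band1 = [[1] * 5 for _ in range(5)]
filt = [
    [[1, 0], [0, 1]],    # kernel applied to band 0
    [[-1, 0], [0, -1]],  # kernel applied to band 1
]

fmap = conv2d([band0, band1], filt)  # 4 x 4 feature map
pooled = max_pool(relu(fmap))        # 2 x 2 after ReLU and pooling
print(pooled)  # [[2.0, 3.0], [0.0, 5.0]]
```

A real convolution layer applies many such filters in parallel, producing one feature map per filter. Frameworks also vectorise these loops rather than computing them element by element.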

The network is trained by minimising a loss function (e.g., Mean Squared Error, Cross-Entropy, or Hinge loss). In CNNs, the learnable parameters are the weights of the convolution layer filters and of the connections between the neurons in the fully connected network. Therefore, the optimiser (e.g., Stochastic Gradient Descent, RMSprop, or Adam) does more than train the classifier. It also learns the data features by optimising the convolution layer parameters.
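The following sketch illustrates this last point with toy data. It first writes out the three losses named above. It then trains a tiny two-layer model (a learnable spectral filter, then ReLU, then a linear output) with plain per-sample SGD on the squared error. As a result, one optimiser updates both the "feature" weights `w` and the "classifier" weights `v` and `b`. The model, data, learning rate, and epoch count are hypothetical. Real CNNs compute these gradients with automatic differentiation rather than by hand.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error over paired targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_entropy(probs, target):
    """Negative log of the probability assigned to the true class index."""
    return -math.log(probs[target])


def hinge(score, label):
    """Hinge loss for a raw score and a label in {-1, +1}."""
    return max(0.0, 1.0 - label * score)


def forward(x, w, v, b):
    """Hypothetical toy model: learnable spectral filter -> ReLU -> linear output."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    h = max(0.0, z)
    return z, h, v * h + b


def train(data, w, v, b, lr=0.05, epochs=300):
    """Per-sample SGD on (y_hat - y)^2; updates filter weights w and output weights v, b."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            z, h, y_hat = forward(x, w, v, b)
            g = 2.0 * (y_hat - y)               # dL/dy_hat
            grad_v, grad_b = g * h, g           # output-layer gradients
            grad_z = g * v if z > 0 else 0.0    # back-propagate through ReLU
            w = [wi - lr * grad_z * xi for wi, xi in zip(w, x)]  # "feature" update
            v -= lr * grad_v                    # "classifier" updates
            b -= lr * grad_b
    return w, v, b


def dataset_loss(data, w, v, b):
    return mse([y for _, y in data], [forward(x, w, v, b)[2] for x, _ in data])


# Hypothetical 3-band pixel spectra with binary targets.
data = [([0.1, 0.5, 0.3], 1.0), ([0.4, 0.1, 0.2], 0.0),
        ([0.2, 0.6, 0.4], 1.0), ([0.5, 0.2, 0.1], 0.0)]
w0, v0, b0 = [0.1, 0.1, 0.1], 0.5, 0.0
before = dataset_loss(data, w0, v0, b0)
w, v, b = train(data, w0, v0, b0)
after = dataset_loss(data, w, v, b)
print(f"loss before: {before:.4f}, after: {after:.4f}")
```

Swapping `mse` for `cross_entropy` or `hinge` would change only the first gradient `g`. The rest of the backward pass, and hence the joint learning of features and classifier, stays the same.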

3.2. End-to-end deep learning challenges

[14]

3.3. Four-stage machine learning

4. Conclusion

The potential and challenges of employing deep learning on remote sensing data are briefly addressed, and the need for a deeper understanding of machine learning as a complex problem is underlined. A complete study that discusses and reviews the state of the art in the field is provided in [19]. That study also presents the promising areas in which deep learning could have high potential in the future.

References

- Towards a European AI4EO R&I Agenda. ESA. Retrieved 2020-08-17.

- Tim Newbold; Lawrence N. Hudson; Samantha L. L. Hill; Sara Contu; Igor Lysenko; Rebecca A. Senior; Luca Börger; Dominic J. Bennett; Argyrios Choimes; Ben Collen; et al. Global effects of land use on local terrestrial biodiversity. Nature 2015, 520, 45-50, 10.1038/nature14324.

- Peter M. Vitousek; Jane Lubchenco; Jerry M. Melillo; Harold A. Mooney; Human Domination of Earth's Ecosystems. Science 1997, 277, 494-499, 10.1126/science.277.5325.494.

- Johannes Feddema; Keith W. Oleson; G. B. Bonan; Linda O. Mearns; Lawrence E. Buja; Gerald A. Meehl; Warren M. Washington; The Importance of Land-Cover Change in Simulating Future Climates. Science 2005, 310, 1674-1678, 10.1126/science.1118160.

- Turner, B. L.; Moss, R. H.; Skole, D. L. Relating Land Use and Global Land-Cover Change; IGBP Report 24, HDP Report 5; International Geosphere-Biosphere Programme: Stockholm, Sweden, 1993.

- United Nations Office for Disaster Risk Reduction. Sendai framework for disaster risk reduction 2015–2030. In Proceedings of the 3rd United Nations World Conference on Disaster Risk Reduction (WCDRR), Sendai, Japan, 14–18 March 2015; pp. 14–18.

- Zikopoulos, Paul; Eaton, Chris. Understanding Big Data: Analytics for Enterprise Class Hadoop and Streaming Data; McGraw-Hill Osborne Media: New York, NY, USA, 2011.

- Xiao Xiang Zhu; Devis Tuia; Lichao Mou; Gui-Song Xia; Liangpei Zhang; Feng Xu; Friedrich Fraundorfer; Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geoscience and Remote Sensing Magazine 2017, 5, 8-36, 10.1109/mgrs.2017.2762307.

- Alexander F.H. Goetz; Three decades of hyperspectral remote sensing of the Earth: A personal view. Remote Sensing of Environment 2009, 113, S5-S16, 10.1016/j.rse.2007.12.014.

- Pedram Ghamisi; Emmanuel Maggiori; Shutao Li; Roberto Souza; Yuliya Tarabalka; Gabriele Moser; Andrea De Giorgi; Leyuan Fang; Yushi Chen; Mingmin Chi; et al. New Frontiers in Spectral-Spatial Hyperspectral Image Classification: The Latest Advances Based on Mathematical Morphology, Markov Random Fields, Segmentation, Sparse Representation, and Deep Learning. IEEE Geoscience and Remote Sensing Magazine 2018, 6, 10-43, 10.1109/mgrs.2018.2854840.

- Maryam Imani; Hassan Ghassemian; An overview on spectral and spatial information fusion for hyperspectral image classification: Current trends and challenges. Information Fusion 2020, 59, 59-83, 10.1016/j.inffus.2020.01.007.

- Removing the Hunch in Data Science with AI-Based Automated Feature Engineering. IBM. Retrieved 2020-08-17.

- Yann A. LeCun; Yoshua Bengio; Geoffrey Hinton; Deep learning. Nature 2015, 521, 436-444, 10.1038/nature14539.

- David H. Wolpert; The Lack of A Priori Distinctions Between Learning Algorithms. Neural Computation 1996, 8, 1341-1390, 10.1162/neco.1996.8.7.1341.

- Zhang, Chiyuan; Bengio, Samy; Hardt, Moritz; Recht, Benjamin; Vinyals, Oriol; Understanding deep learning requires rethinking generalization. arXiv 2016, arXiv:1611.03530.

- Kawaguchi, Kenji; Kaelbling, Leslie Pack; Bengio, Yoshua; Generalization in deep learning. arXiv 2017, arXiv:1710.05468.

- Andrew M Saxe; Yamini Bansal; Joel Dapello; Madhu Advani; Artemy Kolchinsky; Brendan D Tracey; David D Cox; On the information bottleneck theory of deep learning. Journal of Statistical Mechanics: Theory and Experiment 2019, 2019, 124020, 10.1088/1742-5468/ab3985.

- Dinh, Laurent; Pascanu, Razvan; Bengio, Samy; Bengio, Yoshua; Sharp Minima Can Generalize For Deep Nets. In Proceedings of the 34th International Conference on Machine Learning (ICML), Sydney, Australia, 2017; pp. 1019–1028.

- Ava Vali; Sara Comai; Matteo Matteucci; Deep Learning for Land Use and Land Cover Classification based on Hyperspectral and Multispectral Earth Observation Data: A Review. Remote Sensing 2020, 12, 2495, 10.3390/rs12152495.