Nasopharyngeal carcinoma (NPC) is one of the most common malignant tumours of the head and neck, and improving the efficiency of its diagnosis and treatment is an important goal. As artificial intelligence (AI) has been increasingly combined with medical imaging in recent years, a growing number of studies have applied AI tools, especially radiomics and artificial neural network methods, to the image analysis of NPC.

- nasopharyngeal carcinoma

- deep learning

- radiomics

- imaging

1. Introduction

2. Pipeline of Radiomics

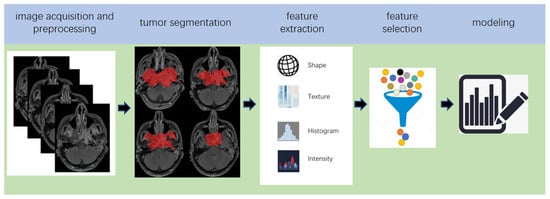

Radiomics, which was first proposed by Lambin in 2012 [7], is a relatively ‘young’ concept and is considered a natural extension of computer-aided diagnosis and detection systems [8]. It converts imaging data into a high-dimensional mineable feature space using a large number of automatically extracted data-characterization algorithms to reveal tumour features that may not be recognized by the naked eye and to quantitatively describe the tumour phenotype [9][10][11][12]. These extracted features are called radiomic features and include first-order statistics, intensity histograms, shape- and size-based features, texture-based features, and wavelet features [13]. Conceptually, radiomics belongs to the field of machine learning, although human participation is needed. The basic hypothesis of radiomics is that a descriptive model built from medical imaging data, sometimes supplemented by biological and/or medical data, can provide predictions of prognosis or diagnosis [14]. A radiomics study can be structured in five steps: data acquisition and pre-processing, tumour segmentation, feature extraction, feature selection, and modelling [15][16] (Figure 2).
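To make the feature extraction step more concrete, the following is a minimal sketch of how a few first-order radiomic features could be computed from the voxel intensities of one segmented region. The function name `first_order_features`, the equal-width binning, and the choice of features are illustrative assumptions, not the procedure of any study cited here.

```python
import math
from collections import Counter


def first_order_features(intensities, n_bins=8):
    """Compute a few first-order statistics for the voxel intensities of one ROI."""
    n = len(intensities)
    mean = sum(intensities) / n
    variance = sum((v - mean) ** 2 for v in intensities) / n
    std = math.sqrt(variance)
    # Skewness is the standardised third central moment (0 for a flat ROI).
    skewness = (sum((v - mean) ** 3 for v in intensities) / n) / std ** 3 if std > 0 else 0.0

    # Discretise intensities into equal-width bins to build the histogram.
    lo, hi = min(intensities), max(intensities)
    width = (hi - lo) / n_bins or 1.0
    bins = Counter(min(int((v - lo) / width), n_bins - 1) for v in intensities)
    probs = [count / n for count in bins.values()]
    # Shannon entropy of the intensity histogram, in bits.
    entropy = -sum(p * math.log2(p) for p in probs)

    return {"mean": mean, "variance": variance, "skewness": skewness, "entropy": entropy}


# Toy ROI: half of the voxels are dark, half are bright.
features = first_order_features([1, 1, 1, 1, 3, 3, 3, 3], n_bins=2)
```

In a full pipeline, values such as these would be computed for every patient and passed on to feature selection and modelling.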

3. The Principle of DL

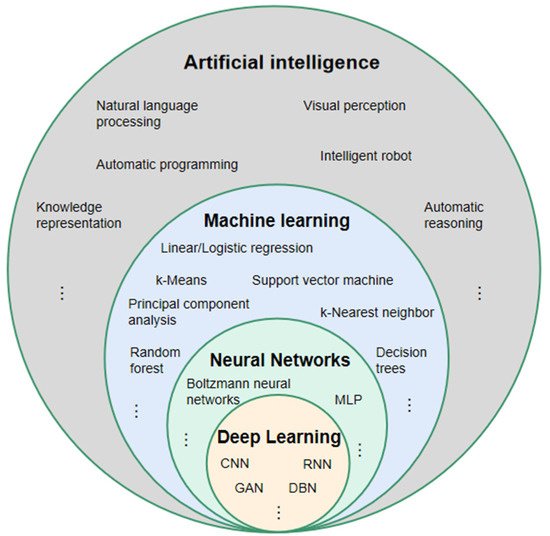

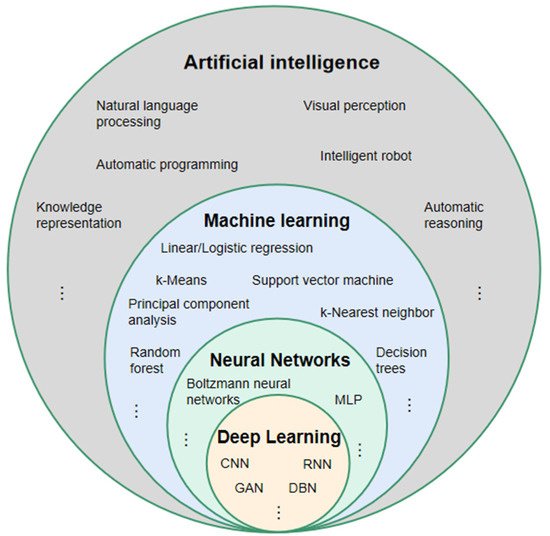

For a better understanding of DL, it is necessary to clarify two terms, AI and machine learning, which often accompany DL and are confused with it [27].

5. Studies Based on Radiomics

5.1. Prognosis Prediction

Prognosis prediction includes tumour risk stratification and recurrence/progression prediction. Among the 31 radiomics-based studies retrieved, 17 were on this topic.

| Author, Year, Reference | Image | Sample Size (Patients) | Feature Selection | Modeling | Model Evaluation |
|---|---|---|---|---|---|
| Zhang, B. (2017) [47] | MRI | 110 | L1-LOG, L1-SVM, RF, DC, EN-LOG, SIS | L2-LOG, KSVM, AdaBoost, LSVM, RF, Nnet, KNN, LDA, NB | AUC 0.846 |
| Zhang, B. (2017) [48] | MRI | 113 | LASSO | RS | AUC 0.886 |
| Ouyang, F.S. (2017) [49] | MRI | 100 | LASSO | RS | HR 7.28 |
| Lv, W. (2019) [50] | PET/CT | 128 | Univariate analysis with FDR, SC > 0.8 | CR | C-index 0.77 |
| Zhuo, E.H. (2019) [51] | MRI | 658 | Entropy-based consensus clustering method | SVM | C-index 0.814 |
| Zhang, L.L. (2019) [52] | MRI | 737 | RFE | CR and nomogram | C-index 0.73 |
| Yang, K. (2019) [53] | MRI | 224 | LASSO | CR and nomogram | C-index 0.811 |
| Ming, X. (2019) [54] | MRI | 303 | Non-negative matrix factorization | Chi-squared test, nomogram | C-index 0.845 |
| Zhang, L. (2019) [55] | MRI | 140 | LR-RFE | CR and nomogram | C-index 0.74 |
| Mao, J. (2019) [56] | MRI | 79 | Univariate analyses | CR | AUC 0.825 |
| Du, R. (2019) [57] | MRI | 277 | Hierarchal clustering analysis, PR | SVM | AUC 0.8 |
| Xu, H. (2020) [58] | PET/CT | 128 | Univariate CR, PR > 0.8 | CR | C-index 0.69 |
| Shen, H. (2020) [59] | MRI | 327 | LASSO, RFE | CR, RS | C-index 0.874 |
| Bologna, M. (2020) [60] | MRI | 136 | Intra-class correlation coefficient, SCC > 0.85 | CR | C-index 0.72 |
| Feng, Q. (2020) [61] | PET/MR | 100 | LASSO | CR | AUC 0.85 |
| Peng, L. (2021) [62] | PET/CT | 85 | W-test, Chi-square test, PR, RA | SFFS coupled with SVM | AUC 0.829 |

5.2. Assessment of Tumour Metastasis

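The authors in [63] developed an MRI-based radiomics nomogram for the differential diagnosis of cervical spine lesions and metastasis after radiotherapy. A total of 279 radiomic features were extracted from the enhanced T1-weighted MRI, and eight radiomic features were selected using LASSO to establish a classifier model that obtained an AUC of 0.72.

In [64], the authors explored whether there was a difference between radiomic features derived from recurrent and non-recurrent regions within the tumour. Seven histogram features and 40 texture features were extracted from the MRI images of 14 patients with T4NxM0 NPC. The authors reported that seven features were significantly different between the recurrent and non-recurrent regions.

In 2021, the study in [62], which was introduced in the section on prognosis prediction, also established a model for the assessment of tumour metastasis. The best AUC for predicting tumour metastasis was 0.829 (Table 2).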


Table 2. Studies for assessing tumour metastasis using radiomics.

| Author, Year, Reference | Image | Sample Size | Feature Selection | Modeling | Model Evaluation |
|---|---|---|---|---|---|
| Zhang, L. (2019) [65] | MRI | 176 | LASSO | LR | AUC 0.792 |
| Zhong, X. (2020) [63] | MRI | 46 | LASSO | Nomogram | AUC 0.72 |

5.3. Tumour Diagnosis

Lv [67] established a diagnostic model to distinguish NPC from chronic nasopharyngitis using logistic regression with leave-one-out cross-validation. A total of 57 radiological features were extracted from the PET/CT of 106 patients, and AUCs between 0.81 and 0.89 were reported.
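Leave-one-out cross-validation holds out each patient once, trains on all the others, and scores the prediction for the held-out case. The sketch below illustrates only that loop. To stay dependency-free it swaps the logistic regression for a hypothetical one-feature nearest-mean classifier (`nearest_mean_predict`), so it should not be read as the model used in [67].

```python
def nearest_mean_predict(train_x, train_y, x):
    """Stand-in classifier: assign x to the class whose training mean is closer."""
    means = {}
    for label in set(train_y):
        values = [v for v, y in zip(train_x, train_y) if y == label]
        means[label] = sum(values) / len(values)
    return min(means, key=lambda label: abs(x - means[label]))


def leave_one_out_accuracy(xs, ys):
    """Hold out each sample once, train on the rest, and score the held-out prediction."""
    correct = 0
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        if nearest_mean_predict(train_x, train_y, xs[i]) == ys[i]:
            correct += 1
    return correct / len(xs)


# Toy data: one feature value per patient, label 1 = NPC, 0 = nasopharyngitis.
feature_values = [1.0, 1.2, 0.8, 3.0, 3.1, 2.9]
labels = [0, 0, 0, 1, 1, 1]
accuracy = leave_one_out_accuracy(feature_values, labels)
```

With small cohorts this scheme uses almost all data for training in every round, which is presumably why it suits a study of about one hundred patients.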

In [68], 76 patients were enrolled, including 41 with local recurrence and 35 with inflammation, as confirmed by pathology. A total of 487 radiomic features were extracted from the PET images. The performance was investigated for 42 cross-combinations derived from six feature selection methods and seven classifiers. The authors concluded that diagnostic models based on radiomic features showed higher AUCs (0.867–0.892) than traditional clinical indicators (AUC = 0.817).
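The 42 cross-combinations follow directly from pairing each of the six feature selection methods with each of the seven classifiers. A minimal sketch of that enumeration is shown here; the selector and classifier names are placeholders, because the methods are not listed in this section, and `evaluate` is a hypothetical hook rather than a real training routine.

```python
from itertools import product

# Placeholder names: the six selectors and seven classifiers are not listed in the text.
selectors = [f"selector_{i}" for i in range(1, 7)]
classifiers = [f"classifier_{j}" for j in range(1, 8)]


def evaluate(selector, classifier):
    """Hypothetical hook: would run selection and training and return a validation AUC."""
    return None  # no real data here, so nothing is computed


combinations = list(product(selectors, classifiers))
results = {combo: evaluate(*combo) for combo in combinations}
print(len(combinations))  # 6 x 7 = 42
```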

5.4. Prediction of Therapeutic Effect

In [69], 108 patients with advanced NPC were included to establish the dataset. The ANOVA/Mann–Whitney U test, correlation analysis, and LASSO were used to select texture features, and multivariate logistic regression was used to establish a predictive model for the early response to neoadjuvant chemotherapy. Finally, an AUC of 0.905 was obtained for the validation cohort.
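Most studies in this review report the area under the ROC curve (AUC). As a reminder of what that number measures, the sketch below computes AUC as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The function name `roc_auc` and the toy data are illustrative assumptions.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random negative."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Toy example: 1 = responder, 0 = non-responder, with model scores.
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])  # 3 of 4 pairs ranked correctly
```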

5.5. Predicting Complications

In [70], a radiomics model for predicting early acute xerostomia during radiation therapy was established based on CT images. Ridge CV and recursive feature elimination were used for feature selection, whereas linear regression was used for modelling. However, the study’s test cohort included only four patients with NPC, so the reported precision of 0.922 rests on limited evidence.

The authors in [71] established three radiomics models for the early diagnosis of radiation-induced temporal lobe injury based on the MRIs of 242 patients with NPC. Unlike the other studies reviewed here, feature selection was performed with the Relief algorithm. The random forest algorithm was used to build the three early diagnosis models, whose AUCs in the test cohort were 0.830, 0.773, and 0.716, respectively.
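Because Relief is less common than LASSO in these studies, a minimal sketch of the basic two-class version may help. It raises the weight of a feature when an instance differs from its nearest neighbour of the other class (nearest miss) and lowers it when the instance differs from its nearest neighbour of the same class (nearest hit). This variant visits every instance instead of a random sample and assumes at least two instances per class; it is an illustration, not the implementation used in [71].

```python
def relief_weights(X, y):
    """Basic two-class Relief: one weight per feature, higher means more relevant."""
    n, d = len(X), len(X[0])
    # Scale differences by each feature's range so weights are comparable.
    ranges = [max(row[f] for row in X) - min(row[f] for row in X) or 1.0 for f in range(d)]

    def diff(a, b, f):
        return abs(a[f] - b[f]) / ranges[f]

    def distance(a, b):
        return sum(diff(a, b, f) for f in range(d))

    weights = [0.0] * d
    for i in range(n):
        hits = [j for j in range(n) if j != i and y[j] == y[i]]
        misses = [j for j in range(n) if y[j] != y[i]]
        hit = min(hits, key=lambda j: distance(X[i], X[j]))
        miss = min(misses, key=lambda j: distance(X[i], X[j]))
        for f in range(d):
            # Reward separation from the nearest miss, penalise spread within the class.
            weights[f] += (diff(X[i], X[miss], f) - diff(X[i], X[hit], f)) / n
    return weights


# Toy data: feature 0 separates the classes, feature 1 is noise.
X = [[0.0, 0.5], [0.1, 0.9], [0.9, 0.4], [1.0, 1.0]]
y = [0, 0, 1, 1]
weights = relief_weights(X, y)
```

Features would then be ranked by weight and the top ones passed to the classifier.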

6. Studies Based on DL

6.1. Prognosis Prediction

Yang [72] established a weakly-supervised, deep-learning network using an improved residual network (ResNet) with three input channels to achieve automated T staging of NPC. The images of multiple tumour layers of patients were labelled uniformly. The model output a predicted T-score for each slice and then selected the highest T-score slice for each patient to retrain the model to update the network weights. The accuracy of the model in the validation set was 75.59%, and the AUC was 0.943.
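The slice selection step described above can be sketched as follows: given one predicted T-score per slice, keep only the highest-scoring slice for each patient before retraining. The tuple layout and the function name `select_top_slices` are assumptions made for illustration; the network and the retraining loop are not shown.

```python
def select_top_slices(slice_scores):
    """Keep, for each patient, the slice with the highest predicted T-score.

    slice_scores: iterable of (patient_id, slice_index, predicted_score) tuples.
    Returns {patient_id: (slice_index, predicted_score)}.
    """
    best = {}
    for patient_id, slice_index, score in slice_scores:
        if patient_id not in best or score > best[patient_id][1]:
            best[patient_id] = (slice_index, score)
    return best


# Toy predictions for two patients with several slices each.
predictions = [
    ("p1", 0, 1.2), ("p1", 1, 3.4), ("p1", 2, 2.0),
    ("p2", 0, 0.7), ("p2", 1, 0.5),
]
top_slices = select_top_slices(predictions)
```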

In [73], a DL model based on ResNet was established to predict the distant metastasis-free survival of locally advanced NPC. In contrast to the studies published in 2020, the authors of this study removed the background noise and segmented the tumour region as the input image of the DL network. Finally, the optimal AUC of the multiple models combined with the clinical features was 0.808 (Table 7).

6.2. Image Synthesis

6.3. Detection and/or Diagnosis

Two similar studies, [76][77], based on pathological images were conducted. The authors in [76] used 1970 whole slide pathological images of 731 cases: 316 cases of inflammation, 138 cases of lymphoid hyperplasia, and 277 cases of NPC. The second study used 726 nasopharyngeal biopsies consisting of 363 images of NPC and 363 of benign nasopharyngeal tissue [77]. In [76], Inception-v3 was used to build the classifier, while ResNeXt, a deep neural network with a residual and inception architecture, was used to build the classifier in [77]. The AUCs obtained in [76][77] were 0.936 and 0.985, respectively.

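6.4. Segmentation

Radiotherapy is the most important treatment for NPC. However, because radiotherapy itself can cause collateral damage, the nasopharyngeal tumour volume and the organs at risk must be delineated accurately in the images. Segmentation is therefore particularly relevant to DL in NPC imaging. Li [138] proposed and trained a U-Net to automatically segment and delineate tumour targets in patients with NPC. A total of 502 patients from a single medical centre were included, and CT images were collected and pre-processed as a dataset. The trained U-Net obtained DSCs of 0.659 for lymph nodes and 0.74 for primary tumours in the testing set. Bai [147] fine-tuned a pre-trained ResNeXt-50 U-Net, which uses the recall preserved loss, to produce a rough segmentation of the gross tumour volume of NPC. The well-trained ResNeXt-50 U-Net was then applied to delineate the fine-grained gross tumour volume boundary. The study obtained a DSC of 0.618 for online testing (Table 10).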
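Segmentation quality in this area is commonly reported as the Dice similarity coefficient (DSC), defined for a predicted mask A and a reference mask B as 2|A ∩ B| / (|A| + |B|). The sketch below computes it for two small binary masks stored as nested lists; the function name `dice_coefficient` and the convention of returning 1.0 for two empty masks are assumptions.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice similarity coefficient between two binary masks of equal shape."""
    pred = [v for row in pred_mask for v in row]
    true = [v for row in true_mask for v in row]
    intersection = sum(1 for p, t in zip(pred, true) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in true if t)
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy 2 x 3 masks: 1 marks tumour pixels.
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
dsc = dice_coefficient(predicted, reference)  # 2 * 2 / (3 + 3)
```

A DSC of 1 means perfect overlap and 0 means none, so the values of roughly 0.6 to 0.74 reported for NPC leave clear room for improvement.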

7. Deep Learning-Based Radiomics

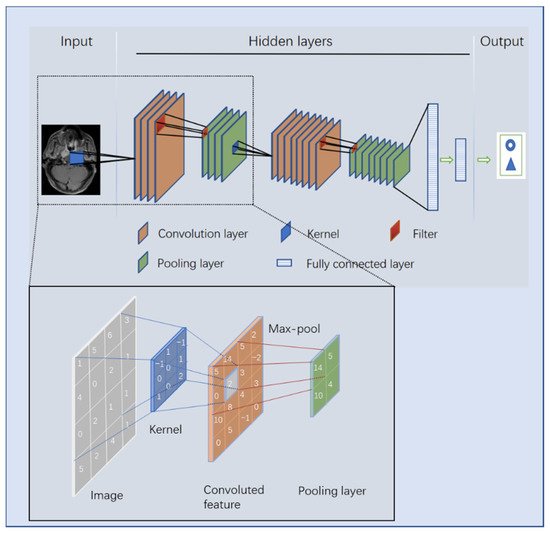

DL has shown great potential to dominate the field of image analysis. In region-of-interest (ROI) [80] and feature extraction [81][82] tasks, both of which are steps in the radiomics pipeline, DL has achieved good results. Once model training is complete, DL can analyse images automatically, which is one of its greatest strengths compared with radiomics. Many researchers have introduced DL into radiomics (termed deep learning-based radiomics, DLR) and achieved encouraging results [83]. This may become a trend for the application of AI tools in medical imaging.

7.1. Studies Based on Deep Learning-Based Radiomics (DLR)

In [84], Zhang innovatively combined the clinical features of patients with nasopharyngeal cancer, the radiomic features based on MRIs, and the DCNN model based on pathological images to construct a multi-scale nomogram to predict the failure-free survival of patients with NPC. The nomogram showed a consistent and significant improvement in predicting treatment failure compared with the clinical model in both the internal test cohort (C-index: 0.828 vs. 0.602, p < 0.050) and the external test cohort (C-index: 0.834 vs. 0.679, p < 0.050) (Table 11).
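The C-index used to compare these models measures how often the model ranks pairs of patients in the right order. A minimal sketch of Harrell's version is given below, assuming that higher predicted risk should correspond to earlier failure. The function name `concordance_index` and the toy data are illustrative, and pairs with tied times are simply skipped.

```python
def concordance_index(times, events, risks):
    """Harrell's C-index: share of comparable pairs ranked correctly by predicted risk."""
    concordant, comparable = 0.0, 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # A pair is comparable when i had an observed event strictly before j's time.
            if events[i] == 1 and times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5  # ties in predicted risk count half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy cohort: follow-up time, event flag (1 = failure observed, 0 = censored), risk score.
times = [2, 4, 6, 8]
events = [1, 1, 0, 1]
risks = [0.9, 0.3, 0.5, 0.1]
c_index = concordance_index(times, events, risks)  # 4 of 5 comparable pairs
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which puts the reported gap between roughly 0.6 and 0.83 in context.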

8. Future Work

Research on radiomics and DL in NPC imaging has only started in recent years, so many issues still need further research: linking NPC imaging features with tumour genes/molecules to promote non-invasive, rapid, and low-cost approaches to precision medicine; using multi-stage dynamic imaging to assess tumour response to drugs/radiotherapy and to predict the risk of radiation therapy to surrounding vital organs in order to guide treatment decisions; and bridging the gap between the AI tools established in studies and clinical applications. In addition, current studies based on nasal endoscopic images and pathological images are lacking. Accurate and rapid screening of NPC is of particular significance, considering that endoscopic images are usually the primary screening images for most patients, and further high-quality research in this regard is needed. Finally, there is still a lack of large-scale, comprehensive, and fully labelled datasets for NPC similar to those available for lung and brain tumours. The establishment of large-scale public datasets is an important task for the future.