Brain image segmentation is one of the most time-consuming and challenging procedures in a clinical environment. Recently, a drastic increase in the number of brain disorders has been noted. This has indirectly led to an increased demand for automated brain segmentation solutions to assist medical experts in early diagnosis and treatment interventions. This paper aims to present a critical review of the recent trend in segmentation and classification methods for brain magnetic resonance images. Various segmentation methods ranging from simple intensity-based to high-level segmentation approaches such as machine learning, metaheuristic, deep learning, and hybridization are included in the present review. Common issues, advantages, and disadvantages of brain image segmentation methods are also discussed to provide a better understanding of the strengths and limitations of existing methods.

Note: The entry will be online only after author check and submit it.

1. Introduction

Brain imaging is important for the diagnosis of brain-related diseases such as neurological disease (Parkinson’s disease), neurodegenerative disease (Alzheimer’s syndrome), and brain tumors. According to the American Cancer Society and the National Cancer Institute Report, brain and nervous system cancer is the tenth most common cause of death for both genders. About 18,020 deaths (10,190 males and 7830 females) and 23,890 new cases (13,590 males 10,300 females) among adults were estimated due to primary cancerous brain tumors and other nervous system diseases in 2020 in the United States

[1]. Therefore, early detection of brain tumors and related brain structures using effective brain imaging techniques is important where treatment can be initiated at an early stage of the brain tumor. High-quality brain images can be produced using magnetic resonance (MR) imaging, a standard non-invasive imaging key technique. MR imaging is useful for the diagnosis and treatment of brain tumors without inflicting harmful radiation on other brain structures and skull artifacts of the patients

[2]. MR images are used to differentiate suspicious regions of the brain tumor from healthy brain tissue. Conventionally, location, shape, and type of brain tumors are identified visually using multimodal MR images by qualified medical doctors.

2. Search Strategy and Selection Criteria

The present review aims to summarize information and identify problems from relevant research articles that utilized computer vision techniques in the context of automated brain medical imaging over the last five years. The inclusion and exclusion criteria were applied to conference papers and journal articles published on brain medical imaging in the chosen databases from 2016 until 2021. Studies that were not written in English, were duplicative, out of the study period, and did not have the full text available were excluded.

The studies were collected using the search keywords on 11 selected databases (i.e., Scopus, Web of Knowledge, Science Direct, IEEE Xplore, Springer, Frontiers, Wiley Online Library, Arixiv, ACM Digital Library, Hindawi). These databases offered comprehensive literature regarding brain image segmentation approaches and are highly appropriate. First, a search was conducted on the basis of the following keywords/terms: (delineation OR segmentation OR contouring) AND (brain tumor OR neoplasia OR brain tissues OR brain anatomical structure)) AND (ALL(“automatic delineation” OR “automatic segmentation” OR “automatic contouring” OR “semi-automatic delineation” OR “semi-automatic segmentation” OR “semi-automatic contouring”)) AND (brain tumor OR neoplasia OR brain tissues OR brain anatomical structure)) AND intensity-based methods—((delineation OR segmentation OR contouring) AND (“thresholding” OR “region” OR “Otsu” “level set” OR “active counter”) (brain tumor OR neoplasia OR brain tissues OR brain anatomical structure) AND (ALL(“automatic delineation” OR “automatic segmentation” OR “automatic contouring” OR “semi-automatic delineation” OR “semi-automatic segmentation” OR “semi-automatic contouring”)) AND (PUBYEAR > 2015) AND (“MRI”))), machine learning methods ((delineation OR segmentation OR contouring) AND (“clustering” OR “classification” OR “deep learning” OR SVM OR ANN OR K-means OR FCM OR FCN OR CNN OR convolution OR UNet OR U-Net) AND (brain tumor OR Neoplasia OR brain tissues OR anatomical structure) AND (ALL(“automatic delineation” OR “automatic segmentation” OR “automatic contouring” OR “semi-automatic delineation” OR “semi-automatic segmentation” OR “semi-automatic contouring”) AND (PUBYEAR > 2015) AND (“MRI”))), and so on, as well as for the hybrid method and its subcategories.

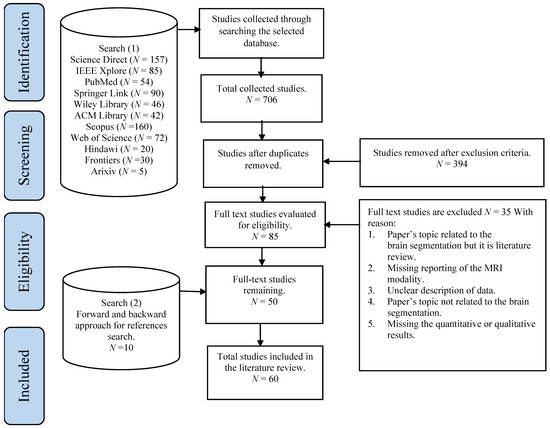

In the beginning, 761 publications were retrieved by searching the selected databases. An additional 15 publications were identified through cross-referencing. Following duplicate publications removal, the remaining 459 publications were evaluated via exclusion criteria. Based on screening the title and abstract, 394 publications were excluded. A total of 85 full-text studies were evaluated for eligibility, and 50 papers were included in this review. Then, an additional search was conducted on all selected publications by using the backward and forward approach for the references search method introduced by Webster and Watson

[3]. Through the backward search, the citations of each publication were assessed to obtain further publications to be included in the review. Through the forward search, for example, the references were obtained using Google Scholar were used to obtain further relevant studies. The results reported 10 additional publications. Overall, a total of 60 publications were selected.

Figure 1 illustrates the search strategy with the publications’ selection methods.

Figure 1. An overview of the study search and selection process according to PRISMA guidelines

[4].

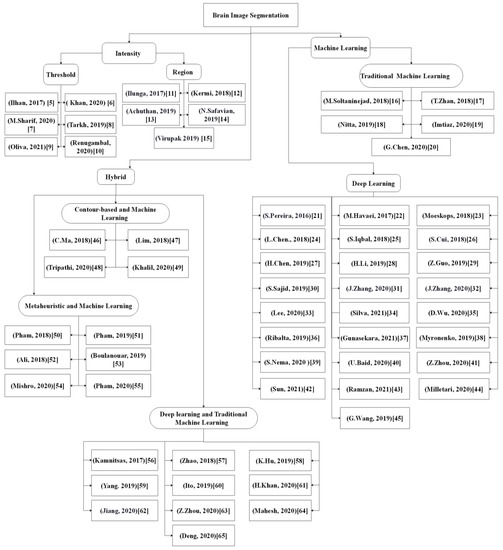

Figure 2 shows the taxonomy of reviewed research papers published in the last 5 years. It was noted that recent publications predominantly applied deep learning and hybridized metaheuristic-based methods for brain image segmentation.

Figure 2. An overview of brain segmentation approaches

[5][6][7][8][9][10][11][12][13][14][15][16][17][18][19][20][21][22][23][24][25][26][27][28][29][30][31][32][33][34][35][36][37][38][39][40][41][42][43][44][45][46][47][48][49][50][51][52][53][54][55][56][57][58][59][60][61][62][63][64][65][5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65].

3. Brain Segmentation Approaches

3.1. Intensity-Based Approaches

The intensity-based methods for brain segmentation function in the spatial domain and depend on the pixel value, which can be further classified into a thresholding and region-based approach.

3.1.1. Thresholding

The thresholding approach is one of the conventional and the easiest image segmentation methods where the regions of the image are categorized by measuring their intensities and compared with one or more intensity thresholds. For instance, Otsu’s method enables the determination of the global threshold optimal value to distinguish the target object from the image background. In a previous study, Otsu’s thresholding approach was coupled with morphological operations to detect brain tumors using MR images

[5]. Another study by Khan et al.

[6] presented a grade-wise brain tumor identification method where segmentation of the tumor was conducted first through the threshold approach. Then, a logical formula was employed to extract the desired tumor region. Moreover, feature set parameters, such as the angle, area, density, solidity, size, center of mass, and perimeter, were extracted from the tumor region. The extracted features were then analyzed using the partial tree (PART) algorithm to grade the brain tumor. However, the thresholding approach is sensitive to noise and intensity non-homogeneity, which limits its application for the entire tumor region. To overcome the limitation, statistical optimization of the threshold method was reported by Sharif et al.

[7], where particle swarm optimization (PSO) was employed to achieve the maximum class variance between the tumor regions and healthy brain tissues. Then, hand-crafted local binary patterns (LBP) and deep (fine-tuned capsule network) features of segmented images were extracted, and the best features were selected using a genetic algorithm (GA). Finally, an artificial neural network (ANN), a support vector machine (SVM), and an ensemble of linear discriminant analysis (LDA) were utilized to classify the tumor grades.

The above-mentioned methods have several limitations such as (i) low convergence rate and an insufficient local and global search and (ii) the optimization being trapped into a local minimum that results in low segmentation accuracy. To improve the local and global search of the multi-level thresholding approach, a new metaheuristic approach of the differential evolution (DE) technique, which was termed as adaptive differential evolution with Lévy distribution (ALDE), was introduced by Tarkhaneh and Shen

[8] for brain tissue segmentation. The proposed approach was adopted to resolve the multi-level threshold issue and achieve optimal results by preventing a local minimum through the establishment of a balance between exploration and exploitation coupled with the convergence rate boost. However, some of the tested images in this model did not segment properly, which could be attributed to the limitation of the thresholding approach that does not consider the spatial information of images, resulting in insensitivity towards different levels of noise and intensity.

Oliva et al.

[9] proposed an adaptive differential evolution and linear population size reduction (LSHADE) metaheuristic algorithm to determine the optimal threshold value by employing the minimum cross-entropy as a fitness function for the segmentation of brain tissue from MR images.

In a different study

[10], Renugambal et al. proposed a new hybridization approach based on the Otsu and new hybrid water cycle and moth-flame optimization algorithm (WCMFO) for the brain tissue segmentation. The new WCMFO algorithm was proposed to determine the optimal values for Otsu’s objective functions on various axial T2 modalities of MR brain images. However, the model cannot convert several parameters, including the water cycle and moth-flame algorithms.

3.1.2. Region-Based

The region-based approach enables the extraction of a connected region of an image by following pre-defined conditions such as pixels’/voxels’ information with matching intensities. This approach is performed in three steps: (i) selection of an initial seed point, (ii) locating the points in objects or regions, and (iii) selection of points connected to the initial point with similar intensity values. Recently, several studies applied a region-based approach for brain tissue segmentation

[11][12][13][14][15][11,12,13,14,15].

A semi-automatic approach, which consists of a localized active contour integrated with a background intensity compensation, termed LACM-BIC for tumor region segmentation, was presented by Ilunga-Mbuyamba et al.

[11]. The T1 contrast and T2 MR images were fused and used to segment the tumors. An automated initialization of the initial contour in the LACM-BIC method was conducted using the k-means algorithm accompanied by a hierarchical centroid shape descriptor. This method chose the best initialization number of the cluster, k, for the k-means algorithm, in which wrong selection of the initialization may lead to unwanted regions in the segmentation. Hence, the contour may be trapped into the wrong local minimum.

In another region-based study, a 3D-MR image brain symmetry analysis for tumor segmentation was reported by Kermi et al.

[12]. Specifically, the fast-unsupervised bounding box (FBB) and geodesic level-set methods were used. The FBB algorithm was adopted to locate initial tumor voxels and to manage intensity variations among different MR images without the use of a training dataset. Subsequently, the region growing method was combined with a 3D level set method to acquire the final tumor region. The drawbacks of this method include the inability to avoid noise and non-uniform intensity besides being limited to tumor segmentation.

In a more recent study, Achuthan and Rajeswari

[13] presented an automated point set registration approach to establish a prior knowledge model with a lower data intensiveness for hippocampus segmentation. In comparison to the usage of the entire 3D volume as used in the atlas-based methods, this study utilized a collection of representative points on the boundary of the hippocampus. The prior model was created and integrated into a level set model to perform hippocampus delineation. Nevertheless, some parameters are required to be specified experimentally, and it is a subjective task that depends on the target image properties.

Another approach for hippocampus segmentation of MR images using an automated level set method has been proposed by Safavian et al.

[14]. First, prior knowledge was obtained from an affine registration with a non-linear registration stage. Then, this information was locally integrated into an innovative level-set framework using a binary weighting map. The image gradient information adaptively utilized both local and global region information of the corresponding image. However, manual setting of parameters is required, which is very subjective and depends on the target image properties.

In a different study, Virupakshappa and Amarapur

[15] presented a modified level set segmentation method for brain tumor segmentation that provides an automatic initialization point as indicated by the maximum pixel point that serves as the initial contour. The maximum pixel was determined from the histogram, and an automatic segmentation was performed using an anisotropic diffusion filter instead of the Gaussian filter. The utilization of the anisotropic diffusion filter enhanced the local edges by detecting discontinuity within the local edge. Boundaries formed as a result of noise were removed completely, and the contours of the object were also improved. However, the manual setting of the initial contour of the level set is required to be performed based on the maximum pixel point, which is very subjective and depends on the intensity non-homogeneity.

3.2. Machine Learning

Another category of brain image segmentation approaches is traditional machine learning, comprising clustering and classification and deep learning approaches. The sections below detail these approaches.

3.2.1. Traditional Machine Learning

The clustering and classification approaches, being the traditional machine learning methods, are motivated by multidimensional feature space that may be obtained from different MR modalities. Classifiers are trained using a feature space that is created by combining different intensity and textural-based features representing the known classes. Then, a class prediction that the target structure belongs to is performed by assigning a class label, which is most similar to the target structure’s feature space. Meanwhile, clustering methods are unsupervised pixel-based methods that segregate unlabeled images into clusters of pixels that have similar features without utilizing training images. Some of the machine learning-based methods were applied for brain tissue segmentation in recent studies

[16][17][18][19][20][16,17,18,19,20]. A 3D super-voxel learning method for brain tumor segmentation was proposed by Soltaninejad et al.

[16]. In the study, the MR images were partitioned into the equivalent size of patches with similar intensity ranges based on the simple linear iterative clustering (SLIC) algorithm. Super-voxel clusters were formed by combining information from the MR multimodal images using a distance metric. For each super-voxel cluster, a set of texton descriptors along with the first-order static features were extracted from different MR modalities. The features were then used to train a random forest (RF) classifier to classify each super-voxel into a core tumor, edema, or healthy tissue. This approach was found to effectively combine the unsupervised SLIC algorithm for initial tumor region localization and the supervised RF method for tumor classification. The approach enabled the classification of located regions into sub-regions in a unified system and resulted in promising segmentation findings. However, this approach limits the segmentation of complex structural boundaries such as the smaller tumor cores as the super-voxel may include voxels from various tissue types.

In another study, a semi-supervised method based on a co-training technique with clinical and spatial constraints for the extraction of the glioma region, namely whole tumor (WT), tumor core (TC) and enhancing tumor (ET) from multi-sequence MR images, was assessed by Zhan et al.

[17]. Firstly, the labeled brain MR image was used for both SVM and sparse representation classification (SRC) classifiers training. This allows the extraction of high confidence data as pseudo-labeled samples from the test samples. The pseudo-labeled samples that resulted from each classifier were then added to the training sets of the other side of the classifier to re-train the corresponding classifier. The process was iteratively repeated until the results of classification remained stable. Finally, a super-pixel graph was plotted on the post-contrast T1 image to generate spatial and clinical constraints to remove false-positive and interference of noise. This classification method provides generalization fitting using a limited training set. However, it has a drawback where prior clinical knowledge is required to refine the segmentation results by manually correcting the pixel labeling, which is subjective as it depends on the user’s expertise.

Meanwhile, Nitta et al.

[18] investigated an approach for brain tissue segmentation using a modified k-means clustering algorithm. The researchers proposed a selection of 16 high probabilities of dominant grey-level pixels as initial centroids to resolve the issue of the arbitrary selection of initial centroids in the standard k-means algorithm. The proposed approach is sensitive to noise and has a non-uniform intensity distribution.

In a recent study by Imtiaz et al.

[19], a tumor segmentation approach based on super-pixel features extracted from 3D planes of MR images (FLAIR, T1c, and T2 modalities) was evaluated. Several statistical and Gabor textural features were extracted from each super-pixel of the three planes to avoid imbalanced planar data and mislabeling of pixel issues in a plane. Based on feature effectiveness, feature selection was performed using histogram consistency analysis and local descriptor pattern analysis. The feature vector for each super-pixel was then subjected to extremely randomized trees (ERT) for binary classification. Then, the voting algorithm was used to assign a class label (tumor or non-tumor) for each pixel in all three planes. The benefits of this approach include fast computation and high robustness to the scale-invariant and rotational changes. However, this approach is sensitive to noise and distortions, in addition to leading to the extraction of redundant features at different scales.

In a different study by Chen et al.

[20], a hybrid two-stage framework of cascaded RF and a dense conditional random field (CRF) was evaluated for intra-tumor segmentation. Firstly, the appearance features (statistical intensity and template-based) and contextual features (Gaussian mixture model-based lesion tissue probability maps) were extracted and used to train the initial RF classifier. The predicted probability map obtained by the RF classifier was used as the prior input into a dense CRF model for further segmentation improvement. Then, the results of the dense CRF model were used as the contextual information to train a cascade of RFs by the hierarchy in combination with template-based asymmetrical and original statistical features. The authors proposed a multi-layer optimization architecture as the post-processing step to further increase the efficiency of RF. The step is easy to implement and can be effectively incorporated into the local appearance and global contextual features, which can improve the segmentation outcome. The limitation of this framework includes the evaluation was performed using a small dataset, and a post-processing step is required to fine-tune the extracted tumor regions.

3.2.2. Deep Learning

Recently, the deep learning-based method has attracted much research interest due to its excellent performance and ability to automatically capture adaptive features, which outperform manually created features. Moreover, these features were learned in an increasing feature complexity trend, which results in more robust feature learning. During the last few years, more studies have been designed using a combination of the deep learning-based method and the new brain tumor segmentation method. Most of the studies utilized convolutional neural networks due to their effectiveness in detecting patterns in an image, specifically the MR images, with promising results reported. To date, the deep learning-based segmentation was performed using 2D, 2.5D, or 3D MR images, which is elaborated in the following sections.

Deep Learning-Based Methods Using 2D Images

Deep learning using 2D images requires brain image slices or extracted 2D patches from 3D images as an input for the 2D convolutional kernel. Several studies

[21][22][23][24][25][26][27][28][29][30][31][32][33][34][35][36][37][21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37] have been published on the deep learning-based method using 2D images. Sergio Pereira et al.

[21] introduced cascade layers using small 3*3 convolutions kernels to reduce overfitting. The study enabled the segmentation of the image into four regions, namely (i) necrosis, (ii) enhancing tumor, (iii) edema, and (iv) normal tissue. Two convolutional neural network (CNN) architectures were trained and used in the proposed work to extract the feature maps, which were low-grade glioma and high-grade glioma. The use of small kernels led to a deeper architecture design, which reduced the number of weights in the network and significantly affected overfitting. However, for the initial phase, the user has to manually identify the glioma grade where prior medical knowledge is required, which is one of the limitations. Additionally, the tissue segmentation was performed as a patch-based task in the study where the local dependency of labels during pixel classification was ignored. Another drawback of the proposed method is the poor segmentation of tumor core regions in the BRATS 2015 Challenge dataset.

Similarly, the application of another novel Cascade CNN model for fully automatic brain tumor segmentation was reported by Havaei et al.

[22]. Cascade architecture of 2D CNN was used in the study to preserve local dependency of labels during pixel classification and extract local and global contextual features which deal with imbalanced tumor labels. However, the model suffers from two drawbacks: (i) poor segmentation between the enhanced and core regions of the brain tumor inferior to the complete tumor and (ii) only the local dependency of the labeled samples was considered, with the appearance and spatial consistency neglected when applied on 3D images.

Moeskops et al.

[23] presented an automatic approach based on a multi-scale CNN for segmenting white matter hyperintensities of presumed vascular origin (WMH) (basal ganglia and thalami, brain stem, cortical grey matter, white matter, cerebellum, WMH, peripheral cerebrospinal fluid, and lateral ventricular cerebrospinal fluid) from MRI modalities (T1, T2, FLAIR, and T1inversion recovery). The proposed multi-scale CNN model was claimed to be the first modern MRI segmentation method that applies CNN for additional WMH segmentation. Furthermore, the model was assessed in two large MRI datasets of older patients that were affected by motion artifacts and varying degrees of brain abnormalities.

Another study by Chen et al.

[24] proposed a 2D novel method based on a CNN architecture identified as Dense-Res-Inception Net (DRINet) for multi-class brain tumor segmentation. The DRINet consisted of three blocks, namely, (i) convolutional, (ii) deconvolutional, and (iii) unpooling blocks. The convolutional block carried out dense connections and was used to alleviate the effect of vanishing gradients. Meanwhile, the deconvolutional block carries out the residual inception modules to aggregate feature maps from different branches. The unpooling block was used for the aggregation of different sampled feature maps. The use of this method resulted in accurate findings on segmenting complex, challenge, multivariate domains (tumor and cerebrospinal fluid (CSF)), and multi-organ segmentation on abdominal CT images. Nevertheless, the DRINet approach has a complex network structure that requires millions of parameters (i.e., billions of connections between neurons and millions of weights), which could lead to a difficult training phase, and testing can be slower depending on the ground truth label requirements.

Iqbal et al.

[25] proposed three different improved network architectures for intra-tumor segmentation, which were an extended version of SegNet (deep convolution encoder-decoder architecture), as follows: (i) Interpolated Network, (ii) SkipNet, and (iii) SE-Net. All three structures consisted of decoder/encoder architecture, and four sub-blocks were used in each phase. A batch of normalization layers was added next to each convolution to avoid the disappearance or explosion of convolutional gradients and to maintain the stability of the training phase. The advantage of this approach includes the use of simple network structures as an intermediate convolutional map along with interpolation methods to produce a quick model with a smaller memory space. However, the method has a limitation where the segmentation performance could be affected if the model is trained with limited ground truth samples.

In the same year, Cui et al.

[26] reported on a hybridized cascade of a deep convolution neural network (DCNN) architecture that can segment 2D brain images automatically in two major steps. Firstly, the tumor region was localized immediately using the pixel-wise fully convolution network (FCN) from the MR images. Then, the patch-wise CNN with smaller kernels and deeper architecture was adopted for further classification of the localized tumor region into multiple sub-regions. This approach alleviates the imbalanced data issue using a hybrid CNN. However, the approach is time-consuming during model training, and inference is required for operating the image patches.

In a different approach, Chen et al.

[27] presented a combination of prior knowledge and a DCNN to enrich the extracted features of DCNN for brain tumor sub-compartment identification. This model requires an analysis of a left-right similarity mask (LRSM) in the constructed feature space and uses LRSM as the location weight of the DCNN features. These features were then used to train the model to determine the asymmetrical location information of the input images via a similarity metric. This approach was found to provide about 3.6% of dice similarity coefficient (DSC) improvement of complete tumor segmentation over the conventional DCNN. The advantage of the proposed method includes the ability to combine the symmetric masks in several layers of DCNN to assign location weight for the extracted features. However, the method could not differentiate between the tumor core regions and the enhanced tumor region as the LRSM mask can reflect a complete tumor situation.

Li et al.

[28] presented an automatic approach based on the improved version of U-Net for multiclass brain tumor segmentation from 2D MR image slices. Firstly, the up-skip connection between the encoding and the decoding elements was proposed to further enhance the information flow and the network connectivity. Then, in each block, an inception module was implemented to assist the network in learning richer representations. Nevertheless, the model suffers from poor segmentation of enhancing tumor region as the whole brain slices were used for model training. This led to a data imbalance issue due to a small number of pixels that belong to enhance tumor and core regions inferior to other brain tissue.

Another approach was reported by Guo et al.

[29], where a supervised multimodal image analysis was performed with three cross-modality of fusion level strategies, which were feature learning, classification, and decision making. The three fusion strategies were implemented and tested in three different patches-based CNNs with corresponding variations in the network structures. Four modalities of imaging (CT, PET, T1, and T2) were used as fused inputs for brain tumor segmentation. Comparison between the single model and multimodality showed that the CNN-based fusion network performed better on PET, CT, and T2 modalities. This approach provides methodological guidelines for designing and applying multimodal image analysis fusion strategies through different implementations of CNN architecture. However, this approach is limited for complete tumor detection. Another limitation is the dramatic decrease in the segmentation performance within the misaligned regions based on the number of affected modalities and severity of the misalignment.

An automated hybrid DCNN model for brain tumor segmentation was presented by Sajid et al.

[30] for different modalities of MR. This model extracted 27 × 27 sized patches from four axial MR modalities to consider both spatial and contextual knowledge for predicting segmentation labels of pixels. The proposed hybrid DCNN model combined the output feature maps of two- and three-CNN paths. The model successfully addressed local dependencies between the output labels, which was the major drawback of the two- and three-CNN paths. By integrating the two- and three-CNN networks, an increase in the effect of neighboring pixels was noted, and the output was recognized based on the local and contextual features. Morphological operations were used to further enhance the segmentation performance by eliminating minor false positives along the edges of the expected outputs. The proposed model segmented the core and enhanced tumor regions better compared to the complete tumor regions. This could be attributed to the fuzzy boundaries of edema that limit the detection of the whole tumor region compared to other regions. However, this approach has a limitation where a large amount of training data and parameters are required for model training.

In addition to the various methods proposed, Zhang et al.

[31] presented a residual U-Net and attention mechanism in a unified architecture named AGResU-Net for patch-wise brain tumor segmentation. Attention gate units were added into the up-skip connection of the U-Net structure to highlight the important feature details along with disambiguates in noise and irrelevant feature responses. The AGResU-Net was found to enhance feature learning by extracting important semantic features focusing on the details of small-scale brain tumor sub-regions, which improves the segmentation performance of the brain tumors. Nevertheless, the AGResU-Net model has a drawback, where an amount of contextual information and local details among different intra-slices were not included due to modeling based on 2D U-Net.

In the same year, Zhang et al.

[32] proposed another new method using attention residual U-Net (AResU-Net) for end-to-end 2D brain tumor segmentation. The AResU-Net embedded a series of attention and residual units among corresponding down-sampling and up-sampling processes. The system simultaneously improved the local responses of down-sampling and the recovery effects of the up-sampling process. However, the model neglects contextual and local details of different intra-slices due to modeling based on 2D slices.

Recently, an innovative brain tissue segmentation method from MR images was proposed by Lee et al.

[33], where a patch-wise U-net architecture was used to divide the MR image slices into non-overlapping patches. Corresponding patches of ground truth were incorporated into the U-net model, and input patches were predicted individually. The model was found to retain the local spatial information better compared to the conventional U-Net model. The design successfully fixed the drawback, specifically the limited memory problem, which was caused by multiple down and upsampling stages. The memory problem was attributed to the storage of parameter values at each stage and difficulty in maintaining local details as the entire image is incorporated into the network. Although the memory problem was resolved using the proposed model, computational complexity was higher in the training phase.

In another study, Silva et al.

[34] proposed a three-stage cascade FCN architecture based on the deep layer aggregation technique to gather further spatial and semantic information for intra-tumor segmentation. The output features of one FCN are directly fed to the next layer for extending the feature hierarchy over different depths for better segmentation refinement. However, the model requires high computational resources and post-processing to refine the extracted tumor regions.

In addition to the various proposed methods, Wu et al.

[35] suggested a multifeatures refinement and aggregation network (termed MRANet) based on CNN for end-to-end brain tumor segmentation. The model fully utilized the hierarchical features by adopting the feature fusion concept at several levels, which extracts low-level, mid-level, and high-level features by sampling similar hierarchical features of encoder and decoder. These features were then aggregated and re-extracted for better segmentation refinement.

Ribalta Lorenzo et al.

[36] proposed a deep learning method for brain tumor delineation from the FLAIR modality of MR using the fully convolution neural network (FCNN) inspired by U-Net. The authors trained the model on 256 × 256 patches extracted from the intra-tumor regions that belong to only positive (tumorous) full-sized FLAIR MR image sequences. Firstly, data augmentation methods were used to expand the dataset and achieve a robust algorithm against the heterogeneity of small training datasets. Subsequently, the FCNN was trained using the DSC to maximize the model training to improve the quality of the segmentation. The proposed FCNN model was claimed to be the best modern FLAIR MR image segmentation method that applied hand-crafted features and was classified using extreme random trees. This model offers controllable training time and instant robust segmentation using the FCNN that was trained on heterogeneous and imbalanced datasets. Nevertheless, this model exhibited potential drawbacks caused by the rapid data augmentation process, as the unnatural increasing number of training patches resulted in a reduction in overall average data accuracy.

Gunasekara et al.

[37] proposed cascaded algorithms for glioma and meningioma brain tumor segmentation and classification. Firstly, CNN was implemented to classify meningioma and glioma regions. Then, the classified images were fed to R-CNN to localize the tumor regions of interest, which was accompanied by active contouring to delineate the exact tumor boundary. Finally, the Chan–Vese level set model was used to segment the target tumor boundary.

Deep Learning-Based Methods Using 3D Images

The second category of deep learning-based tumor segmentation approaches uses 3D MR images for segmentation to overcome the limitation of neglecting contextual information in 2D CNN. Several studies

[38][39][40][41][42][43][38,39,40,41,42,43] have reported the approaches under this sub-class.

The intra-tumor region segmentation method from 3D MR images based on the asymmetric encoder-decoder network was presented by Myronenko

[38]. The researchers adopted CNN’s encoder-decoder structure with an asymmetrical large encoder to extract deep features and reconstruct the dense segmentation masks using a decoder. To tackle the issue of a small training dataset, a variational auto-encoder was added to the endpoint of the encoder, and the input image was reconstructed together with the segmentation to regularize the shared encoder at the inference time. This model enables accurate intra-tumor segmentation based on the unsupervised feature learning method with a lower requirement for ground truth labels and without the post-processing step. However, the proposed method requires high computational resources to accelerate tumor annotation in MR images.

To decrease the dependency on the ground truth images during the training stage, Nema et al.

[39] proposed a RescueNet approach for multi-class brain tumor segmentation utilizing both residual and mirroring principles. Different training was performed to segment whole, core, and enhancing tumors using three different networks. The proposed RescueNet approach was trained based on the unpaired generative adversarial network (GAN) method, which was utilized to enrich data for the training stage with better segmentation results obtained using a larger amount of testing data. Finally, a scale-invariant algorithm was suggested as a post-processing stage to improve the segmentation accuracy. The pros of this approach include robustness to the appearance variations in brain tumors, the minimum requirement of labeled datasets for model training, and that the model is 10% trained and 90% tested. However, this approach requires a post-processing step for further segmentation refinement.

In a more recent study by Baid et al.

[40], an effective weighted patch extraction was combined with a new 3D U-Net architecture for a fully automatic brain tumor segmentation. The authors proposed a weighted patch-based segmentation approach to address the imbalance of class among tumor and non-tumorous patches. The 3D weighted patch-based method and a unique number of feature maps were designed to train the architecture, which enables the accurate segmentation of intra-tumor structures. Finally, a 3D connected component analysis was used as the post-processing method to improve the accuracy of the tumor delineation. However, this approach failed to segment some of the tumor parts with a small necrotic tumor cavity from the MR images due to a large variance in the training and validation dataset features. This can be resolved by increasing the number of training data to overcome the inter-patient variations.

To address the two main challenges, namely, exploding and vanishing gradients affecting the traditional DCNNs performance, Zhou et al.

[41] proposed a novel three-phase framework for automatic brain tumor segmentation of the 3D MR images. Firstly, a dense three-dimensional networking architecture was adopted to construct the features to be re-used. Secondly, 3D atrous convolutional layers were used to design a new feature pyramid module, which was added to the backbone end to fuse the multiscale contexts. Finally, for further training promotion, a supervision 3D deep mechanism was equipped to enhance the network convergence by adding auxiliary classifiers to alleviate the problem of exploding and vanishing gradients by utilizing dense connectivity. Overall, this framework is considered a complete architecture without additional post-processing stages. Furthermore, simple implementation and the use of adjustable parameters are the main advantages of this framework. However, the segmentation of cores and enhancing tumors are inferior compared to the complete tumor, which requires considerable improvement.

In another study, Sun et al.

[42] presented a multipath way 3D FCN architecture for brain tumor segmentation. The model extracts different receptive fields of feature maps from multi-modal MR images using the 3D dilated convolution in each pathway and fuses these features spatially using skip connections. This model helps FCN architectures to better locate the boundaries of tumor regions. However, the model requires a post-processing step, as direct connections between high- and low-level features will lead to unpredictable consequences and the semantic gap between the encoder and decoder.

An effective mapping from MR volumes to voxel-level brain tissue segments was proposed by Ramzan et al.

[43]. A 3D CNN, which utilized the concept of residual learning, skip connections, and dilated convolutions, was applied in the study. Dilated convolutions were utilized to decrease the computational cost by computing spatial features with a high resolution. However, the space complexity of this model was higher as dilated convolution was used, and down-sampling of input volumes was neglected, which led to an increase in the number of parameters and kernels by a certain factor.

Deep Learning-Based Methods Using 2.5D Images

Although 3D deep neural network (DNN)-based segmentation can better exploit 3D features of 3D MR image information data, this approach has limitations related to network intensiveness and memory consumption. Therefore, another category of 2.5D DNN was researched. In comparison to the 2D and 3D DNN, 2.5 DNN has inter-slice characteristics and lower memory demand.

An automated 2.5D patch-wise Hough-CNN model based on a voting strategy for localizing and segmenting brain anatomies of interest (26 regions of the basal ganglia and the midbrain) was presented by F. Milletari et al.

[44] for different modalities of MRI and ultrasound slices. The patch-based voting strategy was designed and integrated into the Hough-CNN model to localize and segment brain structures that are corrupted by artifacts or are partially visible.

To overcome network complexity and memory consumption of the 3D based-segmentation methods, Wang et al.

[45] suggested a cascade of 2.5D CNN voxel-wise architecture for sequential segmentation of brain tumors from MR images. The task of multiclass segmentation was largely divided into a sequence of binary hierarchical tasks to segment complete, core, and enhancing tumors for better utilization of hierarchical features of brain tumor structures. The resultant segments were then used as a crisp mask to identify tumor cores and enhancing tumors, which could lead to anatomical constraints during the final segmentation. The predicted tumor core was constrained to be within the whole tumor, while the enhancing tumor region was within the core tumor region. Additionally, the test-time augmentation technique was used to obtain structure-wise and voxel-wise uncertainty estimation of the segmentation results. Finally, a CRF was proposed as the post-processing stage to smoothen the segmentation results. A robust segmentation resulted in a balanced property of memory consumption, model complexity, and multi-view fusion. However, the method has two main limitations: (i) it is highly dependent on the voxel-wise annotations technique and (ii) time-consuming for large datasets. Additionally, this approach requires post-processing for segmentation tuning. The advantages and disadvantages of all of the discussed segmentation approaches are summarized in

Table 1.

Table 1. Strengths and limitations of intensity-based and machine learning approaches for brain segmentation.

| Categories |

Ref |

Strengths |

Limitations |

| Thresholding |

[5][6][7][8][9][10] | [5,6,7,8,9,10] |

|

-

Simple implementation.

-

Low computation time.

|

|

| |

|

| Region based |

[11][12][13][14][15] | [11,12,13,14,15] |

|

-

High segmentation accuracy required for tumor regions.

-

Low computation time.

-

High segmentation efficiency for 3D images.

-

High segmentation performance in complex regions.

|

|

| |

|

Traditional

machine learning |

[16][17][18][19][20] | [16,17,18,19,20] |

| |

|

|

-

Parameter initialization is subjective.

-

Requires skillful users.

-

Low segmentation performance for semantic type segmentation.

-

Optimum representation features determination is very subjective.

-

Model trapped in a local minimum due to imbalance between exploration and exploitation.

|

|

| Deep learning |

[21][22][23][24][25][26][27][28][29][30][31][32][33][34][35][36][37][38][39][40][41][42][43][44][45] | [21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45] |

|

-

Adaptive feature map.

-

High performance of semantic-based segmentation.

-

High performance in complex regions.

-

Best segmentation results compared to other categories.

|

|

| |

|

3.3. Hybrid Segmentation Approaches

Hybrid segmentation is the fourth category of brain image segmentation, which includes the integration of different methods to improve the segmentation performance and achieve the segmentation objectives. Therefore, hybrid approaches refer to the combination of two or more related methods by utilizing their advantages to achieve high segmentation accuracy. In general, hybrid-based approaches perform well, possess better designs, have shorter computational time, and have adaptive modulations towards the target task in comparison to other segmentation approaches. Hybrid segmentation can be divided into three sub-categories, namely, (i) contour-based and machine learning, (ii) metaheuristic, and machine learning and (iii) deep learning and machine learning. Each sub-category contains several approaches that aim to segment the required MR image.

3.3.1. Contour-Based and Machine Learning

The combination of the contour-based and machine learning approach can improve initialization parameters, perform further spatial constraints, direct the evolution of intensity-based pipelines, and enhance data mining algorithms by refining the process. There are several previous studies

[46][47][48][49][46,47,48,49] that were conducted based on this sub-category.

Ma et al.

[46] hybridized concatenated and connected random forests (ccRFs) and multi patch active contour (mpAC) methods to automate the segmentation of glioma structures from volumetric multimodal MR images and impose a contour evolution on the voxel classification, which was considered as the local dependency of labels. The ccRFs were used to represent the adaptive features iteratively and efficiently to handle data imbalance issues by exploring both local and contextual information from multimodal images. Meanwhile, the mpAC technique was used for the final segmentation of the initially inferred tumor structure from the voxel classification of the ccRFs model. Although the proposed method resulted in promising findings, there are some drawbacks. Firstly, the hybridized approach highly depends on the labeled training data. Secondly, the use of multiple imaging modalities for model training on a specific feature of learning kernels and aggregation of feature maps by the max-out process is not optimal for the aggregation of imaging modalities.

In another hybridization study, Lim and Mandava

[47] proposed a semi-automatic method that incorporated both prior knowledge and image statistics in three major phases for the detection of brain abnormalities in the MR image. For the first phase, a user was permitted to determine the regions of interest using a modified random walks algorithm to perform initial segmentation and produce a feature map from each image. Then, the feature maps were incorporated into the image information and combined using the weighted averaging method. Finally, information-theoretic rough sets (ITRS) were used for the post-processing phase to locate the ambiguous boundary regions between the tumor and its background. However, the user-based interaction approach requires users to place seeds manually to distinguish between the objects and backgrounds. The inappropriate initialization of the seed can produce poor and inaccurate results. Moreover, the proposed model was only tested using limited real brain images.

Recently, Tripathi et al.

[48] proposed an integrated Otsu k-means method for tumor components segmentation. This method integrated Otsu thresholding and k-means clustering to generate tumors using T2-W and FLAIR image modalities. Although this model addressed the data limitation problem, it is highly influenced by noise.

Another recent work by Khalil et al.

[49] adapted the dragonfly algorithm (DA) to perform a clustering-based contouring approach for brain tumor segmentation. First, the two-step DA-based clustering was used to extract tumor edge as initial tumor contour for the MR image sequence. Instead of using a random initial position in DA, k-means was employed to identify the initial swarm centroids. Finally, the level set model was used to extract the tumor region from all volume slices. However, the usage of k-means to determine the initial centroids for DA may lead to non-stable performance because k-means is known to suffer from (i) dependency on initialization and (ii) the tendency to terminate in local optima.

3.3.2. Metaheuristic and Machine Learning

The combination of metaheuristic and machine learning methods is the second sub-category of the hybridization method that can be used to optimize the separation characteristics of the machine learning method in segmented images. Additionally, this type of hybridized approach is generally used to solve or reduce the major drawbacks of machine learning segmentation methods, such as the possibility of being trapped in a local minimum and sensitivity to noise. Several studies

[50][51][52][53][54][55][50,51,52,53,54,55] have employed a combination of metaheuristic and machine learning methods.

A new hybridization method for brain tissue segmentation, which is a combination of metaheuristic particle swarm optimization (PSO) method and kernelized fuzzy entropy clustering with Baize correction method and spatial information (PSO-KFECSB), was introduced by Pham et al.

[50]. The approach was developed to partially overcome clustering-based segmentation problems such as (i) intensity non-uniformity (INU) artifact and sensitivity to noise and (ii) dependency on the initial clustering centroids and being trapped in local minima. However, the performance of this approach decreased with the co-existence of high noise levels and INU artifacts in the MR image data. Moreover, only one KFECSB criterion was used to direct the solution search process where the global optimum of standards may not be optimum for segmentation. The issue was solved as reported in a different study by the same group of researchers, Pham et al.

[51]. A multi-objective optimization strategy was carried out to exploit the strengths of other criteria to enhance the trade-off property between preserving image details and restraining noise for image segmentation. A modified multi-objective particle swarm optimization (MOPSO) approach was proposed to optimize both objective functions of fuzzy c-means (FCM) and a region-based active contour method simultaneously to solve major drawbacks of this hybrid segmentation approach for segmenting brain tissue. This approach aimed to achieve compactness and separation by optimizing the separation between the clusters/regions from each other and consider both bias correction and spatial information in the objective functions to reduce noise effects and intensity non-uniformity artifacts. Nevertheless, this approach requires high computational time to specify the two-scale parameters (ρ, ζ), where ρ is the level of intensity inhomogeneity, and ζ is the level of noise. These parameters control the influence of global and local fitting energy force that is subjective and highly dependent on the degree of noise and INU artifact of the input images.

In another study, a hybridized model based on the combination of FCM, particle swarm optimization (PSO), and the level set method for the segmentation of the brain tumor was investigated by Ali et al.