1. Introduction

In this technological era, smartphone usage and its associated applications are rapidly increasing [1] due to the convenience and efficiency they offer and the continuing improvement in the hardware and software of smart devices. It is predicted that there will be 4.3 billion smartphone users by 2023 [1]. Android is the most widely used mobile operating system (OS). As of May 2021, its market share was 72.2% [2]. The second highest market share, 26.99%, is held by Apple iOS, while the remaining 0.81% is shared among Samsung, KaiOS, and other small vendors [2]. Google Play is the official app store for Android-based devices. Over 2.9 million apps were published on it as of May 2021. Of these, more than 2.5 million are classified as regular apps, while 0.4 million are classified as low-quality apps by AppBrain [3]. Android’s worldwide popularity makes it an attractive target for cybercriminals and puts it at greater risk from malware and viruses. Studies have proposed various methods of detecting these attacks, and machine learning (ML) is one of the most prominent techniques among them [4]. This is because ML techniques are able to derive a classifier from a (limited) set of training examples. The use of examples thus avoids the need to explicitly define signatures in developing malware detectors. Defining signatures requires expertise and tedious human involvement, and for some attack scenarios explicit rules (signatures) do not exist, but examples can be obtained easily. Numerous industrial and academic research studies have been carried out on ML-based malware detection on Android, which is the focus of this review paper.

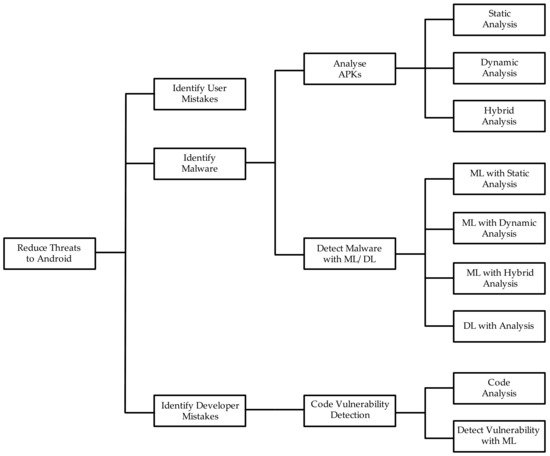

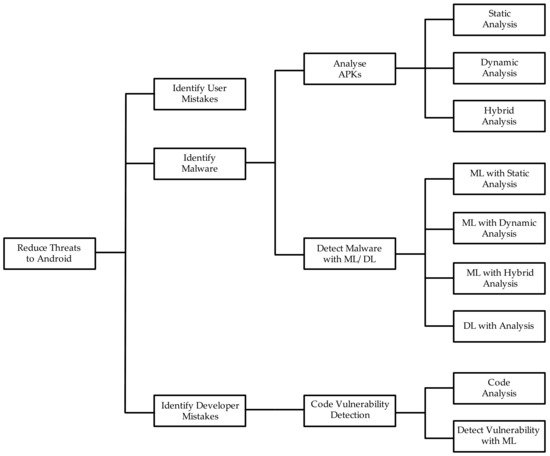

The taxonomical classification of the review is presented in Figure 1. Android users and developers are known to make mistakes that expose them to unnecessary dangers and risks of infecting their devices with malware. Therefore, in addition to malware detection techniques, methods to identify these mistakes are important and are covered in this paper (see Figure 1). Detecting malware with ML involves two main phases: analysing Android Application Packages (APKs) to derive a suitable set of features, and then training machine learning and deep learning (DL) methods on the derived features to recognise malicious APKs. Hence, a review of the methods available for APK analysis is included, which consists of static, dynamic, and hybrid analysis. Similar to malware detection, vulnerability detection in software code involves two main phases, namely feature generation through code analysis and training ML on derived features to detect vulnerable code segments. Hence, these two aspects are included in the review’s taxonomy.

The rest of this paper is organised as follows: Section 2 lays out the background to this study. Section 3 provides a detailed description of the review methodology, while Section 4 discusses related previous reviews on the topic. Section 5 discusses static, dynamic, and hybrid analysis techniques for Android malware detection and the application of ML and DL methods as well as a comparison of the methods used in the individual studies. Section 6 discusses ML methods to identify code vulnerabilities, with Section 7 exploring the results and discussions thereof. Finally, Section 8 concludes the paper.

2. Background

This section provides a high-level overview of the Android architecture and its built-in security as well as potential threat vectors for Android. It also provides an introduction to the ML process, as this will help readers without an ML background to understand the contents of this paper.

2.1. Android Architecture

Android is built on top of the Linux Kernel. Linux is chosen because it is open source, verifies the pathway evidence, provides drivers and mechanisms for networking, and manages virtual memory, device power, and security [5]. Android has a layered architecture [6]. The layers are arranged from bottom to top. On top of the Linux Kernel Layer, the Hardware Abstraction Layer, Native C/C++ Libraries and Android Runtime, Java Application Programming Interface (API) Framework, and System Apps are stacked on top of each other. Each layer is responsible for a particular task. For example, the Java API Framework provides Java libraries to perform location-awareness activities such as identifying the latitude and the longitude.

Android-based applications and some system services use the Android Runtime (ART). Dalvik was the runtime environment used before ART. Both ART and Dalvik were created for Android application projects. ART executes the Dalvik Executable (DEX) format and the bytecode specification [7]. The other aspects are memory management and power management, since Android-based applications run on battery-powered devices with limited memory. Therefore, the Android operating system is designed so that every resource can be well managed [5]. For instance, the Android OS will automatically suspend an application in memory if it is not in use at the moment. This state is known as the running state of the application life cycle. By doing this, it can preserve the power that can be utilised when the application reopens. Otherwise, the applications are kept idle until they are closed [8].

Built-In Security

Android comes with security already built in. It is a privilege-separated operating system [9]. The sandboxing technique and the permission system in Android reduce some risks and bugs in applications. The sandboxing technique isolates running applications using unique identifiers, which are based on the Linux environment [10]. Apps cannot access system resources unless the user grants permissions at the time of app installation or reconfiguration. If some of the permissions are not granted, then the application itself will not be usable. When a system update or upgrade happens, several improvements are made in terms of security and privacy. For example, Android 11, the latest stable Android version at the time of writing, contains some changes related to security and privacy such as scoped storage enforcement, one-time permissions, permissions auto-reset, background location access, package visibility, and foreground services [11].

However, malware attacks can still exploit vulnerabilities in the applications developed by various users, because the Google Play Store will not detect some vulnerabilities when applications are published in it, as in Apple App Store [12].

2.2. Threats to Android

While Android has good built-in security measures, there are several design weaknesses and security flaws that have become threats to its users. Awareness of those threats is also important for performing proper malware detection and vulnerability analysis. Many research and technical reports related to Android threats have been published [13], classifying Android threats based on the attack methodology. Social engineering attacks, physical access retrieving attacks, and network attacks are described under the ways of gaining access to the device. For the vulnerabilities and exploitation methods, man-in-the-middle attacks, return-to-libc attacks, JIT-spraying attacks, third-party library vulnerabilities, Dalvik vulnerabilities, network architecture vulnerabilities, virtualization vulnerabilities, and Android debug bridges and kernel vulnerabilities are considered.

The survey in [14] identified four types of attacks on Android: hardware-based attacks, kernel-based attacks, Hardware Abstraction Layer (HAL) based attacks, and application-based attacks. Hardware-based attacks such as Rowhammer, Glitch, and Drammer are related to sensors, touch screens, communication media, and DRAM. Kernel-based attacks such as Gooligan, DroidKungfu, and Return-oriented Programming are related to Root Privilege, Memory, Boot Loader, and Device Driver. HAL-based attacks such as Return to User and TocTou are related to interfaces for cameras, Bluetooth, Wi-Fi, Global Positioning System (GPS), and Radio. Application-based attacks such as AdDetect, WuKong, and LibSift are related to third-party libraries, Intra-Library collusion, and privilege escalations.

Android applications are easily penetrable with proper knowledge of Android programming if suitable security mechanisms are not in place. In addition, Android marketplaces such as Google Play do not follow extensive security protocols when new apps are published. For example, the Android game known as Angry Bird was hacked: the hacker managed to get into its APK file and embed malicious code that sent text messages without the user’s knowledge. The cost to the user was 15 GBP per message. More than a thousand users were affected [15].

2.2.1. Malware Attacks on Android

Malware attacks are the most common threat to Android. Researchers have given various definitions of malware depending on the harm it causes. In essence, malware is any malicious application containing a piece of malicious code [16] which has an evil intent [17] to obtain unauthorised access and to perform activities that are neither legal nor ethical while violating the three main principles in security: confidentiality, integrity, and availability.

Malware related to smart devices can be classified from three perspectives: attack goals and behaviour, distribution and infection routes, and privilege acquisition modes [18]. Frauds, spam emails, data theft, and misuse of resources fall under the attack goals and behaviour perspective. Software markets, browsers, networks, and devices can be identified as the distribution and infection routes. Technical exploitation and user manipulation such as social engineering can be listed under the privilege acquisition modes. Malware specifically related to the Android operating system is identified as Android malware [19], which harms or steals data from an Android-based mobile device. It is categorised into Trojans, spyware, adware, ransomware, worms, botnets, and backdoors [20]. Google describes malware as potentially harmful applications. It classifies malware as commercial and noncommercial spyware, backdoors, privilege escalation, phishing, types of fraud such as click fraud, toll fraud, and Short Message Service (SMS) fraud, and Trojans [21].

App collusion should also be considered when studying malware. App collusion is two or more apps working together to achieve a malicious goal [22]. However, if those apps run individually, there is no possibility of a malicious activity happening. Detecting app collusion therefore requires detecting malicious inter-app communication and examining app permissions [23,24].
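The idea that neither app is harmful alone but the pair is can be illustrated with a minimal sketch. The app names, the `sends_to` field, and the two permission sets below are hypothetical and chosen only for illustration; real collusion detection, as discussed in [23,24], analyses actual inter-app communication channels.

```python
# Hypothetical groupings: permissions that read private data, and permissions
# that can move data off the device.
SENSITIVE_SOURCES = {"READ_CONTACTS", "READ_SMS", "ACCESS_FINE_LOCATION"}
EXFILTRATION_SINKS = {"INTERNET", "SEND_SMS"}

# Hypothetical apps: the permissions each holds and the apps it sends data to.
apps = {
    "notes_app": {"permissions": {"READ_CONTACTS"}, "sends_to": {"weather_app"}},
    "weather_app": {"permissions": {"INTERNET"}, "sends_to": set()},
    "torch_app": {"permissions": set(), "sends_to": set()},
}


def is_risky_alone(app):
    """An app is risky on its own if it can both read and exfiltrate data."""
    perms = app["permissions"]
    return bool(perms & SENSITIVE_SOURCES) and bool(perms & EXFILTRATION_SINKS)


def colluding_pairs(apps):
    """Return (sender, receiver) pairs that jointly form a source-to-sink path."""
    pairs = []
    for sender_name, sender in apps.items():
        for receiver_name in sorted(sender["sends_to"]):
            receiver = apps[receiver_name]
            has_source = bool(sender["permissions"] & SENSITIVE_SOURCES)
            has_sink = bool(receiver["permissions"] & EXFILTRATION_SINKS)
            if has_source and has_sink:
                pairs.append((sender_name, receiver_name))
    return pairs


print([name for name, app in apps.items() if is_risky_alone(app)])
print(colluding_pairs(apps))
```

In this toy setting no single app is flagged, yet the pair is, which is why permission checks on individual apps are not sufficient for collusion detection.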

2.2.2. Users and App Developers’ Mistakes

Both developers and users can make mistakes, knowingly or unknowingly. These mistakes may lead to threats to the Android OS and its applications.

It has been identified that users are responsible for most security issues [25]. Some common mistakes made by users lead to serious threats in an Android application. At the time of installing Android applications, users are asked to grant some permissions. However, not all users understand the purpose of each permission. They grant permissions in order to run the application without considering the severity of doing so. Fraudulent applications might steal data and perform unintended tasks after getting the required permissions. Threats to Android systems can also arise from mistakes made by app developers when developing applications. In the publishing stage of Android apps, Google Play has only limited control over the code vulnerabilities in the applications. Sometimes developers mistakenly specify unwanted permissions in the Android manifest file, which encourages the user to grant the permissions if they are not categorised as simple permissions [26]. Though app development companies and some app stores advise developers to follow security guidelines at the time of development, many developers still fail to write secure code to build secure mobile applications [27].

2.3. Machine Learning Process

ML is a branch of artificial intelligence that focuses on developing applications by learning from data without explicitly programming how the learned tasks are performed. The traditional ML methods make predictions based on past data. The ML process lifecycle consists of multiple sequential steps: data extraction, data preprocessing, feature selection, model training, model evaluation, and model deployment [9]. Supervised learning, unsupervised learning, semisupervised learning, reinforcement learning, and deep learning are the different subcategories of ML [28]. The supervised learning approach uses a labelled dataset to train the model to solve classification and regression problems, depending on the output variable type (discrete or continuous). Unsupervised learning is used to identify the internal structures (clusters) and the characteristics of a dataset, and a labelled dataset is not required to train the model. A mix of both supervised and unsupervised learning techniques is applied in semisupervised learning, which is used when only limited labelled data is available in the dataset [29]. The learning model and the data used for training are inferred. In reinforcement learning, where no training data is involved, the model parameters are updated with the feedback received from the environment. This ML method proceeds as prediction and evaluation cycles [30]. DL is defined as learning and improving by analysing algorithms on their own. It works with models such as artificial neural networks (ANN) and consists of a larger number of (deeper) processing layers [31].
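The training and evaluation steps of the supervised lifecycle described above can be sketched in a few lines. This is only an illustration under stated assumptions: the nearest-centroid classifier is a stand-in chosen for brevity (it is not a method taken from the reviewed studies), and the three binary features and the tiny labelled dataset are hypothetical.

```python
# Each sample is a binary feature vector [SEND_SMS, READ_CONTACTS, INTERNET].
# The data below is made up purely for illustration.
train_samples = [[1, 1, 1], [1, 0, 1], [0, 0, 1], [0, 0, 0]]
train_labels = ["malicious", "malicious", "benign", "benign"]

test_samples = [[1, 1, 0], [0, 0, 1]]
test_labels = ["malicious", "benign"]


def train_centroids(samples, labels):
    """Model training: compute the mean feature vector of each class."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids, x):
    """Prediction: return the class whose centroid is closest to x."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, x))
    return min(centroids, key=lambda y: squared_distance(centroids[y]))


# Model evaluation: fraction of held-out samples classified correctly.
centroids = train_centroids(train_samples, train_labels)
predictions = [predict(centroids, x) for x in test_samples]
accuracy = sum(p == t for p, t in zip(predictions, test_labels)) / len(test_labels)
print(predictions, accuracy)
```

Because the output variable here is discrete (malicious or benign), this is a classification problem; a continuous output would make it a regression problem instead.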

3. Methodology

Android was first released in 2008. A few years later, security concerns began to be discussed as the popularity of Android applications increased [2]. Applying ML to software security has received more attention in the last five years because many researchers continuously identify and propose novel ML-based methods [9]. This review was conducted according to the Preferred Reporting Items for Systematic reviews and Meta-Analysis (PRISMA) model [32]. Based on the objective of this study, we first formulated several research questions (see Section 3.1). Next, a search strategy was defined to identify the studies which can be used to answer our research questions. The database usage and the inclusion and exclusion criteria were also defined at this stage. As the third stage, the study selection criteria were defined to identify the studies aiming to answer the formulated research questions. The fourth stage is data extraction and synthesis, which describes how the collected studies were analysed to provide answers to the research questions. As the last step of the review process, we reviewed threats to the validity of the review and the mechanisms to reduce bias and other factors that could have influenced the outcomes of this study.

3.1. Research Questions

This systematic review aims to answer the following research questions.

- RQ1: What are the existing reviews conducted in ML/DL based models to detect Android malware and source code vulnerabilities?
- RQ2: What are code/APK analysing methods that can be used in malware analysis?
- RQ3: What are the ML/DL based methods that can be used to detect malware in Android?
- RQ4: What are the accuracy, strengths, and limitations of the proposed models related to Android malware detection?
- RQ5: Which techniques can be used to analyse Android source code to detect vulnerabilities?

3.2. Search Strategy

The search strategy involves the outline of the most relevant bibliographic sources and search terms. In this review, we used several top research repositories as the main sources to identify studies. They were ACM Digital Library, IEEE Xplore Digital Library, Science Direct, Web of Science, and Springer Link. Google Scholar and ResearchGate were also used to identify research studies published in some quality venues. The search string that we used to browse through research repositories contained the following search terms: (“android malware”) OR (“malware detection”) OR (“machine learning”) OR (“deep learning”) OR (“static analysis”) OR (“dynamic analysis”) OR (“hybrid analysis”) OR (“malware analysis”) OR (“android vulnerability analysis”) OR (“ML based malware detection”) OR (“DL based malware detection”).

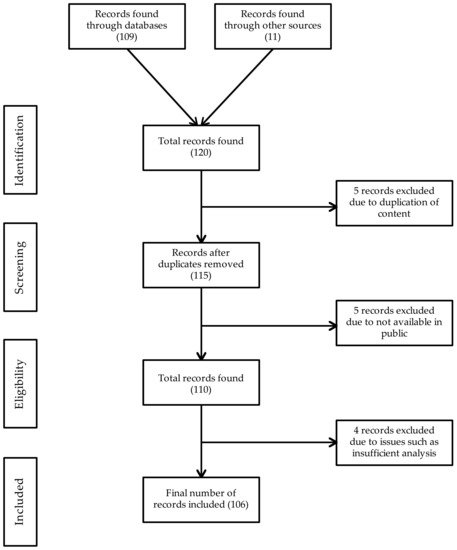

3.3. Study Selection Criteria

Since interest in mobile malware detection using ML techniques has grown since 2016, we limited our review to related work from 2016 to May 2021. Initially, 109 research papers were identified through searches of the top research repositories and 11 research papers from other sources. Of these 120 papers, 5 were excluded as duplicate entries and another 5 were excluded because they were not publicly available, leaving 110 articles. A further 4 articles were excluded, although their full text was available, due to data analysis issues and experiment issues in the given context. The remaining 106 articles were reviewed in this study. We performed the snowballing process [33], considering all the references presented in the retrieved papers and evaluating all the papers referencing the retrieved ones, which resulted in two additional relevant papers. We applied the same process to these as to the retrieved papers. The snowballing search was conducted in March 2021.

Figure 2 shows a summary of the paper selection method for this systematic review.

Figure 2. PRISMA method: collection of papers for the review.

3.4. Data Extraction and Synthesis

We extracted data from 9 studies to answer RQ1, which is about the existing literature reviews related to Android malware detection using ML/DL models and Android vulnerability analysis. For RQ2, we identified 22 studies on Android code/APK analysing techniques that can be used to analyse malware. To answer RQ3, about ML/DL based techniques which can be used to detect malware, we extracted data from 18 different studies. Data from 36 research studies were extracted to answer RQ4, which is about detection model accuracy, strengths, and weaknesses. The remaining 21 papers, about Android source code vulnerability analysis and detection methods, were used to answer RQ5.
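As a quick consistency check, the paper counts stated in Sections 3.3 and 3.4 can be tallied as follows. The sketch uses only the figures given in the text; the two papers found through snowballing are left out because the text does not state how they are counted.

```python
# Study selection (Section 3.3): identification and exclusions.
identified = 109 + 11                  # repository search + other sources
after_duplicates = identified - 5      # duplicate entries removed
after_availability = after_duplicates - 5  # not publicly available
reviewed = after_availability - 4      # data analysis / experiment issues

# Data extraction (Section 3.4): studies mapped to each research question.
studies_per_rq = {"RQ1": 9, "RQ2": 22, "RQ3": 18, "RQ4": 36, "RQ5": 21}

print(identified, after_availability, reviewed)
print(sum(studies_per_rq.values()))
```

Both tallies arrive at 106, so the per-question counts account for every reviewed article exactly once.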

3.5. Threats to Validity of the Review

This review was conducted using the systematic approach explained above. We tried to minimise bias and other factors affecting the review study. Though we conducted our review comprehensively, there may still be good papers which were not reviewed in this study because they are not available in the research repositories that we used. The period considered for paper selection is from 2016 to May 2021, as the use of ML techniques for malware detection has increased significantly during this period due to recent advances in artificial intelligence. Therefore, any comprehensive studies conducted before that period were not captured in our work. When searching for papers, we considered only research papers written in the English language. Because of this limitation, our work may have overlooked some important works written in other languages such as Chinese, German, and Spanish.

4. Related Work

Previous reviews in [9,13,17,34,35,36,37] discussed various ML-based Android malware detection techniques and ways to improve Android security.

The review in [34] systematically reviewed the studies conducted on static analysis techniques used for Android applications from 2011 to 2015. The tools that can be used to perform Android code analysis using static analysis techniques were also summarised. Abstract representation, taint analysis, symbolic execution, program slicing, code instrumentation, and type/model checking were identified as fundamental analysis methods. Though this review correctly identified the most widely used approach to detect privacy and security related issues, the applicability of static analysis techniques for malware detection was not discussed. Apart from that, it did not take into account the recent research where novel analysis methods and malware detection methods were suggested. The study conducted in [35] provided a good systematic review mainly about static analysis techniques that can be used in Android malware detection. Four methods were identified: characteristic-based, opcode-based, program graph-based, and symbolic execution-based. After that, it evaluated the capabilities of static analysis based Android malware detection methods on those four methods using the existing literature. The paper identified ML and statistical models as possible methods by which Android malware can be identified. However, ML-based methods were not thoroughly reviewed, as the main focus is only on the static analysis techniques.

In [13], a survey was carried out using existing literature up to 2017 to identify malware detection techniques together with their advantages and disadvantages. Under static and dynamic analysis, the authors grouped several approaches that can be used to identify Android malware. However, the analysis of this survey was not comprehensive as it focused on a limited number of studies. Based on the previous studies, a systematic review was conducted in [17]. According to it, there are five types of Android malware detection techniques: static detection, dynamic detection, hybrid detection, permission-based detection, and emulation-based detection. The authors also summarised the reviewed work with the model accuracy of malware detection, but the approach of those studies was not discussed. The review conducted in [9] analysed several studies conducted until 2019 related to ML models which can be used to detect Android malware. The malware and APK analysis methods were not discussed in detail since identifying different ML models was the priority in this review. It would have been better to analyse the accuracies of the identified ML models. The novel ML/DL and other models which can be used to detect Android malware were also not the focus of this review. The review in [36] provides a good analysis of static, dynamic, and hybrid detection techniques used in the existing research studies for Android malware detection. Along with the possibility of using machine learning models, several deep learning models are also discussed. However, this study did not comprehensively analyse the model accuracy of the machine learning methods for Android malware detection, since it focused more on discussing different malware detection approaches than on the accuracy of those approaches. Hence, these works differ from our study.

In [37], a systematic review of DL-based methods for Android malware defence was presented. Malware detection, malware family detection, repackaged/fake app detection, adversarial learning attacks and protections, and malicious behaviour analysis were identified as the malware defence objectives in this review, together with the usage of DL models. Though the authors identified the possible DL models, it would still be better to analyse their accuracy and compare it with traditional ML methods and other hybrid approaches.

Apart from Android malware detection techniques, source code vulnerability analysis is also important to address security concerns in Android. The survey in [38] analysed several studies, published until 2017, on ML-based and data mining approaches which can be used to identify software vulnerabilities. Though this survey provides a good analysis, it considered mostly research work in general software security. Therefore, the vulnerability analysis in Android code was not discussed. However, findings such as ML models’ usage for vulnerability analysis are still beneficial for analysis related to specific programming languages.

However, several limitations have been identified in the above works, such as not covering recent proposals on ML methods to detect malware, narrow scopes, and lack of critical appraisals of suggested detection methods. The lack of a thorough analysis of ML/DL-based methods was also identified as a limitation of existing works. Android malware detection and Android code vulnerability analysis have a lot in common. ML methods used in one task can be customised for use in the other task. However, as per our understanding, there are no reviews that cover these two areas together. These shortcomings have been addressed in this work and therefore our work is unique.

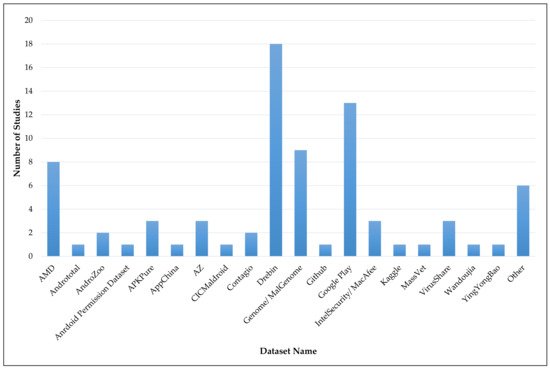

5. Machine Learning to Detect Android Malware

Malware detection in Android can be performed in two ways: signature-based detection methods and behaviour-based detection methods [39]. The signature-based detection method is simple, efficient, and produces few false positives. The binary code of the application is compared with the signatures in a known malware database. However, unknown malware cannot be detected using this method. Therefore, the behaviour-based/anomaly-based detection method is the most commonly used way. This method usually borrows techniques from machine learning and data science. Many research studies have been conducted to detect Android malware using traditional ML-based methods such as Decision Trees (DT) and Support Vector Machines (SVM) and novel DL-based models such as Deep Convolutional Neural Network (Deep-CNN) [40] and Generative Adversarial Networks [41]. These studies have shown that ML can be effectively utilised for malware detection in Android [9]. Most of these studies used datasets such as Drebin [42], Google Play [43], AndroZoo [44], AppChina [45], Tencent [46], YingYongBao [47], Contagio [48], Genome/MalGenome [49], VirusShare [50], IntelSecurity/McAfee [51], MassVet [52], Android Malware Dataset (AMD) [53], APKPure [54], Android Permission Dataset [55], Andrototal [56], Wandoujia [57], Kaggle [58], CICMaldroid [59], AZ [60], and Github [61] to perform experiments and model training.
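The limitation of signature-based detection noted above can be seen in a minimal sketch. It assumes, as a simplification, that a signature is the SHA-256 hash of the whole APK; real signature databases often use byte patterns or other fingerprints instead. The sample bytes are hypothetical placeholders.

```python
import hashlib


def signature(apk_bytes: bytes) -> str:
    """Simplified signature: the SHA-256 digest of the full APK content."""
    return hashlib.sha256(apk_bytes).hexdigest()


# Hypothetical known-malware database containing one sample's signature.
known_malware_sample = b"hypothetical malicious APK bytes"
signature_db = {signature(known_malware_sample)}


def is_known_malware(apk_bytes: bytes, db: set) -> bool:
    """Flag an APK only if its signature is already in the database."""
    return signature(apk_bytes) in db


# A trivially modified variant of the same sample (one extra byte).
variant = known_malware_sample + b"\x00"

print(is_known_malware(known_malware_sample, signature_db))
print(is_known_malware(variant, signature_db))
```

The known sample is matched, while the slightly altered variant is missed, which is the gap that behaviour-based and ML-based detection aims to close.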

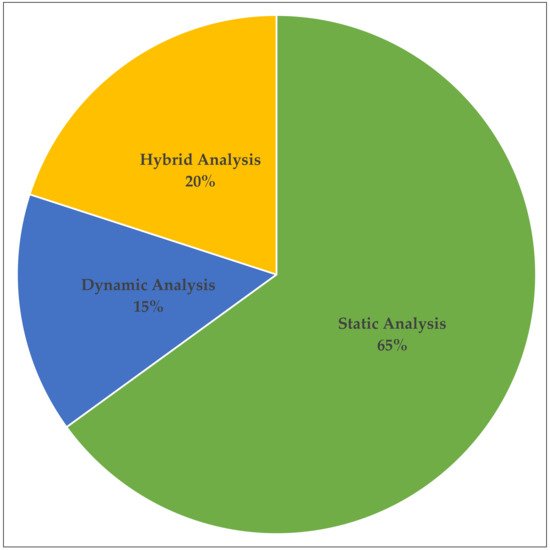

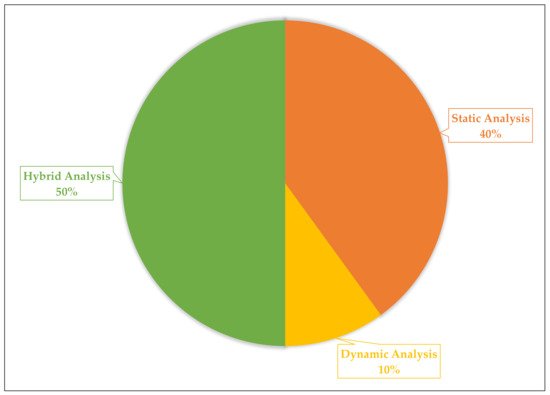

5.1. Static, Dynamic, and Hybrid Analysis

As mentioned earlier, analysing APKs to extract features is required to use some of the ML techniques proposed in the literature. To this end, three analysis techniques are identified: static, dynamic, and hybrid analysis [62,63,64]. Static analysis is performed by analysing the bytecode and source code (or re-engineered APK) instead of running it on a mobile device. Dynamic analysis detects malware by analysing the application while it is running in a simulated or real environment. However, dynamic analysis is likely to expose the runtime environment to some risk, since malicious code that can harm the environment will be executed. Hybrid analysis involves methods from both static and dynamic analysis.

Under the static analysis, four aspects were proposed [28]: analysis techniques, sensitivity analysis, data structure, and code representation. Under the analysis techniques, symbolic execution, taint analysis, program slicing, abstract interpretation, type checking, and code instrumentation were identified. For the sensitivity analysis, object, context, field, path, and flow were identified. For the data structure aspect, it is possible to list Call Graph (CG), Control Flow Graph (CFG), and Inter-Procedural Control Flow Graph (ICFG). Smali, Jimple, Wala-IR, Dex-Assembler, Java bytecode, or class were listed under the code representation aspect. Kernel, application, and emulator fall under the inspection level aspect. Taint analysis and anomaly-based detection fall under the dynamic analysis approaches.

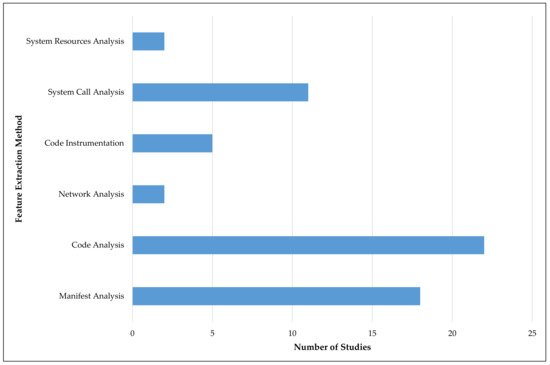

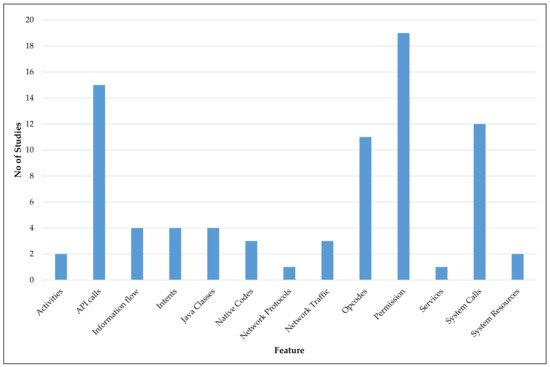

The feature extraction methods available in static analysis are of two types: Manifest Analysis and Code Analysis [65]. Features such as package name, permissions, intents, activities, services, and providers can be identified in Manifest Analysis. In Code Analysis, API calls, information flow, taint tracking, opcodes, native code, and cleartext analysis can be identified as possible features to extract. For dynamic analysis, five feature extraction methods were identified: (1) network traffic analysis for features like Uniform Resource Locators (URL), Internet Protocol (IP), network protocols, certificates, and nonencrypted data, (2) code instrumentation for features such as Java classes, intents, and network traffic, (3) system calls analysis, (4) system resources analysis for features such as processor, memory and battery usage, process reports, and network usage, and (5) user interaction analysis for features such as buttons, icons, and actions/events. The study in [66] explored the security of ML for Android malware detection techniques using a learning-based classifier with API calls extracted from converted smali files. A sophisticated secure learning method was then proposed, which showed that it is possible to enhance the security of the system against a wide range of evasion attacks. This model is also applicable to anti-spam and fraud detection areas. This study can be further improved by exploring the possibilities of identifying attacks that can alter the training process.
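Manifest Analysis, as described above, can be sketched with the Python standard library. The sketch assumes the manifest has already been decoded to plain-text XML (inside an APK it is stored in a binary XML format, so a decoding tool is needed first). The manifest content and the function name `extract_manifest_features` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace that prefixes android:* attributes once the XML is parsed.
ANDROID_NS = "{http://schemas.android.com/apk/res/android}"

# Hypothetical decoded AndroidManifest.xml used only for illustration.
MANIFEST_XML = """<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.demo">
    <uses-permission android:name="android.permission.INTERNET"/>
    <uses-permission android:name="android.permission.SEND_SMS"/>
    <application>
        <activity android:name=".MainActivity">
            <intent-filter>
                <action android:name="android.intent.action.MAIN"/>
            </intent-filter>
        </activity>
        <service android:name=".SyncService"/>
    </application>
</manifest>"""


def extract_manifest_features(manifest_xml):
    """Collect the manifest features listed in the text into a dictionary."""
    root = ET.fromstring(manifest_xml)

    def names(tag):
        # android:name attribute of every element with the given tag
        return [element.get(ANDROID_NS + "name") for element in root.iter(tag)]

    return {
        "package": root.get("package"),
        "permissions": names("uses-permission"),
        "activities": names("activity"),
        "services": names("service"),
        "providers": names("provider"),
        "intent_actions": names("action"),
    }


features = extract_manifest_features(MANIFEST_XML)
print(features)
```

The resulting dictionary is the kind of raw feature set that is later encoded into vectors for ML training.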

5.2. Static Analysis with Machine Learning

Static analysis is the most widely used mechanism for detecting Android malware. This is because malicious apps do not need to be installed on the device, as this approach does not use the runtime environment [67].

5.2.1. Manifest Based Static Analysis with ML

Manifest based static analysis is a widely used static analysis technique. The model proposed in SigPID [68] discussed an Android permission-based malware detection mechanism. This model identified only 22 significant permissions out of all the permissions listed in sample APKs by developing a three-level data pruning method: permission ranking with negative rate, support based permission ranking, and permission mining with association rules. After that, ML algorithms were employed to detect the malware. For this process, a binary format dataset of permissions was used, which was created using a database of malware and benign apps from Google Play. The support-vector machine (SVM) outperformed the other studied ML algorithms, Naïve Bayes (NB) and DT, with over 90% accuracy. For permission-based static analysis, this work was conducted comprehensively. However, it would be better to also check the other variables, apart from permissions, that affect malware detection.
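The binary permission dataset and the idea of ranking permissions by how differently they occur in malware and benign apps can be sketched as follows. This is a deliberately simplified illustration and not the three-level pruning method of [68]; the apps, the scoring rule (absolute difference in support), and the function names are assumptions made for this example.

```python
# Hypothetical labelled apps: (requested permissions, label), 1 = malware.
apps = [
    ({"INTERNET", "SEND_SMS", "READ_CONTACTS"}, 1),
    ({"INTERNET", "SEND_SMS"}, 1),
    ({"INTERNET"}, 0),
    ({"INTERNET", "CAMERA"}, 0),
]

# Fixed permission vocabulary, sorted so vector positions are stable.
vocabulary = sorted(set().union(*(perms for perms, _ in apps)))


def to_vector(perms):
    """Encode a permission set as a binary vector over the vocabulary."""
    return [1 if p in perms else 0 for p in vocabulary]


def support(permission, label):
    """Fraction of apps with the given label that request the permission."""
    group = [perms for perms, y in apps if y == label]
    return sum(permission in perms for perms in group) / len(group)


def rank_permissions():
    """Order permissions by how strongly their support differs across classes."""
    scores = {p: abs(support(p, 1) - support(p, 0)) for p in vocabulary}
    return sorted(vocabulary, key=lambda p: (-scores[p], p))


print(vocabulary)
print([to_vector(perms) for perms, _ in apps])
print(rank_permissions())
```

In this toy data, a permission requested by every app carries no discriminative signal and ends up last, which is the intuition behind pruning the permission set before training.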

A malware detection method based on analysing Android manifest permissions was proposed in [69], using a static analyser and the decompilation support of APKTool for APK to code level extraction. The AndroZoo repository was used as the dataset to train four different ML algorithms. Random Forest (RF), SVM, NB, and K-Means were used to perform the model validity process, and RF produced the best result for this model with 82.5% precision and 81.5% recall. However, the accuracy of this model is low compared with the other studies conducted in the same area. The likely reason is that this approach compares permissions only.
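Since several of the reviewed studies report precision and recall, a short worked example of how these two metrics are computed may help. Precision is TP / (TP + FP) and recall is TP / (TP + FN), where malware is the positive class. The labels below are made up for illustration and are unrelated to the results of [69].

```python
def precision_recall(y_true, y_pred, positive=1):
    """Compute precision and recall for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical ground truth and predictions (1 = malware, 0 = benign).
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(precision, recall)
```

Here three of the four apps flagged as malware are truly malicious (precision 0.75), and three of the four malicious apps are caught (recall 0.75).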

The work proposed in [70] checked the possibility of generating reduced-dimension vectors for malware detection. Based on that, malware detection using ML models with permission-based static analysis was performed. In the feature selection stage of this approach, the model removed the unnecessary features using a linear regression-based feature selection approach. Therefore, the classification model can run in real time since the training time was decreased, with an accuracy of over 96%. The Multi-Layer Perceptron (MLP) algorithm outperformed NB, Linear Regression, k-nearest neighbors (KNN), C4.5, RF, and Sequential Minimal Optimization (SMO). It would be better to also focus on hyperparameter selection to increase the performance of the classification. The model proposed in [71] performed a static analysis on Android apps. Android permissions and intents were used as the basic static features of malware classification, while URLs, emails, and IPs were used as the basic dynamic features. Initially, the APK files were decompiled using ApkTool. The extractor module of this model extracted different types of information related to malware. After extracting the data by disassembling the dex files, the data were kept in text files and used to create the feature vector. Then the ML algorithms RF, NB, Gradient Boosting (GB), and Ada Boosting (AB) were used to train and test the malware detection model using the Drebin dataset and the Google Play Store. After performing ML training and testing for each of the permission, intent, and network features individually, it was identified that the above ML algorithms performed with different accuracies. For permissions, RF performed well with 0.98 precision and recall; for intents, NB performed well with 0.92 precision and 0.93 recall; and for network features, both RF and AB performed similarly well with 0.97 precision and recall. Though this research concluded with such accuracies for malware detection, it still lacks the study of some other features such as API calls.

An Android malware detection technique using feature weighting with joint optimisation of weight-mapping and classifier parameters is proposed in the JOWMDroid framework in [72]. This model is a static analysis-based technique that selects, from the features extracted from the app, a certain number that are related to malware detection. The APK is decompiled into the manifest and classes.dex files, and a binary feature matrix is prepared. Initial weights were calculated using RF, SVM, Logistic Regression (LR), and KNN ML models. Weight-mapping functions were designed to map the initial weights to the final weights. As the last step, the parameters of the classifiers and the weight-mapping function were jointly optimised by the Differential Evolution algorithm. The Drebin, AMD, Google Play, and APKPure datasets were used to train the model. Among weight-unaware classifiers, RF performed best with 95.25% accuracy, and among weight-aware classifiers, KNN and MLP performed better. However, with the integration of the JOWM-IO method, SVM and LR beat RF with over 96% accuracy. Considering the correlation between features may further increase the model's detection accuracy.

Table 1 comparatively summarises the above research studies related to manifest analysis based methods.

Table 1. Manifest based static Analysis with ML.

Figure 6. Usage of datasets.

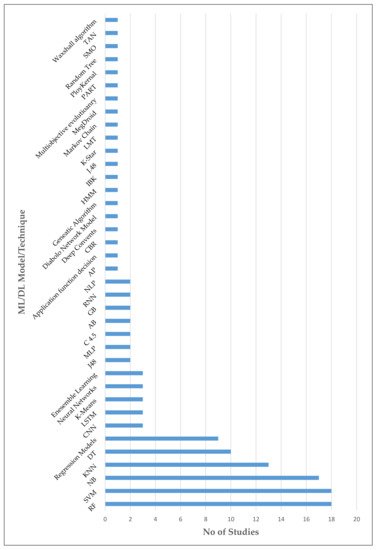

RF, SVM, and NB are the most widely studied ML models for detecting Android malware. One reason may be that the resource cost of running RF-, SVM-, or NB-based models is low. Models such as CNN, LSTM, and AB are used less often, because running such advanced models requires substantial computing power and the trend towards DL-based models has only gained momentum in recent years. Table 12 summarises widely used ML/DL algorithms with their advantages and disadvantages. Figure 7 illustrates all of the studied ML/DL models with their usage in the reviewed studies.

Figure 7. ML/DL models used in the reviewed studies.

Table 12. Commonly used ML/DL algorithms for Android malware detection.

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| DT | | |
| NB | | |
| Regression Models | | |
| KNN | | |

| Year |

Study |

Detection Approach |

Feature Extraction Method |

Used Datasets |

ML Algorithms/Models |

Selected ML Algorithms/Models |

Model Accuracy |

Strengths |

Limitations/Drawbacks |

| 2018 |

[68] |

Developing 3 level data purring method and applying ML models with SigPID |

Manifest Analysis for Permissions |

Google Play |

| Did not consider the improving clustering techniques and applicability of other ML models |

5.3. Dynamic Analysis with Machine Learning

The second analysis approach is dynamic analysis. With this approach, malware can be detected with ML after running the application in a runtime environment. Android malware detection using a network-based approach was introduced in [87]. In this approach, a detection application was developed that contained three modules: network trace collection, network feature extraction, and detection. The trace collection module monitored the network activities of running applications and recorded the network traces periodically. The feature extraction module extracted features of the network traffic used by the applications: Domain Name System (DNS)-based features, HyperText Transfer Protocol (HTTP)-based features, origin-destination-based features, and Transmission Control Protocol (TCP)-based features. DT, LR, KNN, Bayes Network, and RF algorithms were used in the detection module, and RF provided the highest accuracy (98.7%) among them. However, because this approach relies on network-based analysis, detection accuracy would decrease if malware apps used encrypted transfers. The model should therefore also account for such factors.

The model proposed in 6thSense [88] uses Markov Chain, NB, and Logistic Model Tree (LMT) to detect malware through dynamic analysis based on the sensors available in a mobile device. A context-aware intrusion detection system is studied in this approach by collecting and observing changes in sensor data. This step happened when the applications were performing activities that enhanced security. The model distinguishes malware from benign applications. Three types of malicious activity (triggering, leaking information, and stealing data) were identified via the sensors available in the device. The collected data were split into 75% for training and 25% for testing. For the Markov Chain-based detection technique, the training dataset was used to compute the state transitions and build a transition matrix. For NB, the training dataset was used to determine the frequency of sensor condition changes. For the other ML algorithms, all the data were labelled as benign or malware. In this study, LMT outperformed the others with 99.3% precision and 99.98% recall. Although this study is comprehensive, tradeoffs such as frequency-accuracy and battery-frequency should also be considered.

The method proposed in [89] is a dynamic analysis-based technique that extracts a set of dynamic permissions from APKs obtained from different sources by running them in an emulator. The model was evaluated using NB, RF, Simple Logistic, DT, and K-Star ML models, and Simple Logistic performed best with 0.997 precision and 0.996 recall. The dataset used in this model had some issues. For example, some benign and malicious apps used the same permissions, and some apps crashed when run in the emulator. Therefore, fine-tuning the dataset further before use may improve the accuracy of this model.

In [90], a framework called Service Monitor was proposed, a lightweight host-based detection system that can detect malware on devices. The framework was built using dynamic analysis. Service Monitor monitored the way apps request system services to create a Markov Chain model. The Markov Chain is used as a feature vector to perform classification with the ML algorithms RF, KNN, and SVM. RF performed best, with an accuracy of 96.7% after the model was trained with the AndroZoo, Drebin, and Malware Genome datasets. Because some benign apps also requested system services in a way similar to malware, the model could produce some misclassifications. To avoid this and enhance classification accuracy, signature-based verification could be added to Service Monitor.
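The use of a Markov chain as a feature vector can be sketched as follows: transitions between consecutive system service requests are counted, each row is normalised into probabilities, and the matrix is flattened into a fixed-length vector. The service names and the helper functions are hypothetical, and the cited framework may build its chain differently.

```python
def transition_matrix(sequence, states):
    """Estimate first-order Markov transition probabilities from one sequence.

    Assumption: every element of `sequence` appears in `states`.
    """
    index = {state: i for i, state in enumerate(states)}
    n = len(states)
    counts = [[0] * n for _ in range(n)]
    # Count each observed transition between consecutive requests.
    for current, following in zip(sequence, sequence[1:]):
        counts[index[current]][index[following]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # Rows with no outgoing transitions stay all zeros.
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


def flatten(matrix):
    """Flatten the matrix row by row into a feature vector for a classifier."""
    return [value for row in matrix for value in row]


# Hypothetical system services requested by one app, in order of request.
STATES = ["location", "telephony", "sms"]
requests = ["location", "telephony", "sms", "telephony", "sms"]
features = flatten(transition_matrix(requests, STATES))
```

Because the vector length depends only on the number of states, apps with traces of different lengths map to feature vectors of the same size.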

A mechanism named DATDroid was proposed in [91]. It is a dynamic analysis-based malware detection technique that achieved an overall accuracy of 91.7%, with 0.931 precision and 0.9 recall, using the RF algorithm. In the initial stage, feature extraction was performed by collecting system calls, recording CPU and memory usage, and recording network packet transfers. In the feature selection stage, the Gain Ratio Attribute Evaluator was applied. Model training and validation were then performed to identify malicious and benign applications using the APKPure and Genome Project datasets. In addition to the features studied here, features such as HTTP, DNS, TCP/IP, and memory usage patterns may also help to identify malware and should be discussed.
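Gain ratio scores a feature by its information gain divided by the entropy of the feature's own value distribution. The sketch below computes it for a single discrete feature. It assumes the feature has already been discretised, and the function names and sample values are illustrative rather than taken from [91].

```python
import math
from collections import Counter


def entropy(values):
    """Shannon entropy (in bits) of a list of discrete values."""
    total = len(values)
    return -sum(
        (count / total) * math.log2(count / total)
        for count in Counter(values).values()
    )


def gain_ratio(feature_values, labels):
    """Information gain of the feature divided by its split information."""
    total = len(labels)
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    # Expected label entropy after splitting on the feature.
    conditional = sum(len(g) / total * entropy(g) for g in groups.values())
    info_gain = entropy(labels) - conditional
    split_info = entropy(feature_values)
    # A constant feature carries no split information and scores zero.
    return info_gain / split_info if split_info > 0 else 0.0


# Hypothetical data: 1 means the app showed high network activity.
labels = ["malware", "malware", "benign", "benign"]
informative = [1, 1, 0, 0]
uninformative = [1, 0, 1, 0]
scores = {
    "informative": gain_ratio(informative, labels),
    "uninformative": gain_ratio(uninformative, labels),
}
```

Features can then be sorted by score and the lowest-ranked ones dropped before model training.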

In [92], a framework named MEGDroid, based on dynamic analysis, was proposed to improve the event generation process in Android malware detection. The method automatically extracted information related to malware and represented it as a domain-specific model. The model comprised the following steps: decompilation, model discovery, integration and transformation, analysis and transformation, and event production. The model was then used to analyse malware after training with the AMD dataset. It extracted every possible event source from the malware code and was developed as an Eclipse plugin. Based on the results, MEGDroid provides better coverage in malware detection through UI generation, whereas system events and system call monitoring are lacking in this approach.

Table 5 comparatively summarises the above research studies related to dynamic analysis based methods.

Table 5. Dynamic analysis based malware detection approaches.

Hybrid analysis based malware detection approaches (model accuracy is above 90%).

| Year |

Study |

Detection Approach |

Feature Extraction Method |

Used Datasets |

ML Algorithms/Models |

Selected ML Algorithms/Models |

| Feature Extraction Method |

|---|

| Selected ML Algorithms/Models |

Model Accuracy |

Strengths |

Limitations/Drawbacks |

| 2017 |

[ |

In [95], the authors conducted an experiment using various ML techniques to analyse the relative effectiveness of static and dynamic analysis methods for detecting malware. The study used the Drebin dataset and a custom dataset to train the ML algorithms to classify malware and benign apps. Altogether, the dataset contains 103 malware and 97 benign apps. For the static analysis, the APK files were reverse-engineered with a tool available in VirusTotal, and the permissions were extracted using a custom XML parser. Binary feature vectors and permission vectors were then created, and ML algorithms were applied. For the dynamic analysis, applications were executed on separate Android Virtual Devices (AVDs). System calls and their frequencies were traced using the MonkeyRunner tool, since the frequency representation of system calls contains behavioural information about apps. Usually, malware has higher frequencies than benign apps. A feature vector of system calls was then created, and ML algorithms were applied. The RF, J.48, NB, Simple Logistic, BayesNet Tree Augmented Naïve Bayes (TAN), BayesNet K2, Instance Based Learner (IBk), SMO PolyKernel, and SMO NPolyKernel algorithms were used for both static and dynamic analysis. The best results, 0.96 for static analysis and 0.88 for dynamic analysis, were achieved with RF using 100 trees. Permissions extracted from the AndroidManifest.xml file were considered for the static analysis, and system calls captured at runtime were considered for the dynamic analysis.
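The frequency representation of system calls described above can be sketched as a count vector over a fixed vocabulary of call names. The vocabulary, the sample trace, and the function names below are hypothetical and only illustrate the shape of such a feature vector.

```python
from collections import Counter


def syscall_frequency_vector(trace, vocabulary):
    """Count how often each system call in the vocabulary occurs in a trace."""
    counts = Counter(trace)
    return [counts[name] for name in vocabulary]


def relative_frequencies(vector):
    """Normalise counts so that traces of different lengths are comparable."""
    total = sum(vector)
    return [count / total if total else 0.0 for count in vector]


# Hypothetical trace recorded while an app runs on a virtual device.
VOCABULARY = ["open", "read", "write", "sendto"]
trace = ["open", "read", "read", "write", "read"]

counts = syscall_frequency_vector(trace, VOCABULARY)
shares = relative_frequencies(counts)
```

Calls outside the vocabulary are ignored here, which is a simplification; a real pipeline would need to decide how to treat unseen calls.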

The model proposed in [96] used a hybrid analysis process to detect malware with ML algorithms, achieving 80% accuracy with permission analysis in the static approach and 60% accuracy with system call analysis. Malware samples were collected using a honeypot and by searching repositories such as Andrototal to train the model. However, this study does not consider other features that affect malware detection, which should also be considered to achieve a highly accurate model.

In [97], an efficient hybrid analysis-based mechanism for Android malware detection was proposed. The Malware Genome and Drebin datasets were used to train the ML and DL models in the static analysis approach, and the CICMalDroid dataset was used for the dynamic analysis approach; 261 combined features were extracted for the hybrid analysis. To increase performance, the model applied dimension reduction using Principal Component Analysis (PCA). SVM, KNN, RF, DT, NB, MLP, and GB were used to train and test the model. Of these ML/DL algorithms, GB outperformed the others in terms of accuracy (96.35%), but it took a comparatively long training time. Forty-six features from the dynamic analysis results were also analysed. After the combined hybrid analysis, GB again performed best, with an accuracy of 99.36%, and was efficient compared with RF and MLP. The runtime environment and configuration should be studied further, because this work does not cover some of these areas.

The model described in [98] proposed a Tree Augmented Naïve Bayes (TAN)-based hybrid malware detection mechanism that considers both static and dynamic features: API calls, permissions, and system calls. LR classifiers were trained for these three feature types, and their output relationships were modelled as a TAN to decide whether a given app is malicious or benign, with an accuracy of 0.97. The Drebin, AMD, AZ, GitHub, and GP datasets were used. Some malware may still remain undetected by the model, which could be reduced using Reinforcement Learning techniques.

Table 6 and Table 7 comparatively summarise the above research studies related to hybrid analysis based methods. Table 6 lists studies with model accuracy below 90%, and Table 7 lists studies with model accuracy above 90%.

Table 6. Hybrid analysis based malware detection approaches (model accuracy is below 90% or overall accuracy is not available).

| Year |

Study |

Detection Approach |

Feature Extraction Method |

Used Datasets |

ML algorithms/Models |

Selected ML algorithms/Models |

Model Accuracy |

Strengths |

Limitations/Drawbacks |

| Model Accuracy |

Strengths |

Limitations/Drawbacks |

2017 |

[96] |

Using a set of Python and Bash scripts which automated the analysis of the Android data. | Model execution is efficient |

Consider system call appearance rather than frequency and Lower number of samples used to train |

| Manifest analysis for permissions and System call analysis for dynamic analysis |

Andrototal |

NB, DT |

| 2017 | DT |

[99] |

]Using import term extraction, clustering and applying genetic algorithm with MOCODroid |

Code analysis for API calls and information flow and system call analysis | 80% |

[Virus-total, Google Play |

103Genatic algorithm, Multiobjective evolutionary algorithm |

Multiobjective evolutionary classifier |

]95.15% |

Possible to avoid the effects of the concealment strategies |

Did not consider about other clustering methods. |

Using n-Gram methods after getting the Opcode sequence from .smali after dissembling .apk |

Code Analysis for Opcodes |

Genome, IntelSecurity, MacAfee, Google Play |

CNN, NLP |

Deep CNN |

87% |

Automatically learn the feature indicative of malware without hand engineering |

Assumption of all APKs are benign in Google Play dataset while all are malicious in malware dataset |

2018 |

[95] |

Using Binary feature vector and permission vector datasets were created using the analysis techniques and was used with the ML algorithms |

Manifest analysis for permissions and system call analysis |

Drebin |

RF, J.48, NB, Simple Logistic, BayesNet TAN, BayesNet K2, SMO PolyKernel, IBK, SMO NPolyKernel |

RF |

Static-96%, Dynamic-88% |

Compared with several ML algorithms |

Accuracy depends on the 3rd party tool (Monkey runner) used to collect features. |

| 2020 |

[97] |

Extracted 261 combined features of the hybrid analysis with using the support of datasets and performed the ML/DL models |

Manifest analysis for permissions and system call analysis |

| 2021 |

[108] | MalGenome, Drebin, CICMalDroid |

SVM, KNN, RF, DT, NB, MLP, GB |

GB |

99.36% |

Hybrid analysis is having higher accuracy comparing to static analysis and dynamic analysis individually |

Runtime environment and configuration is not considered |

[1072019 |

[94] |

Preparing a JSON file after reverse engineering, decompiling, and analysing the APK by running in a sandbox environment and then extracting the key features and applied ML |

Manifest analysis for permissions, code analysis for API calls and System call analysis |

MalGenome, Kaggle, Androguard [79] |

SVM, LR, KNN, RF |

| 2020 |

[98] |

Using Conditional dependencies among relevant static and dynamic features. Then trained ridge regularised LR classifiers and modelled their output relationships as a TAN |

built a model to predict software vulnerabilities in code using ML before the code is released. After a source code representation was developed using the AST and analysed intelligently, the ML models were applied. Popular datasets such as NIST SAMATE, Draper VDISC, and the SATE IV Juliet Test Suite, which contain C, C++, Java, and Python source code, were used to train the model. However, this model could not locate the specific place of a vulnerability, which is identified as a drawback, and it has not been proven that the same approach can be applied to other programming languages and frameworks. Nevertheless, this approach could potentially be used for Android applications developed in Java.

In [125], a vulnerability detection system for C and C++ source code was proposed using ML and deep feature representation learning. Apart from using existing datasets, the Draper dataset was compiled from Debian and GitHub repositories, with millions of open-source functions labelled with carefully selected findings. The findings of the research were compared with Bag of Words (BOW), RF, RNN, and CNN models.

The study conducted in [126] developed a mechanism to classify subroutines in the C language as vulnerable or not vulnerable using ML methods. The National Vulnerability Database (NVD) was used to collect C code blocks and their known vulnerabilities. After the AST was prepared and the data were preprocessed, feature extraction, feature selection, and classification were performed, and ML algorithms were applied.

The applicability of deep learning to detecting code vulnerabilities was discussed in [127]. Three DL algorithms, CNN, LSTM, and CNN-LSTM, were compared in this study, and the proposed model achieved an accuracy of 83.6% when the DL models were applied.

Using Deep Neural Networks, it was possible to predict vulnerable code components. The model in [128] evaluated this using several Java-based Android applications. In this mechanism, N-gram analysis and statistical feature selection were used to construct features. The model can classify vulnerable classes with high precision, accuracy, and recall.

In [129], a model was proposed to detect zero-day Android malware using a distinctive parallel classifier, together with a mechanism to identify oncoming, highly elusive vulnerabilities in the source code. It achieved an accuracy of 98.27% using the ML algorithms PART, Ripple Down Rule Learner (RIDOR), SVM, and MLP.

ML-Based Vulnerability Detection Specifically for Android

Less research has been conducted on Android vulnerability detection with ML. The methodology of studies conducted on general programming languages could be applied to Android code vulnerability detection after training the model on specific code datasets and adjusting the generalisation mechanism.

The work conducted in [130] prepared a manually curated dataset that can be used to fix vulnerabilities in open-source software. Because the dataset has been used successfully to train classifiers, it demonstrated that security-related commits in a code repository can be identified automatically.

In [131], a repository of Android security vulnerabilities named AndroVul was created, which includes dangerous permissions, security code smells, and high-risk shell command vulnerabilities.

In [132], a study was conducted to predictively analyse vulnerabilities in Internet of Things (IoT)-related Android applications using statistical codes and ML. In this study, 1406 Android apps with various risk levels were taken, and six ML models (KNN, LR, RF, DT, SVM, and GB) were applied to examine security risk prediction. RF performed well at the intermediate risk level, while GB performed well at the very high risk level compared with the other ML model-based approaches.

The study conducted in [133] proposed an ML-based vulnerability detection mechanism to identify security flaws in Android Intents using hybrid analysis. The AdaBoost algorithm was used to perform the ML-based analysis.

Table 10 and Table 11 summarise selected studies from above which are related to Android vulnerability analysis. Table 10 lists the studies which have model accuracy below 90% and Table 11 lists the studies which have model accuracy above 90%.

Table 10. Android vulnerability detection mechanisms (Model accuracy is below 90%).

| Year |

Study |

Code Analysis Method |

YearApproach |

Used ML/DL Methods/Frameworks |

Study |

Code Analysis Method |

ApproachAccuracy of the Model |

| Used ML/DL Methods/Frameworks |

Accuracy of the Model |

2017 |

[ |

| 2017 | 127] |

[Dynamic Analysis |

113Collected 9872 sequences of function calls as features. Performed dynamic analysis with DL methods |

CNN-LSTM |

83.6% |

| LR for static analysis and RF for dynamic analysis |

| ] |

Static Analysis |

Generated the AST, navigated it, and computed detection rules. Identified smells when training with manually created dataset. |

ADOCTOR framework |

98% |

] |

Using DL based method which uses Convolution Neural Network based approach to analyse features |

Code Analysis for API calls, Opcode and Manifest Analysis for Permission |

Drebin, AMD |

2017CNN |

[133] |

Hybrid Analysis |

Decompiled the apk file. Performed static analysis of the manifest file to obtain the components/permissions. Dynamic analysis and fuzzy testing were conducted and obtained system status.CNN |

91% and 81% on two datasets |

Reduce over fitting and possible to train to detect new malware just by collecting more sample apps |

Did not compared with other ML/DL methods |

Table 9. Deep learning based malware detection approaches (model accuracy is above 90%).

| 2017 |

[128] |

Static Analysis | AB and DT |

77% |

| Manifest analysis for permissions, code analysis for API calls and system call analysis |

Drebin, AMD, AZ, Github, GP |

TAN |

TAN | Static-81.03%, Dynamic-93% |

Dynamic analysis performed was better than the static analysis approach in terms of detection accuracy |

Did not perform a proper hybrid analysis approach to increase the overall accuracy |

Table 7.

| Combined N-gram analysis and statistical feature selection for constructing features. Evaluated the performance of the proposed technique based on a number of Java Android programs. |

Deep Neural Network |

| 97% |

2019 |

[115] |

Hybrid Analysis |

Reverse engineered the APK, Decoded the manifest files & codes and extracted meta data from it. Performed dynamic analysis to identify intent crashing and insecure network connections for API calls. Generated the report. |

AndroShield | Highly accurate |

Possibility of some malwares remain undetected |

| 84% |

2021 |

[100] |

Using exploit static, dynamic, and visual features of apps to predict the malicious apps using information fusion and applied Case Based Reasoning (CBR) |

| 2020 |

[124] |

Hybrid Analysis |

Performed intelligent analysis of generated AST. Checked ML can differentiate vulnerable and nonvulnerable. |

MLP and a customised model |

70.1% |

Table 11. Android vulnerability detection mechanisms (model accuracy is above 90%).

| 92.87% |

| 2019 |

[129] |

Hybrid Analysis |

Decompiled the APK and selected the features and executed the APK and generated log files with system calls. Generated the vector space and trained with ML algorithms as parallel classifiers. |

MLP, SVM, PART, RIDOR, MaxProb, ProdProb |

98.37% |

| Manifest analysis for permissions and System call analysis |

| 2020 |

[ | Drebin |

121] |

Hybrid Analysis |

In static analysis, vulnerabilities of SSL/TLS certification were identified. Results from static analysis about user interfaces were analysed to confirm SSL/TLS misuse in dynamic analysis. |

DCDroidCBR, SVM, DT |

CBR |

95% |

Require limited memory and processing capabilities |

99.39% | Require to present the knowledge representation to address some limitations |

|

|

|

| |

|

| 2021 |

[ |

| SVM |

|

|

| K-Means |

-

Easy to implement

-

Fast and simple

|

|

| NB, DT, SVM |

| SVM |

90% |

High effectiveness and accuracy |

Considered only the permission analysis which may lead to omit other important analysis aspects |

| Year | Study | Detection Approach | Feature Extraction Method | Used Datasets | ML Algorithms/Models | Selected ML Algorithms/Models | Model Accuracy | Strengths | Limitations/Drawbacks |
|---|---|---|---|---|---|---|---|---|---|
| 2021 | [69] | Analysing permissions and training the model with the identified ML algorithm | Manifest Analysis for Permissions | Google Play, AndroZoo, AppChina | RF, SVM, Gaussian NB, K-Means | RF | 81.5% | The model was trained with comparatively different datasets | Did not consider other static analysis features such as OpCode, API calls, etc. |
| 2021 | [70] | Reducing dimension vector generation and, based on that, performing malware detection using ML models | Manifest Analysis for Permissions | AMD, APKPure | MLP, NB, Linear Regression, KNN, C4.5, RF, SMO | MLP | 96% | Efficiency, applicability and understandability are ensured | Hyper-parameter selections are not made in the use |
| 2021 | [71] | Selecting features using dimensionality reduction algorithms and the Info Gain method | Manifest Analysis for Permissions and Intents | Drebin, Google Play | RF, NB, GB, AB | RF, NB, AB | RF-98%, NB-92%, AB-97% | Analysed the features as individual components and not as a whole | Did not consider other features such as API calls, Opcode, etc. |
| 2021 | [72] | Feature weighting with joint optimisation of weight mapping with the proposed JOWMDroid framework | Manifest Analysis for Permissions, Intents, Activities and Services | Drebin, AMD, Google Play, APKPure | RF, SVM, LR, KNN | JOWM-IO method with SVM and LR | 96% | Improved accuracy and efficiency | Correlation between features was not considered |

5.2.2. Code Based Static Analysis with ML

Code-based analysis is the other way of performing static analysis to detect Android malware with ML. The model proposed in TinyDroid [39] analysed the latest malware listed in the Drebin dataset. The model uses instruction simplification and ML. The opcode sequence was abstracted from the decompiled DEX files by converting the APK to smali code. Features were then extracted from it using N-grams and integrated with the exemplar selection method. In the exemplar selection method, a good representative subset of the data for intrusion detection was generated through a clustering algorithm, Affinity Propagation (AP). AP was chosen because it does not require the number of clusters to be determined or estimated before it is run. The generated 2-, 3-, and 4-gram sequences were then fed into SVM, KNN, RF, and NB classifiers. RF was identified as the optimal algorithm for this scenario, with a 0.915 True Positive Rate, 0.106 False Positive Rate, 0.876 Precision, and 0.915 Recall for the 2-gram sequence. High accuracy was also achieved for the 3- and 4-gram sequences compared with the other studied ML algorithms. However, the proposed method still has issues: the malware samples were taken from only a few research studies and organisations, and metamorphic malware samples were lacking. Therefore, some malware could remain undetected.
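The N-gram step can be sketched as a sliding window over an opcode sequence, with the resulting 2-, 3-, and 4-grams counted as features. The opcode names below are simplified placeholders, and the sketch omits both the instruction simplification and the exemplar selection steps of [39].

```python
from collections import Counter


def ngrams(opcodes, n):
    """Return all contiguous n-grams of an opcode sequence as tuples."""
    return [tuple(opcodes[i:i + n]) for i in range(len(opcodes) - n + 1)]


def ngram_counts(opcodes, sizes=(2, 3, 4)):
    """Count the n-grams of every requested size in one opcode sequence."""
    counts = Counter()
    for n in sizes:
        counts.update(ngrams(opcodes, n))
    return counts


# Hypothetical simplified opcode sequence taken from one smali method.
opcodes = ["move", "invoke", "move", "invoke", "return"]
bigrams = ngrams(opcodes, 2)
features = ngram_counts(opcodes)
```

Each distinct n-gram becomes one feature, so the feature space grows quickly with n, which is one reason a selection step is applied before classification.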

The approach proposed in [73] used the Drebin dataset with 5560 malware samples, along with 361 malware samples from the Contagio dataset and 5900 benign apps from Google Play, to detect malware by analysing the API calls used in operand sequences. For the malware prediction model, package-level details were extracted from the API calls. Package n-grams, which represent application behaviour, were extracted from the package sequence. They were then combined with the DT, RF, KNN, and NB ML algorithms to build a predictive model, and the study concluded that RF performed best, with an accuracy of 86.89% after the model was trained on 2415 package n-grams. Other information contained in the operands should also be considered, since it might affect the overall model.

The relationship between system functions, sensitive permissions, and sensitive APIs was analysed initially in Androidetect [74]. A combination of system functions was used to describe application behaviours and construct eigenvectors using the dynamic analysis technique. Based on the eigenvectors, malware detection methodologies were compared using NB, J48 DT, and the application functions decision algorithm, and the application functions decision algorithm outperformed the others. Some improvements to this approach remain to be made.

In the MaMaDroid [75] model, the API calls performed by apps were abstracted to classes, packages, or families using static analysis techniques. The sequence of API calls was then obtained from the call graph of each app and modelled as a Markov chain. Classification was performed using RF, KNN, and SVM, and RF had the highest accuracy of the three. However, dynamic analysis was not considered in this method. Dynamic analysis is useful for analysing API calls in a runtime environment to detect malicious applications.
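Abstracting an API call to its class, package, or family can be sketched with simple string handling. The sketch assumes fully qualified names of the form `package.Class.method` and takes the first name component as the family; both are simplifying assumptions, and the cited work may resolve packages and families differently.

```python
def abstract_call(api_call, level):
    """Reduce a fully qualified API call to its class, package, or family.

    Assumption: `api_call` has the form 'pkg.subpkg.Class.method'.
    """
    parts = api_call.split(".")
    if level == "class":
        return ".".join(parts[:-1])   # drop the method name
    if level == "package":
        return ".".join(parts[:-2])   # drop the class and method names
    if level == "family":
        return parts[0]               # keep only the top-level name
    raise ValueError(f"unknown abstraction level: {level}")


def abstract_sequence(api_calls, level):
    """Abstract every call in a sequence to the same level."""
    return [abstract_call(call, level) for call in api_calls]


# Hypothetical call sequence obtained from an app's call graph.
calls = [
    "android.telephony.SmsManager.sendTextMessage",
    "java.net.URL.openConnection",
]
packages = abstract_sequence(calls, "package")
families = abstract_sequence(calls, "family")
```

Coarser abstraction levels yield fewer distinct states, which keeps a Markov chain built over the abstracted sequence small and less sensitive to API changes.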

An Android malware detection approach using the method-level correlation of an application's abstracted API calls was discussed in [76]. Initially, the source code of Android applications was split into methods, and the abstracted API calls were kept. The confidence of association rules between those calls was then calculated, which provided the behavioural semantics of the application. SVM, KNN, and RF algorithms were then used to identify the behavioural patterns of the apps and classify them as benign or malicious. The Drebin and AMD datasets were used, and 96% accuracy was achieved with the RF algorithm. Despite this high accuracy, the method does not address problems such as dynamic loading, native code, and encryption. Adding dynamic analysis methods may increase the accuracy of this model further.
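The confidence of an association rule between two API calls can be sketched by treating each method as a set of abstracted calls: the confidence of A → B is the share of methods containing A that also contain B. The call names and the data layout are hypothetical and are not taken from [76].

```python
def confidence(methods, antecedent, consequent):
    """Confidence of the rule antecedent -> consequent over a list of methods.

    Each method is represented as the set of abstracted API calls it makes.
    """
    with_antecedent = [calls for calls in methods if antecedent in calls]
    if not with_antecedent:
        return 0.0
    both = sum(1 for calls in with_antecedent if consequent in calls)
    return both / len(with_antecedent)


# Hypothetical methods of one app after API call abstraction.
methods = [
    {"getDeviceId", "sendTextMessage"},
    {"getDeviceId", "openConnection"},
    {"sendTextMessage"},
    {"getDeviceId", "sendTextMessage", "openConnection"},
]

id_then_sms = confidence(methods, "getDeviceId", "sendTextMessage")
net_then_id = confidence(methods, "openConnection", "getDeviceId")
```

Confidence values for a fixed list of call pairs can then be used as the feature vector of an app.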

The model named SMART in [77] proposed a semantic model of Android malware based on the Deterministic Symbolic Automaton (DSA) to comprehend, detect, and classify malware. This approach identified 4583 malware samples that were not identified by leading anti-malware tools. The approach included two main stages: malicious behaviour learning, and malware detection and classification. In Stage 1, the model identified semantic clones among malware, and semantic models were constructed from them. Malicious features were then extracted from the DSA, and in Stage 2 ML techniques were used to detect malware after static bytecode analysis. Random Forest achieved the best classification result of 97% accuracy, while AB, C4.5, NB, and Linear SVM provided lower accuracy. This work therefore showed that DSA can be used for malware detection. DroidChain [78] proposed a static analysis model with a behaviour chain model. The malware detection problem was transformed into a matrix model using the Warshall algorithm for further analysis. Four malware models were proposed in this study using the behaviour chain model: privacy leakage, SMS financial charges, malware installation, and privilege escalation. In the static analysis part, Smali code was extracted using APKTool and DroidChain. The API call graph was then generated using the Androguard [79] tool. After that, the incidence matrix was built, and the accessibility (reachability) of the matrix was calculated to detect malware. The average accuracy of this model was 83%. This method could be improved to detect malware more accurately and efficiently by considering other static analysis features, such as code analysis and permission analysis.
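The matrix step in DroidChain can be illustrated with Warshall's transitive-closure algorithm, which is assumed here to be the algorithm the text refers to. The sketch below takes a 0/1 matrix of direct calls and marks every node that is reachable through a chain of calls. The four-node call graph and its labels are hypothetical and are not taken from [78].

```python
def transitive_closure(adj):
    """Warshall's algorithm: reach[i][j] is True if j is reachable from i."""
    n = len(adj)
    reach = [[bool(x) for x in row] for row in adj]
    for k in range(n):
        for i in range(n):
            if reach[i][k]:
                for j in range(n):
                    if reach[k][j]:
                        reach[i][j] = True
    return reach


# Hypothetical call graph: node 0 = entry point, 1 = read contacts,
# 2 = helper method, 3 = send over network.
adj = [
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
reach = transitive_closure(adj)
leak_possible = reach[1][3]  # a sensitive source can reach a network sink
```

In this toy graph a behaviour chain from the sensitive read to the network send exists even though no direct call links them, which is the kind of indirect path a reachability matrix exposes.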

The study conducted in [80] tested malware detection techniques based on opcode sequences and API call sequences. A Hidden Markov Model (HMM) was trained, detection rates were identified for models based on static, dynamic, and hybrid approaches, and it was concluded that hybrid approaches are more effective than static or dynamic analysis alone.
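The text does not spell out how the trained HMM is turned into a detector. One common choice, assumed here, is to score a sequence by its log-likelihood under the model and compare the score with a threshold. The sketch below implements the scaled forward algorithm for that score; the two-state model and its probabilities are made up for illustration and are not trained parameters from [80].

```python
import math


def forward_log_likelihood(obs, start, trans, emit):
    """Scaled forward algorithm: log P(obs | HMM) for a non-empty sequence.

    start[i]    : probability of starting in hidden state i
    trans[i][j] : probability of moving from state i to state j
    emit[i][o]  : probability that state i emits symbol o
    """
    n = len(start)
    alpha = [start[i] * emit[i][obs[0]] for i in range(n)]
    log_prob = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = [
                sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][obs[t]]
                for j in range(n)
            ]
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")
        log_prob += math.log(scale)
        alpha = [a / scale for a in alpha]  # rescale to avoid underflow
    return log_prob


# Made-up model over two opcode symbols (0 and 1) with two hidden states.
start = [0.5, 0.5]
trans = [[0.9, 0.1], [0.1, 0.9]]
emit = [[0.9, 0.1], [0.1, 0.9]]

steady = forward_log_likelihood([0, 0, 0, 0], start, trans, emit)
alternating = forward_log_likelihood([0, 1, 0, 1], start, trans, emit)
```

Under this toy model the steady sequence scores higher than the alternating one; a detector would flag sequences whose score falls below a threshold chosen on validation data.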

Tables 2 and 3 comparatively summarise the above research studies on code-analysis-based methods: Table 2 lists studies with model accuracy below 90%, and Table 3 lists studies with model accuracy above 90%.

Table 2. Code based static Analysis with ML (Model Accuracy is below 90%).

[Table body not recovered. Surviving cell fragments: RF; Neural Networks (highly accurate, strong fault tolerance); LSTM; CNN; Ensemble Learning.]

| Year | Study | Detection Approach | Feature Extraction Method | Dataset(s) | ML Algorithms/Models | Selected Algorithm/Model | Accuracy | Strengths | Limitations |
|---|---|---|---|---|---|---|---|---|---|
| | [87] | Extracting the DNS, HTTP, TCP, and origin-based features of the network used by apps | Network traffic analysis for network protocols | Genome | DT, LR, KNN, Bayes Network, RF | RF | 98.7% | Works with different OS versions; detects unknown malware and infected apps | Malware cannot be detected properly if the malicious apps use encryption |
| 2017 | [88] | Using a Markov Chain-based detection technique to compute the state transitions and build the transition matrix with 6thSense | System resources analysis for process reports and sensors | Google Play | Markov Chain, NB, LMT | LMT | 95% | Highly effective and efficient at detecting sensor-based attacks while yielding minimal overhead | Tradeoffs such as frequency accuracy and battery frequency, which can affect malware detection accuracy, are not discussed |
| 2017 | [89] | Using dynamic permission analysis at run-time and detecting malware with ML | Code instrumentation analysis for Java classes and dynamic permissions | Pvsingh, Android Botnet, DroidKin | NB, RF, Simple Logistic, DT, K-Star | Simple Logistic | 99.7% | High accuracy | Needs to address the app crashing issue in the selected emulators in dynamic analysis |
| 2019 | [90] | Dynamically tracking execution behaviours of applications using the ServiceMonitor framework | System call analysis | AndroZoo, Drebin, and Malware Genome | RF, KNN, SVM | RF | 96.7% | High accuracy and high efficiency | Differences in some system calls of malware and benign apps are not detected, since signature-based verification was not applied |
| 2020 | [91] | Extracting the features and permissions from Android apps, performing feature selection, and proceeding to classification with DATDroid | System call analysis, code instrumentation for network traffic analysis, and system resources analysis | APKPure, Genome | RF, SVM | RF | 91.7% | High efficiency | Impact of features such as HTTP, DNS, and TCP/IP patterns is not considered |
| 2021 | [92] | Using decompilation, model discovery, integration and transformation, analysis and transformation, event production | Code instrumentation for Java classes, intents | AMD | ML algorithms used in MEGDroid, Monkey, Droidbot | MEGDroid | 91.6% | Considerably increases the number of triggered malicious payloads and execution code coverage | System calls are not monitored |

5.4. Hybrid Analysis with Machine Learning

Hybrid analysis is the third approach that can be used in ML-based Android malware detection. The review in [93] identified three approaches to malware detection: signature-based, anomaly-based, and topic-modelling-based. ML algorithms such as DT, J48, RF, KNN, KMeans, and SVM can be applied to all of these approaches. In the signature-based approach, malware was detected using ML algorithms after the feature extraction process. After the feature extraction, sensitive API calls were also analysed before applying ML algorithms. In the topic modelling approach, documents such as reviews, user documents, and app descriptions were first collected, and a procedure similar to the signature-based method was then followed. The review found that the behaviour-based approach is better than the signature-based approach, and that combining it with topic modelling can achieve good results. Hybrid analysis is formed when dynamic analysis is integrated with static analysis. According to this study, the SVM classifier with hybrid analysis performed better than the other ML algorithms.

The model proposed in [94] discussed a methodology for using ML algorithms with static and dynamic analysis. In the static analysis approach, the manifest data of malicious and benign applications were taken as JSON files from the MalGenome and Kaggle datasets to train the ML model. The trending apps were taken from well-known app stores. Androguard [79] was used to extract information from the APK files. After reverse engineering, decompiling, testing, and training with SVM-, LR-, and KNN-based ML models, a JSON file was prepared. LR was identified as the most suitable ML algorithm for this model, with 81.03% accuracy. Because this accuracy is comparatively low, the proposed static analysis model requires considerable improvement. However, the proposed dynamic analysis approach outperformed the static analysis approach, achieving 93% for both precision and recall with RF. In this approach, Droidbox was used to run APKs obtained from MalGenome and Android Wave Lock in a sandbox environment. The JSON file obtained by analysing each APK was converted into a CSV file, from which the key features were extracted. As the last step, the DT, RF, SVM, KNN, and LR algorithms were applied to the extracted key features. The accuracy and results were then checked, and the app was labelled as malware or benign. This study could be strengthened by exploring other ML algorithms as well.

5.5. Use of Deep Learning Based Methods

Deep learning techniques can also be used to detect Android malware. MLDroid [101][100], a web-based Android malware detection framework, was proposed based on dynamic analysis. In this work, ML and DL methods were used, with an overall malware detection rate of 98.8%.

The model proposed in [102][101] discussed a method to detect malware using a semantic-based DL approach and implemented a tool called DeepRefiner. This approach applied Long Short-Term Memory (LSTM) to the semantic structure of Android bytecode, with two layers of detection and validation. LSTM was chosen over a plain Recurrent Neural Network (RNN) because RNNs suffer from the vanishing gradient problem. The approach detected malware with an accuracy of 97.4% and a false positive rate of 2.54%, and it was efficient and accurate compared with traditional approaches. Since this approach relies on static analysis, some limitations related to the runtime environment can arise, which could be identified if the model adopted a hybrid analysis approach.

The MOCDroid [99] model discussed a multiobjective evolutionary classifier to detect malware in Android. It combined multiobjective optimisation with clustering to generate a classifier using third-party call group behaviours. This method produced an accuracy of 95.15%. The process included three steps: import term extraction, clustering, and applying a genetic algorithm. Initially, the DEX files were extracted from the APK using a decompression tool, and Java code was obtained using the JADX tool [103][102]. The extracted terms were then transformed into a document-term matrix. As the next step, K-Means clustering was applied, since it was identified as the most accurate model for this task, and the genetic algorithm was also applied. The results were compared with different antivirus engines on a random set of 10,000 benign and malicious apps. Other clustering methods could be considered to improve the accuracy of this method.
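The document-term matrix step can be sketched as follows, assuming each app is represented by the list of import terms found in its decompiled code. The function name `document_term_matrix` and the example terms are hypothetical; MOCDroid's actual term extraction and weighting are not reproduced here.

```python
def document_term_matrix(documents):
    """Return (terms, matrix) where matrix[d][t] counts term t in document d."""
    terms = sorted({term for doc in documents for term in doc})
    index = {term: i for i, term in enumerate(terms)}
    matrix = []
    for doc in documents:
        row = [0] * len(terms)
        for term in doc:
            row[index[term]] += 1
        matrix.append(row)
    return terms, matrix


# Each inner list stands for the import terms of one app (made-up values).
apps = [
    ["android.telephony", "java.net", "java.net"],
    ["android.widget", "java.net"],
]
terms, dtm = document_term_matrix(apps)
```

Each row of `dtm` is a fixed-length vector for one app, which is the form a clustering method such as K-Means expects as input.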

The work proposed in [104][103] discussed a method to detect Android malware using a deep convolutional neural network (CNN). Raw opcode sequences from disassembled Smali programs were analysed using static analysers to classify the malware. The advantage of this method is that features indicative of malware are learned automatically. This work was inspired by n-gram-based methods. The Android Malware Genome project dataset [49] and the Intel Security/McAfee Labs dataset were used to train the models. The classification system achieved a precision and recall of 0.87. The accuracy of malware detection could be increased if dynamic analysis were also performed.
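For context, the n-gram methods that inspired this work count fixed-length runs of consecutive opcodes and use the counts as hand-crafted features, whereas the CNN learns comparable local patterns automatically. A minimal sketch of the n-gram counting step is shown below; the function name `opcode_ngrams` and the short opcode sequence are illustrative assumptions.

```python
from collections import Counter


def opcode_ngrams(opcodes, n):
    """Count every run of n consecutive opcodes in the sequence."""
    return Counter(
        tuple(opcodes[i:i + n]) for i in range(len(opcodes) - n + 1)
    )


# Short illustrative sequence of Dalvik opcode mnemonics.
sequence = ["const", "invoke-virtual", "const", "invoke-virtual", "return-void"]
bigrams = opcode_ngrams(sequence, 2)
```

A sequence shorter than `n` simply yields an empty counter.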

A deep learning-based static analysis approach achieved an accuracy of 99.9% and an F1-score of 0.996 in [105][104]. This approach used a dataset of over 1.8 million Android apps. The attributes of malware were detected through vectorised opcodes extracted from the bytecode of the APKs with one-hot encoding. After experiments on Recurrent Neural Networks, Long Short-Term Memory networks, Neural Networks, Deep ConvNets, and Diabolo Network models, Bidirectional Long Short-Term Memory (BiLSTM) was identified as the best model for this approach. A more comprehensive DL-based malware detection tool could be built by analysing the complete bytecode with static analysis and checking app behaviour with dynamic analysis.
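One-hot encoding of an opcode sequence can be sketched as below. The four-entry vocabulary is a small illustrative subset of Dalvik mnemonics, and mapping unknown opcodes to an all-zero vector is a design choice of this sketch rather than something stated in the source.

```python
def one_hot_encode(opcodes, vocabulary):
    """Map each opcode to a one-hot vector over `vocabulary`.

    Opcodes missing from the vocabulary map to an all-zero vector
    (an assumption made for this sketch).
    """
    index = {op: i for i, op in enumerate(vocabulary)}
    encoded = []
    for op in opcodes:
        vec = [0] * len(vocabulary)
        if op in index:
            vec[index[op]] = 1
        encoded.append(vec)
    return encoded


vocabulary = ["move", "const", "invoke-virtual", "return-void"]
sequence = ["const", "invoke-virtual", "return-void"]
matrix = one_hot_encode(sequence, vocabulary)
```

The result is a sequence-length by vocabulary-size matrix, which is the shape a recurrent model such as a BiLSTM consumes one row per time step.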

The DL-Droid framework [106][105], based on deep learning techniques, proposed a new way of detecting Android malware with dynamic analysis techniques. This approach achieved a detection rate of 97.8% using only dynamic features, which increased to 99.6% when static features were also included. The experiments were performed on real devices, on which the application runs exactly as the user experiences it. Comparisons of detection performance and code coverage were also included in this work, and the performance of traditional ML classifiers was compared as well. This method outperformed ML-based methods such as NB, SL, SVM, J48, Pruning Rule-Based Classification Tree (PART), RF, and DL. Exploring the inclusion of an intrusion detection mechanism in DL-Droid would be a valuable addition.

The AdMat model proposed in [107][106] discussed a CNN-on-matrix approach to detect Android malware. This model characterised apps and treated them as images. The decompiled source code was first converted into a call graph in Graph Modelling Language (GML) format; an adjacency matrix was then constructed for each app and simplified to a size of 219 × 219 to make data processing more efficient. These matrices were the input images to the CNN, and the model was trained to identify and classify malware and benign apps. This model has an accuracy of 98.2%. Although the model is highly accurate, it has limitations: it performs static analysis only, and its performance depends on the number of features used.
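The idea of turning a call graph into a fixed-size matrix can be sketched as follows. How AdMat reduces the matrix to 219 × 219 is not described in this section, so the sketch assumes a fixed list of tracked node names and drops every edge that touches an untracked node; the names used are hypothetical.

```python
def adjacency_matrix(edges, nodes):
    """Build a len(nodes) x len(nodes) 0/1 matrix from caller -> callee edges.

    Edges touching a name outside `nodes` are dropped, which keeps the
    matrix size fixed regardless of the app (an assumed simplification).
    """
    index = {name: i for i, name in enumerate(nodes)}
    size = len(nodes)
    matrix = [[0] * size for _ in range(size)]
    for caller, callee in edges:
        if caller in index and callee in index:
            matrix[index[caller]][index[callee]] = 1
    return matrix


nodes = ["onCreate", "getDeviceId", "openConnection"]  # hypothetical node set
edges = [
    ("onCreate", "getDeviceId"),
    ("getDeviceId", "openConnection"),
    ("onCreate", "logDebug"),  # dropped: "logDebug" is not tracked
]
image = adjacency_matrix(edges, nodes)
```

Because every app maps to a matrix of the same shape, the matrices can be stacked and fed to a CNN in the same way as single-channel images.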

The model proposed in [108][107] discussed a DL-based method that uses a CNN to analyse API call sequences, opcodes, and permissions to detect Android malware in a zero-day scenario. After training, the model achieved weighted average detection rates of 91% and 81% on the Drebin and AMD datasets, respectively. The model could be improved further if dynamic analysis techniques were also considered.

With an accuracy of 95%, a multimodal analysis of malware apps using information fusion was presented in