Real-time UAV tracking refers to tracking that is completed within the time it takes the drone's onboard imaging device to acquire the image sequence. The tracker recovers the target's motion parameters in the image frame by frame, including its position, velocity, acceleration, and motion trajectory.
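As a rough illustration of how motion parameters can follow from per-frame positions, the sketch below estimates velocity and acceleration by finite differences. The function name `motion_parameters`, the pixel-coordinate centers, and the frame rate are assumptions made for the example; they are not taken from the tracker described here.

```python
def motion_parameters(centers, fps):
    """Estimate velocity and acceleration from per-frame target centers.

    centers: list of (x, y) target centers in pixels, one per frame.
    fps: frame rate of the image sequence (assumed constant).
    Returns (velocities, accelerations) in pixels/s and pixels/s^2.
    """
    # First-order finite difference between consecutive positions.
    velocities = [
        ((x1 - x0) * fps, (y1 - y0) * fps)
        for (x0, y0), (x1, y1) in zip(centers, centers[1:])
    ]
    # Finite difference between consecutive velocities.
    accelerations = [
        ((vx1 - vx0) * fps, (vy1 - vy0) * fps)
        for (vx0, vy0), (vx1, vy1) in zip(velocities, velocities[1:])
    ]
    return velocities, accelerations


# Hypothetical trajectory of three frames at 30 frames per second.
trajectory = [(100.0, 50.0), (102.0, 50.0), (106.0, 51.0)]
velocities, accelerations = motion_parameters(trajectory, fps=30)
```

In practice the raw differences are noisy, so a real system would likely smooth the trajectory before differentiating.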

- visual object tracking

- unmanned aerial vehicle (UAV) videos

1. Visual tracking for UAV videos

2. Real-time UAV Tracker by MultiRPN-DIDNet

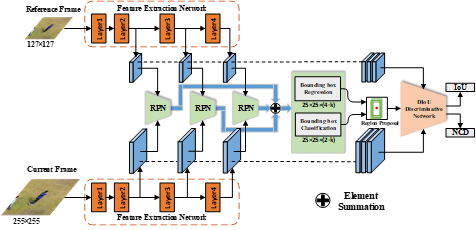

As shown in Figure 1, the single-object tracking method proposed in this paper consists of multiple region proposal networks (RPNs) and a DIoU discriminative network, with ResNet-50 as the backbone for feature extraction. Each RPN is built within a Siamese neural network (SNN) framework and performs bounding-box regression and classification. The RPNs built on Block 2, Block 3, and Block 4 of ResNet-50 each output their own regression (Reg) coefficients and classification (Cls) scores. These outputs are weighted by a set of coefficients learned offline and fused to obtain the final Reg coefficients and Cls scores. Anchors with higher foreground Cls scores are selected, and the corresponding region proposals are determined by applying the Reg coefficients to those anchors. The convolutional features from multiple ResNet-50 layers are also fused. The fused features, together with the candidate-region information, are fed into the DIoU discriminative network, and the region proposal with the best DIoU value is taken as the tracking result.
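The fusion and proposal-selection steps can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the weight values, the function names `fuse`, `decode`, and `top_proposals`, and the anchor decoding rule (the common RPN parameterization with center offsets scaled by anchor size and log-scale width and height) are all assumptions, since the text does not specify them.

```python
import math


def fuse(outputs, weights):
    """Weighted sum of per-anchor outputs from several RPNs.

    outputs: one list per RPN, each holding one tuple of floats per anchor.
    weights: one coefficient per RPN (assumed learned offline).
    """
    n_anchors = len(outputs[0])
    dim = len(outputs[0][0])
    return [
        tuple(
            sum(w * out[a][d] for w, out in zip(weights, outputs))
            for d in range(dim)
        )
        for a in range(n_anchors)
    ]


def decode(anchor, reg):
    """Apply Reg coefficients (dx, dy, dw, dh) to an anchor (cx, cy, w, h).

    Assumed parameterization; the source does not state the exact form.
    """
    cx, cy, w, h = anchor
    dx, dy, dw, dh = reg
    return (cx + dx * w, cy + dy * h, w * math.exp(dw), h * math.exp(dh))


def top_proposals(anchors, cls_per_rpn, reg_per_rpn, cls_w, reg_w, k):
    """Fuse the RPN outputs and decode the k anchors with the highest score."""
    cls = fuse(cls_per_rpn, cls_w)  # each tuple is (foreground score,)
    reg = fuse(reg_per_rpn, reg_w)
    ranked = sorted(range(len(anchors)), key=lambda a: cls[a][0], reverse=True)
    return [decode(anchors[a], reg[a]) for a in ranked[:k]]


# Hypothetical example: two anchors, three RPNs (Block 2, Block 3, Block 4).
anchors = [(50.0, 50.0, 20.0, 20.0), (60.0, 60.0, 20.0, 40.0)]
cls_per_rpn = [[(0.2,), (0.9,)], [(0.4,), (0.7,)], [(0.3,), (0.8,)]]
reg_per_rpn = [[(0.0, 0.0, 0.0, 0.0), (0.1, 0.0, 0.0, 0.0)]] * 3
weights = [0.2, 0.3, 0.5]  # illustrative values only
proposals = top_proposals(anchors, cls_per_rpn, reg_per_rpn, weights, weights, k=1)
```

Here the second anchor has the higher fused foreground score, so it is the one decoded into a region proposal.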

Figure 1. Target tracking framework combining multiple RPNs and a DIoU discriminative network. The multiple RPNs determine high-quality candidate regions; the DIoU discriminative network then corrects these candidates and outputs the final result.
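For reference, the DIoU (Distance-IoU) value between two boxes is commonly defined as the IoU minus the squared distance between the box centers divided by the squared diagonal of the smallest enclosing box. The sketch below computes that metric directly and picks the proposal with the highest value. It only illustrates the quantity involved: in the tracker itself the value is produced by the discriminative network, and the corner box format `(x1, y1, x2, y2)` and the example boxes are assumptions.

```python
def diou(a, b):
    """Distance-IoU between two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b

    # Intersection and union areas.
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union if union > 0 else 0.0

    # Squared distance between the two box centers.
    d2 = ((ax1 + ax2) / 2 - (bx1 + bx2) / 2) ** 2 \
        + ((ay1 + ay2) / 2 - (by1 + by2) / 2) ** 2

    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2

    return iou - d2 / c2 if c2 > 0 else iou


# Hypothetical reference box and two candidate proposals.
reference = (0.0, 0.0, 2.0, 2.0)
candidates = [(1.0, 1.0, 3.0, 3.0), (0.0, 0.0, 2.0, 3.0)]
best = max(candidates, key=lambda box: diou(box, reference))
```

Unlike plain IoU, the center-distance penalty still separates candidates that do not overlap the reference at all, which is one reason DIoU is often preferred for ranking boxes.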
