A computer-aided diagnosis (CAD) system based on deep learning can be of great value in assisting doctors, because diagnosing lesions in the stomach, intestines, and esophagus is laborious. In addition, reliance on subjective judgment can lead to misdiagnosis.

- artificial intelligence

- computer-aided diagnosis system

- deep learning

- esophageal lesion

- gastric lesion

- gastrointestinal endoscopy

- intestinal lesion

1. Introduction

In recent years, artificial intelligence (AI) based on deep learning has been applied more widely in the medical field and has proved better suited to clinical practice than traditional machine learning. Constructing a computer-aided diagnosis (CAD) system based on deep learning to assist doctors is of great significance, because diagnosing lesions in the stomach, intestines, and esophagus is laborious. In addition, reliance on subjective judgment can lead to misdiagnosis.

2. Deep Learning

Deep learning is a relatively new research direction within machine learning. Rather than relying on manually designed features, as traditional machine learning does, it learns complex and abstract features directly from data. Initially, deep learning attracted little attention from researchers because of hardware limitations; however, it has developed rapidly as computer processing power has improved. Deep learning can learn the internal regularities and levels of representation of training data, and the information obtained is of great help in interpreting the data. It has led to many achievements in object detection, image segmentation, and classification. Compared with traditional machine learning algorithms, deep learning approaches are usually more accurate and robust.

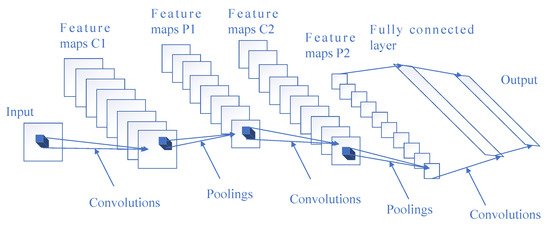

From a technical point of view, deep learning algorithms can use a convolutional neural network (CNN) to analyze complex information. This is usually an advantage over manual feature extraction, but it requires a large amount of training data for accurate inference and analysis. A representative CNN consists mainly of multiple convolutional layers, pooling layers, and fully connected layers, as shown in Figure 1. Convolutional layers are used for feature extraction. Pooling layers downsample the outputs of the convolutional layers, which reduces the number of parameters in later layers and speeds up computation. The fully connected layers combine the extracted features nonlinearly to produce an output. This output is then passed to a softmax activation function, which yields the final prediction for the input data.

Figure 1. Architecture of a representative convolutional neural network (CNN).
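The pipeline described above (convolution, pooling, fully connected layer, softmax) can be sketched as a forward pass in plain Python. This is only an illustrative toy under stated assumptions, not the architecture of any particular CAD system: the image size, the random weights, the ReLU activation, and the four output classes are all hypothetical choices made for the example.

```python
import math
import random

def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation, as in most CNN libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    """Element-wise ReLU (an assumed activation; the text does not name one)."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; incomplete border windows are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

def fully_connected(features, weights, biases):
    """One dense layer: a weighted sum of all features per output unit."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]

def softmax(logits):
    """Numerically stable softmax: converts scores into probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def forward(image, kernels, fc_weights, fc_biases):
    """Convolution -> ReLU -> pooling for each kernel, then dense + softmax."""
    features = []
    for kernel in kernels:
        pooled = max_pool(relu(conv2d(image, kernel)))
        features.extend(v for row in pooled for v in row)
    return softmax(fully_connected(features, fc_weights, fc_biases))

# Hypothetical setup: one 8x8 grayscale image, two 3x3 kernels, four classes.
rng = random.Random(0)
image = [[rng.random() for _ in range(8)] for _ in range(8)]
kernels = [[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
           for _ in range(2)]
# 8x8 input -> 6x6 after a 3x3 convolution -> 3x3 after 2x2 pooling,
# so each kernel contributes 9 features.
n_features = 2 * 3 * 3
n_classes = 4
fc_weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_features)]
              for _ in range(n_classes)]
fc_biases = [0.0] * n_classes

probs = forward(image, kernels, fc_weights, fc_biases)
print(probs)  # four class probabilities that sum to 1
```

In a trained network the kernels and dense-layer weights would be learned from labeled data rather than drawn at random; the sketch only shows how the layers connect.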

3. Diagnosis of Gastrointestinal Endoscopy Based on Deep Learning

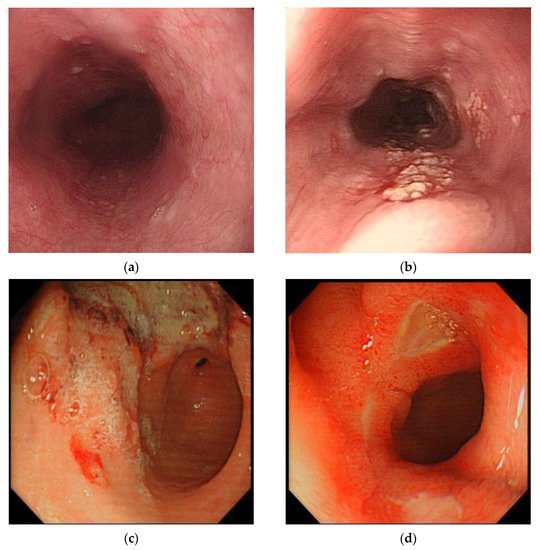

With the continuous progress of deep learning technology, a large number of researchers are paying close attention to its possible application in medical research. This is mainly because medical data usually take the form of unstructured text, images, or videos rather than data with clearly defined features. For example, endoscopic images of a normal esophagus, early esophageal cancer (EC), gastric cancer, and duodenal ulcer are shown in Figure 2. The application of deep learning to medicine is therefore a natural development. In a CAD system based on traditional machine learning algorithms, researchers manually extract data features, such as lesion size, edge, and surface features, based on clinical experience. A deep learning algorithm, by contrast, extracts features automatically and learns to recognize them. Using a deep learning algorithm rather than a traditional machine learning algorithm can effectively reduce the loss of feature information, make full use of that information for accurate inference, and reduce doctors' burdens. In recent years, following the success of deep learning in image classification and object detection, researchers have presented various CAD systems for medicine. Because medical data are scarce, transfer learning, which transfers information learned in other domains to the current domain, has also been applied to CAD models and has achieved excellent performance.

Figure 2. Examples of endoscopic images of normal esophagus, early esophageal cancer (EC), gastric cancer, and duodenal ulcer: (a) normal esophagus, (b) early EC, (c) gastric cancer, and (d) duodenal ulcer.
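The idea of transfer learning mentioned above can be illustrated with a deliberately small sketch: a feature extractor that is assumed to have been learned elsewhere is kept frozen, and only a new classification head is trained on a handful of labeled target samples. Everything here is hypothetical and chosen for illustration. `pretrained_features` is a hand-written stand-in for the convolutional layers of a pretrained CNN, and the eight two-dimensional samples stand in for a small labeled medical dataset.

```python
import math

def pretrained_features(x):
    """Stand-in for a frozen, pretrained feature extractor (hypothetical).

    In a real system this would be the convolutional part of a CNN trained
    on a large source dataset; its parameters are never updated here.
    """
    return [x[0] + x[1] - 1.0, x[0] - x[1]]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, epochs=200, lr=0.5):
    """Train only the new head (logistic regression) on frozen features."""
    feats = [pretrained_features(x) for x in samples]
    w = [0.0] * len(feats[0])
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            for k, fk in enumerate(f):
                grad[k] += (p - y) * fk  # gradient of the cross-entropy loss
        w = [wi - lr * g / len(feats) for wi, g in zip(w, grad)]
    return w

def predict(w, x):
    """Return class 1 if the head's probability is at least 0.5, else 0."""
    f = pretrained_features(x)
    return 1 if sigmoid(sum(wi * fi for wi, fi in zip(w, f))) >= 0.5 else 0

# A small labeled target dataset (hypothetical values).
samples = [(0.1, 0.2), (0.2, 0.1), (0.3, 0.3), (0.0, 0.4),
           (0.9, 0.8), (0.8, 0.9), (0.7, 0.7), (1.0, 0.6)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

head = train_head(samples, labels)
print([predict(head, x) for x in samples])
```

Because only the two weights of the head are fitted, very few labeled samples are needed, which is the property that makes transfer learning attractive when annotated medical data are scarce.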

4. Conclusions

In conclusion, a CAD system based on deep learning can automatically extract and recognize features. Using deep learning rather than traditional machine learning can effectively reduce the omission of feature information and make full use of that information to improve the diagnosis of lesions in the stomach, intestines, and esophagus. It can also assist endoscopists by providing auxiliary advice and reducing their burden. Moreover, an online CAD system could help greatly to address the shortage of medical resources in remote areas.

We think that improvements in the following aspects are necessary to promote the further development of CAD systems. First, the training dataset needs to be augmented as much as possible and collected from a wide range of sources in order to obtain a network model with better generalization ability. Second, to deal with the shortage of training data, weakly supervised learning and transfer learning are both well suited to avoiding the annotation of large amounts of data. We intend to use a few-shot learning strategy in future research to achieve excellent diagnostic accuracy from a small amount of labeled data. Third, image preprocessing techniques, such as deblurring and resolution improvement, can be used to improve model performance when low-quality images are input. Fourth, 3D CAD systems need further improvement in order to acquire more feature information, and further speed optimization is needed to achieve real-time clinical application while maintaining excellent performance, especially for 3D models. Fifth, because the body is a collaborative group of organs, a problem in one part of the body often sets off alarms elsewhere. Therefore, when constructing a CAD system, we should pay more attention to transferring feature information across different diseases, try to find the relationships among diseases, and establish a CAD system that can diagnose multiple related diseases. More importantly, trust between CAD systems and doctors should be built by increasing the interpretability of CNNs. Last, but not least, a unified validation dataset and common criteria are needed to make accurate comparisons among various CAD systems and so facilitate further development.
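One common way to augment a training set, assuming "augmented" is read here as generating additional training samples from existing images, is to apply simple geometric transformations such as flips and rotations. The following is a minimal sketch with an image represented as a nested list of pixel values. The function names are hypothetical, and whether such transformations preserve the diagnostic meaning of a given endoscopic image is an assumption that would need to be checked for each application.

```python
def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def vflip(img):
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]

def rot90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def augment(img):
    """Return the original image plus its distinct flipped and rotated variants."""
    variants = [img, hflip(img), vflip(img)]
    rotated = img
    for _ in range(3):  # 90, 180, and 270 degrees
        rotated = rot90(rotated)
        variants.append(rotated)
    unique = []
    for v in variants:
        if v not in unique:  # symmetric images can yield duplicates
            unique.append(v)
    return unique

# Hypothetical 2x2 "image" with four distinct pixel values.
image = [[1, 2],
         [3, 4]]
augmented = augment(image)
print(len(augmented))  # 6
```

Each variant keeps the label of the original image, so a single annotated image yields several training samples without additional annotation effort.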
