Spoken ATC instruction understanding addresses the speech exchanged between controllers and aircrew. In air traffic control (ATC), speech communication over radio is the primary way to exchange information between the controller and aircrew. A wealth of contextual situational dynamics is embedded implicitly in that speech; thus, understanding spoken instructions is particularly significant to ATC research. ATC is a complicated, time-varying system in which operational safety is a persistent research topic. A single safety incident can negate all other achievements of an ATC center. Air traffic safety is affected by many aspects of air traffic operation, from mechanical maintenance and resource management to air traffic control. The safety of air traffic control is particularly important because the aircraft is already in the air, so any effort to improve ATC safety is worthwhile.

- air traffic control

- speech communication

- automatic speech recognition

- spoken instruction understanding

- voiceprint recognition

1. Introduction

Air traffic is an extension of ground transportation in which the aircraft flies in three-dimensional (3D) space. Since no signs or traffic signals can be installed to guide flights in the air, the pilot is almost “blind” once the aircraft has taken off, with few means of obtaining the traffic situation around the aircraft. To address this issue, a dedicated position, called air traffic control (ATC), was established to ensure flight safety in a local airspace. Various infrastructures were developed to collect the global air traffic situation (radar) and to transmit information between air and ground (communication). Based on the real-time traffic situation and a set of well-designed ATC rules, the air traffic controller (ATCO) is able to direct flights to their destinations in a safe and highly efficient manner.

Although enormous efforts have been made to build a qualified controller-pilot data link communication (CPDLC) system [1], digital data transmission remains an unresolved problem for air traffic control communication. In the current ATC procedure, speech communication over radio is still the primary way to exchange information between the ATCO and aircrew. The spoken instruction is therefore transmitted in an analog manner and is easily degraded by environmental factors such as communication conditions and equipment errors. The spoken instruction contains a wealth of contextual situational dynamics that indicate the future evolution of the flight and traffic [2][3], which is highly significant to air traffic operation.

However, speech communication is also a typical human-in-the-loop (HITL) procedure in the ATC loop, since the current ATC system cannot process the speech signal directly. Any speech error may cause a misunderstanding between the ATCO and aircrew [4][5]. Because communication is the first step in performing an ATC instruction, a misunderstanding is likely to result in incorrect aircraft motion states and, in turn, a potential conflict (safety risk) during air traffic operation. According to statistics released by EUROCONTROL [6], up to 30% of all incidents are related to speech communication errors (rising to 50% in airport environments), and 40% of all runway incursions also involve communication problems. Consequently, understanding the spoken instruction is particularly significant for detecting potential risks and further improving ATC safety.

2. Spoken Instruction Understanding

The main purpose of understanding spoken instructions is to obtain the near-future traffic dynamics in advance and to detect communication errors that may cause safety risks. It not only enriches the information sources of the current ATC system but can also provide reliable warnings before the pilot carries out an incorrect instruction, which leaves more prewarning time.

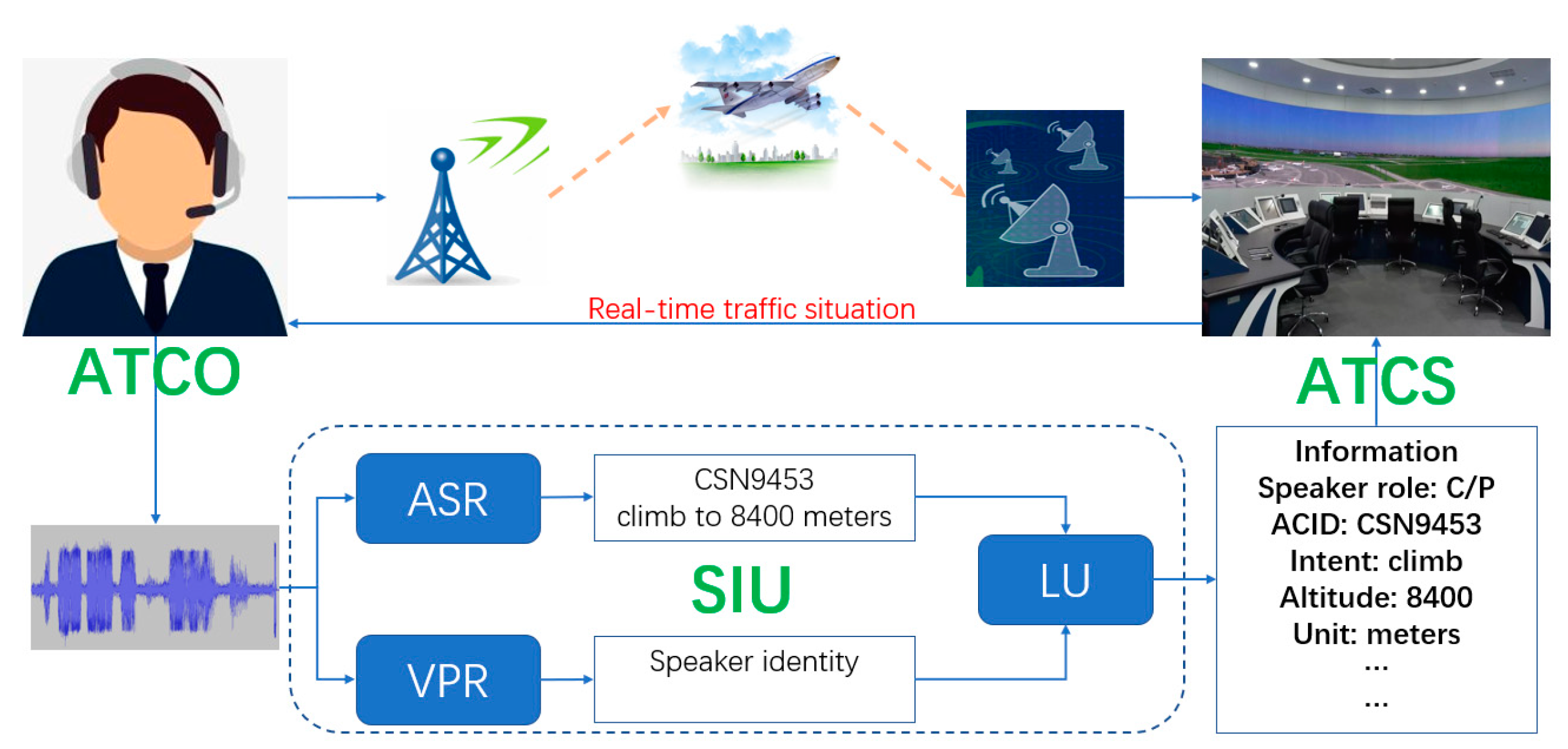

As shown in Figure 1, the upper part presents the typical ATC communication procedure, while the lower part illustrates the required spoken instruction understanding (SIU) task in the ATC domain. In general, the SIU mainly consists of two steps: automatic speech recognition (ASR) and language understanding (LU) [7][8], as described below:

Figure 1. The air traffic control (ATC) procedure and spoken instruction understanding (SIU) roles in ATC.

(a) ASR: translates the ATCO’s instruction from a speech signal into a text representation (human- or computer-readable). ASR involves the acoustic model, the language model, and other contextual information.

(b) LU: also known as text instruction understanding. Since the ATC system cannot process text directly, its goal is to extract ATC-related elements from the text instruction, i.e., to convert text into ATC-related structured data. The extracted ATC elements are then applied to improve the operational safety of air traffic. In general, the LU task can be divided into three parts: role recognition, intent detection, and slot filling (extraction of ATC-related elements such as aircraft identity and altitude).
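To make the three LU sub-tasks concrete, the following is a minimal rule-based sketch in Python. The example phrases, the intent table, the regular expressions, the role heuristic, and the `parse_instruction` helper are all illustrative assumptions and are not taken from any ATC system or from the cited works; a practical LU model would more likely be learned from annotated data.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword-to-intent table (illustrative only).
INTENT_KEYWORDS = {
    "climb": "CLIMB",
    "descend": "DESCEND",
    "turn": "TURN",
    "contact": "CONTACT",
}

# Hypothetical patterns over ASR text that is assumed to be already normalized
# (callsign as three letters plus digits, numbers written as digits).
CALLSIGN_AT_START = re.compile(r"^([A-Z]{3}\d{1,4})\b")
CALLSIGN_AT_END = re.compile(r"\b([A-Z]{3}\d{1,4})$")
FLIGHT_LEVEL = re.compile(r"\bflight level (\d{2,3})\b", re.IGNORECASE)
HEADING = re.compile(r"\bheading (\d{3})\b", re.IGNORECASE)


@dataclass
class ParsedInstruction:
    role: str                                   # "ATCO", "PILOT", or "UNKNOWN"
    intents: list = field(default_factory=list)
    slots: dict = field(default_factory=dict)


def parse_instruction(text: str) -> ParsedInstruction:
    """Extract role, intents, and slots from one normalized text instruction."""
    text = text.strip()
    slots = {}

    # Role recognition (assumed heuristic): a controller addresses the aircraft
    # first, so the callsign leads; a pilot readback ends with the callsign.
    lead = CALLSIGN_AT_START.search(text)
    tail = CALLSIGN_AT_END.search(text)
    if lead:
        role, slots["callsign"] = "ATCO", lead.group(1)
    elif tail:
        role, slots["callsign"] = "PILOT", tail.group(1)
    else:
        role = "UNKNOWN"

    # Intent detection: simple keyword lookup.
    lowered = text.lower()
    intents = [intent for kw, intent in INTENT_KEYWORDS.items() if kw in lowered]

    # Slot filling: numeric ATC elements.
    fl = FLIGHT_LEVEL.search(text)
    if fl:
        slots["flight_level"] = int(fl.group(1))
    hdg = HEADING.search(text)
    if hdg:
        slots["heading"] = int(hdg.group(1))

    return ParsedInstruction(role, intents, slots)


if __name__ == "__main__":
    print(parse_instruction("CCA1234 climb and maintain flight level 320"))
    print(parse_instruction("climbing flight level 320 CCA1234"))
```

The structured output (role, intent list, slot dictionary) is the kind of ATC-related structured data that a downstream safety check could consume; the rules themselves are only a stand-in for a trained model.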

In addition, ATC communication is a multi-speaker, multi-turn conversation. To correlate different instructions in the same sector, voiceprint recognition (VPR) is also needed to distinguish speakers for the LU task. VPR can also be applied for security purposes. For instance, if an ATCO instruction for flight A is incorrectly answered by the aircrew of flight B (usually a flight with a similar aircraft identity), risks may arise from the mismatched traffic dynamics. VPR is expected to detect this situation from the vocal features of the different speakers and thereby prevent a potential flight conflict (improving operational safety).
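The wrong-aircraft readback scenario can be sketched as a comparison of speaker embeddings. The sketch below assumes that each aircrew's voice has already been enrolled as a fixed-length embedding (for example, from an earlier transmission on the frequency) and that some VPR model, not shown here, produces an embedding for each new utterance. The vectors, the threshold of 0.7, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("zero-norm embedding")
    return dot / (norm_a * norm_b)


def identify_speaker(embedding, enrolled, threshold=0.7):
    """Return the enrolled callsign with the closest voiceprint, or None.

    `enrolled` maps callsign -> reference embedding. The threshold is an
    assumed value; a real system would tune it on held-out data.
    """
    best_id, best_score = None, threshold
    for callsign, reference in enrolled.items():
        score = cosine_similarity(embedding, reference)
        if score >= best_score:
            best_id, best_score = callsign, score
    return best_id


def readback_mismatch(addressed, embedding, enrolled, threshold=0.7):
    """True when the readback voice matches a known flight other than the addressed one."""
    speaker = identify_speaker(embedding, enrolled, threshold)
    return speaker is not None and speaker != addressed


if __name__ == "__main__":
    # Toy 3-dimensional embeddings; real voiceprints have far more dimensions.
    enrolled = {"CCA1234": [1.0, 0.0, 0.2], "CCA1243": [0.0, 1.0, 0.1]}
    readback = [0.1, 0.9, 0.1]  # voice that resembles the crew of CCA1243
    print(readback_mismatch("CCA1234", readback, enrolled))
```

An unknown voice (no enrolled embedding above the threshold) is deliberately not flagged here; whether that case should raise a warning is a design choice left open.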

New techniques have historically been slow to reach the ATC domain because of various limitations (safety requirements, complex environment, etc.). Although many academic studies on speech instructions have been reported in the ATC domain [9][10][11][12][13], there is currently no effective processing of speech instructions in real industrial ATC systems. Speech communication serves only as evidence for post-event analysis, which does not reflect its important role in improving air traffic safety. Fortunately, thanks to the large amount of stored industrial data and the widespread application of information technology, it is now possible to obtain extra real-time traffic information from speech communication and to use it in air traffic operation.

3. Future Perspectives

Based on the aforementioned technical challenges and existing works, possible future research topics related to the SIU task are outlined below from the perspectives of automatic speech recognition, language understanding, and voiceprint recognition:

3.1. Speech Quality

(1) Speech enhancement: Given the inferior speech quality in the ATC domain, an intuitive approach is to apply speech enhancement to improve ASR and VPR performance. With this technique, high-quality ATC speech is expected to be obtained to support the SIU task and, in turn, the subsequent ATC applications.

(2) Representation learning: Speech features are diversely distributed because of different communication conditions, devices, languages, unstable speech rates, etc. There are therefore reasons to believe that handcrafted feature engineering algorithms (such as MFCC) may fail to give ASR and VPR the desired performance. Representation learning, i.e., extracting speech features with a well-optimized neural network, may be a promising way to improve the final SIU performance.
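As a small illustration of what "handcrafted" means for a feature such as MFCC, the sketch below computes the mel-scale warping that sits at the core of the MFCC filterbank. It uses one commonly quoted form of the mel formula, m = 2595 log10(1 + f/700); other variants exist, and the function names and the choice of five filters are assumptions made for this example. The point is that the warping is fixed in advance and does not adapt to channel, device, or speaker, which is the limitation that learned representations aim to remove.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    """Convert frequency in Hz to mels (common 2595 * log10(1 + f/700) form)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filter_centers(n_filters: int, f_min: float, f_max: float) -> list:
    """Center frequencies (Hz) of filters spaced evenly on the mel scale."""
    m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_max - m_min) / (n_filters + 1)
    return [mel_to_hz(m_min + step * (i + 1)) for i in range(n_filters)]


if __name__ == "__main__":
    # Five filters over 0-4000 Hz (an assumed band for narrowband radio speech).
    for center in mel_filter_centers(5, 0.0, 4000.0):
        print(round(center, 1))
```

The centers are dense at low frequencies and sparse at high frequencies regardless of the input, whereas a learned front end could in principle place its resolution wherever the training data require.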

3.2. Sample Scarcity

(1) Transfer learning: Although a set of standardized phraseology has been designed for the ATC procedure, the rules and vocabulary still depend on the flight phase, location, and control center. It is urgent to study transfer learning across flight phases, locations, and control centers to reduce the number of samples required and to formulate a unified global technical roadmap.

(2) Semi-supervised and self-supervised research: Since data collection and annotation are always an obstacle to applying advanced technology in the ATC domain, semi-supervised and self-supervised strategies are expected to be a promising way to overcome this dilemma. With these strategies, unlabeled samples can also contribute to model optimization based on their intrinsic characteristics, as they already do in common application areas.

(3) Sample generation: Like the previous topic, sample generation (for example, text instruction generation) is another way to increase sample size and diversity and thereby improve task performance.
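Text instruction generation, mentioned in the last item, can be sketched in its simplest form as template filling. The templates, value ranges, and function names below are assumptions chosen for illustration; they are not a phraseology standard, and a realistic generator would need to respect the local rules and vocabulary discussed under transfer learning.

```python
import random

# Hypothetical instruction templates (illustrative, not a phraseology standard).
TEMPLATES = [
    "{callsign} climb and maintain flight level {fl}",
    "{callsign} descend and maintain flight level {fl}",
    "{callsign} turn {direction} heading {heading:03d}",
]

# Illustrative three-letter airline designators used as callsign prefixes.
AIRLINE_PREFIXES = ["CCA", "CSN", "CES"]


def generate_instruction(rng: random.Random) -> str:
    """Fill one randomly chosen template with randomly drawn slot values."""
    template = rng.choice(TEMPLATES)
    return template.format(
        callsign=f"{rng.choice(AIRLINE_PREFIXES)}{rng.randint(100, 9999)}",
        fl=rng.randrange(100, 410, 10),          # flight levels 100..400
        direction=rng.choice(["left", "right"]),
        heading=rng.randrange(10, 370, 10),      # headings 010..360
    )


def generate_corpus(n: int, seed: int = 0) -> list:
    """Generate n synthetic text instructions, reproducible for a given seed."""
    rng = random.Random(seed)
    return [generate_instruction(rng) for _ in range(n)]


if __name__ == "__main__":
    for line in generate_corpus(5, seed=42):
        print(line)
```

Such synthetic text could, for instance, augment language model training data; whether it improves task performance would have to be verified on real transcripts.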

3.3. Contextual Information

(1) Contextual situational incorporation: As noted earlier, contextual situational information is a powerful way to improve SIU performance. Because ATC information is heterogeneous, existing works have failed to take full advantage of it. Following state-of-the-art studies, a deep neural network may be a feasible tool to fuse the multi-modal inputs by encoding them into a high-level abstract representation, and so to improve SIU performance.

(2) Multi-turn dialog management: ATC communication on the same frequency is a multi-turn, multi-speaker dialog with a task-oriented goal (ATC safety). During the dialog, the history provides significant guidance for the current instruction based on the evolution of the air traffic. Thus, it is important to consider multi-turn history to enhance the SIU task for the current dialog, as is done in the field of natural language processing.
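One simple way to use multi-turn history is to keep the turns on a frequency grouped by aircraft and to compare each pilot readback with the most recent controller instruction for that aircraft. The sketch below assumes that role and slot values have already been extracted from each turn; the `DialogHistory` class and its methods are hypothetical names introduced for this example.

```python
from collections import defaultdict


class DialogHistory:
    """Per-callsign history of turns on one frequency (illustrative sketch)."""

    def __init__(self):
        self._turns = defaultdict(list)

    def add_turn(self, callsign: str, role: str, slots: dict) -> None:
        """Record one turn; role is assumed to be "ATCO" or "PILOT"."""
        self._turns[callsign].append({"role": role, "slots": dict(slots)})

    def last_instruction(self, callsign: str):
        """Most recent ATCO turn addressed to this callsign, or None."""
        for turn in reversed(self._turns.get(callsign, [])):
            if turn["role"] == "ATCO":
                return turn
        return None

    def check_readback(self, callsign: str, readback_slots: dict) -> dict:
        """Return {slot: (instructed, read_back)} for every slot that differs."""
        instruction = self.last_instruction(callsign)
        if instruction is None:
            return {}
        return {
            name: (value, readback_slots.get(name))
            for name, value in instruction["slots"].items()
            if readback_slots.get(name) != value
        }


if __name__ == "__main__":
    history = DialogHistory()
    history.add_turn("CCA1234", "ATCO", {"flight_level": 320})
    history.add_turn("CSN6501", "ATCO", {"flight_level": 280})
    # The crew of CCA1234 reads back 230 instead of 320.
    print(history.check_readback("CCA1234", {"flight_level": 230}))
```

Keeping the history keyed by callsign means that interleaved instructions to other aircraft on the same frequency do not disturb the comparison.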

References

- Rossi, M.A.; Lollini, P.; Bondavalli, A.; Romani de Oliveira, I.; Rady de Almeida, J. A Safety Assessment on the Use of CPDLC in UAS Communication System. In Proceedings of the 2014 IEEE/AIAA 33rd Digital Avionics Systems Conference (DASC), Colorado Springs, CO, USA, 5–9 October 2014; pp. 6B1-1–6B1-11.

- Kopald, H.D.; Chanen, A.; Chen, S.; Smith, E.C.; Tarakan, R.M. Applying Automatic Speech Recognition Technology to Air Traffic Management. In Proceedings of the 2013 IEEE/AIAA 32nd Digital Avionics Systems Conference (DASC), East Syracuse, NY, USA, 5–10 October 2013; pp. 6C3-1–6C3-15.

- Nguyen, V.N.; Holone, H. Possibilities, Challenges and the State of the Art of Automatic Speech Recognition in Air Traffic Control. Int. J. Comput. Electr. Autom. Control. Inf. Eng. 2015, 9, 1916–1925.

- Geacăr, C.M. Reducing Pilot/ATC Communication Errors Using Voice Recognition. In Proceedings of the 27th International Congress of the Aeronautical Sciences, Nice, France, 19–24 September 2010; pp. 1–7.

- Glaser-Opitz, H.; Glaser-Opitz, L. Evaluation of CPDLC and Voice Communication during Approach Phase. In Proceedings of the 2015 IEEE/AIAA 34th Digital Avionics Systems Conference (DASC), Prague, Czech Republic, 13–18 September 2015; pp. 2B3-1–2B3-10.

- Isaac, A. Effective Communication in the Aviation Environment: Work in Progress. Hindsight 2007, 5, 31–34.

- Lin, Y.; Tan, X.; Yang, B.; Yang, K.; Zhang, J.; Yu, J. Real-Time Controlling Dynamics Sensing in Air Traffic System. Sensors 2019, 19, 679.

- Serdyuk, D.; Wang, Y.; Fuegen, C.; Kumar, A.; Liu, B.; Bengio, Y. Towards End-to-End Spoken Language Understanding. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5754–5758.

- Helmke, H.; Slotty, M.; Poiger, M.; Herrer, D.F.; Ohneiser, O.; Vink, N.; Cerna, A.; Hartikainen, P.; Josefsson, B.; Langr, D.; et al. Ontology for Transcription of ATC Speech Commands of SESAR 2020 Solution PJ.16-04. In Proceedings of the 2018 IEEE/AIAA 37th Digital Avionics Systems Conference (DASC), London, UK, 23–27 September 2018; pp. 1–10.

- De Cordoba, R.; Ferreiros, J.; San-Segundo, R.; Macias-Guarasa, J.; Montero, J.M.; Fernandez, F.; D’Haro, L.F.; Pardo, J.M. Air Traffic Control Speech Recognition System Cross-Task and Speaker Adaptation. IEEE Aerosp. Electron. Syst. Mag. 2006, 21, 12–17.

- Ferreiros, J.; Pardo, J.M.; de Córdoba, R.; Macias-Guarasa, J.; Montero, J.M.; Fernández, F.; Sama, V.; D’Haro, L.F.; González, G. A Speech Interface for Air Traffic Control Terminals. Aerosp. Sci. Technol. 2012, 21, 7–15.

- Helmke, H.; Ohneiser, O.; Muhlhausen, T.; Wies, M. Reducing Controller Workload with Automatic Speech Recognition. In Proceedings of the 2016 IEEE/AIAA 35th Digital Avionics Systems Conference (DASC), Sacramento, CA, USA, 25–29 September 2016; pp. 1–10.

- Gurluk, H.; Helmke, H.; Wies, M.; Ehr, H.; Kleinert, M.; Muhlhausen, T.; Muth, K.; Ohneiser, O. Assistant Based Speech Recognition—Another Pair of Eyes for the Arrival Manager. In Proceedings of the 2015 IEEE/AIAA 34th Digital Avionics Systems Conference (DASC), Prague, Czech Republic, 13–18 September 2015; pp. 3B6-1–3B6-14.