This paper presents relations between information society (IS), electronics and artificial intelligence (AI) mainly through twenty-four IS laws. The laws not only make up a novel collection, currently non-existing in the literature, but they also highlight the core boosting mechanism for the progress of what is called the information society and AI. The laws mainly describe the exponential growth in a particular field, be it the processing, storage or transmission capabilities of electronic devices. Other rules describe the relations to production prices and human interaction. Overall, the IS laws illustrate the most recent and most vibrant part of human history based on the unprecedented growth of device capabilities spurred by human innovation and ingenuity. Although there are signs of stalling, at the same time there are still many ways to prolong the fascinating progress of electronics that stimulates the field of artificial intelligence. There are constant leaps in new areas, such as the perception of real-world signals, where AI is already occasionally exceeding human capabilities and will do so even more in the future. In some areas where AI is presumed to be incapable of performing even at a modest level, such as the production of art or programming software, AI is making progress that can sometimes reflect true human skills. Maybe it is time for AI to boost the progress of electronics in return.

- information society

- electronics

- artificial intelligence

- ambient intelligence

1. Introduction

What are the relations between the information society (IS), electronics and artificial intelligence (AI)? In this paper, we first describe AI and related fields and then proceed to the information society as the most general concept.

The term “artificial intelligence”, also called “machine intelligence” (MI), covers hardware, software or, most commonly, combined artificial systems, i.e., machines that exhibit some form of intelligence. For example, a mobile phone runs a game or provides a web search using algorithms written in a programming language and executed either on the phone itself or in a cloud.

“Ambient intelligence” (AmI) is AI implemented on machines in the surrounding environment and is mostly demonstrated by the services of the human environment. AmI is therefore even more closely related to various machines, not necessarily computers, that take care of humans, who thereby benefit from intelligent and cognitive functionalities.

Both AI and AmI are characterized as the study of intelligent agents [1], representing a core building block of all AI and AmI methods. An intelligent agent is a system that perceives its environment and takes actions. There are several levels of agents, ranging from the simplest reflex agents, such as a thermostat, to advanced ones that learn and follow their goals, e.g., autonomous cars [2].
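The perceive-then-act loop of the simplest agent mentioned above, a thermostat, can be sketched in a few lines. This is only an illustrative sketch: the class name `ThermostatAgent`, the set point, the tolerance band and the action labels are all hypothetical and do not come from the cited literature.

```python
from dataclasses import dataclass


@dataclass
class ThermostatAgent:
    """Simplest kind of agent: maps the current percept directly to an action."""

    target_c: float = 21.0     # hypothetical set point in degrees Celsius
    tolerance_c: float = 0.5   # hypothetical dead band around the set point

    def act(self, temperature_c: float) -> str:
        """Perceive one temperature reading and return an action label."""
        if temperature_c < self.target_c - self.tolerance_c:
            return "heat_on"
        if temperature_c > self.target_c + self.tolerance_c:
            return "heat_off"
        return "hold"


agent = ThermostatAgent()
for reading in (18.0, 21.2, 23.0):
    print(reading, agent.act(reading))
```

The agent keeps no memory and pursues no explicit goal; the more advanced agents in the text would add learned models and goal-directed planning on top of this loop.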

The term AI was first coined by McCarthy in 1956 at the Dartmouth conference. In Europe, several researchers consider 1950 to be the start of AI, which was then termed machine intelligence. The milestone was Alan Turing’s paper [3] published in 1950. Alan Turing is regarded by many as the founding father of computer science due to his halting problem, the Turing test, the Turing machine and the decoding of Hitler’s Enigma machine that helped to end the Second World War more quickly [3].

The term AmI was introduced in the late 1990s by Eli Zelkha and his team at Palo Alto Ventures [4] and was later extended to the environment without people. A modern definition was delivered by Juan Carlos Augusto and McCullagh [5]: “Ambient Intelligence is a multi-disciplinary approach which aims to enhance the way environments and people interact with each other. The ultimate goal of the area is to make the places we live and work in more beneficial to us.” AmI is also aligned with the concept of the “disappearing computer” [6][7]. The AmI field is very closely related to pervasive computing, ubiquitous computing and context awareness [8][9][10][11].

AI and AmI are two of the most prosperous fields in the current era of human civilization, named the information society (IS). In its most general form, an “information society” can be characterized as a society in which any kind of activity regarding information is an integral and inseparable part. There are many activities that involve information as the primary object: use, construction, manipulation, processing, integration, recording, accessing, storage, transfer, etc. An information society was first defined as a society in which 30% of the gross domestic product (GDP) relied on information, but most developed countries met this definition decades ago [12]. Historically, information had been regarded as a valuable source of any kind of progress, and it became the essential driver of an IS once communication technologies allowed it to be dispersed and processed exponentially faster and in larger quantities than ever before. The rapid, radical and thorough change that these capabilities offered transformed industrial societies into information societies. The growing importance of information has reorganized education, the economy, healthcare, warfare, governmental services and democratic operations, industry, scientific investigation and other, lower-level aspects of what is deemed important in a society. As a prominent example, medicine has seen exponential progress in screening for and diagnosing a wide range of diseases, which can mostly be attributed to AI and its use in medical imaging [13][14][15]. This fundamentally changed the populace as the main participants in the change, who entered this new phase of civilization by accessing the Internet [16].

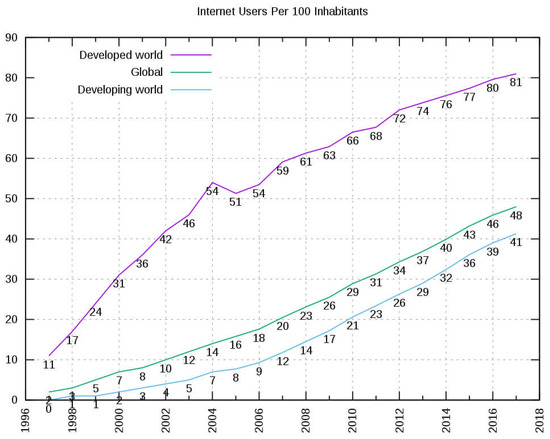

The Internet was therefore one of the main drivers of the steady change that followed its adoption. Most theoreticians pinpoint the start of the IS as the 1970s, which was when the Internet started to be used internally [17]. However, it was the early 1990s and 2000s that brought the most changes and rapid progress through net usage and information dissemination. More widely, information and communication technologies (ICTs) and their usage intensified how we centre our activities in economic, social, cultural and political areas [18]. While the start of the IS can be pinpointed as the early 1970s, the most recent or golden IS era, driven by the growth in the amount of data, started in approximately 2000 (see Figure 1). The promise of this change was reaffirmed by an international document signed in 2005 called the Tunis Agenda for the Information Society [19], which called for strong financing of ICTs. The reason for such strong systemic action by many nations was that the use of ICTs resulted in progress for social good and in progress that benefitted the populace; therefore, it was seen as the new foundation for progressing towards a new age in which the IS would be the standard. This progress results in the fast growth of Internet users, as presented in Figure 2. As a consequence, employment needs are shifting and influencing everyday human life.

Figure 1. The exponential growth of data in the information society [20].

Figure 2. Growth in the number of Internet users [21].

2. Related Information Society Laws

The IS laws presented in this section deal with relations to human activities, in particular with regard to the usage of computers and human–computer or human–human interactions.

2.1. Software Laws

- Linus’s law [22]: Given large enough beta tester and codeveloper bases, almost every problem will be characterized quickly and the fix will be obvious to someone. In other words: “given enough eyeballs, all bugs are shallow”. The law contradicts fears that software is becoming uncontrollable with the growing amount of code and is not related to technological issues but to human ingenuity. As such, the law seems to be quite time-independent.

- Wirth’s, Page’s, Gates’ or May’s law [23]: Software is becoming slower more rapidly than hardware is becoming faster. Recent years may not fully confirm this law, owing to various tools and new techniques; in particular, the time relations it asserts remain open to question.

- Brooks’s law: “Adding manpower to a late software project makes it later” is an observation about software project management, but it also holds in several other areas where the process cannot be parallelized. It was coined by Fred Brooks in his 1975 book The Mythical Man-Month [24]. Somewhat ironically, each additional person added to a late project makes it take more time, not less.

2.2. Socioeconomic Laws

- Metcalfe’s law [25]: Value of a network = n², where n is the number of nodes in the network; that is, the value or effect of a network is proportional to the square of the number of nodes. It is similar to Odlyzko’s law [26]: value of a network = n × log n. This law seems to be time-independent.

- Tapscott’s or Negroponte’s law: The economy of an information society is a frictionless economy, information economy, Internet economy, net economy, and new economy; it is global, liberal, without restrictions and regulations, spurred by electronics and information technologies and based on bits instead of atoms. Tapscott [27] introduced e-commerce and e-business characteristics, while Negroponte [28] introduced the e-world consisting of bits instead of atoms, transforming the economy into a new stage. The economy in an IS is surely different compared to the period before, but it will last only a certain period of time until another step in human civilization occurs [29].

- Gross’s law: The information overload law; or the infobesity, infoxication, information anxiety and information explosion law [30]: The side effect of an IS is information overload. This law relates to the excessive information given to people in everyday life and when making decisions, because ICTs generate massive amounts of information that grow exponentially. The law seems to become more valid as the amount of data and information keeps growing while appropriate mechanisms that would enable people to handle information overload are still lacking.

- Gams’ law [31]: IS, the cyberworld double fortune. The fortune can be real or fictitious, such as cryptocurrency. The law was first presented in 2002, before cryptocurrencies such as Bitcoin were widespread, and is taught at the local economics faculty. It is illustrated by a transition on a remote island where native people trade natural goods such as pigs and coconuts. At one point, a modern king introduces paper money; in their fictitious currency, 1 Illa is worth 1 pig. Counting the natural resources and the paper money, the island has twice as much wealth as before. If neighbouring islands accept their currency, the king can print considerably more paper money and buy a substantial amount of goods abroad. In time, the king’s successor introduces BIlla, a Bitcoin version of their paper currency Illa. The story repeats and the current king, or better, their business elite, can considerably increase their worth. This example should help explain the events in the net economy: why virtual money increases wealth, why elites become increasingly richer and why the fictitious or “normative” standard may not directly correspond to the real status of netizens. For example, the netizens on the fictitious island have the same amount of pigs and coconuts at the end of the story as in the beginning, and if the elites increase their wealth, the average islander has less than in the beginning. Note, however, that progress enables better production of pigs and other goods, and overall, the middle class more or less stays at the same level while the overall wealth increases. However, nominal wealth is significantly different from actual wealth in terms of pigs and coconuts. As with many economic laws, this one is also not directly bound to the technological process and therefore is not as time-dependent as, e.g., Moore’s law.

- Clift’s law or e-democracy, digital democracy or Internet democracy progress [32]: The web enables democratic progress. The introduction of ICTs and IS tools to political and governance processes is thought to promote democracy since citizens are presumed to be eligible to participate equally in information creation and sharing. In other words, “The Internet is the most democratic and free media in the world.” The World Wide Web supposedly offers participants “a potential voice, a platform, and access to the means of production” [33]. However, in recent decades, the concentration of capital has resulted in an increased concentration of media ownership by large private entities in several American and European countries [34]. According to current polls [35], over 90% of Americans from a sample of approximately 20,000 considered the media to have major importance for democracy; however, approximately 50% of them see the media as biased to various degrees, impairing and endangering democratic processes. While the optimistic viewpoint prevailed in e-democracy for decades, in recent years, we might be witnessing a change. The future of this law seems quite unclear.
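To make the difference between the two network-value formulas in this subsection concrete (Metcalfe’s n² and Odlyzko’s n × log n), the following minimal sketch evaluates both for a few network sizes. The function names are hypothetical, constant factors are omitted, and the natural logarithm is an assumption, since the text does not specify the base.

```python
import math


def metcalfe_value(n: int) -> float:
    """Network value proportional to n squared (constant factor omitted)."""
    return float(n) ** 2


def odlyzko_value(n: int) -> float:
    """Network value proportional to n * log(n); the natural log is an assumption."""
    return n * math.log(n)


# Compare both estimates for networks of increasing size (n >= 2).
for n in (10, 1_000, 1_000_000):
    ratio = metcalfe_value(n) / odlyzko_value(n)
    print(f"n={n:>9,}  n^2={metcalfe_value(n):.3e}  "
          f"n*log(n)={odlyzko_value(n):.3e}  ratio={ratio:.1f}")
```

The ratio between the two estimates equals n / log n and therefore grows without bound, so the choice of law matters most for very large networks.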

2.3. Computers and Progress Laws

When researchers from MIT tested 62 technologies [36], they found that there are two laws that fit the data very well, Moore’s law and Wright’s law, although one is related to computers and the other is related to flight. Consider Wright’s law:

- Wright’s law [65]: The cost of airplanes is proportional to the inverse of the number of planes manufactured raised to some power. The law seems to be time-independent.

This theory was proposed as a more general law that governs the costs of technological products and is often explained on the basis that the more of a product that we make, the better and more efficient we become at making the product. It has also been shown [38] that costs decrease because of economies of scale.

The analysis in [36] further compared the two most consistent laws, Wright’s and Moore’s law. It was stated that if the production of an item grows at an exponential rate, then Wright’s law and Moore’s law are quite similar. This is slightly hard to comprehend, since computing is often regarded as a special case (“It’s a much more general thing,” says author Doyne Farmer, currently at the University of Oxford, United Kingdom) and since Moore published the law purely as a technological observation based on the progress of electronics. Indeed, the consequences of fast exponential growth such as that observed by Moore in computing are in fact related to the technology life cycle, which describes the commercial gain of a product through research and development expenses and the financial return during its profitable stage [39].
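The similarity of the two laws under exponential production growth can be made explicit with a short derivation. This is a sketch under assumptions that the text does not state: cost follows a power law in the number of units produced $x$ with exponent $w > 0$ (Wright), production grows as $x(t) = x_0 e^{gt}$ with rate $g > 0$, and the symbols $C_1$, $x_0$, $w$ and $g$ are introduced here purely for illustration.

$$
C(x) = C_1\, x^{-w}, \qquad x(t) = x_0\, e^{g t}
\quad\Longrightarrow\quad
C(t) = C_1\, x_0^{-w}\, e^{-w g t}.
$$

Under these assumptions, cost falls exponentially in time, which is the functional form of a Moore-type law, with a cost-halving time of $T_{1/2} = \ln 2 / (w g)$.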

The commercial effects of Moore’s law in IS products are indeed fascinating. For example, the total production of semiconductor devices resulted in on the order of one transistor produced per metre of our galaxy’s diameter and hundreds of millions to billions of transistors per star in our galaxy. The calculation is as follows: according to [40], transistor production reached $2.5 \times 10^{20}$, which is 250 billion billion, in 2014 [41]. Our Milky Way galaxy has a diameter between 100,000 and 180,000 light years [42]. The galaxy is estimated to contain 100–400 billion stars and 100 billion planets [43]. In addition, there are also approximately 100 billion neurons in our brain [44].
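The comparison above can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the text plus the standard length of a light year (about 9.4607 × 10^15 m), which is not taken from the source; the constant and function names are hypothetical.

```python
LIGHT_YEAR_M = 9.4607e15                 # metres per light year (standard value, not from the source)
TRANSISTORS_2014 = 2.5e20                # cumulative transistor production quoted in the text
GALAXY_DIAMETER_LY = (100_000, 180_000)  # diameter range quoted in the text
STARS_IN_GALAXY = (100e9, 400e9)         # star-count range quoted in the text
NEURONS_IN_BRAIN = 100e9                 # approximate neuron count quoted in the text


def transistors_per_metre(diameter_ly: float) -> float:
    """Transistors produced per metre of a galaxy diameter given in light years."""
    return TRANSISTORS_2014 / (diameter_ly * LIGHT_YEAR_M)


def transistors_per_item(count: float) -> float:
    """Transistors produced per star, planet or neuron, given the item count."""
    return TRANSISTORS_2014 / count


for diameter in GALAXY_DIAMETER_LY:
    print(f"{diameter:,} ly diameter: {transistors_per_metre(diameter):.2f} transistors per metre")
for stars in STARS_IN_GALAXY:
    print(f"{stars:.0e} stars: {transistors_per_item(stars):.2e} transistors per star")
print(f"per neuron: {transistors_per_item(NEURONS_IN_BRAIN):.2e} transistors")
```

With these inputs, the per-metre figure comes out somewhat below one, so the comparison with the galaxy’s diameter is best read as an order-of-magnitude statement.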

3. AI, AmI and Electronics

In this section, AI and its relation to the IS and IS laws are presented. Understanding the intertwined progress of AI is essential since it already influences practically every human activity and will do so even more in the near future.

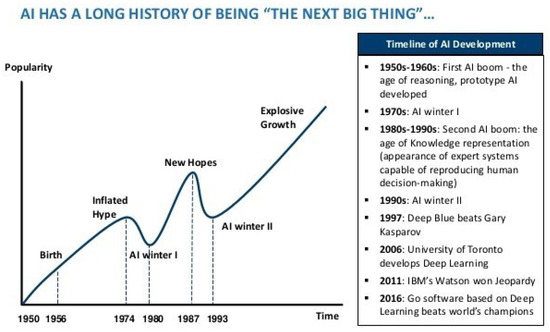

The history of AI and AmI is characterized by cycles of overoptimism and overpessimism, the latter often referred to as the “AI winter” [45] (see the accompanying figure), somewhat resembling the stalling of a particular technology enabling Moore’s law. There are a couple of differences, however. Although papers have stated several times that Moore’s law was coming to an end, the funding in electronics never dried up. In contrast, AI funding varied considerably whenever a particular AI approach, such as expert systems, met its limitations. Unlike in electronics, where the pessimism was local and not widespread among the public, the AI winter was generally accepted in society as a terminal inability of machines to perform similarly to humans. In computing, there is an old saying: “Never say never”, since so many negative predictions failed. Therefore, the AI pessimism was ill-founded from the start since it was only a question of time before advanced electronics would promote AI growth. Whatever the case, the development of new chips and new AI methods soon enabled fast and prosperous progress of the information society.

AI periods of overoptimism and overpessimism [46].

The ability of the broad AI field to solve more difficult problems in time is basically guaranteed by the exponential growth of computing semiconductor capabilities, as defined by IS laws. At the same time, AI progress is also characterized by methodological breakthroughs in bursts. Currently, AI and AmI achievements attract worldwide attention in practically all areas of academia, gaming, industry and real life.

AI and AmI have already penetrated every aspect of our everyday lives and are having large impacts on them. Smartphones, all modern cars, autonomous vehicles, the Internet of Things (IoT), smart homes and smart cities, medicine and institutions such as banks or markets all use artificial intelligence on a daily basis [47]. Examples include when we use Siri, Google or Alexa to request directions to the nearest petrol station or to order a taxi, when we make a purchase using a credit card with an AI system in the background checking for potential fraud, when an intelligent agent in a smart home regulates user-specific comfort, or when a smart city optimizes heterogeneous sources and environment demands.

When fed with huge numbers of examples and with fine-tuned parameters, AI methods and, in particular, deep neural networks (DNNs) regularly beat the best human experts in increasing numbers of artificial and real-life tasks [48], such as diagnosing tissue affected by several diseases. There are other everyday tasks, e.g., the recognition of faces from a picture, where DNNs recognize hundreds of faces in seconds, a result no human can match.

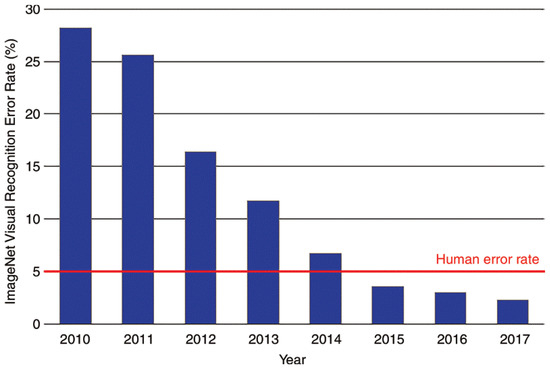

Figure 6 demonstrates the progress of DNNs in visual tasks. In approximately 2015, visual recognition using neural networks was slightly better than that of humans in specific domains; now, neural networks have surpassed humans quite significantly in several visual tests, for example, fast recognition of faces in foggy conditions. Research on how human vision works using AI that can inform future advances in artificial vision has also made strides [49]. In one of the core areas of AmI research, human-activity recognition, the application of deep-learning algorithms also achieved human-level results in recent years (see [10][50][51][52]).

Figure 6. Error rates of deep neural networks (DNNs) on the ImageNet competition over time [53].

DNNs make it possible to solve several practical tasks in real life, such as detecting cancer [54][55][56] or Alzheimer’s disease [57][58][59]. Furthermore, studies applying DNNs to assess facial properties can reveal several diseases, sexual orientation, intelligence quotient (IQ) and political preferences. One of the essential tasks of AmI is to detect the physical, mental, emotional and other states of a user [60][61][62]. In real life, it is important to provide users with the comfort required at a particular moment without demanding tedious instructions.

When will AI systems outperform humans in nearly all respects? This phenomenon is termed superintelligence (SI) or sometimes superartificial intelligence (SAI), and it is often related to singularity theory [63]. The seminal work on superintelligence is Bostrom’s Superintelligence: Paths, Dangers, Strategies [64]. Another interesting, more technically oriented analysis is presented in the book Artificial Superintelligence: A Futuristic Approach by R. V. Yampolskiy [11].

Analogous to superintelligence, there is also superambient intelligence (SAmI). SAmI is more closely tied to humans and is therefore possibly more likely to approach human-like intelligence. For example, when AmI takes care of humans and manages the environment, the user should feel as mentally and cognitively comfortable as possible; thus, AmI must understand the user’s current cognitive [65], emotional and physical states and not just environment-related properties such as energy consumption or safety. In addition, while hostile superintelligence in movies often evolves over the Web, in reality, SAmI would traverse the environment of smart devices, homes and cities even more easily. However, there seems to be no motivation for hostility between an IS and humans as long as we avoid risky activities such as autonomous weapons [66].

Finally, now might be the time for AI to repay the favor: for decades, electronics governed by Moore’s law stimulated fast AI progress, and now AI can help build better chips, computers and other electronic devices. There are several possibilities; e.g., the internal architecture of chips is rather simple and can be tuned to specific tasks such as vision or programming. Even programs themselves can already be sped up by a factor of 1000 through smart transformation of a Python program into an optimized C version. While having current systems such as GPT-3 (Generative Pre-trained Transformer 3) code such complex tasks still seems a distant prospect, the progress in recent years is more than promising [67].
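The idea behind the Python-to-C speedup can be illustrated in miniature. The sketch below is not the transformation referred to in the text and does not reproduce a factor of 1000; it only compares an explicit interpreted loop with the same computation driven by C-implemented built-ins, which usually already runs noticeably faster. All function names, including the helper `measure_speedup`, are hypothetical.

```python
import operator
import timeit


def dot_interpreted(xs, ys):
    """Dot product with an explicit loop; every step runs in the Python interpreter."""
    total = 0
    for i in range(len(xs)):
        total += xs[i] * ys[i]
    return total


def dot_builtin(xs, ys):
    """Same result, but the iteration is driven by C-implemented built-ins."""
    return sum(map(operator.mul, xs, ys))


def measure_speedup(n=100_000, repeats=20):
    """Return how many times faster dot_builtin ran than dot_interpreted."""
    xs = list(range(n))
    ys = list(range(n, 0, -1))
    # Integer inputs keep both results exact, so they must agree.
    assert dot_interpreted(xs, ys) == dot_builtin(xs, ys)
    slow = timeit.timeit(lambda: dot_interpreted(xs, ys), number=repeats)
    fast = timeit.timeit(lambda: dot_builtin(xs, ys), number=repeats)
    return slow / fast


if __name__ == "__main__":
    print(f"speedup: {measure_speedup():.1f}x")
```

The measured ratio depends on the machine and the Python version. Much larger gains of the kind mentioned above additionally require compiled code, cache-aware algorithms and parallel hardware.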

References

- Weiss, G. Multiagent Systems (Intelligent Robotics and Autonomous Agents Series); MIT: Cambridge, MA, USA, 2013; ISBN 978-0262018890.

- Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Pearson Education Limited: London, UK, 2014.

- Turing, A. Computing Machinery and Intelligence. Mind 1950, 59, 433–460.

- Arribas-Ayllon, M. Ambient Intelligence: An Innovation Narrative; Lancaster University: Lancaster, UK, 2003; Available online: http://www.academia.edu/1080720/Ambient_Intelligence_an_innovation_narrative (accessed on 4 February 2021).

- Augusto, J.C.; McCullagh, P. Ambient intelligence: Concepts and applications. Comput. Sci. Inf. Syst. 2007, 4, 1–27.

- Weiser, M. The computer for the twenty-first century. Sci. Am. 1991, 265, 94–104.

- Streitz, N.; Nixon, P. Special issue on ‘the disappearing computer’. Commun. ACM 2005, 48, 32–35.

- Daoutis, M.; Coradeschi, S.; Loutfi, A. Grounding commonsense knowledge in intelligent systems. J. Ambient. Intell. Smart Environ. 2009, 1, 311–321.

- Ramos, C.; Augusto, J.C.; Shapiro, D. Ambient intelligence—The next step for artificial intelligence. IEEE Intell. Syst. 2008, 23, 15–18.

- Nakashima, H.; Aghajan, H.; Augusto, J.C. Handbook of Ambient Intelligence and Smart Environments; Springer: New York, NY, USA, 2009.

- Yampolskiy, R.V. Artificial Superintelligence: A Futuristic Approach, 1st ed.; CRC Press: Boca Raton, FL, USA, 2015.

- Soll, J. The Information Master: Jean-Baptiste Colbert’s Secret State Intelligence System; University of Michigan Press: Ann Arbor, MI, USA, 2014.

- Artificial Intelligence in Medicine: Applications, Implications, and Limitations. Available online: http://sitn.hms.harvard.edu/flash/2019/artificial-intelligence-in-medicine-applications-implications-and-limitations/ (accessed on 4 February 2021).

- Goyal, H.; Mann, R.; Gandhi, Z.; Perisetti, A.; Ali, A.; Aman Ali, K.; Sharma, N.; Saligram, S.; Tharian, B.; Inamdar, S. Scope of Artificial Intelligence in Screening and Diagnosis of Colorectal Cancer. J. Clin. Med. 2020, 9, 3313.

- Oren, O.; Gersh, B.J.; Bhatt, D.L. Artificial intelligence in medical imaging: Switching from radiographic pathological data to clinically meaningful endpoints. Lancet Digit. Health 2020, 2, e486–e488.

- Beniger, J.R. The Control Revolution: Technological and Economic Origins of the Information Society; Harvard University Press: Cambridge, MA, USA, 1986.

- Byung-Keun, K. Internationalising the Internet: The Co-Evolution of Influence and Technology; Edward Elgar Publishing: Cheltenham, UK, 2005.

- Webster, F.V. Theories of the Information Society, 3rd ed.; Routledge: New York, NY, USA, 2006.

- WSIS: Tunis Agenda for the Information Society. Available online: http://www.itu.int/net/wsis/docs2/tunis/off/6rev1.html (accessed on 10 December 2020).

- Scholz, R. Sustainable Digital Environments: What Major Challenges Is Humankind Facing? Sustainability 2016, 8, 726.

- Global Internet Usage. Available online: https://en.wikipedia.org/wiki/Global_Internet_usage (accessed on 10 December 2020).

- Raymond, E.S. The Cathedral and the Bazaar; O’Reilly Media: Sebastopol, CA, USA, 1999.

- Wirth, N. A Plea for Lean Software. Computer 1995, 28, 64–68.

- Brooks, F.P., Jr. The Mythical Man-Month; Addison-Wesley: Boston, MA, USA, 1995.

- Shapiro, C.; Varian, H.R. Information Rules; Harvard Business Press: Brighton, MA, USA, 1999.

- Odlyzko, A.; Tilly, B. A Refutation of Metcalfe’s Law and a Better Estimate for the Value of Networks and Network Interconnections; University of Minnesota: Minneapolis, MN, USA, 2005.

- Tapscott, D. The Digital Economy: Promise and Peril in the Age of Networked Intelligence; McGraw-Hill: New York, NY, USA, 1997.

- Bits and Atoms. Available online: https://www.wired.com/1995/01/negroponte-30/ (accessed on 10 December 2020).

- Computer laws revisited (Special issue). Computer 2013, 46, 12.

- Gams, M. Intelligence in Information Society. In Enabling Society with Information Technology; Jin, Q., Li, J., Zhang, N., Cheng, J., Yu, C., Noguchi, S., Eds.; Springer: Tokyo, Japan, 2002.

- Challenge and Promise of E-Democracy. Available online: https://www.griffithreview.com/articles/challenge-and-promise-of-e-democracy/ (accessed on 10 December 2020).

- Kidd, J. Are new media democratic? Cult. Policy Crit. Manag. Res. 2011, 5, 99–109.

- Ownership Chart: The Big Six. Available online: http://files.meetup.com/17628282/Media-Big-Six.pdf (accessed on 10 December 2020).

- American Views 2020: Trust, Media and Democracy. Available online: https://knightfoundation.org/reports/american-views-2020-trust-media-and-democracy/ (accessed on 10 December 2020).

- Nagy, B.; Farmer, J.D.; Bui, Q.M.; Trancik, J.E. Statistical Basis for Predicting Technological Progress. PLoS ONE 2013, 8, e52669.

- Wright, T.P. Factors affecting the costs of airplanes. J. Aeronaut. Sci. 1936, 3, 122–128.

- Ball, P. Moore’s law is not just for computers. Nature 2013.

- Bayus, B.L. An Analysis of Product Lifetimes in a Technologically Dynamic Industry. Manag. Sci. 1998, 44, 763–775.

- Unimaginable Output: Global Production of Transistors. Available online: https://www.darrinqualman.com/global-production-transistors/ (accessed on 10 December 2020).

- Astronomers Have Found the Edge of the Milky Way at Last. Available online: https://www.sciencenews.org/article/astronomers-have-found-edge-milky-way-size (accessed on 10 December 2020).

- How Many Solar Systems Are in Our Galaxy? Available online: https://spaceplace.nasa.gov/other-solar-systems/en (accessed on 10 December 2020).

- Herculano-Houzel, S. The human brain in numbers: A linearly scaled-up primate brain. Front. Hum. Neurosci. 2009, 3, 31.

- Winston, P. Artificial Intelligence; Pearson: London, UK, 1992.

- History of AI Winters. Available online: https://www.actuaries.digital/2018/09/05/history-of-ai-winters/ (accessed on 10 December 2020).

- Moloi, T.; Marwala, T. Artificial Intelligence in Economics and Finance Theories; Springer: Berlin/Heidelberg, Germany, 2020.

- IJCAI Conference. 2017. Available online: https://ijcai-17.org (accessed on 4 February 2021).

- Jug, J.; Kolenik, T.; Ofner, A.; Farkas, I. Computational model of enactive visuospatial mental imagery using saccadic perceptual actions. Cogn. Syst. Res. 2018, 49, 157–177.

- IEEE Computational Intelligence Society. Available online: https://cis.ieee.org/ (accessed on 10 December 2020).

- 55th Anniversary of Moore’s Law. Available online: https://www.infoq.com/news/2020/04/Moores-law-55/ (accessed on 10 December 2020).

- Riding the S-Curve: The Global Uptake of Wind and Solar Power. Available online: https://www.uib.no/en/cet/127447/riding-s-curve-global-uptake-wind-and-solar-power (accessed on 10 December 2020).

- Rotman, D. We’re not prepared for the End of Moore’s Law. Available online: https://www.technologyreview.com/2020/02/24/905789/were-not-prepared-for-the-end-of-moores-law (accessed on 10 December 2020).

- Gjoreski, M.; Janko, V.; Slapničar, G.; Mlakar, M.; Reščič, N.; Bizjak, J.; Drobnič, V.; Marinko, M.; Mlakar, N.; Luštrek, M.; et al. Classical and deep learning methods for recognizing human activities and modes of transportation with smartphone sensors. Inf. Fusion 2020, 62, 47–62.

- Yun, Y.; Gu, I.Y.H. Riemannian Manifold-Valued Part-Based Features and Geodesic-Induced Kernel Machine for Human Activity Classification Dedicated to Assisted Living. Comput. Vis. Image Underst. 2017, 161, 65–76.

- The 4 Deep Learning Breakthroughs You Should Know about. Available online: https://towardsdatascience.com/the-5-deep-learning-breakthroughs-you-should-know-about-df27674ccdf2 (accessed on 10 December 2020).

- Zhang, X.; Tian, Q.; Wang, L.; Liu, Y.; Li, B.; Liang, Z.; Gao, P.; Zheng, K.; Zhao, B.; Lu, H. Radiomics Strategy for Molecular Subtype Stratification of Lower-Grade Glioma: Detecting IDH and TP53 Mutations Based on Multimodal MRI. J. Magn. Reson. Imaging 2018, 48, 916–926.

- Ge, C.; Gu, I.Y.H.; Jakola, A.S.; Yang, J. Deep Learning and Multi-Sensor Fusion for Glioma Classification using Multistream 2D Convolutional Networks. In Proceedings of the 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC’18), Honolulu, HI, USA, 18–21 July 2018; pp. 5894–5897.

- Chang, K.; Bai, H.X.; Zhou, H.; Su, C.; Bi, W.L.; Agbodza, E.; Kavouridis, V.K.; Senders, J.T.; Boaro, A.; Beers, A.; et al. Residual Convolutional Neural Network for the Determination of IDH Status in Low- and High-Grade Gliomas from MR Imaging. Clin. Cancer Res. 2018, 24, 1073–1081.

- Eye Scans to Detect Cancer and Alzheimer’s Disease. Available online: https://spectrum.ieee.org/the-human-os/biomedical/diagnostics/eye-scans-to-detect-cancer-and-alzheimers-disease (accessed on 10 December 2020).

- Sarraf, S.; Tofighi, G. DeepAD: Alzheimer’s disease classification via deep convolutional neural networks using MRI and fMRI. bioRxiv 2016.

- Bäckström, K.; Nazari, M.; Gu, I.Y.H.; Jakola, A.S. An efficient 3D deep convolutional network for Alzheimer’s disease diagnosis using MR images. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging, Washington, DC, USA, 4–7 April 2018.

- Pejović, V.; Gjoreski, M.; Anderson, C.; David, K.; Luštrek, M. Toward Cognitive Load Inference for Attention Management in Ubiquitous Systems. IEEE Pervasive Comput. 2020, 19, 35–45.

- Gjoreski, M.; Kolenik, T.; Knez, T.; Luštrek, M.; Gams, M.; Gjoreski, H.; Pejović, V. Datasets for Cognitive Load Inference Using Wearable Sensors and Psychological Traits. Appl. Sci. 2020, 10, 3843.

- Kolenik, T.; Gams, M. Persuasive Technology for Mental Health: One Step Closer to (Mental Health Care) Equality? IEEE Technol. Soc. Mag. 2020.

- Kurzweil, R. The Singularity Is Near: When Humans Transcend Biology; Penguin Books: London, UK, 2006.

- Bostrom, N. Superintelligence—Paths, Dangers, Strategies; Oxford University Press: Oxford, UK, 2014.

- Kolenik, T. Seeking after the Glitter of Intelligence in the Base Metal of Computing: The Scope and Limits of Computational Models in Researching Cognitive Phenomena. Interdiscip. Descr. Complex Syst. 2018, 16, 545–557.

- Beard, J.M. Autonomous Weapons and Human Responsibilities. Georget. J. Int. Law 2014, 45, 617.

- Will the Latest AI Kill Coding? Available online: https://towardsdatascience.com/will-gpt-3-kill-coding-630e4518c04d (accessed on 10 December 2020).