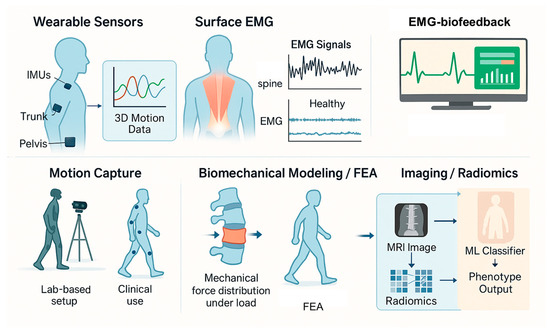

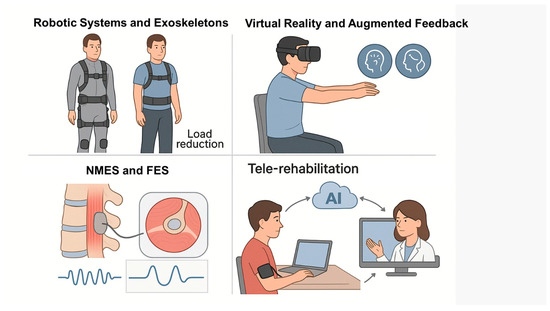

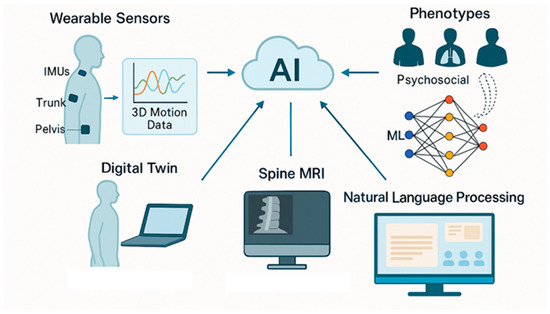

Low back pain (LBP) remains one of the most prevalent and disabling musculoskeletal conditions worldwide, with profound social, economic, and healthcare implications. Its rising incidence and frequently chronic course highlight the need for more objective, personalized, and effective approaches to assessment and rehabilitation. In this context, bioengineering has emerged as a transformative field, offering novel tools and methodologies that enhance the understanding and management of LBP. This narrative review examines current bioengineering applications in both the diagnostic and therapeutic domains. For assessment, technologies such as wearable inertial sensors, three-dimensional motion capture systems, surface electromyography, and biomechanical modeling provide quantitative, and in some cases real-time, insights into posture, movement patterns, and muscle activity. On the therapeutic front, innovations including robotic exoskeletons, neuromuscular electrical stimulation, virtual reality-based rehabilitation, and tele-rehabilitation platforms are increasingly being integrated into multimodal treatment protocols. These technologies support precision medicine by tailoring interventions to each patient’s biomechanical and functional profile. Furthermore, incorporating artificial intelligence into clinical workflows enables automated data analysis, predictive modeling, and decision support systems, while emerging approaches such as digital twin technology hold promise for personalized simulation and outcome forecasting. Although these advances are promising, further validation in large-scale, real-world settings is required to ensure their safety, efficacy, and equitable accessibility. Ultimately, bioengineering provides a multidimensional, data-driven framework with the potential to substantially improve the assessment, rehabilitation, and overall management of LBP.

- low back pain

- bioengineering

- wearable sensors

- electromyography

- virtual reality

- artificial intelligence

- rehabilitation

1. Introduction

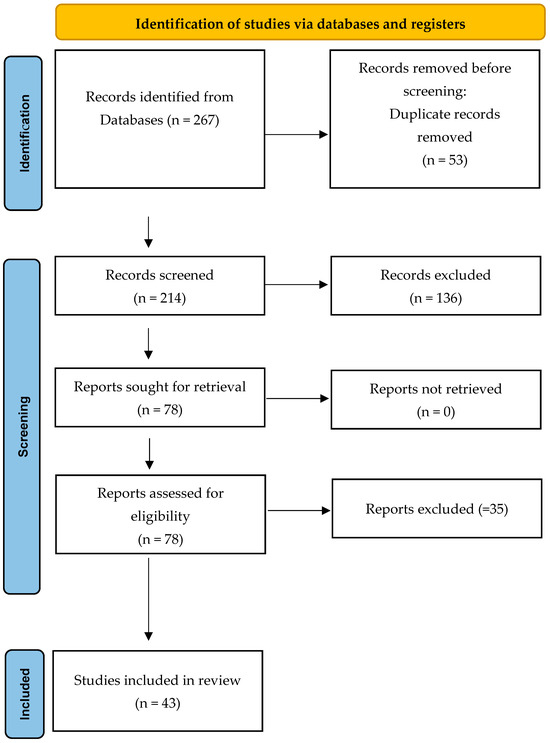

2. Materials and Methods

3. Results

| Bioengineering Domain | Applications in LBP | No. of Relevant Studies |
|---|---|---|
| Wearable Sensors | Real-time monitoring of posture and movement | 8 |
| Surface EMG | Assessment of muscle activity and fatigue | 6 |
| Motion Capture | Quantification of functional tasks and gait | 5 |
| Biomechanical Modeling | Simulation of spinal loads and tissue stress | 6 |
| Robotic Rehabilitation Systems | Automated, adaptive therapeutic support | 5 |
| Advanced Imaging | Quantitative biomarkers for diagnosis and progression | 7 |
| AI/Machine Learning | Predictive analytics, phenotyping, decision support | 6 |

3.1. Bioengineering in the Assessment of LBP

3.1.1. Wearable Sensors

3.1.2. Surface Electromyography (sEMG)

3.1.3. Motion Capture and Gait Analysis

3.1.4. Biomechanical Modeling and Finite Element Analysis (FEA)

3.1.5. Imaging Biomarkers and Radiomics

3.2. Bioengineering in Rehabilitation of LBP

3.2.1. Robotic Rehabilitation Systems

3.2.2. Virtual Reality and Augmented Feedback

3.2.3. Neuromuscular Electrical Stimulation (NMES) and Functional Electrical Stimulation (FES)

3.2.4. Computer-Guided Exercise Programs and Tele-Rehabilitation (TR)

3.2.5. Advanced Human-Robot Interfaces and Adaptive Control Systems

3.3. Integrating Artificial Intelligence (AI) and Machine Learning (ML)

4. Discussion

Challenges, Future Directions, and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| CLBP | chronic low back pain |
| CSA | cross-sectional area |
| CT | computed tomography |
| DTI | diffusion tensor imaging |
| EMG | electromyography |
| FEA | finite element analysis |
| FES | functional electrical stimulation |
| IMUs | inertial measurement units |
| LBP | low back pain |
| MeSH | Medical Subject Headings |
| ML | machine learning |
| MRI | magnetic resonance imaging |
| NMES | neuromuscular electrical stimulation |
| ODI | Oswestry Disability Index |
| PROMs | patient-reported outcome measures |
| ROM | range of motion |
| SANRA | Scale for the Assessment of Narrative Review Articles |
| sEMG | surface electromyography |
| TR | tele-rehabilitation |
| VAS | Visual Analog Scale |
| VR | virtual reality |
| YLDs | years lived with disability |

References

- Balagué, F.; Mannion, A.F.; Pellisé, F.; Cedraschi, C. Non-specific low back pain. Lancet 2012, 379, 482–491. [Google Scholar] [CrossRef]

- Danilov, A.; Danilov, A.; Badaeva, A.; Kosareva, A.; Popovskaya, K.; Novikov, V. State-of-the-Art Personalized Therapy Approaches for Chronic Non-Specific Low Back Pain: Understanding the Mechanisms and Drivers. Pain Ther. 2025, 14, 479–496. [Google Scholar] [CrossRef]

- Wu, A.; March, L.; Zheng, X.; Huang, J.; Wang, X.; Zhao, J.; Blyth, F.M.; Smith, E.; Buchbinder, R.; Hoy, D. Global low back pain prevalence and years lived with disability from 1990 to 2017: Estimates from the Global Burden of Disease Study 2017. Ann. Transl. Med. 2020, 8, 299. [Google Scholar] [CrossRef]

- Varrassi, G.; Hanna, M.; Coaccioli, S.; Fabrizzi, P.; Baldini, S.; Kruljac, I.; Brotons, C.; Perrot, S. Dexketoprofen Trometamol and Tramadol Hydrochloride Fixed-Dose Combination in Moderate to Severe Acute Low Back Pain: A Phase IV, Randomized, Parallel Group, Placebo, Active-Controlled Study (DANTE). Pain Ther. 2024, 13, 1007–1022. [Google Scholar] [CrossRef] [PubMed]

- Trachsel, M.; Trippolini, M.A.; Jermini-Gianinazzi, I.; Tochtermann, N.; Rimensberger, C.; Hubacher, V.N.; Blum, M.R.; Wertli, M.M. Diagnostics and treatment of acute non-specific low back pain: Do physicians follow the guidelines? Swiss Med. Wkly. 2025, 155, 3697. [Google Scholar] [CrossRef] [PubMed]

- Brinjikji, W.; Luetmer, P.H.; Comstock, B.; Bresnahan, B.W.; Chen, L.E.; Deyo, R.A.; Halabi, S.; Turner, J.A.; Avins, A.L.; James, K.; et al. Systematic literature review of imaging features of spinal degeneration in asymptomatic populations. Am. J. Neuroradiol. 2015, 36, 811–816. [Google Scholar] [CrossRef]

- Taheri, N.; Becker, L.; Fleig, L.; Schömig, F.; Hoehl, B.U.; Cordes, L.M.S.; Grittner, U.; Mödl, L.; Schmidt, H.; Pumberger, M. Objective and subjective assessment of back shape and function in persons with and without low back pain. Sci. Rep. 2025, 15, 20105. [Google Scholar] [CrossRef]

- Garofano, M.; Del Sorbo, R.; Calabrese, M.; Giordano, M.; Di Palo, M.P.; Bartolomeo, M.; Ragusa, C.M.; Ungaro, G.; Fimiani, G.; Di Spirito, F.; et al. Remote Rehabilitation and Virtual Reality Interventions Using Motion Sensors for Chronic Low Back Pain: A Systematic Review of Biomechanical, Pain, Quality of Life, and Adherence Outcomes. Technologies 2025, 13, 186. [Google Scholar] [CrossRef]

- Srhoj-Egekher, V.; Cifrek, M.; Peharec, S. Feature Modeling for Interpretable Low Back Pain Classification Based on Surface EMG. IEEE Access 2022, 10, 73702–73727. [Google Scholar] [CrossRef]

- Ju, B.; Mark, J.I.; Youn, S.; Ugale, P.; Sennik, B.; Adcock, B.; Mills, A.C. Feasibility assessment of textile electromyography sensors for a wearable telehealth biofeedback system. Wearable Technol. 2025, 6, e26. [Google Scholar] [CrossRef]

- Bonato, P.; Feipel, V.; Corniani, G.; Arin-Bal, G.; Leardini, A. Position paper on how technology for human motion analysis and relevant clinical applications have evolved over the past decades: Striking a balance between accuracy and convenience. Gait Posture 2024, 113, 191–203. [Google Scholar] [CrossRef]

- Ahmadi, M.; Zhang, X.; Lin, M.; Tang, Y.; Engeberg, E.D.; Hashemi, J.; Vrionis, F.D. Automated Finite Element Modeling of the Lumbar Spine: A Biomechanical and Clinical Approach to Spinal Load Distribution and Stress Analysis. World Neurosurg. 2025, 201, 124236. [Google Scholar] [CrossRef] [PubMed]

- Luder, T.; Meier, M.; Neuweiler, R.; Lambercy, O. Evaluation of the support provided by a soft passive exoskeleton in individuals with back pain. Appl. Ergon. 2025, 127, 104514. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.; Sun, S.; Wang, N.; Fang, X.; Xie, L.; Hu, F.; Xi, Z. Exploring the correlation between magnetic resonance diffusion tensor imaging (DTI) parameters and aquaporin expression and biochemical composition content in degenerative intervertebral disc nucleus pulposus tissue: A clinical experimental study. BMC Musculoskelet. Disord. 2025, 26, 157. [Google Scholar] [CrossRef]

- Yang, Z.; Liang, X.; Ji, Y.; Zeng, W.; Wang, Y.; Zhang, Y.; Zhou, F. Hippocampal Functional Radiomic Features for Identification of the Cognitively Impaired Patients from Low-Back-Related Pain: A Prospective Machine Learning Study. J. Pain Res. 2025, 18, 271–282. [Google Scholar] [CrossRef]

- Baethge, C.; Goldbeck-Wood, S.; Mertens, S. SANRA-a scale for the quality assessment of narrative review articles. Res. Integr. Peer Rev. 2019, 4, 5. [Google Scholar] [CrossRef]

- Sheeran, L.; Al-Amri, M.; Sparkes, V.; Davies, J.L. Assessment of Spinal and Pelvic Kinematics Using Inertial Measurement Units in Clinical Subgroups of Persistent Non-Specific Low Back Pain. Sensors 2024, 24, 2127. [Google Scholar] [CrossRef]

- Molnar, M.; Kok, M.; Engel, T.; Kaplick, H.; Mayer, F.; Seel, T. A method for lower back motion assessment using wearable 6D inertial sensors. In Proceedings of the 2018 21st International Conference on Information Fusion (FUSION), Cambridge, UK, 10–13 July 2018. [Google Scholar]

- van Amstel, R.N.; Dijk, I.E.; Noten, K.; Weide, G.; Jaspers, R.T.; Pool-Goudzwaard, A.L. Wireless inertial measurement unit-based methods for measuring lumbopelvic-hip range of motion are valid compared with optical motion capture as golden standard. Gait Posture 2025, 120, 72–80. [Google Scholar] [CrossRef]

- Bailes, A.H.; Johnson, M.; Roos, R.; Clark, W.; Cook, H.; McKernan, G.; Sowa, G.A.; Cham, R.; Bell, K.M. Assessing the Reliability and Validity of Inertial Measurement Units to Measure Three-Dimensional Spine and Hip Kinematics During Clinical Movement Tasks. Sensors 2024, 24, 6580. [Google Scholar] [CrossRef]

- Sibson, B.E.; Banks, J.J.; Yawar, A.; Yegian, A.K.; Anderson, D.E.; Lieberman, D.E. Using inertial measurement units to estimate spine joint kinematics and kinetics during walking and running. Sci. Rep. 2024, 14, 234. [Google Scholar] [CrossRef]

- McClintock, F.A.; Callaway, A.J.; Clark, C.J.; Williams, J.M. Validity and reliability of inertial measurement units used to measure motion of the lumbar spine: A systematic review of individuals with and without low back pain. Med. Eng. Phys. 2024, 126, 104146. [Google Scholar] [CrossRef]

- Triantafyllou, A.; Papagiannis, G.; Stasi, S.; Bakalidou, D.; Kyriakidou, M.; Papathanasiou, G.; Papadopoulos, E.C.; Papagelopoulos, P.J.; Koulouvaris, P. Application of Wearable Sensors Technology for Lumbar Spine Kinematic Measurements during Daily Activities following Microdiscectomy Due to Severe Sciatica. Biology 2022, 11, 398. [Google Scholar] [CrossRef] [PubMed]

- Liew, B.X.W.; Crisafulli, O.; Evans, D.W. Quantifying lumbar sagittal plane kinematics using a wrist-worn inertial measurement unit. Front. Sports Act. Living 2024, 6, 1381020. [Google Scholar] [CrossRef]

- Arvanitidis, M.; Jiménez-Grande, D.; Haouidji-Javaux, N.; Falla, D.; Martinez-Valdes, E. People with chronic low back pain display spatial alterations in high-density surface EMG-torque oscillations. Sci. Rep. 2022, 12, 15178. [Google Scholar] [CrossRef]

- Shigetoh, H.; Nishi, Y.; Osumi, M.; Morioka, S. Combined abnormal muscle activity and pain-related factors affect disability in patients with chronic low back pain: An association rule analysis. PLoS ONE 2020, 15, e0244111. [Google Scholar] [CrossRef]

- Moissenet, F.; Rose-Dulcina, K.; Armand, S.; Genevay, S. A systematic review of movement and muscular activity biomarkers to discriminate non-specific chronic low back pain patients from an asymptomatic population. Sci. Rep. 2021, 11, 5850. [Google Scholar] [CrossRef] [PubMed]

- Ebenbichler, G.; Habenicht, R.; Ziegelbecker, S.; Kollmitzer, J.; Mair, P.; Kienbacher, T. Age- and sex-specific effects in paravertebral surface electromyographic back extensor muscle fatigue in chronic low back pain. Geroscience 2020, 42, 251–269. [Google Scholar] [CrossRef] [PubMed]

- Kusche, R.; Graßhoff, J.; Oltmann, A.; Boudnik, L.; Rostalski, P. A Robust Multi-Channel EMG System for Lower Back and Abdominal Muscles Training. Curr. Dir. Biomed. Eng. 2021, 7, 159–162. [Google Scholar] [CrossRef]

- Frasie, A.; Massé-Alarie, H.; Bielmann, M.; Gauthier, N.; Roudjane, M.; Pagé, I.; Gosselin, B.; Roy, J.S.; Messaddeq, Y.; Bouyer, L.J. Potential of a New, Flexible Electrode sEMG System in Detecting Electromyographic Activation in Low Back Muscles during Clinical Tests: A Pilot Study on Wearables for Pain Management. Sensors 2024, 24, 4510. [Google Scholar] [CrossRef] [PubMed]

- McMullin, P.; Emmett, D.; Gibbons, A.; Clingo, K.; Higbee, P.; Sykes, A.; Fullwood, D.T.; Mitchell, U.H.; Bowden, A.E. Dynamic segmental kinematics of the lumbar spine during diagnostic movements. Front. Bioeng. Biotechnol. 2023, 11, 1209472. [Google Scholar] [CrossRef]

- Lunde, L.K.; Koch, M.; Merkus, S.L.; Knardahl, S.; Wærsted, M.; Veiersted, K.B. Associations of objectively measured forward bending at work with low-back pain intensity: A 2-year follow-up of construction and healthcare workers. Occup. Environ. Med. 2019, 76, 660–667. [Google Scholar] [CrossRef] [PubMed]

- Morikawa, T.; Mura, N.; Sato, T.; Katoh, H. Validity of the estimated angular information obtained using an inertial motion capture system during standing trunk forward and backward bending. BMC Sports Sci. Med. Rehabil. 2024, 16, 154. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Z.; Zou, J.; Lu, P.; Hu, J.; Cai, Y.; Xiao, C.; Li, G.; Zeng, Q.; Zheng, M.; Huang, G. Analysis of lumbar spine loading during walking in patients with chronic low back pain and healthy controls: An OpenSim-Based study. Front. Bioeng. Biotechnol. 2024, 12, 1377767. [Google Scholar] [CrossRef] [PubMed]

- Xu, X.; Sekiguchi, Y.; Honda, K.; Izumi, S.I. Motion analysis of 3D multi-segmental spine during gait in symptom remission people with low back pain: A pilot study. BMC Musculoskelet. Disord. 2025, 26, 269. [Google Scholar] [CrossRef]

- Dreischarf, M.; Zander, T.; Shirazi-Adl, A.; Puttlitz, C.M.; Adam, C.J.; Chen, C.S.; Goel, V.K.; Kiapour, A.; Kim, Y.H.; Labus, K.M.; et al. Comparison of eight published static finite element models of the intact lumbar spine: Predictive power of models improves when combined together. J. Biomech. 2014, 47, 1757–1766. [Google Scholar] [CrossRef]

- Khuyagbaatar, B.; Kim, K.; Kim, Y.H. Recent developments in finite element analysis of the lumbar spine. Int. J. Precis. Eng. Manuf. 2024, 25, 487–496. [Google Scholar]

- Patel, A.; Dada, A.; Saggi, S.; Yamada, H.; Ambati, V.S.; Goldstein, E.; Hsiao, E.C.; Mummaneni, P.V. Personalized Approaches to Spine Surgery. Int. J. Spine Surg. 2024, 18, 676–693. [Google Scholar] [CrossRef]

- Escamilla-Ugarte, R.; Alberola-Zorrilla, P.; Díaz-Benito, V.J.; Sánchez-Zuriaga, D. Pain Perception and Lumbar Biomechanics Are Modified After the Biering-Sorensen Maneuver in Patients with Low Back Pain. Int. J. Clin. Pract. 2025, 2025, 6471144. [Google Scholar] [CrossRef]

- Ahmadi, M.; Biswas, D.; Paul, R.; Lin, M.; Tang, Y.; Cheema, T.S.; Engeberg, E.D.; Hashemi, J.; Vrionis, F.D. Integrating finite element analysis and physics-informed neural networks for biomechanical modeling of the human lumbar spine. N. Am. Spine Soc. J. (NASSJ) 2025, 22, 100598. [Google Scholar] [CrossRef]

- Kozinc, Ž.; Babič, J.; Šarabon, N. Comparison of Subjective Responses of Low Back Pain Patients and Asymptomatic Controls to Use of Spinal Exoskeleton during Simple Load Lifting Tasks: A Pilot Study. Int. J. Environ. Res. Public Health 2020, 18, 161. [Google Scholar] [CrossRef]

- Quirk, D.A.; Chung, J.; Schiller, G.; Cherin, J.M.; Arens, P.; Sherman, D.A.; Zeligson, E.R.; Dalton, D.M.; Awad, L.N.; Walsh, C.J. Reducing Back Exertion and Improving Confidence of Individuals with Low Back Pain with a Back Exosuit: A Feasibility Study for Use in BACPAC. Pain Med. 2023, 24, S175–S186. [Google Scholar] [CrossRef]

- Chung, J.; Quirk, D.A.; Applegate, M.; Rouleau, M.; Degenhardt, N.; Galiana, I.; Dalton, D.; Awad, L.N.; Conor, J.; Walsh, C.J. Lightweight active back exosuit reduces muscular effort during an hour-long order picking task. Commun. Eng. 2024, 3, 35. [Google Scholar] [CrossRef]

- Poliero, T.; Lazzaroni, M.; Toxiri, S.; Di Natali, C.; Caldwell, D.G.; Ortiz, J. Applicability of an Active Back-Support Exoskeleton to Carrying Activities. Front. Robot. AI 2020, 7, 579963. [Google Scholar] [CrossRef]

- Ketola, J.H.J.; Inkinen, S.I.; Karppinen, J.; Niinimäki, J.; Tervonen, O.; Nieminen, M.T. T2-weighted magnetic resonance imaging texture as predictor of low back pain: A texture analysis-based classification pipeline to symptomatic and asymptomatic cases. J. Orthop. Res. 2021, 39, 2428–2438. [Google Scholar] [CrossRef]

- Saravi, B.; Zink, A.; Ülkümen, S.; Couillard-Despres, S.; Wollborn, J.; Lang, G.; Hassel, F. Clinical and radiomics feature-based outcome analysis in lumbar disc herniation surgery. BMC Musculoskelet. Disord. 2023, 24, 791. [Google Scholar] [CrossRef] [PubMed]

- Shady, M.M.; Abd El-Rahman, R.M.; Saied, A.M.M.; Taman, S.E. Role of magnetic resonance diffusion tensor imaging in assessment of back muscles in young adults with chronic low back pain. Egypt. J. Radiol. Nucl. Med. 2023, 54, 143. [Google Scholar] [CrossRef]

- Singh, R.; Khare, N.; Aggarwal, S.; Jain, M.; Kaur, S.; Singh, H.D. A Prospective Study to Evaluate the Clinical and Diffusion Tensor Imaging (DTI) Correlation in Patients with Lumbar Disc Herniation with Radiculopathy. Spine Surg. Relat. Res. 2022, 7, 257–267. [Google Scholar] [CrossRef] [PubMed]

- Chiou, S.Y.; Hellyer, P.J.; Sharp, D.J.; Newbould, R.D.; Patel, M.C.; Strutton, P.H. Relationships between the integrity and function of lumbar nerve roots as assessed by diffusion tensor imaging and neurophysiology. Neuroradiology 2017, 59, 893–903. [Google Scholar] [CrossRef] [PubMed]

- Acquah, G.; Sule, D.S.; Fesi, L.; Kyei, K.A.; Ago, J.L.; Agala, D.; Antwi, W.K. Magnetic resonance imaging findings in Ghanaian patients presenting with low back pain: A single centre study. BMC Med. Imaging 2025, 25, 135. [Google Scholar] [CrossRef]

- McSweeney, T.; Tiulpin, A.; Kowlagi, N.; Määttä, J.; Karppinen, J.; Saarakkala, S. Robust Radiomic Signatures of Intervertebral Disc Degeneration from MRI. Spine, 2025; in press. [Google Scholar]

- Liu, Z.; Hicks, Y.; Sheeran, L. Backtracker: Machine Learning to Identify Kinematic Phenotypes for Personalised Exercise Management in Non-Specific Low Back Pain. SSRN Work. Pap. 2025. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5295721 (accessed on 19 August 2025).

- Paccini, M.; Cammarasana, S.; Patanè, G. Framework of a multiscale data-driven digital twin of the muscle-skeletal system. arXiv 2025, arXiv:2506.11821. [Google Scholar]

- Miehling, J.; Choisne, J.; Koelewijn, A.D. Editorial: Human digital twins for medical and product engineering. Front. Bioeng. Biotechnol. 2024, 12, 1489975. [Google Scholar] [CrossRef]

- Tagliaferri, S.D.; Angelova, M.; Zhao, X.; Owen, P.J.; Miller, C.T.; Wilkin, T.; Belavy, D.L. Artificial intelligence to improve back pain outcomes and lessons learnt from clinical classification approaches: Three systematic reviews. NPJ Digit. Med. 2020, 3, 93. [Google Scholar] [CrossRef]

- Ortigoso-Narro, J.; Diaz-de-Maria, F.; Dehshibi, M.M.; Tajadura-Jiménez, A. L-SFAN: Lightweight Spatially-Focused Attention Network for Pain Behavior Detection. IEEE Sens. J. 2025; in press. [Google Scholar]

- Keller, A.V.; Torres-Espin, A.; Peterson, T.A.; Booker, J.; O’Neill, C.; Lotz, J.C.; Bailey, J.F.; Ferguson, A.R.; Matthew, R.P. Unsupervised Machine Learning on Motion Capture Data Uncovers Movement Strategies in Low Back Pain. Front. Bioeng. Biotechnol. 2022, 10, 868684. [Google Scholar] [CrossRef]

- Herrero, P.; Ríos-Asín, I.; Lapuente-Hernández, D.; Pérez, L.; Calvo, S.; Gil-Calvo, M. The Use of Sensors to Prevent, Predict Transition to Chronic and Personalize Treatment of Low Back Pain: A Systematic Review. Sensors 2023, 23, 7695. [Google Scholar] [CrossRef] [PubMed]

- Berger, M.; Bertrand, A.M.; Robert, T.; Chèze, L. Measuring objective physical activity in people with chronic low back pain using accelerometers: A scoping review. Front. Sports Act. Living 2023, 5, 1236143. [Google Scholar] [CrossRef] [PubMed]

- Karimi, M.T.; Zahraee, M.H.; Bahramizadeh, M.; Khaliliyan, H.; Ansari, M.; Ghaffari, F.; Sharafatvaziri, A. A comparative study of kinematic and kinetic analysis of gait in patients with non-specific low back pain versus healthy controls. Health 2025, 2, 107–116. [Google Scholar]

- Baker, S.A.; Billmire, D.A.; Bilodeau, R.A.; Emmett, D.; Gibbons, A.K.; Mitchell, U.H.; Bowden, A.E.; Fullwood, D.T. Wearable Nanocomposite Sensor System for Motion Phenotyping Chronic Low Back Pain: A BACPAC Technology Research Site. Pain Med. 2023, 24, S160–S174. [Google Scholar] [CrossRef]

- Kapil, D.; Wang, J.; Olawade, D.B.; Vanderbloemen, L. AI-Assisted Physiotherapy for Patients with Non-Specific Low Back Pain: A Systematic Review and Meta-Analysis. Appl. Sci. 2025, 15, 1532. [Google Scholar] [CrossRef]

- Han, X.; Guffanti, D.; Brunete, A. A Comprehensive Review of Vision-Based Sensor Systems for Human Gait Analysis. Sensors 2025, 25, 498. [Google Scholar] [CrossRef]

- Mohammadi Moghadam, S.; Ortega Auriol, P.; Yeung, T.; Choisne, J. 3D gait analysis in children using wearable sensors: Feasibility of predicting joint kinematics and kinetics with personalized machine learning models and inertial measurement units. Front. Bioeng. Biotechnol. 2024, 12, 1372669. [Google Scholar] [CrossRef]

- Muñoz-Gómez, E.; McClintock, F.; Callaway, A.; Clark, C.; Alqhtani, R.; Williams, J. Towards the Real-World Analysis of Lumbar Spine Standing Posture in Individuals with Low Back Pain: A Cross-Sectional Observational Study. Sensors 2025, 25, 2983. [Google Scholar] [CrossRef] [PubMed]

- Poitras, I.; Dupuis, F.; Bielmann, M.; Campeau-Lecours, A.; Mercier, C.; Bouyer, L.J.; Roy, J.S. Validity and reliability of wearable sensors for joint angle estimation: A systematic review. Sensors 2019, 19, 1555. [Google Scholar] [CrossRef] [PubMed]

- Robert-Lachaine, X.; Mecheri, H.; Larue, C.; Plamondon, A. Validation of inertial measurement units with an optoelectronic system for whole-body motion analysis. Med. Biol. Eng. Comput. 2017, 55, 609–619. [Google Scholar] [CrossRef]

- Farì, G.; Megna, M.; Ranieri, M.; Agostini, F.; Ricci, V.; Bianchi, F.P.; Rizzo, L.; Farì, E.; Tognolo, L.; Bonavolontà, V.; et al. Could the Improvement of Supraspinatus Muscle Activity Speed up Shoulder Pain Rehabilitation Outcomes in Wheelchair Basketball Players? Int. J. Environ. Res. Public Health 2022, 20, 255. [Google Scholar] [CrossRef] [PubMed]

- Lathlean, T.J.H.; Ramachandran, A.K.; Sim, S.; Whittle, I.R. The clinical utility and reliability of surface electromyography in individuals with chronic low back pain: A systematic review. J. Clin. Neurosci. 2024, 129, 110877. [Google Scholar] [CrossRef]

- Medagedara, M.H.; Ranasinghe, A.; Lalitharatne, T.D.; Gopura, R.A.R.C.; Nandasiri, G.K. Advancements in Textile-Based sEMG Sensors for Muscle Fatigue Detection: A Journey from Material Evolution to Technological Integration. ACS Sens. 2024, 9, 4380–4401. [Google Scholar] [CrossRef]

- Yassin, M.M.; Saad, M.N.; Khalifa, A.M.; Said, A.M. Advancing clinical understanding of surface electromyography biofeedback: Bridging research, teaching, and commercial applications. Expert Rev. Med. Devices 2024, 21, 709–726. [Google Scholar] [CrossRef]

- Farì, G.; Megna, M.; Fiore, P.; Ranieri, M.; Marvulli, R.; Bonavolontà, V.; Bianchi, F.P.; Puntillo, F.; Varrassi, G.; Reis, V.M. Real-Time Muscle Activity and Joint Range of Motion Monitor to Improve Shoulder Pain Rehabilitation in Wheelchair Basketball Players: A Non-Randomized Clinical Study. Clin. Pract. 2022, 12, 1092–1101. [Google Scholar] [CrossRef]

- De Luca, C.J.; Gilmore, L.D.; Kuznetsov, M.; Roy, S.H. Filtering the surface EMG signal: Movement artifact and baseline noise contamination. J. Biomech. 2010, 43, 1573–1579. [Google Scholar] [CrossRef]

- Dankaerts, W.; O’Sullivan, P.B.; Burnett, A.F.; Straker, L.M.; Davey, P.; Gupta, R. Discriminating healthy controls and two clinical subgroups of nonspecific chronic low back pain patients using trunk muscle activation and lumbosacral kinematics of postures and movements. Spine 2009, 34, 1610–1618. [Google Scholar] [CrossRef] [PubMed]

- Nordander, C.; Willner, J.; Hansson, G.A.; Larsson, B.; Unge, J.; Granquist, L.; Skerfving, S. Influence of the subcutaneous fat layer, as measured by ultrasound, skinfold calipers and BMI, on the EMG amplitude. Eur. J. Appl. Physiol. 2003, 89, 514–519. [Google Scholar] [CrossRef] [PubMed]

- Roggio, F.; Trovato, B.; Sortino, M.; Musumeci, G. A comprehensive analysis of the machine learning pose estimation models used in human movement and posture analyses: A narrative review. Heliyon 2024, 10, e39977. [Google Scholar] [CrossRef]

- Afzal, H.M.R.; Louhichi, B.; Alrasheedi, N.H. Challenges in Combining EMG, Joint Moments, and GRF from Marker-Less Video-Based Motion Capture Systems. Bioengineering 2025, 12, 461. [Google Scholar] [CrossRef]

- Giglio, M.; Farì, G.; Preziosa, A.; Corriero, A.; Grasso, S.; Varrassi, G.; Puntillo, F. Low Back Pain and Radiofrequency Denervation of Facet Joint: Beyond Pain Control-A Video Recording. Pain Ther. 2023, 12, 879–884. [Google Scholar] [CrossRef]

- Edriss, S.; Romagnoli, C.; Caprioli, L.; Zanela, A.; Panichi, E.; Campoli, F.; Padua, E.; Annino, G.; Bonaiuto, V. The role of emergent technologies in the dynamic and kinematic assessment of human movement in sport and clinical applications. Appl. Sci. 2024, 14, 1012. [Google Scholar] [CrossRef]

- Scataglini, S.; Abts, E.; Van Bocxlaer, C.; Van den Bussche, M.; Meletani, S.; Truijen, S. Accuracy, Validity, and Reliability of Markerless Camera-Based 3D Motion Capture Systems versus Marker-Based 3D Motion Capture Systems in Gait Analysis: A Systematic Review and Meta-Analysis. Sensors 2024, 24, 3686. [Google Scholar] [CrossRef]

- Carse, B.; Meadows, B.; Bowers, R.; Rowe, P. Affordable clinical gait analysis: An assessment of the marker tracking accuracy of a new low-cost optical 3D motion analysis system. Physiotherapy 2013, 99, 347–351. [Google Scholar] [CrossRef]

- Vendeuvre, T.; Germaneau, A. Biomechanical Insights and Innovations in Spinal Pathology and Surgical Interventions. In Spine Surgery; SFCR Experts Series; Barrey, C.Y., Ed.; Springer: Cham, Switzerland, 2025; pp. 47–62. [Google Scholar]

- Rivaroli, S.; Lippi, L.; Pogliana, D.; Turco, A.; de Sire, A.; Invernizzi, M. Biomechanical Changes Related to Low Back Pain: An Innovative Tool for Movement Pattern Assessment and Treatment Evaluation in Rehabilitation. J. Vis. Exp. 2024, 214, e67006. [Google Scholar] [CrossRef]

- Eubank, B.H.F.; Martyn, J.; Schneider, G.M.; McMorland, G.; Lackey, S.W.; Zhao, X.R.; Slomp, M.; Werle, J.R.; Robert, J.; Thomas, K.C. Consensus for a primary care clinical decision-making tool for assessing, diagnosing, and managing low back pain in Alberta, Canada. J. Evid. Based Med. 2024, 17, 224–234. [Google Scholar] [CrossRef]

- Daroudi, S.; Arjmand, N.; Mohseni, M.; El-Rich, M.; Parnianpour, M. Evaluation of ground reaction forces and centers of pressure predicted by AnyBody Modeling System during load reaching/handling activities and effects of the prediction errors on model-estimated spinal loads. J. Biomech. 2024, 164, 111974. [Google Scholar] [CrossRef] [PubMed]

- van den Bogert, A.J.; Blana, D.; Heinrich, D. Implicit methods for efficient musculoskeletal simulation and optimal control. Procedia IUTAM 2011, 2, 297–316. [Google Scholar] [CrossRef]

- Jones, A.C.; Wilcox, R.K. Finite element analysis of the spine: Towards a framework of verification, validation and sensitivity analysis. Med. Eng. Phys. 2008, 30, 1287–1304. [Google Scholar] [CrossRef]

- Varrassi, G.; Moretti, B.; Pace, M.C.; Evangelista, P.; Iolascon, G. Common Clinical Practice for Low Back Pain Treatment: A Modified Delphi Study. Pain Ther. 2021, 10, 589–604. [Google Scholar] [CrossRef] [PubMed]

- Rajasekaran, S.; Bt, P.; Murugan, C.; Mengesha, M.G.; Easwaran, M.; Naik, A.S.; Ks, S.V.A.; Kanna, R.M.; Shetty, A.P. The disc-endplate-bone-marrow complex classification: Progress in our understanding of Modic vertebral endplate changes and their clinical relevance. Spine J. 2024, 24, 34–45. [Google Scholar] [CrossRef]

- Cascella, M.; Leoni, M.L.G.; Shariff, M.N.; Varrassi, G. Artificial Intelligence-Driven Diagnostic Processes and Comprehensive Multimodal Models in Pain Medicine. J. Pers. Med. 2024, 14, 983. [Google Scholar] [CrossRef]

- Scullen, T.; Milburn, J.; Aria, K.; Mathkour, M.; Tubbs, R.S.; Kalyvas, J. The use of diffusion tensor imaging in spinal pathology: A comprehensive literature review. Eur. Spine J. 2024, 33, 3303–3314. [Google Scholar] [CrossRef]

- Muhaimil, A.; Pendem, S.; Sampathilla, N.; Priya, P.S.; Nayak, K.; Chadaga, K.; Goswami, A.; Chandran, M.O.; Shirlal, A. Role of Artificial intelligence model in prediction of low back pain using T2 weighted MRI of Lumbar spine. F1000Research 2024, 13, 1035. [Google Scholar] [CrossRef]

- Climent-Peris, V.J.; Martí-Bonmatí, L.; Rodríguez-Ortega, A.; Doménech-Fernández, J. Predictive value of texture analysis on lumbar MRI in patients with chronic low back pain. Eur. Spine J. 2023, 32, 4428–4436. [Google Scholar] [CrossRef]

- Mikołajewska, E.; Masiak, J.; Mikołajewski, D. Applications of Artificial Intelligence-Based Patient Digital Twins in Decision Support in Rehabilitation and Physical Therapy. Electronics 2024, 13, 4994. [Google Scholar] [CrossRef]

- Traverso, A.; Wee, L.; Dekker, A.; Gillies, R. Repeatability and reproducibility of radiomic features: A systematic review. Int. J. Radiat. Oncol. Biol. Phys. 2018, 102, 1143–1158. [Google Scholar] [CrossRef]

- Alashram, A.R. Combined robot-assisted therapy and neuromuscular electrical stimulation in upper limb rehabilitation in patients with stroke: A systematic review of randomized controlled trials. J. Hand Ther. 2025, 38, 347–359. [Google Scholar] [CrossRef]

- Kang, S.H.; Mirka, G.A. Effects of a Passive Back-Support Exosuit on Erector Spinae and Abdominal Muscle Activity During Short-Duration, Asymmetric Trunk Posture Maintenance Tasks. Hum. Factors 2024, 66, 1830–1843. [Google Scholar] [CrossRef] [PubMed]

- Huang, X.; Huang, L.; Shi, L.; Xu, L.; Cao, C.; Wu, H.; Cao, M.; Lv, C.; Shi, P.; Zhang, G.; et al. Evaluation of the efficacy of a novel lumbar exoskeleton with multiple interventions for patients with lumbar disc herniation: A multicenter randomized controlled trial of non-inferiority. Front. Bioeng. Biotechnol. 2025, 12, 1520610. [Google Scholar] [CrossRef] [PubMed]

- Yang, S.; Yang, G.; Kim, D.; Wui, S.H.; Park, S.W.; Kang, B.B. Development of Intelligent Soft Wearable Lumbar Support Robot for Flat Back Syndrome. Int. J. Control Autom. Syst. 2025, 23, 1147–1156. [Google Scholar] [CrossRef]

- Schmalz, T.; Colienne, A.; Bywater, E.; Fritzsche, L.; Gärtner, C.; Bellmann, M.; Reimer, S.; Ernst, M. A Passive Back-Support Exoskeleton for Manual Materials Handling: Reduction of Low Back Loading and Metabolic Effort during Repetitive Lifting. IISE Trans. Occup. Ergon. Hum. Factors 2022, 10, 7–20. [Google Scholar] [CrossRef]

- Qi, K.; Yin, Z.; Li, C.; Zhang, J.; Song, J. Effects of a lumbar exoskeleton that provides two traction forces on spinal loading and muscles. Front. Bioeng. Biotechnol. 2025, 13, 1530034. [Google Scholar] [CrossRef]

- Ali, A.; Fontanari, V.; Schmoelz, W.; Agrawal, S.K. Systematic Review of Back-Support Exoskeletons and Soft Robotic Suits. Front. Bioeng. Biotechnol. 2021, 9, 765257.

- Moya-Esteban, A.; Refai, M.I.; Sridar, S.; van der Kooij, H.; Sartori, M. Soft back exosuit controlled by neuro-mechanical modeling provides adaptive assistance while lifting unknown loads and reduces lumbosacral compression forces. Wearable Technol. 2025, 6, e9.

- Marican, M.A.; Chandra, L.D.; Tang, Y.; Iskandar, M.N.S.; Lim, C.X.E.; Kong, P.W. Biomechanical Effects of a Passive Back-Support Exosuit During Simulated Military Lifting Tasks-An EMG Study. Sensors 2025, 25, 3211.

- Lo, H.S.; Xie, S.Q. Exoskeleton robots for upper-limb rehabilitation: State of the art and future prospects. Med. Eng. Phys. 2012, 34, 261–268.

- García-de-la-Banda-García, R.; Cruz-Díaz, D.; García-Vázquez, J.F.; Martínez-Lentisco, M.D.M.; León-Morillas, F. Effects of Virtual Reality on Adults Diagnosed with Chronic Non-Specific Low Back Pain: A Systematic Review. Healthcare 2025, 13, 1328.

- Feng, D.; Shuqi, J.; Shufan, L.; Peng, W.; Cong, L.; Xing, W.; Yanran, S. Effect of non-pharmacological interventions on cognitive function in multiple sclerosis patients: A systematic review and network meta-analysis. Mult. Scler. Relat. Disord. 2025, 99, 106500.

- Ma, L.; Yosef, B.; Talu, I.; Batista, D.; Jenkens-Drake, E.; Suthana, N.; Cross, K.A. Effects of virtual reality on spatiotemporal gait parameters and freezing of gait in Parkinson’s disease. NPJ Park. Dis. 2025, 11, 148.

- Bordeleau, M.; Stamenkovic, A.; Tardif, P.A.; Thomas, J. The Use of Virtual Reality in Back Pain Rehabilitation: A Systematic Review and Meta-Analysis. J. Pain 2022, 23, 175–195.

- Matheve, T.; Bogaerts, K.; Timmermans, A. Virtual reality distraction induces hypoalgesia in patients with chronic low back pain: A randomized controlled trial. J. Neuroeng. Rehabil. 2020, 17, 55.

- Morone, G.; Papaioannou, F.; Alberti, A.; Ciancarelli, I.; Bonanno, M.; Calabrò, R.S. Efficacy of Sensor-Based Training Using Exergaming or Virtual Reality in Patients with Chronic Low Back Pain: A Systematic Review. Sensors 2024, 24, 6269.

- Groenveld, T.D.; Smits, M.L.M.; Knoop, J.; Kallewaard, J.W.; Staal, J.B.; de Vries, M.; van Goor, H. Effect of a Behavioral Therapy-Based Virtual Reality Application on Quality of Life in Chronic Low Back Pain. Clin. J. Pain 2023, 39, 278–285.

- Li, R.; Li, Y.; Kong, Y.; Li, H.; Hu, D.; Fu, C.; Wei, Q. Virtual Reality-Based Training in Chronic Low Back Pain: Systematic Review and Meta-Analysis of Randomized Controlled Trials. J. Med. Internet Res. 2024, 26, e45406.

- Massah, N.; Kahrizi, S.; Neblett, R. Comparison of the Acute Effects of Virtual Reality Exergames and Core Stability Exercises on Cognitive Factors, Pain, and Fear Avoidance Beliefs in People with Chronic Nonspecific Low Back Pain. Games Health J. 2025, 14, 233–241.

- Saredakis, D.; Szpak, A.; Birckhead, B.; Keage, H.A.D.; Rizzo, A.; Loetscher, T. Factors associated with virtual reality sickness in head-mounted displays: A systematic review and meta-analysis. Front. Hum. Neurosci. 2020, 14, 96.

- Dermody, G.; Whitehead, L.; Wilson, G.; Glass, C. The role of virtual reality in improving health outcomes for community-dwelling older adults: Systematic review. J. Med. Internet Res. 2020, 22, e17331.

- Capriotti, A.; Moret, S.; Del Bello, E.; Federici, A.; Lucertini, F. Virtual Reality: A New Frontier of Physical Rehabilitation. Sensors 2025, 25, 3080.

- Kashyap, N.; Baranwal, V.K.; Basumatary, B.; Bansal, R.K.; Sahani, A.K. A Systematic Review on Muscle Stimulation Techniques. IETE Tech. Rev. 2022, 40, 76–89.

- Songjaroen, S.; Sungnak, P.; Piriyaprasarth, P.; Wang, H.K.; Laskin, J.J.; Wattananon, P. Combined neuromuscular electrical stimulation with motor control exercise can improve lumbar multifidus activation in individuals with recurrent low back pain. Sci. Rep. 2021, 11, 14815.

- Wolfe, D.; Dover, G.; Boily, M.; Fortin, M. The Immediate Effect of a Single Treatment of Neuromuscular Electrical Stimulation with the StimaWELL 120MTRS System on Multifidus Stiffness in Patients with Chronic Low Back Pain. Diagnostics 2024, 14, 2594.

- Hayami, N.; Williams, H.E.; Shibagaki, K.; Vette, A.H.; Suzuki, Y.; Nakazawa, K.; Nomura, T.; Milosevic, M. Development and Validation of a Closed-Loop Functional Electrical Stimulation-Based Controller for Gait Rehabilitation Using a Finite State Machine Model. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 1642–1651.

- Wattananon, P.; Sungnak, P.; Songjaroen, S.; Kantha, P.; Hsu, W.L.; Wang, H.K. Using neuromuscular electrical stimulation in conjunction with ultrasound imaging technique to investigate lumbar multifidus muscle activation deficit. Musculoskelet. Sci. Pract. 2020, 50, 102215.

- Gregory, C.M.; Bickel, C.S. Recruitment patterns in human skeletal muscle during electrical stimulation. Phys. Ther. 2005, 85, 358–364.

- Cui, D.; Janela, D.; Costa, F.; Molinos, M.; Areias, A.C.; Moulder, R.G.; Scheer, J.K.; Bento, V.; Cohen, S.P.; Yanamadala, V.; et al. Randomized-controlled trial assessing a digital care program versus conventional physiotherapy for chronic low back pain. NPJ Digit. Med. 2023, 6, 121.

- Jirasakulsuk, N.; Saengpromma, P.; Khruakhorn, S. Real-Time Telerehabilitation in Older Adults with Musculoskeletal Conditions: Systematic Review and Meta-analysis. JMIR Rehabil. Assist. Technol. 2022, 9, e36028.

- Bunting, J.W.; Withers, T.M.; Heneghan, N.R.; Greaves, C.J. Digital interventions for promoting exercise adherence in chronic musculoskeletal pain: A systematic review and meta-analysis. Physiotherapy 2021, 111, 23–30.

- Munce, S. The Importance of Telerehabilitation and Future Directions for the Field. JMIR Rehabil. Assist. Technol. 2025, 12, e76153.

- Paolucci, T.; Pezzi, L.; Bressi, F.; Russa, R.; Zobel, B.B.; Bertoni, G.; Farì, G.; Bernetti, A. Exploring ways to improve knee osteoarthritis care: The role of mobile apps in enhancing therapeutic exercise: A systematic review. Digit. Health 2024, 10, 20552076241297296.

- Jafari, B.; Amiri, M.R.; Labecka, M.K.; Rajabi, R. The effect of home-based and remote exercises on low back pain during the COVID-19 pandemic: A systemic review. J. Bodyw. Mov. Ther. 2025, 43, 143–151.

- Lara-Palomo, I.C.; Gil-Martínez, E.; Ramírez-García, J.D.; Capel-Alcaraz, A.M.; García-López, H.; Castro-Sánchez, A.M.; Antequera-Soler, E. Efficacy of e-Health Interventions in Patients with Chronic Low-Back Pain: A Systematic Review with Meta-Analysis. Telemed. J. E Health 2022, 28, 1734–1752.

- Molina-Garcia, P.; Mora-Traverso, M.; Prieto-Moreno, R.; Díaz-Vásquez, A.; Antony, B.; Ariza-Vega, P. Effectiveness and cost-effectiveness of telerehabilitation for musculoskeletal disorders: A systematic review and meta-analysis. Ann. Phys. Rehabil. Med. 2024, 67, 101791.

- Fatoye, F.; Gebrye, T.; Fatoye, C.; Mbada, C.E.; Olaoye, M.I.; Odole, A.C.; Dada, O. The Clinical and Cost-Effectiveness of Telerehabilitation for People With Nonspecific Chronic Low Back Pain: Randomized Controlled Trial. JMIR Mhealth Uhealth 2020, 8, e15375.

- Martínez de la Cal, J.; Fernández-Sánchez, M.; Matarán-Peñarrocha, G.A.; Hurley, D.A.; Castro-Sánchez, A.M.; Lara-Palomo, I.C. Physical Therapists’ Opinion of E-Health Treatment of Chronic Low Back Pain. Int. J. Environ. Res. Public Health 2021, 18, 1889.

- Seron, P.; Oliveros, M.J.; Gutierrez-Arias, R.; Fuentes-Aspe, R.; Torres-Castro, R.C.; Merino-Osorio, C.; Nahuelhual, P.; Inostroza, J.; Jalil, Y.; Solano, R.; et al. Effectiveness of telerehabilitation in physical therapy: A rapid overview. Phys. Ther. 2021, 101, pzab053.

- Zhang, L.; Liu, G.; Han, B.; Wang, Z.; Zhang, T. Adaptive human-robot interaction torque estimation with high accuracy and strong tracking ability for a lower limb rehabilitation robot. IEEE/ASME Trans. Mechatron. 2024, 29, 1184–1195.

- Chen, Y.; Wang, S.; Zhang, J.; Li, X. ViT-based Terrain Recognition System for wearable soft exosuit. Biomim. Intell. Robot. 2023, 3, 100121.

- Liu, H.; Zhao, W.; Chen, X.; Wu, Q. Human–robot interface based on sEMG envelope signal for the collaborative wearable robot. Biomim. Intell. Robot. 2023, 3, 100115.

- Jujjavarapu, C. Applying Machine Learning and Application Development to Lower Back Pain and Genetic Medicine. Ph.D. Thesis, University of Washington, Seattle, WA, USA, 2021.

- Metsavaht, L.; Gonzalez, F.F.; Guadagnin, E.C.; Samartzis, D.; Gustafson, J.; Oliveira Generoso, T.; Chahla, J.; Luzo, M.; Leporace, G. Identifying gait subgroups in low back pain patients with artificial intelligence: Implications for individualized interventions. Eur. Spine J. 2025.

- Thiry, P.; Houry, M.; Philippe, L.; Nocent, O.; Buisseret, F.; Dierick, F.; Slama, R.; Bertucci, W.; Thévenon, A.; Simoneau-Buessinger, E. Machine Learning Identifies Chronic Low Back Pain Patients from an Instrumented Trunk Bending and Return Test. Sensors 2022, 22, 5027.

- Cascella, M.; Ponsiglione, A.M.; Santoriello, V.; Romano, M.; Cerrone, V.; Esposito, D.; Montedoro, M.; Pellecchia, R.; Savoia, G.; Lo Bianco, G.; et al. Expert consensus on feasibility and application of automatic pain assessment in routine clinical use. J. Anesth. Analg. Crit. Care 2025, 5, 29.

- Aggarwal, N. Prediction of low back pain using artificial intelligence modeling. J. Med. Artif. Intell. 2021, 4, 2.

- Sun, T.; He, X.; Li, Z. Digital twin in healthcare: Recent updates and challenges. Digit. Health 2023, 9, 20552076221149651.

- Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56.

- Diniz, P.; Grimm, B.; Garcia, F.; Fayad, J.; Ley, C.; Mouton, C.; Oeding, J.F.; Hirschmann, M.T.; Samuelsson, K.; Seil, R. Digital twin systems for musculoskeletal applications: A current concepts review. Knee Surg. Sports Traumatol. Arthrosc. 2025, 33, 1892–1910.

- Mienye, I.D.; Swart, T.G.; Obaido, G.; Jordan, M.; Ilono, P. Deep Convolutional Neural Networks in Medical Image Analysis: A Review. Information 2025, 16, 195.

- Bacco, L.; Russo, F.; Ambrosio, L.; D’Antoni, F.; Vollero, L.; Vadalà, G.; Dell’Orletta, F.; Merone, M.; Papalia, R.; Denaro, V. Natural language processing in low back pain and spine diseases: A systematic review. Front. Surg. 2022, 9, 957085.

- Vidal, R.; Grotle, M.; Johnsen, M.B.; Yvernay, L.; Hartvigsen, J.; Ostelo, R.; Kjønø, L.G.; Enstad, C.L.; Killingmo, R.M.; Halsnes, E.H.; et al. Prediction models for outcomes in people with low back pain receiving conservative treatment: A systematic review. J. Clin. Epidemiol. 2025, 177, 111593.

- Leoni, M.L.G.; Mercieri, M.; Varrassi, G.; Cascella, M. Artificial Intelligence in Interventional Pain Management: Opportunities, Challenges, and Future Directions. Transl. Med. UniSa 2024, 26, 134–137.

- Cascella, M.; Shariff, M.N.; Viswanath, O.; Leoni, M.L.G.; Varrassi, G. Ethical Considerations in the Use of Artificial Intelligence in Pain Medicine. Curr. Pain Headache Rep. 2025, 29, 10.

- Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

- Park, C.; Yi, C.; Choi, W.J.; Lim, H.S.; Yoon, H.U.; You, S.J.H. Long-term effects of deep-learning digital therapeutics on pain, movement control, and preliminary cost-effectiveness in low back pain: A randomized controlled trial. Digit. Health 2023, 9, 20552076231217817.

- Fatoye, F.; Gebrye, T.; Mbada, C.E.; Fatoye, C.T.; Makinde, M.O.; Ayomide, S.; Ige, B. Cost effectiveness of virtual reality game compared to clinic based McKenzie extension therapy for chronic non-specific low back pain. Br. J. Pain 2022, 16, 601–609.

- Leardini, A.; Belvedere, C.; Nardini, F.; Sancisi, N.; Conconi, M.; Parenti-Castelli, V. Kinematic models of lower limb joints for musculo-skeletal modelling and optimization in gait analysis. J. Biomech. 2017, 62, 77–86.

- Toelle, T.R.; Utpadel-Fischler, D.A.; Haas, K.K.; Priebe, J.A. App-based multidisciplinary back pain treatment versus combined physiotherapy plus online education: A randomized controlled trial. NPJ Digit. Med. 2019, 2, 34.

- Koppenaal, T.; Pisters, M.F.; Kloek, C.J.; Arensman, R.M.; Ostelo, R.W.; Veenhof, C. The 3-Month Effectiveness of a Stratified Blended Physiotherapy Intervention in Patients with Nonspecific Low Back Pain: Cluster Randomized Controlled Trial. J. Med. Internet Res. 2022, 24, e31675.

- Leoni, M.L.G.; Mercieri, M.; Paladini, A.; Cascella, M.; Rekatsina, M.; Atzeni, F.; Pasqualucci, A.; Bazzichi, L.; Salaffi, F.; Sarzi-Puttini, P.; et al. Web search trends on fibromyalgia: Development of a machine learning model. Clin. Exp. Rheumatol. 2025, 43, 1082–1094.

- Chen, S.; Fan, S.; Qiao, Z.; Wu, Z.; Lin, B.; Li, Z.; Riegler, M.A.; Wong, M.Y.H.; Opheim, A.; Korostynska, O.; et al. Transforming Healthcare: Intelligent Wearable Sensors Empowered by Smart Materials and Artificial Intelligence. Adv. Mater. 2025, 37, e2500412.