
Please note this is a comparison between Version 2 by Catherine Yang and Version 1 by Ali Olyanasab.

Wearable devices have emerged as promising tools for personalized health monitoring, utilizing machine learning to distill meaningful insights from the expansive datasets they capture. Within the bio-electrical category, these devices employ biosignal data, such as electrocardiograms (ECGs), electromyograms (EMGs), electroencephalograms (EEGs), etc., to monitor and assess health.

- wearable devices

- personalized

- machine learning

1. Bio-Electrical Wearable Devices

Personalized wearable devices that utilize bio-electrical signals, such as ECG, EEG, and EMG, play a crucial role in revolutionizing healthcare and well-being. These devices offer the continuous and non-invasive monitoring of physiological signals, providing valuable insights into an individual’s health status and enabling personalized health management. The integration of machine learning algorithms with these wearable devices enhances their capabilities by enabling the analysis of complex bio-electrical data to detect anomalies, predict health conditions, and provide personalized recommendations. For instance, machine learning models can be trained to classify ECG signals for blood pressure estimation [6][1], EEG signals for emotion recognition [16][2], and EMG signals for gesture recognition [17][3]. This combination of personalized wearable devices and machine learning holds great promise in advancing preventive healthcare, early disease detection, and personalized treatment strategies, ultimately leading to improved patient outcomes and quality of life.

Machine learning algorithms have extended the capabilities of bio-electrical wearables in healthcare across a wide range of applications. For personalized Parkinson’s disease management, LeMoyne et al. developed a system using the BioStamp nPoint. With the amplitude of the deep brain stimulation current set to 4.0 mA, 2.5 mA, 1.0 mA, or off, a multilayer neural network classified the resulting tremor responses with 95% accuracy, demonstrating the potential for tailored interventions [10][4]. In the domain of rehabilitation, LeMoyne et al. undertook a longitudinal investigation spanning 10 months. During this study, a smartphone affixed to the foot with an armband captured gyroscope data and transferred them to the cloud. The features extracted from the gyroscope signal were its maximum, minimum, mean, standard deviation, and coefficient of variation. A support vector machine, implemented with the Waikato Environment for Knowledge Analysis (WEKA), then classified these features to distinguish between the initial and final phases of the therapy regimen. The evaluation of the data demonstrated the effectiveness of the rehabilitation process [18][5].
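As a rough illustration of the feature extraction step described above, the following sketch computes the five summary statistics for a single gyroscope recording. It is not the authors' pipeline: the function name `summary_features`, the use of the sample standard deviation, and the sample values are assumptions made for the example.

```python
import statistics


def summary_features(signal):
    """Return the five summary statistics named in the text for one recording."""
    if len(signal) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.fmean(signal)
    std = statistics.stdev(signal)  # sample standard deviation (an assumption)
    return {
        "max": max(signal),
        "min": min(signal),
        "mean": mean,
        "std": std,
        # Coefficient of variation: spread relative to the mean.
        # It is undefined when the mean is zero, so NaN is returned in that case.
        "cv": std / mean if mean != 0 else float("nan"),
    }


# Hypothetical gyroscope samples in deg/s, not data from the study.
gyro = [10.0, 12.0, 14.0, 16.0, 18.0]
features = summary_features(gyro)
print(features)
```

A feature vector of this kind, one per recording, is what a classifier such as a support vector machine would then be trained on. For signals that oscillate around zero, the coefficient of variation can become unstable, so a real pipeline may need a different normalization.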

Transitioning to cardiovascular health, Banerjee et al. proposed a methodology for blood pressure estimation using ECG data. Utilizing XGBoost for classification and an artificial neural network (ANN) for regression, their system achieved a mean error of 0.89 mm Hg. This application highlights the potential of lightweight ML algorithms in remote health monitoring, particularly in cardiovascular conditions [6][1]. Another wearable cardiovascular healthcare system is the one developed by Chiang et al. for predicting blood pressure (BP) and providing personalized lifestyle recommendations. Utilizing ECG, sleep, and physical activity data collected from smartwatches, the system uses ML models such as random forest and autoregressive integrated moving average (ARIMA) to predict BP and make lifestyle recommendations. The subjects’ systolic and diastolic BP decreased by 3.8 and 2.3, respectively. Furthermore, the system used Shapley values to identify lifestyle factors that contribute to high blood pressure [19][6]. Pramukantoro et al.’s real-time heartbeat monitoring system utilized the Polar H10 wearable device. An SVM classified each heartbeat into one of five categories: normal, supraventricular, ventricular ectopic, fusion, and unknown. The system’s use of RR interval data and Bluetooth low energy (BLE) enables real-time monitoring, showcasing bio-electrical wearables’ potential in cardiovascular health [20][7]. To illustrate how much information an ECG signal carries, Maged et al. utilized ECG sensors in smartwatches to predict blood glucose levels in diabetic patients. Leveraging heart rate variability parameters and machine learning methods for regression, such as LGBM, GBR, AdaBoost, and linear and ridge regressors, their system presented a novel approach to health monitoring. This application underscores the versatility of bio-electrical wearables in managing chronic conditions [21][8].
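Several of the systems above work from RR intervals and heart rate variability (HRV) parameters rather than from the raw ECG waveform. The sketch below shows how a few common time-domain HRV features can be derived from a list of RR intervals. The choice of features (SDNN and RMSSD), the function name `hrv_features`, and the sample values are assumptions for illustration; the cited studies may use different parameters.

```python
import math
import statistics


def hrv_features(rr_ms):
    """Compute basic time-domain HRV features from RR intervals in milliseconds."""
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")
    # Differences between successive RR intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    mean_rr = statistics.fmean(rr_ms)
    return {
        "mean_rr_ms": mean_rr,
        # Heart rate estimated from the mean RR interval (60,000 ms per minute).
        "mean_hr_bpm": 60000.0 / mean_rr,
        # SDNN: sample standard deviation of the RR intervals.
        "sdnn_ms": statistics.stdev(rr_ms),
        # RMSSD: root mean square of successive differences.
        "rmssd_ms": math.sqrt(statistics.fmean([d * d for d in diffs])),
    }


# Hypothetical RR intervals in milliseconds, not data from any cited study.
rr = [800.0, 810.0, 790.0, 800.0]
hrv = hrv_features(rr)
print(hrv)
```

Features like these can serve as inputs to either a classifier (for example, heartbeat categories) or a regressor (for example, a blood glucose estimate), depending on the target.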

In the field of predictive healthcare and occupational safety, Shimazaki et al. employed supervised machine learning to prevent heat stroke in hot environments. Based on a personalized heat strain temperature (pHST) meter, their web survey-based automatic annotation system classified workers into thermal and non-thermal groups based on vital data, achieving an 85.2% accuracy in predicting heat stroke. This application highlights the potential of bio-electrical wearables in ensuring safety in challenging occupational environments [22][9].

Shifting focus to mental health, Campanella et al. introduced a stress detection system that applies machine learning algorithms to physiological signals collected by the Empatica E4 bracelet. The system collects physiological data through four sensors: a temperature sensor, an accelerometer, a photoplethysmogram (PPG) sensor, and an electrodermal activity (EDA) sensor. The system achieved accuracies ranging from 70% to 79.17%, and the study emphasizes the need for more extensive and diverse datasets to improve model accuracy, showcasing the potential of bio-electrical wearables in mental health [23][10]. Examining the real-time stress detection domain, Zhu et al. delved into EDA, ECG, and PPG signals from wearable devices. Utilizing six machine learning methods, including support vector machine (SVM) and k-nearest neighbors (KNN), they found that a stacking ensemble learning method achieved the best accuracy, 86.4%, for EDA signals. This application demonstrates the potential of bio-electrical wearables in managing stress, offering real-time insights for users [7][11]. Tsai et al. devised a 7-day panic attack prediction model by leveraging data from a mobile app and a Garmin Vivosmart 4 smartwatch. The study encompassed 59 participants diagnosed with panic disorder (PD), and data on activity levels, heart rate, sleep patterns, anxiety, and depression scores were collected over a one-year period. Integrating questionnaires and additional physiological and environmental data, including the Air Quality Index (AQI), the researchers employed a random forest model, achieving prediction accuracies ranging from 67.4% to 81.3%. Crucial features such as the Beck Anxiety Inventory (BAI), Beck Depression Inventory (BDI), State-Trait Anxiety Inventory (STAI), and Mini International Neuropsychiatric Interview (MINI) scores, heart rate (HR), and deep sleep duration played pivotal roles in ensuring accurate predictions. This underscores the potential for early and personalized mental health interventions for patients diagnosed with panic disorder [13][12].

On the topic of gesture recognition, Ghaffar Nia et al. developed an artificial neural network (ANN) model with a few control parameters, achieving 98.9% and 93% accuracy in training and testing processes, respectively. The study focused on classifying EMG signals to control assistive devices for individuals with sensory-motor disorders. The ANN model’s application demonstrates the potential of machine learning algorithms in improving the accuracy and efficiency of EMG signal classification [17][3]. In another work, Avramoni et al. developed a sophisticated algorithm to detect pill intake using a smart wearable device with inertial measurement unit (IMU) sensors by evaluating the associated gestures. Employing supervised machine learning, the algorithm achieved over 99% accuracy in training and validation datasets and 100% accuracy in testing datasets. This application showcases the potential of bio-electrical wearables in gesture recognition and human–computer interaction [24][13].

Moving to neurological applications, Meisel et al. harnessed wristband sensor data and machine learning to develop a seizure forecasting system. The acquired signals include EDA, blood volume pulse (BVP), temperature, and accelerometer data. The use of long short-term memory (LSTM) and 1D convolutional neural networks, optimized through grid searches, yielded promising results. The system not only showcased accurate predictions but also hinted at the potential for further improvement through individualized parameter tuning [25][14]. Transitioning to seizure detection, Jeppesen et al. developed a personalized seizure detection algorithm using patient-adaptive logistic regression machine learning (LRML). Utilizing a wearable ECG device and collecting heart rate variability (HRV) during a long-term video-EEG recording, the system achieved a 78.2% sensitivity and a 31% reduction in false alarm rates. This system contributes to the evolution of personalized healthcare interventions, showcasing the potential of bio-electrical wearables in neurological health [26][15].

Multiple studies have been conducted on sleep apnea detection and intervention. As an example, Ji et al. developed an airline point-of-care system for hybrid physiological signal monitoring, achieving high accuracy of 84–85% using a long short-term memory recurrent neural network (LSTM-RNN). The system detects electrocardiogram (ECG), breathing, and motion signals, with the diagnosis of sleep apnea-hypopnea syndrome (SAHS) as a key application. The hardware design includes ECG electrodes, flexible piezoelectric belts, and a control box, providing a low-cost, long-term monitoring solution for passengers during flights [27][16]. In another work, Mohan et al.’s exploration of deep learning and machine learning techniques for sleep apnea detection from single-lead ECG data emphasizes the potential of AI-based bio-signal processing. Their hybrid deep models achieved a sensitivity of 84.26%, a specificity of 92.27%, and an accuracy of 88.13%. This application showcases the potential for bio-electrical wearables in sleep monitoring and respiratory health [9][17].
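Sensitivity, specificity, and accuracy, as reported for the detection systems above, all derive from the same confusion-matrix counts. The following sketch shows the arithmetic for a binary detector; the function name `binary_metrics` and the labels are hypothetical and are not taken from any of the cited studies.

```python
def binary_metrics(y_true, y_pred):
    """Compute sensitivity, specificity, and accuracy for 0/1 labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # events correctly detected
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # events missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # normal segments correctly passed
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in y_true")
    return {
        "sensitivity": tp / (tp + fn),  # fraction of true events detected
        "specificity": tn / (tn + fp),  # fraction of normal segments left unflagged
        "accuracy": (tp + tn) / len(pairs),
    }


# Hypothetical per-segment labels: 1 = apnea event, 0 = normal breathing.
truth = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = binary_metrics(truth, predicted)
print(metrics)
```

Reporting all three values matters when events are rare: a detector can reach high accuracy by rarely flagging anything, which would show up as low sensitivity.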

The real-time emotion recognition system developed by Mai et al. using an ear-EEG-based on-chip device introduces a compact, battery-powered solution for emotion classification. Leveraging machine learning models such as SVM, MLP, and one-dimensional convolutional neural networks (1D-CNNs), this system utilizes Bluetooth low-energy wireless technology for data transmission, showcasing the potential for bio-electrical wearables in mental health applications [16][2].

2. Electro-Mechanical Wearable Devices

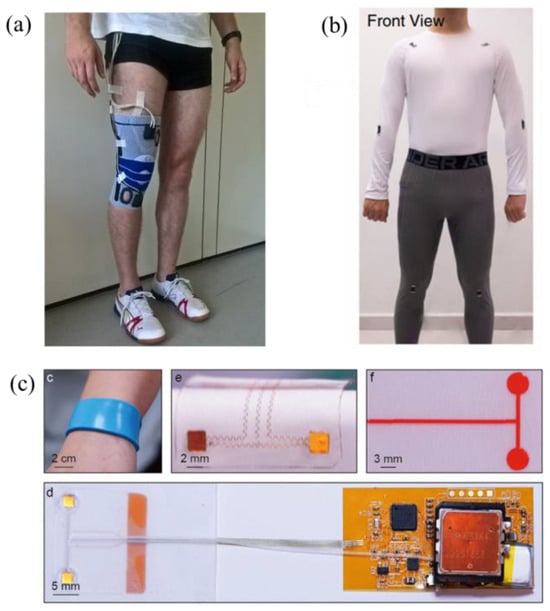

The utilization of electro-mechanical elements in wearable devices for the analysis of gait and recognition of motion holds substantial significance in diverse fields, including healthcare, rehabilitation, and robotics. These devices, encompassing soft sensors [40,41][18][19] and strain gauges [1,42][20][21], facilitate the non-invasive monitoring of human movement patterns, providing invaluable insights for gait analysis and motion tracking. Soft sensors integrated into wearable systems, for example, have the capacity to capture nuanced changes in joint movements and muscle activities, enabling the assessment of gait patterns and the detection of abnormalities in movement. Furthermore, these devices contribute to the creation of intelligent wearable systems, serving purposes such as fall detection, silent communication, and human activity recognition. The incorporation of machine learning algorithms with these wearable devices further amplifies their capabilities, enabling the precise and real-time analysis of gait and motion data. These advancements have the potential to transform personalized healthcare, enhance rehabilitation outcomes, and advance the development of intelligent robotic systems.

Advancing the field of hand gesture recognition, Ferrone et al. developed a wearable wristband equipped with strain sensors. The system utilized strain gauge sensors, machine learning algorithms such as linear discriminant analysis (LDA) and support vector machine (SVM), and a Leap Motion system for validation. Featuring stretchable strain gauge sensors and readout electronics, the wristband achieved a reproducibility of over 98% using the LDA classifier [42][21]. Another approach was based on an e-textile, where Zeng et al. developed a highly conductive carbon-based e-textile for gesture recognition using heat transfer printing and screen printing. The system uses AI to recognize eight different gestures with 96.58% accuracy [43][22].
One of the applications of hand gesture recognition was introduced by DelPreto et al., who developed a smart glove with resistive sensors and an accelerometer, using machine learning to classify American Sign Language poses and gestures in real time with high accuracy (96.3%). The system utilizes a strain-sensitive resistive knit for postural information and an accelerometer for motion, with a small custom PCB and microcontroller reading sensors, performing feature extraction, and running a pre-trained neural network [44][23]. Another interesting application of hand motion detection was introduced during the COVID-19 pandemic. Marullo et al. developed No Face-Touch, a system that uses wearable devices and machine learning to detect hand motions ending in face-touches. The system utilizes a recurrent neural network (RNN) with long short-term memory (LSTM) cells and accelerometer data to detect face-touches, achieving a high true detection rate, a low false detection rate, and a short time to detect the contact. The system is designed to run on smartwatches and low-cost devices, with a focus on battery consumption and generalization to different users [45][24]. In silent communication, Ravenscroft et al. developed a wearable patch with a graphene-based strain gauge sensor and haptic feedback. They used machine learning algorithms, including neural networks, to classify throat movements and predict spoken words with 82% accuracy for movements and 51% for words. They handcrafted a dataset with 15 words and four movements, and used a sensor attached to the throat to collect resistance readings for training and testing the algorithms [1][20]. In a more recent approach, Tashakori et al. achieved the precise real-time tracking of hand and finger movements using stretchable, washable smart gloves embedded with helical sensor yarns and inertial measurement units. The sensor yarns exhibit a high dynamic range and stability during use and washing.
Through multi-stage machine learning, the system achieves low joint-angle estimation errors of 1.21° and 1.45° for intra- and inter-participant validation, respectively, matching the accuracy of costly motion-capture cameras. A data augmentation technique enhances robustness to noise, enabling accurate tracking during object interactions and diverse applications, including typing on a simulated keyboard, recognizing dynamic and static gestures from American Sign Language, and object identification [46][25]. Exploring joint analysis, Gholami et al. developed a fabric-based strain sensor system for knee-joint angle estimation. Implementing machine learning algorithms, including random forest and neural networks, the system processed sensor data and estimated the knee-joint angle with an error of around 6 degrees. The study highlighted the potential applications in healthcare, virtual reality, and robotics [47][26]. For the estimation of knee joint moments, Stetter et al. developed an artificial neural network (ANN) that uses wearable sensors to estimate knee flexion and adduction moments (KFM and KAM) during various locomotion tasks. The ANN was trained with IMU signals and biomechanical data, and the model architecture included two hidden layers with 100 and 20 neurons. The study used a leave-one-subject-out cross-validation method to evaluate the ANN’s performance. The ANN approach does not require musculoskeletal modeling and can provide accurate predictions for new data [48][27]. Shifting to fall detection, Desai et al. developed a wearable belt using machine learning and signal processing algorithms. With the ability to detect falls within 0.25 s, the system achieved high accuracy using a logistic regression classifier and triggered alerts via a GSM module upon fall detection [49][28]. In medication adherence monitoring, Cheon et al. utilized sensor data from an Apple Watch to detect low medication states in prescription bottles.
Employing machine learning, specifically a gradient-boosted tree model, the system predicted low pill counts with high accuracy and F1 scores. The system involved preprocessing sensor data, extracting summary statistics, and training the model using Apache Spark’s MLlib platform [50][29]. Another interesting application of gait analysis was introduced by Kirsten et al., who developed a sensor-based OCD detection system using AI, personalized federated learning, and motion sensors. The system achieved high areas under the precision-recall curve (AUPRCs) and demonstrated privacy-preserving model training [14][30]. Meanwhile, Chee et al. explored gait analysis and machine learning for diabetes detection. They emphasized the potential of deep learning (DL) models such as CNN and LSTM in analyzing gait data. The paper highlights the use of gait sensors and features, as well as the need to implement DL models for improved accuracy [11][31]. In another approach, Igene et al.’s SVM model, utilizing accelerometer data, showed an accuracy of 94.4% in predicting Parkinson’s disease. Employing ANOVA, PCA, and grid search for feature selection and hyperparameter tuning, this application emphasizes the potential of electro-mechanical wearables in early disease detection and monitoring [29][32]. Li et al. developed a multimodal sensor glove to assess Parkinson’s disease symptoms in patients’ hands. They used various algorithms to process signals, achieving a 95.83% accuracy in identifying tremor signals. The glove assessed flexibility, muscle strength, and stability, showing high consistency with clinical observations. The system’s reliability was confirmed through repeated experiments, with intraclass correlation coefficients exceeding 0.9 [51][33]. Moving to stretchable sensors, Nguyen et al. developed a stretchable gold nanowire sensor for motion tracking.
They used a machine learning algorithm to characterize the sensor’s response, achieving a high gauge factor of 12 and an error of less than 2 degrees in measuring bending motion [52][34]. As another example of stretchable sensors, Feng et al. developed a sensing-actuation unit for force estimation in soft stretch sensors. They used deep learning methods, including LSTM and Informer, to calibrate and predict force, achieving a mean square error (MSE) of less than 0.28 N² and a normalized root mean square error (NRMSE) of less than 2.0%. The unit has adjustable stiffness and is promising for applications such as lightweight flexible exoskeletons [53][35]. Another soft-sensor approach, aimed at gait generation, was presented by Kim et al., who proposed a semi-supervised deep learning model using microfluidic soft sensors. Leveraging a deep autoencoder, the model embedded gait motion into a latent motion manifold, reducing the need for a large calibration dataset. The system utilized AI to generate natural human gait motion from sensor outputs [40][18]. Transitioning to upper-limb posture detection, Giorgino et al. introduced a system utilizing conductive elastomer sensors for neurological rehabilitation. Employing machine learning for posture classification, the system addressed challenges related to sensor noise and generalization, achieving high recognition performance for real-time classification [54][36]. Exploring material surface recognition, Liu et al. developed smart gloves with ZNS-01 sensors to recognize five material surfaces. They used machine learning algorithms such as XGBoost to achieve 98% classification accuracy. The system extracts time and frequency domain characteristics to train the models [55][37]. For the continuous wireless monitoring of arterial blood pressure, Li et al. created a system with a thin, soft, and miniaturized design.
The system incorporates a sensing module, an active pressure adaptation module, and a data processing module to identify the blood pulse wave, apply back pressure, and extract the pulse transit time interval. Employing a multiple-feature fusion framework and ensemble learning, particularly extreme gradient boosting, the system constructs an estimation model for blood pressure [56][38]. Shifting to body movement detection, Wang et al. developed a wearable plastic optical fiber sensing system for human motion recognition using machine learning. The system uses AI methods, such as support vector machines and convolutional neural networks, to analyze motion signals and achieve high recognition accuracy. The system’s key parameters include feature vectors, cumulative contribution rate, and time consumed for recognition [2][39]. In another approach, Mani et al. developed a conductive fabric-based suspender system for human activity recognition (HAR) using machine learning and deep learning techniques. The system achieved an accuracy of 98.11% using eight different classifiers, including KNN, SVM, RF, and LSTM [57][40]. Utilizing MXene technology, Yang et al. developed wearable Ti₃C₂Tₓ MXene sensor modules with in-sensor machine learning (ML) models for full-body motion classification and avatar reconstruction. The sensors exhibited ultrahigh sensitivities within user-designated working windows, and the ML chip enabled in-sensor reconstruction of high-precision avatar animations with an average error of 3.5 cm. The ML models achieved 100% accuracy for full-body motion classification without using image/video data. The edge sensor module with an ML chip allowed the real-time and high-accuracy determination of 15 avatar joint locations, leading to personalized avatar animations.
The integration of wearable sensors with an ML chip for in-sensor machine learning and avatar reconstruction is a significant advancement in the field of wearable sensors and human–machine interaction [58][41]. Jiang et al. summarized the benefits of using advanced algorithms in wearable tactile sensors, including time series models and classification algorithms based on machine learning and signal processing. They discussed the integration of AI in the system, including the use of machine learning for motion recognition and voice recognition [59][42]. Vasdekis et al. developed WeMoD, an AI-based approach for predicting daily step count and setting personalized physical activity goals using a combination of physiological, psychological, and contextual features. They utilized ML algorithms such as ridge regression, decision tree, random forest, and gradient boosting regressor to achieve a mean absolute error of 1908 steps [8][43]. Papaleonidas et al. focused on high-accuracy human activity recognition models, with potential uses in health monitoring and smart home management. Utilizing machine learning and raw signals from wearables, the model achieved 99.9% accuracy. The integration of ML algorithms and a variable segmentation methodology showcases the versatility of electro-mechanical wearables in recognizing activities [60][44]. Visual representations exemplifying the application of these wearable technologies are presented in Figure 1. The subsequent discussion will delve into a review of recent advanced research focused on the implementation of electro-mechanical wearable devices utilizing machine learning techniques.

References

- Banerjee, S.; Kumar, B.; James, A.P.; Tripathi, J.N. Blood Pressure Estimation from ECG Data Using XGBoost and ANN for Wearable Devices. In Proceedings of the 2022 29th IEEE International Conference on Electronics, Circuits and Systems (ICECS), Glasgow, UK, 24–26 October 2022; pp. 1–4.

- Mai, N.-D.; Nguyen, H.-T.; Chung, W.-Y. Real-Time On-Chip Machine-Learning-Based Wearable Behind-The-Ear Electroencephalogram Device for Emotion Recognition. IEEE Access 2023, 11, 47258–47271.

- Nia, N.G.; Kaplanoglu, E.; Nasab, A. EMG-Based Hand Gestures Classification Using Machine Learning Algorithms. In Proceedings of the SoutheastCon 2023, Orlando, FL, USA, 1–16 April 2023; pp. 787–792.

- LeMoyne, R.; Mastroianni, T.; Whiting, D.; Tomycz, N. Parametric Evaluation of Deep Brain Stimulation Parameter Configurations for Parkinson’s Disease Using a Conformal Wearable and Wireless Inertial Sensor System and Machine Learning. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 3606–3611.

- LeMoyne, R.; Mastroianni, T. Longitudinal Evaluation of Diadochokinesia Characteristics for Hemiplegic Ankle Rehabilitation by Wearable Systems with Machine Learning. In Proceedings of the 2022 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 17–18 November 2022; pp. 1–4.

- Chiang, P.-H.; Wong, M.; Dey, S. Using Wearables and Machine Learning to Enable Personalized Lifestyle Recommendations to Improve Blood Pressure. IEEE J. Transl. Eng. Health Med. 2021, 9, 2700513.

- Pramukantoro, E.S.; Gofuku, A. A Real-Time Heartbeat Monitoring Using Wearable Device and Machine Learning. In Proceedings of the 2022 IEEE 4th Global Conference on Life Sciences and Technologies (LifeTech), Osaka, Japan, 7–9 March 2022; pp. 270–272.

- Maged, Y.; Atia, A. The Prediction Of Blood Glucose Level By Using The ECG Sensor of Smartwatches. In Proceedings of the 2022 2nd International Mobile, Intelligent, and Ubiquitous Computing Conference (MIUCC), Cairo, Egypt, 8–9 May 2022; pp. 406–411.

- Shimazaki, T.; Anzai, D.; Watanabe, K.; Nakajima, A.; Fukuda, M.; Ata, S. Heat Stroke Prevention in Hot Specific Occupational Environment Enhanced by Supervised Machine Learning with Personalized Vital Signs. Sensors 2022, 22, 395.

- Campanella, S.; Altaleb, A.; Belli, A.; Pierleoni, P.; Palma, L. A Method for Stress Detection Using Empatica E4 Bracelet and Machine-Learning Techniques. Sensors 2023, 23, 3565.

- Zhu, L.; Spachos, P.; Gregori, S. Multimodal Physiological Signals and Machine Learning for Stress Detection by Wearable Devices. In Proceedings of the 2022 IEEE International Symposium on Medical Measurements and Applications, MeMeA 2022—Conference Proceedings, Messina, Italy, 22–24 June 2022.

- Tsai, C.-H.; Chen, P.-C.; Liu, D.-S.; Kuo, Y.-Y.; Hsieh, T.-T.; Chiang, D.-L.; Lai, F.; Wu, C.-T. Panic Attack Prediction Using Wearable Devices and Machine Learning: Development and Cohort Study. JMIR Med. Inform. 2022, 10, e33063.

- Avramoni, D.; Virlan, R.; Prodan, L.; Iovanovici, A. Detection of Pill Intake Associated Gestures Using Smart Wearables and Machine Learning. In Proceedings of the 2022 IEEE 22nd International Symposium on Computational Intelligence and Informatics and 8th IEEE International Conference on Recent Achievements in Mechatronics, Automation, Computer Science and Robotics (CINTI-MACRo), Budapest, Hungary, 21–22 November 2022; pp. 251–256.

- Kalasin, S.; Sangnuang, P.; Surareungchai, W. Intelligent Wearable Sensors Interconnected with Advanced Wound Dressing Bandages for Contactless Chronic Skin Monitoring: Artificial Intelligence for Predicting Tissue Regeneration. Anal. Chem. 2022, 94, 6842–6852.

- Meisel, C.; El Atrache, R.; Jackson, M.; Schubach, S.; Ufongene, C.; Loddenkemper, T. Machine Learning from Wristband Sensor Data for Wearable, Noninvasive Seizure Forecasting. Epilepsia 2020, 61, 2653–2666.

- Jeppesen, J.; Christensen, J.; Johansen, P.; Beniczky, S. Personalized Seizure Detection Using Logistic Regression Machine Learning Based on Wearable ECG-Monitoring Device. Seizure—Eur. J. Epilepsy 2023, 107, 155–161.

- Mohan S, A.; Akash, P.; Ranjani, M. Sleep Apnea Detection from Single-Lead ECG: A Comprehensive Analysis of Machine Learning and Deep Learning Algorithms. In Proceedings of the 2023 International Conference on Recent Advances in Electrical, Electronics, Ubiquitous Communication, and Computational Intelligence (RAEEUCCI), Chennai, India, 19–21 April 2023; pp. 1–7.

- Kim, D.; Kim, M.; Kwon, J.; Park, Y.-L.; Jo, S. Semi-Supervised Gait Generation With Two Microfluidic Soft Sensors. IEEE Robot. Autom. Lett. 2019, 4, 2501–2507.

- Annabestani, M.; Esmaeili-Dokht, P.; Olyanasab, A.; Orouji, N.; Alipour, Z.; Sayad, M.H.; Rajabi, K.; Mazzolai, B.; Fardmanesh, M. A New 3D, Microfluidic-Oriented, Multi-Functional, and Highly Stretchable Soft Wearable Sensor. Sci. Rep. 2022, 12, 20486.

- Ravenscroft, D.; Prattis, I.; Kandukuri, T.; Samad, Y.A.; Occhipinti, L.G. A Wearable Graphene Strain Gauge Sensor with Haptic Feedback for Silent Communications. In Proceedings of the 2021 IEEE International Conference on Flexible and Printable Sensors and Systems (FLEPS), Manchester, UK, 20–23 June 2021; pp. 1–4.

- Ferrone, A.; Maita, F.; Maiolo, L.; Arquilla, M.; Castiello, A.; Pecora, A.; Jiang, X.; Menon, C.; Ferrone, A.; Colace, L. Wearable Band for Hand Gesture Recognition Based on Strain Sensors. In Proceedings of the 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob), Singapore, 26–29 June 2016; pp. 1319–1322.

- Zeng, X.; Hu, M.; He, P.; Zhao, W.; Dong, S.; Xu, X.; Dai, G.; Sun, J.; Yang, J. Highly Conductive Carbon-Based E-Textile for Gesture Recognition. IEEE Electron Device Lett. 2023, 44, 825–828.

- DelPreto, J.; Hughes, J.; D’Aria, M.; de Fazio, M.; Rus, D. A Wearable Smart Glove and Its Application of Pose and Gesture Detection to Sign Language Classification. IEEE Robot. Autom. Lett. 2022, 7, 10589–10596.

- Marullo, S.; Baldi, T.L.; Paolocci, G.; D’Aurizio, N.; Prattichizzo, D. No Face-Touch: Exploiting Wearable Devices and Machine Learning for Gesture Detection. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 4187–4193.

- Tashakori, A.; Jiang, Z.; Servati, A.; Soltanian, S.; Narayana, H.; Le, K.; Nakayama, C.; Yang, C.; Wang, Z.J.; Eng, J.J.; et al. Capturing Complex Hand Movements and Object Interactions Using Machine Learning-Powered Stretchable Smart Textile Gloves. Nat. Mach. Intell. 2024, 6, 106–118.

- Gholami, M.; Ejupi, A.; Rezaei, A.; Ferrone, A.; Menon, C. Estimation of Knee Joint Angle Using a Fabric-Based Strain Sensor and Machine Learning: A Preliminary Investigation. In Proceedings of the 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (Biorob), Enschede, The Netherlands, 26–29 August 2018; pp. 589–594.

- Stetter, B.J.; Krafft, F.C.; Ringhof, S.; Stein, T.; Sell, S. A Machine Learning and Wearable Sensor Based Approach to Estimate External Knee Flexion and Adduction Moments During Various Locomotion Tasks. Front. Bioeng. Biotechnol. 2020, 8, 9.

- Desai, K.; Mane, P.; Dsilva, M.; Zare, A.; Shingala, P.; Ambawade, D. A Novel Machine Learning Based Wearable Belt For Fall Detection. In Proceedings of the 2020 IEEE International Conference on Computing, Power and Communication Technologies (GUCON), Greater Noida, India, 2–4 October 2020; pp. 502–505.

- Cheon, A.; Jung, S.Y.; Prather, C.; Sarmiento, M.; Wong, K.; Woodbridge, D.M. A Machine Learning Approach to Detecting Low Medication State with Wearable Technologies. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 4252–4255.

- Kirsten, K.; Pfitzner, B.; Löper, L.; Arnrich, B. Sensor-Based Obsessive-Compulsive Disorder Detection With Personalised Federated Learning. In Proceedings of the 2021 20th IEEE International Conference on Machine Learning and Applications (ICMLA), Pasadena, CA, USA, 13–16 December 2021; pp. 333–339.

- Chee, L.Z.; Hwong, H.H.; Sivakumar, S. Diabetes Detection Using Gait Analysis and Machine Learning. In Proceedings of the 2023 International Conference on Digital Applications, Transformation & Economy (ICDATE), Miri, Malaysia, 14–16 July 2023; pp. 1–7.

- Igene, L.; Alim, A.; Imtiaz, M.H.; Schuckers, S. A Machine Learning Model for Early Prediction of Parkinson’s Disease from Wearable Sensors. In Proceedings of the 2023 IEEE 13th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–11 March 2023; pp. 734–737.

- Li, Y.; Yin, J.; Liu, S.; Xue, B.; Shokoohi, C.; Ge, G.; Hu, M.; Li, T.; Tao, X.; Rao, Z.; et al. Learning Hand Kinematics for Parkinson’s Disease Assessment Using a Multimodal Sensor Glove. Adv. Sci. 2023, 10, 2206982.

- Nguyen, X.A.; Gong, S.; Cheng, W.; Chauhan, S. A Stretchable Gold Nanowire Sensor and Its Characterization Using Machine Learning for Motion Tracking. IEEE Sens. J. 2021, 21, 15269–15276.

- Feng, L.; Gui, L.; Yan, Z.; Yu, L.; Yang, C.; Yang, W. Force Calibration and Prediction of Soft Stretch Sensor Based on Deep Learning. In Proceedings of the 2023 International Conference on Advanced Robotics and Mechatronics (ICARM), Sanya, China, 8–10 July 2023; pp. 852–857.

- Giorgino, T.; Quaglini, S.; Lorassi, F.; Rossi, D.D. Experiments in the Detection of Upper Limb Posture through Kinestetic Strain Sensors. In Proceedings of the International Workshop on Wearable and Implantable Body Sensor Networks (BSN’06), Cambridge, MA, USA, 3–5 April 2006; pp. 4–12.

- Liu, Y.; Zhang, S.; Luo, Q. Recognition of Material Surfaces with Smart Gloves Based on Machine Learning. In Proceedings of the 2021 4th World Conference on Mechanical Engineering and Intelligent Manufacturing (WCMEIM), Shanghai, China, 12–14 November 2021; pp. 224–228.

- Li, J.; Jia, H.; Zhou, J.; Huang, X.; Xu, L.; Jia, S.; Gao, Z.; Yao, K.; Li, D.; Zhang, B.; et al. Thin, Soft, Wearable System for Continuous Wireless Monitoring of Artery Blood Pressure. Nat. Commun. 2023, 14, 5009.

- Wang, S.; Liu, B.; Wang, Y.-L.; Hu, Y.; Liu, J.; He, X.-D.; Yuan, J.; Wu, Q. Machine-Learning-Based Human Motion Recognition via Wearable Plastic-Fiber Sensing System. IEEE Internet Things J. 2023, 10, 17893–17904.

- Mani, N.; Haridoss, P.; George, B. Evaluation of a Combined Conductive Fabric-Based Suspender System and Machine Learning Approach for Human Activity Recognition. IEEE Open J. Instrum. Meas. 2023, 2, 2500310.

- Yang, H.; Li, J.; Xiao, X.; Wang, J.; Li, Y.; Li, K.; Li, Z.; Yang, H.; Wang, Q.; Yang, J.; et al. Topographic Design in Wearable MXene Sensors with In-Sensor Machine Learning for Full-Body Avatar Reconstruction. Nat. Commun. 2022, 13, 5311.

- Jiang, X.; Chen, R.; Zhu, H. Recent Progress in Wearable Tactile Sensors Combined with Algorithms Based on Machine Learning and Signal Processing. APL Mater. 2021, 9, 030906.

- Vasdekis, D.; Yfantidou, S.; Efstathiou, S.; Vakali, A. WeMoD: A Machine Learning Approach for Wearable and Mobile Physical Activity Prediction. In Proceedings of the 2022 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops), Pisa, Italy, 21–25 March 2022; pp. 385–390.

- Papaleonidas, A.; Psathas, A.P.; Iliadis, L. High Accuracy Human Activity Recognition Using Machine Learning and Wearable Devices’ Raw Signals. J. Inf. Telecommun. 2022, 6, 237–253.
