Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 2 by Tianlin Li and Version 1 by Tianlin Li.

在弱光条件下检测行人具有挑战性,尤其是在可穿戴平台的背景下。红外摄像机已被用于增强检测能力,而低光摄像机则捕捉行人更复杂的特征。考虑到这一点,我们引入了一个低光行人检测数据集(称为Detecting pedestrians in low-light conditions is challenging, especially in the context of wearable platforms. Infrared cameras have been employed to enhance detection capabilities, whereas low-light cameras capture the more intricate features of pedestrians. With this in mind, we introduce a low-light pedestrian detection (called HRBUST-LLPED),通过使用可穿戴的低光摄像头捕获校园内的行人数据。大部分数据是在星光级照明下收集的。) dataset by capturing pedestrian data on campus using wearable low-light cameras.

- wearable devices

- low-light pedestrian detection dataset

1. 引言

1. Introduction

在过去的二十年中,物联网和人工智能技术取得了重大进步。因此,研究人员将注意力转向开发由可穿戴摄像头、传感器、计算组件和机器学习模型组成的智能可穿戴辅助系统Over the past two decades, there has been a significant advancement in IoT and artificial intelligence technologies. As a result, researchers have turned their attention to developing intelligent wearable assistive systems that are made up of wearable cameras, sensors, computing components, and machine learning models [1]。这导致了旨在帮助各个领域的视障人士的研究的增加,例如旅行. This has led to an increase in studies aimed at assisting visually impaired individuals in various areas, such as travel [2]、食物, food [3]和屏幕检测, and screen detection [4]。其他研究领域包括人与宠物. Other areas of research include human-pet [5]或人与机器 or human-machine [6,7]的交互。可穿戴设备与计算机视觉模型相结合,被用于帮助用户观察通常难以看到的事物。尽管使用可穿戴设备进行物体检测的研究很多,但对场景中的人进行检测的研究仍然有限,因此在夜间监控、消防救援和森林检查等领域应用具有挑战性。 interaction. Wearable devices combined with computer vision models are being used to help users observe things that are typically difficult to see. Despite the numerous studies on object detection using wearable devices, research on detecting humans in a scene is still limited, making it challenging to apply in areas such as nighttime surveillance, fire rescue, and forest inspections.

自Since the maturity of convolutional neural networks in 2012年卷积神经网络成熟以来,目标检测算法经历了蓬勃发展, object detection algorithms have experienced vigorous development [8]。提出了以. Single-stage object detection models represented by SSD [9]和 and YOLO [10]为代表的单阶段目标检测模型,以及以, as well as two-stage object detection models depicted by Faster R-CNN [11]和 and FPN [12], have been proposed, achieving excellent results in terms of speed and accuracy. The maturity of object detection algorithms has also ushered in pedestrian detection algorithms into the era of deep learning. 为代表的两阶段目标检测模型,在速度和精度方面取得了优异的成绩。目标检测算法的成熟也让行人检测算法进入了深度学习时代。为了满足机器学习和深度学习模型的训练数据需求,人们提出了一些常用的行人检测数据集,如In order to fulfill the training data needs of machine learning and deep learning models, some usual pedestrian detection datasets have been proposed, like Caltech [13]和 and KITTI [14]。近年来,使用车载摄像头或监控摄像头从城市、乡村和更广泛的环境中收集了. In recent years, datasets such as CityPersons [15]、, CrowdHuman [16]、, WIDER Pedestrian、, WiderPerson [17]、, EuroCity [18], 和and TJU-Pedestrian [19] 等数据集。这些数据集使经过训练的模型能够适应更广泛的场景。have been collected from cities, the countryside, and broader environments using vehicle-mounted cameras or surveillance cameras. These datasets enable the trained models to adapt to a broader range of scenarios. The EuroCity和 and TJU-Pedestrian数据集还包含低照度条件下的行人数据,旨在实现夜间场景下行人检测模型的良好识别性能。然而,传统相机在弱光条件下难以捕捉到清晰的图像,这极大地影响了数据标注和模型识别性能,如图 datasets also include pedestrian data with low illumination conditions, aiming to achieve good recognition performance in terms of pedestrian detection models in nighttime scenarios. However, conventional cameras struggle to capture clear images under low-light conditions, significantly impacting data annotation and model recognition performance, as shown in Figure 1a 所示。.

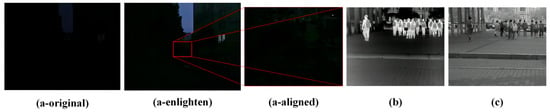

图Figure 1.在星光级照明环境中,可见光相机、红外相机、低照度相机的成像效果如下:(In starlight-level illumination environments, the imaging effects of visible light cameras, infrared cameras, and low-light cameras are as follows: (a-original)表示用手机直接拍摄的图像) represents an image captured directly with a mobile phone;( (a-enlighten)) represents the image enhanced using 表示使用the Zero DCE++ 模型增强的图像;(Amodel; (a-aligned)表示具有相应分辨率的增强版本中的图像。() represents the image in the enhanced version with the corresponding resolution. (b)) represents an image captured with an infrared camera, 表示使用红外相机拍摄的图像,以及 (and (c)) illustrates an image captured with a low-light 表示使用低照度相机拍摄的图像。camera.

人类会散发热量,在较冷的环境下可以使用红外摄像机将其捕获,以将行人与背景区分开来,如图

Humans emit heat, which can be captured using infrared cameras in colder environments to distinguish pedestrians from the background, as shown in Figure 1b. 所示。因此,As a result, OSU [20]提出了一个在白天收集的红外数据集, proposed an infrared dataset collected during the daytime, and TNO [21]也提供了一个结合了夜间捕获的红外和可见光的数据集。后来,随着自动驾驶研究的发展, also provided a dataset that combines infrared and visible light captured at night. Later, with the development of research on autonomous driving, datasets such as CVC-14 [22]、, KAIST [23]和, and FLIR等数据集被引入,这些数据集由使用车载可见光-红外热像仪捕获的行人数据组成,用于模态对齐。随后,引入LLVIP数据集 were introduced, which consist of pedestrian data captured using vehicle-mounted visible light-infrared cameras for modal alignment. Subsequently, the LLVIP dataset [24],推进弱光条件下多光谱融合和行人检测的研究。虽然红外图像可以将个人与背景分开,但它们的成像距离有限,并且包含的细节较少,因此很难区分重叠度高的行人。

采用 was introduced to advance research on multi-spectral fusion and pedestrian detection under low-light conditions. Although infrared images can separate individuals from the background, they have a limited imaging distance and contain less detailed textures, making it difficult to distinguish pedestrians with high overlap.

Low-light cameras with CMOS芯片专门设计用于捕捉长波长光波的低照度相机,可以在星光级照明条件下实现精确成像,如图1c所示。

2. 可穿戴式弱光行人检测基准数据集

2.1. 行人检测数据集

第一个用于行人检测的数据集是麻省理工学院[25],该数据集于2000年提出,其图像分辨率仅为128×64128×64。在随后的几年中,INRIA chips specially designed to capture long-wavelength light waves can achieve [26]、戴姆勒precise [27]imaging 和under TUD-starlight-level illumination conditions, as shown in Figure 1c. By considerussels [28]ing the helpfulness of low-light images for pedestrian detection in low-light environments, we 数据集相继推出,图像分辨率提高到constructed 640×480the 640×480Low-Light 和图像数量不等,从数百到数万不等。手动设计的特征是这些早期数据集的特征。

然而,随着神经网络和深度学习的发展,早期的行人检测数据集太小,无法为模型拟合提供足够的数据。2010年,加州理工学院[13]数据集被提出,该数据集由11个视频组成。训练集包括六个视频,每三帧选择一次,而测试集包括五个视频,每Pedestrian 30Detection 帧选择一次。随后,在2012年,引入了KITTI [14]数据集,该数据集是从车载行车记录仪中收集的。训练集包含7481张图像,测试集包含7518张图像,分辨率为1240×3761240×376。C(HRBUST-LLPED, collected by Harbin University of Science and Technology) dataset in this styPersons[15]源自2017年的Cityscapes[29]数据集,包括训练集udy. The dataset consists of 150 videos captured under low-light conditions, from which 426975张图像、验证集500张图像和测试集1575张图像,分辨率为2048×1024 keyframes were extracted and annotated with 32,148 2048×1024。2018年推出的Cpedestrians. In owdHuman[16]在图像中标注了完整的行人,并标记了每个行人的可见部位和头部。2019rder to meet the requirements of wearable devices, we developed 年的数据集wearable WIDER Plow-light pedestrian 专注于车辆和行人检测,主要从路边监控和车载摄像头中提取图像。WiderPerson[17]于2019年提出,主要关注户外场景而非交通场景下的行人检测,数据主要来自网络来源。Edetection models based on small and nano versions of YOLOv5 and YOLOv8. When considering the fact that the information captured by loCity [18]w-light cameras is relatively limited 数据集于compared 2019 年推出,从欧洲多个城市捕获了各种照明条件下(包括白天和夜间)的行人数据,使其成为第一个全天候行人检测数据集。天津大学于2020年提出的行人检测数据集TJU-Pedestrian [19],涵盖了道路交通和校园两个主要场景,由白天和黑夜的行人数据组成。它是目前最全面的行人检测数据集之一。

上述所有行人检测数据集都是使用可见光相机捕获的。然而,使用传统的可见光摄像机在弱光场景中清晰捕捉行人具有挑战性。2015年,to visible-light cameras, we first trained the models on the KAIST数据集[23]推出了第一个包括红外和可见光模式的行人检测数据集,考虑了可能影响自动化系统性能的昼夜照明变化。TTI, KAIST, 数据集主要提供 12 对完全对齐的可见光和红外视频,其中 6 对用于训练,6 对用于测试。CVC-14数据集[22]于2016年提出,是同时收集可见光和红外图像的数据集,主要针对自动驾驶场景。它由LLVIP, and TJU-Pedestrian datasets separately and then fine-tuned them using our dataset. As a result, our trained models achieved satisfactory results in speed 7085and 张训练图像和accuracy.

Contributions. 1433Our 张测试图像组成,分辨率为 640×512contributions cover 640×512。该数据集的缺点之一是可见光和红外模态之间缺乏完全对齐。2018年推出的FLIR热数据集主要包含9711张红外图像和9233张RGB图像,用于模型训练和验证。它旨在促进FLIR红外热像仪在自动驾驶系统中的使用。LLVIP数据集[24]于2022年提出,构建了一个与可见光和红外图像相对应的数据集。它主要用于图像融合、行人检测和模态传输任务。

2.2. 目标检测

目标检测模型的开发基于Aseveral aspects.

- (1)

-

We have exNpanded the focus of pedestrian detet和VGGNet的成功。早期的目标检测模型可分为两类:两阶段模型和一阶段模型。

两阶段模型的开创性工作是2014年的Rction to low-light images and have constructed a low-CNNlight pedestrian detection [30]模型,这也是第一个基于深度学习的目标检测模型。两阶段目标检测模型的主要思想是通过卷积提取图像特征后,选择可能包含对象的粗略感兴趣区域,然后从对齐的感兴趣区域对特定对象进行分类。随后,Fdataset R-CNN [31]using a low-light camera. The dataset contains denser pedestrian instances compared to existing pedestrian 和detection Fastdatasets.

- (2)

一阶段目标检测模型直接将提取的特征图划分为N×N个区域,以预测每个区域的目标,无需预测感兴趣区域,提高了目标检测模型的速度。该类别中的流行型号是detection YOLOmodels [10]based 和on SSD [9],the YOLOv5 已从and YOLOv1 发展到 YOLOv8。尽管YOLO8 frameworks, considering the lower computational power of wearable platforms when compared to GPUs. We have improved the model’s performance by modifying the activation layer and loss functions.

- (3)

-

We first pretrained our models on four v1isible light pedestrian detection datasets and then fine-tuned them on our constructed [10]通过直接回归边界框的位置来降低计算复杂度,但它在检测密集和小物体方面并不有效。YOHRBUST-LOv2[33]和YOLOv3[34]在此基础上进行了改进,并结合了PED dataset. We achieved a performance of 69.90% in terms of AP@0.5:0.95 and asn inference time of 1.6 ms per image.

2.3. 可穿戴设备上的目标检测

基于数据来源,可穿戴设备目标检测模型的研究大致可分为两类:基于日常生活图像的智能辅助设备和基于thermal stability and high sensitivity of CCD (CMOS) in the video acquisition process of low-light cameras, noise will inevitably be introduced into the video. AR平台的辅助设备。

在基于AR的研究中,Edditionally, sincke the framert等[39]将 rate of the video is 60 Hz and the difference in pedestrian poloLens与YOLOv2相结合,设计了一种辅助系统,为视障人士提供音频导航。Bahri等[40]在Hses between adjacent frames is minimal, we first use a smoothing denoising technique with the neighboring two frames to enhance the current frame. Next, when considering that the pedestrian gaits in the video are usualoLens平台上部署并评估了YOLOv3和Faster R-CNN。Park等[41]利用Mly slow, there is significant redundancy in the pedestrian poses. Therefore, we select one frame every 180 frames (i.e., 3 s per frame) as a keyframe. We then remove frames that do not contain pedestrian targets and search for clear frames within a range of ±7 frames of the blurred frames to update the keyframes. Ultimately, we obtained 4269 frames as the image data for consk R-CNN模型在AR设备中实现对象分割,并评价了其在智能制造中物品匹配、检测和维护中的应用。Park等[6]采用Rtructing our low-light pedestrian detectinaNet进行目标检测,并以on dataset.

Data AR技术为平台,为协作机器人提供智能辅助。Dnnotatimitropoulos等[7]利用AR和深度学习进行可穿戴设备识别,通过语音或手势实现人类操作员和机器人之间的交互。

在基于日常生活图像的研究中,Hon: We used the labelImg tool to annotate the processed image data manually. For each person present in the image with less than 90% occlusion (i.e., except for cases where only a tin等[3]使用Fy portion of the lower leg or arm ister R-CNN进行食物物体检测,将其作为可穿戴设备在眼镜中实现,并在估计咀嚼动作次数的同时自动捕捉就餐场景的图像。Kim等[5]利用可穿戴设备识别和预测狗的行为,并评估了F visible), we labeled them as “Pedestrian”. We cross-referenced the uncertain pedestrian annotationster R-CNN和YOLOv3/v4的性能。Li等[4]采用可穿戴传感器和YOLOv5模型检测电子屏幕,评估电子屏幕的使用时间。A with the original videos to avoid missing pedestrifando等[42]提出了一种基于YOLOs due to v5的轻量级高精度公交车检测模型,帮助视障人士登上正确的公交车并在正确的公交车站下车。Misual reasons or mistaya-Martínez等[43]介绍了一种基于Tkenly labeling trees as pedestrians. As a result, we obtained a total of 32,148 pedestriany YOLOv3的行人检测架构,该架构在低成本可穿戴设备中实现,作为辅助视障人士的替代解决方案。尽管这些研究为使用可穿戴设备检测行人提供了良好的基础,但它们并未考虑夜间弱光下的行人检测。 labels.