
Please note this is a comparison between Version 1 by Amira Guesmi and Version 2 by Lindsay Dong.

Vision-based perception modules are increasingly deployed in many applications, especially autonomous vehicles and intelligent robots. These modules acquire information about the surroundings and identify obstacles, so accurate detection and classification are essential for making appropriate decisions and taking safe actions at all times. Adversarial attacks on these modules can be categorized into digital and physical attacks.

- adversarial machine learning

- physical adversarial attack

- digital adversarial attack

- security

- autonomous vehicles

- camera-based vision systems

- classification

- intelligent robots

1. Introduction

The emergence of deep learning (DL) has had a profound impact across diverse sectors, particularly autonomous driving [1]. Prominent players in the automotive industry, such as Google, Audi, BMW, and Tesla, are combining this technology with cost-effective cameras to develop autonomous vehicles (AVs). These AVs are equipped with vision-based perception modules that allow them to navigate real-life scenarios, even under high-pressure circumstances, make informed decisions, and execute safe and appropriate actions.

Consequently, the demand for autonomous vehicles has soared, leading to substantial growth in the AV market. Strategic Market Research (SMR) predicts that the autonomous vehicle market will achieve an astonishing valuation of $196.97 billion by 2030, showcasing an impressive compound annual growth rate (CAGR) of 25.7% (ACMS). The integration of DL-powered vision-based perception modules has undeniably accelerated the progress of autonomous driving technology, heralding a transformative era in the automotive industry. With the increasing prevalence of AVs, their potential impact on road safety, transportation efficiency, and overall user experience remains a subject of great interest to consumers, researchers, and investors alike.

However, despite the significant advancements in deep learning models, they are not immune to adversarial attacks, which can pose serious threats to their integrity and reliability. Adversarial attacks manipulate the input of a deep learning classifier by introducing carefully crafted perturbations, chosen by a malicious actor, that force the classifier to produce incorrect outputs. Attackers can exploit such vulnerabilities to compromise the security and integrity of the system, potentially endangering the safety of individuals interacting with it. For instance, a malicious actor could add adversarial noise to a stop sign, causing an autonomous vehicle to misclassify it as a speed limit sign [2,3]. This kind of misclassification could lead to dangerous consequences, including accidents and loss of life. Notably, adversarial examples have been shown to be effective in real-world conditions [4]. Even when printed out, an image specifically crafted to be adversarial can retain its adversarial properties under different lighting conditions and orientations.
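To make the idea of a crafted perturbation concrete, the following is a minimal sketch on a toy linear classifier. It is an illustration only: the weights, bias, input, and the function names `predict` and `sign_gradient_attack` are hypothetical, and the gradient-sign step used here is a generic technique chosen for simplicity, not a method attributed to the works cited above.

```python
def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def predict(weights, bias, x):
    """Toy linear classifier: class 1 if the score is positive, else class 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def sign_gradient_attack(weights, bias, x, epsilon):
    """Shift each feature by exactly epsilon against the current prediction.

    For a linear model the gradient of the score with respect to the input
    is the weight vector, so sign(w) gives the per-feature direction.
    """
    label = predict(weights, bias, x)
    direction = -1 if label == 1 else 1  # push the score toward the other class
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input, chosen only for illustration.
weights = [0.5, -0.25, 0.75]
bias = -0.1
x = [0.4, 0.6, 0.2]

x_adv = sign_gradient_attack(weights, bias, x, epsilon=0.1)
print(predict(weights, bias, x), predict(weights, bias, x_adv))
```

In this toy setting, a change of at most 0.1 per feature is enough to flip the predicted class, which mirrors how a small, deliberately chosen perturbation can change a classifier's output.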

Therefore, it becomes crucial to understand and mitigate these adversarial attacks to ensure the development of safe and trustworthy intelligent systems. Taking measures to defend against such attacks is imperative for maintaining the reliability and security of deep learning models, particularly in critical applications such as autonomous vehicles, robotics, and other intelligent systems that interact with people.

Adversarial attacks can broadly be categorized into two types, digital attacks and physical attacks, distinguished by where the perturbation is introduced [3,4,5]. In a digital attack, the adversary adds imperceptible perturbations to the digital input image, specifically tailored to deceive a given deep neural network (DNN) model. These perturbations are optimized to remain unnoticed by human observers: during generation, the attacker works within a predefined noise budget, so that the perturbations do not exceed a certain magnitude. In contrast, physical attacks craft adversarial perturbations that can be transferred to the physical world and deployed in the scene captured by the victim DNN model. Unlike digital attacks, physical attacks are not bound by noise magnitude constraints. Instead, they are primarily constrained by location and printability, aiming to generate perturbations that can be effectively printed and placed in real-world settings without arousing suspicion.
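The difference between the two constraint types can be sketched as follows. This is a simplified illustration under stated assumptions: images are small grayscale grids with pixel values in [0, 1], the noise budget is modeled as a per-pixel bound `epsilon`, and the physical attack is modeled as a rectangular patch at a fixed location. The function names are hypothetical, and printability is not modeled.

```python
def clip(value, low, high):
    """Clamp value to the closed interval [low, high]."""
    return max(low, min(high, value))


def apply_digital_perturbation(image, delta, epsilon):
    """Digital attack: every pixel may change, but by at most epsilon."""
    return [
        [clip(p + clip(d, -epsilon, epsilon), 0.0, 1.0)
         for p, d in zip(image_row, delta_row)]
        for image_row, delta_row in zip(image, delta)
    ]


def apply_physical_patch(image, patch, top, left):
    """Physical attack: pixel changes are unbounded in magnitude but
    confined to the region covered by the patch."""
    patched = [row[:] for row in image]  # copy so the input is not modified
    for r, patch_row in enumerate(patch):
        for c, value in enumerate(patch_row):
            patched[top + r][left + c] = clip(value, 0.0, 1.0)
    return patched


# Hypothetical 4x4 gray image, a large requested perturbation, and a 2x2 patch.
image = [[0.5] * 4 for _ in range(4)]
delta = [[0.3] * 4 for _ in range(4)]
digital = apply_digital_perturbation(image, delta, epsilon=0.05)

patch = [[0.0, 1.0], [1.0, 0.0]]
physical = apply_physical_patch(image, patch, top=1, left=1)
```

In the digital case the requested change of 0.3 is cut down to the budget of 0.05 on every pixel, whereas the patch changes only four pixels but by as much as 0.5 each.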

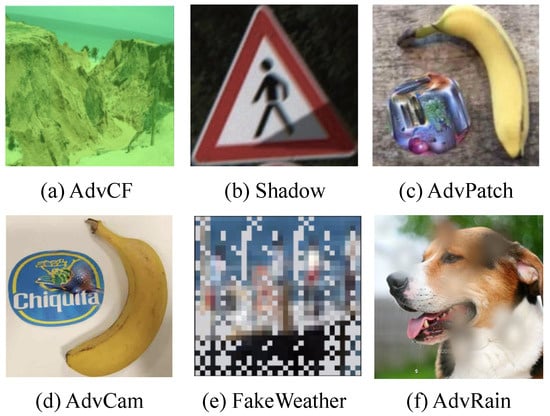

For an adversarial attack to be relevant in real-world scenarios, it must remain inconspicuous, appearing common and plausible rather than overtly hostile. Many previous works on adversarial patches for image classification have focused mainly on maximizing attack performance and enhancing the strength of the adversarial noise. However, this approach often results in conspicuous patches that are easily recognized by human observers. Another line of research has aimed to improve the stealthiness of the added perturbations by making them blend into natural styles that appear legitimate to human observers. Examples include camouflaging the perturbations as color films [6], shadows [7], or laser beams [8], among others.

Figure 1 provides a visual comparison of AdvRain with existing physical attacks. While all the adversarial examples in Figure 1 successfully attack deep neural networks (DNNs), AdvRain stands out in generating adversarial perturbations with natural blurring marks that emulate the effect of actual rain, unlike the conspicuous pattern generated by AdvPatch or the unrealistic patterns generated by FakeWeather [9]. Such perturbations blend in with the surrounding environment, making them difficult for human observers to detect.

Figure 1. AdvRain vs. existing physical-world attacks: (a) AdvCF [7], (b) Shadow [6], (c) AdvPatch [10], (d) AdvCam [8], (e) FakeWeather [9], and (f) AdvRain.

2. Adversarial Attacks in Camera-Based Vision Systems

Environment perception has emerged as a crucial application, driving significant efforts in both the industry and research community. The focus on developing powerful deep learning (DL)-based solutions has been particularly evident in applications such as autonomous robots and intelligent transportation systems. Designing reliable recognition systems is among the primary challenges in achieving robust environment perception. In this context, automotive cameras play a pivotal role, along with associated perception models such as object detectors and image classifiers, forming the foundation for vision-based perception modules. These modules are instrumental in gathering essential information about the environment, aiding autonomous vehicles (AVs) in making critical decisions for safe driving.

These recognition systems must be accurate and reliable for AVs to operate safely and to be integrated successfully into real-world scenarios. However, it has been demonstrated that they are susceptible to adversarial attacks, which can undermine their integrity and pose risks to AVs and their passengers. Addressing the vulnerabilities of DL-based environment perception systems to adversarial attacks is therefore paramount. Research and development efforts must focus on building robust defense mechanisms that harden these systems against such threats, enabling the safe and trustworthy deployment of AVs and intelligent transportation systems.