Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 2 by Lindsay Dong and Version 1 by Lingjun Zhang.

Global photographic aesthetic image generation aims to ensure that images generated by generative adversarial networks (GANs) contain semantic information and have global aesthetic feelings. Existing image aesthetic generation algorithms are still in the exploratory stage, and images screened or generated by a computer have not yet achieved relatively ideal aesthetic quality.

- GANs

- global photographic aesthetic

- image generation

- generative adversarial network

1. Introduction

Aesthetic image generation is widely used and has penetrated every aspect of human life. For example, in print advertising design with pictures, the image is required to be clear, real, and have a high degree of beauty to attract attention. Generative adversarial networks (GANs) are widely used in image generation and have developed rapidly in recent years; several high-quality derived models based on GANs have been proposed. These generative models have made great breakthroughs in the fields of image generation such as indoor and outdoor scenes, animals, and flowers. However, the images generated by adversarial networks often focus more on the reconstruction of the image content, without any consideration of aesthetic factors. The resulting images lack the aesthetic feeling recognized by the public, which leads to their application scenarios being limited by their aesthetic quality.

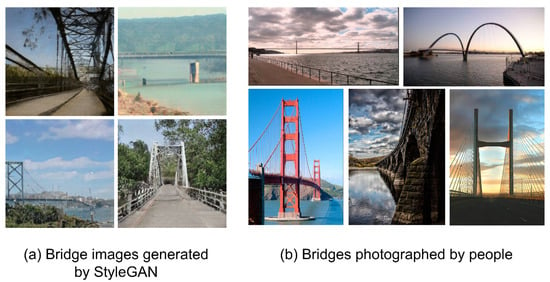

From the perspective of image aesthetics, a photographic image can be divided into two parts: specific content and aesthetic presentation [1]. The generation effect of existing generative models on image content has reached the level of mixing the spurious with genuine and its aesthetic presentation is not satisfactory. Figure 1 shows a bridge image generated by the StyleGAN generation model and one taken by human beings. The image generated by GANs and its semantic expression are very accurate. It is almost impossible to distinguish the true from the false image with the human eye. However, compared with the bridge images taken by humans, there is a significant gap in its aesthetic quality. When human beings select images, they often screen from massive images according to the aesthetic sense of the images. Although the existing image generation models have been able to produce highly realistic images in fixed scenes and in large quantities, there are still no extensive application scenarios due to the uneven aesthetic quality of the generated images. Therefore, the image generation of GANs should not only satisfy high-quality content reconstruction, but also pursue a better visual perception.

Figure 1.

A comparison of the generated images and the images taken artificially.

GANs perform excellently in unconditional generation tasks, image processing, face editing, and other conditional generation tasks. However, their application in aesthetic image generation is still in the exploration stage and the existing global aesthetic image generation algorithm has the following problems:

-

GAN models need to be redesigned and trained. Training GANs requires constructing appropriate loss function, evaluation indicators, and constraints to ensure the stability and effectiveness of the training, which is extremely difficult and resource-consuming. Compared with color, object, artistic style, and other attributes, the aesthetics of the image have strong uncertainty and subjectivity, and there is no basis for completely qualitative aesthetics; therefore; it is difficult to design and add appropriate aesthetic constraints for GANs to generate images with a better aesthetic effect.

-

The current datasets used to train the aesthetic conditions of GANs are small, and the quality of the generated image content semantics is poor. The aesthetic condition training set of the GANs must meet the requirements of both semantic and aesthetic labels, and no large-scale dataset currently meets this requirement. Even with pre-training on larger datasets, the image semantics are less effective compared to those of the existing GANs trained on large-scale datasets.

2. Aesthetic Image Generation Based on Generative Adversarial Networks

2.1. Aesthetic Prediction Model

The evaluation of the aesthetic quality of images occupies an important position in computer vision. At present, some achievements have been made in the technology of image quality prediction [2]. A framework for using convolutional neural networks (CNNs) to predict the continuous aesthetic score of images is proposed, which can effectively evaluate the degree of aesthetic quality similar to the human system [3]. An image aesthetic prediction method based on weighted CNNs is proposed using a histogram prediction model to predict aesthetic scores, and to estimate the difficulty of aesthetic evaluation of the input images. A probabilistic quality representation method for deep blind image quality prediction proposed by Zeng et al. [4] retrains AlexNet and ResNetcnn to predict photo quality. Recently, a new multi-model recurrent attention convolutional neural network [5] was proposed, which consists of two streams: visual flow and language flow. The former uses a recurrent attention network to eliminate irrelevant information and focus on extracting visual features in some key areas. The latter uses Text-CNN to capture the high-level semantics of user comments. Finally, the multimodel decomposition bilinear pooling method is used to effectively integrate text features and visual features.

2.2. Latent Space of GANs

A latent space is a space of a compressed representation of the data. The input variable z of GANs is unstructured, so it is proposed to decompose the latent variable into a conditional variable c and the standard input latent variable z. The decomposition of the latent space specifically includes both supervised methods and unsupervised methods. Typical supervised methods are CGAN [7][6] and ACGAN [8][7]. Recently, a latent space using supervised learning for GANs, which discovers more latent space about GAN by encoding the human future knowledge, was proposed [9][8]. Unsupervised methods do not use any label information and require disentanglement of the latent space to obtain meaningful feature representations. The disentangled representation of GANs is the process of separating the feature representation of the individual generating factors from the latent space. The disentangled representation can separate the explanatory factors of nonlinear interactions in real data, such as object shape, material properties, and light sources. The separation of properties can help researchers to manipulate GAN generation more intuitively. For the study of decoupled representation learning, Lee et al. [10][9] proposed an information distillation generation adversarial network, which learns separated representations based on vaa models and extracts the learned representations and additional interference variables into separate GAN-based generators for high-fidelity synthesis. In InterfaceGAN [11][10], the framework explains disentangled face representations learned by state-of-the-art GANs and deeply analyzes the properties of face semantics in latent space, detail the correlation between different semantics, and better disentangle them through subspace projections to provide more precise control over attribute manipulation.2.3. Aesthetic Image Generation

Aesthetic image generation refers to the generation of an image with aesthetic factors, so that the image has higher quality and is more in line with human aesthetics. Some traditional methods to improve the aesthetic quality of images, such as the super resolution reconstruction proposed by Li et al. [13][11], are to use the original image information to restore the super resolution image with clearer details and stronger authenticity. There is also an image repair algorithm proposed by them [14][12], which performs well in the task of repairing irregular mask images, and the repair results have good performance in the aspects of edge consistency, semantic correctness, and overall image structure. These methods are closely related to improving image quality. To improve the quality of an image generated by GANs, some researchers studied the aesthetic image generation algorithm based on the conditional generation adversarial network, and attempted to design aesthetic losses and aesthetic constraints to train the aesthetic condition GANs, so that the generated images are both semantic and aesthetic [15][13]. Murray et al. proposed PFAGAN [16][14], a conditional GAN with aesthetics and semantics as dual labels, which combines the conditional aesthetic information and conditional semantic information as the training constraints, enabling the generative model to learn the content semantics and image beauty. Zhang et al. proposed a modified aesthetic condition GAN based on unsupervised representation learning with deep convolutional generative adversarial networks [17][15], where the network was trained with a batch size set to 256 for a total of 10,000 rounds of training iterations. There are still some problems in the existing methods of aesthetic image generation. The training of PFAGAN, which was proposed by Murray et al., is extremely time-consuming: it was trained for 40 h on two Nvidia V100 graphics cards with a batch size of 256. The aesthetic condition GAN proposed by Zhang et al. requires training on datasets with both semantic and aesthetic labels, and there are no large-scale datasets that meet these requirements. Therefore, the semantic quality of the image generated by GAN under the existing aesthetic condition is greatly reduced, and its aesthetic improvement is also rather limited. In other words, the existing global aesthetic image generation algorithm cannot make use of the high-quality construction ability of the existing generated model, and it is difficult to learn and generate aesthetic images in small-scale datasets.2.4. Databases

There are many datasets used for image aesthetic research, and different datasets can be studied based on different aesthetic tasks. ImageNet [18][16] is a large visualization database for visual object recognition software research, which has over 14,000,000 images, over 20,000 categories, and more than 1,000,000 images with explicit category annotation and object position annotation. This dataset remains one of the most commonly used datasets for image classification, detection, and localization in the deep learning field. CelebA [19][17] is a large-scale face attributes dataset consisting of 200,000 celebrity images and every image has 40 attribute annotations. CelebA and its associated CelebA-HQ [20][18], CelebAMask-HQ [21][19], and CelebA-Spoof [21][19] are all widely used in the generation and manipulation of face images. LSUN [22][20] is a scene-understanding image dataset, which mainly contains 10 scene categories such as bedroom, living room, church, and 20 object categories such as birds, cats, and buses, with a total of about 1 million labeled images. AVA [23][21] is a dataset for aesthetic quality evaluation that contains 250,000 images, and every image has a series of ratings as well as 60 classes of semantic-level labels. The dataset also contains 14 categories of photographic styles such as complementary colors, duotones, and light on white, etc.References

- Jin, X.; Zhou, B.; Zou, D.; Li, X.; Sun, H.; Wu, L. Image aesthetic quality assessment: A survey. Sci. Technol. Rev. 2018, 36, 10.

- Kao, Y.; Wang, C.; Huang, K. Visual aesthetic quality assessment with a regression model. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 1–27 September 2015; IEEE: Quebec City, QC, Canada, 2015; pp. 1583–1587.

- Jin, B.; Segovia, M.V.O.; Süsstrunk, S. Image aesthetic predictors based on weighted CNNs. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), lPhoenix, AZ, USA, 25–28 September 2016; IEEE: Phoenix, AZ, USA, 2016; pp. 2291–2295.

- Zeng, H.; Zhang, L.; Bovik, A.C. A probabilistic quality representation approach to deep blind image quality prediction. arXiv 2017, arXiv:1708.08190.

- Zhang, X.; Gao, X.; Lu, W.; He, L.; Li, J. Beyond vision: A multimodal recurrent attention convolutional neural network for unified image aesthetic prediction tasks. IEEE Trans. Multimed. 2021, 23, 611–623.

- Zaltron, N.; Zurlo, L.; Risi, S. Cg-gan: An interactive evolutionary gan-based approach for facial composite generation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; AAAI Press: Palo Alto, CA, USA, 2020; pp. 2544–2551.

- Odena, A.; Olah, C.; Shlens, J. Conditional image synthesis with auxiliary classifier gans. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2642–2651.

- Van, T.P.; lNguyen, T.M.; Tran, N.N.; Nguyen, H.V.; Doan, L.B.; Dao, H.Q.; Minh, T.T. Interpreting the latent space of generative adversarial networks using supervised learning. In Proceedings of the 2020 International Conference on Advanced Computing and Applications (ACOMP),l Quy Nhon, Vietnam, 25–27 November 2020; pp. 49–54.

- Lee, W.; Kim, D.; Hong, S.; Lee, H. High-fidelity synthesis with disentangled representation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 157–174.

- Shen, Y.; Yang, C.; Tang, X.; Zhou, B. Interfacegan: Interpreting the disentangled face representation learned by gans. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2004–2018.

- Li, H.; Wang, D.; Zhang, J.; Li, Z.; Ma, T. Image super-resolution reconstruction based on multi-scale dual-attention. Connect. Sci. 2023, 1–19.

- Li, H.-A.; Hu, L.; Zhang, J. Irregular mask image inpainting based on progressive generative adversarial networks. Imaging Sci. J. 2023, 71, 299–312.

- Brock, A.; Donahue, J.; Simonyan, K. Large scale gan training for high fidelity natural image synthesis. arXiv 2018, arXiv:1809.11096.

- Murray, N. Pfagan: An aesthetics-conditional gan for generating photographic fine art. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 3333–3341.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, F.F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2009), Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep learning face attributes in the wild. In Proceedings of the International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3730–3738.

- Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive growing of gans for improved quality, stability, and variation. arXiv 2017, arXiv:1710.10196.

- Lee, C.-H.; Liu, Z.; Wu, L.; Luo, P. Maskgan: Towards diverse and interactive facial image manipulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognitione, Seattle, WA, USA, 14–19 June 2020; pp. 5549–5558.

- Yu, F.; Zhang, Y.; Song, S.; Seff, A.; Xiao, J. Lsun: Construction of a large-scale image dataset using deep learning with humans in the loop. arXiv 2015, arXiv:1506.03365.

- Murray, N.; Marchesotti, L.; Perronnin, F. Ava: A large-scale database for aesthetic visual analysis. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, lDaejeon, Republic of Korea, 5–6 November 2012; pp. 2408–2415.

More