
Please note this is a comparison between Version 1 by Panagiotis Gkonis and Version 2 by Rita Xu.

The rapid growth in the number of interconnected devices on the Internet (referred to as the Internet of Things—IoT), along with the huge volume of data that are exchanged and processed, has created a new landscape in network design and operation. Due to the limited battery size and computational capabilities of IoT nodes, data processing usually takes place on external devices.

- IoT

- cloud-based operating systems

- edge computing

- machine learning

1. Introduction

The unstoppable proliferation of novel computing and sensing device technologies and the ever-growing demand for data-intensive applications at the edge and in the cloud are driving the next wave of transformation in computing systems architecture [1][2]. In the same context, a vast number of devices can now collect, process, and transmit data to other devices and systems over the Internet or other communication networks. This concept, known as the Internet of Things (IoT), enables the collection of data from diverse sources in the physical world [3]. Leveraging it, many advanced human-centric services and applications can be widely deployed, such as energy management in smart homes, remote health monitoring, and intelligent transportation [4].

Since most data are created at the edge, while computationally intensive processing usually takes place in centralized cloud infrastructures, a flexible interconnection of all involved entities is required to bring the edge as close to the cloud as possible and vice versa. Together with the cloud, edge computing is pushing beyond the limits of traditional centralized cloud solutions, enabling, among other features, efficient data processing and storage as well as low latency for service execution. In this context, multi-access edge computing (MEC), formerly mobile edge computing, is an architectural concept that brings cloud computing capabilities and an IT service environment to the edge of any network [5][6]. Located in close proximity to end users and connected IoT devices, MEC provides very low latency and high bandwidth while still allowing applications to leverage cloud capabilities when necessary. The resulting paradigm shift in computing is centered on the dynamic, intelligent, and seamless interconnection of IoT devices, edge, and cloud resources into one computing system, known as a continuum [7][8]. The goal of this synergy is the provision of advanced services and applications to end users, and it is supported by parallel advances in networking, such as network function virtualization (NFV) [9][10], which decouples network functions from specific hardware equipment, and software-defined networking (SDN) [11], which enables a holistic and intelligent approach to network management.

A continuum, today also referred to as cloud, IoT, edge-to-cloud, or fog-to-cloud continuum, is expected to provide high computational capabilities for data processing at both the edge and the cloud, while inferring and persisting important information for post-mortem and offline analysis. The full deployment of such a continuum is expected to support latency-critical applications via dynamic task offloading among IoT nodes and edge or cloud servers. Moreover, data collected directly from all entities of the continuum can be used to derive optimal resource allocation and scheduling policies [12]. However, this new architectural approach is associated with many technical challenges:

- Unlike centrally managed clouds, massively heterogeneous systems in the continuum (including IoT devices, edge devices, and cloud infrastructures) are significantly more complex to manage. Furthermore, distributed data management adds another level of complexity, as it involves classifying data infrastructures, collecting vast and diverse data volumes, providing transparent data access methods, optimizing the internal data flow, and effectively preserving data collections [13].

- Because of the heterogeneity of the involved devices and associated technologies, hardware- and technology-agnostic protocols are important, not only to manage a large number of interconnected entities but also to enable scalability, which is a key concept in the IoT-edge-cloud (IEC) continuum.

- The continuum needs to be managed effectively to meet application demands optimally during service execution, taking into account multiple constraints, such as the location of the involved nodes (edge or IoT), their transmission and processing capabilities, and their energy footprint. Optimal resource allocation in multi-node heterogeneous environments might lead to highly non-convex problems. In this context, machine learning (ML) algorithms have emerged as a promising approach for solving such optimization problems with near-optimal solutions [14][15]. In traditional centralized ML approaches, all collected data are sent to a high-performance computing node for model training and inference. However, on the one hand, collecting heterogeneous data from all nodes of the continuum might increase the pre-processing load, and on the other hand, centralized ML training might jeopardize the latency requirements of critical applications.

- Due to the distributed and dynamic nature of the continuum, with many devices of different ownership and provenance, ensuring reliability and trustworthiness becomes fundamentally challenging. Secure mechanisms for accessing the distributed nodes, preserving data privacy, and providing open and transparent operation are fundamental to enhancing trustworthiness [16][17].

- As previously mentioned, the continuum brings together a broad and diverse space of heterogeneous devices and protocols. Although several standards, open-source projects, and foundations focus on global communication and management protocols, the envisioned continuum must also consider that some constrained devices will not support any specific tool. Therefore, contributing to an open ecosystem favors interoperability with existing and emerging frameworks, a key challenge for next-generation broadband wireless networks [18].

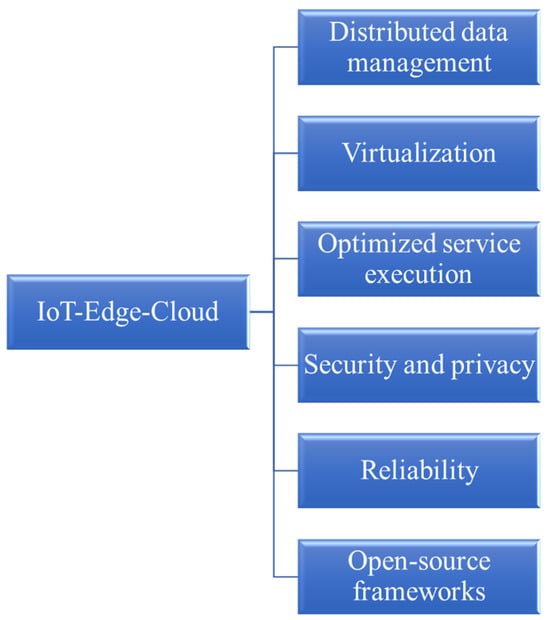

As is apparent from the above, the optimal design of an IEC infrastructure should address various technical challenges, such as: (i) distributed data management; (ii) continuum infrastructure virtualization and diverse network connectivity; (iii) optimized and scalable service execution and performance; (iv) guaranteed trust, security, and privacy; (v) reliability and trustworthiness; and (vi) support of scalability, extensibility, and adoption of open-source frameworks [19]. These challenges are also depicted in Figure 1. The envisioned impact of edge computing on a wide range of potential use cases, from smart environmental monitoring to advanced fifth-generation (5G) applications (such as e-health, autonomous driving, and smart manufacturing), has fueled several initiatives aimed at addressing the challenges posed by the full deployment of an IEC continuum. These challenges become even more important as discussions on sixth-generation (6G) networks are already under way [20][21].

Figure 1. Challenges in the IoT-edge-cloud continuum.

2. Supporting Technologies in the IoT-Edge-Cloud Continuum

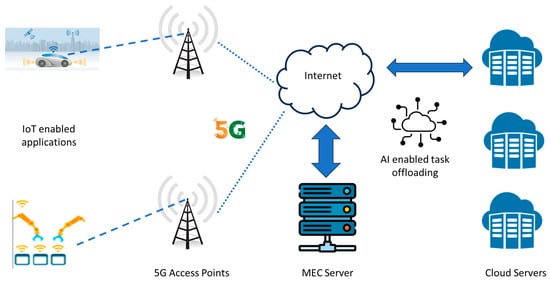

The overall architecture of an IEC system is shown in Figure 2 (optional communication with a 5G network is included as well). As can be observed, IoT nodes from different operational scenarios may communicate with 5G access points (APs) via either public or private networks. The latter option can be more appealing in latency-demanding applications, such as smart manufacturing, since all network operations can be established within the premises of interest [22][23]. Inter-node communication can also be supported, based on well-known communication protocols such as Sigfox, LoRa, or narrowband IoT (NB-IoT) [24]. MEC servers are assumed to be either collocated with APs or deployed in close proximity to them.

Figure 2. An IoT-edge-cloud operating system.

IoT nodes, which are assumed to have sensing and transmitting capabilities, can offload a particular task to the MEC server, either in latency-demanding applications or in cases of extreme computational load. Offloading may also take place to the cloud domain if necessary. In all cases, optimal task offloading should take into account additional parameters that directly affect system performance, such as the energy footprint and computational capabilities of the involved servers. Therefore, as depicted in Figure 2, efficient ML algorithms can be used for resource optimization and task offloading. Moreover, it is essential to identify trusted IoT nodes and to secure task offloading and data transfer procedures. Hence, task offloading may also include the execution of advanced security protocols that are not always feasible on resource-constrained IoT nodes. Finally, in scenarios with stringent latency requirements (e.g., autonomous driving over advanced 5G infrastructure [25]), the involved IoT devices should be able to operate autonomously, support network functionalities via NFV, and ensure uninterrupted connectivity.
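
To make this trade-off concrete, the following Python sketch picks an execution target (local, MEC, or cloud) for a single task. It first discards targets that miss the latency deadline, then minimizes a weighted sum of latency and IoT-side energy. This is a simple rule-based baseline, not the ML-based optimization mentioned above. The cost model is an illustrative assumption not taken from the text: upload delay plus computation delay, a κf² energy-per-cycle model for local execution, and all numeric parameters such as `KAPPA` and `TX_POWER_W`.

```python
from dataclasses import dataclass
from typing import List, Optional

# Illustrative constants (assumptions, not values from the text)
KAPPA = 1e-27     # effective switched capacitance of the IoT CPU
TX_POWER_W = 0.1  # IoT radio transmit power in watts


@dataclass
class Task:
    cycles: float      # CPU cycles needed to execute the task
    data_bits: float   # input data uploaded if the task is offloaded
    deadline_s: float  # latency budget of the application


@dataclass
class Target:
    name: str
    cpu_hz: float                # processing speed available to the task
    uplink_bps: Optional[float]  # None means local execution (no upload)


def latency(task: Task, target: Target) -> float:
    """Upload delay (if offloaded) plus computation delay."""
    upload = 0.0 if target.uplink_bps is None else task.data_bits / target.uplink_bps
    return upload + task.cycles / target.cpu_hz


def iot_energy(task: Task, target: Target, local_cpu_hz: float) -> float:
    """Energy spent by the IoT node: computing locally or transmitting."""
    if target.uplink_bps is None:
        return KAPPA * local_cpu_hz ** 2 * task.cycles
    return TX_POWER_W * task.data_bits / target.uplink_bps


def choose_target(task: Task, targets: List[Target], local_cpu_hz: float,
                  w_latency: float = 0.5) -> Optional[Target]:
    """Return the deadline-feasible target with the lowest weighted cost."""
    feasible = [t for t in targets if latency(task, t) <= task.deadline_s]
    if not feasible:
        return None  # no target meets the deadline
    return min(feasible, key=lambda t: w_latency * latency(task, t)
               + (1 - w_latency) * iot_energy(task, t, local_cpu_hz))


local = Target("local", cpu_hz=1e8, uplink_bps=None)
mec = Target("mec", cpu_hz=1e10, uplink_bps=1e7)
cloud = Target("cloud", cpu_hz=1e11, uplink_bps=2e6)

heavy = Task(cycles=1e9, data_bits=1e6, deadline_s=0.5)
print(choose_target(heavy, [local, mec, cloud], local.cpu_hz).name)  # mec
```

In this example only the MEC server meets the 0.5 s deadline. Local execution takes 10 s, and the cloud's slower uplink pushes its total latency to 0.51 s. The weighted sum mixes seconds and joules, so `w_latency` would have to be tuned for each deployment.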

In light of the above, the key enabling technologies for an efficient IEC deployment include: distributed/decentralized ML approaches for efficient resource optimization; serverless computing to leverage software and hardware decoupling; blockchain technology to secure data transfer among the nodes of the continuum and to identify trusted nodes; subnetworks for the provision of uninterrupted connectivity; and device-to-device (D2D) communications for inter-node data transfer and, if necessary, content caching.

2.1. Distributed and Decentralized Machine Learning

As mentioned in the Introduction, over the last decade ML algorithms have emerged as a potential solution to relax the computational burden of traditional optimization approaches and to provide near-optimal solutions to highly non-convex problems [26]. In centralized ML approaches, data collected directly from different network devices (e.g., mobile terminals, access points, IoT devices, and edge servers) are sent to a high-performance computing server for model training. Afterwards, the trained model is distributed to the involved devices for inference, if necessary.

However, this approach has several disadvantages, especially in the modern era of IEC systems: (i) centralized data collection might lead to a high computational load, especially for a large number of involved devices and associated datasets, and it creates a single point of failure; (ii) since the vision of the IEC continuum involves multiple connected heterogeneous devices over diverse infrastructures, data preprocessing is necessary prior to the actual training of the ML model, which might increase the overall training time and deteriorate system latency; (iii) frequent transmission of data from IoT devices to centralized servers might raise security and privacy concerns, since not all IoT devices have the processing power to execute advanced security protocols; and (iv) computationally demanding ML training might increase the energy footprint of the involved devices.

Distributed ML approaches can reduce the centralized computational burden, either by parallelizing the model training or by efficiently distributing the training data [27][28]. The first case, known as model parallelism, enables different parts of the model to be trained on different devices (e.g., certain layers of a neural network (NN), or certain neurons per layer, are trained on each device). In the second case, known as data parallelism, each ML node is trained on a subset of the training data, and model aggregation takes place afterwards. Although both approaches can reduce training times and relax the computational burden, training data are unavoidably still offloaded to other nodes. Consequently, their deployment in privacy-critical applications might be questionable.
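
The data-parallel case can be illustrated with a minimal pure-Python sketch. It assumes a one-dimensional linear model trained with full-batch gradient descent on a squared-error loss. Each shard's gradient would be computed on a separate device. Summing the per-shard gradients and dividing by the total number of samples reproduces exactly the gradient of the full dataset. The helper names (`make_shards`, `shard_gradient`, `data_parallel_step`) are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def make_shards(data: List[Sample], k: int) -> List[List[Sample]]:
    """Split the training set into k disjoint shards (round-robin)."""
    return [data[i::k] for i in range(k)]


def shard_gradient(w: float, b: float, shard: List[Sample]) -> Tuple[float, float]:
    """Sum of squared-error gradients over one shard (computed on one device)."""
    gw = gb = 0.0
    for x, y in shard:
        err = (w * x + b) - y
        gw += 2 * err * x
        gb += 2 * err
    return gw, gb


def data_parallel_step(w: float, b: float, shards: List[List[Sample]],
                       lr: float) -> Tuple[float, float]:
    """One synchronous step: gather shard gradients, average, update."""
    n = sum(len(s) for s in shards)
    grads = [shard_gradient(w, b, s) for s in shards]  # parallel in practice
    gw = sum(g[0] for g in grads) / n
    gb = sum(g[1] for g in grads) / n
    return w - lr * gw, b - lr * gb


# Noise-free data generated from y = 3x + 1, split across 4 workers
data = [(i / 10, 3 * (i / 10) + 1) for i in range(20)]
shards = make_shards(data, k=4)

w, b = 0.0, 0.0
for _ in range(2000):
    w, b = data_parallel_step(w, b, shards, lr=0.2)
print(round(w, 3), round(b, 3))  # 3.0 1.0
```

Note that the workers still receive raw training samples (their shard). This is exactly the privacy limitation mentioned above.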

To overcome this issue, the concept of federated learning (FL) has emerged in recent years [29][30] as a promising approach that ensures distributed ML training on the one hand and privacy protection on the other. To this end, training is performed locally on the involved devices, with no need to forward training data to external servers. At predefined time intervals, the parameters of the locally trained models are sent to a central processing node, where the master model is periodically updated. Since training data remain local, privacy is enhanced. In addition, with FL, data can be distributed across many devices, which enables the use of much larger datasets. The amount of data transferred and the communication burden are also reduced, especially when the data are distributed across devices with limited connectivity or bandwidth. Finally, FL allows the model to be trained on a diverse range of data sources, which can improve its robustness and generalizability, as well as overall training times. For example, in the previously mentioned autonomous driving 5G scenario, a predefined set of identical cars can be trained in parallel on different landscapes. The results can then be aggregated and sent back to the cars in order to cover a wide range of driving reactions.

A schematic diagram of FL in the case of NN training is shown in Figure 3. Each node locally trains its copy of the ML model on the available local dataset. The derived parameters (i.e., the NN weights in this case) are periodically sent to the master processing node for model aggregation. Next, the new weights of the master model are sent back to the local nodes for a model update. Apart from autonomous driving, FL can be quite beneficial in a wide range of scenarios, such as smart manufacturing and e-health applications, where data privacy protection is of utmost importance [31]. However, since FL relies on distributed computations, it is exposed to several types of attacks, such as poisoning and backdoor attacks [32]. Hence, security and privacy enhancement is a crucial step towards large-scale FL deployment.
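
The exchange shown in Figure 3 can be sketched in Python with two client nodes, using federated averaging (FedAvg) as the aggregation rule. FedAvg computes a sample-weighted mean of the client weights. The text does not prescribe a specific aggregation rule, so the following are all illustrative assumptions: FedAvg itself, the linear model standing in for the NN, the two local datasets, and all hyperparameters. Only the model weights leave each client; the raw samples never do.

```python
from typing import List, Tuple

Weights = Tuple[float, float]        # (w, b) of the model y = w*x + b
Dataset = List[Tuple[float, float]]  # local (x, y) samples, never shared


def local_train(weights: Weights, data: Dataset, lr: float = 0.3,
                epochs: int = 5) -> Weights:
    """Client side: a few epochs of gradient descent on local data only."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        gw = sum(2 * ((w * x + b) - y) * x for x, y in data) / n
        gb = sum(2 * ((w * x + b) - y) for x, y in data) / n
        w, b = w - lr * gw, b - lr * gb
    return w, b


def fed_avg(updates: List[Tuple[Weights, int]]) -> Weights:
    """Server side: average client weights, weighted by local sample count."""
    total = sum(n for _, n in updates)
    w = sum(wts[0] * n for wts, n in updates) / total
    b = sum(wts[1] * n for wts, n in updates) / total
    return w, b


def run_federated(clients: List[Dataset], rounds: int = 500) -> Weights:
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        # Broadcast the global weights, train locally, upload weights only
        updates = [(local_train(global_weights, d), len(d)) for d in clients]
        # Aggregate the uploads into the new master model
        global_weights = fed_avg(updates)
    return global_weights


# Two nodes observing different input ranges of the same process y = 2x - 1
node_a = [(i / 10, 2 * (i / 10) - 1) for i in range(5)]
node_b = [(i / 10, 2 * (i / 10) - 1) for i in range(5, 10)]
w, b = run_federated([node_a, node_b])
print(round(w, 2), round(b, 2))  # 2.0 -1.0
```

Each node alone sees only part of the input range, but the aggregated master model still recovers the underlying relationship.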

Figure 3. Federated learning in a two-node distributed system.

2.2. Serverless Computing

Serverless computing builds on state-of-the-art cloud computing by further abstracting away software operations and parts of the hardware–software stack. With respect to the already standardized 5G architecture, the execution of vertical applications in the management and orchestration layer initiates end-to-end (E2E) service creation and orchestration. In serverless computing [33][34], the related functions are executed in the background in response to specific time triggers or, in general, short-lived events. This requires a container cluster manager (CCM), which enables the appropriate set of function containers for each requested application. As a result, supported applications are fully decoupled from the hardware infrastructure. This not only makes the support of latency-critical scenarios, such as autonomous driving, smart manufacturing, and e-health applications, feasible, but also enables more efficient infrastructure management.

The serverless computing concept benefits from containerization by removing decision-making regarding scaling thresholds, reducing costs by charging only when applications are triggered, and reducing application start-up times. Therefore, business models based on the actual usage of applications can be applied in IEC continuum systems. Serverless and edge computing are expected to be indispensable parts of the heterogeneous cloud environments of 6G networks, since major network functionalities should be able to migrate to and be executed at the edge, either in case of an outage of the main core network or to leverage flexible network deployment for ultra-low-latency applications.
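
The trigger-driven, pay-per-use, scale-to-zero behavior described above can be illustrated with a toy, single-process Python model of a container cluster manager. `ToyClusterManager` and its methods are hypothetical and do not correspond to any real serverless platform's API. A "container" here is just a Python object, and time is a logical clock passed in by the caller, so idle reclamation is deterministic.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass
class Container:
    handler: Callable[[Any], Any]
    last_used: float


@dataclass
class ToyClusterManager:
    idle_timeout: float = 60.0  # seconds of inactivity before scale-to-zero
    functions: Dict[str, Callable[[Any], Any]] = field(default_factory=dict)
    warm: Dict[str, Container] = field(default_factory=dict)
    cold_starts: int = 0
    billed_invocations: int = 0  # pay-per-use: only triggered runs are billed

    def register(self, name: str, handler: Callable[[Any], Any]) -> None:
        self.functions[name] = handler

    def reap_idle(self, now: float) -> None:
        """Scale to zero: drop containers idle for longer than the timeout."""
        expired = [n for n, c in self.warm.items()
                   if now - c.last_used > self.idle_timeout]
        for name in expired:
            del self.warm[name]

    def trigger(self, name: str, event: Any, now: float) -> Any:
        """Run a function in response to an event, starting a container if needed."""
        self.reap_idle(now)
        if name not in self.warm:
            self.cold_starts += 1  # no warm instance: start a new container
            self.warm[name] = Container(self.functions[name], now)
        container = self.warm[name]
        container.last_used = now
        self.billed_invocations += 1
        return container.handler(event)


ccm = ToyClusterManager(idle_timeout=60.0)
ccm.register("avg_temperature", lambda readings: sum(readings) / len(readings))
print(ccm.trigger("avg_temperature", [20.0, 22.0], now=0.0))    # 21.0 (cold start)
print(ccm.trigger("avg_temperature", [21.0, 23.0], now=10.0))   # 22.0 (warm)
print(ccm.trigger("avg_temperature", [19.0, 19.0], now=200.0))  # 19.0 (cold again)
print(ccm.cold_starts, ccm.billed_invocations)                  # 2 3
```

Idle containers are reclaimed lazily on the next trigger to keep the sketch short. A real CCM would typically run reclamation on its own schedule.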

2.3. Blockchain Technology

The ever-increasing number of interconnected devices on the Internet has raised many concerns regarding security and privacy preservation, as previously mentioned. For example, in domestic or e-health IoT scenarios, multiple attacks may take place due to the diverse nature of the involved communication protocols [35][36]. Blockchain technology is a credible way to ensure security and privacy in such heterogeneous infrastructures. A blockchain is a distributed ledger consisting of a growing list of records (blocks) that are securely linked together via cryptographic hashes. Each block contains the cryptographic hash of the previous block, a timestamp, and transaction data, and in this way the blocks form a chain. Therefore, the nth block stores a hash computed over the entire (n − 1)th block, which in turn includes the hash of the (n − 2)th block, and so on [37][38].
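
The hash linking described above can be illustrated with a minimal Python sketch using SHA-256 from the standard library. It shows only the chain structure and its integrity check. Consensus, digital signatures, and P2P replication are omitted. The block layout (index, timestamp, data, and previous hash serialized as JSON) is an illustrative assumption rather than the format of any particular blockchain platform.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


@dataclass
class Block:
    index: int
    timestamp: float
    data: str
    prev_hash: str  # hash of the entire previous block

    def digest(self) -> str:
        """SHA-256 over all fields, including the previous block's hash."""
        payload = json.dumps(
            {"index": self.index, "timestamp": self.timestamp,
             "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: List[Block], data: str, timestamp: float) -> None:
    prev = chain[-1].digest() if chain else GENESIS_PREV_HASH
    chain.append(Block(len(chain), timestamp, data, prev))


def is_valid(chain: List[Block]) -> bool:
    """Every block must store the current hash of its predecessor."""
    return all(chain[i].prev_hash == chain[i - 1].digest()
               for i in range(1, len(chain)))


chain: List[Block] = []
append_block(chain, "sensor-7: 21.5 C", timestamp=1.0)
append_block(chain, "sensor-7: 21.9 C", timestamp=2.0)
append_block(chain, "sensor-9: 19.2 C", timestamp=3.0)
print(is_valid(chain))  # True

chain[1].data = "sensor-7: 35.0 C"  # tamper with a block in the middle
print(is_valid(chain))  # False: block 2 no longer matches block 1's hash
```

Altering only the most recent block would not break any stored link by itself. In a replicated ledger, such a change is exposed when the latest block hash is compared with the copies held by other nodes.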

A key novelty of blockchain technology is that it does not require a central authority for node identification and verification; instead, transactions are made on a peer-to-peer (P2P) basis. In general, blockchain is a decentralized security mechanism in which multiple copies of the ledger are held by different nodes. Therefore, a tampering attempt would have to alter the targeted block and all subsequent blocks in the copies held by the participating nodes. Moreover, since each block is linked to its predecessor through its hash and carries a timestamp, its content cannot be altered unnoticed after it has been published to the ledger, which makes the ledger more trustworthy and secure. In addition, timestamps are helpful for tracking the generated blocks and for statistical analysis.

The integration of blockchain technology into IoT networks faces many technical challenges, since the cryptographic operations involved (e.g., hashing, signing, and validating blocks) require computational resources that lightweight IoT devices cannot always provide. Recent advances in the development of “light clients” for blockchain platforms have enabled nodes to issue transactions in the blockchain network without downloading the entire blockchain [39]. Moreover, by combining blockchain with FL, IoT sensing devices can offload a portion of their data to an edge server for local model training. However, open issues remain, such as a common blockchain framework that can be adopted by all involved entities, which is key to scalability in large-scale networks. Blockchain is often combined with smart contracts, which are programs stored on the blockchain that run only when predetermined conditions are met [40][41]. Therefore, human intervention is minimized. Smart contracts do not contain legal language, terms, or agreements, only code that executes actions. Hence, the need for trusted intermediaries is reduced, while malicious and accidental exceptions are minimized.
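
The condition-triggered execution of a smart contract can be mimicked with a toy Python object. The example assumes a hypothetical cold-chain scenario in which a refund is issued if a shipment's temperature reading exceeds a threshold. Real smart contracts are written for a blockchain's execution environment (for example, Solidity contracts on Ethereum) and are executed by every validating node. `ToyContract` only illustrates the idea that code runs only when predetermined conditions are met.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Transaction = Dict[str, Any]


@dataclass
class ToyContract:
    condition: Callable[[Transaction], bool]  # predetermined condition
    action: Callable[[Transaction], str]      # code executed when it holds
    executed: bool = False                    # one-shot contract
    log: List[str] = field(default_factory=list)

    def on_transaction(self, tx: Transaction) -> None:
        """Called for every new transaction recorded on the (toy) ledger."""
        if not self.executed and self.condition(tx):
            self.log.append(self.action(tx))
            self.executed = True


MAX_TEMP_C = 8.0  # hypothetical contractual threshold

refund = ToyContract(
    condition=lambda tx: tx["temperature_c"] > MAX_TEMP_C,
    action=lambda tx: f"refund issued for shipment {tx['shipment']}",
)
for tx in [{"shipment": "S1", "temperature_c": 5.0},
           {"shipment": "S1", "temperature_c": 9.5},
           {"shipment": "S1", "temperature_c": 10.0}]:
    refund.on_transaction(tx)
print(refund.log)  # ['refund issued for shipment S1']
```

No intermediary decides whether the refund is due. The outcome follows deterministically from the recorded readings and the contract code.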

2.4. Subnetworks

The increasing number of wireless applications deployed at the network edge with a limited number of network components, such as a sensor network in a manufacturing environment or vehicle-to-vehicle (V2V) communications, requires minimal latency over short-range transmission. To this end, the concept of subnetworks has been introduced, in which a network component at the edge acts as a serving AP [42][43]. Moreover, subnetworks can continue to provide low-latency service to the connected devices even when the connection with the core network is lost. In this case, as also mentioned for the autonomous driving application, the subnetwork should be able to operate autonomously to provide uninterrupted E2E connectivity. Subnetworks are expected to be a key driving factor in the 6G architectural concept, due to their local topology in conjunction with the specialized performance attributes required, such as extremely low latency or high reliability. In addition, subnetworks are crucial for the design of energy-efficient networks, where topology reconfiguration might take place in time-varying IoT sensor networks [44].

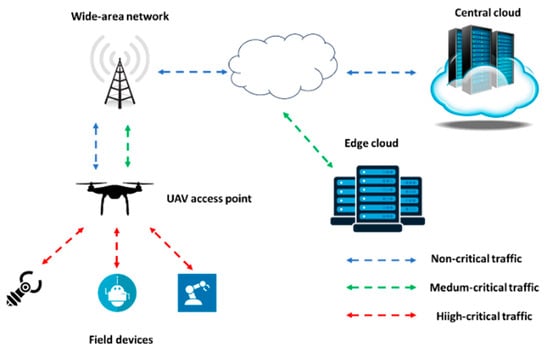

In 6G terminology, subnetworks are also referred to as ‘in-X’ subnetworks, with ‘X’ standing for the entity in which the subnetwork is deployed, such as a production module in a smart manufacturing environment, a robot, a vehicle, a house, or even the human body in the case of wearable devices that monitor various parameters [45]. A schematic diagram of such a network is shown in Figure 4, where data flows are categorized according to the criticality of their latency requirements. Flows of low criticality include the monitoring of non-latency-critical key performance indicators (KPIs); flows of medium criticality include task offloading to edge servers; and the remaining flows are highly critical. The latter category includes, for example, control signals from the IoT devices in a smart manufacturing environment that require immediate termination of production in case of a malfunction. Therefore, the highly critical data flows are kept within the in-X subnetwork, as the tight latency requirement does not allow for external processing. For this reason, a local edge server can be placed in close proximity to the AP under consideration (in this case, an unmanned aerial vehicle (UAV)).
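
The placement rule behind Figure 4 can be summarized in a few lines of Python. The latency thresholds separating the three criticality classes are assumptions made for illustration, as is the fallback to the local edge server when the link to the core network is lost. The text does not give numeric values.

```python
from enum import Enum


class Placement(Enum):
    IN_X = "inside the in-X subnetwork"
    LOCAL_EDGE = "local edge server next to the AP"
    WIDE_AREA = "wide area network / cloud"


# Hypothetical latency budgets (milliseconds) separating the three classes
HIGH_CRITICALITY_MAX_MS = 1.0     # e.g., emergency-stop control signals
MEDIUM_CRITICALITY_MAX_MS = 20.0  # e.g., task offloading


def place_flow(latency_budget_ms: float, core_link_up: bool = True) -> Placement:
    """Decide where a data flow is processed, given its latency budget."""
    if latency_budget_ms <= HIGH_CRITICALITY_MAX_MS:
        return Placement.IN_X  # too tight for any external processing
    if latency_budget_ms <= MEDIUM_CRITICALITY_MAX_MS:
        return Placement.LOCAL_EDGE
    # Non-critical flows (e.g., KPI monitoring) may leave the subnetwork,
    # unless it is operating autonomously without a core-network link.
    return Placement.WIDE_AREA if core_link_up else Placement.LOCAL_EDGE


print(place_flow(0.5).name)                        # IN_X
print(place_flow(10.0).name)                       # LOCAL_EDGE
print(place_flow(500.0).name)                      # WIDE_AREA
print(place_flow(500.0, core_link_up=False).name)  # LOCAL_EDGE
```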

Figure 4. In-X subnetwork deployment within wide area networks.

2.5. Device-to-Device Communications

In an IoT environment, a specific type of content might be requested by several user terminals or other IoT devices in close geographical proximity. User-experienced latency can then be improved if the content is requested from adjacent IoT devices that hold the same content, instead of from centralized APs. To this end, the requesting node broadcasts a short-range signal to set up a link with a node that has the content, which requires D2D connectivity [46][47]. Apart from content caching, D2D connectivity can also support subnetwork organization, as well as dynamic IoT node deployment and reconfiguration if necessary.
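
The content-discovery step can be sketched in Python as follows, assuming a hypothetical fixed D2D range and 2D device positions. In a real deployment, discovery happens through the short-range broadcast described above rather than by scanning a global device list. The list here stands in for the set of devices that would answer that broadcast.

```python
import math
from dataclasses import dataclass, field
from typing import List, Set

D2D_RANGE_M = 50.0  # hypothetical maximum D2D link distance in meters


@dataclass
class Device:
    name: str
    x: float
    y: float
    cache: Set[str] = field(default_factory=set)


def find_source(requester: Device, content_id: str, devices: List[Device],
                d2d_range: float = D2D_RANGE_M) -> str:
    """Return the nearest in-range device caching the content, else 'AP'."""
    def distance(d: Device) -> float:
        return math.dist((requester.x, requester.y), (d.x, d.y))

    candidates = [d for d in devices
                  if d is not requester
                  and content_id in d.cache
                  and distance(d) <= d2d_range]
    if not candidates:
        return "AP"  # fall back to the centralized access point
    return min(candidates, key=distance).name


me = Device("me", 0.0, 0.0)
neighbors = [
    Device("A", 30.0, 40.0, {"video-1"}),  # 50 m away, has the content
    Device("B", 10.0, 0.0, {"video-1"}),   # 10 m away, has the content
    Device("C", 5.0, 0.0),                 # closest, but caches nothing
    Device("D", 100.0, 0.0, {"map-3"}),    # has other content, out of range
]
print(find_source(me, "video-1", [me] + neighbors))  # B
print(find_source(me, "map-3", [me] + neighbors))    # AP
```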

In general, D2D communication enables autonomous intelligent services and mechanisms without centralized supervision. Hence, ultra-low-latency services can be provided in the IEC continuum, as D2D communication offers more reliable connectivity between devices. In addition, green network deployment can be supported as well, owing to the shorter propagation paths and the consequently reduced transmission power.
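
The energy argument can be quantified with the standard log-distance path-loss model. This model is an illustrative assumption: the text does not specify a channel model, and shadowing, fading, and antenna gains are ignored here. Suppose a receiver needs at least P_r,min (dBm), the path-loss exponent is α, and the reference loss is PL(d_0) at distance d_0. The required transmit power at distance d, and the saving from shortening the link from d_1 to d_2, are then:

```latex
P_t(d) = P_{r,\min} + \mathrm{PL}(d_0) + 10\,\alpha \log_{10}\!\left(\frac{d}{d_0}\right)\ \text{[dBm]},
\qquad
\Delta P_t = P_t(d_1) - P_t(d_2) = 10\,\alpha \log_{10}\!\left(\frac{d_1}{d_2}\right)\ \text{[dB]}.
```

For example, halving the link distance (d_2 = d_1/2) in free space (α = 2) saves 20 log10(2) ≈ 6.02 dB, roughly a fourfold reduction in transmit power. With a larger exponent such as α = 3.5, the saving grows to about 10.5 dB.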

In the same context, devices can be interconnected via mesh networking [48][49]. A mesh network is a type of local area network (LAN) topology in which multiple devices or nodes are connected in a non-hierarchical manner, so that they can cooperate and cover a wider area than a single router can. Since mesh networks consist of multiple nodes that are responsible for sending signals and relaying information, each node must be connected to at least one other node via a dedicated link. Because mesh networks leverage a multi-hop wireless backbone formed by stationary routers, they can serve both mobile and stationary users. Mesh networks have significant advantages, such as fast and easy network extension, self-configuration, self-healing, and reliability, as a single node failure does not result in total network failure.
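
The self-healing property can be illustrated with a short Python sketch. It recomputes a minimum-hop route with breadth-first search after removing failed nodes. The topology and node names are hypothetical, and real mesh routing protocols use richer link metrics than hop count.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Optional, Tuple


def shortest_route(links: List[Tuple[str, str]], src: str, dst: str,
                   failed: FrozenSet[str] = frozenset()) -> Optional[List[str]]:
    """Minimum-hop path from src to dst that avoids failed nodes (BFS)."""
    if src in failed or dst in failed:
        return None
    adjacency: Dict[str, List[str]] = {}
    for u, v in links:
        if u in failed or v in failed:
            continue  # links of a failed node are unusable
        adjacency.setdefault(u, []).append(v)
        adjacency.setdefault(v, []).append(u)

    previous: Dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk back to the source
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in adjacency.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None  # dst unreachable


mesh = [("A", "B"), ("B", "C"), ("A", "D"), ("D", "C"), ("C", "E")]
print(shortest_route(mesh, "A", "E"))                           # ['A', 'B', 'C', 'E']
print(shortest_route(mesh, "A", "E", failed=frozenset({"B"})))  # ['A', 'D', 'C', 'E']
print(shortest_route(mesh, "A", "E", failed=frozenset({"C"})))  # None
```

The last call shows the limit of the reliability claim. A single failure is survivable only if the failed node is not the sole link between two parts of the mesh; here, C is the only neighbor of E.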