You're using an outdated browser. Please upgrade to a modern browser for the best experience.

Please note this is a comparison between Version 3 by Rita Xu and Version 2 by Rita Xu.

“Active Scene Recognition” (ASR) is an approach for mobile robots to identify scenes in configurations of objects spread across dense environments. This identification is enabled by intertwining the robotic object search and the scene recognition on already detected objects. “Implicit Shape Model (ISM) trees” are proposed as the scene model underpinning the ASR approach. These trees are a hierarchical model of multiple interconnected ISMs.

- Hough transform

- spatial relations

- object arrangements

- object search

1. Introduction

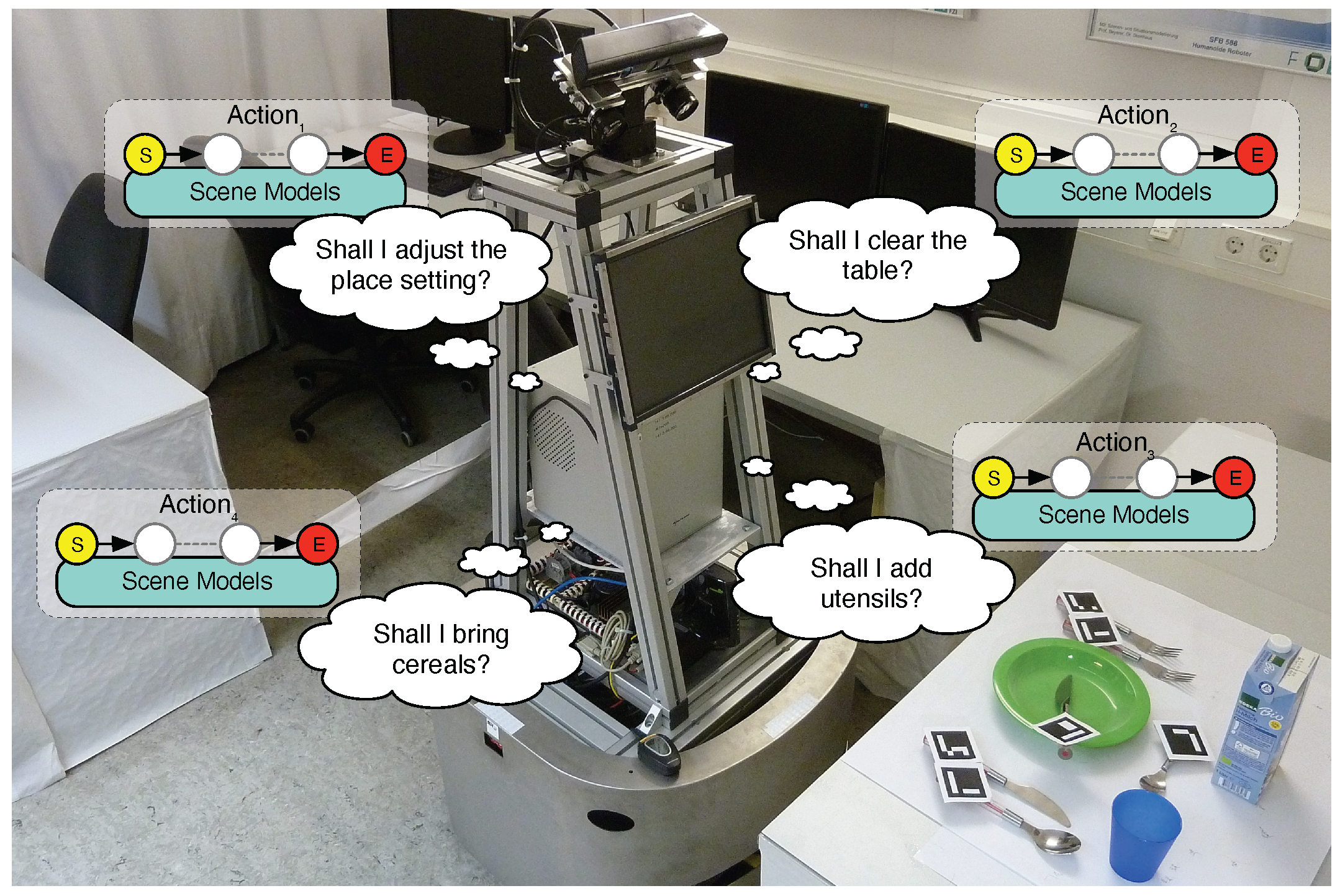

To act autonomously in various situations, robots not only need the capabilities to perceive and act, but must also be provided with models of the possible states of the world. If we imagine such a robot as a household helper, it will have to master tasks such as setting, clearing, or rearranging tables. Let us imagine that such a robot looks at the table in Figure 1 and tries to determine which of these tasks is pending. More precisely, the robot must choose between four different actions, each of which contributes to the solution of one of the tasks. An autonomous robot may choose an action based on a comparison of its perceptions with its world model, i.e., its assessment of the state of the world. Which scenes are present is an elementary aspect of such a world state. In particular, we model scenes not by the absolute poses of the objects in them, but by the spatial relations between these objects. Such a model can be more easily reused across different environments because it models a scene regardless of where it occurs.

Figure 1. Motivating example for scene recognition: The mobile robot MILD looking at an object configuration (a.k.a. an arrangement). It reasons which of its actions to apply.

1.1. Scene Recognition—Problem and Approach

“Implicit Shape Model (ISM) trees” provide a solution to the problem of classifying into scenes a configuration (a.k.a. an arrangement) of objects whose poses are given in six degrees of freedom (6-DoF). Recognizing scenes based on the objects present is an approach suggested for indoor environments by [1] and successfully investigated by [2]. The trees, i.e., the classifier proposed, not only describe a single configuration of objects, but rather a multitude of configurations that these objects can take on while still representing the same scene. Hereinafter, this multitude of configurations will be referred to as a “scene category” rather than as a “scene”, which is a specific object configuration. For Figure 1, the classification problem addressed can be paraphrased as follows: “Is the present tableware an example of the modeled scene category?”, “How well does each of these objects fit our scene category model?”, “Which objects on the table belong to the scene category?”, and “How many objects are missing from the scene category?” This classifier is learned from object configurations demonstrated by a human in front of a robot and perceived by the robot using 6-DoF object pose estimation.

Many scene categories require that the spatial characteristics of relations, including uncertainties, be accurately described. For example, a table setting requires that some utensils be exactly parallel to each other, whereas their positions relative to the table are less critical. To meet such requirements, single implicit shape models (ISMs) were proposed as scene classifiers in [3]. Inspired by Hough voting, a classic but still popular approach (see [4][5]), these ISMs let each detected object in a configuration vote on which scenes it might belong to, thus using the spatial relations in which the object participates. The fit of these votes yields a confidence level for the presence of a scene category in an object configuration.

1.2. Object Search—Problem and Approach

Figure 2 shows an experimental kitchen setup as an example of the many indoor environments where objects are spatially distributed and surrounded by clutter. A robot will have to acquire several points of view before it has observed all the objects in such a scene. This problem is addressed in a field called three-dimensional object search ([6]). The existing approaches often rely on an informed search. This method is based on the fact that detected objects specify areas where other objects should be searched. However, these areas are predicted by individual objects rather than by entire scenes. Predicting poses utilizing individual objects can lead to ambiguities, since, e.g., a knife in a table setting would expect the plate to be beneath itself when a meal is finished, whereas it would expect the plate to be beside itself when the meal has not yet started. Instead, using estimates for scenes to predict poses resolves this problem.

Figure 2. Experimental setup mimicking a kitchen. The objects are distributed over a table, a cupboard, and some shelves. Colored dashed boxes are used to discern the searched objects from the clutter and to assign objects to exemplary scene categories.

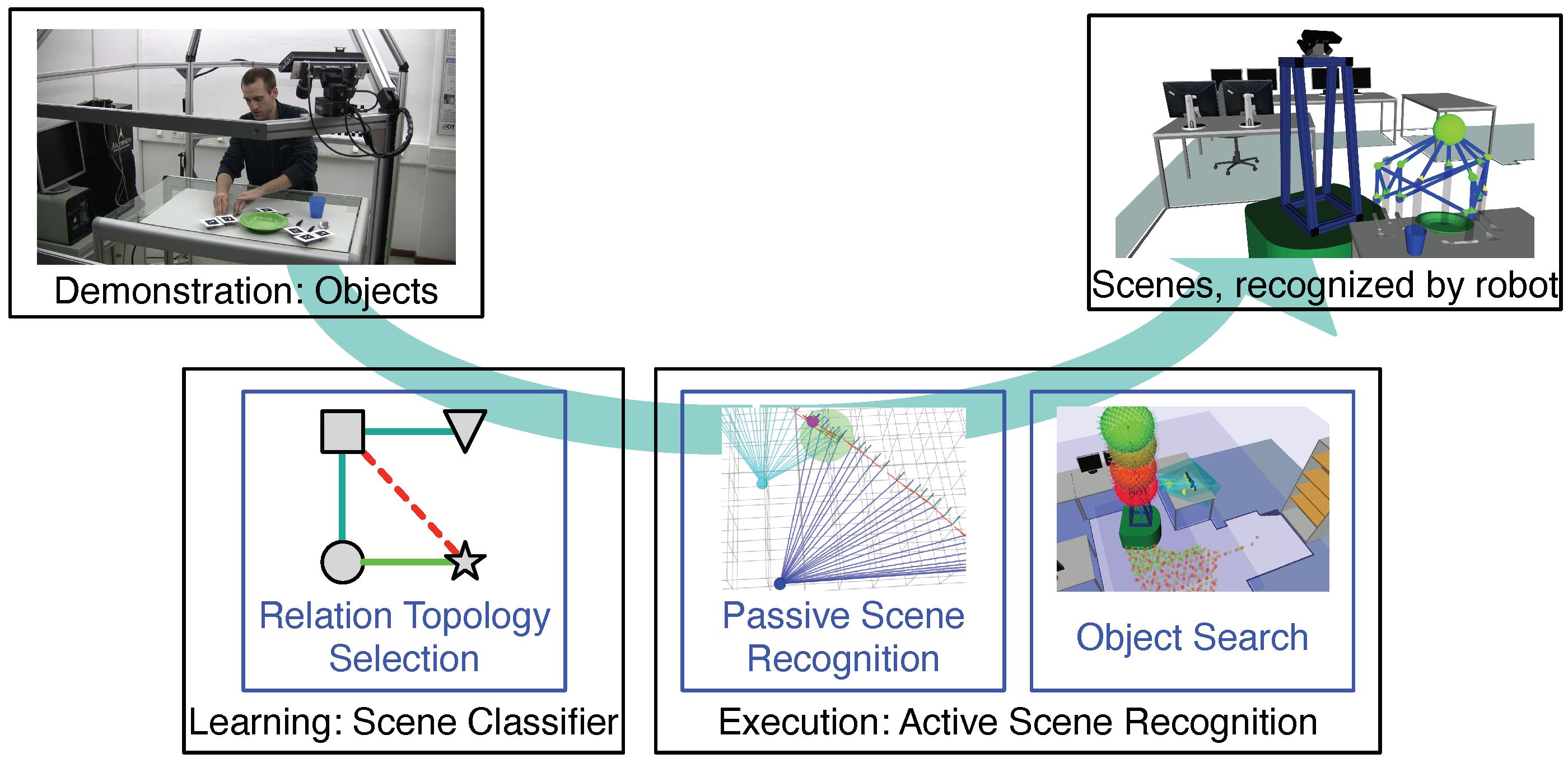

To this end, ’Active Scene Recognition’ (ASR) was presented in [7][8], which is a procedure that integrates scene recognition and object search. Roughly, the procedure is as follows: The robot first detects some objects and computes which scene categories these objects may belong to. Assuming that these scene estimates are valid, it then predicts where missing objects, which would also belong to these estimates, could be located. Based on these predictions, camera views are computed for the robot to check. The flow of the overall approach (see [9]), which consists of two phases—first the learning of scene classifiers and then the execution of active scene recognition—is shown in Figure 3.

Figure 3. Overview of the research problems (in blue) addressed by the overall approach. At the top are the inputs and outputs of the approach. Below are the two phases of the approach: scene classifier learning and ASR execution.

1.3. Relation Topology Selection—Problem

The authors train their scene classifiers using sensory-perceived demonstrations (see [10]), which consist of a two- to three-digit number of recorded object configurations. This learning task involves the problem of selecting pairs of objects in a scene to be related by spatial relations. Which combination of relations is modeled determines the number of false positives returned by a classifier and the runtime of the scene recognition. Combinations of relations are hereinafter referred to as relation topologies.

2. Scene Recognition

The research generally defines scene understanding as an image labeling problem. There are two approaches to address it. One derives scenes from detected objects and relations between them, and the other derives scenes directly from image data without intermediary concepts such as objects, as [11] has investigated. Descriptions of scenes in the form of graphs (modeling existing objects and relation types), as derived by an object-based approach, are far more informative for further use, e.g., for mapping ([12][13]) or object search, than the global labels for images that are instead derived using the “direct” approach. Work in line with [14] or [15] (see [16] for an overview) that follows the object-based approach relies on neural nets for object detection, including feature extraction (e.g., through the work by [17][18]), wherein they combined it with neural nets to generate scene graphs. This approach is made possible by datasets that include relations ([19][20]), which have been published in recent years alongside object detection datasets ([21][22]). These scene graph nets are very powerful, but they are designed to learn models of relations that focus on relation types or meanings rather than the spatial characteristics of relations. In contrast, the authors want to focus on accurately modeling the spatial properties of relations and their uncertainties. Nevertheless, their model should be able to cope with small amounts of data, since the authors want it to learn from demonstrations of people’s personal preferences concerning object configurations. Indeed, users must provide personal data, wherein they tend to put in a limited effort.

Examples of preferences in object configurations can be breakfast tables, which only few people will want to have set in the same way. Nevertheless, people will expect household robots to consider their preferences when arranging objects. For example, ref. [23] addressed personal preferences by combining a relation model for learning preferences for arranging objects on a shelf with object detection. However, while their approach could even successfully handle conflicting preferences, it also missed subtle differences between spatial relations in terms of their extent. Classifiers explicitly designed to model relations and their uncertainties, such as the part-based models [24] from the 2000s, are a more expressive alternative. They also have low sample complexity, thus making them suitable for learning from demonstrations. By replacing their outdated feature extraction component with CNN-based object detectors or pose estimators (e.g., DOPE [25], PoseCNN [26], or CenterPose [27]), the authors obtain an object-based scene classifier that combines the power of CNNs with the expressiveness of part-based models in relation modeling. Thus, the authors' approach combines pretrained object pose estimators with the relation modeling of part-based models.

Ignoring the outdated feature extraction of part-based models, the authors note that [28] already successfully used a part-based model, the constellation model [29], to represent scenes. Constellation models define spatial relations using a parametric representation over Cartesian coordinates (a normal distribution), just like the pictorial structures models [30] (another part-based model) do. Recently, ref. [31]’s approach of using probability distributions over polar coordinates to define relations has proven to be more effective for describing practically relevant relations. Whereas such distributions are more expressive than the model in [23], they are still too coarse for us. Moreover, they use a fixed number of parameters to represent relations. What would be most appropriate when learning from demonstrations of varying length is a relation model whose complexity grows with the number of training samples demonstrated, i.e., a nonparametric model [10]. Such flexible models are the implicit shape models (ISMs) of [32][33]. Therefore, the authors chose ISMs as the basis for their approach. One shortcoming that ISMs have in common with constellation and pictorial structures models is that they can only represent a single type of relation topology. However, the topology that yields the best tradeoff between the number of false positives and scene recognition runtime can vary from scene to scene. The authors extended the ISMs to our hierarchical ISM trees to account for this. The authors also want to mention scene grammars ([34]), which are similar to part-based models but motivated by formal languages. Again, they model relations probabilistically and only use star topologies. For these reasons, the authors chose ISMs over scene grammars.

3. Object Pose Prediction

The research addresses the search for objects in 3D either as an active vision ([35][36][37][38][39][40]) or as a manipulation problem ([41][42][43][44][45][46]). Active vision approaches are divided into direct ([47][48]) and indirect ([49]) searches depending on the type of knowledge about the potential object poses used. An indirect search uses spatial relations to predict from the known poses of objects those of searched objects. An indirect search can be classified according to the type ([50]) of relations used to predict the poses. Refs. [51][52][53], for example, used the relations corresponding to natural language concepts such as ’above’ in robotics. Even though such symbolic relations provide high-quality generalization, they can only provide coarse estimates of metric object poses—too coarse for many object search tasks. For example, ref. [54] successfully adapted and used Boltzmann machines to encode symbolic relations. Representing relations metrically with probability distributions showed promising results in [55]. However, their pose predictions were derived exclusively from the known locations of individual objects, thereby leading to ambiguities that the use of scenes can avoid.

References

- Quattoni, A.; Torralba, A. Recognizing indoor scenes. In Proceedings of the IEEE Conference on IEEE Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

- Espinace, P.; Kollar, T.; Soto, A.; Roy, N. Indoor scene recognition through object detection. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Anchorage, Alaska, 3–8 May 2010.

- Meißner, P.; Reckling, R.; Jäkel, R.; Schmidt-Rohr, S.; Dillmann, R. Recognizing Scenes with Hierarchical Implicit Shape Models based on Spatial Object Relations for Programming by Demonstration. In Proceedings of the 2013 16th International Conference on Advanced Robotics (ICAR), Montevideo, Uruguay, 25–29 November 2013.

- Qi, C.R.; Litany, O.; He, K.; Guibas, L.J. Deep hough voting for 3d object detection in point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Sommer, C.; Sun, Y.; Bylow, E.; Cremers, D. PrimiTect: Fast Continuous Hough Voting for Primitive Detection. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020.

- Ye, Y.; Tsotsos, J.K. Sensor planning for 3D object search. Comput. Vis. Image Underst. 1999, 73, 145–168.

- Meißner, P.; Reckling, R.; Wittenbeck, V.; Schmidt-Rohr, S.; Dillmann, R. Active Scene Recognition for Programming by Demonstration using Next-Best-View Estimates from Hierarchical Implicit Shape Models. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014.

- Meißner, P.; Schleicher, R.; Hutmacher, R.; Schmidt-Rohr, S.; Dillmann, R. Scene Recognition for Mobile Robots by Relational Object Search using Next-Best-View Estimates from Hierarchical Implicit Shape Models. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016.

- Meißner, P. Indoor Scene Recognition by 3-D Object Search for Robot Programming by Demonstration; Springer: Cham, Switzerland, 2020; Volume 135.

- Kroemer, O.; Niekum, S.; Konidaris, G. A Review of Robot Learning for Manipulation: Challenges, Representations, and Algorithms. J. Mach. Learn. Res. 2021, 22, 1395–1476.

- Zhou, B.; Lapedriza, A.; Xiao, J.; Torralba, A.; Oliva, A. Learning deep features for scene recognition using places database. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014.

- Rosinol, A.; Violette, A.; Abate, M.; Hughes, N.; Chang, Y.; Shi, J.; Gupta, A.; Carlone, L. Kimera: From SLAM to spatial perception with 3D dynamic scene graphs. Int. J. Robot. Res. 2021, 40, 1510–1546.

- Hughes, N.; Chang, Y.; Hu, S.; Talak, R.; Abdulhai, R.; Strader, J.; Carlone, L. Foundations of Spatial Perception for Robotics: Hierarchical Representations and Real-time Systems. arXiv 2023, arXiv:2305.07154.

- Xu, D.; Zhu, Y.; Choy, C.B.; Fei-Fei, L. Scene graph generation by iterative message passing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Zellers, R.; Yatskar, M.; Thomson, S.; Choi, Y. Neural motifs: Scene graph parsing with global context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Chang, X.; Ren, P.; Xu, P.; Li, Z.; Chen, X.; Hauptmann, A. A comprehensive survey of scene graphs: Generation and application. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 45, 1–26.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the CVPR IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.J.; Shamma, D.A.; et al. Visual genome: Connecting language and vision using crowdsourced dense image annotations. Int. J. Comput. Vis. 2017, 123, 32.

- Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Kolesnikov, A.; et al. The open images dataset v4: Unified image classification, object detection, and visual relationship detection at scale. Int. J. Comput. Vis. 2020, 128, 1956–1981.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the CVPR IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014.

- Abdo, N.; Stachniss, C.; Spinello, L.; Burgard, W. Organizing objects by predicting user preferences through collaborative filtering. Int. J. Robot. Res. 2016, 35, 1587–1608.

- Grauman, K.; Leibe, B. Visual object recognition. In Synthesis Lectures on Artificial Intelligence and Machine Learning; Springer: Cham, Switzerland, 2011.

- Tremblay, J.; To, T.; Sundaralingam, B.; Xiang, Y.; Fox, D.; Birchfield, S. Deep Object Pose Estimation for Semantic Robotic Grasping of Household Objects. In Proceedings of the Conference on Robot Learning, Zurich, Switzerland, 29–31 October 2018.

- Xiang, Y.; Schmidt, T.; Narayanan, V.; Fox, D. PoseCNN: A Convolutional Neural Network for 6D Object Pose Estimation in Cluttered Scenes. In Proceedings of the Robotics: Science and Systems, Pittsburgh, PA, USA, 26–30 June 2018.

- Lin, Y.; Tremblay, J.; Tyree, S.; Vela, P.A.; Birchfield, S. Single-Stage Keypoint-based Category-level Object Pose Estimation from an RGB Image. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022.

- Ranganathan, A.; Dellaert, F. Semantic modeling of places using objects. In Proceedings of the Robotics: Science and Systems, Atlanta, GA, USA, 27–30 June 2007.

- Fergus, R.; Perona, P.; Zisserman, A. Object Class Recognition by Unsupervised Scale-Invariant Learning. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2003, Madison, WI, USA, 18–20 June 2003; Volume 2.

- Felzenszwalb, P.F.; Huttenlocher, D.P. Pictorial structures for object recognition. Int. J. Comput. Vis. 2005, 61, 55–79.

- Kartmann, R.; Zhou, Y.; Liu, D.; Paus, F.; Asfour, T. Representing Spatial Object Relations as Parametric Polar Distribution for Scene Manipulation Based on Verbal Commands. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021.

- Leibe, B.; Leonardis, A.; Schiele, B. Combined object categorization and segmentation with an implicit shape model. In Proceedings of the Workshop on Statistical Learning in Computer Vision ECCV, Prague, Czech Republic, 15 May 2004; Volume 2, p. 7.

- Leibe, B.; Leonardis, A.; Schiele, B. Robust object detection with interleaved categorization and segmentation. Int. J. Comput. Vis. 2008, 77, 259–289.

- Yu, B.; Chen, C.; Zhou, F.; Wan, F.; Zhuang, W.; Zhao, Y. A Bottom-up Framework for Construction of Structured Semantic 3D Scene Graph. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021.

- Shubina, K.; Tsotsos, J.K. Visual search for an object in a 3D environment using a mobile robot. Comput. Vis. Image Underst. 2010, 114, 535–547.

- Aydemir, A.; Sjöö, K.; Folkesson, J.; Pronobis, A.; Jensfelt, P. Search in the real world: Active visual object search based on spatial relations. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011.

- Eidenberger, R.; Grundmann, T.; Schneider, M.; Feiten, W.; Fiegert, M.; Wichert, G.v.; Lawitzky, G. Scene Analysis for Service Robots. In Towards Service Robots for Everyday Environments; Springer: Berlin/Heidelberg, Germany, 2012.

- Rasouli, A.; Lanillos, P.; Cheng, G.; Tsotsos, J.K. Attention-based active visual search for mobile robots. Auton. Robot. 2020, 44, 131–146.

- Ye, X.; Yang, Y. Efficient robotic object search via hiem: Hierarchical policy learning with intrinsic-extrinsic modeling. IEEE Robot. Autom. Lett. 2021, 6, 9387146.

- Zheng, K.; Paul, A.; Tellex, S. A System for Generalized 3D Multi-Object Search. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023.

- Wong, L.L.; Kaelbling, L.P.; Lozano-Pérez, T. Manipulation-based active search for occluded objects. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013.

- Dogar, M.R.; Koval, M.C.; Tallavajhula, A.; Srinivasa, S.S. Object search by manipulation. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013.

- Li, J.K.; Hsu, D.; Lee, W.S. Act to see and see to act: POMDP planning for objects search in clutter. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016.

- Danielczuk, M.; Kurenkov, A.; Balakrishna, A.; Matl, M.; Wang, D.; Martín-Martín, R.; Garg, A.; Savarese, S.; Goldberg, K. Mechanical search: Multi-step retrieval of a target object occluded by clutter. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

- Huang, H.; Fu, L.; Danielczuk, M.; Kim, C.M.; Tam, Z.; Ichnowski, J.; Angelova, A.; Ichter, B.; Goldberg, K. Mechanical search on shelves with efficient stacking and destacking of objects. In Proceedings of the the International Symposium of Robotics Research, Geneva, Switzerland, 25–30 September 2022; Springer: Cham, Switzerland, 2022.

- Sharma, S.; Huang, H.; Shivakumar, K.; Chen, L.Y.; Hoque, R.; Ichter, B.; Goldberg, K. Semantic Mechanical Search with Large Vision and Language Models. In Proceedings of the 7th Annual Conference on Robot Learning, Atlanta, GA, USA, 6 November 2023.

- Druon, R.; Yoshiyasu, Y.; Kanezaki, A.; Watt, A. Visual object search by learning spatial context. IEEE Robot. Autom. Lett. 2020, 5, 1279–1286.

- Hernandez, A.C.; Derner, E.; Gomez, C.; Barber, R.; Babuška, R. Efficient Object Search Through Probability-Based Viewpoint Selection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021.

- Garvey, T.D. Perceptual Strategies for Purposive Vision; Technical Note 117; SRI International: Menlo Park, CA, USA, 1976.

- Thippur, A.; Burbridge, C.; Kunze, L.; Alberti, M.; Folkesson, J.; Jensfelt, P.; Hawes, N. A comparison of qualitative and metric spatial relation models for scene understanding. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 29.

- Southey, T.; Little, J. 3D Spatial Relationships for Improving Object Detection. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013.

- Lorbach, M.; Hofer, S.; Brock, O. Prior-assisted propagation of spatial information for object search. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Chicago, IL, USA, 14–18 September 201.

- Zeng, Z.; Röfer, A.; Jenkins, O.C. Semantic linking maps for active visual object search. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020.

- Bozcan, I.; Kalkan, S. Cosmo: Contextualized scene modeling with boltzmann machines. Robot. Auton. Syst. 2019, 113, 132–148.

- Kunze, L.; Doreswamy, K.K.; Hawes, N. Using qualitative spatial relations for indirect object search. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014.

More