Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 1 by wang wei and Version 3 by Lindsay Dong.

Simultaneous localization and mapping (SLAM) plays a crucial role in the field of intelligent mobile robots. However, the traditional Visual SLAM (VSLAM) framework is based on strong assumptions about static environments, which are not applicable to dynamic real-world environments. The correctness of re-localization and recall of loop closure detection are both lower when the mobile robot loses frames in a dynamic environment.

- semantic topology graph

- Visual SLAM

- ORB-SLAM2

- dynamic environment

- mobile robots

1. Introduction

Simultaneous localization and mapping (SLAM) is one of the core problems in mobile robotics research [1][2][1,2]. Compared to laser sensors, vision sensors have the advantages of fine perception, low price, smaller size, and lighter weight. Thus, Visual SLAM (VSLAM) has made great progress in the last few decades. Among various VSLAM algorithms, feature-based algorithms are widely used in long-term robot deployment because of their high efficiency and scalability. However, most existing SLAM systems rely on hand-crafted visual features, such as SIFT [3], the Shi–Tomasi method [4], and ORB [5], which may not provide consistent feature detection and association results in dynamic environments. For example, when either the scene or the viewpoint has been changed, ORB-SLAM2 frequently fails to recognize previously visited scenes because of less visual feature information [6]. Thus, the mobile robot needs to use re-localization and loop closure detection to identify whether this scene has been visited before. Generally, the re-localization is accomplished using loop closure detection to correlate information. Firstly, the bag-of-words model is used to extract candidate frames for relocation with high similarity to the current frame. Then, the frame-to-frame matching method is used to match the local features of the current frame and re-localize candidate frames until all the candidate frames are traversed or the information is associated. However, in dynamic environments, Visual SLAM often fails in re-localization and loop closure detection due to the insufficient numbers of matched local feature point pairs. However, the numbers of matched local feature point pairs are insufficient because of dynamic objects, which can vastly impair the performance of the Visual SLAM system [7]. Therefore, Visual SLAM often fails in re-localization and loop closure detection. In order to address this challenging topic, some researchers have performed some work on feature removal [8][9][8,9]. The stability of the system’s visual odometer is enhanced through removing feature points from dynamic objects.

In the real dynamic scene, re-localization and loop closure detection will fail with limited feature information [10]. Therefore, the mobile robot often needs to go back to the previous place to extract more feature information, so as to complete the feature information matching to realize re-localization and loop closure detection. The combination of Visual SLAM and deep learning can solve this problem better than traditional methods [11]. The main idea of deep learning is to extract image features using a network trained in advance [12]. However, the disadvantage of deep learning is that it is computationally intensive and requires high-performance equipment. Furthermore, it is difficult to construct suitable models to store high-dimensional feature vectors. Thus, in recent years, researchers have attempted to introduce semantic information into Visual SLAM [13][14][13,14]. Using semantic information to describe the environment can effectively simplify the process of saving and comparing environmental information [15][16][15,16].

ORB-SLAM2 is the most widely used of the Visual SLAM frameworks [6]. The re-localization and loop closure detection of the ORB-SLAM2 framework are mainly accomplished by using the current frame to match feature points with the candidate keyframes. Therefore, the numbers of extracted feature points determine the accuracy and matching speed of re-localization and loop closure detection. The more feature points are extracted, the more accurate the localization and the faster the corresponding speed. However, it will affect the feature point extraction if the dynamic object moves fast in a dynamic environment, which will affect the accuracy of re-localization and loop closure detection. Despite this, the relative positions and distances of static objects do not change, irrespective of the motion of dynamic objects in the dynamic environment.

2. Simultaneous Localization and Mapping System in Dynamic Environment

2.1. Re-Localization

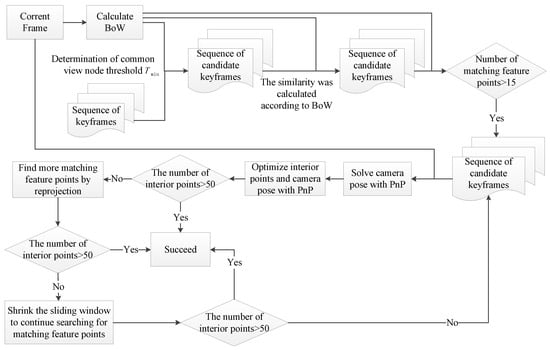

ORB-SLAM2, as a sparse feature-based VSLAM method, is prone to tracking loss in position estimation. The re-localization function of ORB-SLAM2 is activated when frames are lost. The re-localization is realized by discriminant coordinate regression. This method uses the PnP algorithm to obtain the essential matrix after using RANSAC to obtain the fundamental matrix describing the relationship between two image positions, and then uses the PnP algorithm to obtain the essential matrix jointly with the camera internal parameters to solve the camera poses [17]. The purpose is to obtain a sufficient number of matched feature points from the before and after sequence frame images for rotation and translation to solve the camera poses so that the lost camera poses can be estimated and the tracking process can be resumed [18]. The core idea of re-localization is to find the keyframe that is closest to the current frame among the previous keyframes. Firstly, the BoW of the current frame must be calculated. Then, the frames with high similarity and a higher number of matching feature points than 15 in the BoW model are determined as the sequence of candidate keyframes. Finally, the success of re-localization is determined by whether the number of interior points matching the current frame with the candidate frames is greater than 50. The specific process is shown in Figure 1. Due to the presence of dynamic objects in the environment, incorrect matching information often occurs during feature matching.

Figure 1.

The process of re-localization.

2.2. Loop Closure Detection (LCD)

Loop closure detection (LCD) is the ability of a mobile robot to recognize a scene that has been reached and to realize loop closure. The basic process is to calculate the similarity by comparing between keyframes and determine whether they pass through the same place or “return to the origin”. The LCD problem is the process of determining the correlation between current and historical data to identify whether a location has been reached before. The essence of the LCD problem is to reduce the cumulative error in map construction [19]. With the development of computer vision, the LCD algorithm based on appearance information has become the mainstream algorithm in the early stage, among which BoVW is the most common algorithm [20]. BoVW has been widely used in the LCD of VSLAM systems because of its high detection efficiency and retrieval accuracy. However, the presence of dynamic objects will interfere with the judgment of LCD [21]. In recent years, the continuous development of deep learning technology in the fields of image recognition, computer vision, and mobile robotics has provided new solution ideas for the LCD module in SLAM systems. DS-SLAM [22] and SegNet [23] were employed to segment dynamic objects. DS-SLAM removed the feature points located in the area of the dynamic scene to alleviate dynamic interference in loop detection. The authors of reference [24] proposed to realize LCD by integrating visual–spatial–semantic information with features of a topological graph and a convolutional neural network. The authors first built semantic topological graphs based on semantic information and then used random walk descriptors to characterize the topological graphs for graph matching. Finally, the authors calculated the geometric similarity and the appearance similarity to determine the loop closure detection. Reference [25] presents a strategy that models the visual scene as a semantic sub-graph by only preserving the semantic and geometric information from object detection. The authors used a sparse Kuhn–Munkres algorithm to speed up the search for correspondence among nodes. The shape similarity and the Euclidean distance between objects in the 3D space were leveraged and united to measure the image similarity through graph matching.