
Please note this is a comparison between Version 2 by Catherine Yang and Version 1 by Guang Lin.

NSGA-PINN combines the non-dominated sorting genetic algorithm (NSGA) with physics-informed neural networks (PINNs) to form a multi-objective optimization framework for the effective training of PINNs.

- machine learning

- data-driven scientific computing

- multi-objective optimization

1. Introduction

Physics-informed neural networks (PINNs) [1,2] have proven to be successful in solving partial differential equations (PDEs) in various fields, including applied mathematics [3], physics [4], and engineering systems [5,6,7]. For example, PINNs have been utilized for Reynolds-averaged Navier–Stokes (RANS) simulations [8] and for inverse problems related to three-dimensional wake flows, supersonic flows, and biomedical flows [9]. PINNs have been especially helpful in solving PDEs that contain significant nonlinearities, convection dominance, or shocks, which can be challenging to solve using traditional numerical methods [10]. The universal approximation capabilities of neural networks [11] have enabled PINNs to approach exact solutions and satisfy initial or boundary conditions of PDEs, leading to their success in solving PDE-based problems. Moreover, PINNs have successfully handled difficult inverse problems [12,13] by combining PINNs with data (i.e., scattered measurements of the states).

PINNs use multiple loss functions, including residual loss, initial loss, boundary loss, and, if necessary, data loss for inverse problems. The most common approach for training PINNs is to optimize the total loss (i.e., the weighted sum of the loss functions) using standard stochastic gradient descent (SGD) methods [14,15], such as ADAM. However, optimizing highly non-convex loss functions for PINN training with SGD methods can be challenging because there is a risk of being trapped in various suboptimal local minima, especially when solving inverse problems or dealing with noisy data [16,17]. Additionally, SGD can only satisfy initial and boundary conditions as soft constraints. This may limit the use of PINNs in the optimization and control of complex systems that require the exact fulfillment of these constraints.
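As a sketch of the weighted-sum objective described above, the total loss can be written as follows. The symbols are illustrative assumptions, not notation taken from the source: $\theta$ denotes the network parameters, $\mathcal{L}_r$, $\mathcal{L}_{ic}$, $\mathcal{L}_{bc}$, and $\mathcal{L}_d$ denote the residual, initial, boundary, and data losses, and the $\lambda$ terms are non-negative weights. The data term is present only for inverse problems.

```latex
\mathcal{L}(\theta)
  = \lambda_r \, \mathcal{L}_r(\theta)
  + \lambda_{ic} \, \mathcal{L}_{ic}(\theta)
  + \lambda_{bc} \, \mathcal{L}_{bc}(\theta)
  + \lambda_d \, \mathcal{L}_d(\theta)
```

Because the constraint terms enter only through this sum, a minimizer can trade a small violation of the initial or boundary conditions against a lower residual, which is what "soft constraint" means here.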

2. The NSGA-PINN Framework

This section describes the proposed NSGA-PINN framework for multi-objective optimization-based training of a PINN.

2.1. Non-Dominated Sorting

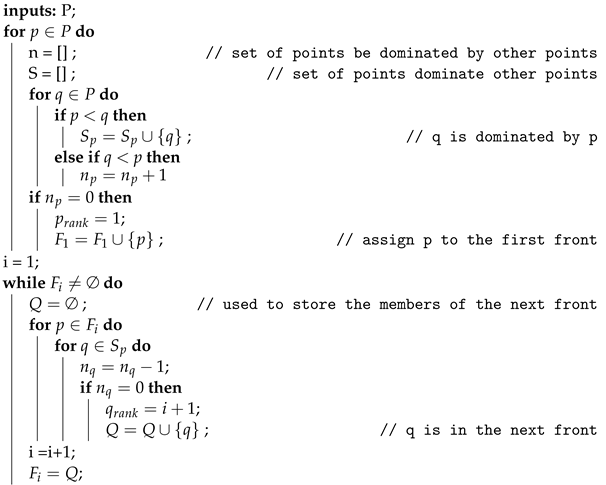

The proposed NSGA-PINN utilizes non-dominated sorting (see Algorithm 1 for details) during PINN training. The input P can consist of multiple objective functions, or loss functions, depending on the problem setting. For a simple ODE problem, these objective functions may include a residual loss function, an initial loss function, and a data loss function (if experimental data are available and the researchers are tackling an inverse problem). Similarly, for a PDE problem, the objective functions may include a residual loss function, a boundary loss function, and a data loss function. In evolutionary algorithms (EAs), the solutions are the elements of the parent population. The researchers randomly choose two solutions, p and q, from the parent population; if p has a lower loss value than q in all the objective functions, p is said to dominate q. The same holds if p has at least one loss value lower than q and all others are equal. For each solution p in the parent population, the researchers calculate two quantities: (1) the domination count $n_p$, which is the number of solutions that dominate p, and (2) $S_p$, the set of solutions that p dominates. Solutions with a domination count of $n_p = 0$ form the first front. For each solution p in the first front, the researchers then visit every solution in $S_p$ and decrease its domination count by 1; the solutions whose domination count reaches 0 form the second front. Repeating this step for each new front assigns every remaining solution to a front. By performing the non-dominated sorting algorithm, the researchers obtain the front value for each solution [18].

Algorithm 1: Non-dominated sorting
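The sorting procedure described in this section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: each solution is represented only by its tuple of loss values, and the names `dominates` and `non_dominated_sort` are hypothetical.

```python
from typing import List, Sequence


def dominates(p: Sequence[float], q: Sequence[float]) -> bool:
    """True if p is no worse than q in every loss and strictly better in one."""
    return all(a <= b for a, b in zip(p, q)) and any(a < b for a, b in zip(p, q))


def non_dominated_sort(losses: List[Sequence[float]]) -> List[int]:
    """Return the front value (1 = first front) of every solution."""
    n = len(losses)
    dominated = [[] for _ in range(n)]  # S_p: solutions that p dominates
    count = [0] * n                     # n_p: number of solutions dominating p
    for p in range(n):
        for q in range(n):
            if p == q:
                continue
            if dominates(losses[p], losses[q]):
                dominated[p].append(q)
            elif dominates(losses[q], losses[p]):
                count[p] += 1

    front = [0] * n
    current = [p for p in range(n) if count[p] == 0]  # first front
    level = 1
    while current:
        next_front = []
        for p in current:
            front[p] = level
            for q in dominated[p]:
                count[q] -= 1           # p has been placed, so discount it
                if count[q] == 0:
                    next_front.append(q)
        current = next_front
        level += 1
    return front


# Five hypothetical (residual loss, boundary loss) pairs.
example = [(0.1, 0.9), (0.5, 0.5), (0.9, 0.1), (0.6, 0.6), (1.0, 1.0)]
print(non_dominated_sort(example))  # [1, 1, 1, 2, 3]
```

The first three pairs trade one loss against the other and so share the first front; (0.6, 0.6) is dominated only by (0.5, 0.5), and (1.0, 1.0) is dominated by every other pair.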

2.2. Crowding-Distance Calculation

In addition to achieving convergence to the Pareto-optimal set for multi-objective optimization problems, it is important for an evolutionary algorithm (EA) to maintain a diverse range of solutions within the obtained set. The researchers implement the crowding-distance calculation method to estimate the density of each solution in the population. To do this, the population is first sorted by each objective function value in ascending order. Then, for each objective function, the boundary solutions are assigned an infinite distance value, and every intermediate solution is assigned a distance equal to the absolute normalized difference in function values between its two adjacent solutions. The overall crowding-distance value is the sum of the individual distance values over all objectives. A higher density value indicates a solution that is far from the other solutions in the population.

2.3. Crowded Binary Tournament Selection

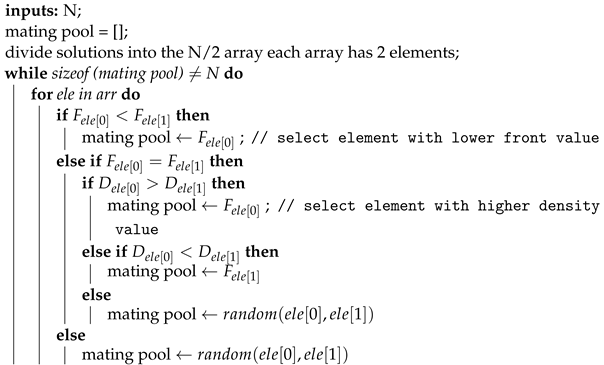

The crowded binary tournament selection, explained in more detail in Algorithm 2, was used to select the best PINN models for the mating pool and further operations. Before implementing this selection method, the researchers labeled each PINN model so that they could track the one with the lower loss value. The population of size n was then randomly divided into $n/2$ groups, each containing two elements. For each group, the researchers compared the two elements based on their front and density values, preferring the element with a lower front value and a higher density value. In Algorithm 2, F denotes the front value and D denotes the density value.

Algorithm 2: Crowded binary tournament selection
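The crowding-distance calculation of Section 2.2 and the crowded binary tournament of this section can be sketched together, since the tournament consumes the density values. The code below is a minimal illustration under assumptions: the function names are hypothetical, the distance is computed over whatever list of loss tuples is passed in, ties in density go to the first element of the pair, and an odd population leaves the last shuffled element unpaired.

```python
import random
from typing import List, Sequence


def crowding_distance(losses: List[Sequence[float]]) -> List[float]:
    """Density value D per solution: sum of normalized neighbor gaps."""
    n = len(losses)
    dist = [0.0] * n
    if n == 0:
        return dist
    for m in range(len(losses[0])):
        # Sort solution indices by the m-th objective, ascending.
        order = sorted(range(n), key=lambda i: losses[i][m])
        lo, hi = losses[order[0]][m], losses[order[-1]][m]
        dist[order[0]] = dist[order[-1]] = float("inf")  # boundary solutions
        if hi == lo:
            continue  # all values equal: nothing to normalize
        for k in range(1, n - 1):
            gap = losses[order[k + 1]][m] - losses[order[k - 1]][m]
            dist[order[k]] += gap / (hi - lo)
    return dist


def crowded_tournament(front: List[int], dist: List[float],
                       rng: random.Random) -> List[int]:
    """Pair solutions at random; return the index of each pair's winner."""
    idx = list(range(len(front)))
    rng.shuffle(idx)
    winners = []
    for a, b in zip(idx[0::2], idx[1::2]):
        if front[a] != front[b]:
            winners.append(a if front[a] < front[b] else b)  # lower F wins
        else:
            winners.append(a if dist[a] >= dist[b] else b)   # higher D wins
    return winners


print(crowding_distance([(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]))  # [inf, 2.0, inf]
```

Passing a seeded `random.Random` instance keeps the pairing reproducible, which makes it easier to track which labeled model survives a given round.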

2.4. NSGA-PINN Main Loop

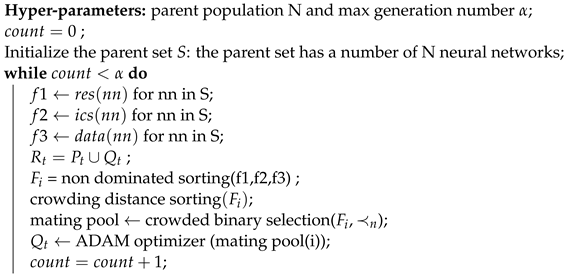

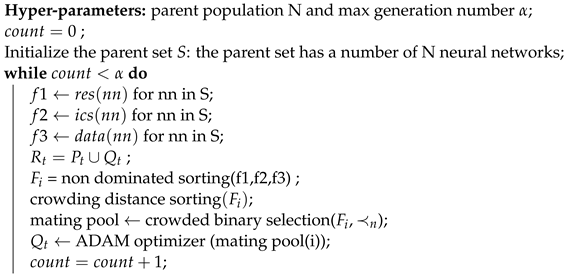

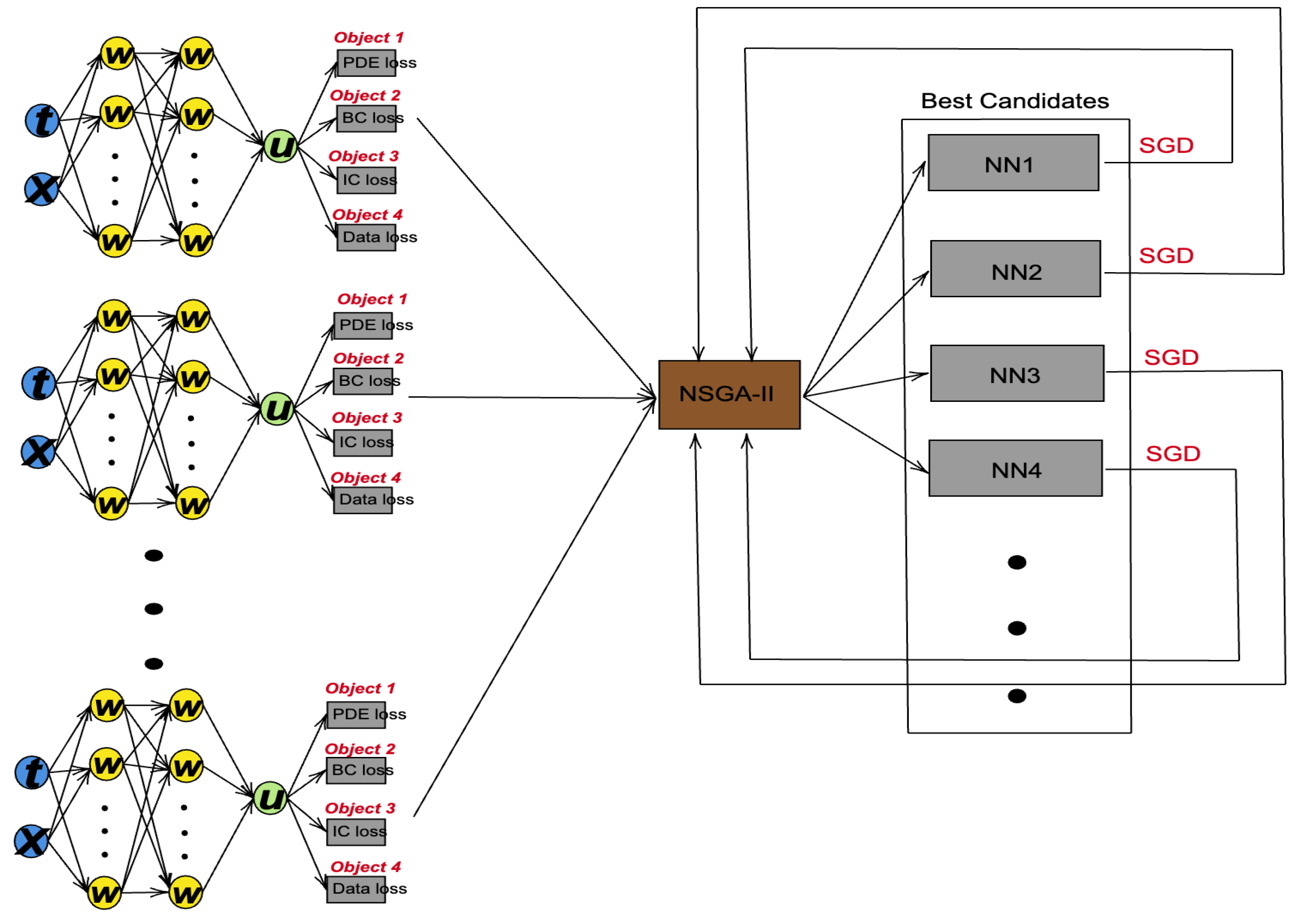

The main loop of the proposed NSGA-PINN method is described in Algorithm 3. The algorithm first initializes the number of PINNs to be used (N) and sets the maximum number of generations ($\alpha$) to terminate the algorithm. Then, the PINN pool is created with N PINNs. For each loss function in a PINN, N loss values are obtained from the network pool. When there are three loss functions in a PINN, $3N$ loss values are used as the parent population. The population is sorted based on non-domination, and each solution is assigned a fitness (or rank) equal to its non-domination level [18]. The density of each solution is estimated using crowding-distance sorting. Then, by performing a crowded binary tournament selection, PINNs with lower front values and higher density values are selected to be put into the mating pool. In the mating pool, the ADAM optimizer is used to further reduce the loss value. The NSGA-II algorithm selects the PINN with the lowest loss value as the starting point for the ADAM optimizer. By repeating this process many times, the proposed method helps the ADAM optimizer escape the local minima. Figure 1 shows the main process of the proposed NSGA-PINN framework.

Algorithm 3: Training PINN by NSGA-PINN method

Figure 1. NSGA-PINN structure diagram.
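The control flow of the main loop in Section 2.4 can be illustrated with a toy problem. The sketch below makes several loudly stated assumptions and is not the authors' Algorithm 3: each "PINN" is replaced by a two-parameter point, the two objectives are simple quadratics standing in for PINN loss terms, plain gradient descent on the summed loss stands in for ADAM, every mating-pool member is refined (not only the one with the lowest loss), and the next population is formed from the pool plus its refined copies. All function names are hypothetical, and `n` must be even.

```python
import random


def losses(theta):
    """Two toy objectives standing in for PINN loss terms (assumption)."""
    x, y = theta
    return (x * x + y * y, (x - 1.0) ** 2 + y * y)


def refine(theta, steps, lr):
    """Plain gradient descent on the summed loss; a stand-in for ADAM."""
    x, y = theta
    for _ in range(steps):
        gx = 2.0 * x + 2.0 * (x - 1.0)  # d/dx of the summed loss
        gy = 4.0 * y                    # d/dy of the summed loss
        x, y = x - lr * gx, y - lr * gy
    return (x, y)


def dominates(p, q):
    return all(a <= b for a, b in zip(p, q)) and any(a < b for a, b in zip(p, q))


def front_values(F):
    """Front value (1 = non-dominated) for each loss vector in F."""
    n = len(F)
    count = [sum(dominates(F[q], F[p]) for q in range(n)) for p in range(n)]
    front, level = [0] * n, 1
    current = [p for p in range(n) if count[p] == 0]
    while current:
        nxt = []
        for p in current:
            front[p] = level
            for q in range(n):
                if dominates(F[p], F[q]):
                    count[q] -= 1
                    if count[q] == 0:
                        nxt.append(q)
        current, level = nxt, level + 1
    return front


def crowding(F):
    """Sum over objectives of normalized neighbor gaps; boundaries get infinity."""
    n, dist = len(F), [0.0] * len(F)
    for m in range(len(F[0])):
        order = sorted(range(n), key=lambda i: F[i][m])
        span = F[order[-1]][m] - F[order[0]][m]
        dist[order[0]] = dist[order[-1]] = float("inf")
        for k in range(1, n - 1):
            if span > 0:
                dist[order[k]] += (F[order[k + 1]][m] - F[order[k - 1]][m]) / span
    return dist


def nsga_loop(n=8, generations=20, steps=5, lr=0.05, seed=0):
    rng = random.Random(seed)
    pop = [(rng.uniform(-2, 2), rng.uniform(-2, 2)) for _ in range(n)]
    for _ in range(generations):
        F = [losses(t) for t in pop]
        front, dist = front_values(F), crowding(F)
        idx = list(range(n))
        rng.shuffle(idx)
        pool = []  # mating pool: one winner per random pair
        for a, b in zip(idx[0::2], idx[1::2]):
            better = a if (front[a], -dist[a]) <= (front[b], -dist[b]) else b
            pool.append(pop[better])
        # Refine the pool locally, then rebuild a population of size n.
        pop = pool + [refine(t, steps, lr) for t in pool]
    return pop


final_population = nsga_loop()
print(len(final_population))  # 8
```

Because the toy objectives are convex, this sketch shows only how sorting, density estimation, selection, and local refinement alternate; it does not demonstrate the escape from local minima that the method targets on real PINN losses.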

References

1. Raissi, M.; Yazdani, A.; Karniadakis, G.E. Hidden fluid mechanics: Learning velocity and pressure fields from flow visualizations. Science 2020, 367, 1026–1030.

2. Karniadakis, G.E.; Kevrekidis, I.G.; Lu, L.; Perdikaris, P.; Wang, S.; Yang, L. Physics-informed machine learning. Nat. Rev. Phys. 2021, 3, 422–440.

3. Larsson, S.; Thomée, V. Partial Differential Equations with Numerical Methods; Springer: Berlin/Heidelberg, Germany, 2003; Volume 45.

4. Geroch, R. Partial differential equations of physics. In General Relativity; Routledge: London, UK, 2017; pp. 19–60.

5. Rudy, S.H.; Brunton, S.L.; Proctor, J.L.; Kutz, J.N. Data-driven discovery of partial differential equations. Sci. Adv. 2017, 3, e1602614.

6. Lu, N.; Han, G.; Sun, Y.; Feng, Y.; Lin, G. Artificial intelligence assisted thermoelectric materials design and discovery. ES Mater. Manuf. 2021, 14, 20–35.

7. Moya, C.; Lin, G. DAE-PINN: A physics-informed neural network model for simulating differential algebraic equations with application to power networks. Neural Comput. Appl. 2023, 35, 3789–3804.

8. Thuerey, N.; Weißenow, K.; Prantl, L.; Hu, X. Deep learning methods for Reynolds-averaged Navier–Stokes simulations of airfoil flows. AIAA J. 2020, 58, 25–36.

9. Cai, S.; Mao, Z.; Wang, Z.; Yin, M.; Karniadakis, G.E. Physics-informed neural networks (PINNs) for fluid mechanics: A review. Acta Mech. Sin. 2021, 37, 1727–1738.

10. Cuomo, S.; Di Cola, V.S.; Giampaolo, F.; Rozza, G.; Raissi, M.; Piccialli, F. Scientific machine learning through physics–informed neural networks: Where we are and what’s next. J. Sci. Comput. 2022, 92, 88.

11. Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

12. Mao, Z.; Jagtap, A.D.; Karniadakis, G.E. Physics-informed neural networks for high-speed flows. Comput. Methods Appl. Mech. Eng. 2020, 360, 112789.

13. Fernández-Fuentes, X.; Mera, D.; Gómez, A.; Vidal-Franco, I. Towards a fast and accurate EIT inverse problem solver: A machine learning approach. Electronics 2018, 7, 422.

14. Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747.

15. Cheridito, P.; Jentzen, A.; Rossmannek, F. Non-convergence of stochastic gradient descent in the training of deep neural networks. J. Complex. 2021, 64, 101540.

16. Jain, P.; Kar, P. Non-convex optimization for machine learning. Found. Trends® Mach. Learn. 2017, 10, 142–363.

17. Krishnapriyan, A.; Gholami, A.; Zhe, S.; Kirby, R.; Mahoney, M.W. Characterizing possible failure modes in physics-informed neural networks. Adv. Neural Inf. Process. Syst. 2021, 34, 26548–26560.

18. Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.
